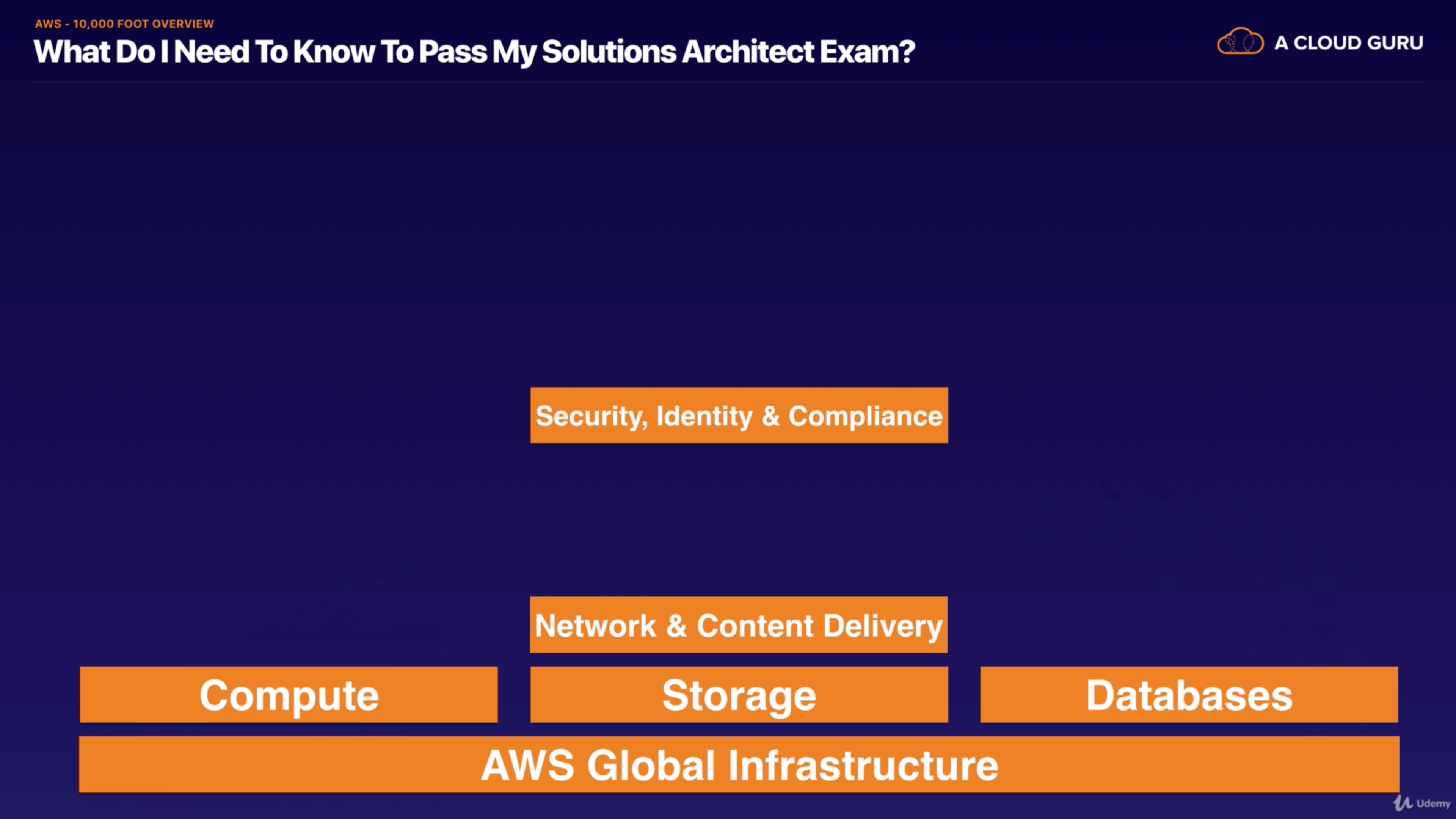

AWS Certified Solutions Architect

Exam Guidance

Identity Access Management & S3

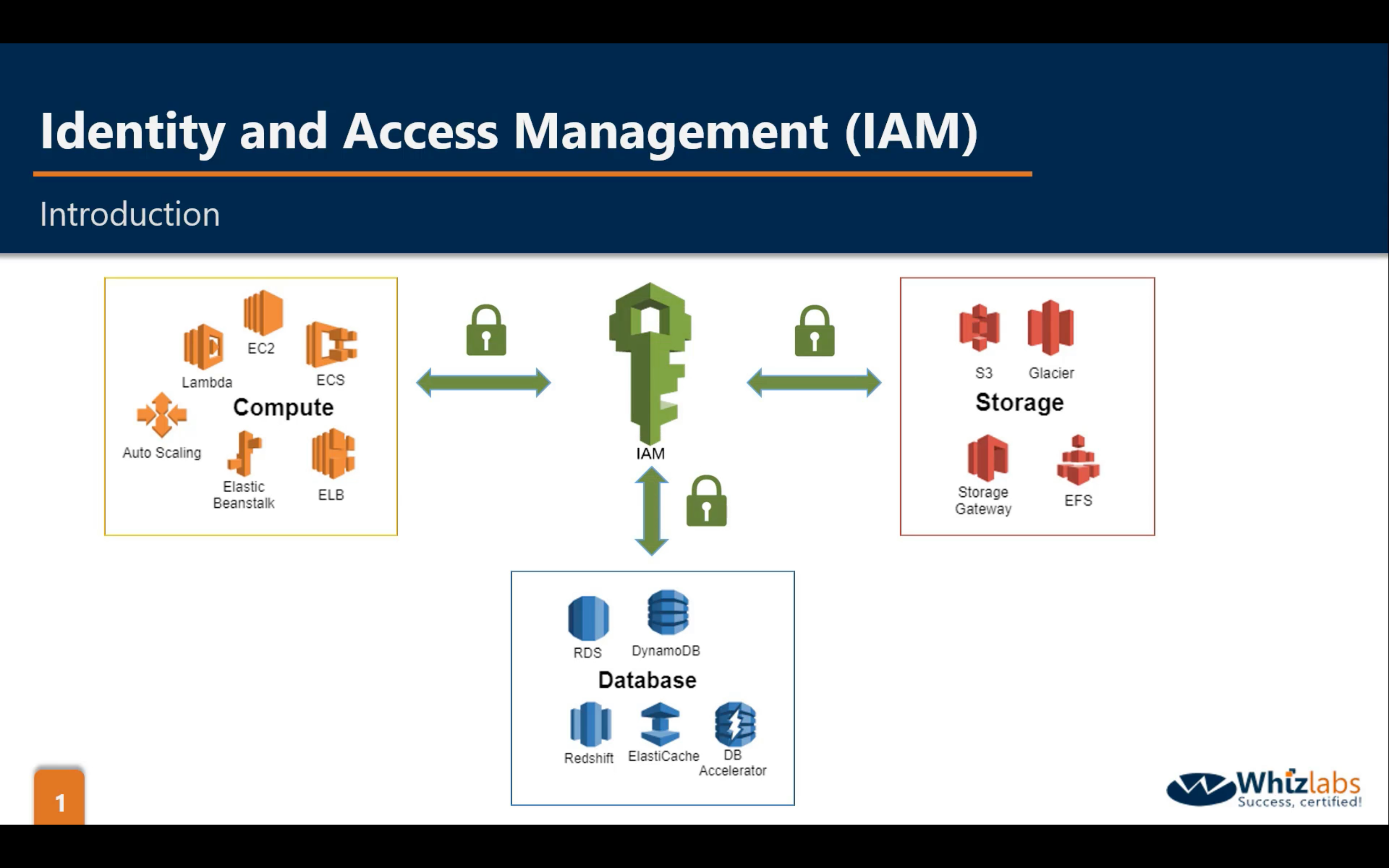

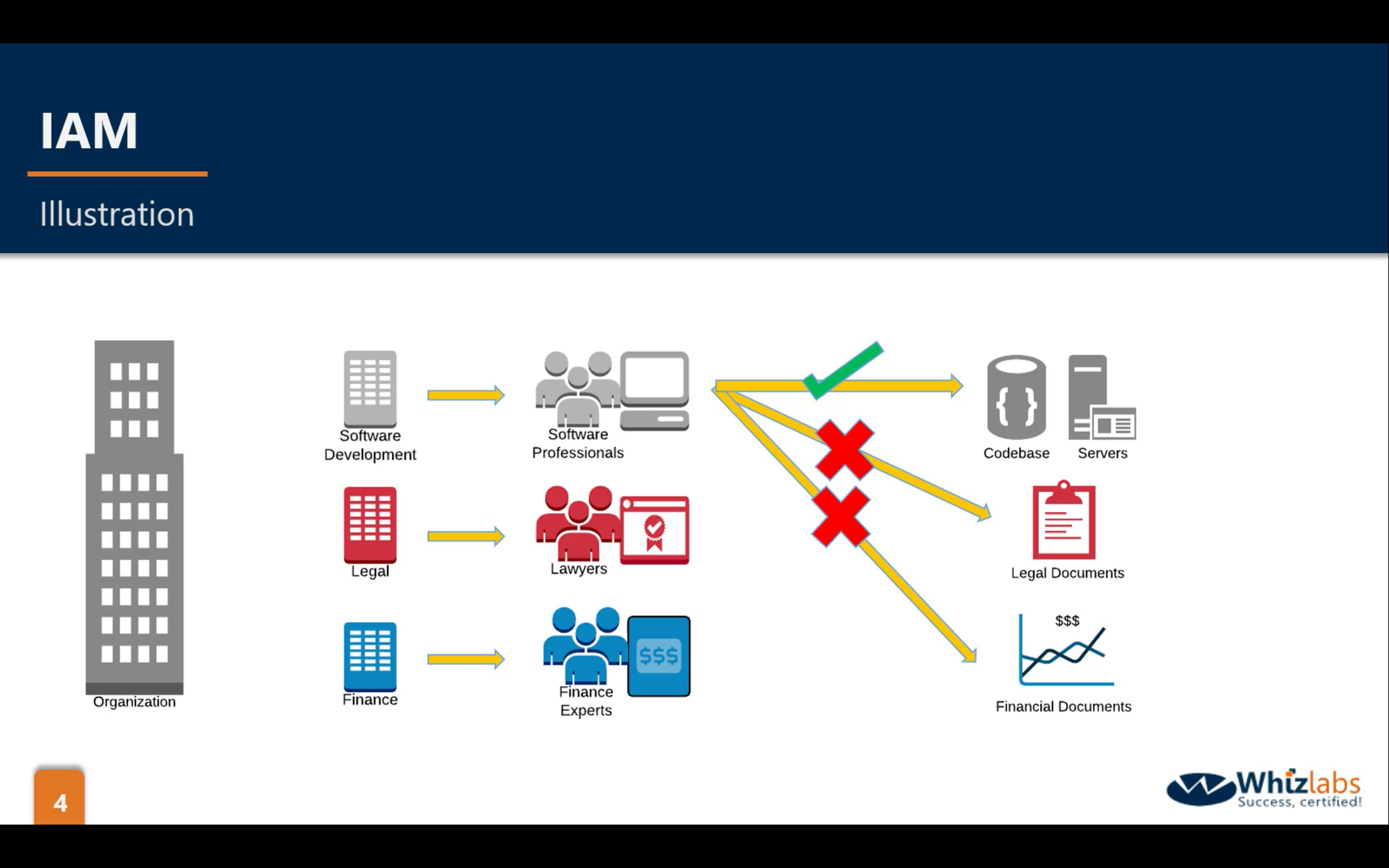

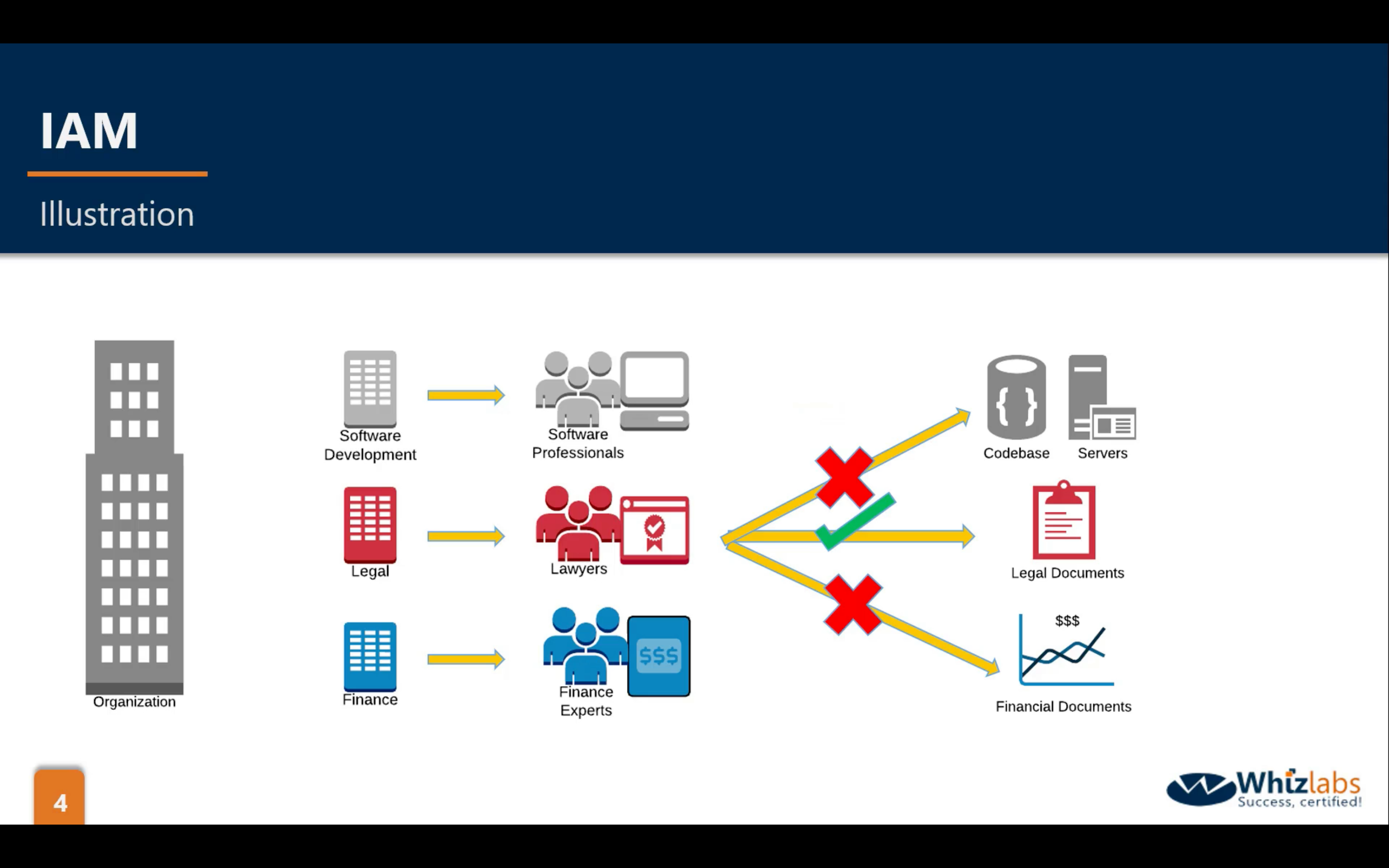

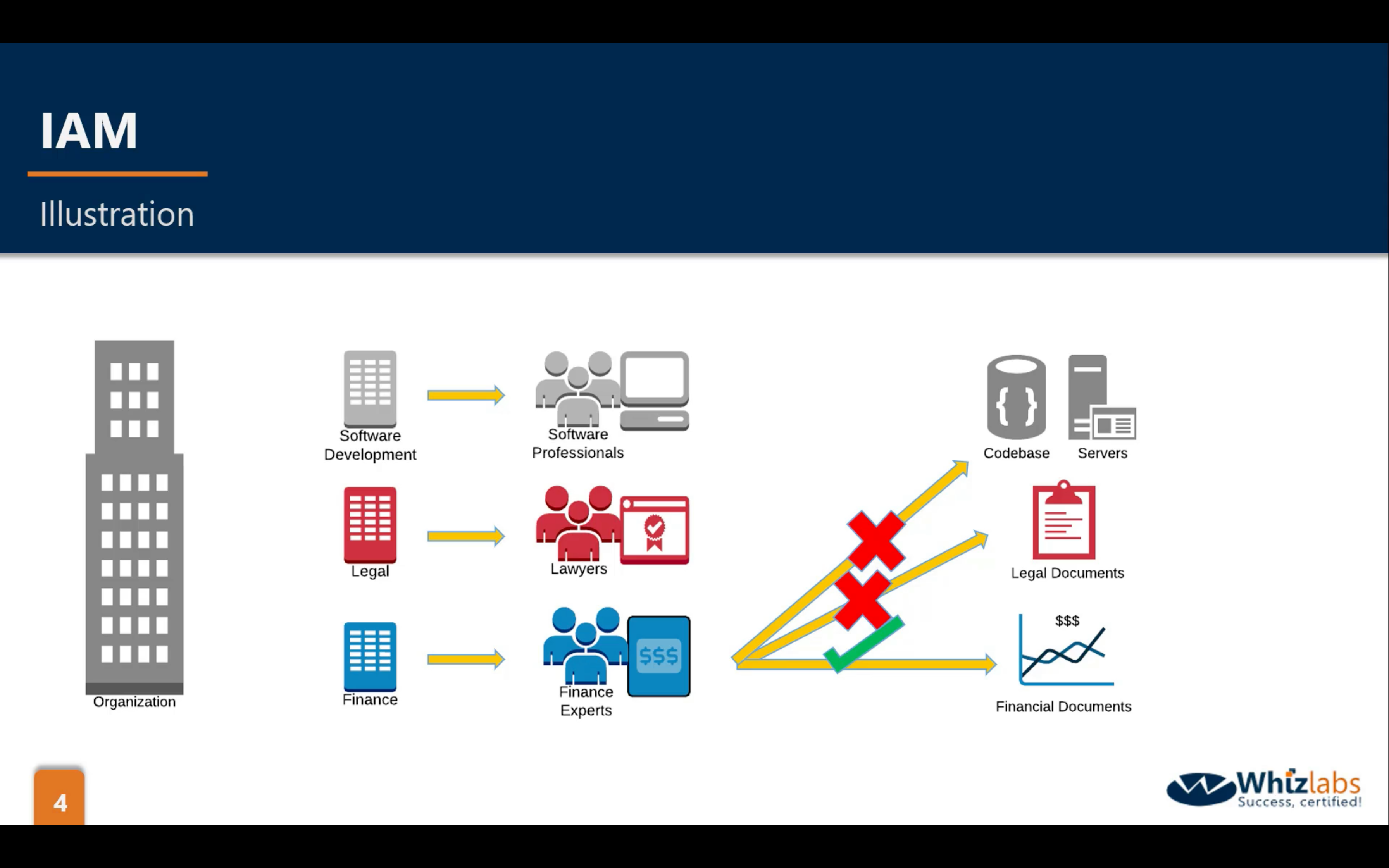

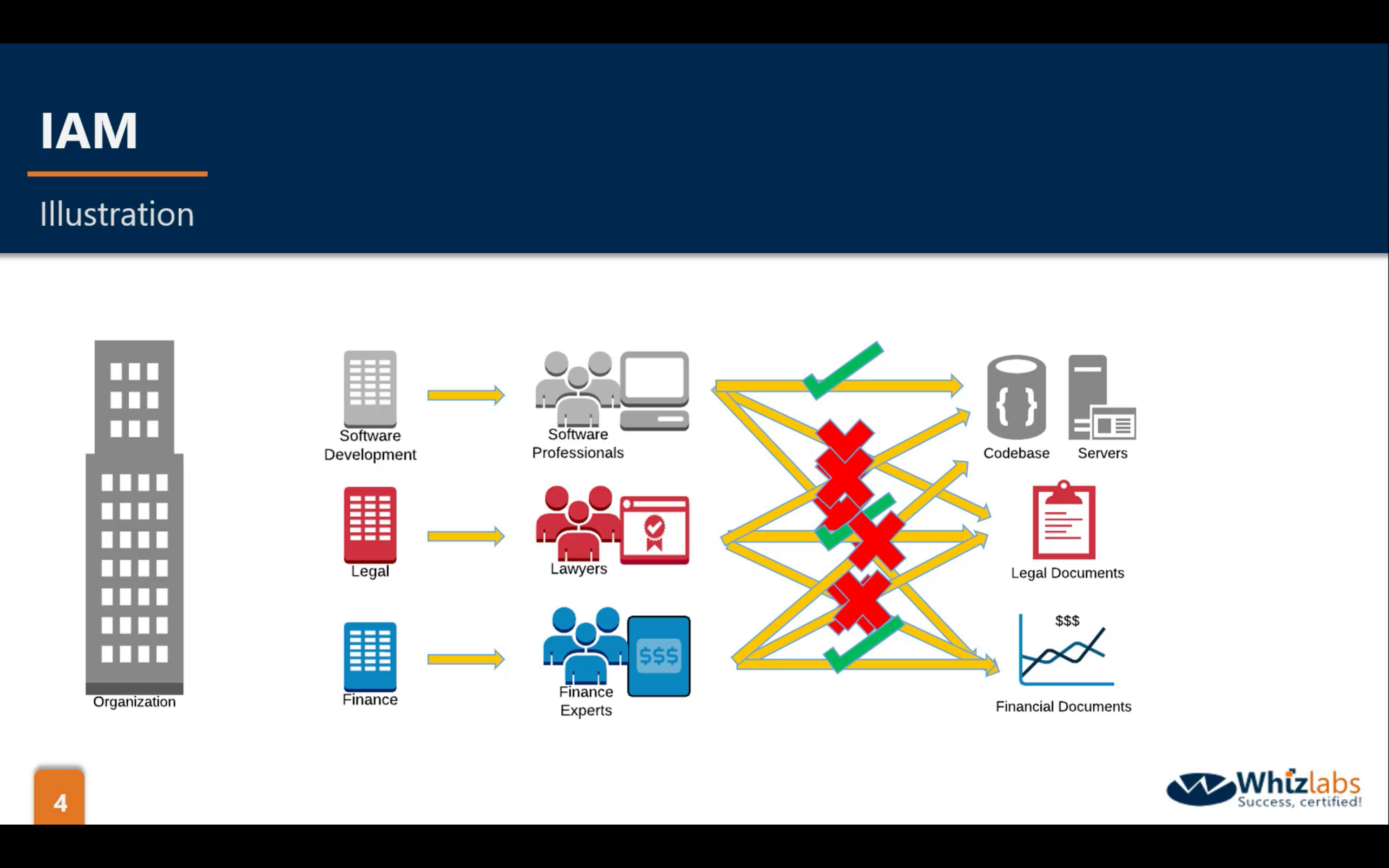

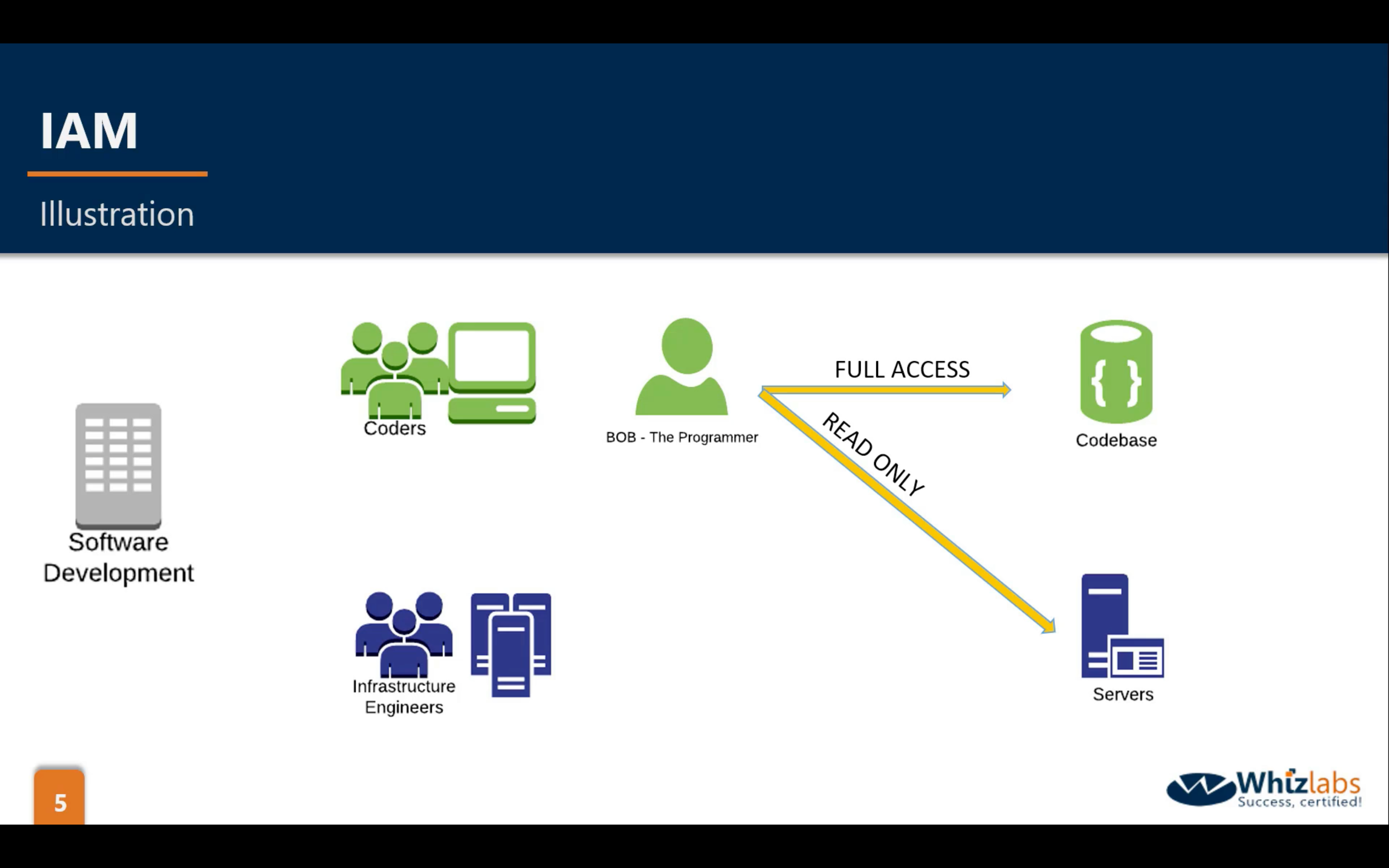

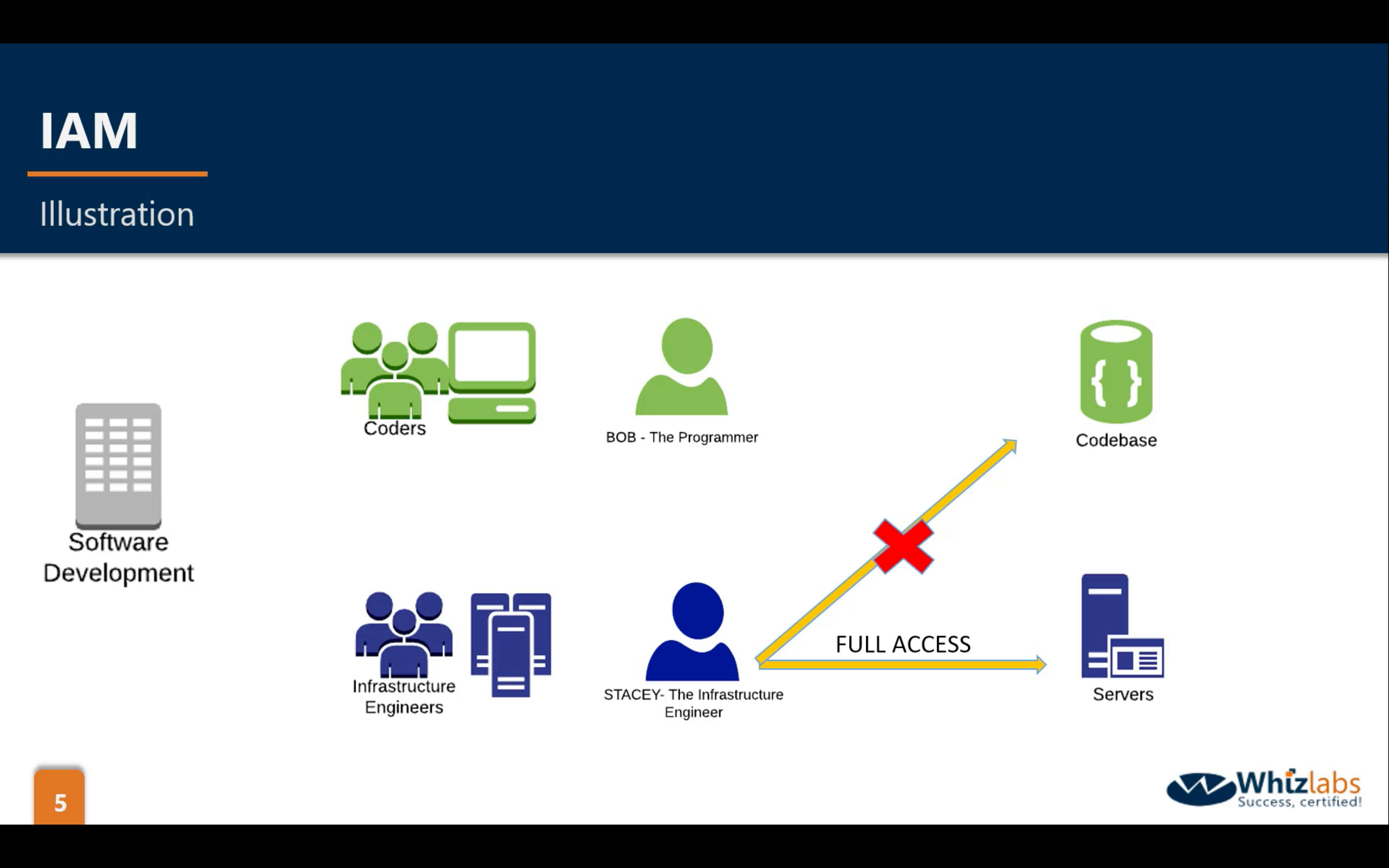

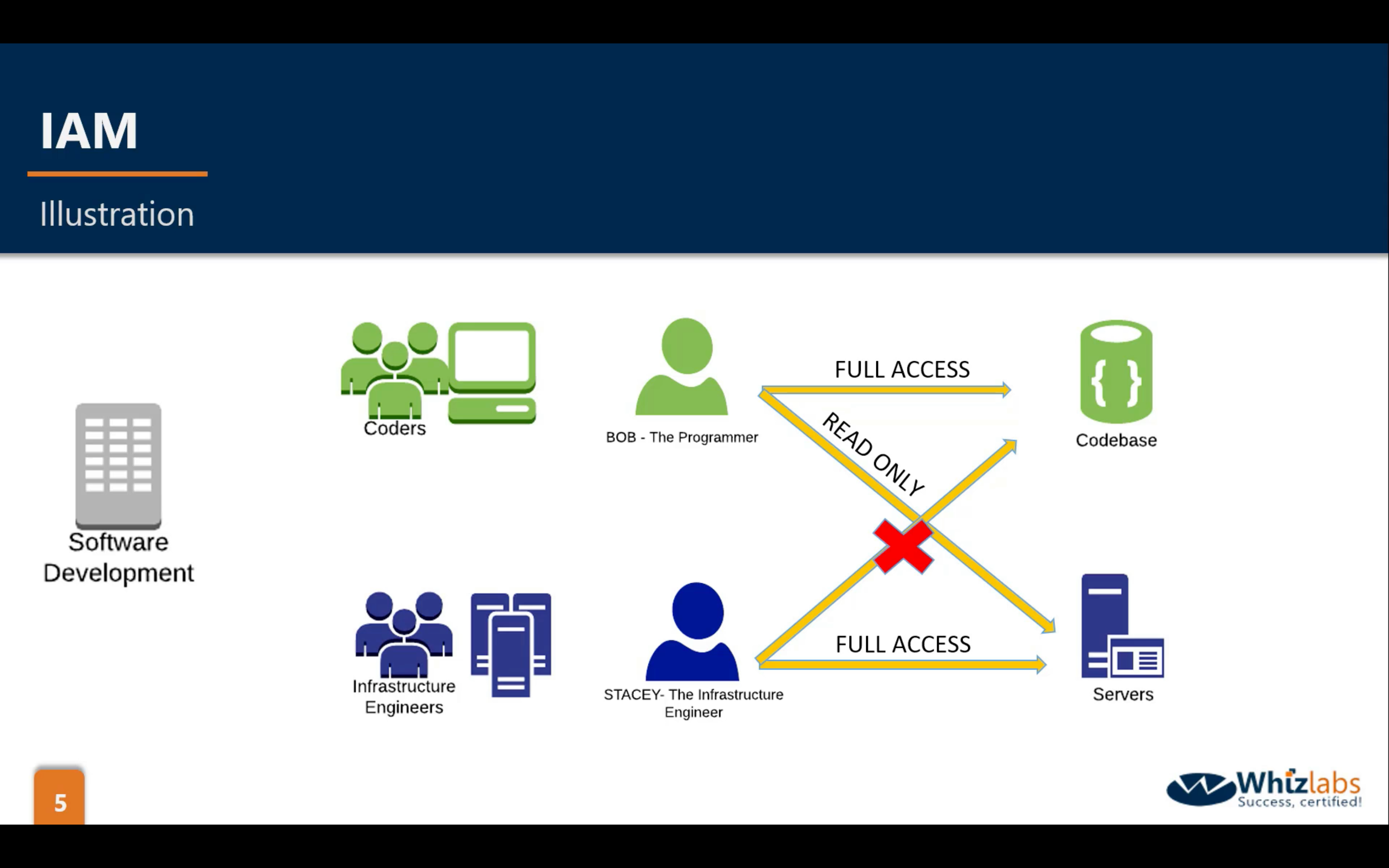

AWS IAM (Identity and Access Management)

- Centralized control of your AWS account

- Shared Access to your AWS account

- Granular Permissions

- Identity Federation (including Active Directory, Facebook, LinkedIn etc)

- Multi-factor Authentication

- Provide temporary access for users/devices and services where necessary

- Allows you to set up your own password rotation policy

- Integrated with many different AWS services

- Supports PCI DSS Compliance

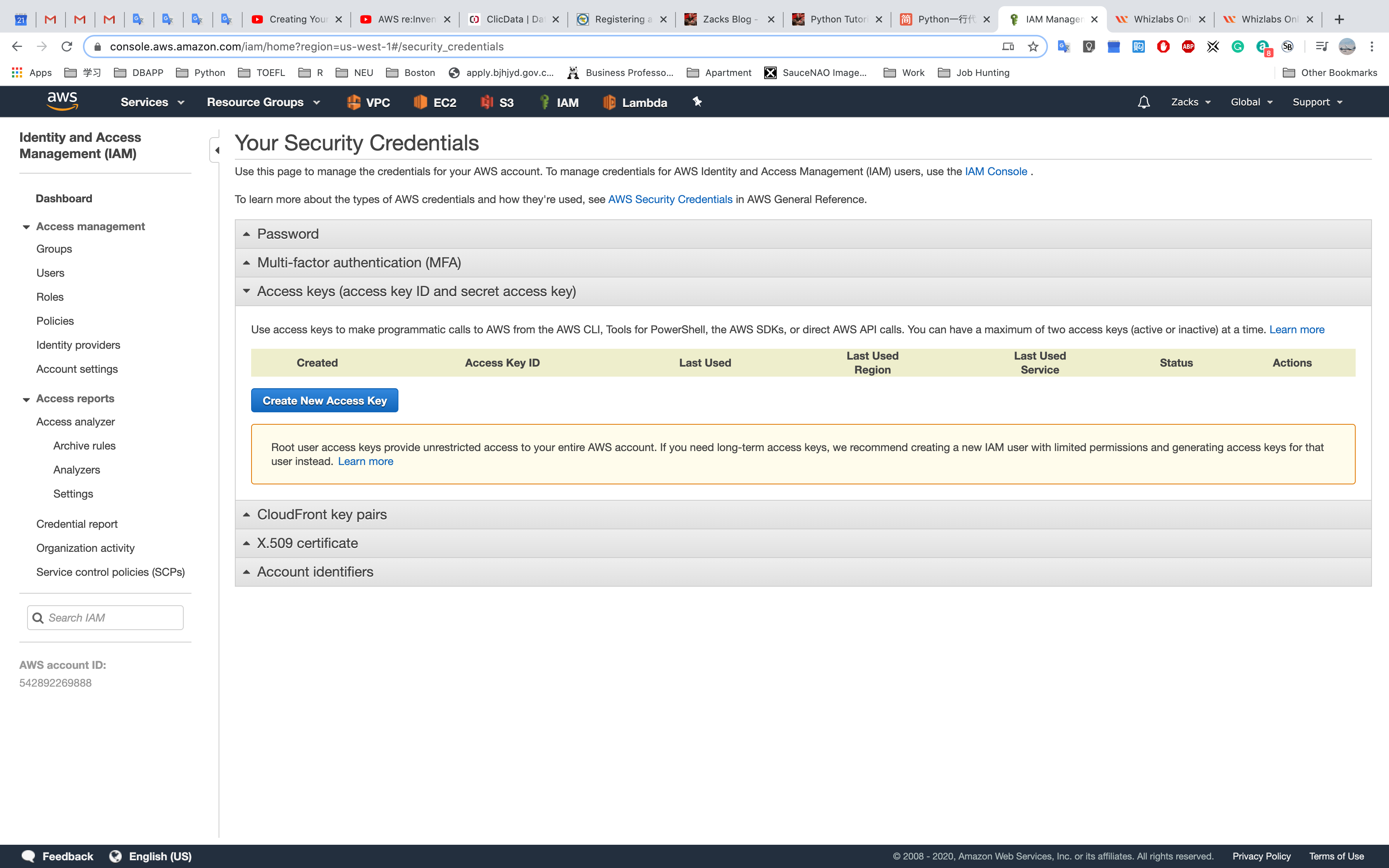

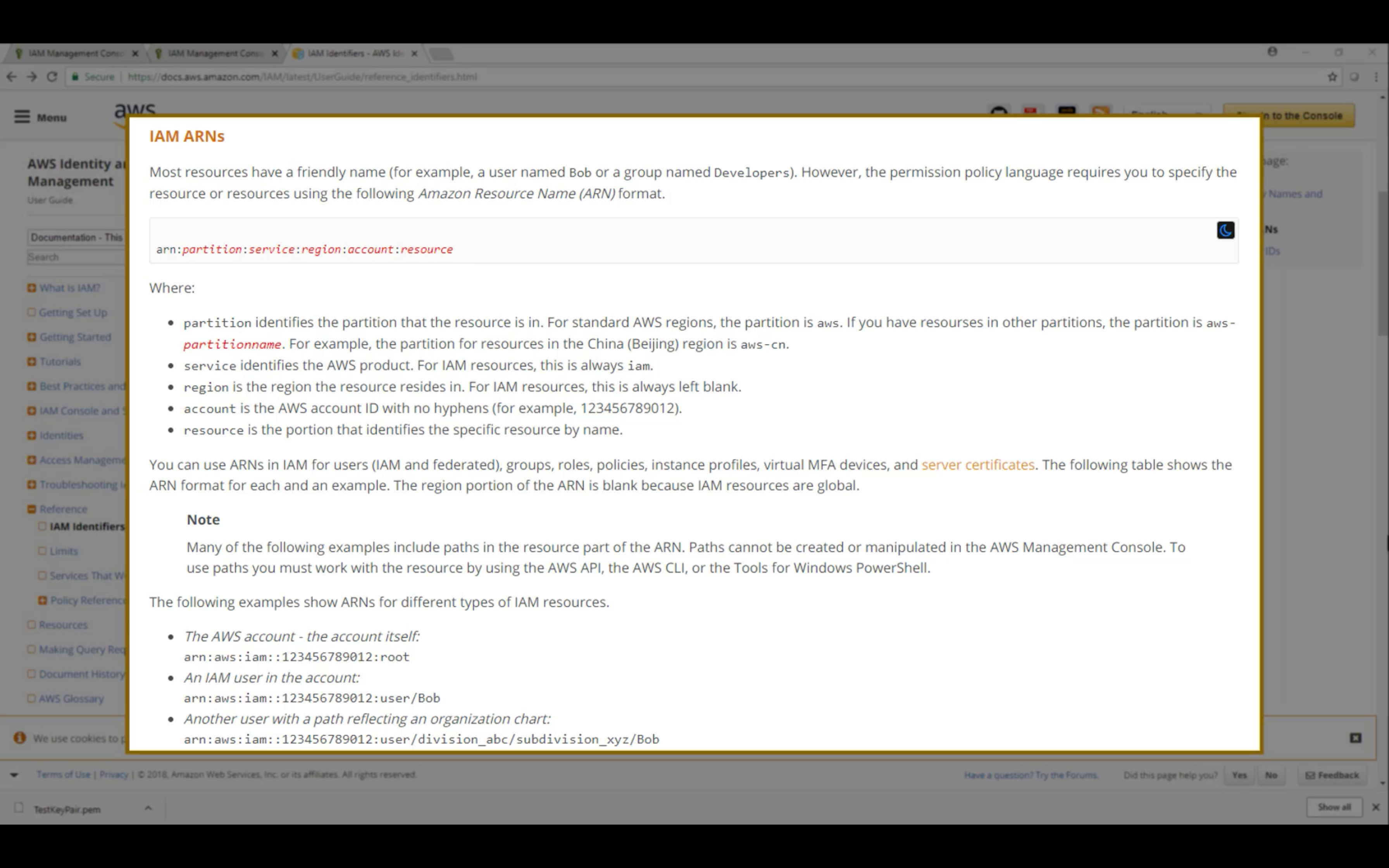

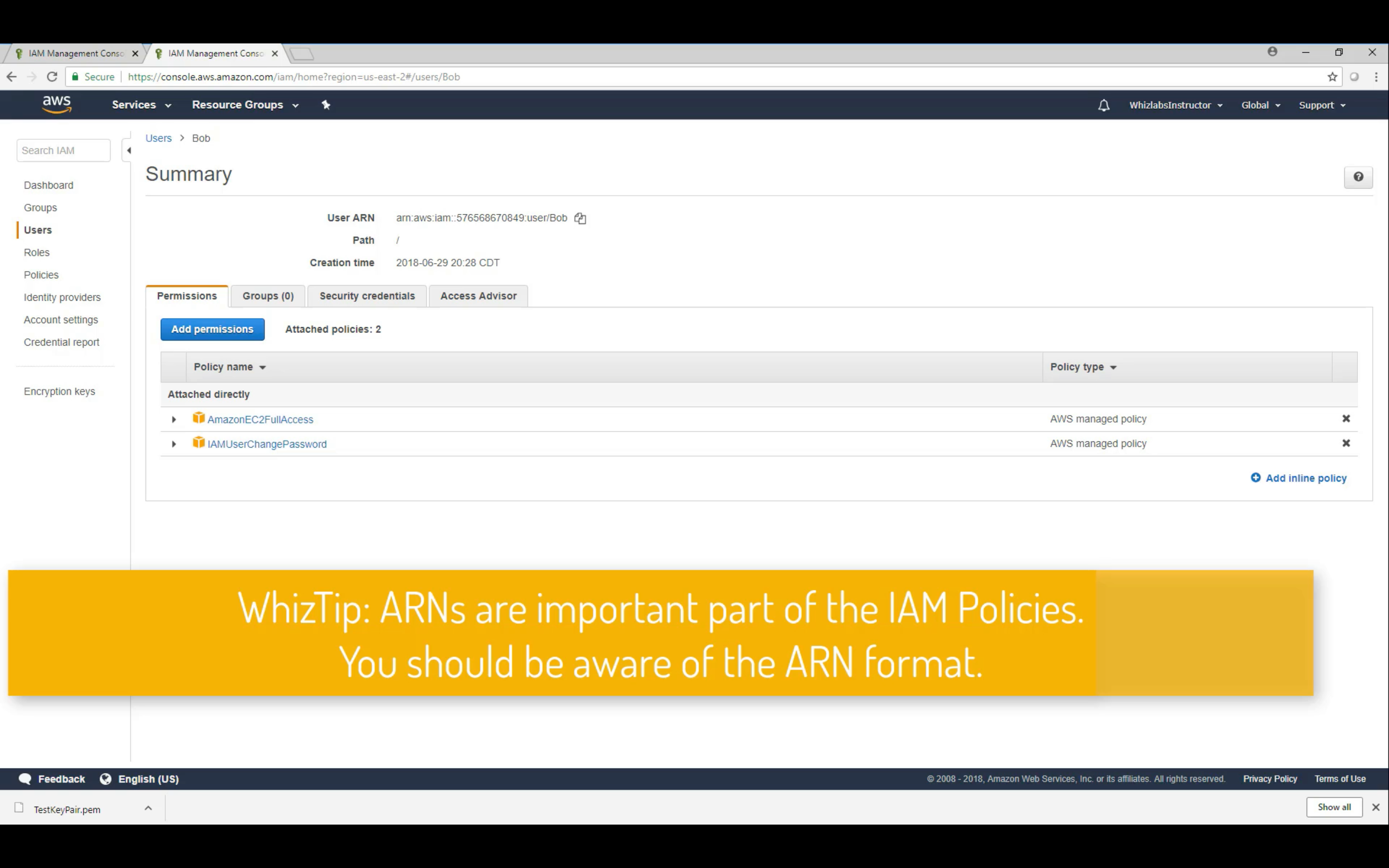

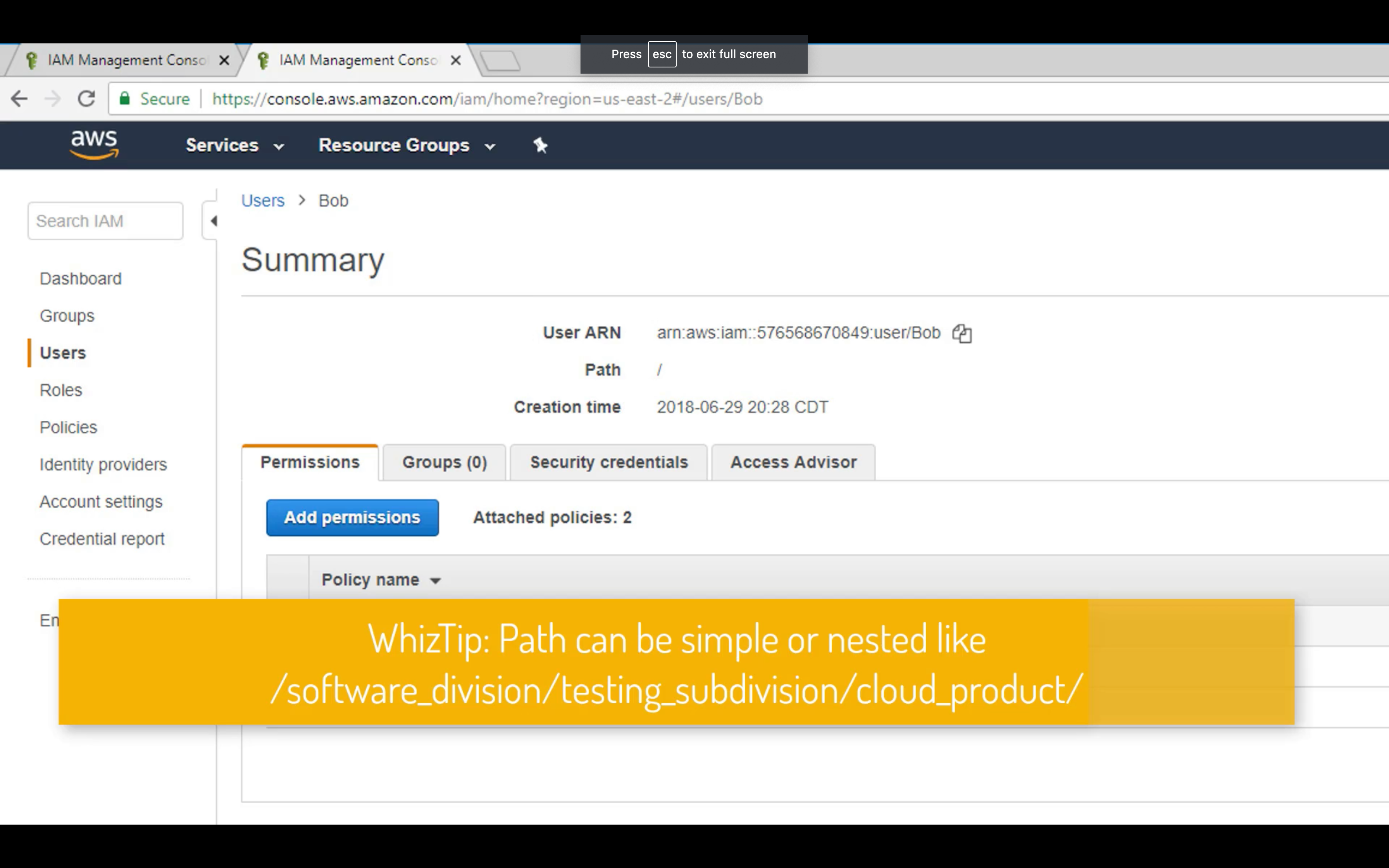

arn: Amazon Resource Name

arn:aws:iam::542892269888:user/Zacks

arn:partition:service:region:account:resource
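As a rough illustration of the ARN layout above, the sketch below splits an ARN string into its fields. The `Arn` type and `parse_arn` helper are hypothetical names made up for this example, not part of any AWS SDK.

```python
from typing import NamedTuple


class Arn(NamedTuple):
    partition: str
    service: str
    region: str
    account: str
    resource: str


def parse_arn(arn: str) -> Arn:
    """Split an ARN into the five fields that follow the literal 'arn' prefix."""
    # maxsplit=5 keeps any colons inside the resource part intact.
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn":
        raise ValueError(f"not a valid ARN: {arn!r}")
    return Arn(*parts[1:])


example = parse_arn("arn:aws:iam::542892269888:user/Zacks")
print(example)
```

Note that the region field comes back empty for the IAM example, which matches the two consecutive colons in the ARN.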

Key Terminology For IAM

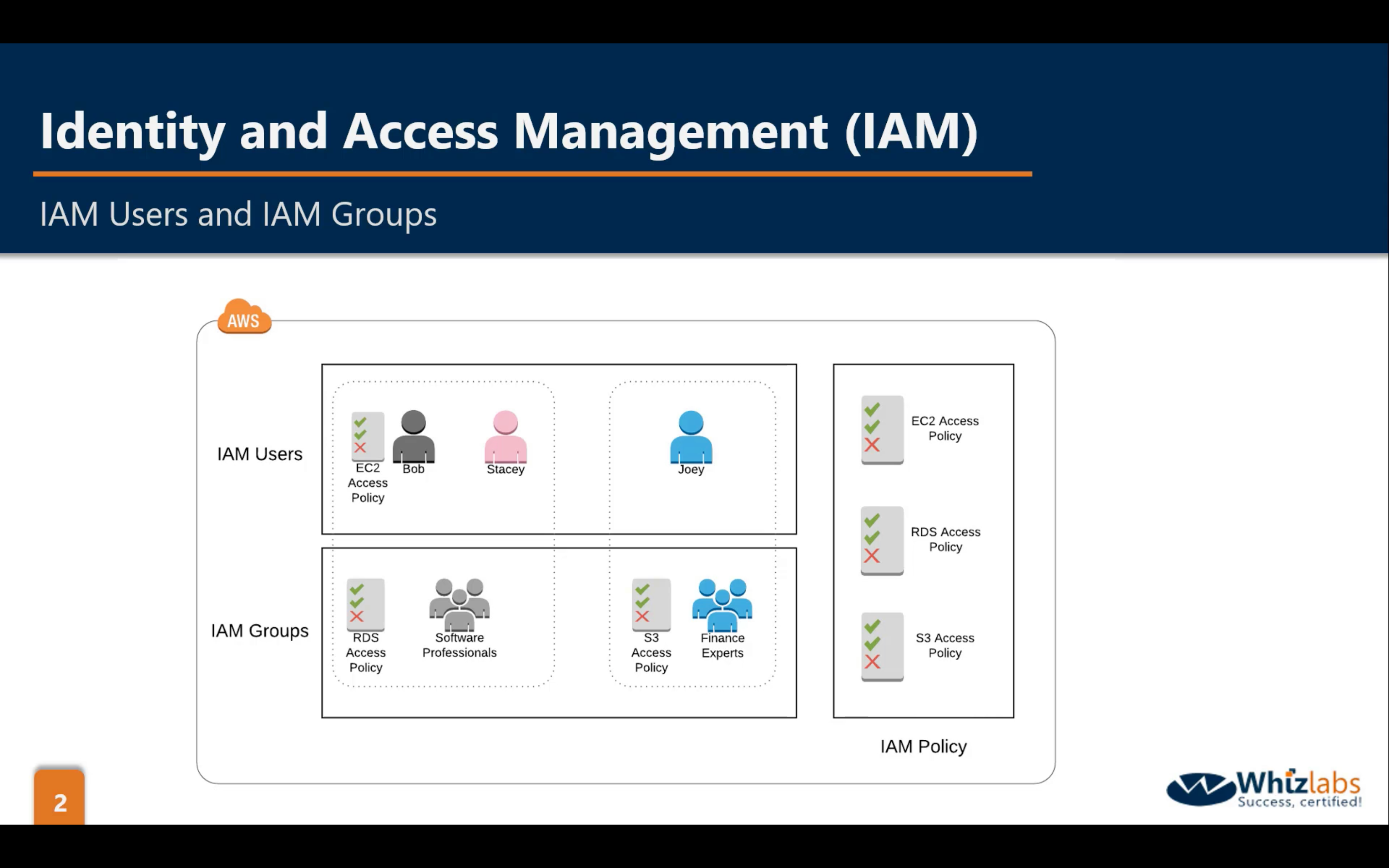

- Users

- End Users such as people, employees of an organization etc.

- Groups

- A collection of users. Each user in the group will inherit the permissions of the group.

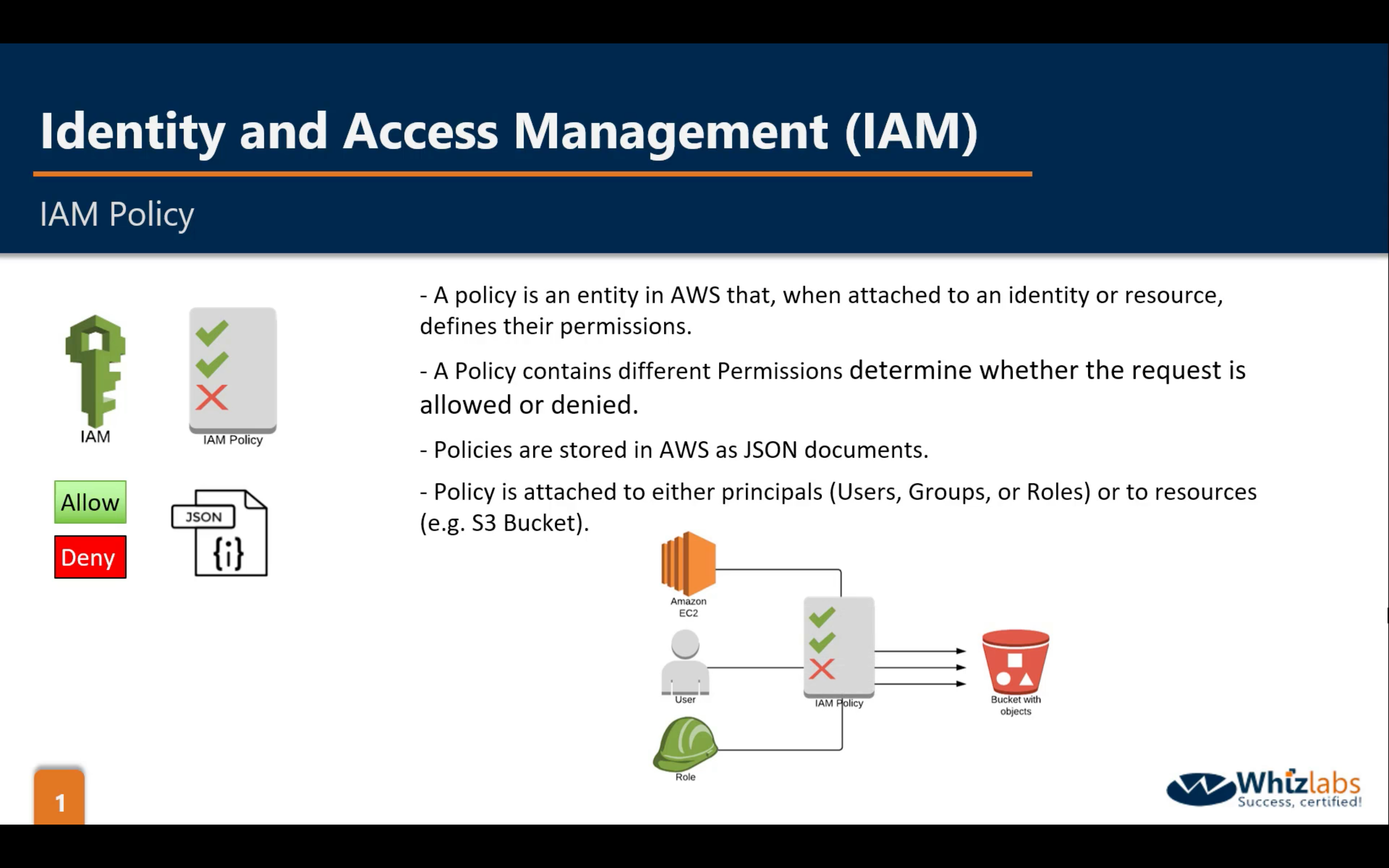

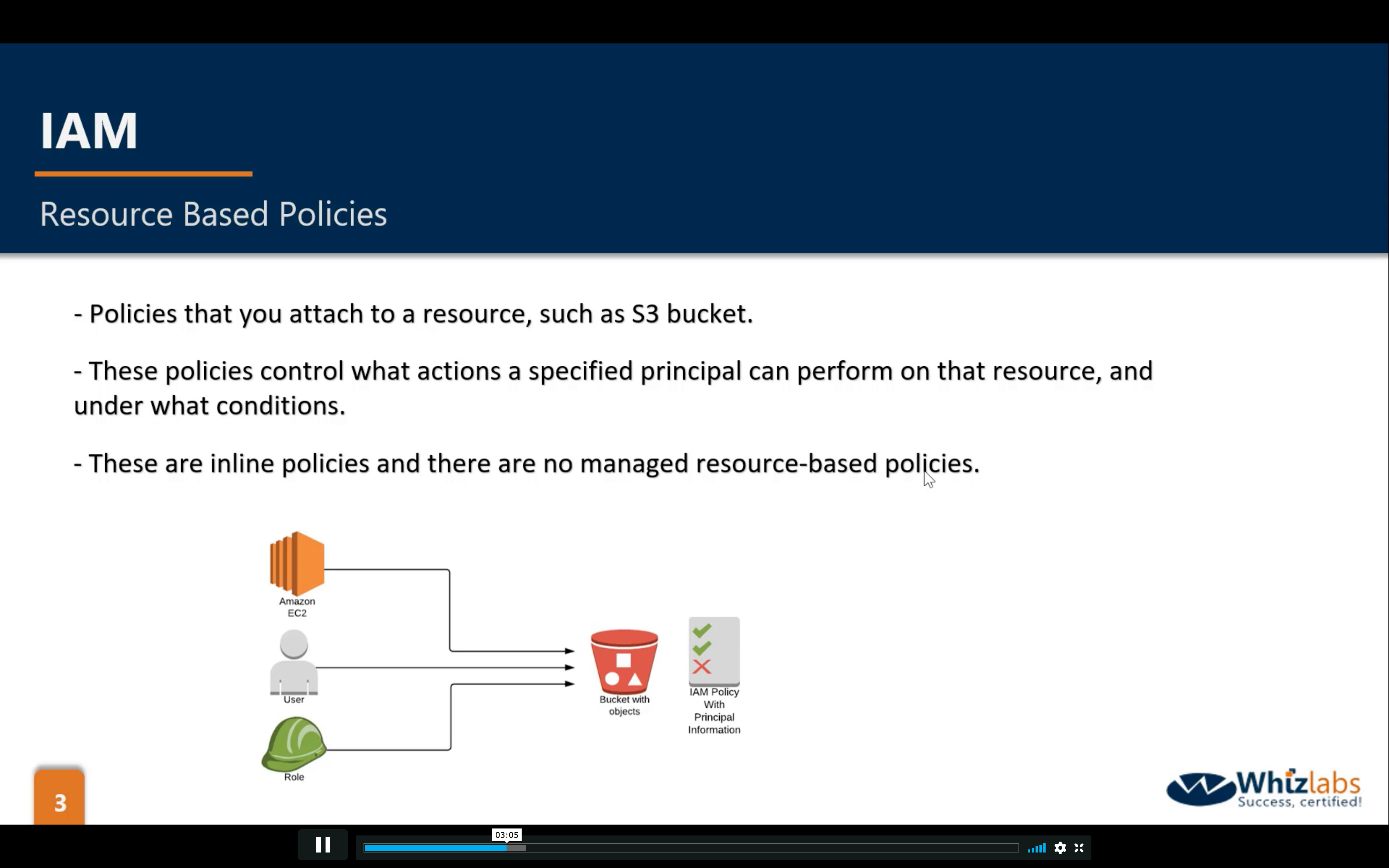

- Policies

- Policies are made up of documents called policy documents. These documents are in JSON format and define what a User/Group/Role is permitted to do.

- Roles

- You create roles and then assign them to AWS Resources.

IAM Policies

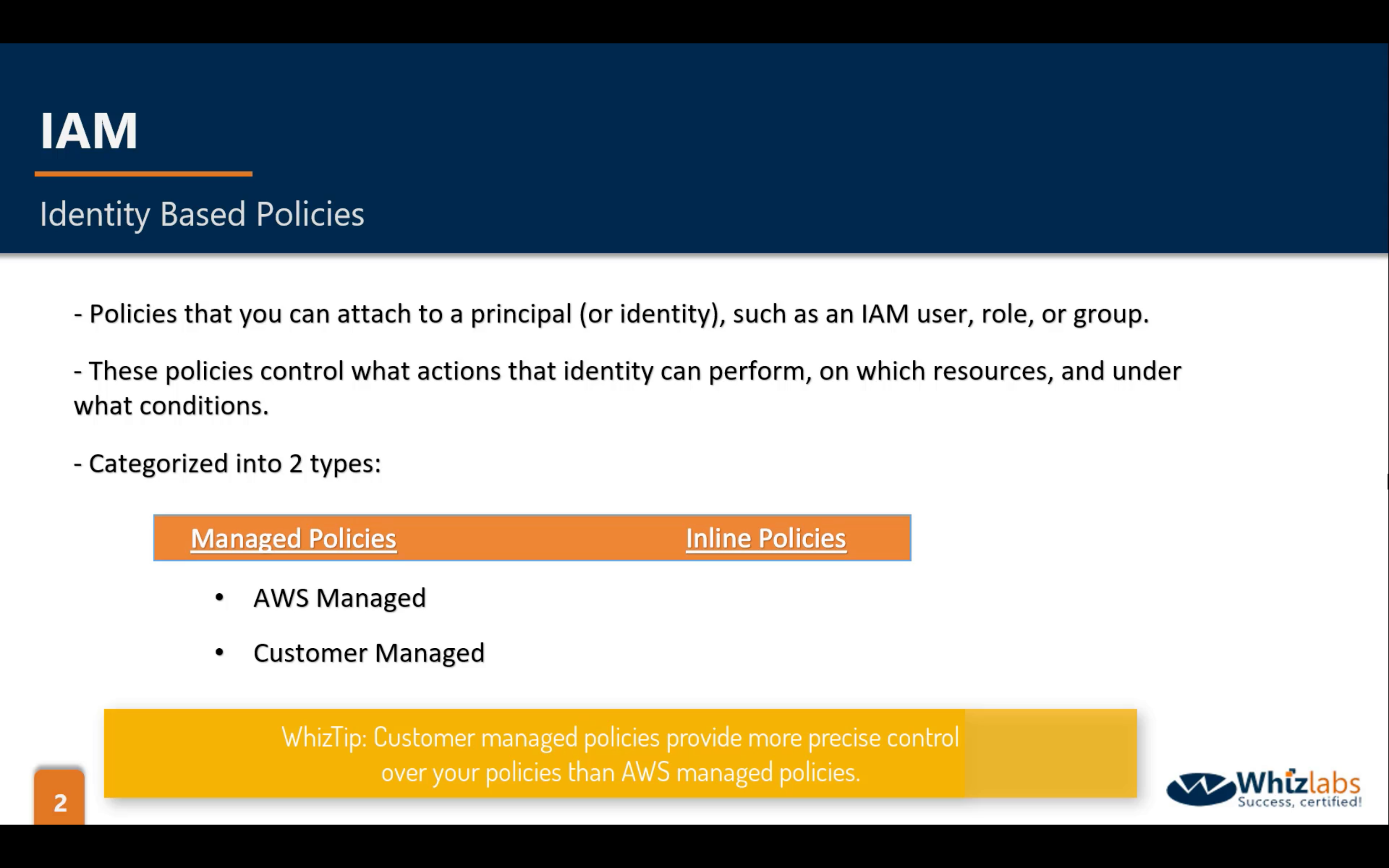

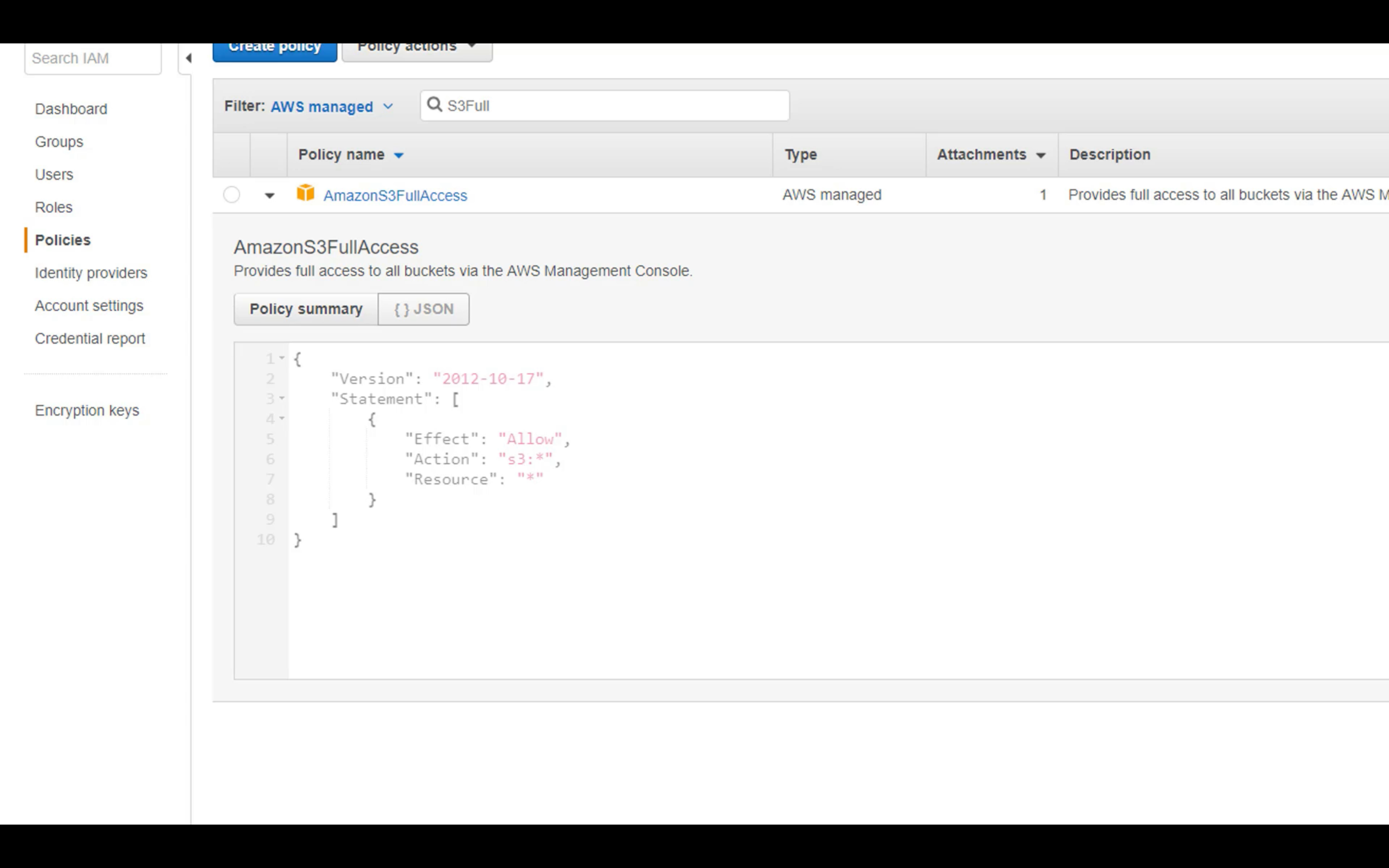

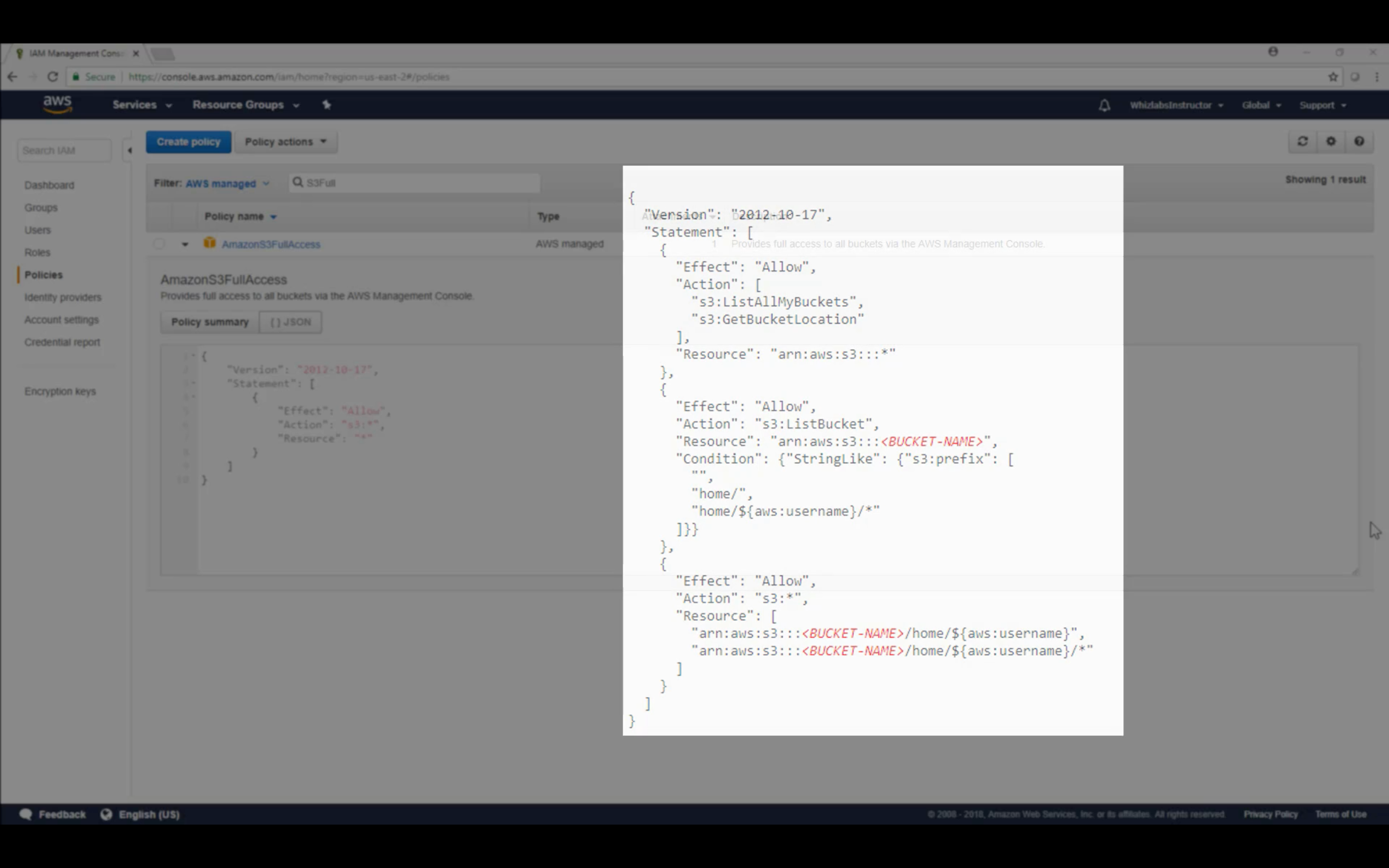

Managed Policies:

- AWS Managed

- Customer Managed

Inline Policies:

- JSON
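To make the JSON format concrete, here is a minimal sketch of a policy document built as a Python dictionary and serialized to JSON. The bucket name `example-bucket` and the chosen actions are placeholders picked for illustration only.

```python
import json

# Hypothetical read-only policy for one S3 bucket; the bucket name is a placeholder.
read_only_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:ListBucket"],
            "Resource": [
                "arn:aws:s3:::example-bucket",
                "arn:aws:s3:::example-bucket/*",
            ],
        }
    ],
}

policy_json = json.dumps(read_only_policy, indent=2)
print(policy_json)
```

The same JSON text could be pasted into the console's JSON editor, either as a customer managed policy or as an inline policy.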

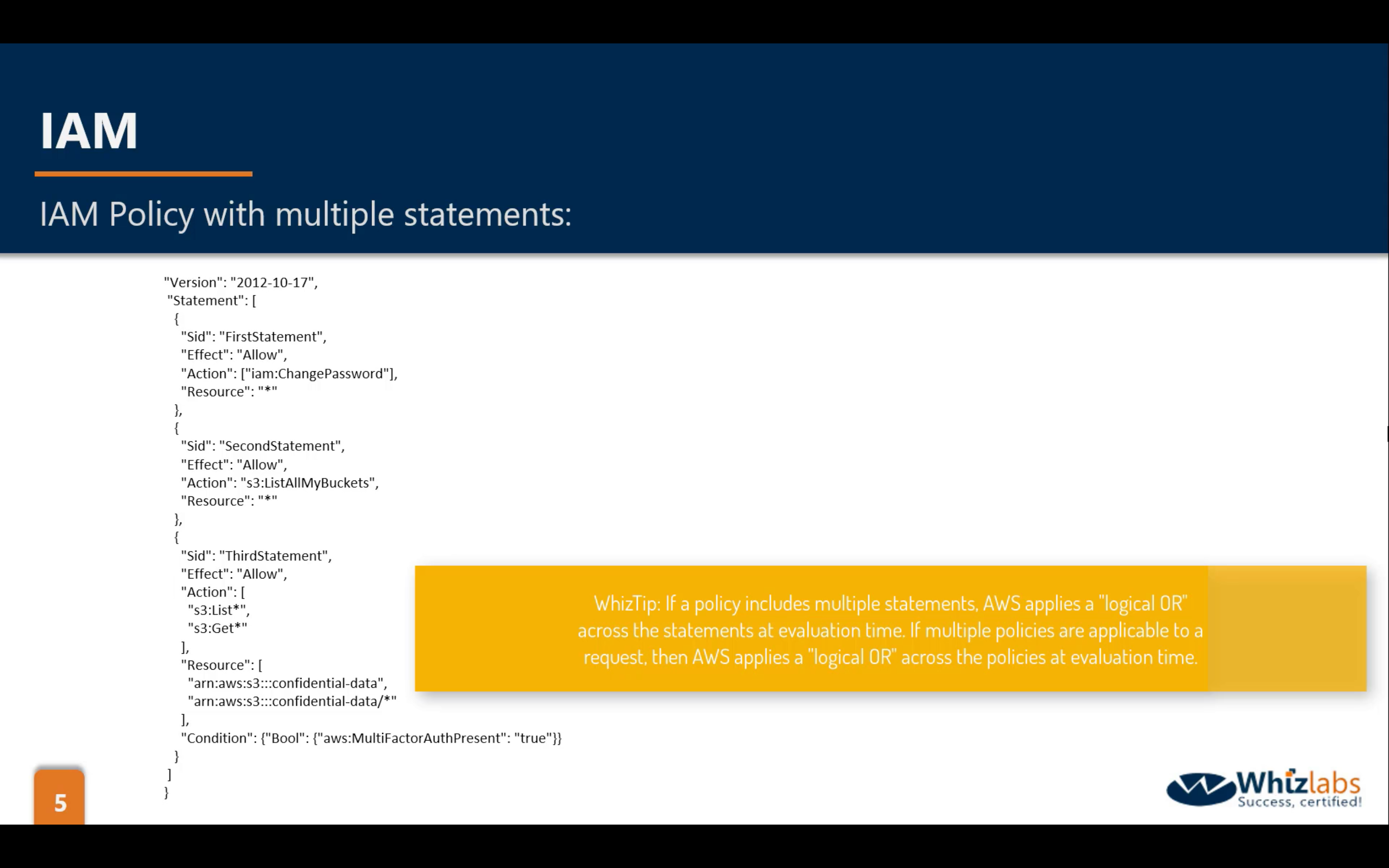

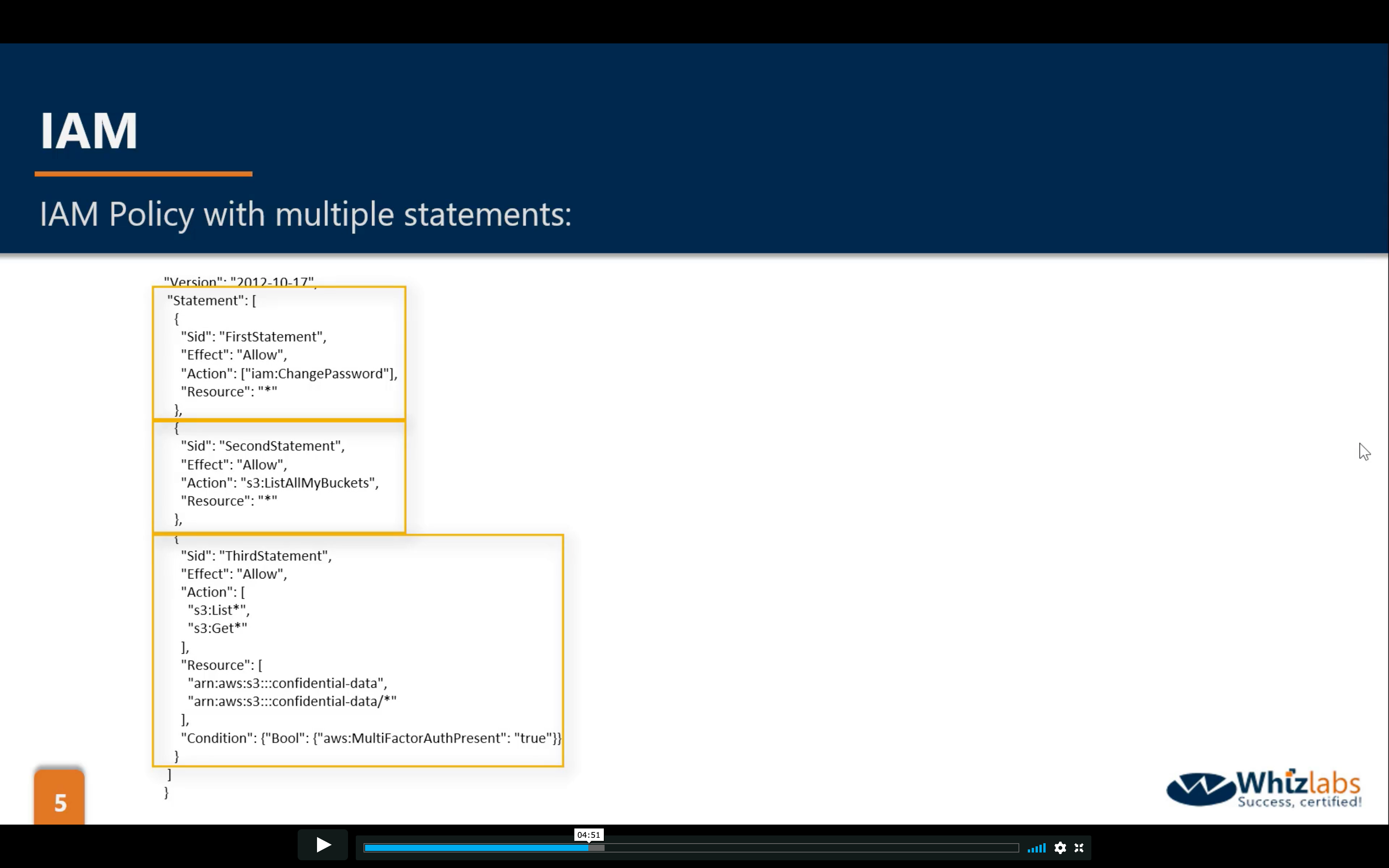

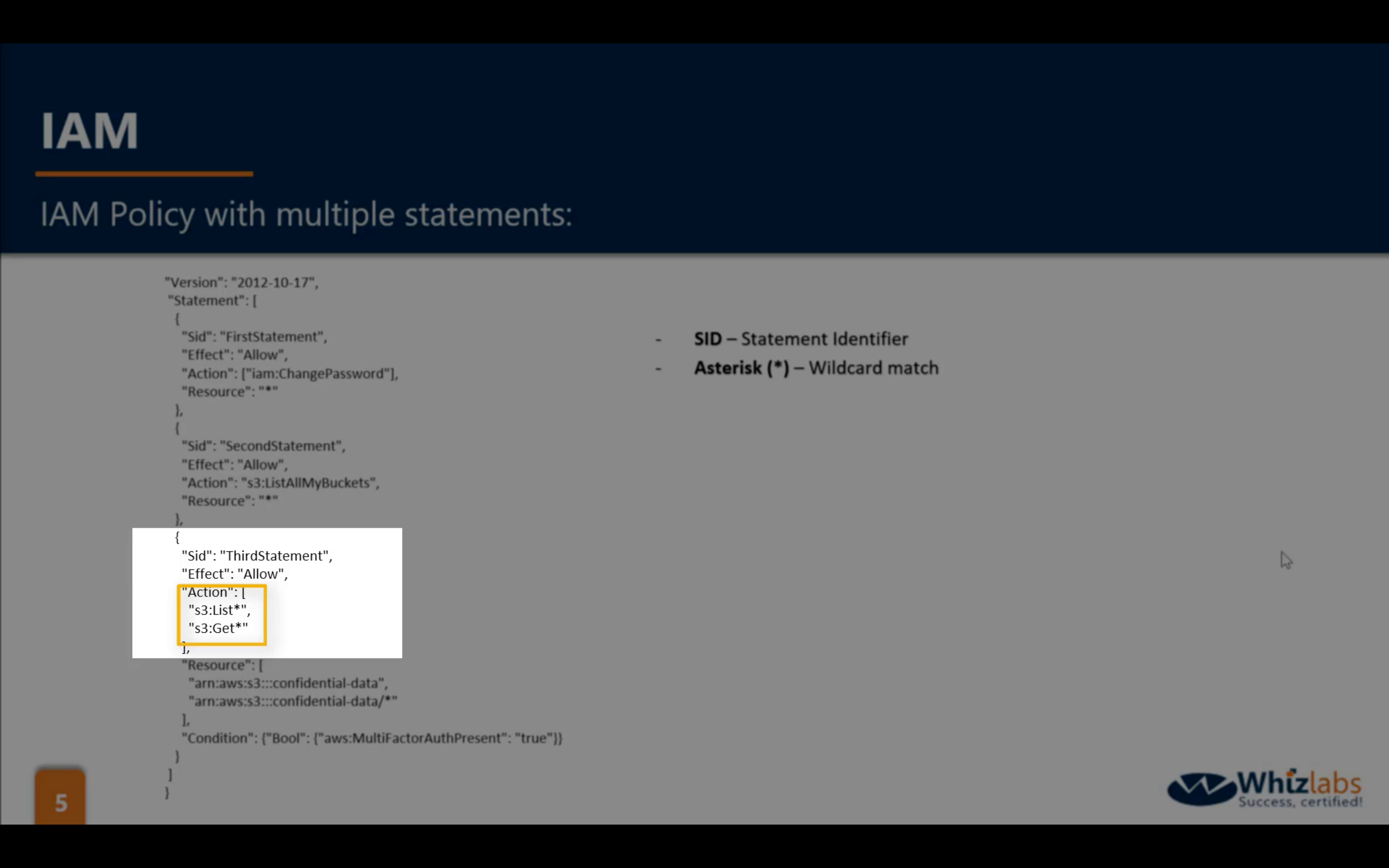

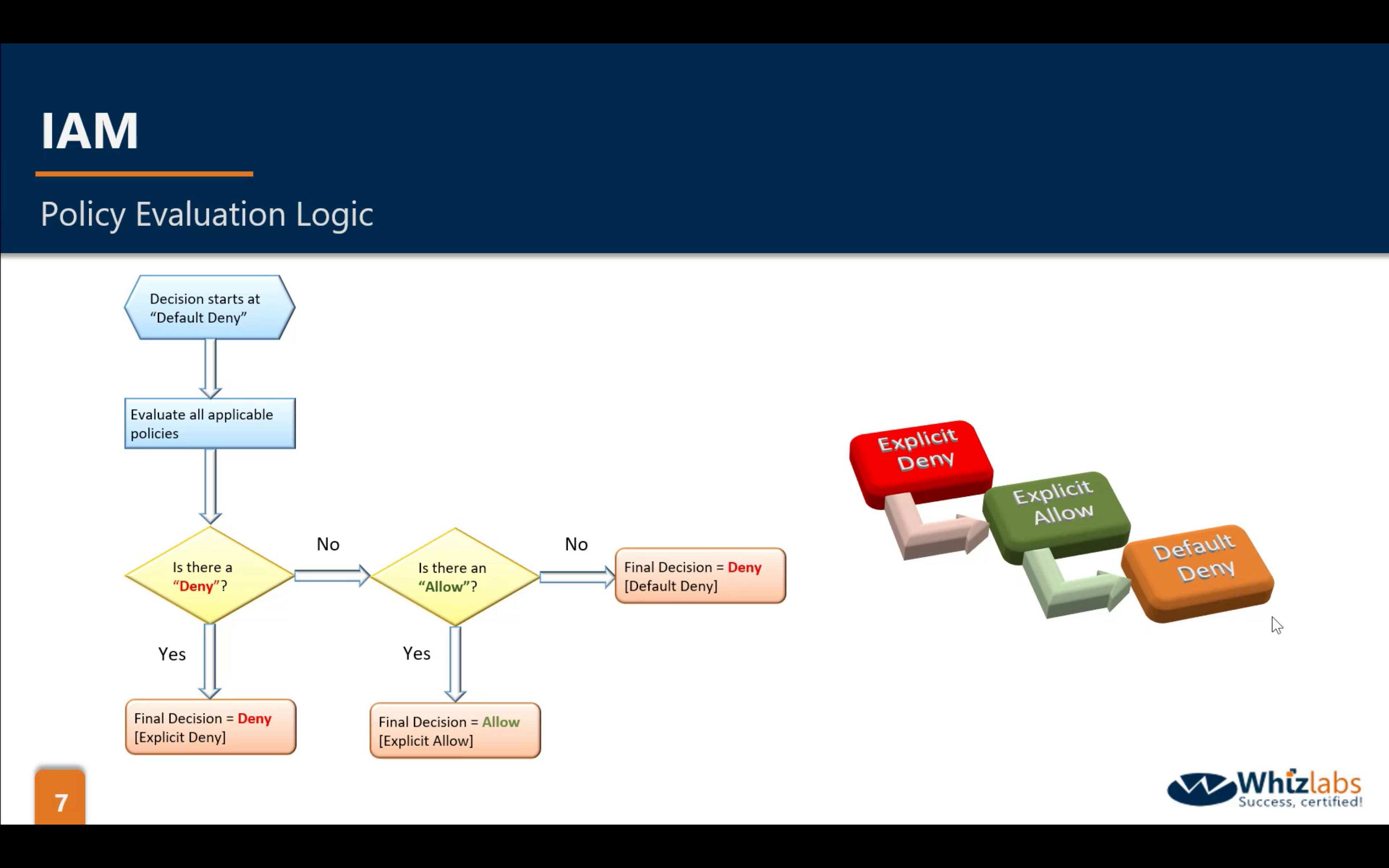

logical OR in IAM Policies

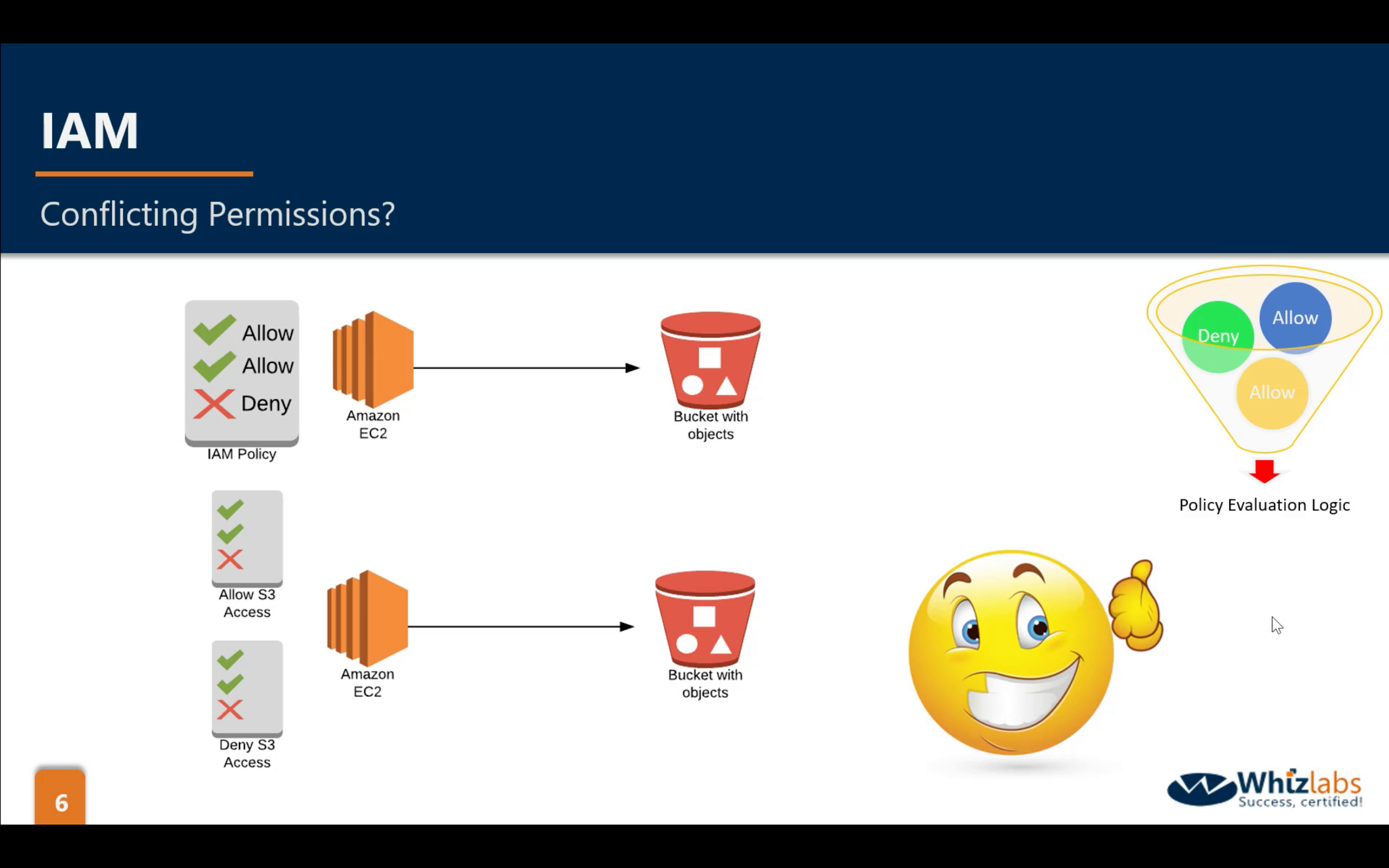

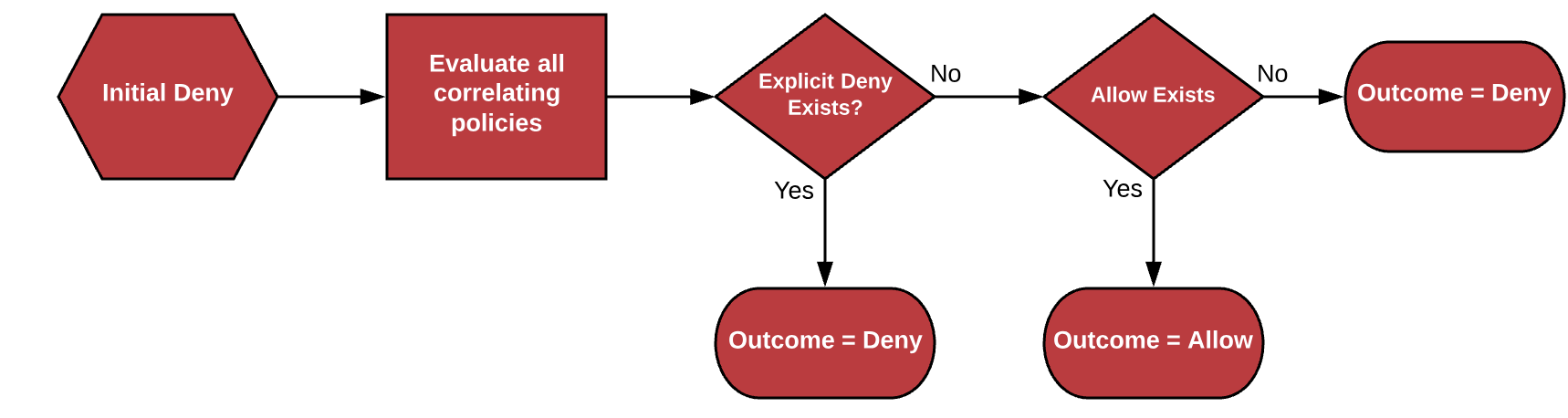

Policy Evaluation Logic

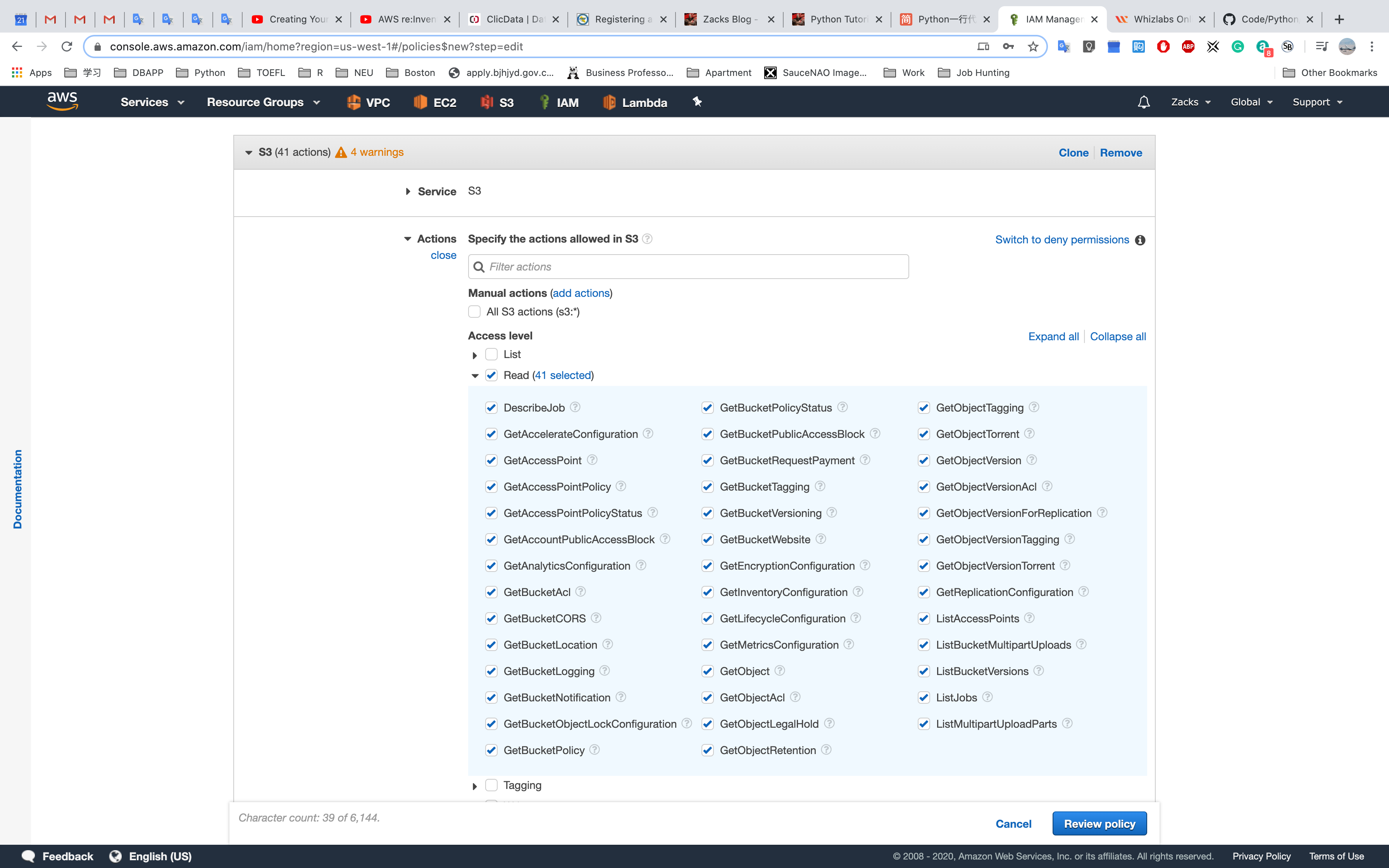

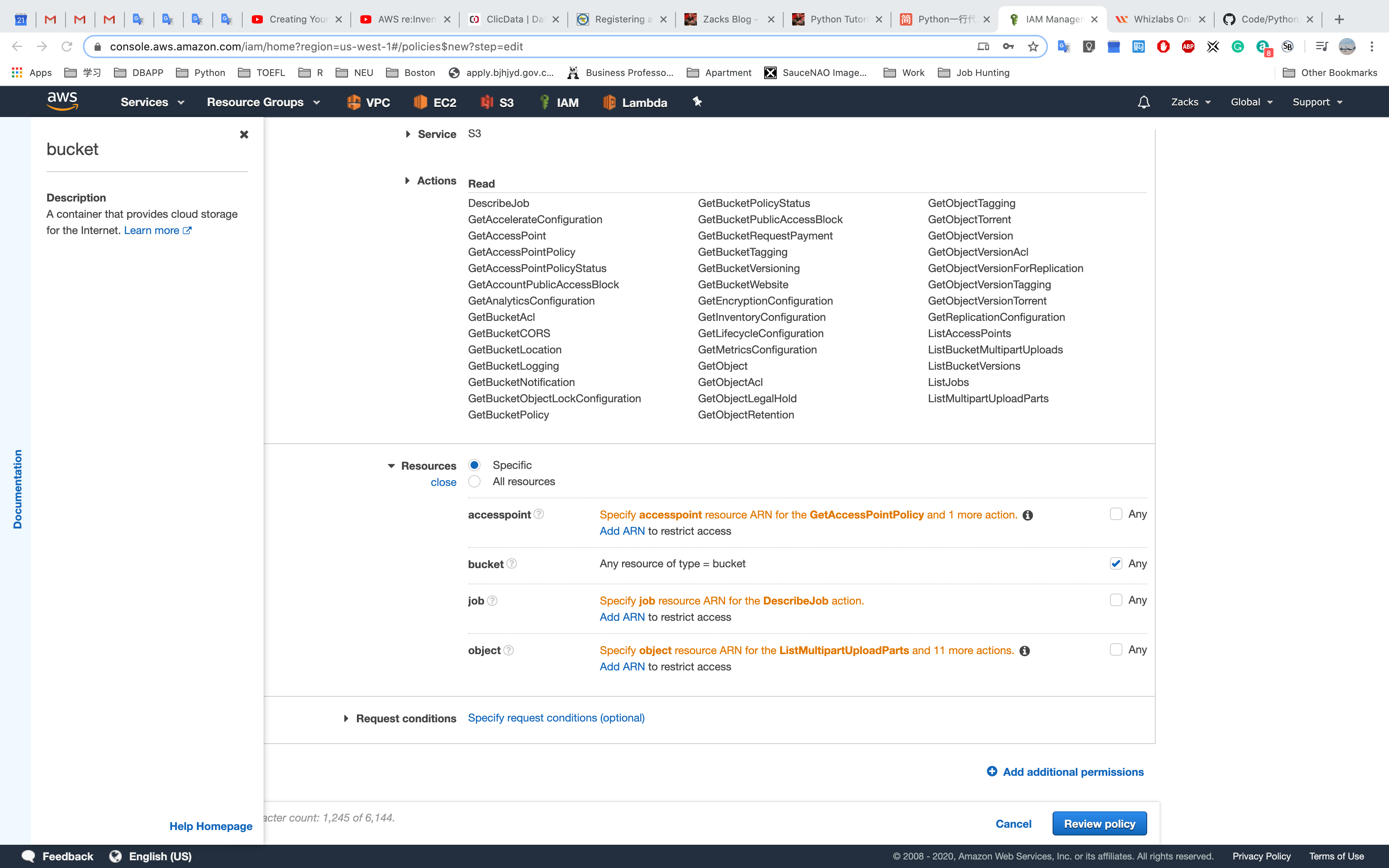

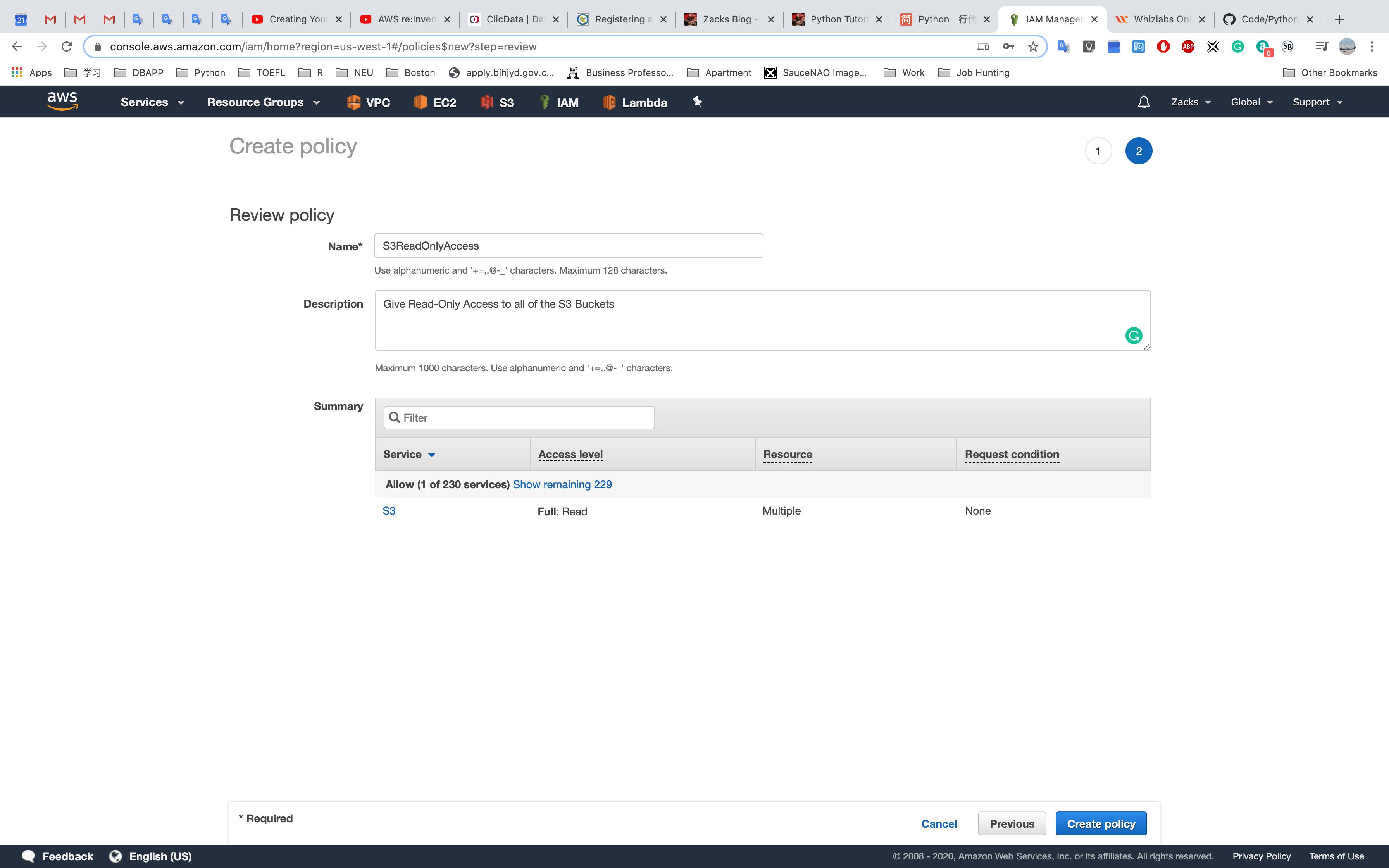

Create a policy from Visual editor

Read permission

Resources for the Actions

Select Any to apply the actions to all buckets

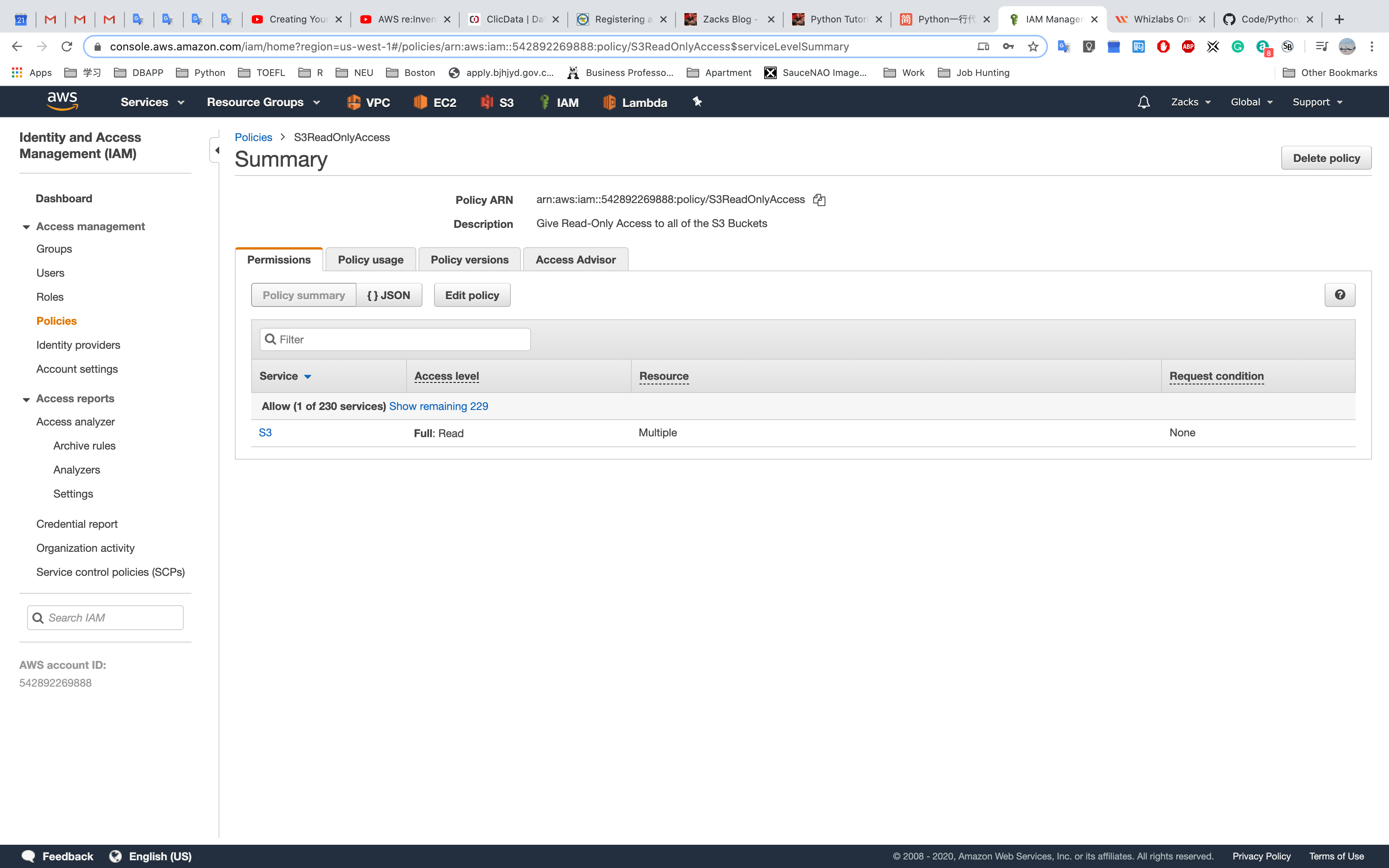

Click the name of a policy

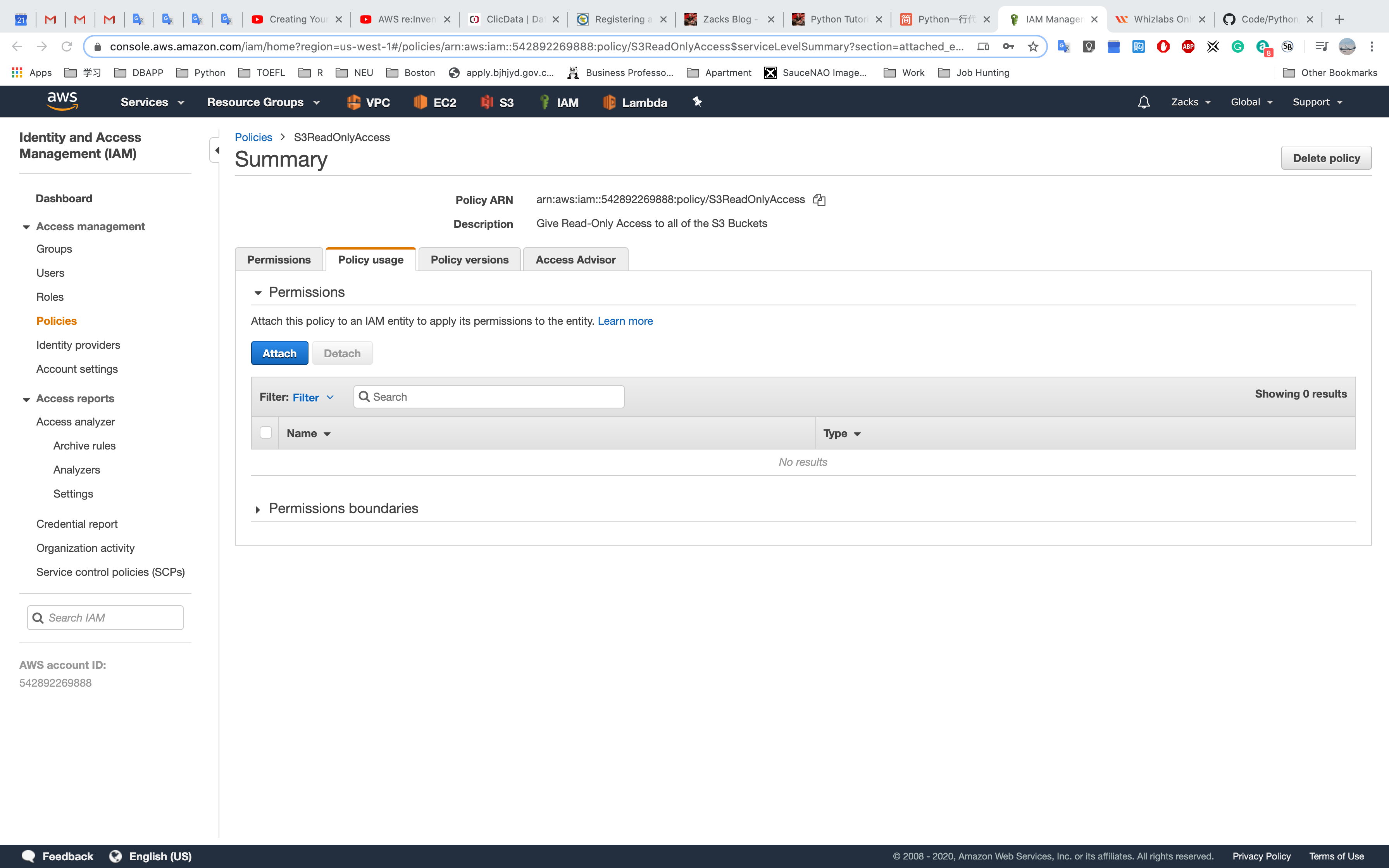

Click Policy usage to attach the policy to users, groups, or entities.

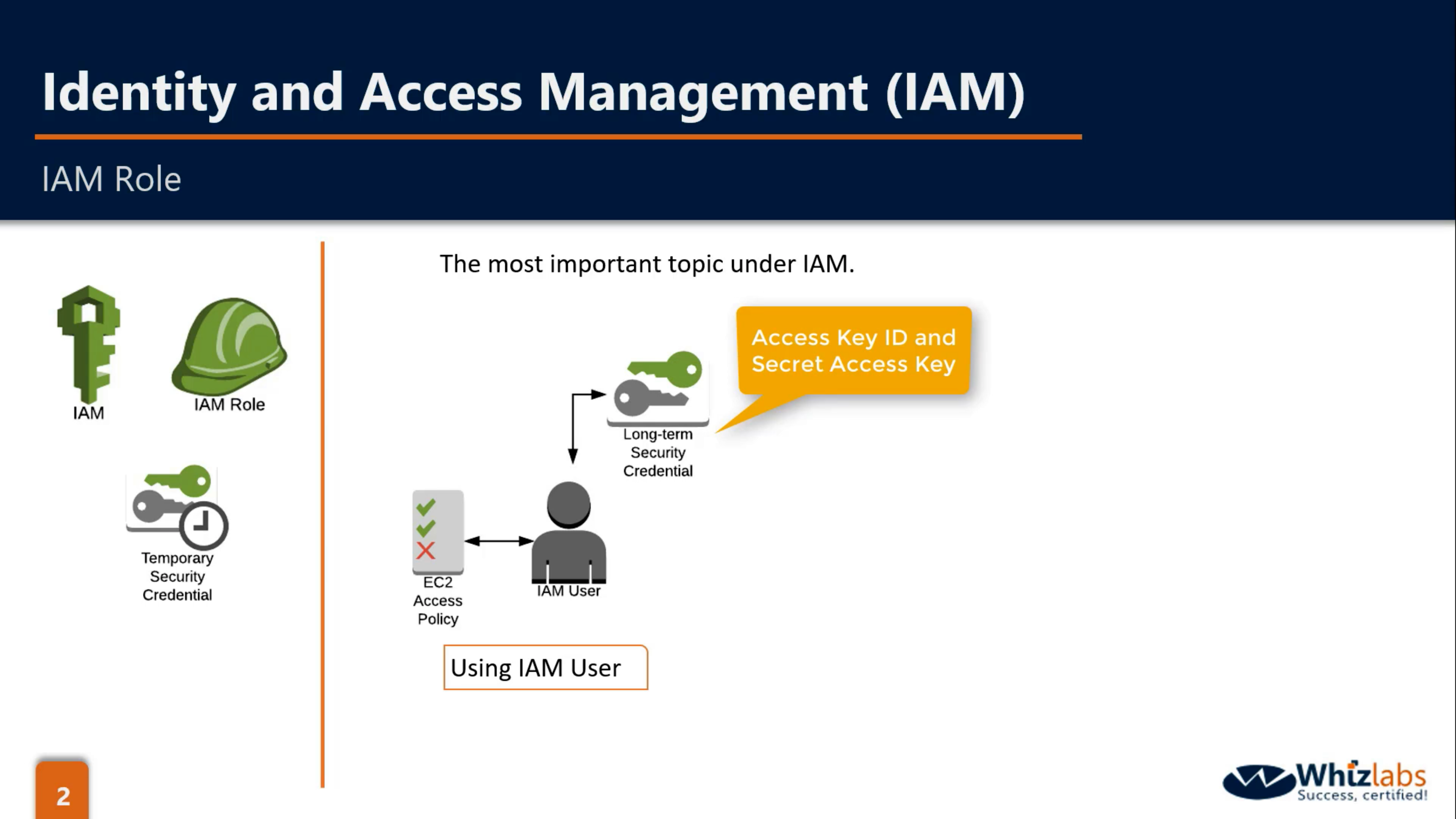

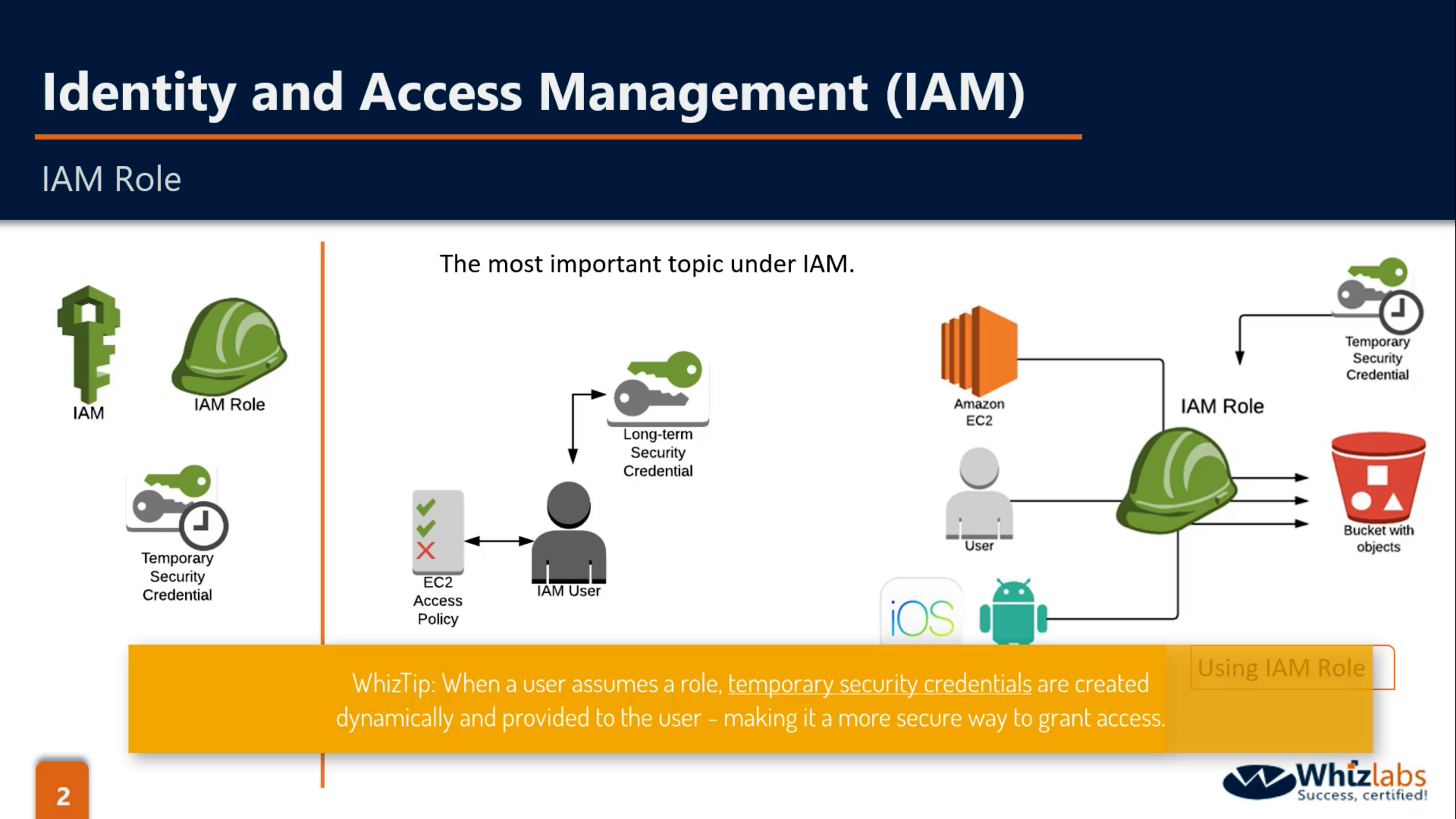

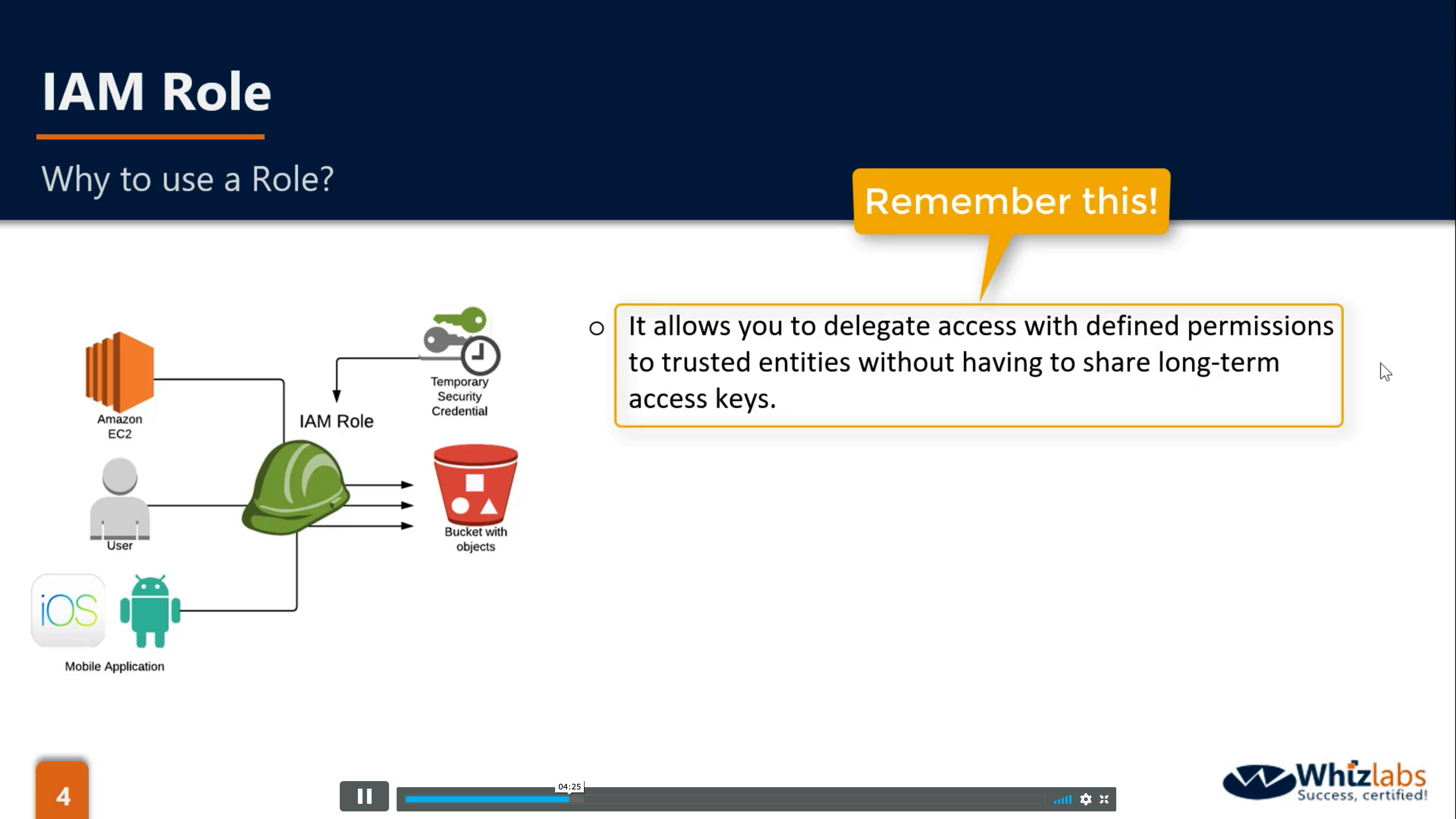

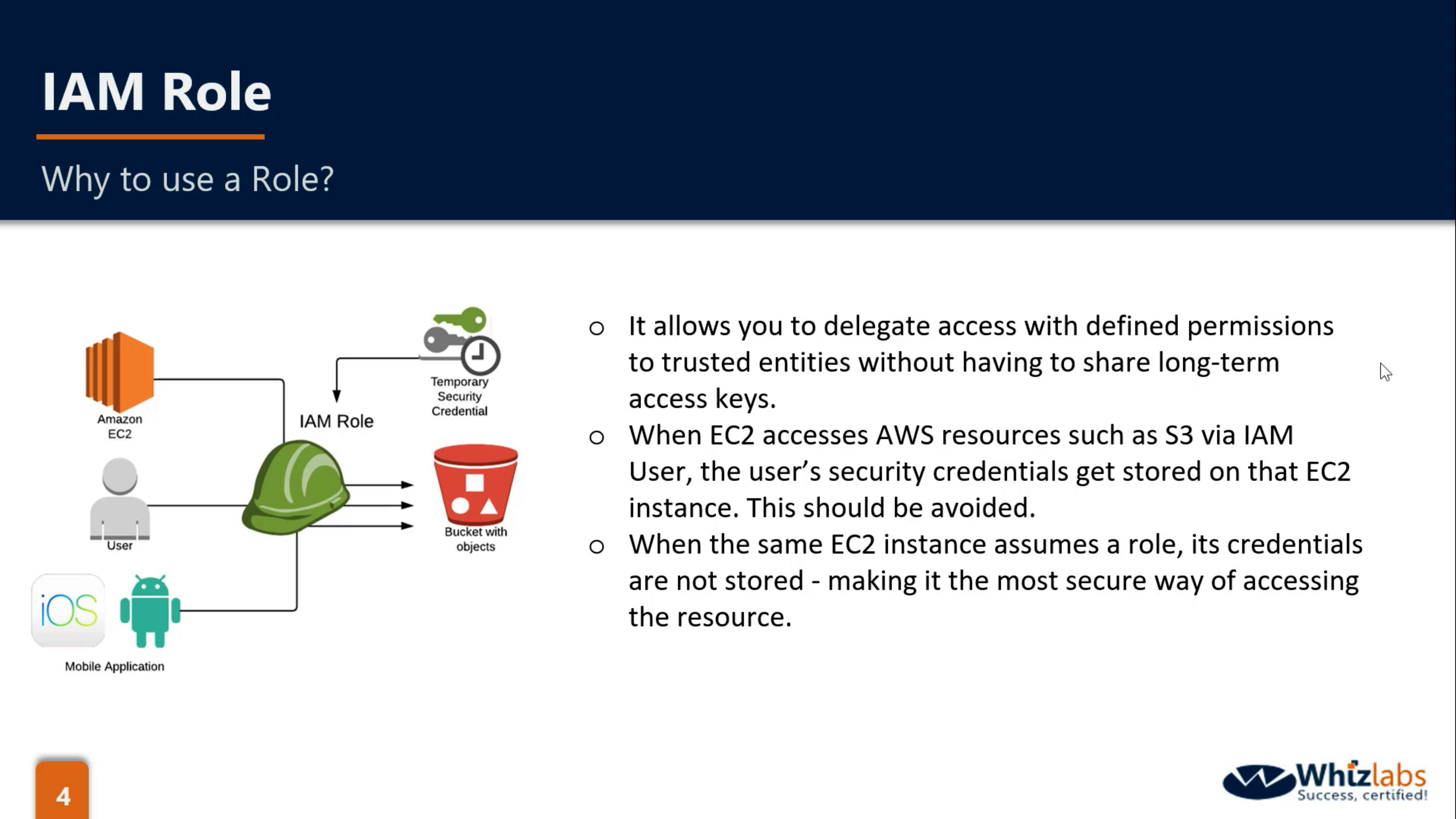

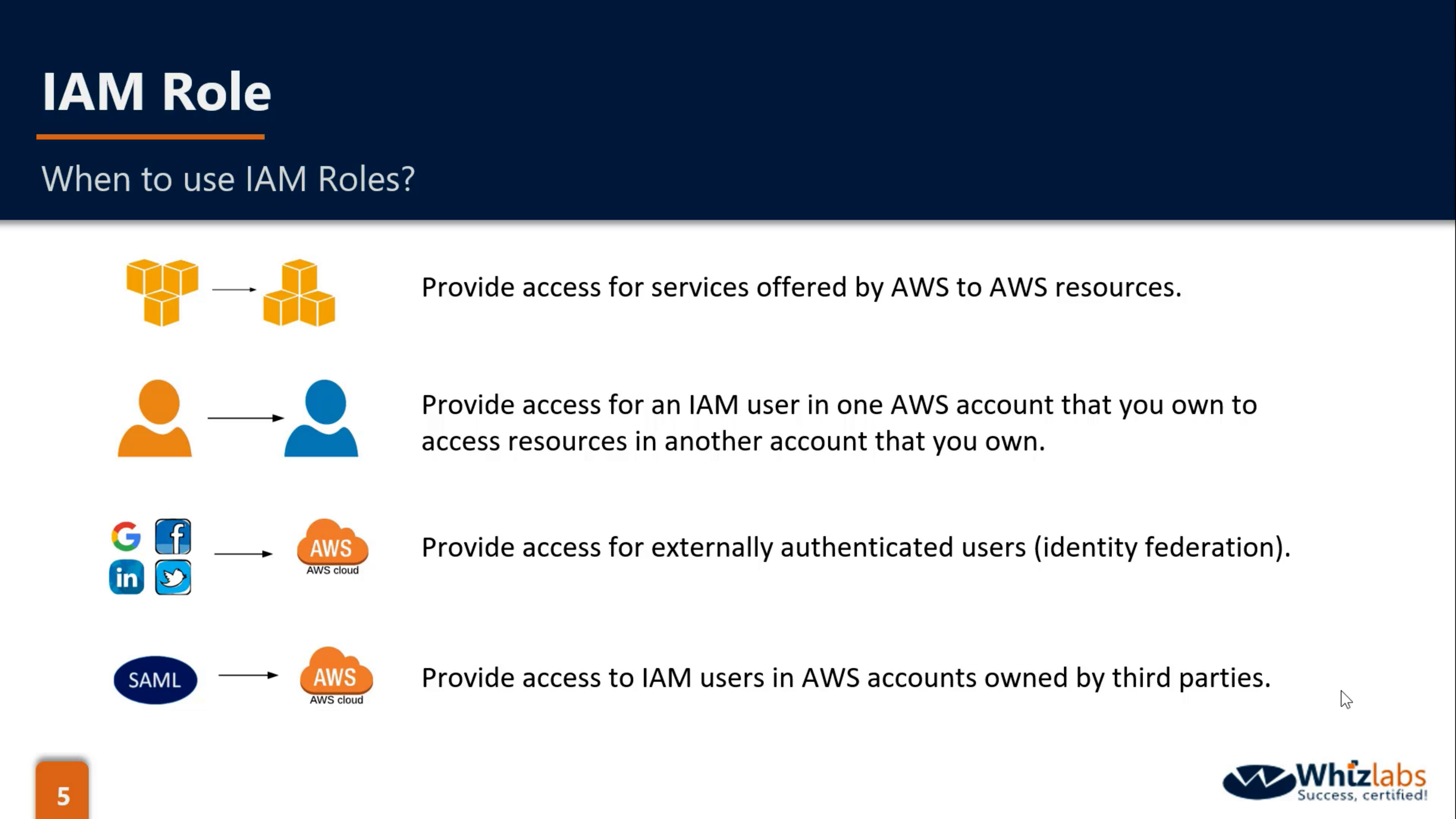

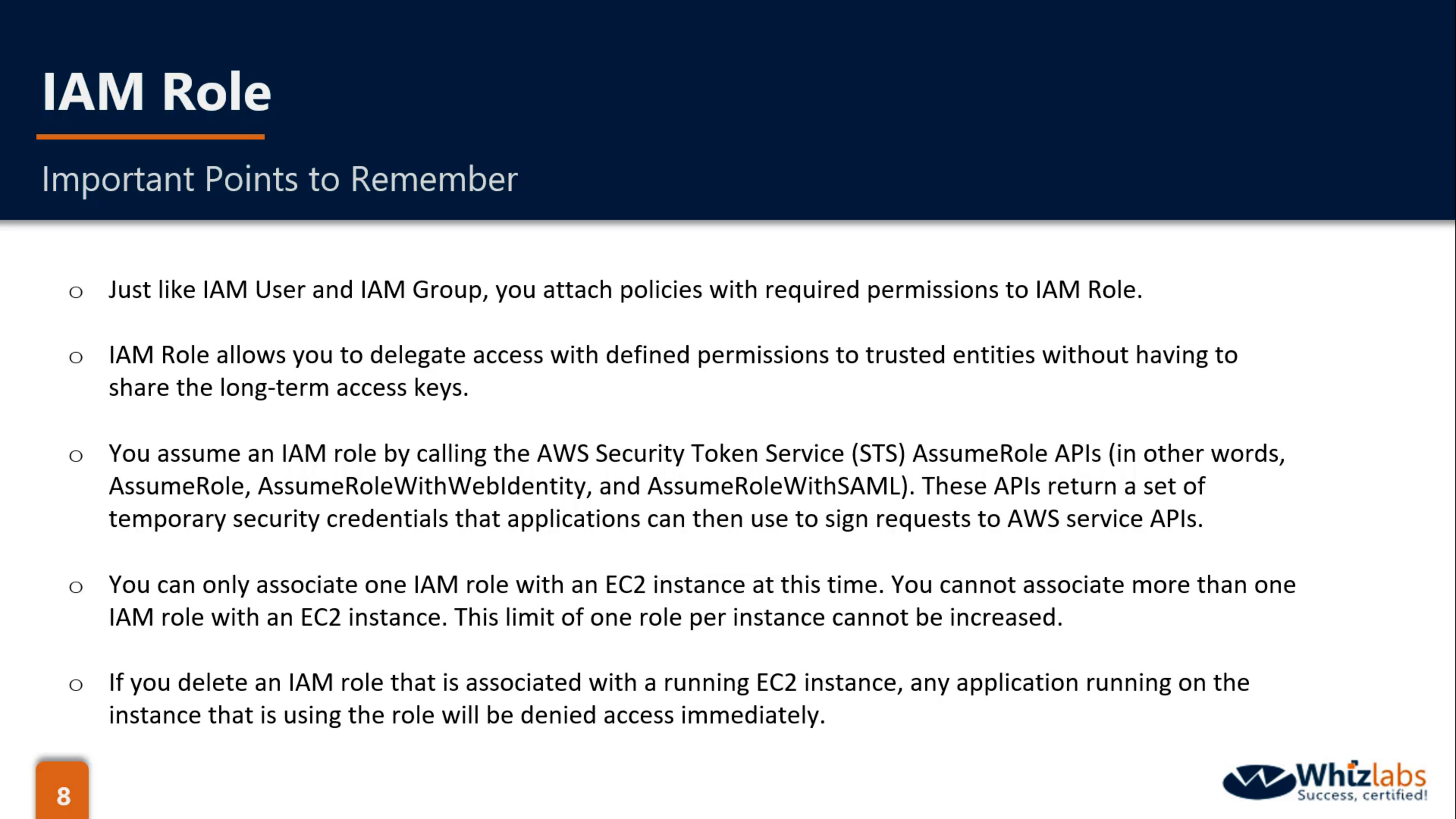

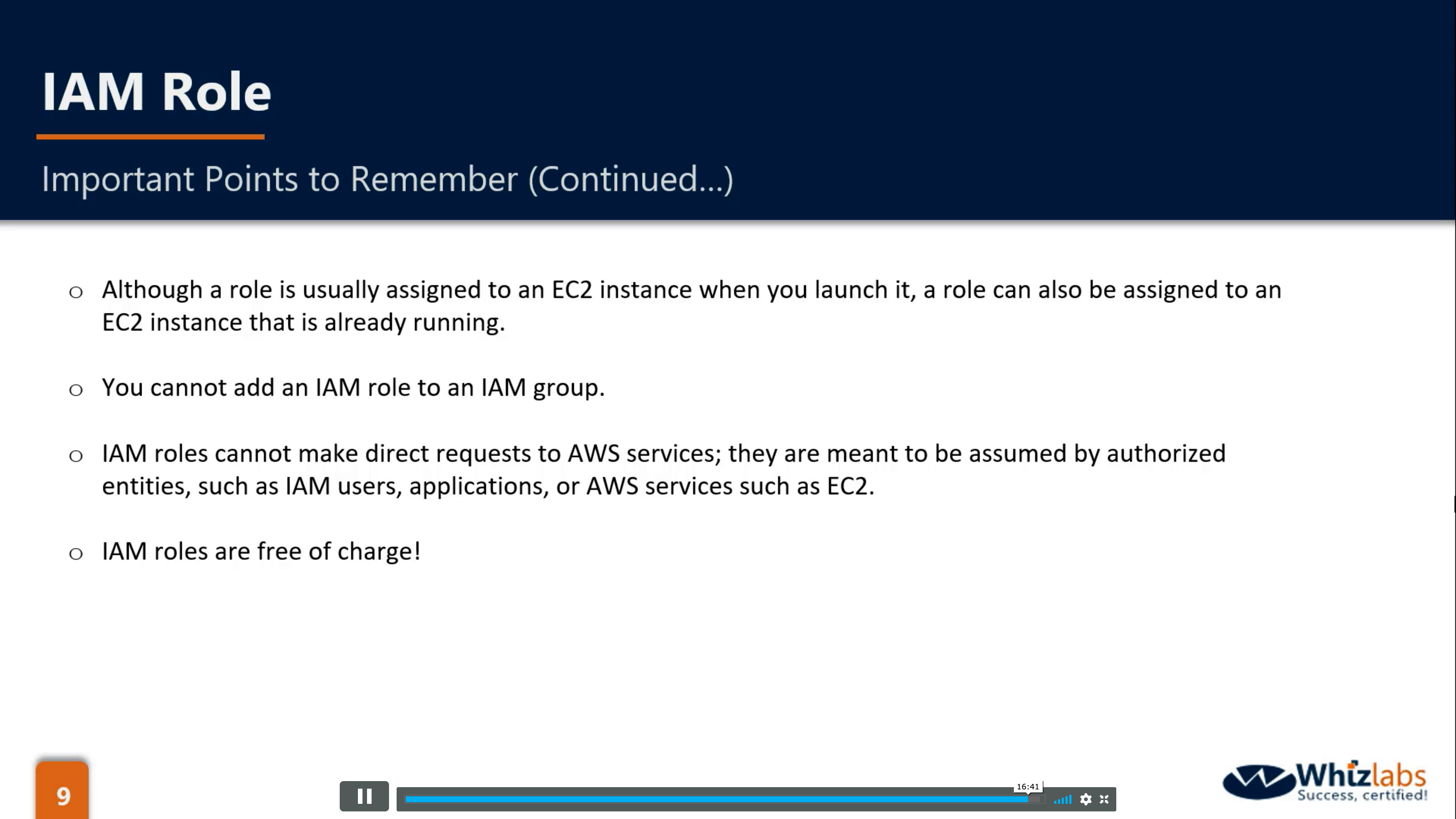

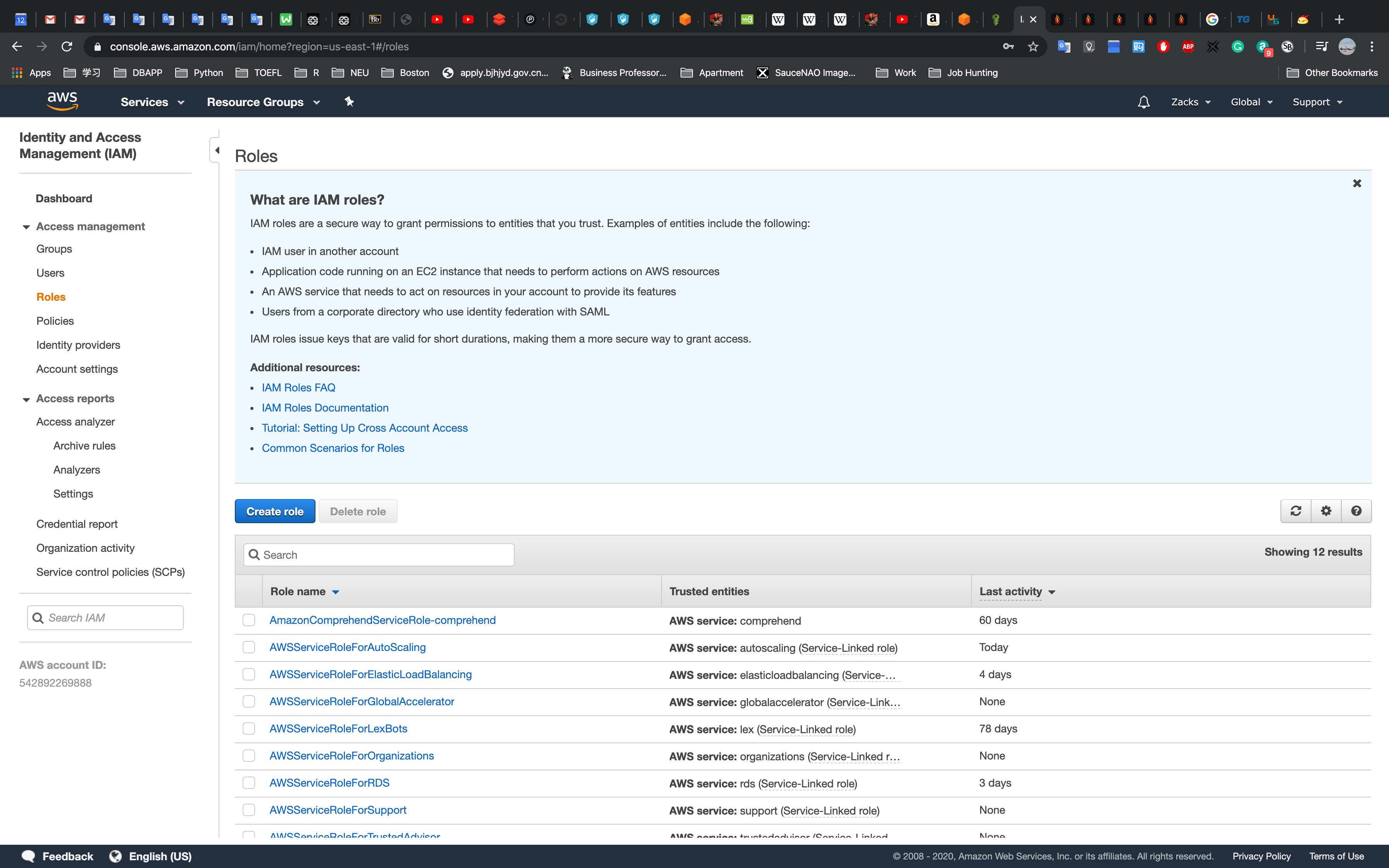

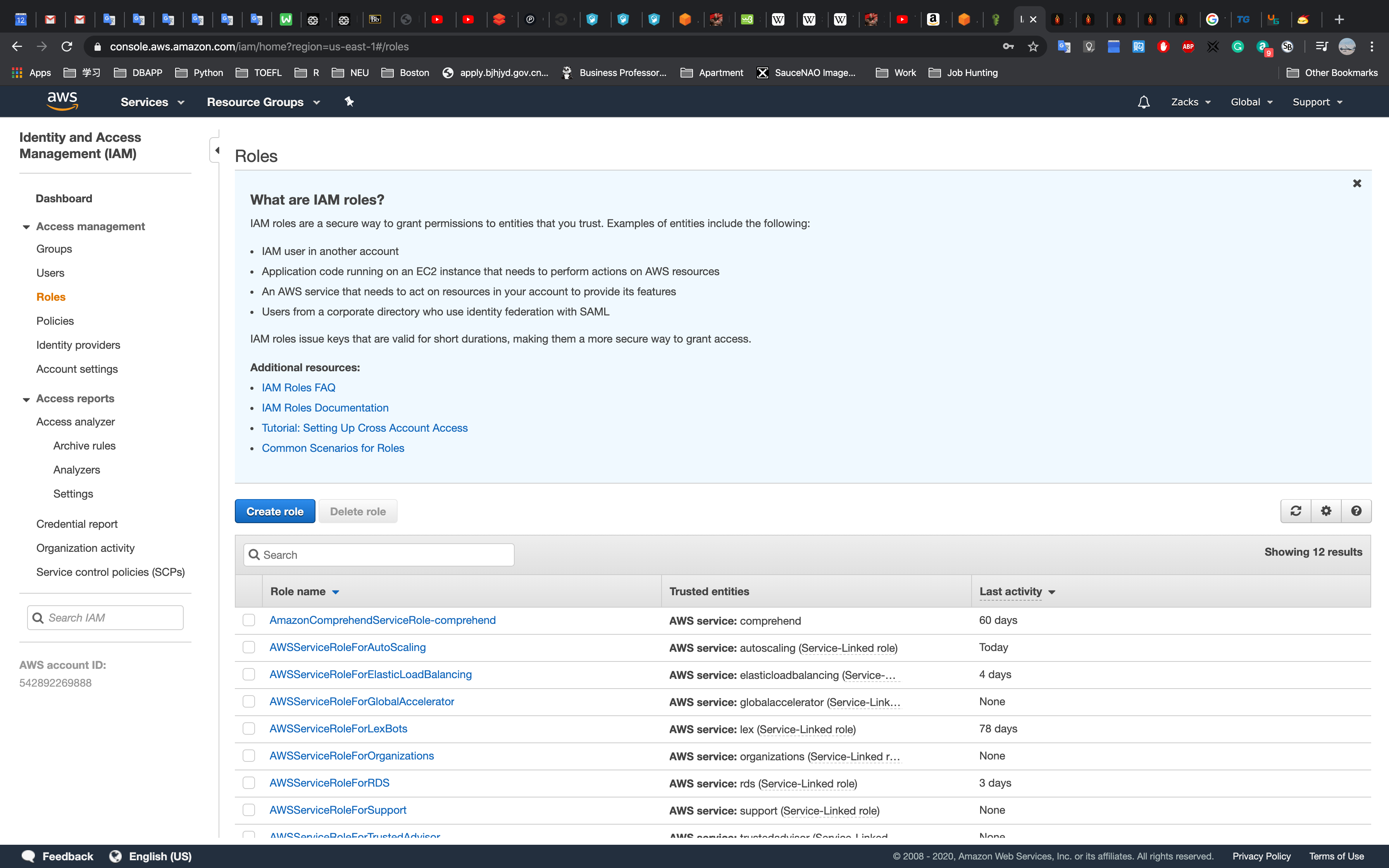

IAM Roles

What are IAM roles?

IAM roles are a secure way to grant permissions to entities that you trust. Examples of entities include the following:

IAM user in another account

Application code running on an EC2 instance that needs to perform actions on AWS resources

An AWS service that needs to act on resources in your account to provide its features

Users from a corporate directory who use identity federation with SAML

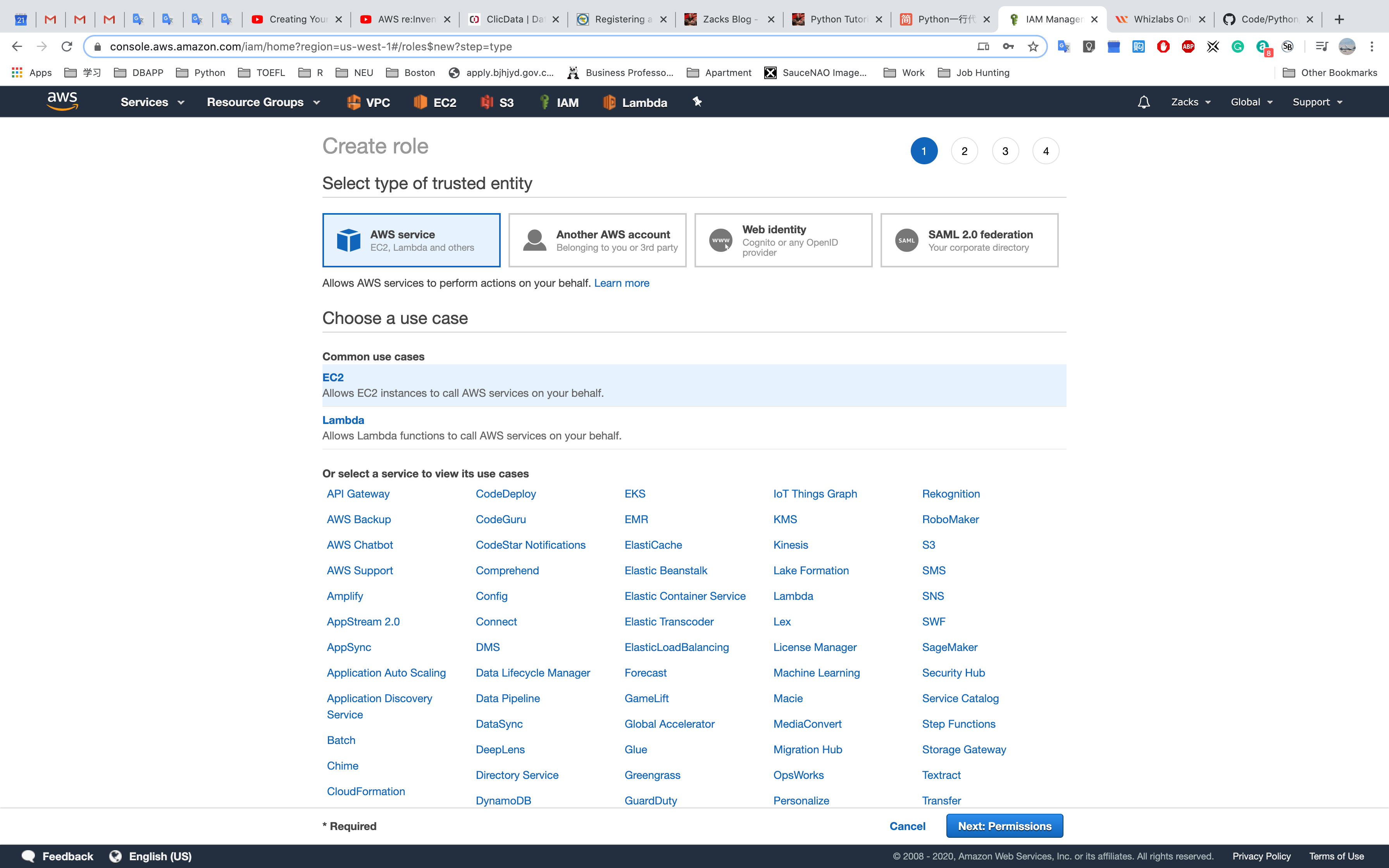

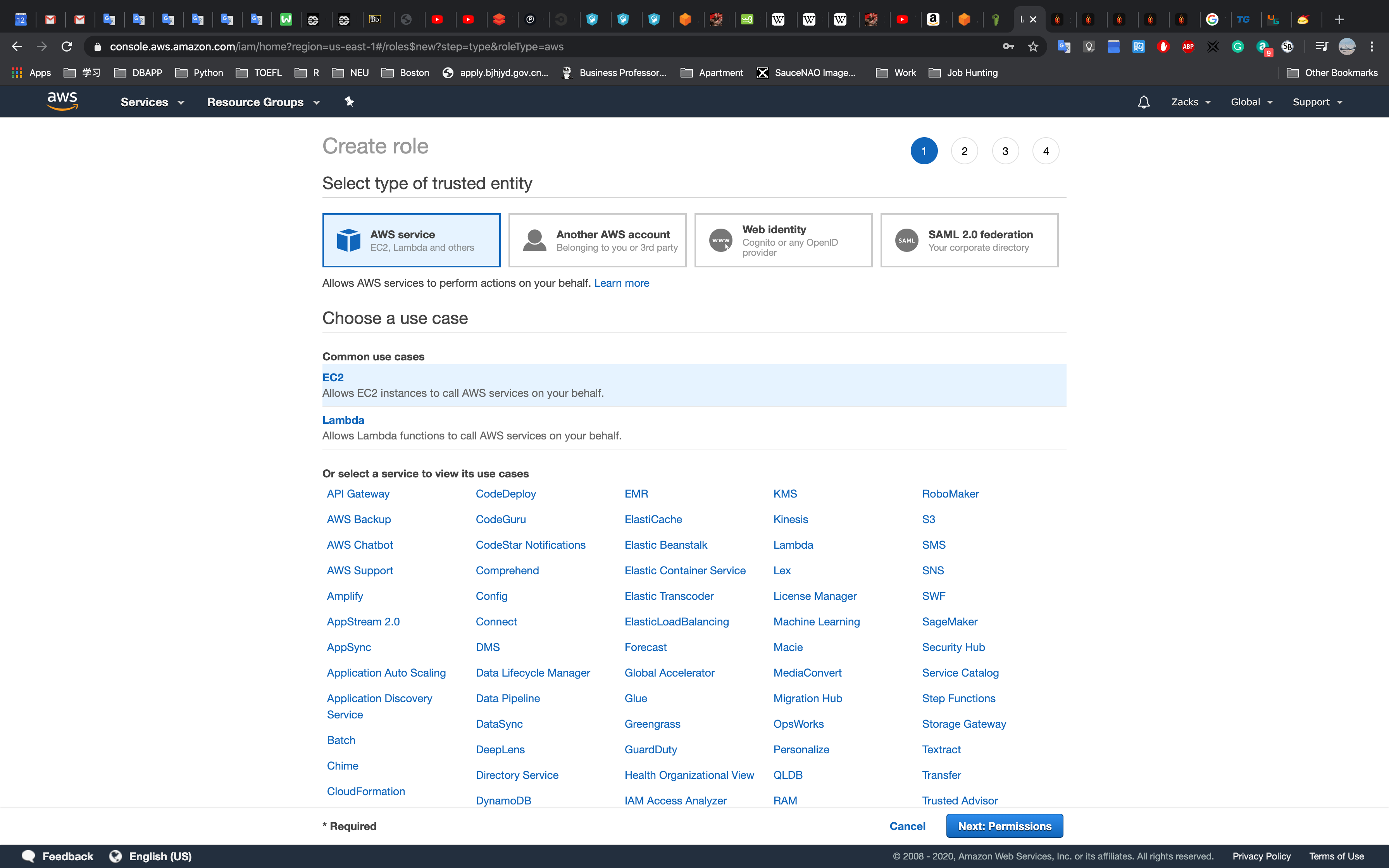

Create a role for EC2

Select EC2 and Click Next Permissions

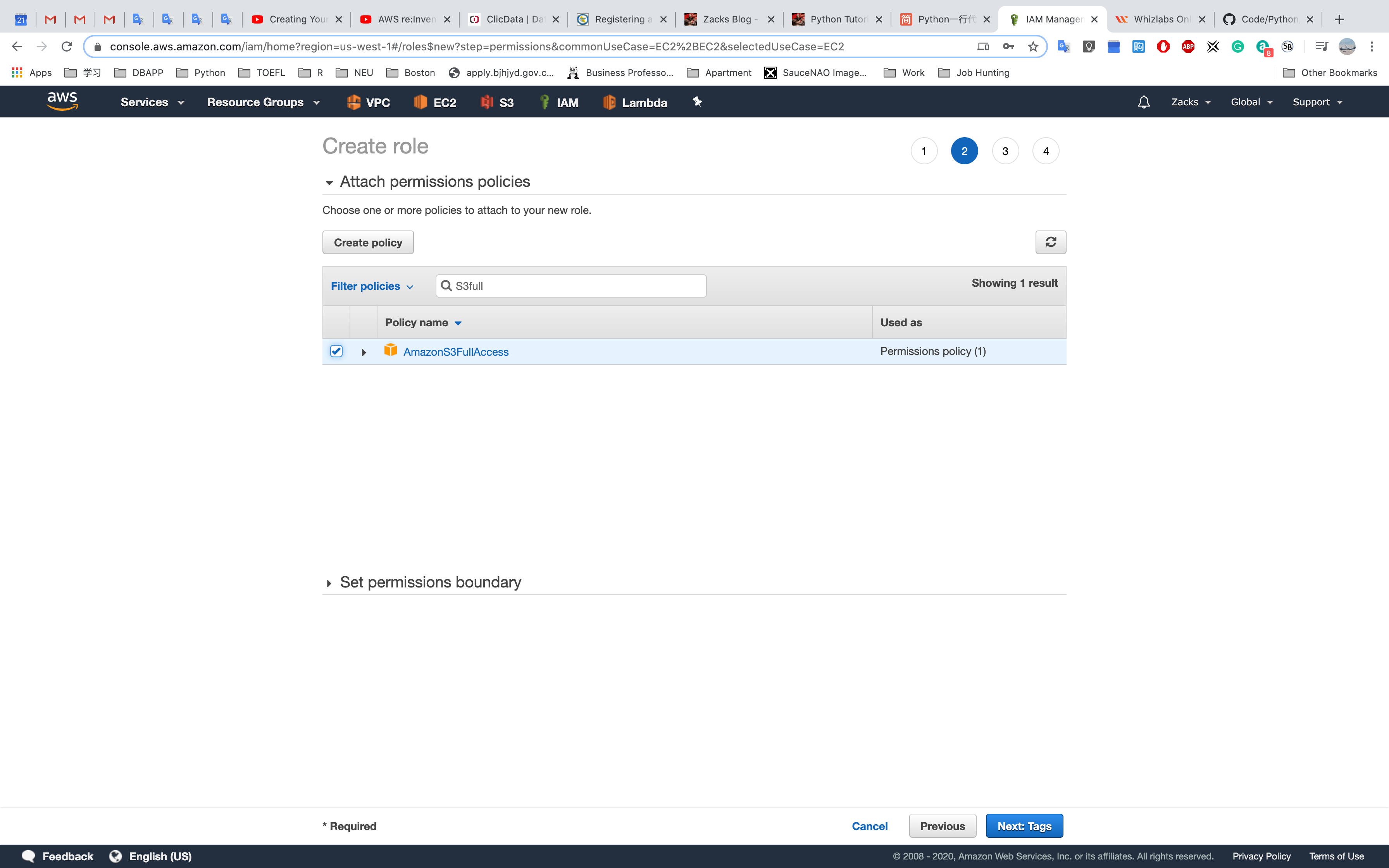

Select a policy

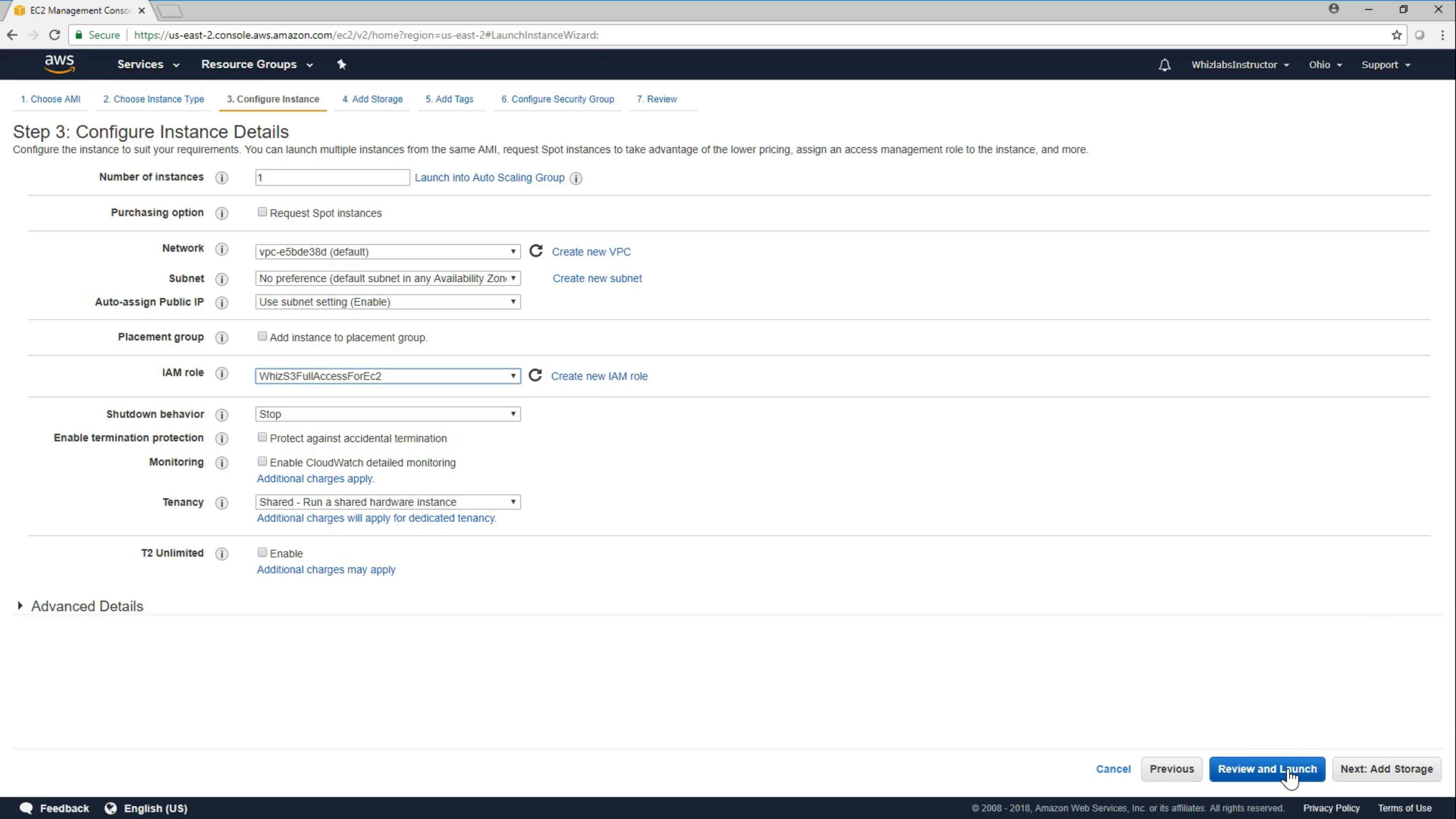

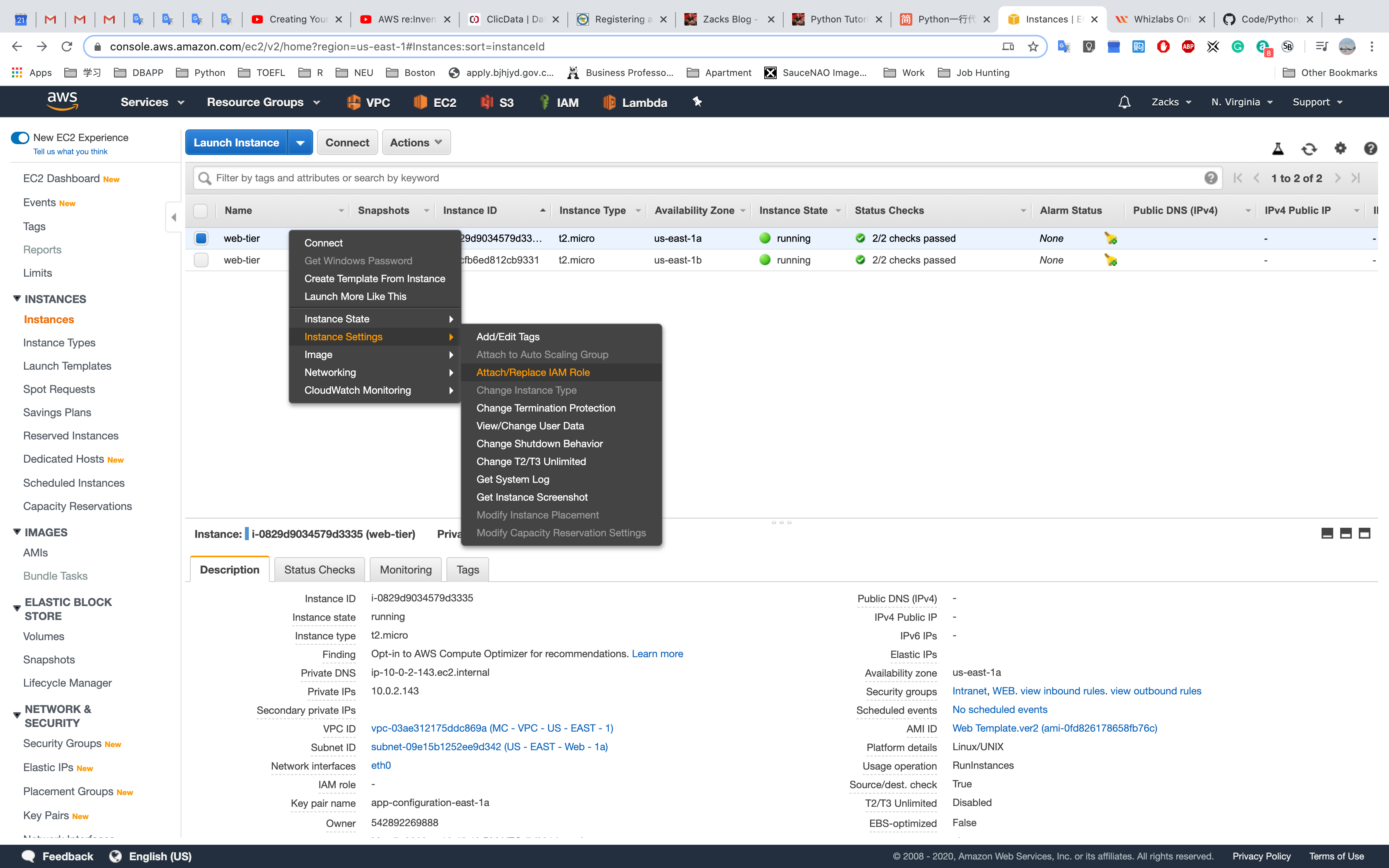

Attach a role to an EC2 instance when you create it

OR attach a role to an EC2 instance while it is running

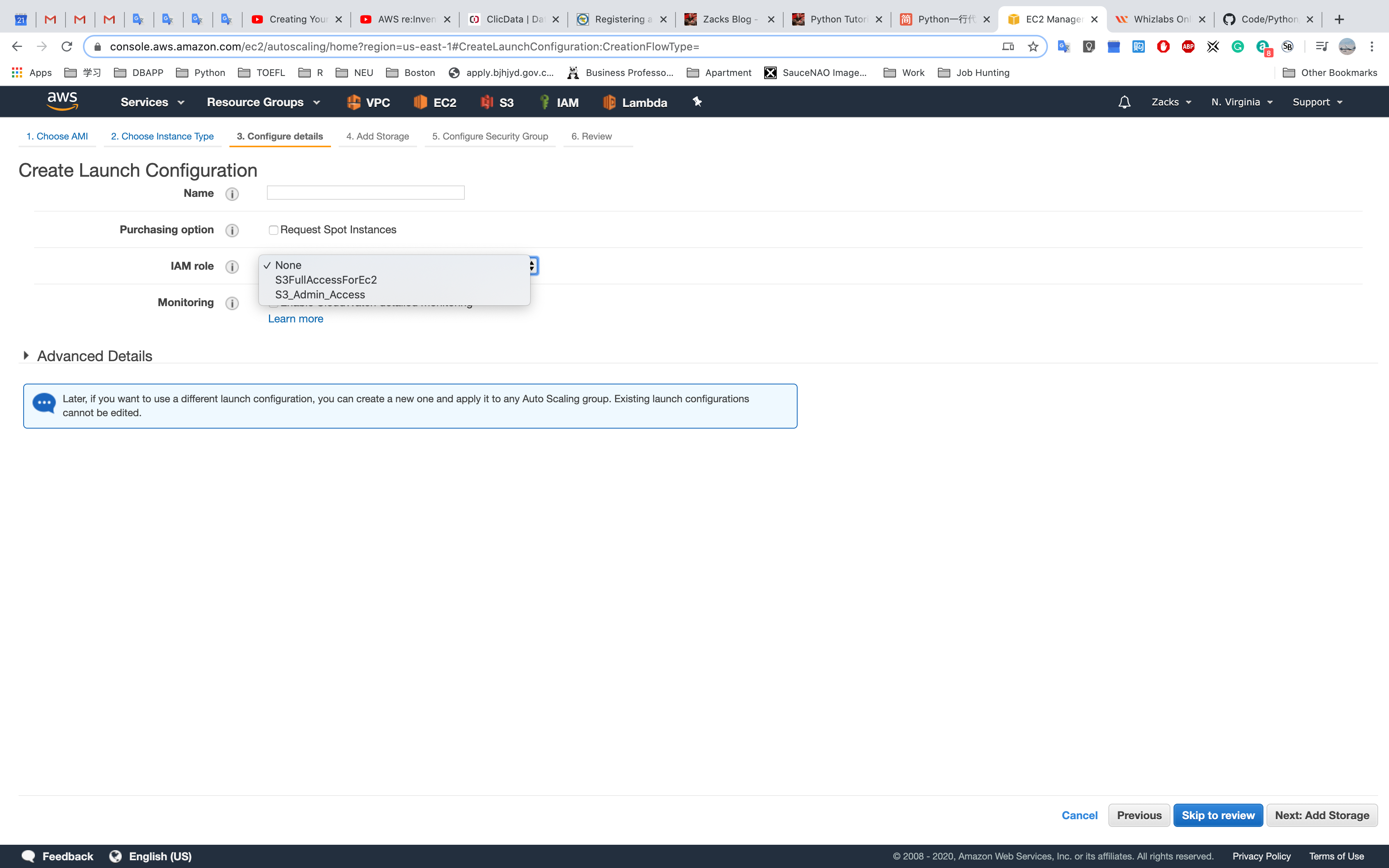

OR attach a role in an EC2 Auto Scaling launch configuration

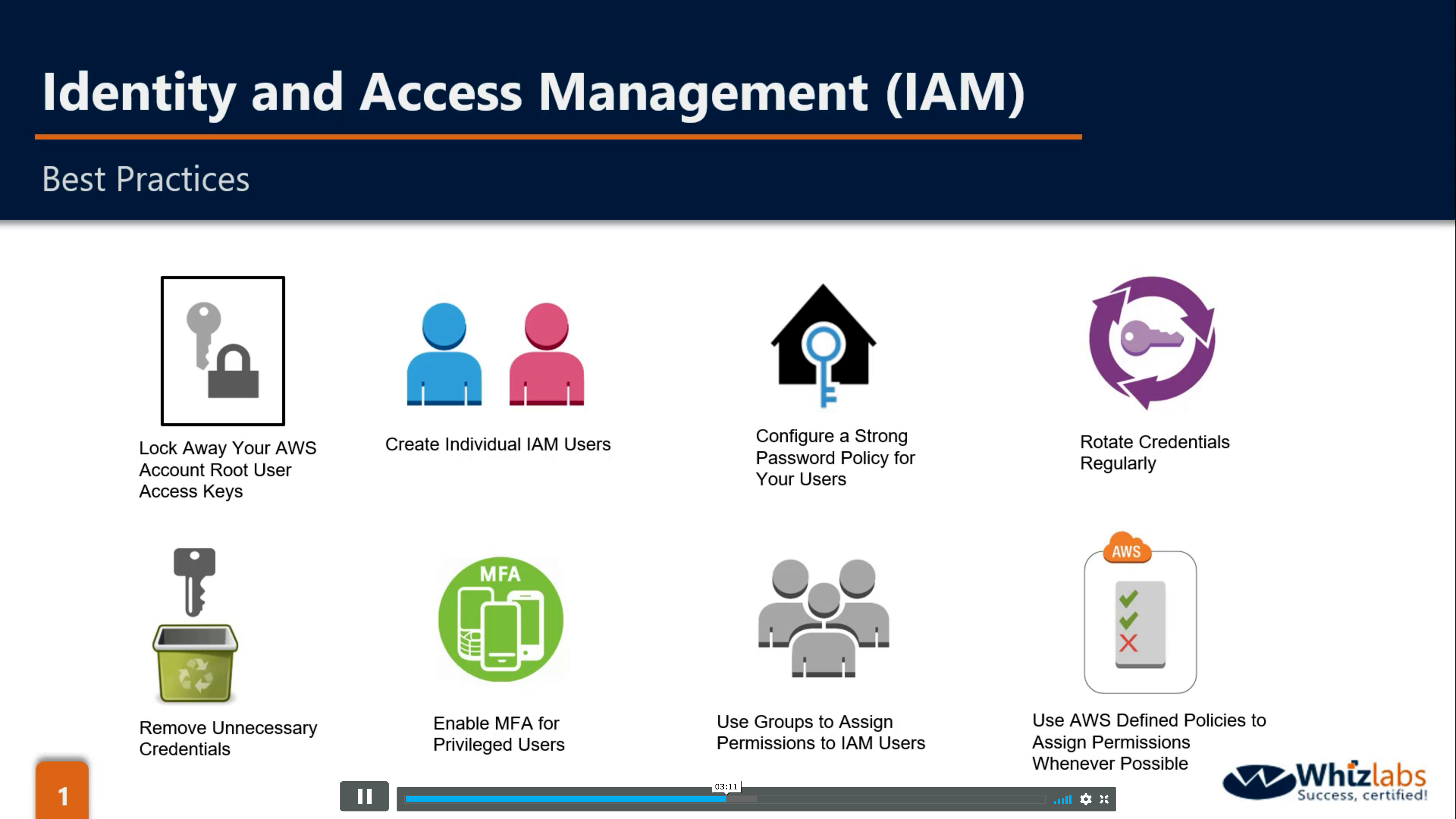

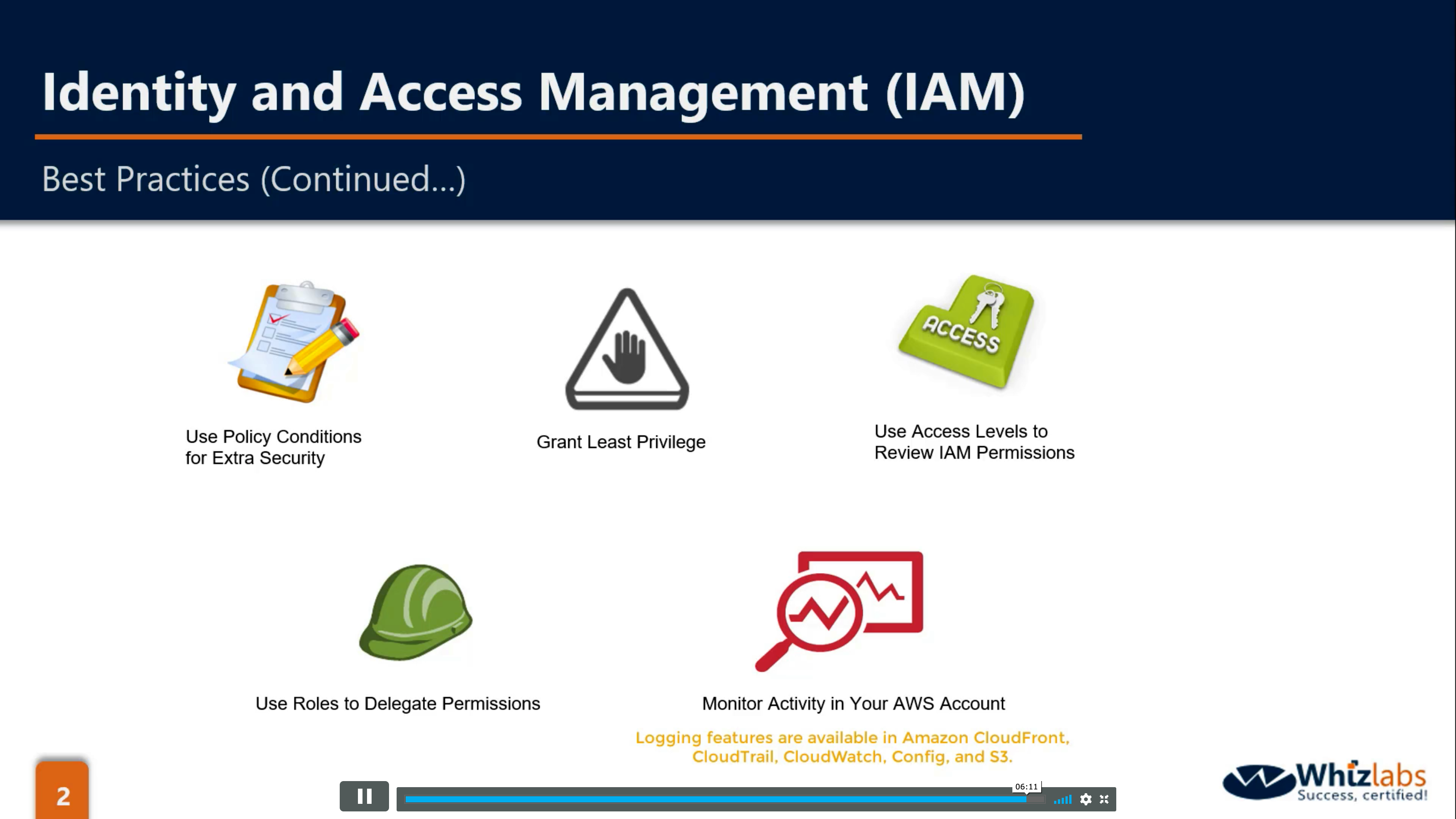

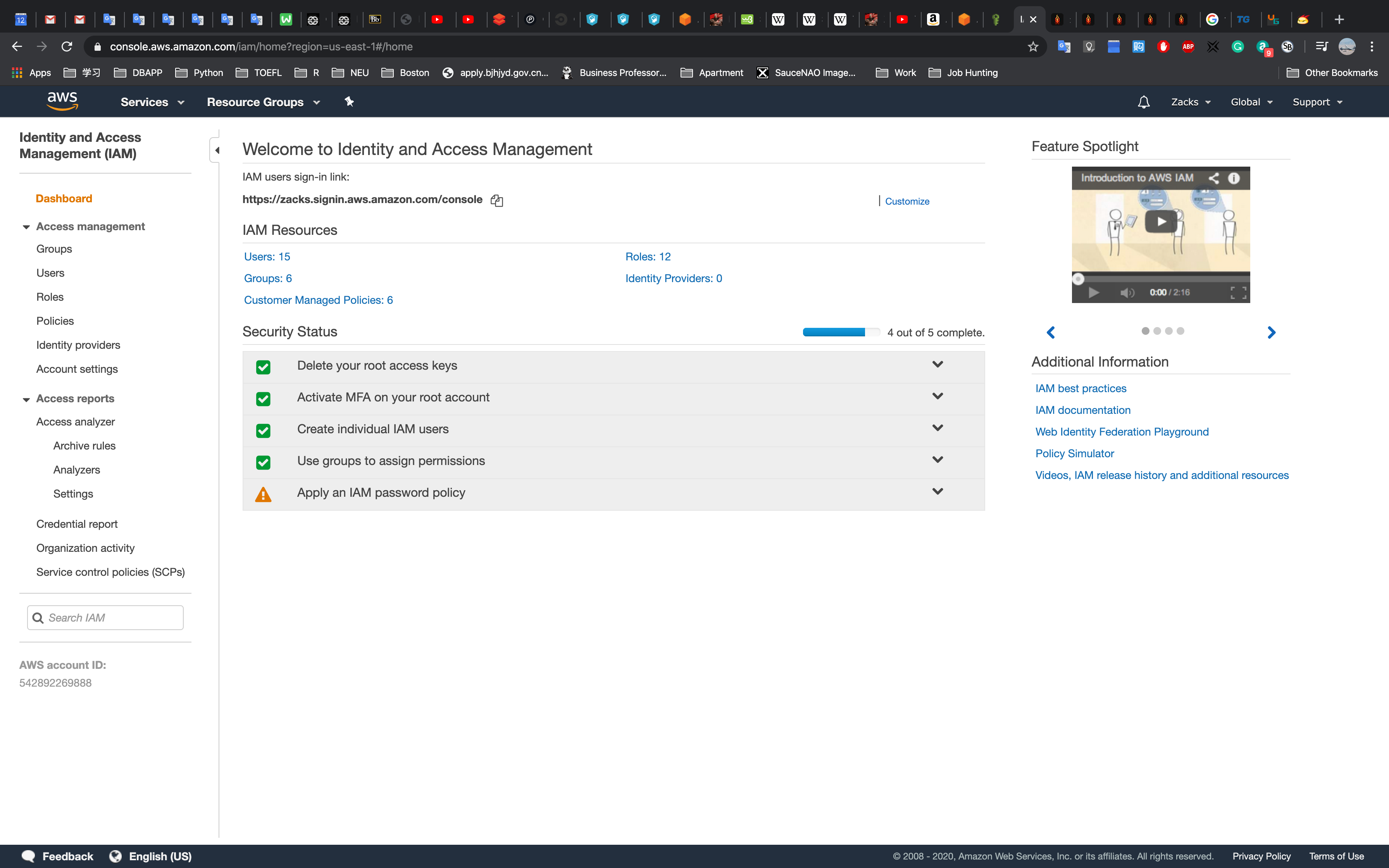

Best Practices

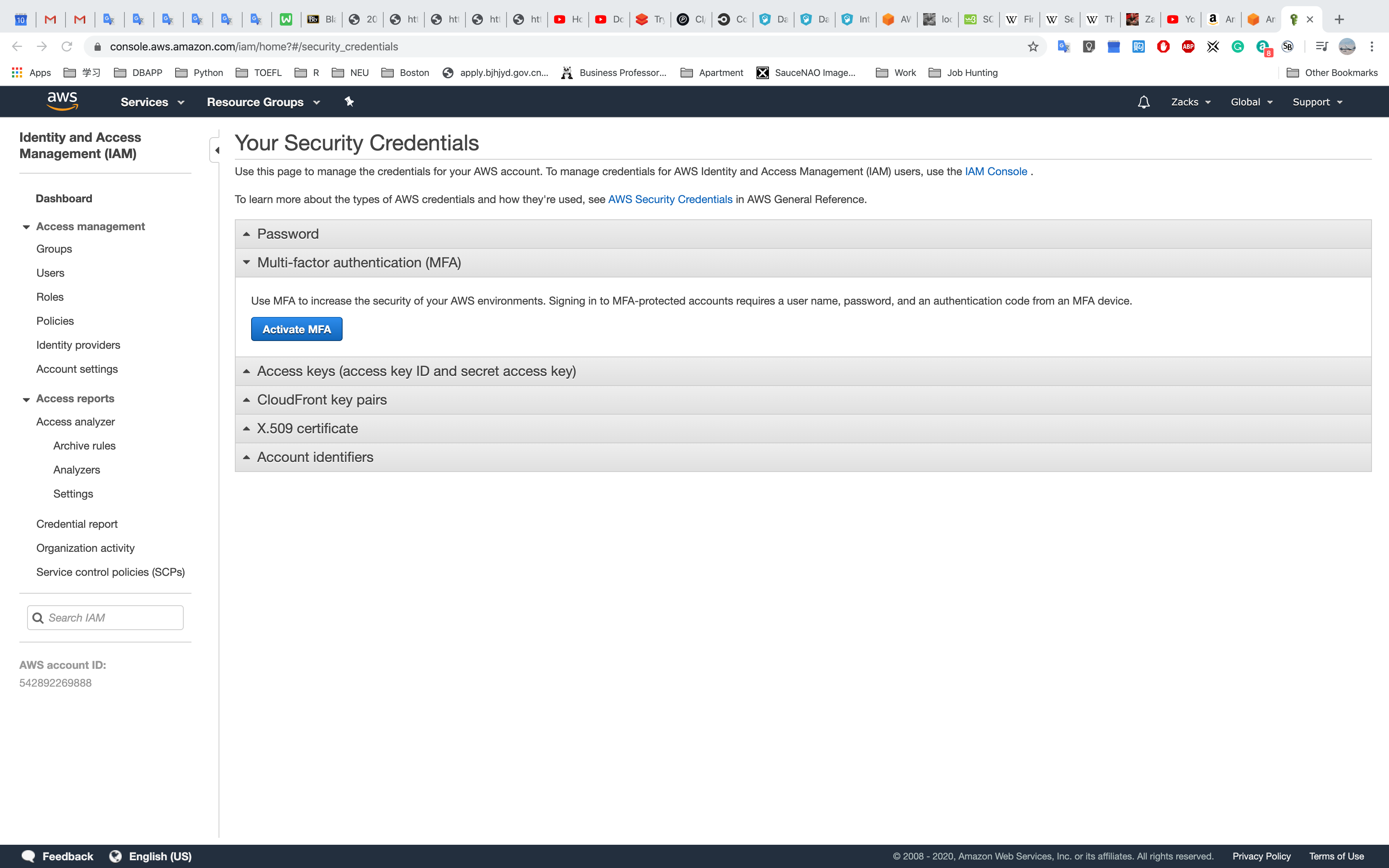

MFA

AWS Management Console

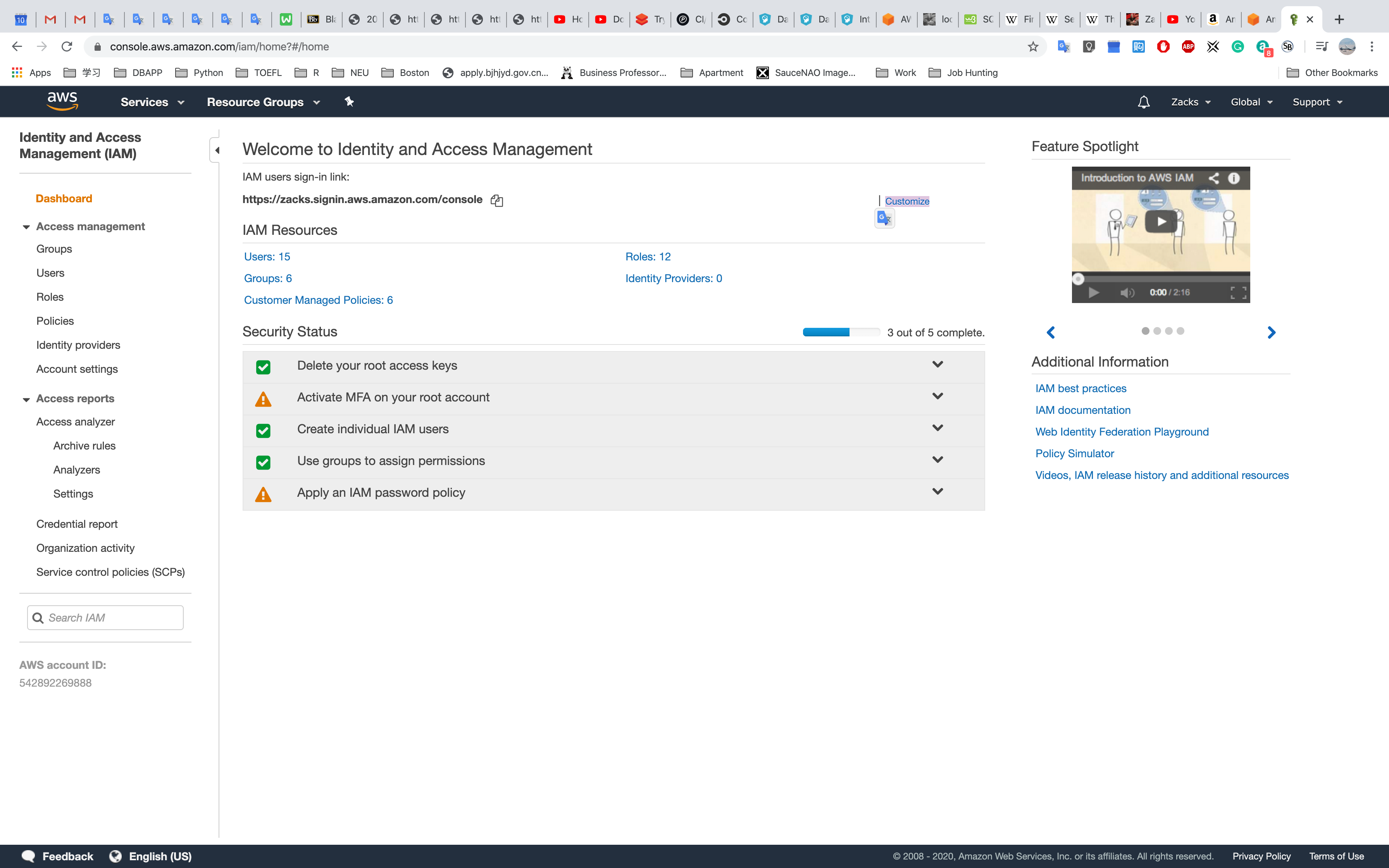

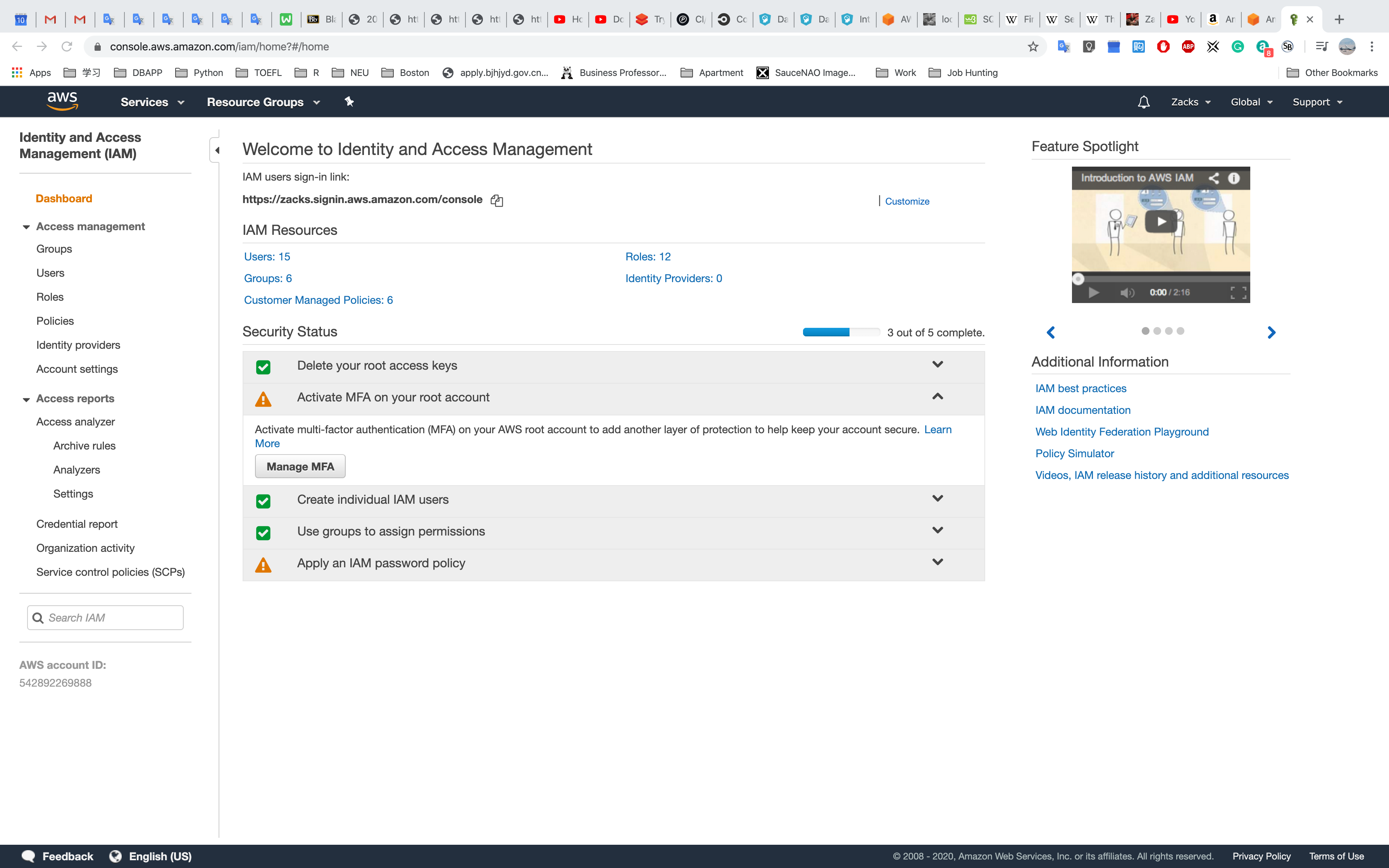

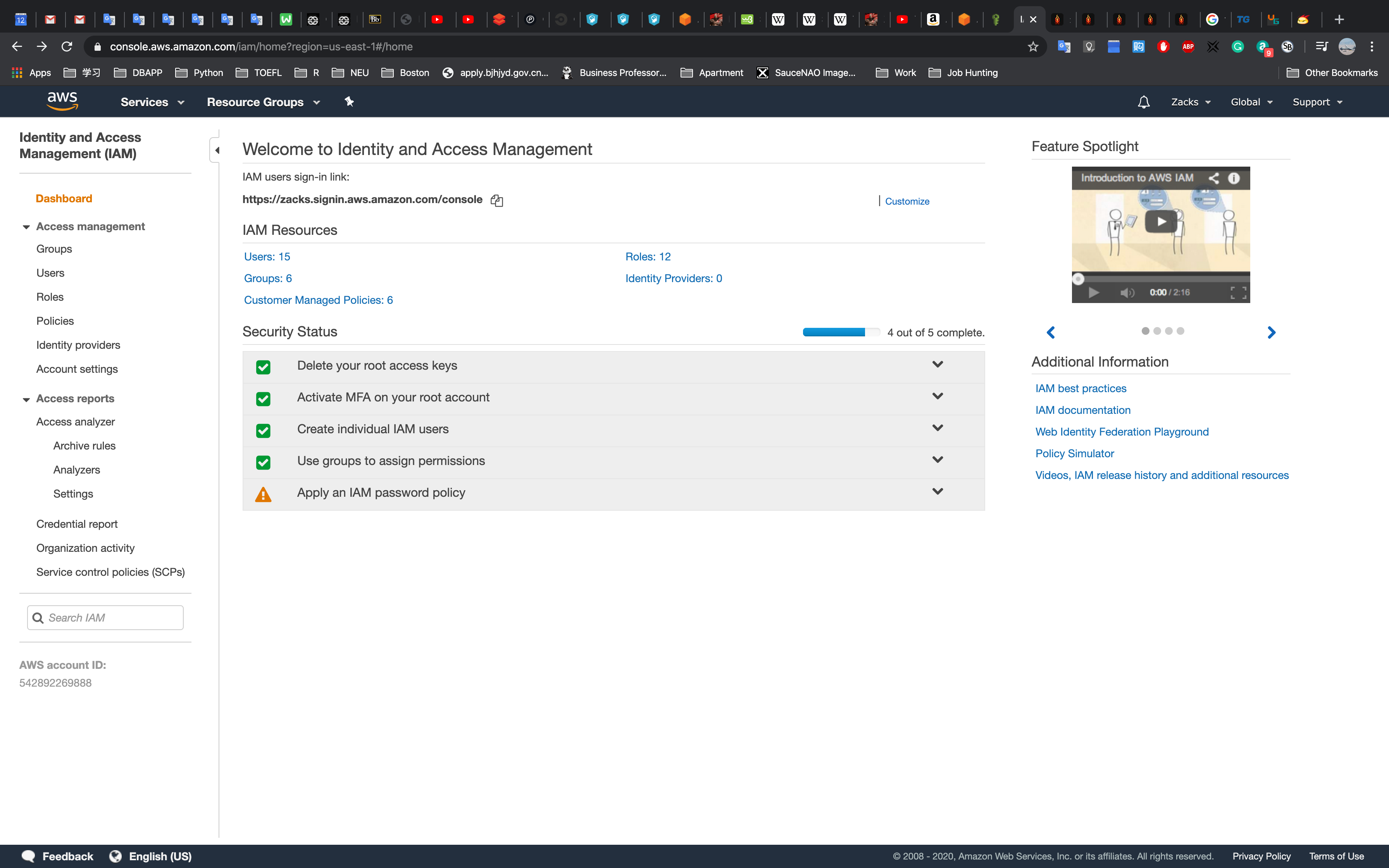

Click Customize to customize your IAM users sign-in link:

Click Activate MFA on your root account and click Manage MFA

Click Activate MFA

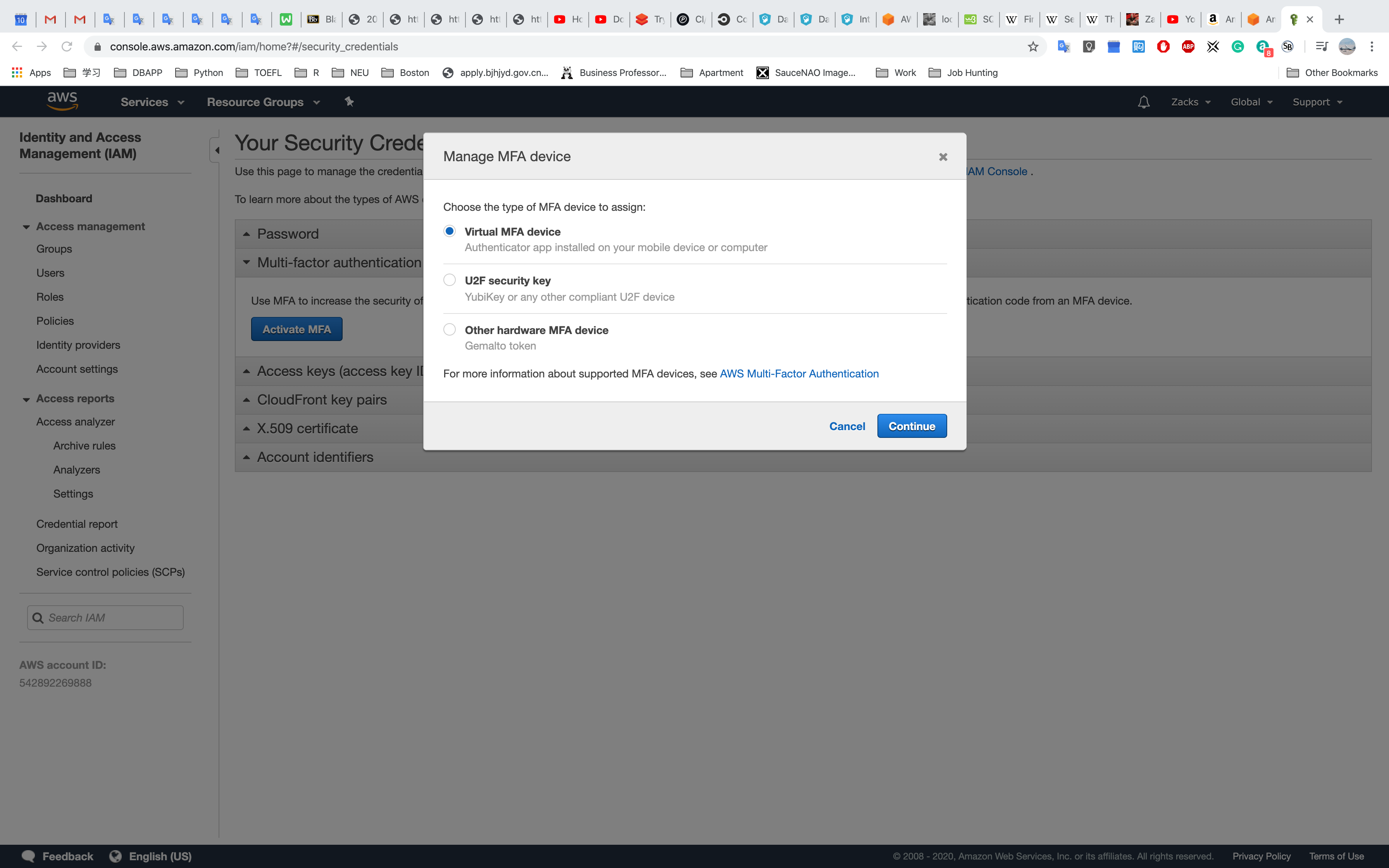

Choose Virtual MFA device and click Continue

For more detail, click Multi-factor Authentication, then download a virtual MFA application. For example, I chose Google Authenticator.

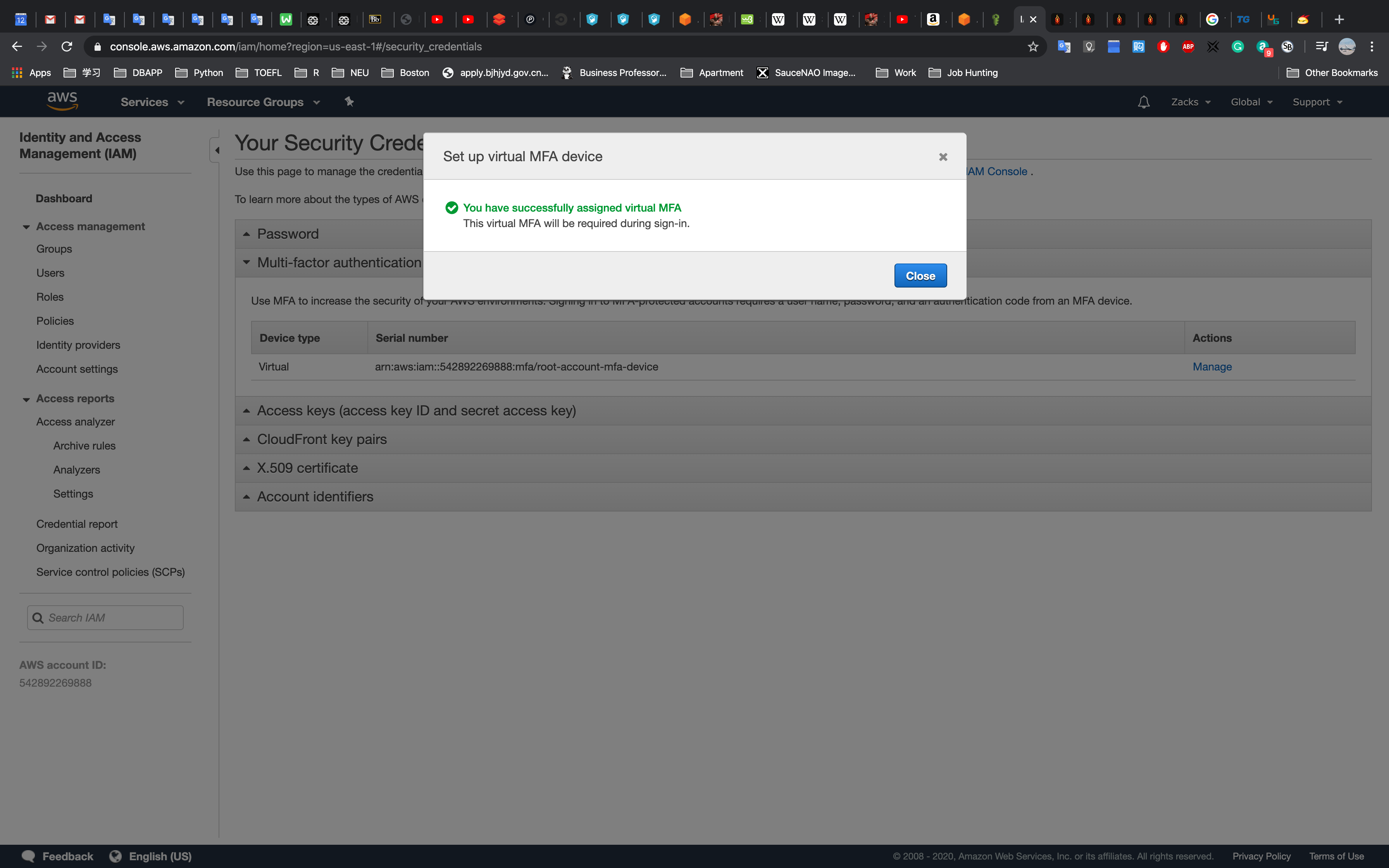

After scanning your QR code, click Assign MFA

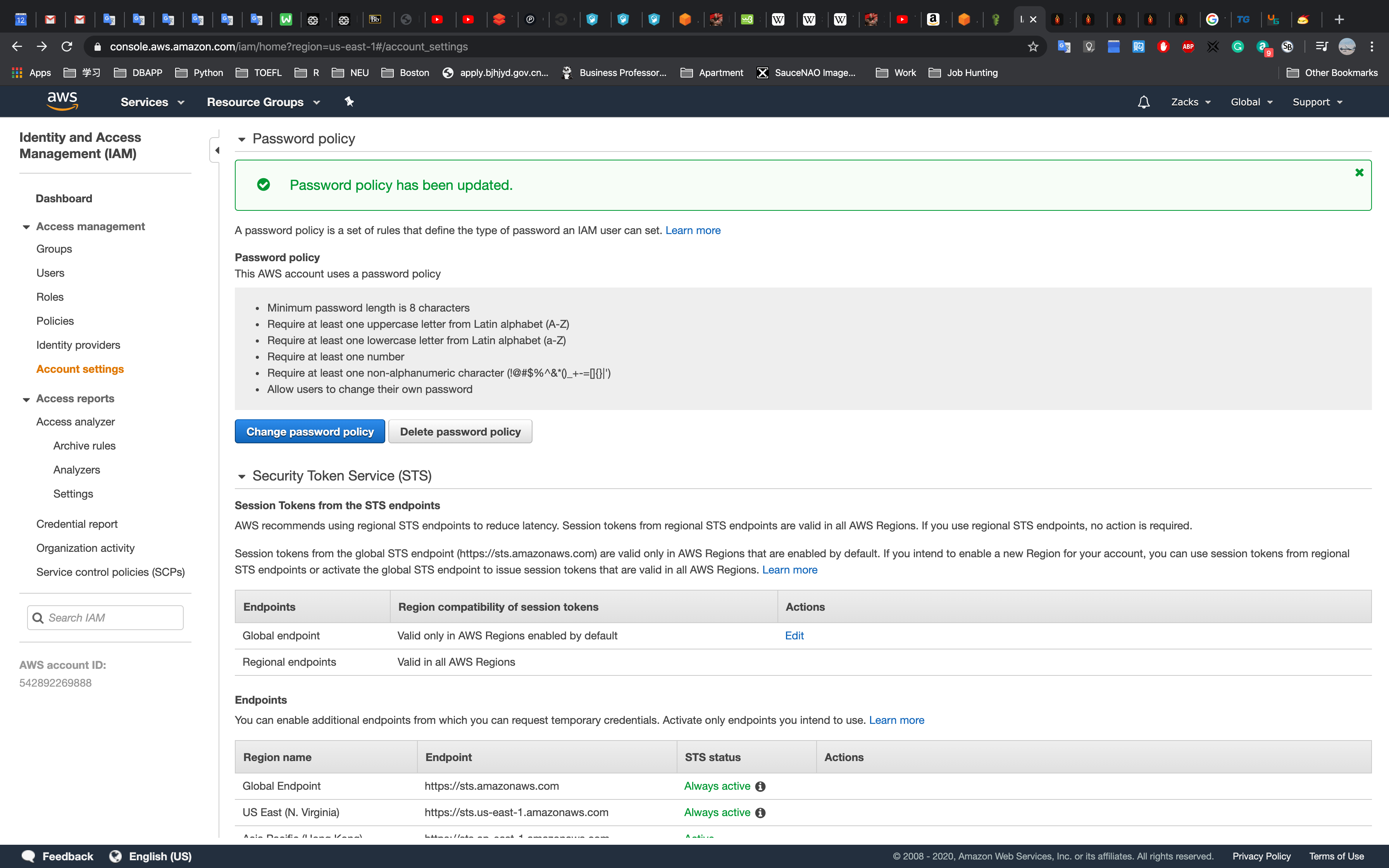

IAM password policy

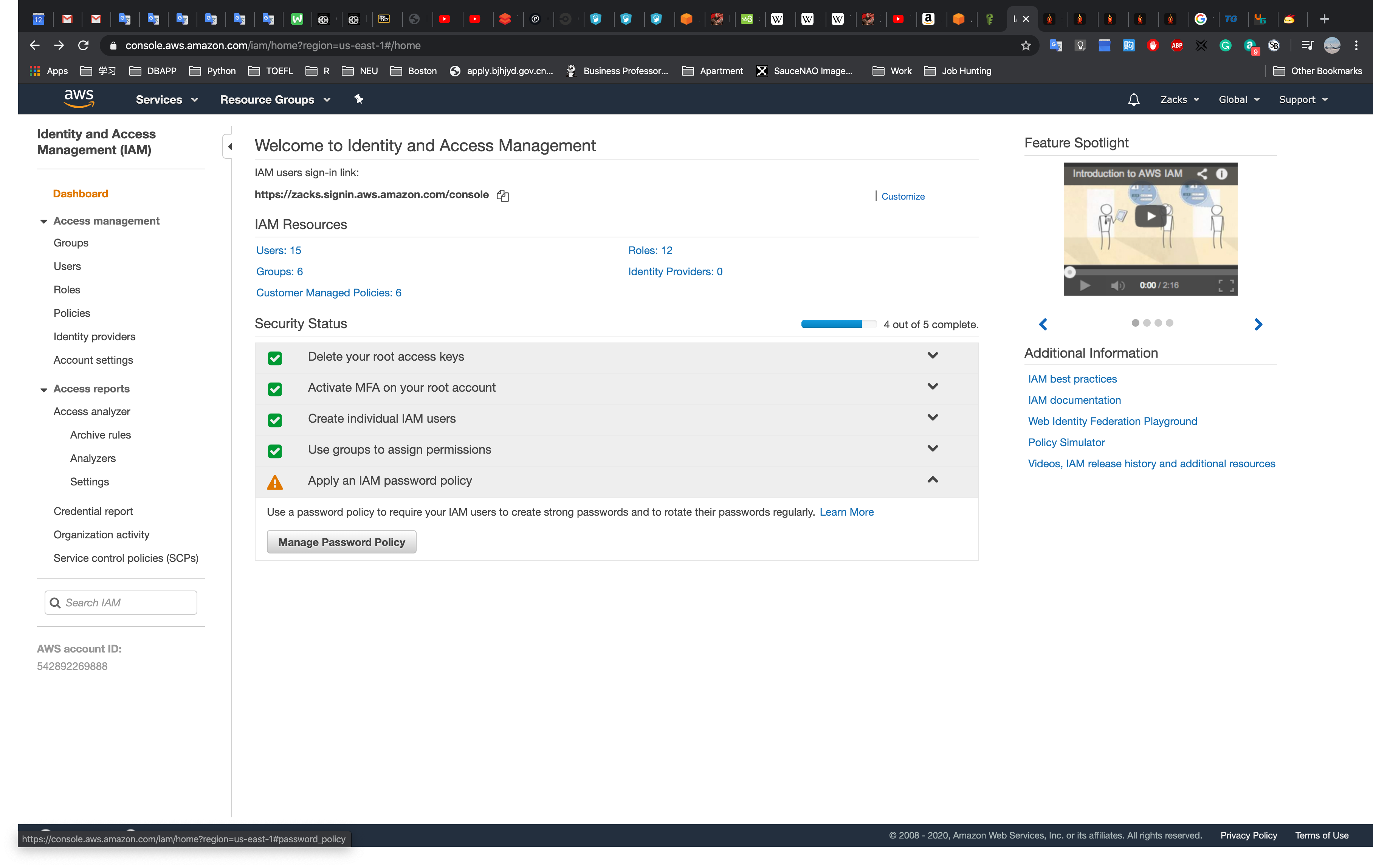

Click Apply an IAM password policy

Click Manage password policy

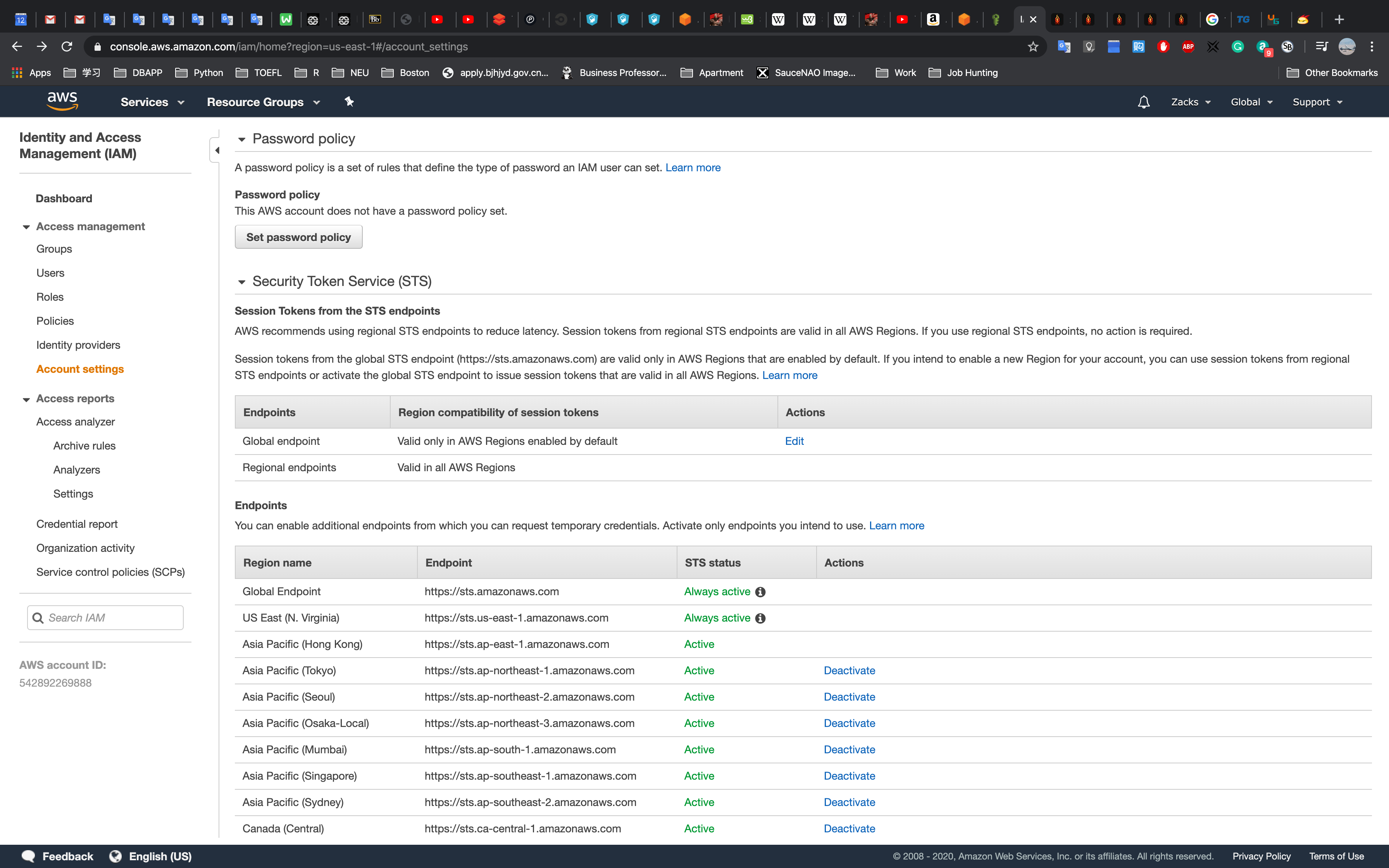

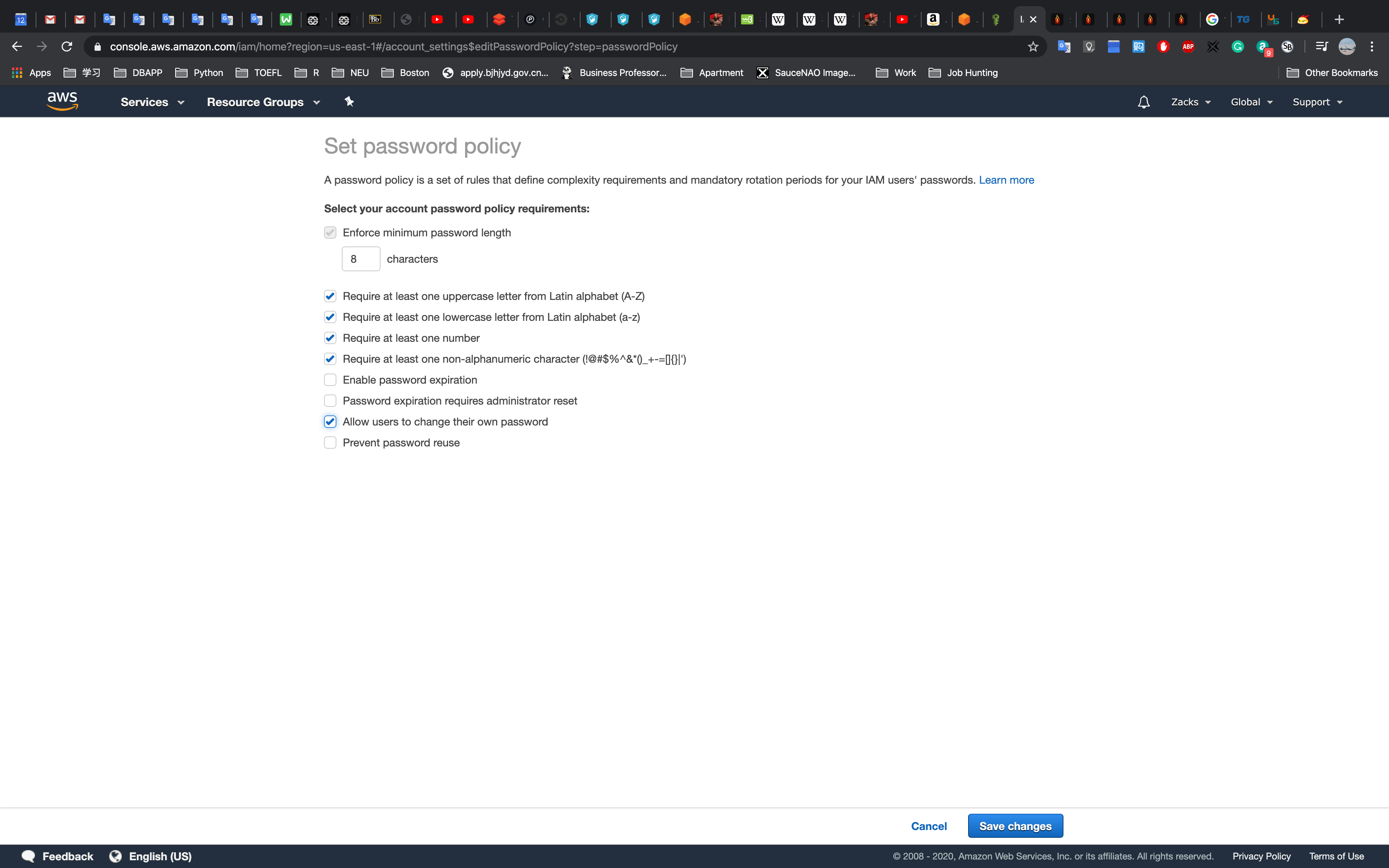

Click Set password policy

Set as you need and Click Save Changes

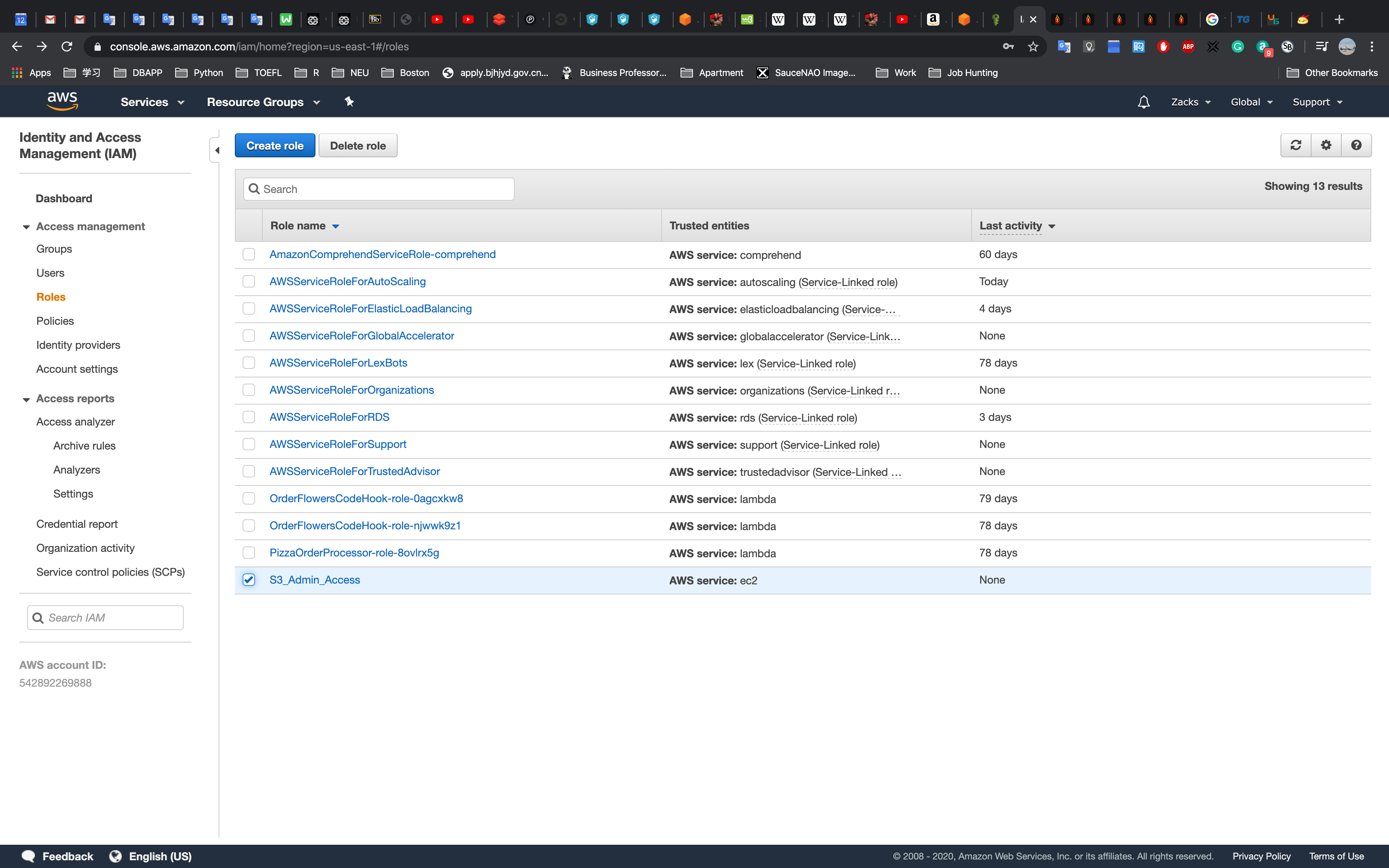

IAM Roles

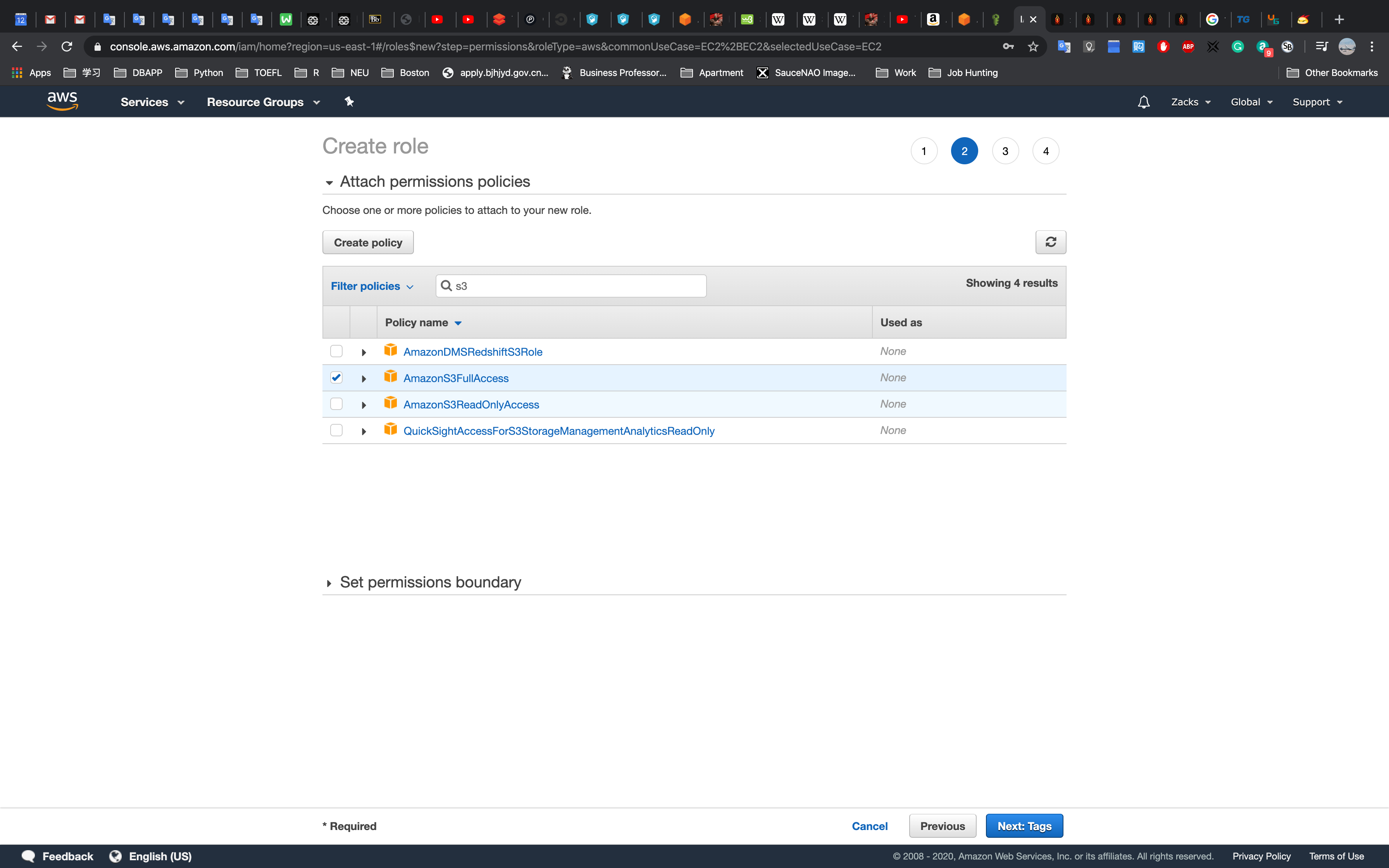

Click Create Role

Click EC2 then Click Next: Permissions

Select AmazonS3FullAccess and Click Next: Tag

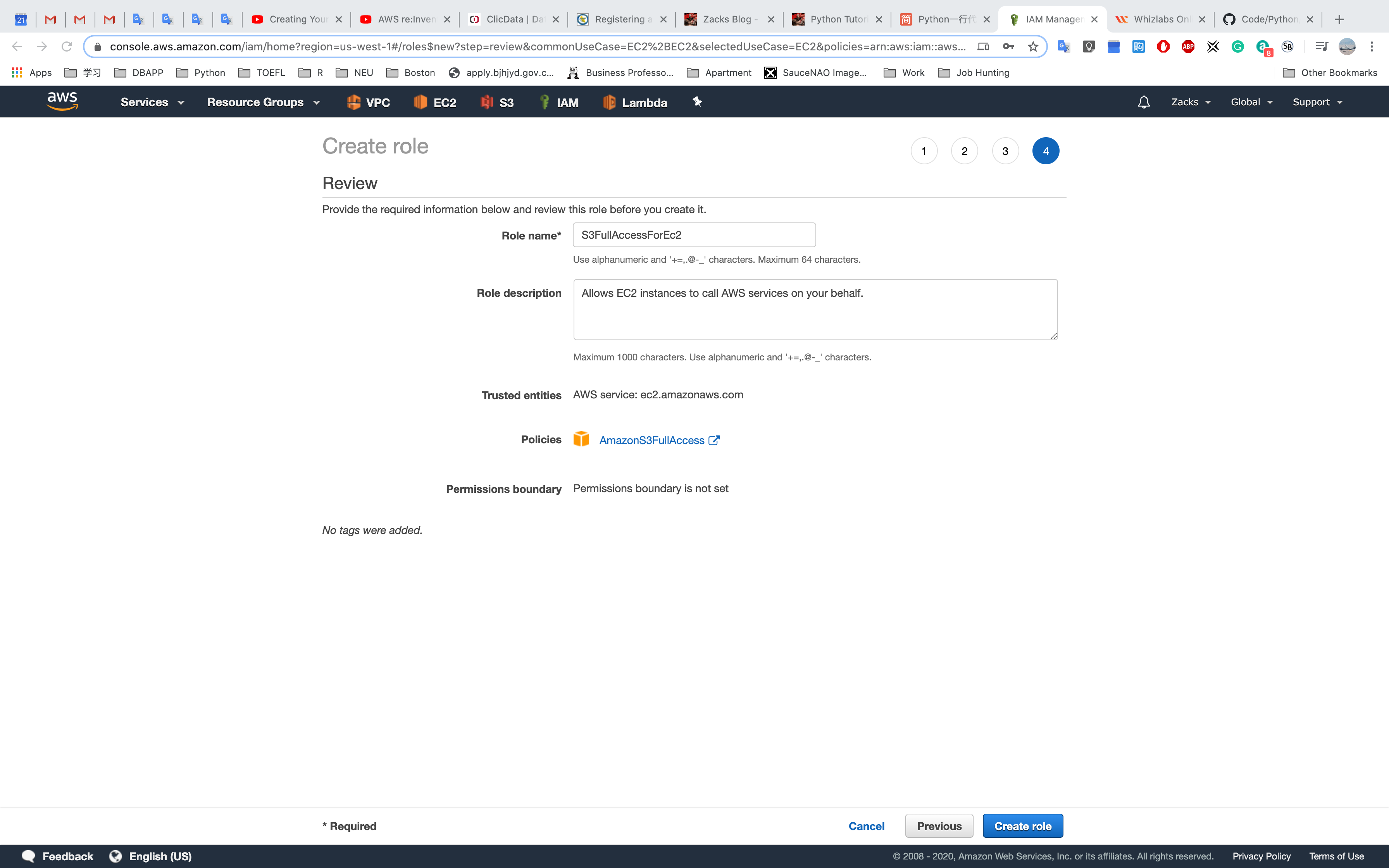

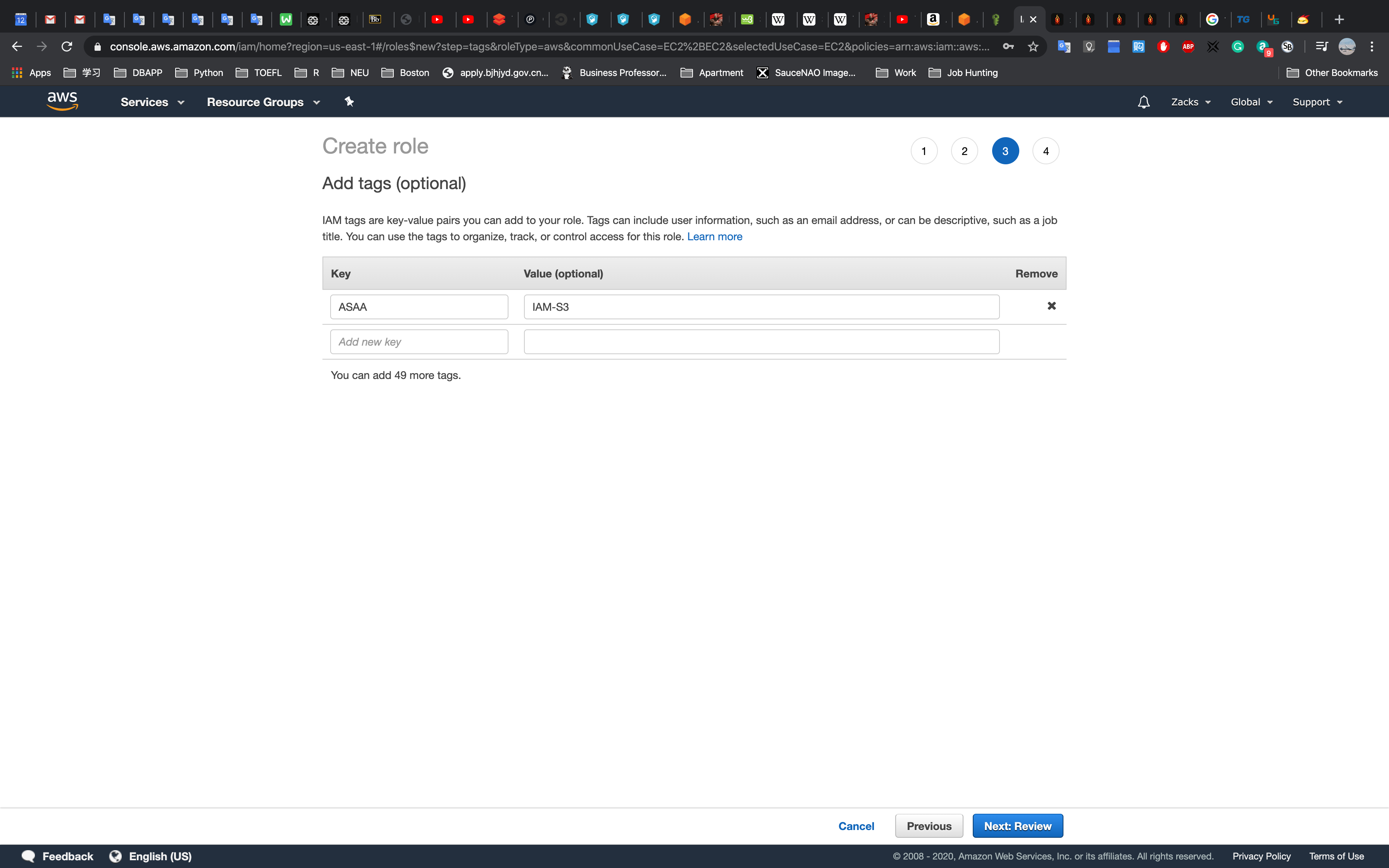

Give it a key and value, then Click Next: Review

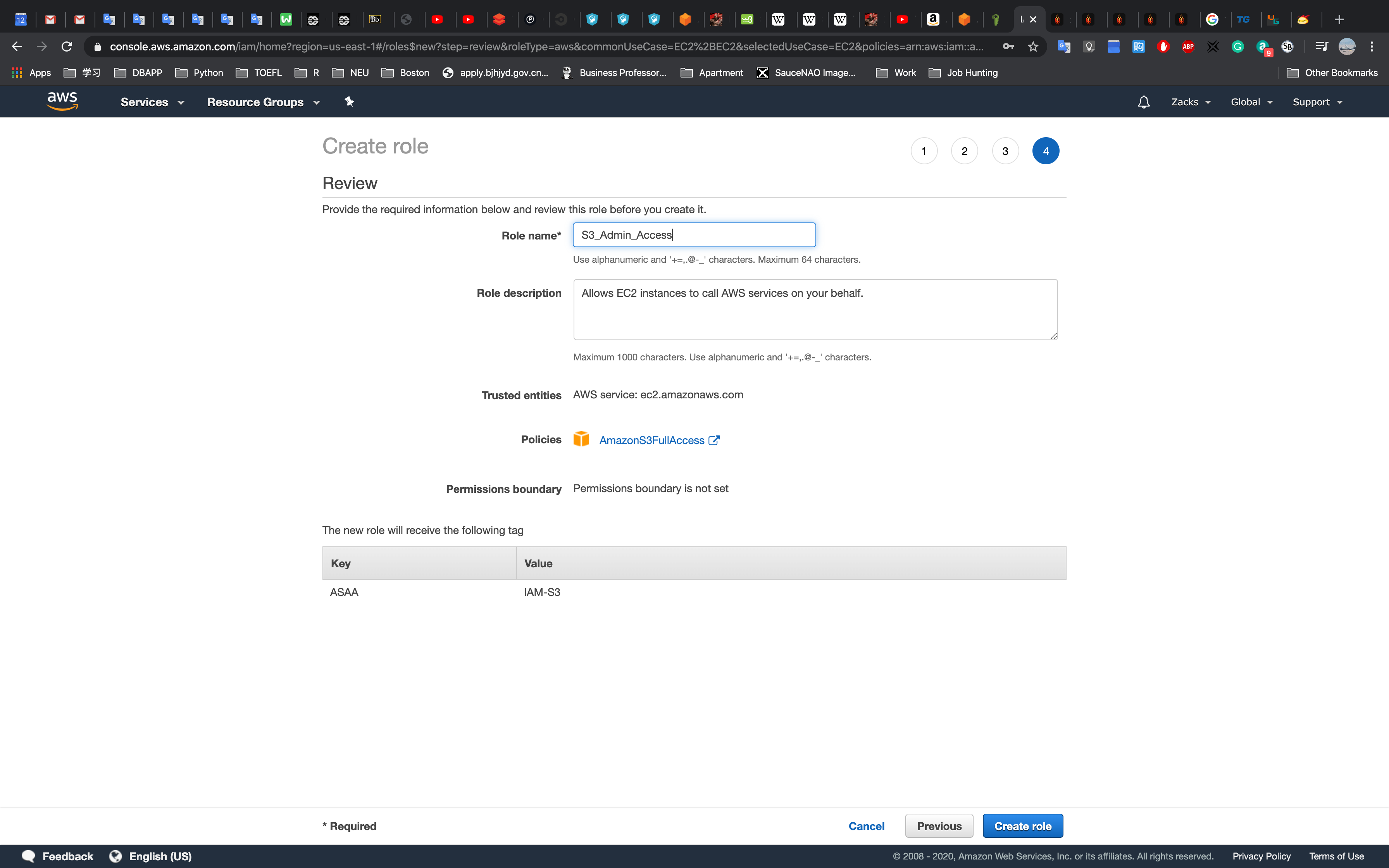

Name it and Click Create Role

Summary

- IAM is universal. It does not apply to regions at this time.

- The “root account” is simply the account created when you first set up your AWS account. It has complete Admin access.

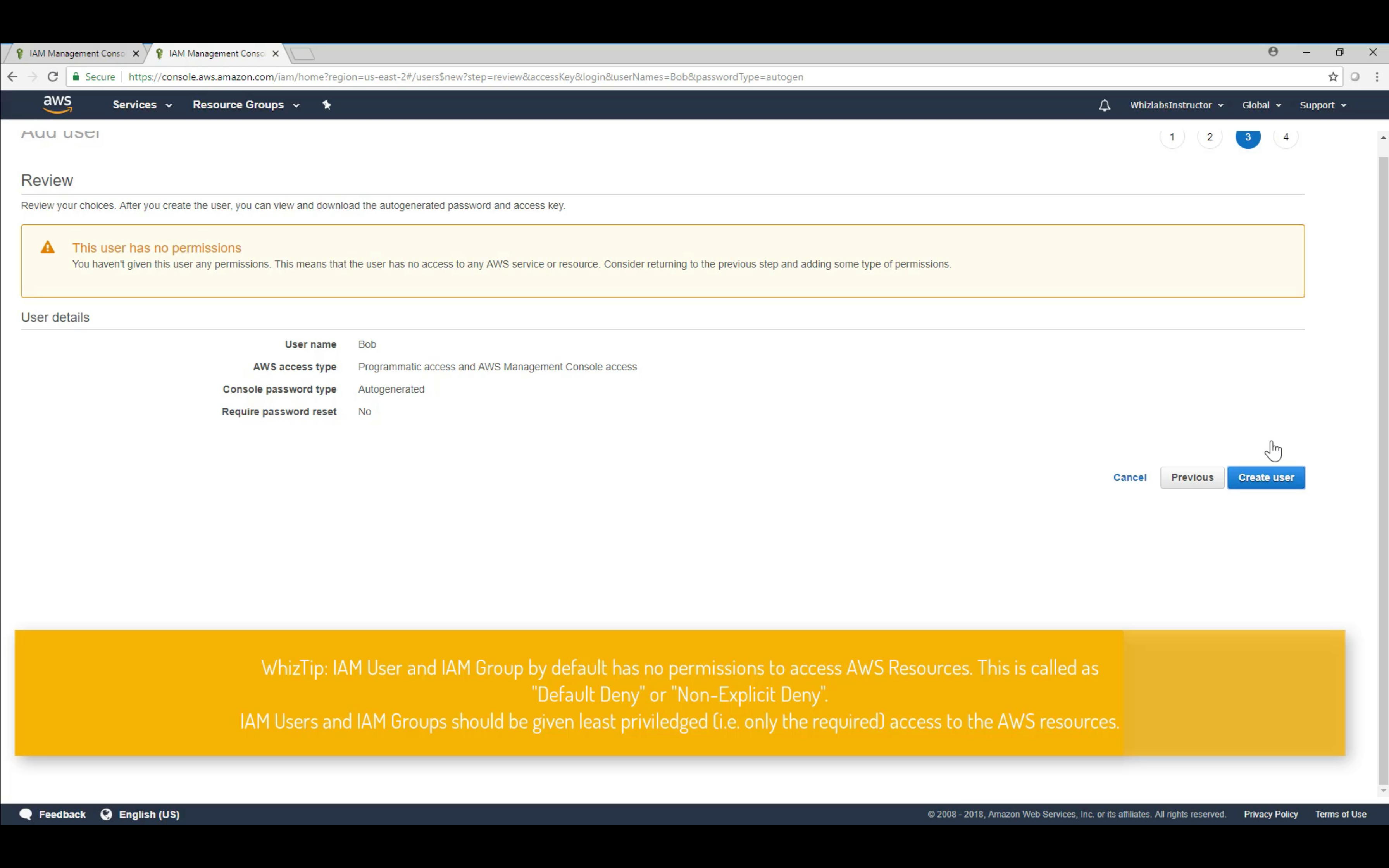

- New Users have NO permissions when first created.

- New Users are assigned Access Key ID & Secret Access Keys when first created.

- These are not the same as a password. You cannot use the Access Key ID & Secret Access Key to log in to the console. You can, however, use them to access AWS via the APIs and command line.

- You only get to view these once. If you lose them, you have to regenerate them. So, save them in a secure location.

- Always set up multi-factor authentication on your root account.

- You can create and customize your own password rotation policies.

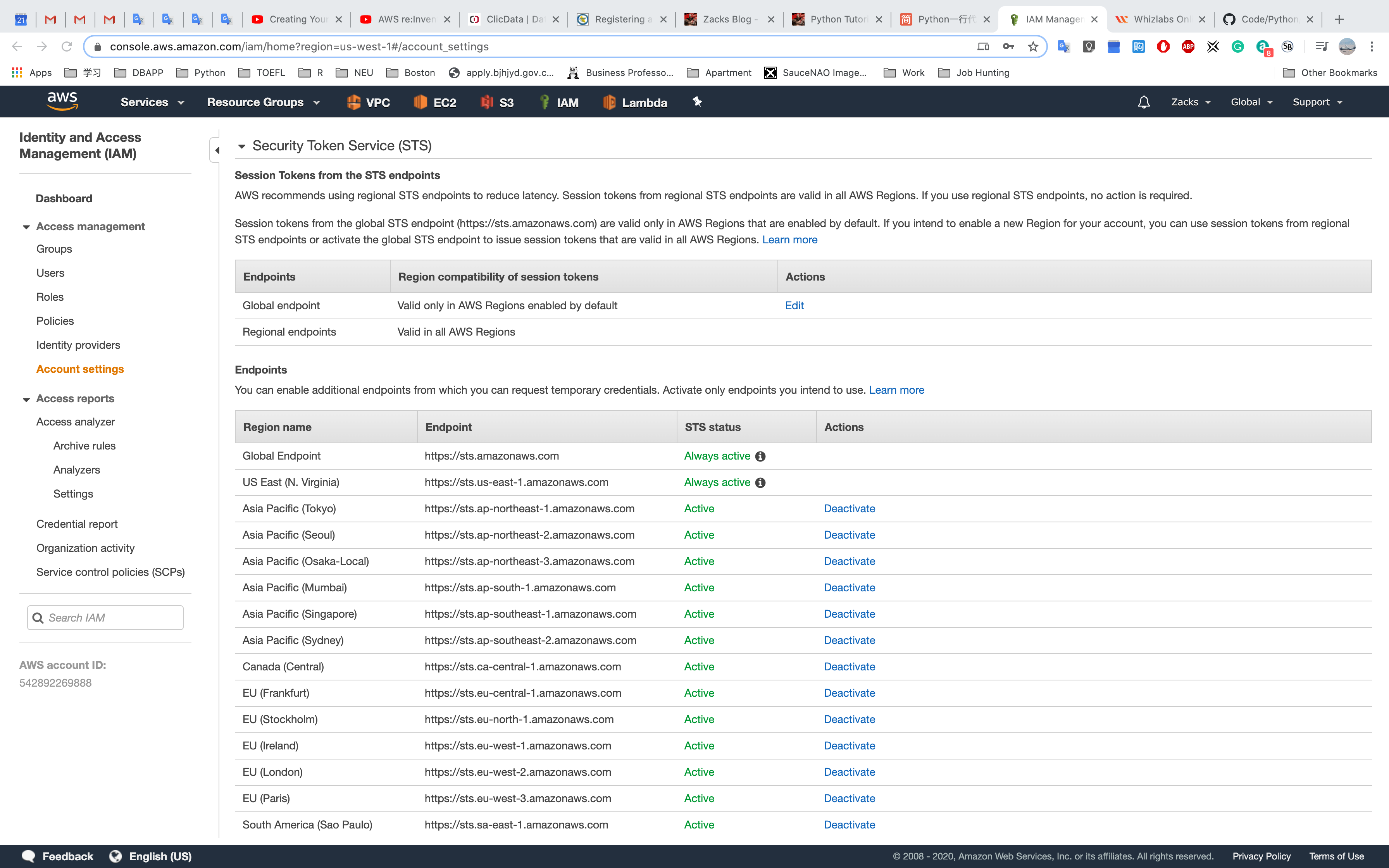

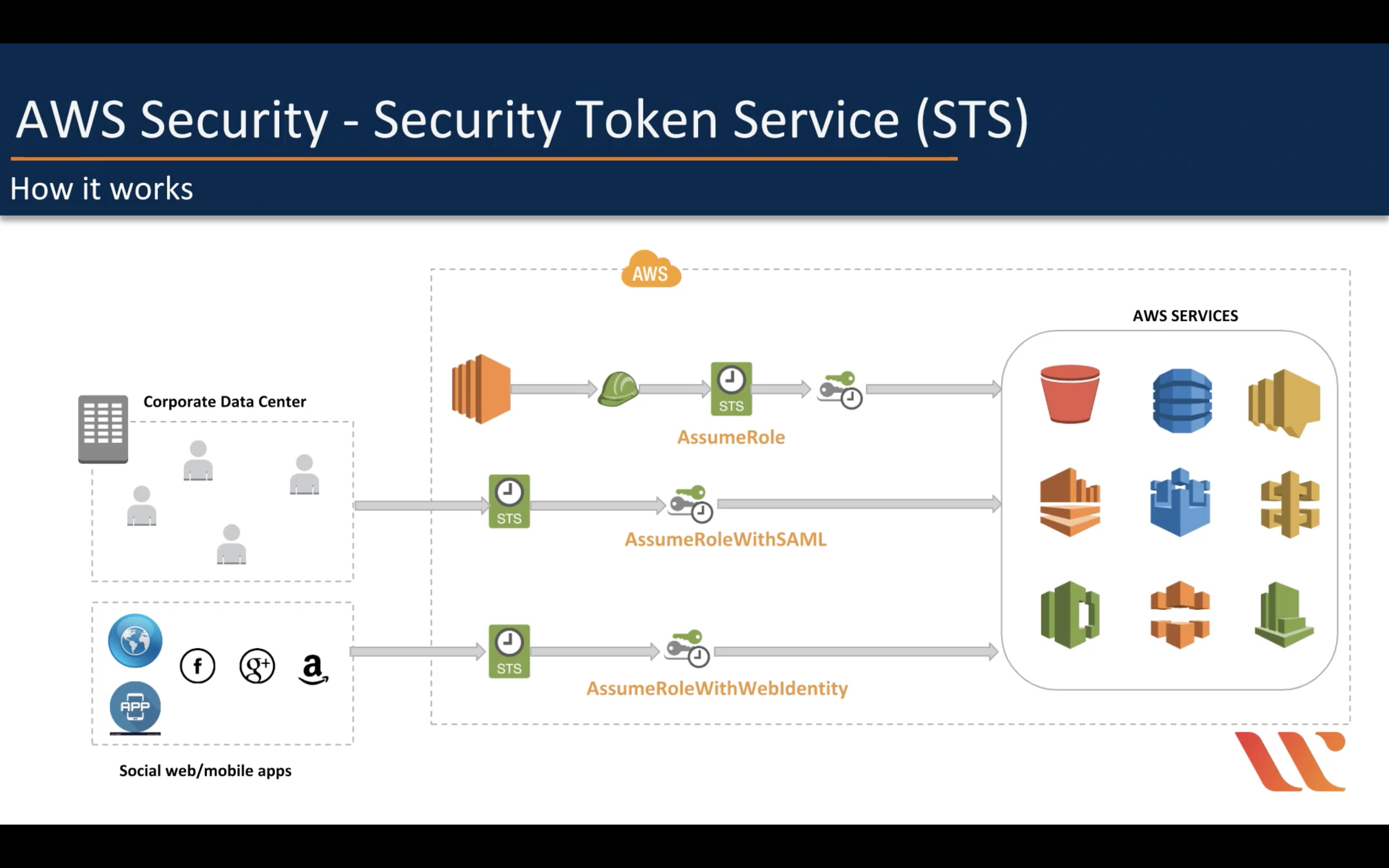

Amazon STS (Security Token Service)

Though the session policy parameters are optional, if you do not pass a policy, then the resulting federated user session has no permissions. When you pass session policies, the session permissions are the intersection of the IAM user policies and the session policies that you pass. This gives you a way to further restrict the permissions for a federated user. You cannot use session policies to grant more permissions than those that are defined in the permissions policy of the IAM user.
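The intersection rule can be sketched with plain sets. This is a deliberate simplification: it treats permissions as a flat set of action names, while real policy evaluation also considers resources, conditions, and wildcards. The function name and the sample actions are made up for the example.

```python
def effective_session_permissions(user_actions, session_actions=None):
    """Model session permissions as the intersection of two sets of allowed actions.

    Simplification: real policies also match on resources, conditions and wildcards.
    """
    if session_actions is None:
        # No session policy passed: the federated session gets no permissions.
        return set()
    return set(user_actions) & set(session_actions)


user = {"s3:GetObject", "s3:PutObject", "sqs:SendMessage"}
session = {"s3:GetObject", "ec2:RunInstances"}

with_policy = effective_session_permissions(user, session)
without_policy = effective_session_permissions(user)
print(with_policy)
print(without_policy)
```

`ec2:RunInstances` is dropped even though the session policy lists it, because the IAM user does not have it; a session policy can only narrow permissions.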

IAM Role is not a good solution for temporarily using AWS service

AssumeRole

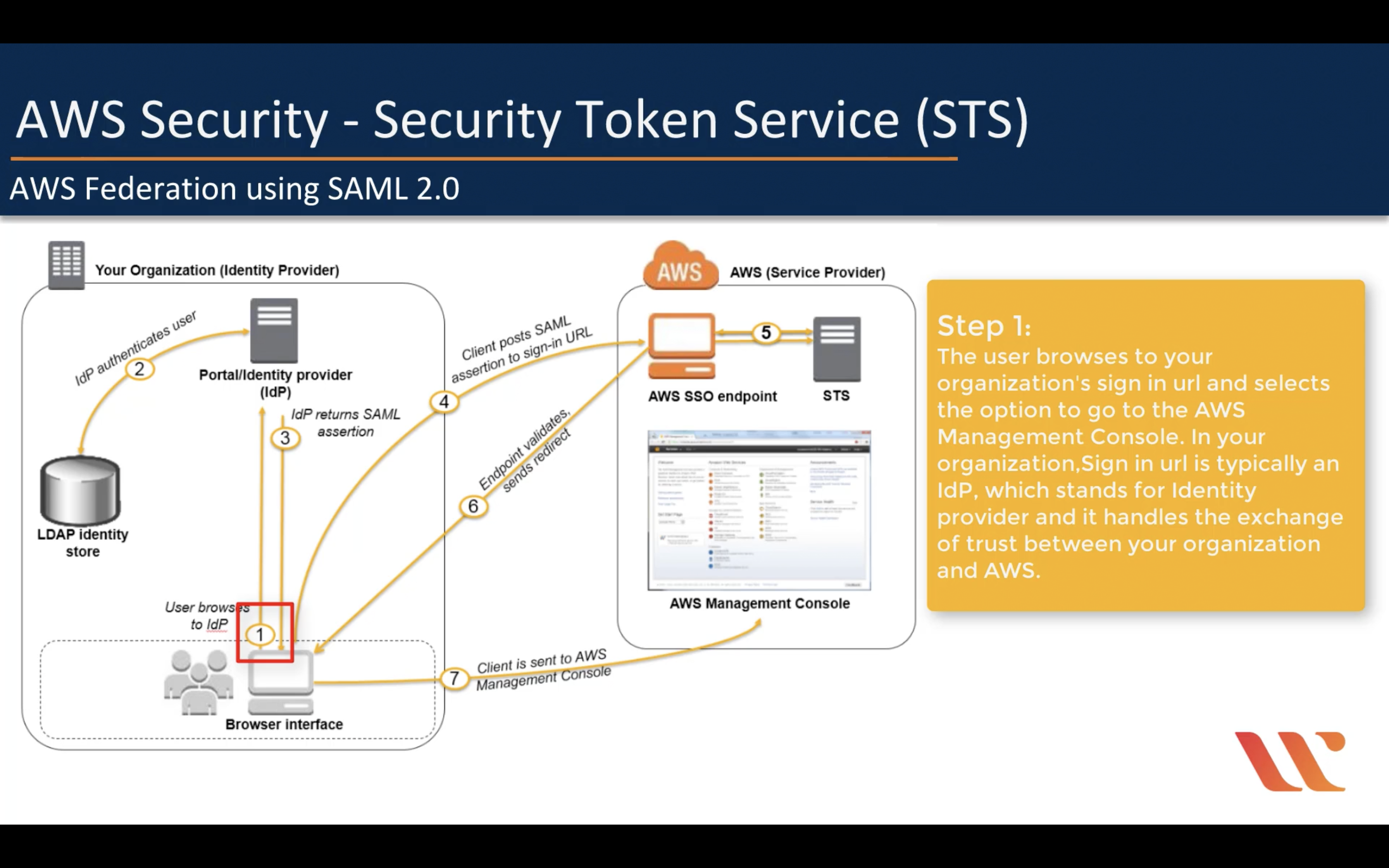

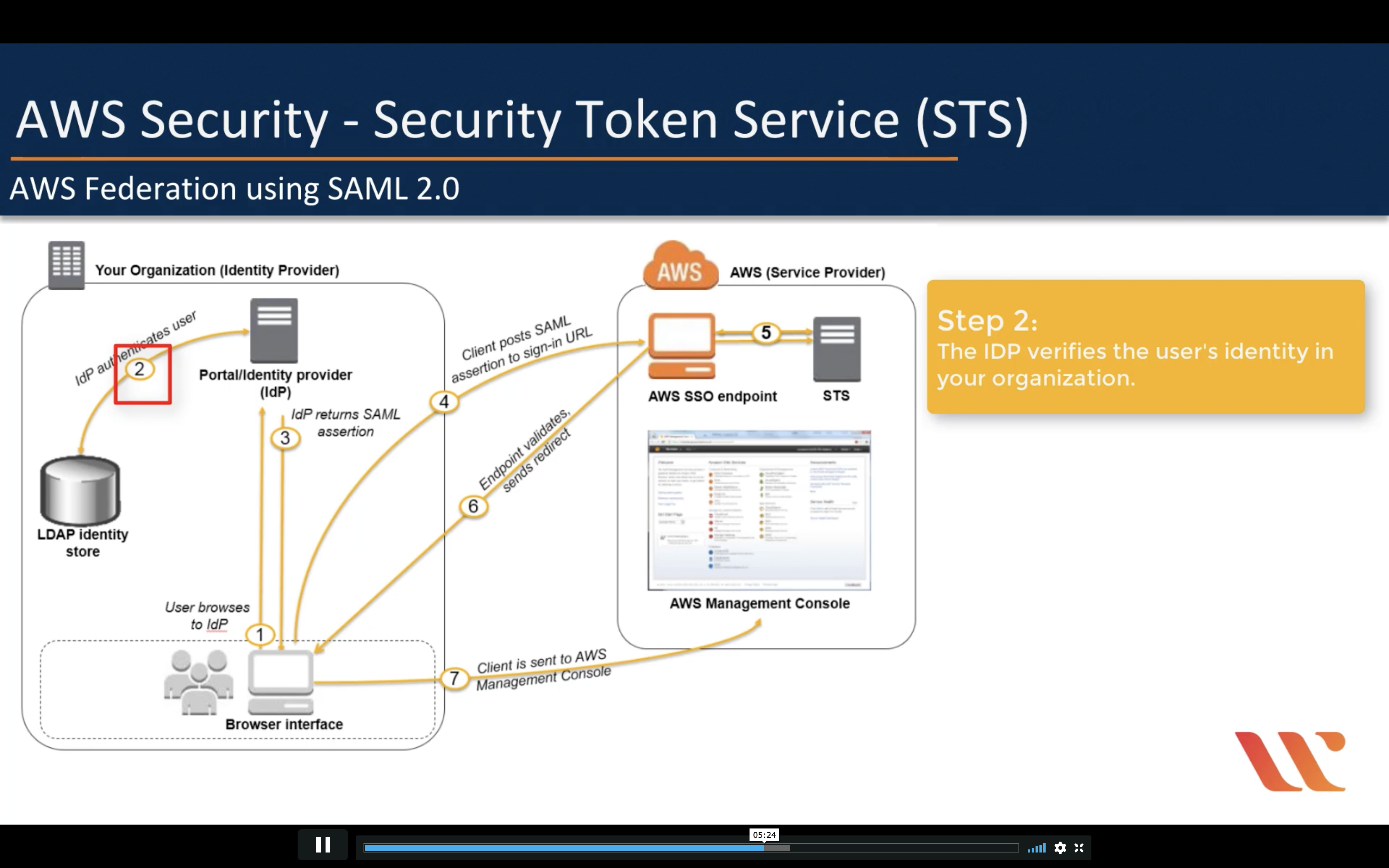

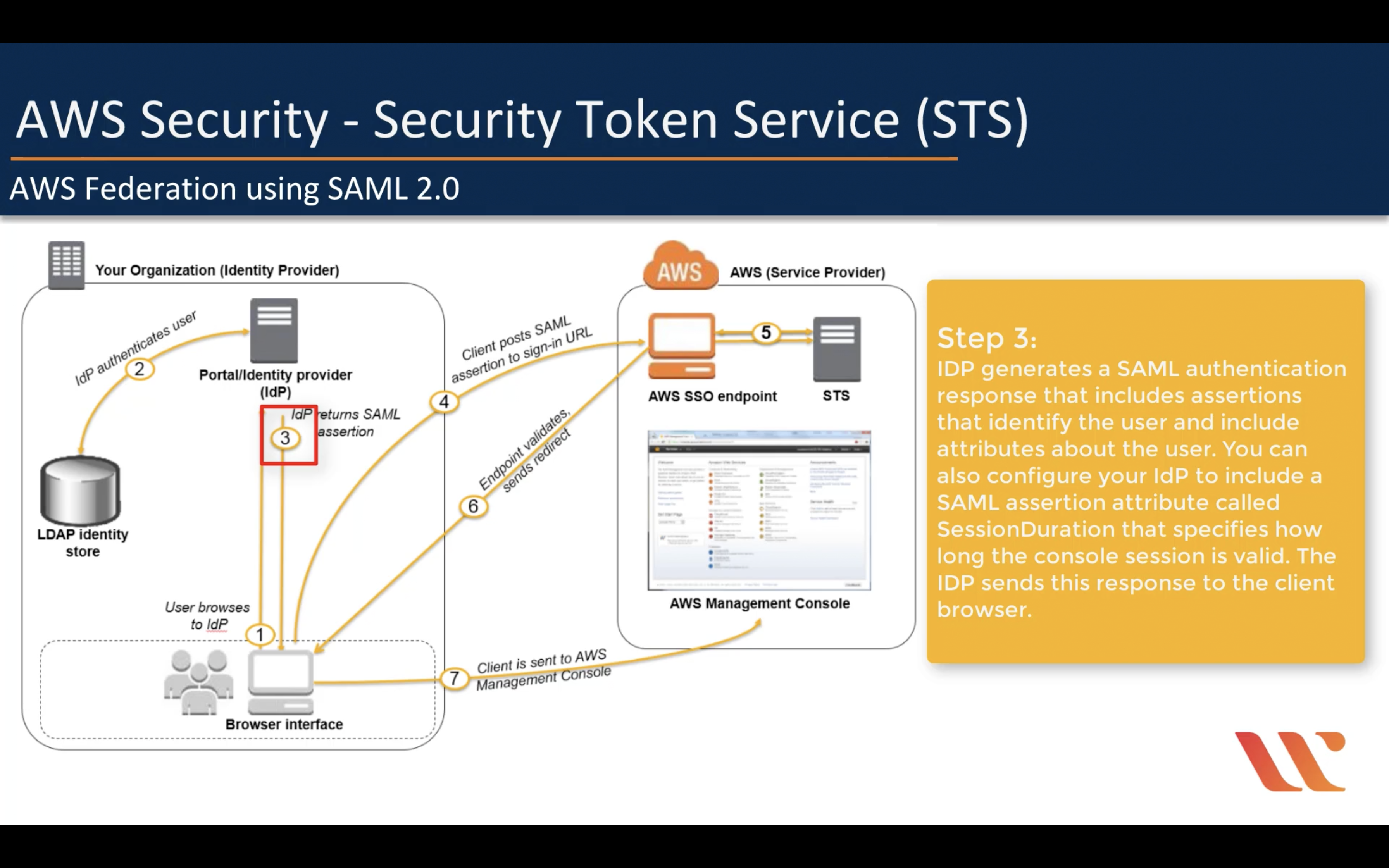

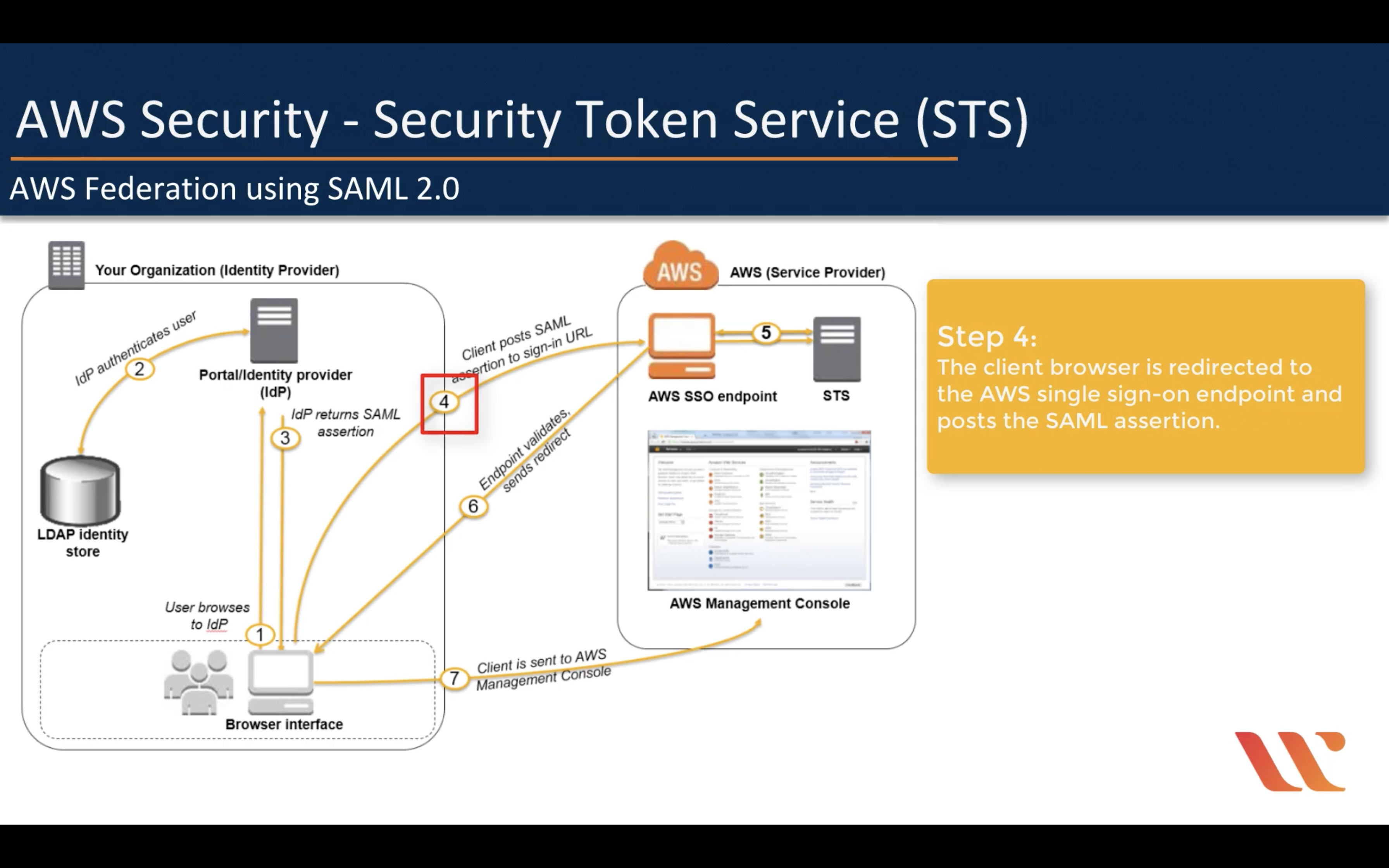

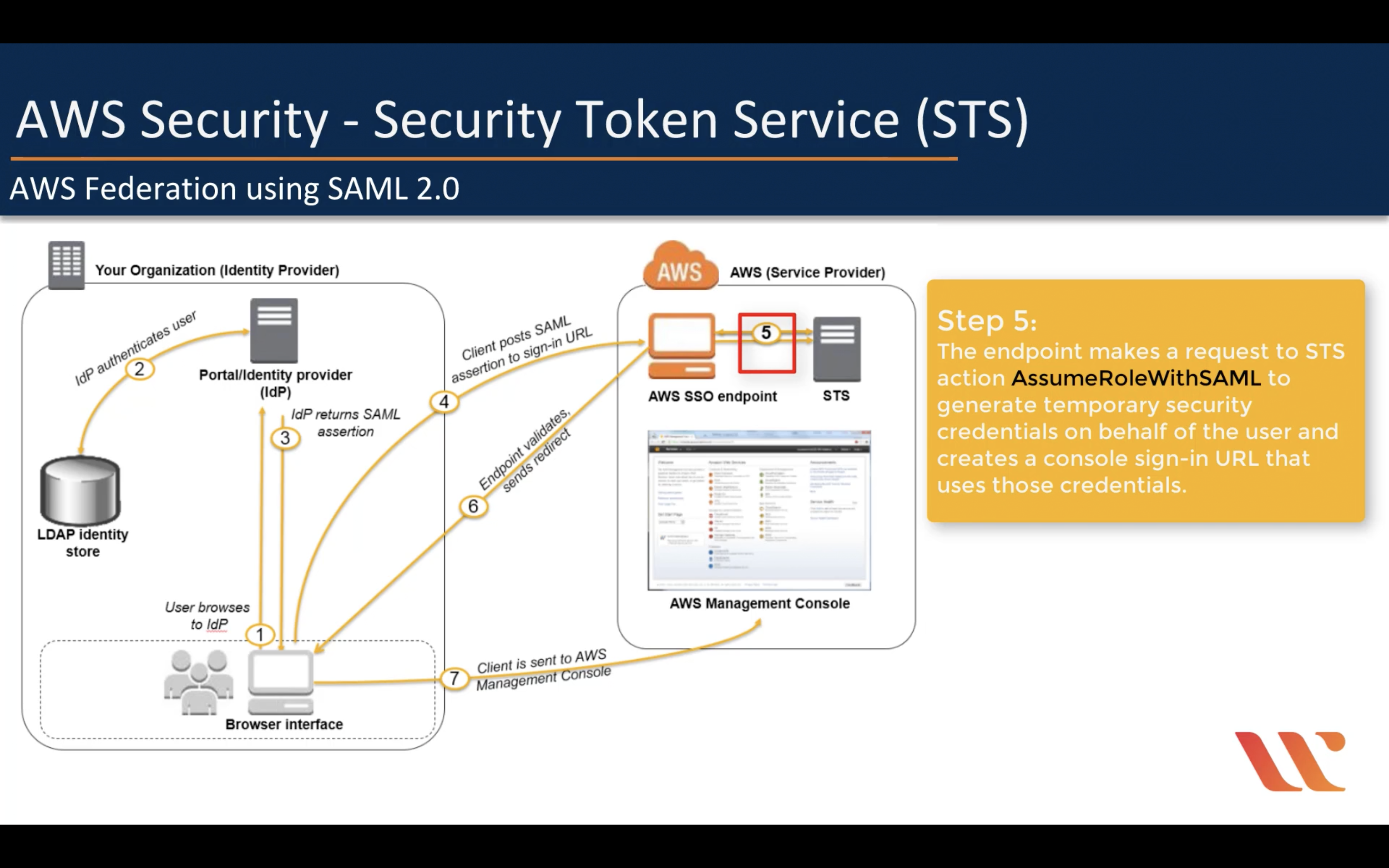

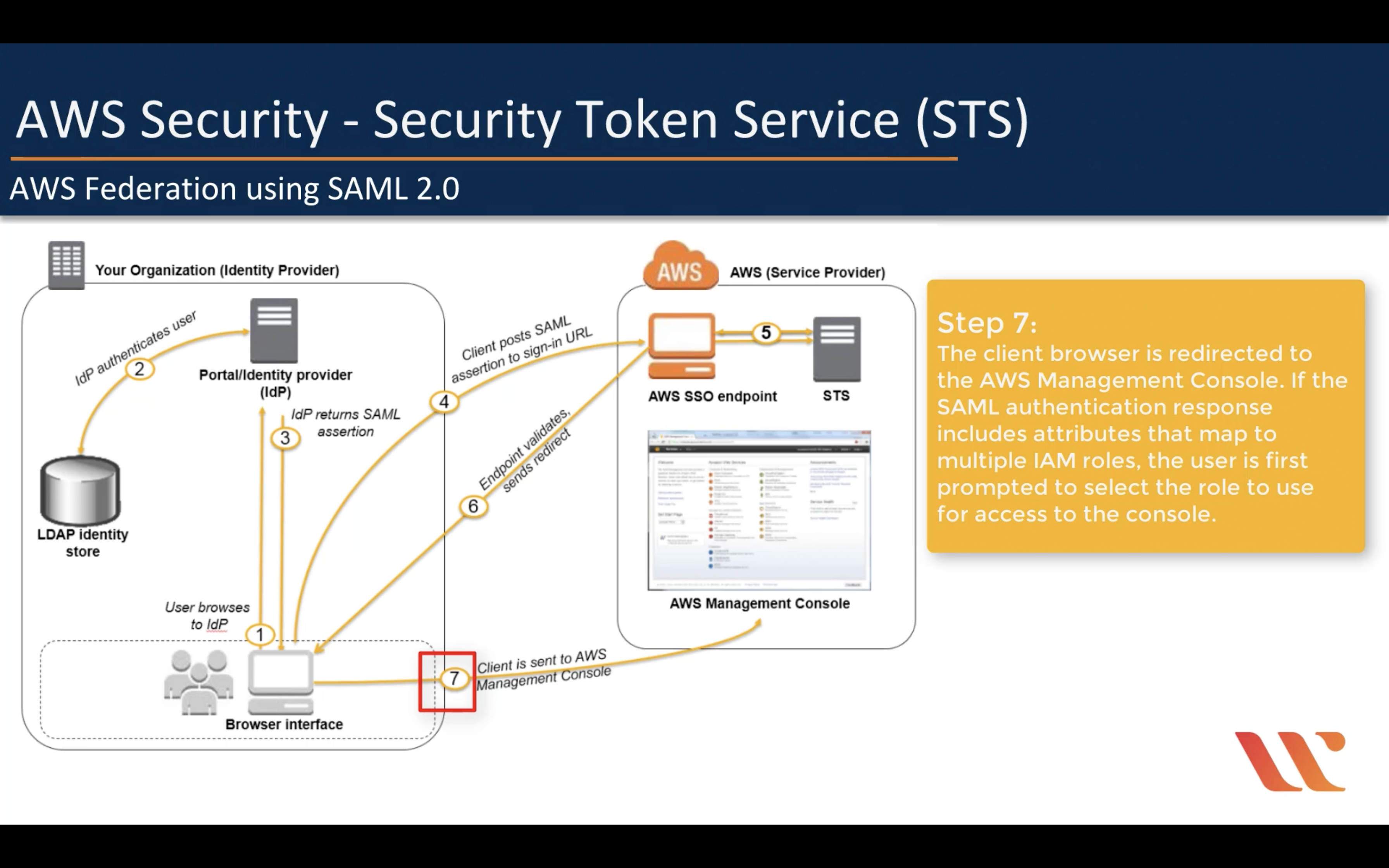

AWS Federation using SAML 2.0 (For single sign-on)

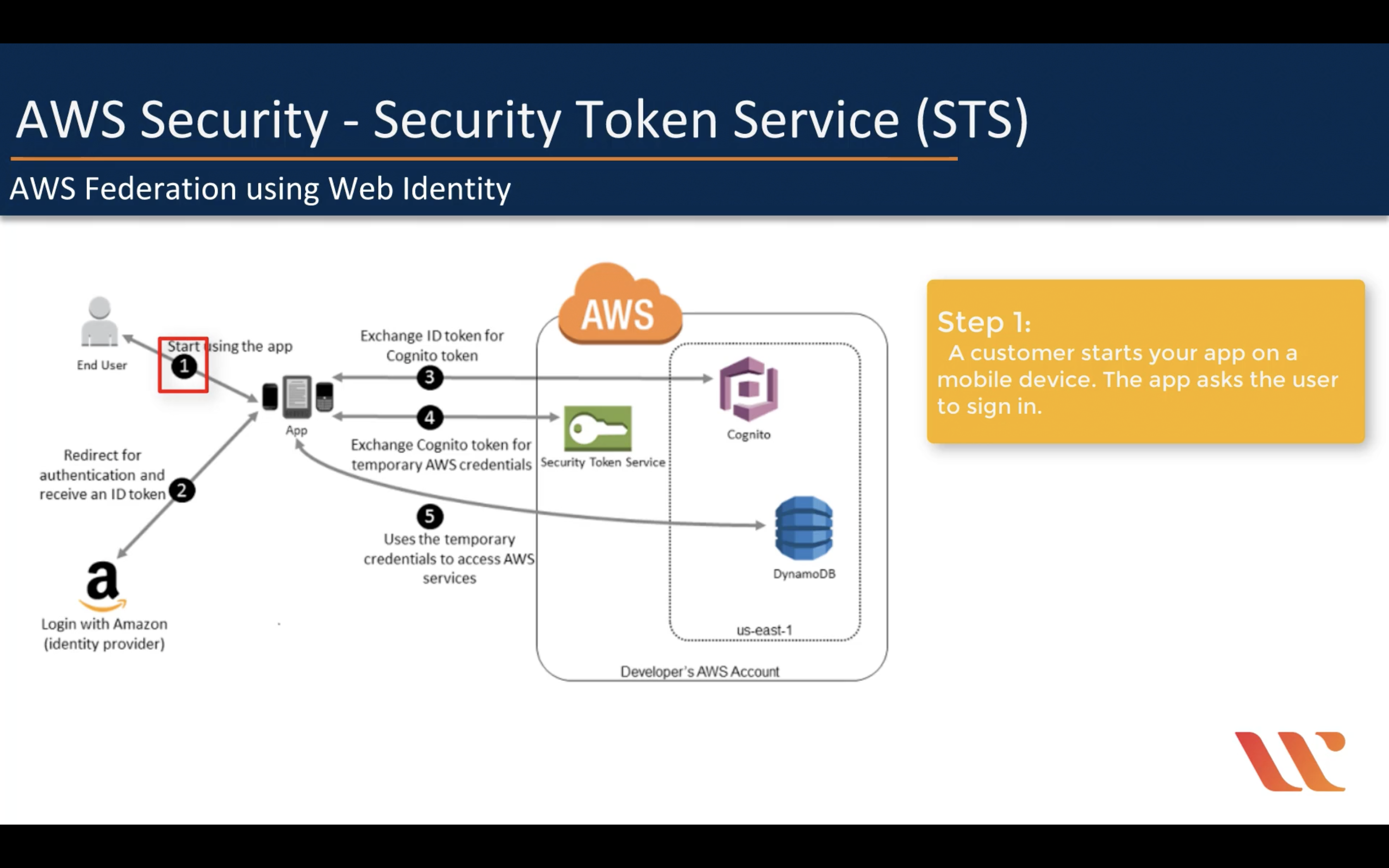

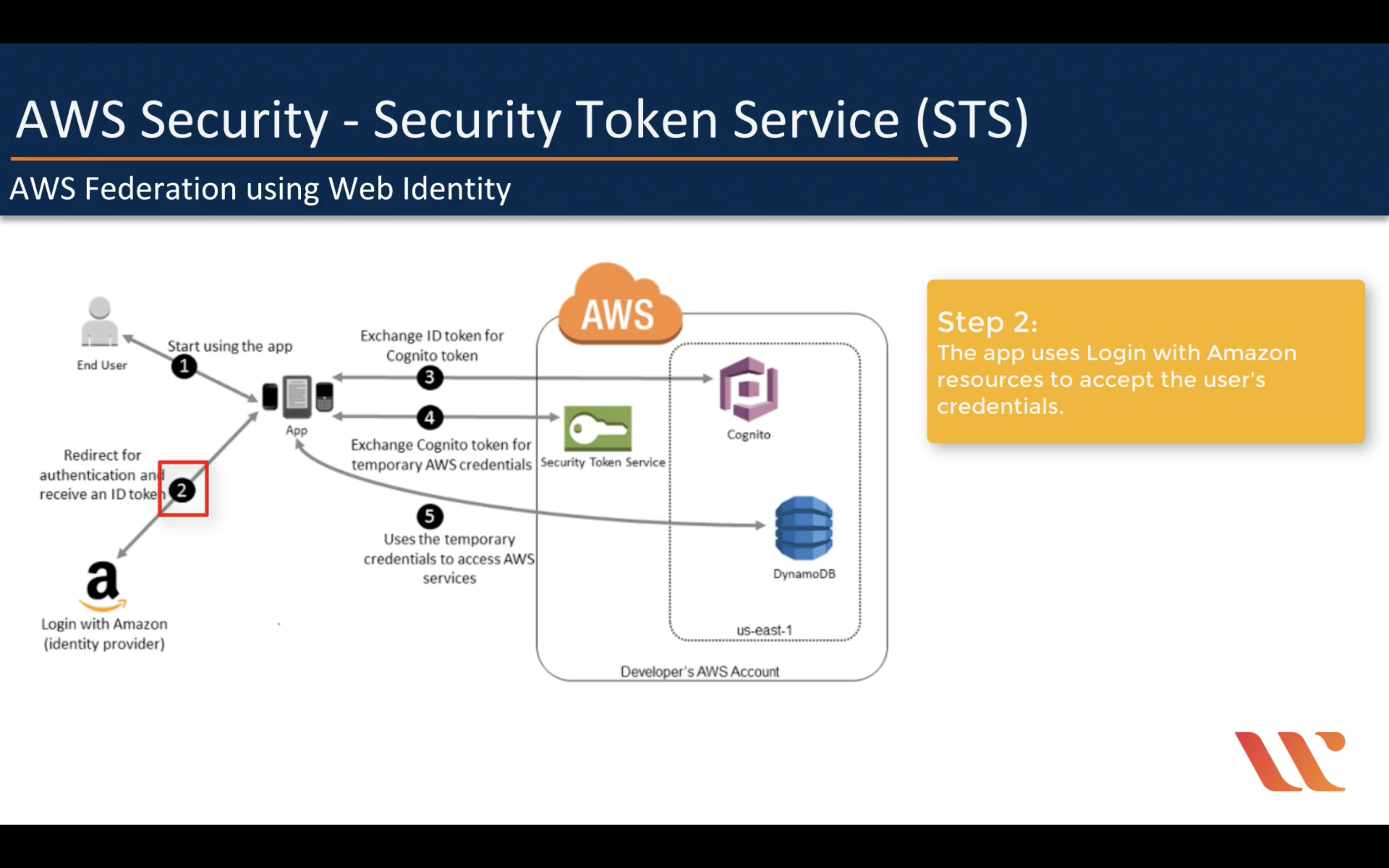

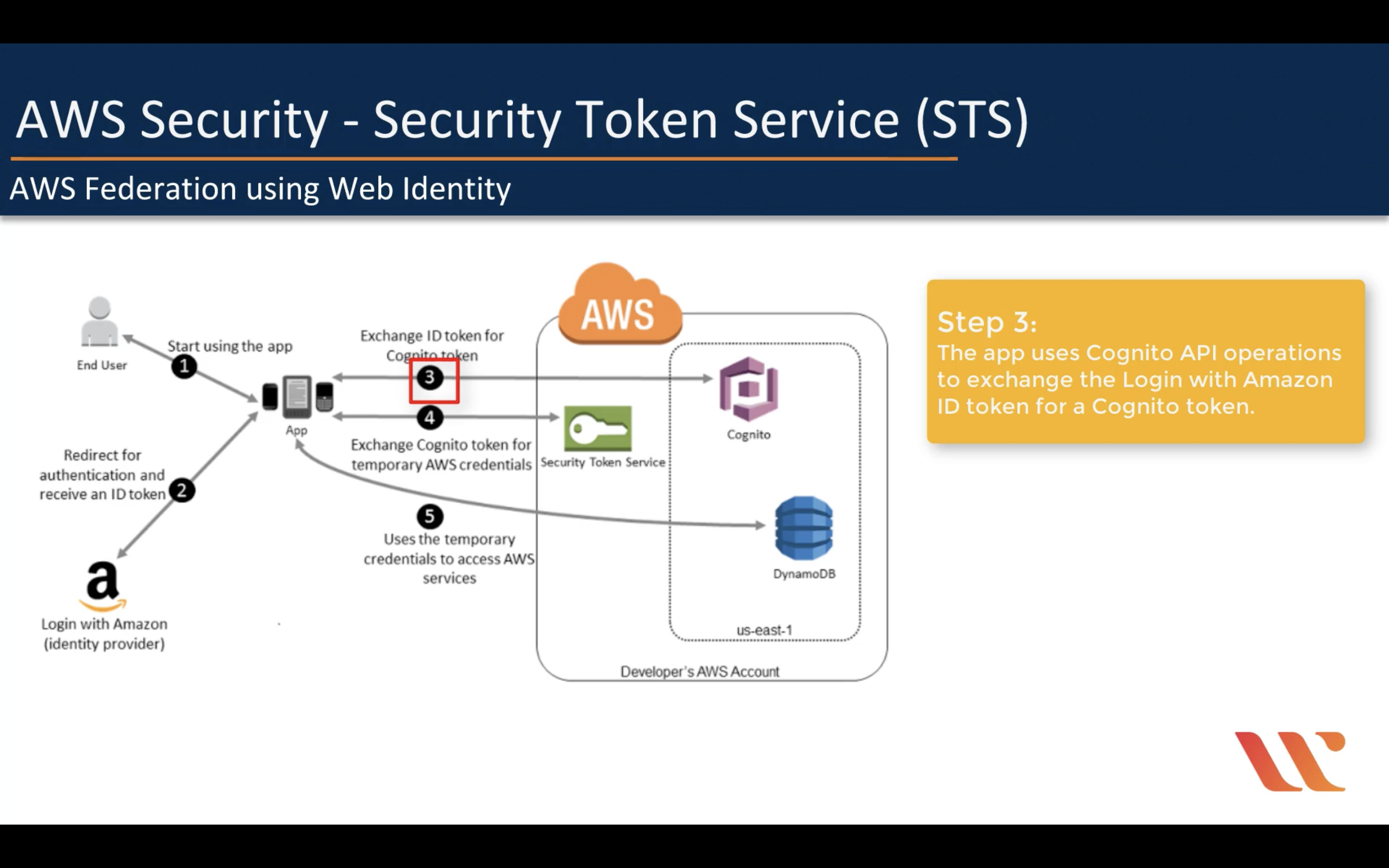

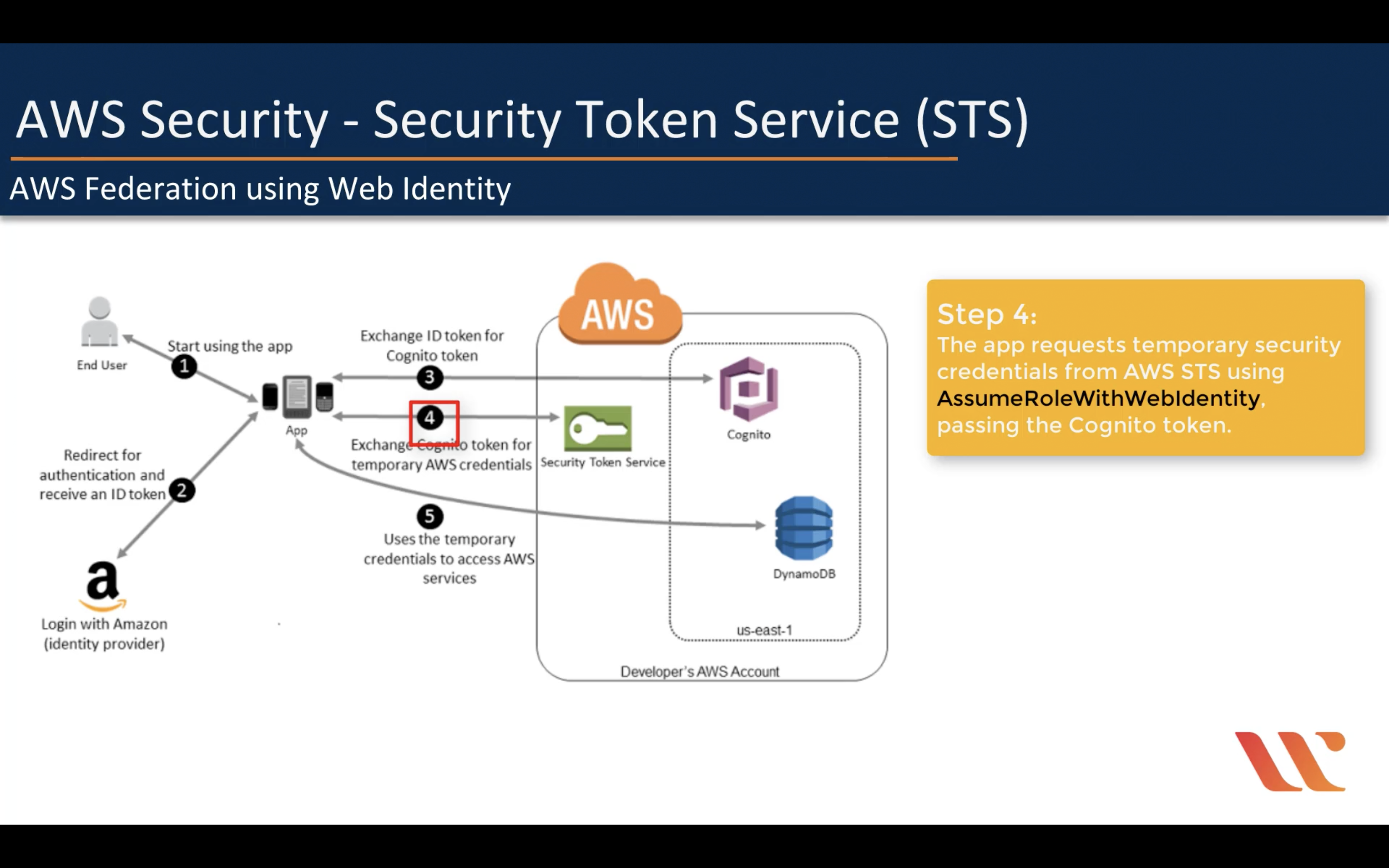

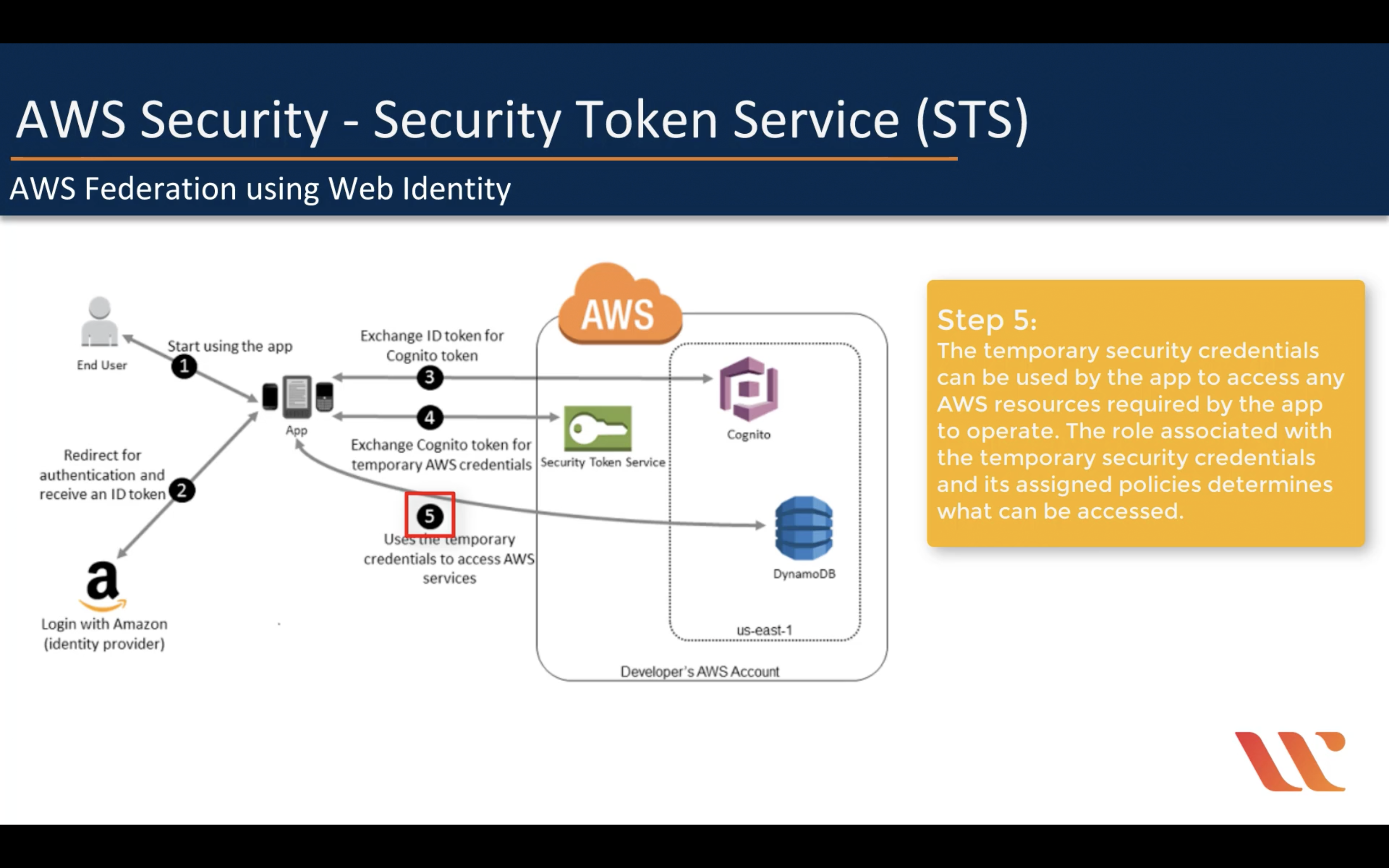

AWS Federation using Web Identity

IAM Summary

Amazon S3 (Object Level Storage)

Introduction

Amazon Simple Storage Service (Amazon S3) provides developers and IT teams with secure, durable, highly-scalable object storage. Amazon S3 is easy to use, with a simple web services interface to store and retrieve any amount of data from anywhere on the web.

- S3 is a safe place to store your files

- It is Object-based storage.

- The data is spread across multiple devices and facilities.

The basics of S3 are as follows:

- S3 is Object-based – i.e. allows you to upload files.

- Files can be from 0 Bytes to 5TB.

- There is unlimited storage.

- Files are stored in Buckets.

Amazon Web Services Flashcards

Analytics

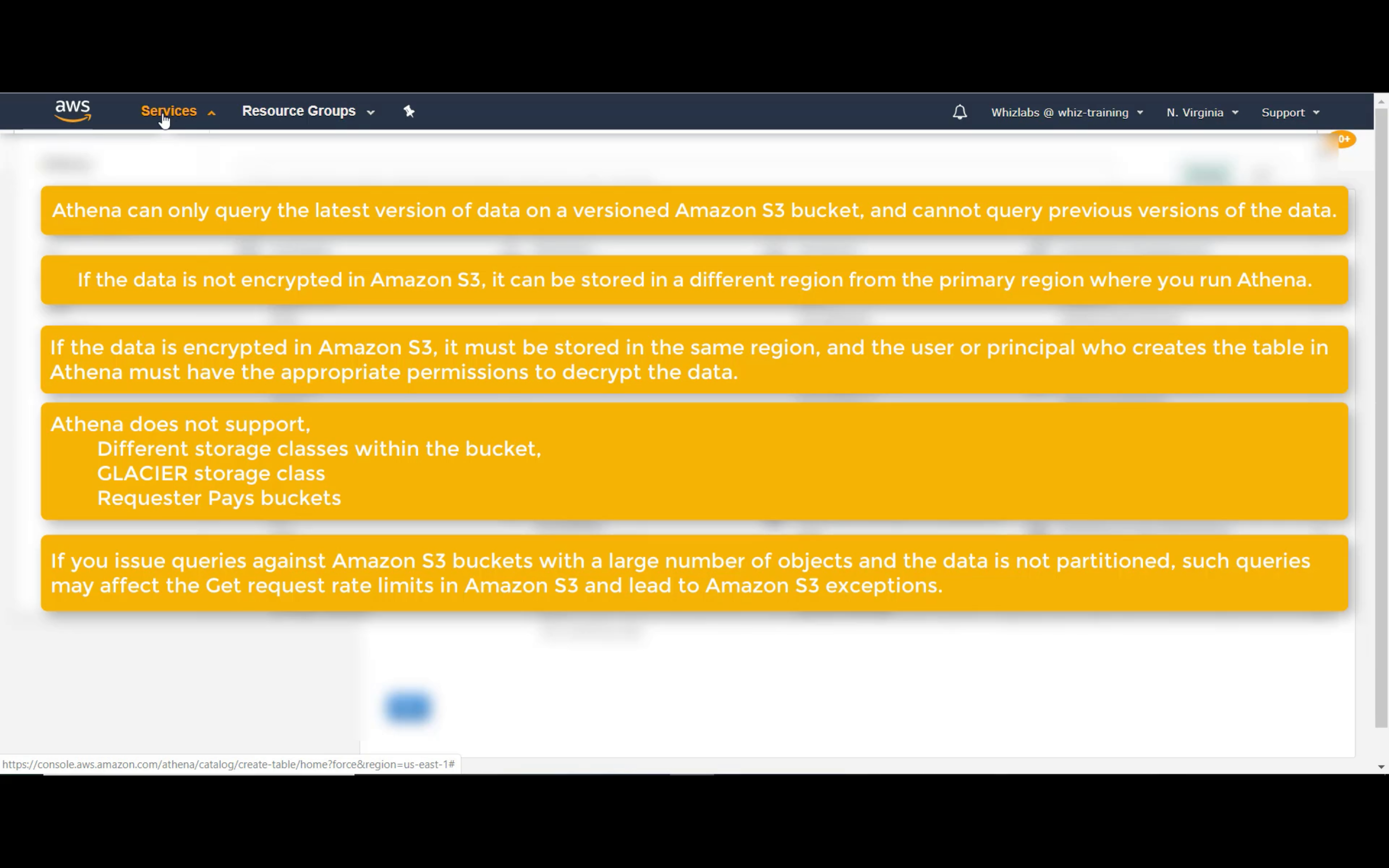

Amazon Athena

Query data in S3 using SQL

- Serverless. Zero infrastructure. Zero administration.

- Easy to get started

- Easy to query, just use standard SQL

- Pay per query

- Fast performance

- Highly available & durable

- Secure

- Integrated

- Federated query [in preview]

- Machine learning [in preview]

Amazon CloudSearch

Managed search service

Amazon Elasticsearch Service

Run and scale Elasticsearch clusters

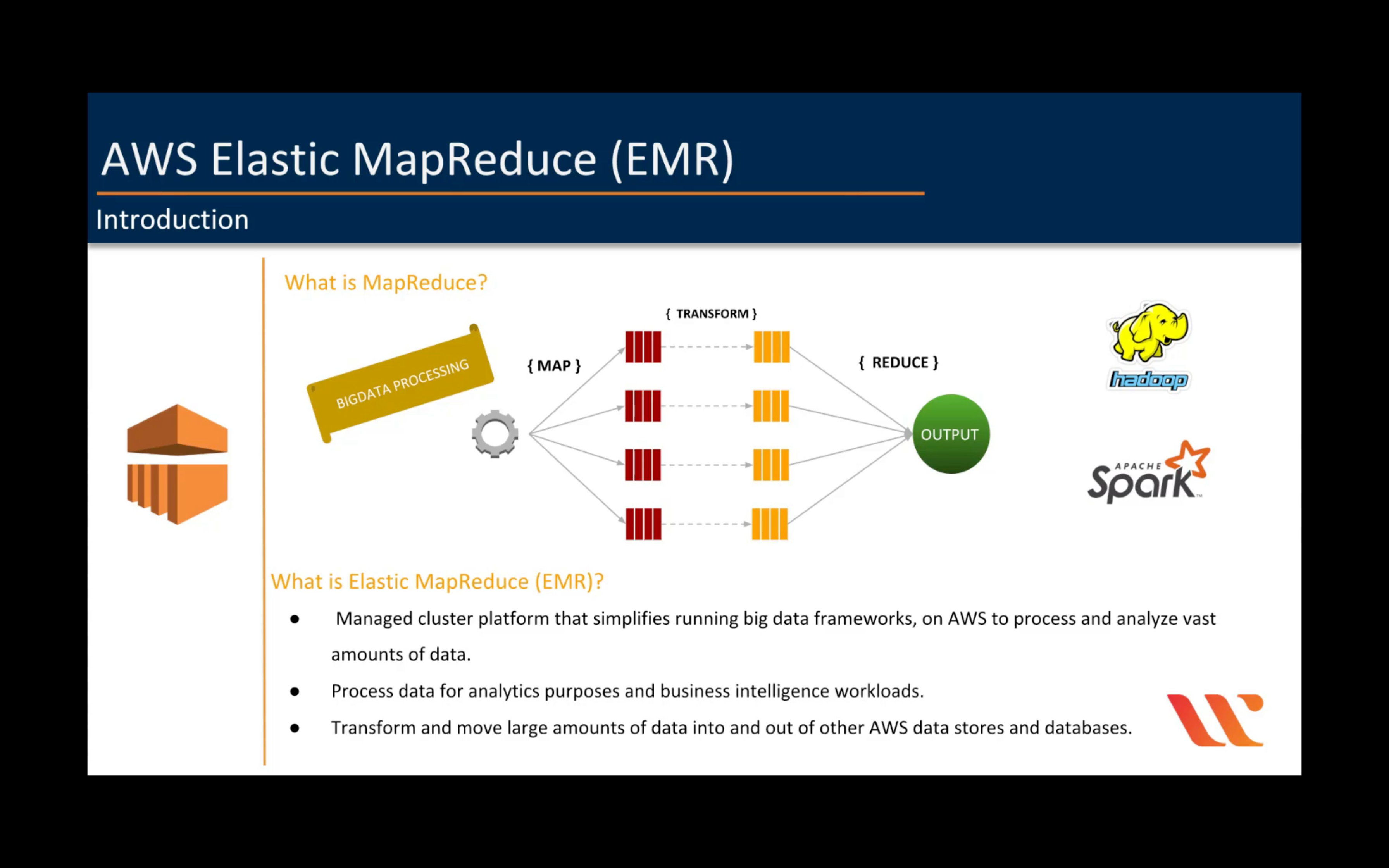

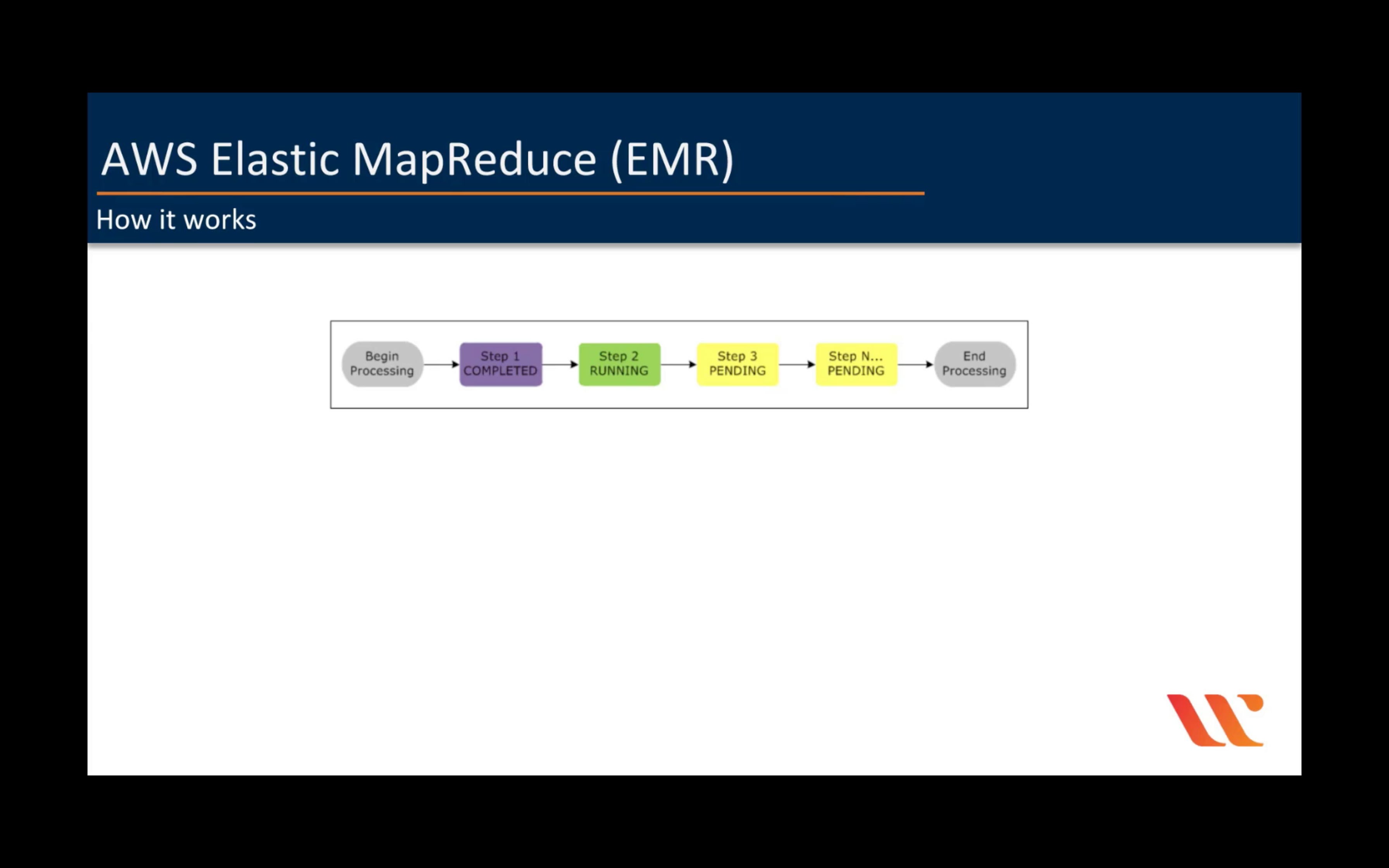

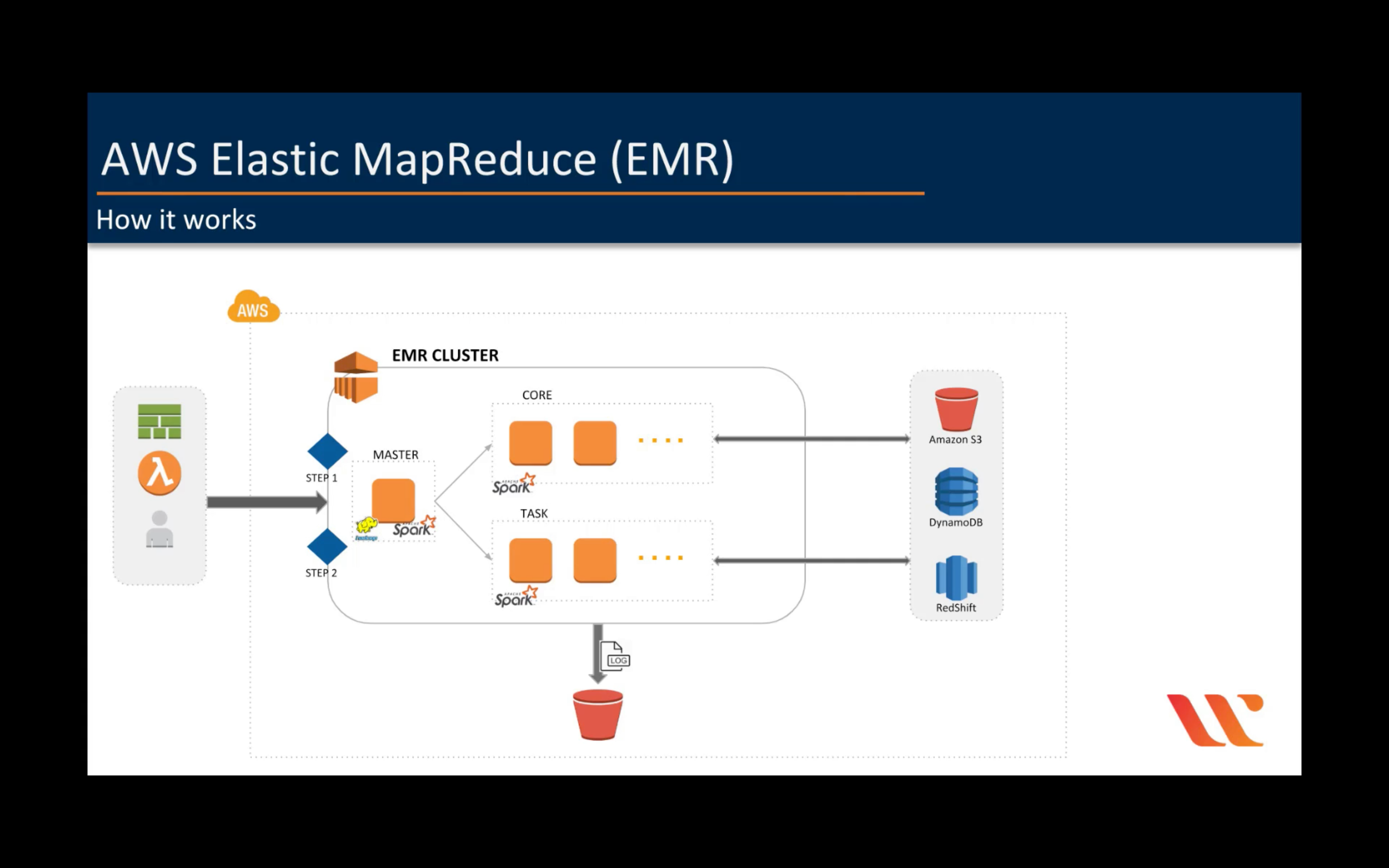

Amazon EMR

Hosted Hadoop framework

- Easy to use

- Elastic

- Low cost

- Flexible data stores

- Use your favorite open source applications

- Hadoop tools

- Consistent Hybrid Experience

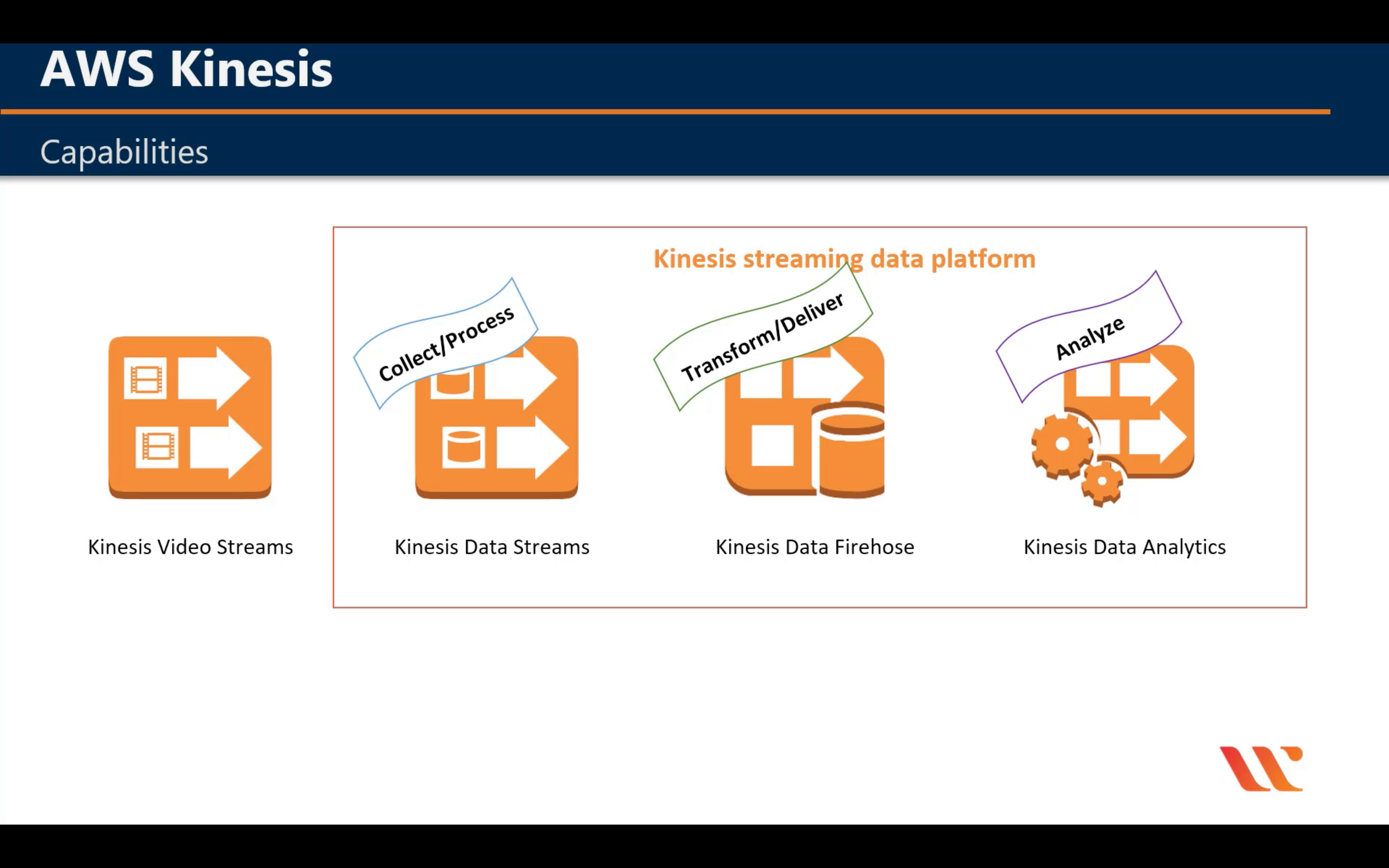

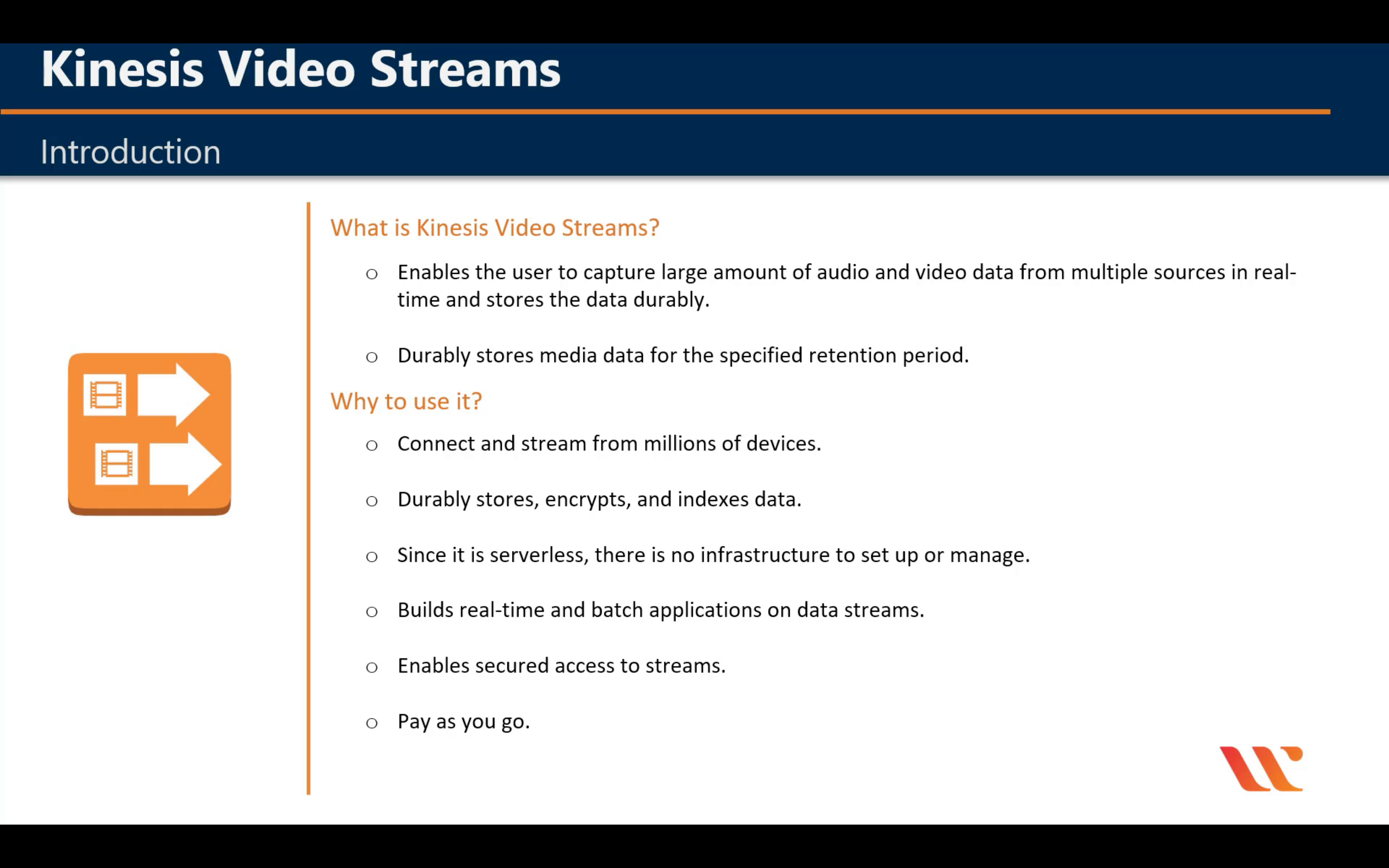

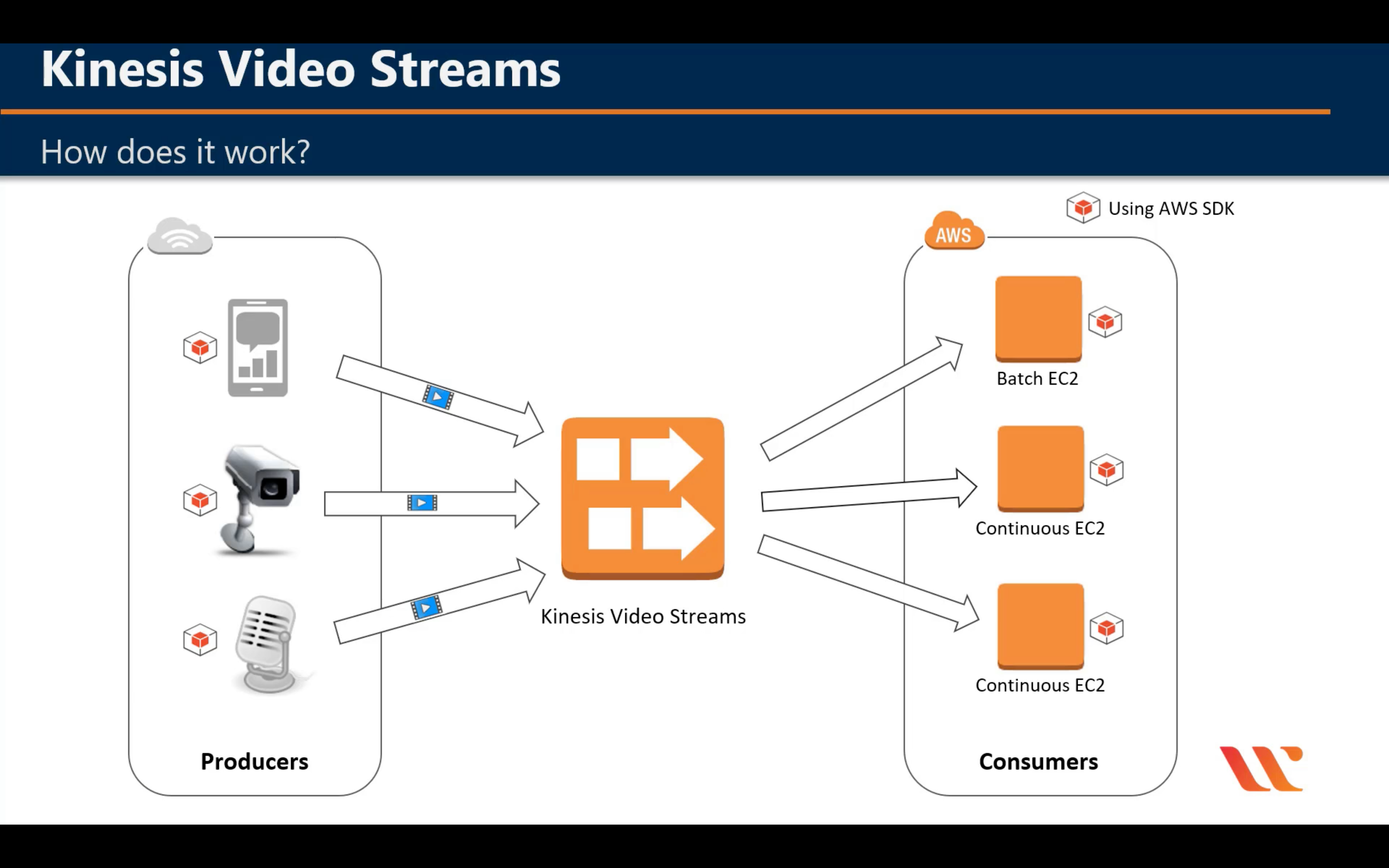

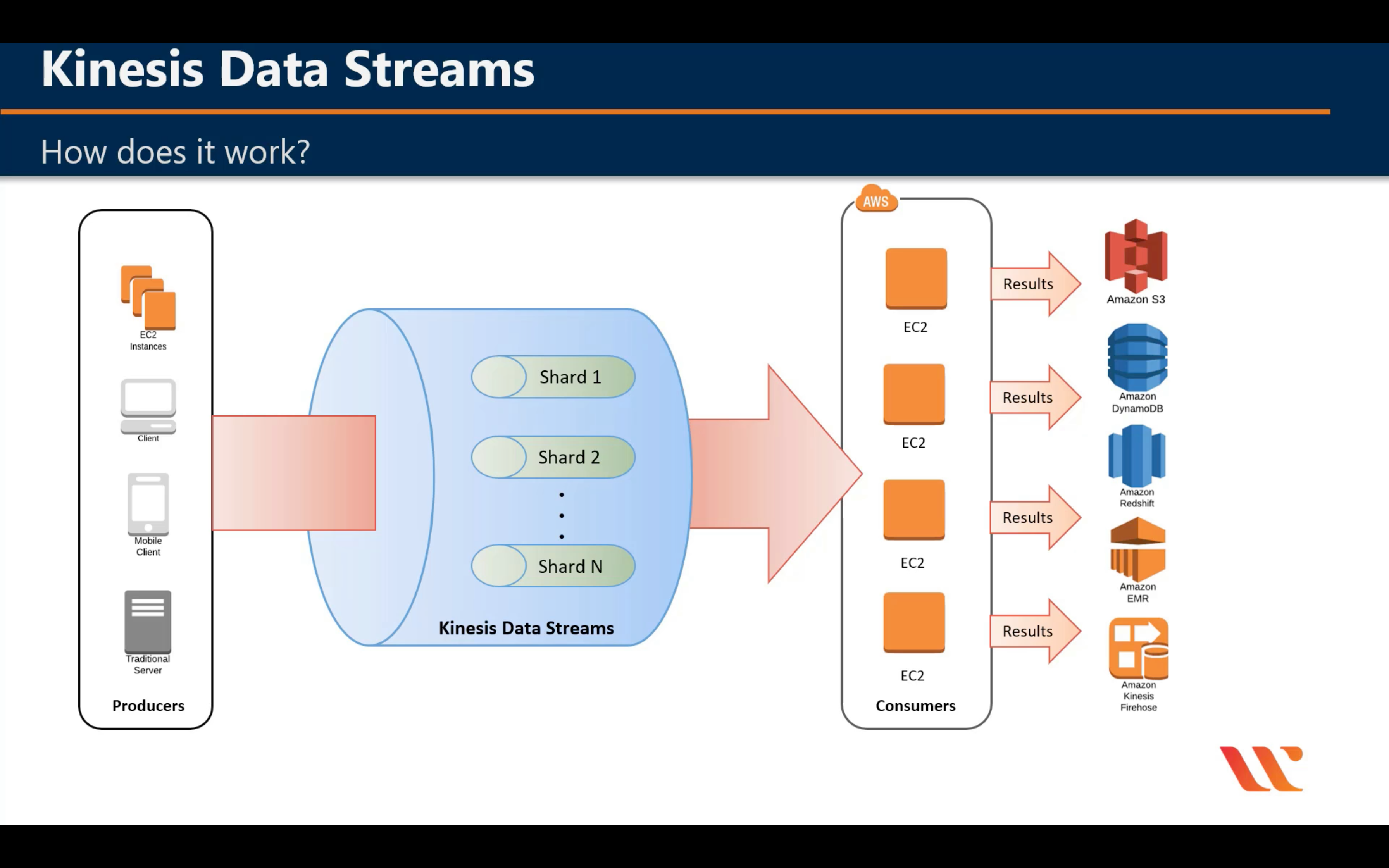

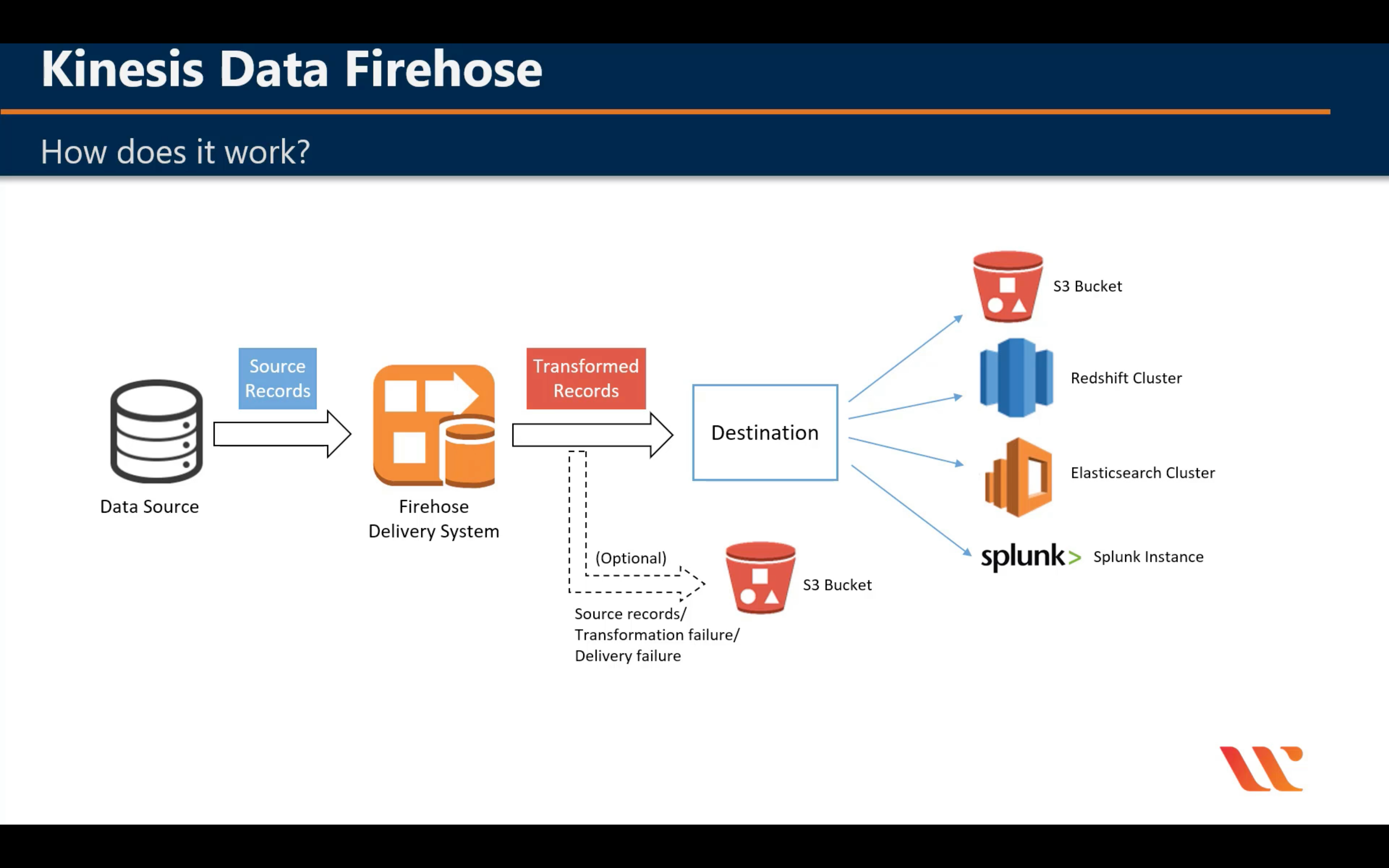

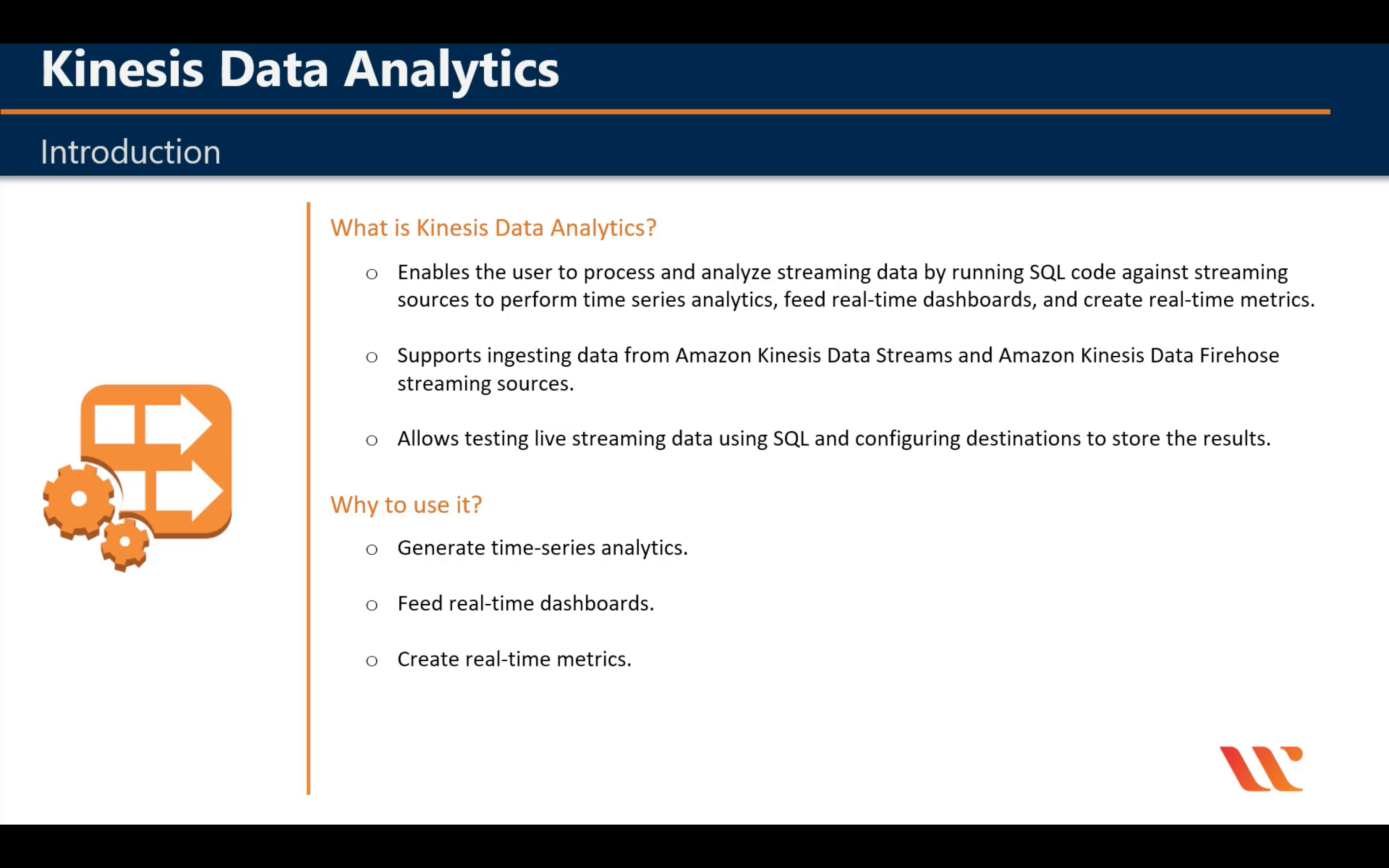

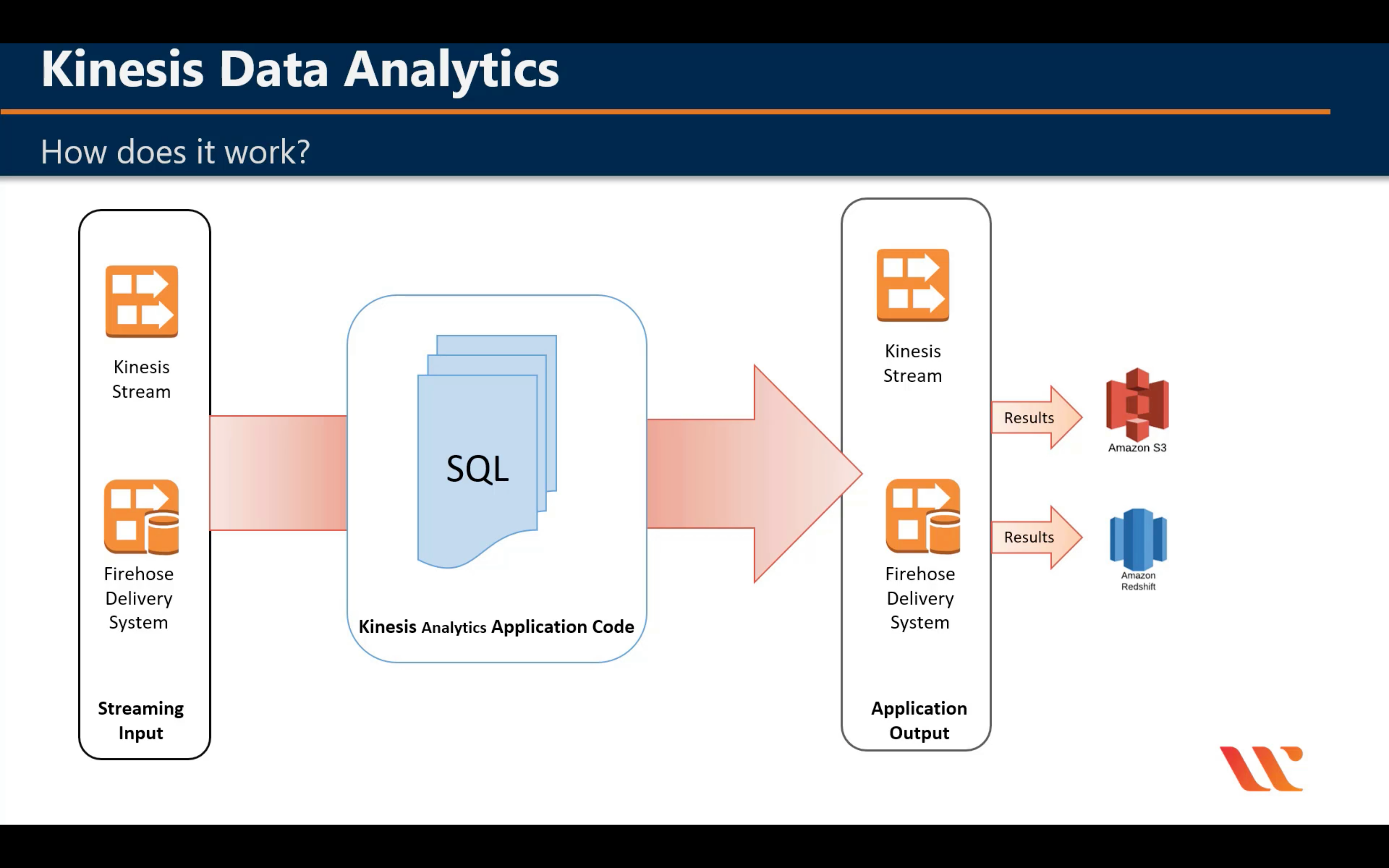

Amazon Kinesis

Analyze real-time video and data streams

Capability

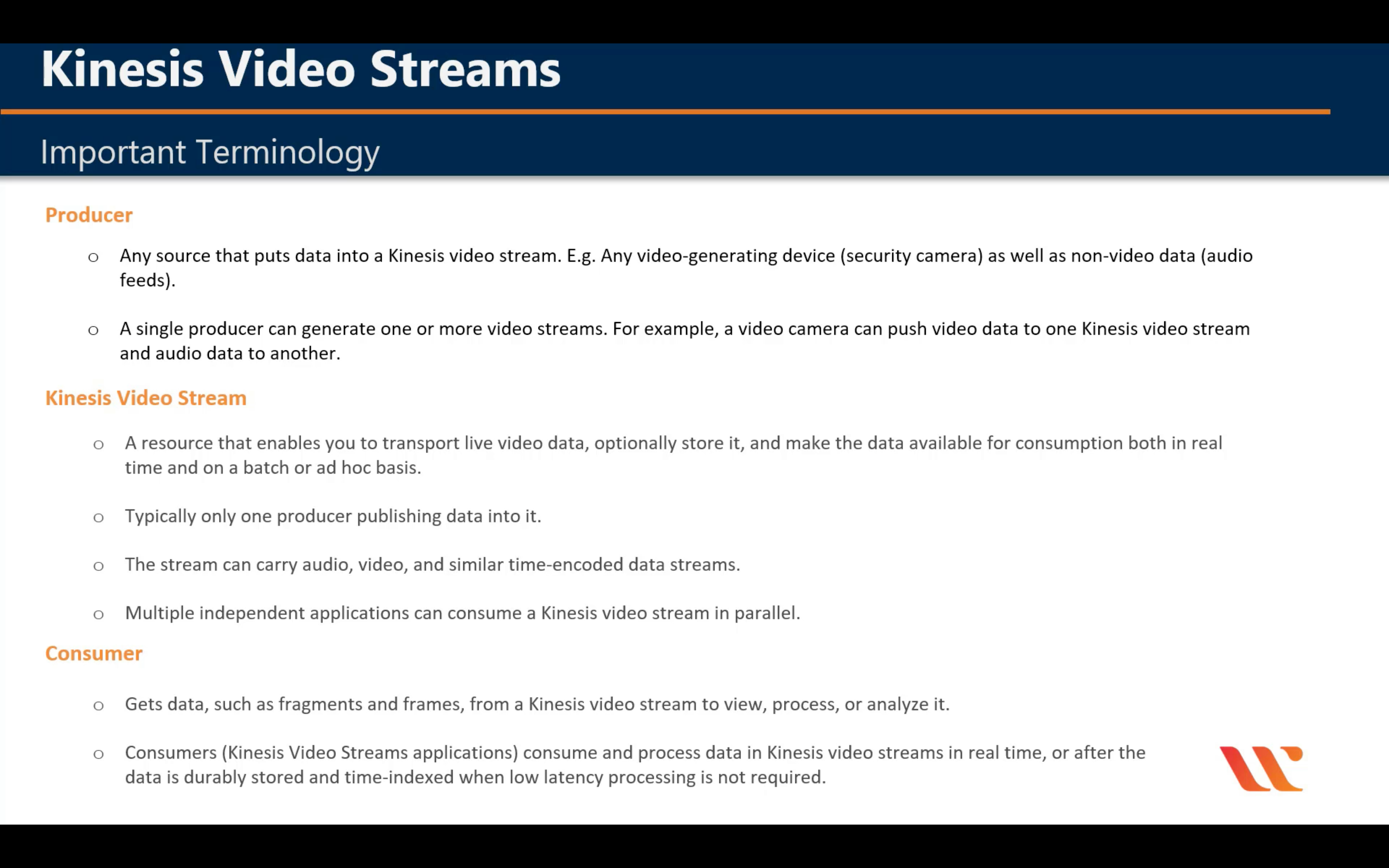

Introduction

Terminologies

Amazon Managed Streaming for Apache Kafka

Fully managed Apache Kafka service

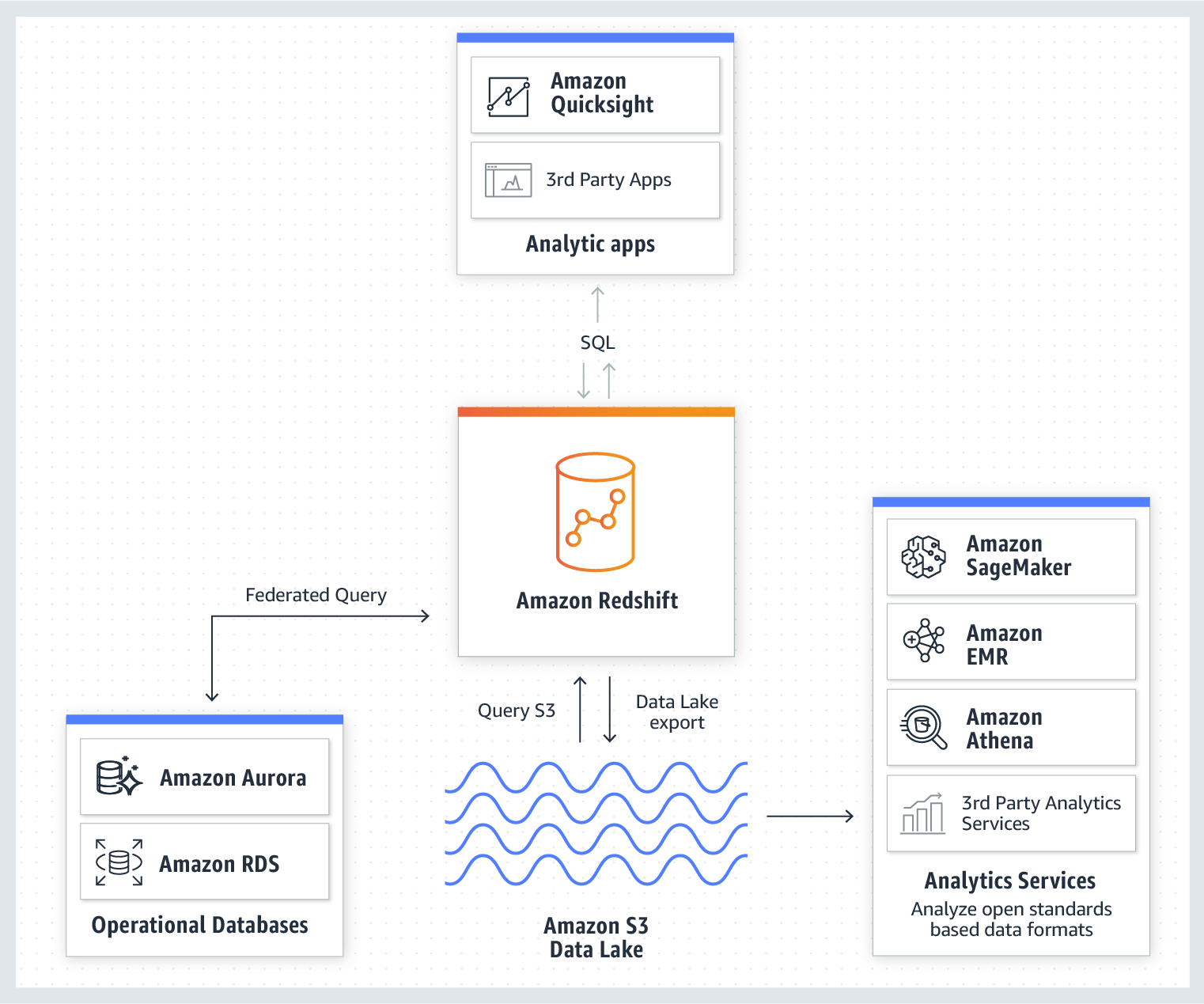

Amazon Redshift

Fast, simple, cost-effective cloud data warehouse

- Deepest integration with your data lake and AWS services

- Best performance

- Most scalable

- Best value

- Easy to manage

- Most secure and compliant

Amazon QuickSight

Fast business analytics service

- Pay only for what you use

- Pay-per-session pricing

- Scale to tens of thousands of users

- Deliver rich, interactive dashboards for your readers

- Explore, analyze, collaborate

- SPICE (super-fast, parallel, in-memory, calculation engine)

- Discover hidden insights

- ML insights

- Leverage Amazon SageMaker models (Preview)

- Build predictive dashboards with Amazon SageMaker

- Embedded analytics

- Embed dashboards and APIs

- Build end-to-end BI solutions

- Connect to your data, wherever it is

- A Global Solution

- Achieve security and compliance

- Mobile

AWS Data Exchange

Find, subscribe to, and use third-party data in the cloud

AWS Data Pipeline

Orchestration service for periodic, data-driven workflows

AWS Glue

Prepare and load data

AWS Lake Formation

Build a secure data lake in days

Application Integration

AWS Step Functions

Coordination for distributed applications

Amazon EventBridge

Serverless event bus for SaaS apps & AWS services

Amazon MQ

Managed message broker for ActiveMQ

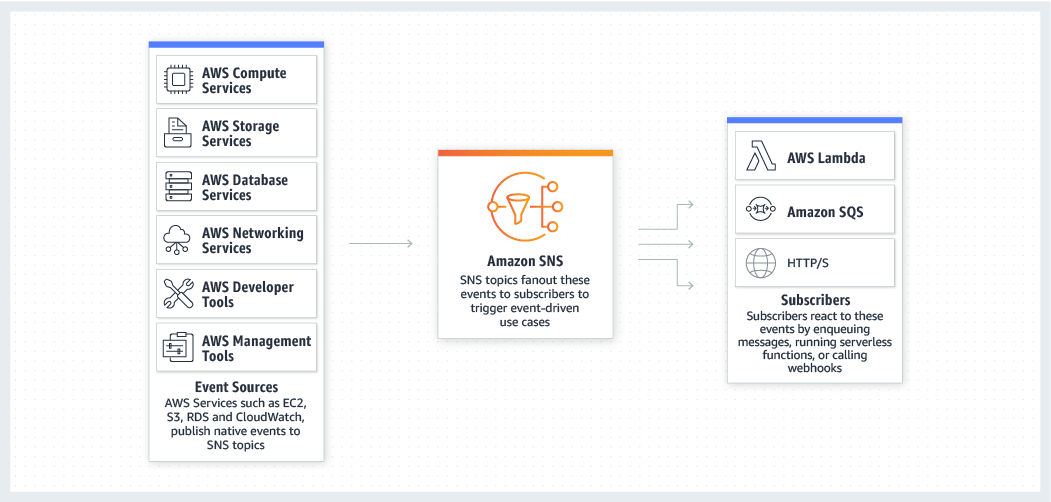

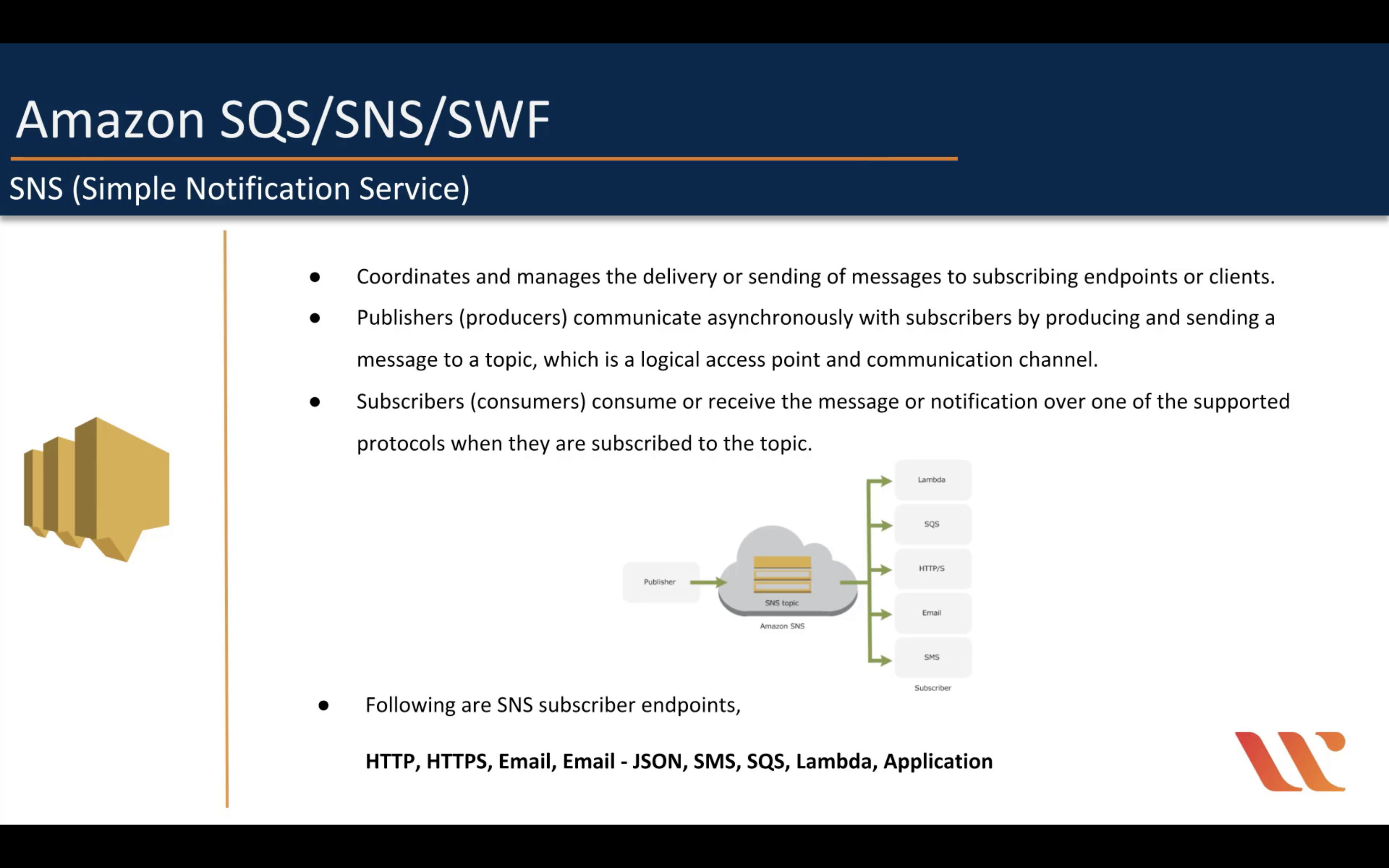

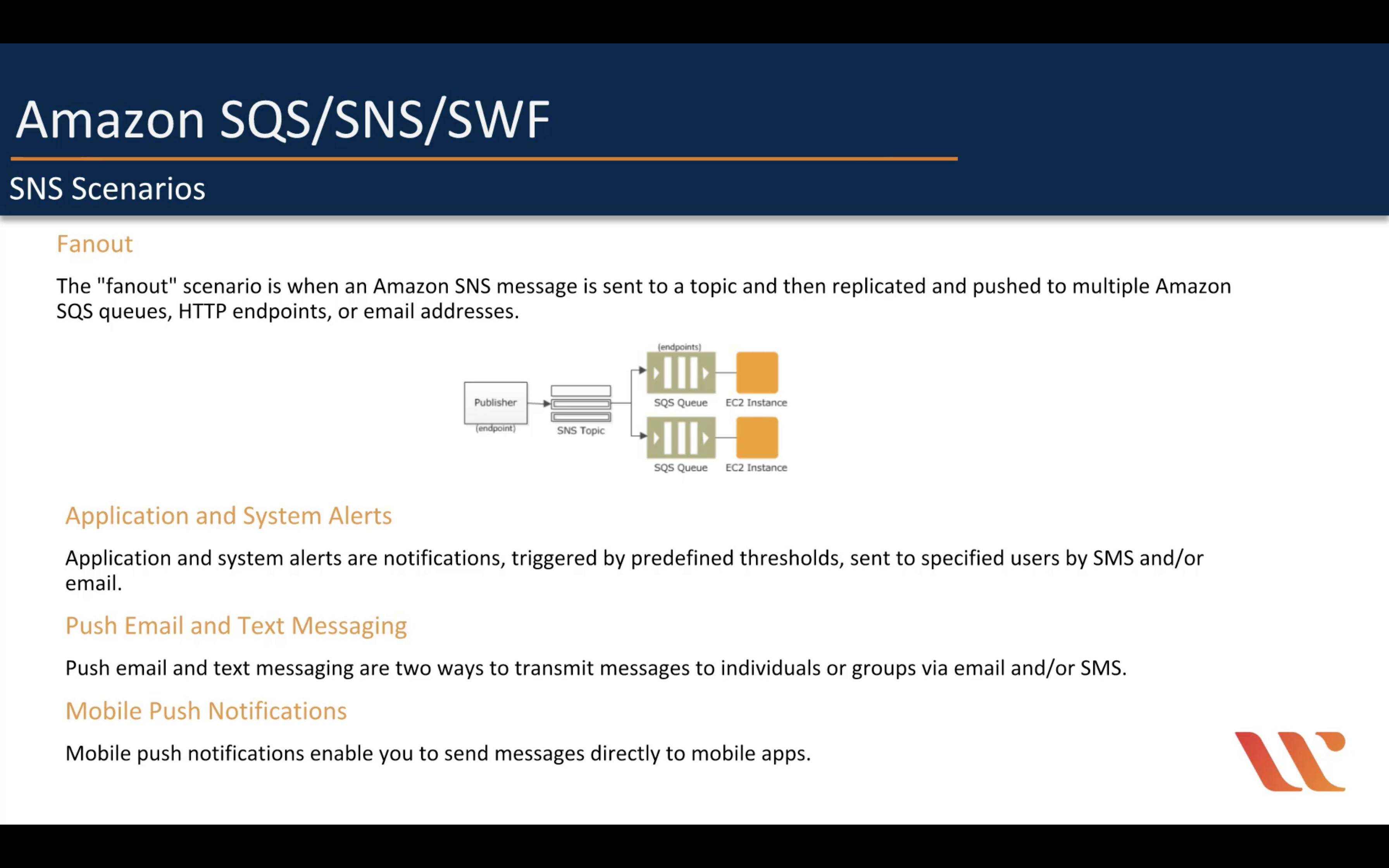

Amazon Simple Notification Service (SNS)

Managed message topics for pub/sub

- Event sources and destinations

- Message filtering

- Message fanout

- Message durability

- Message encryption

- Message privacy

- Mobile notifications

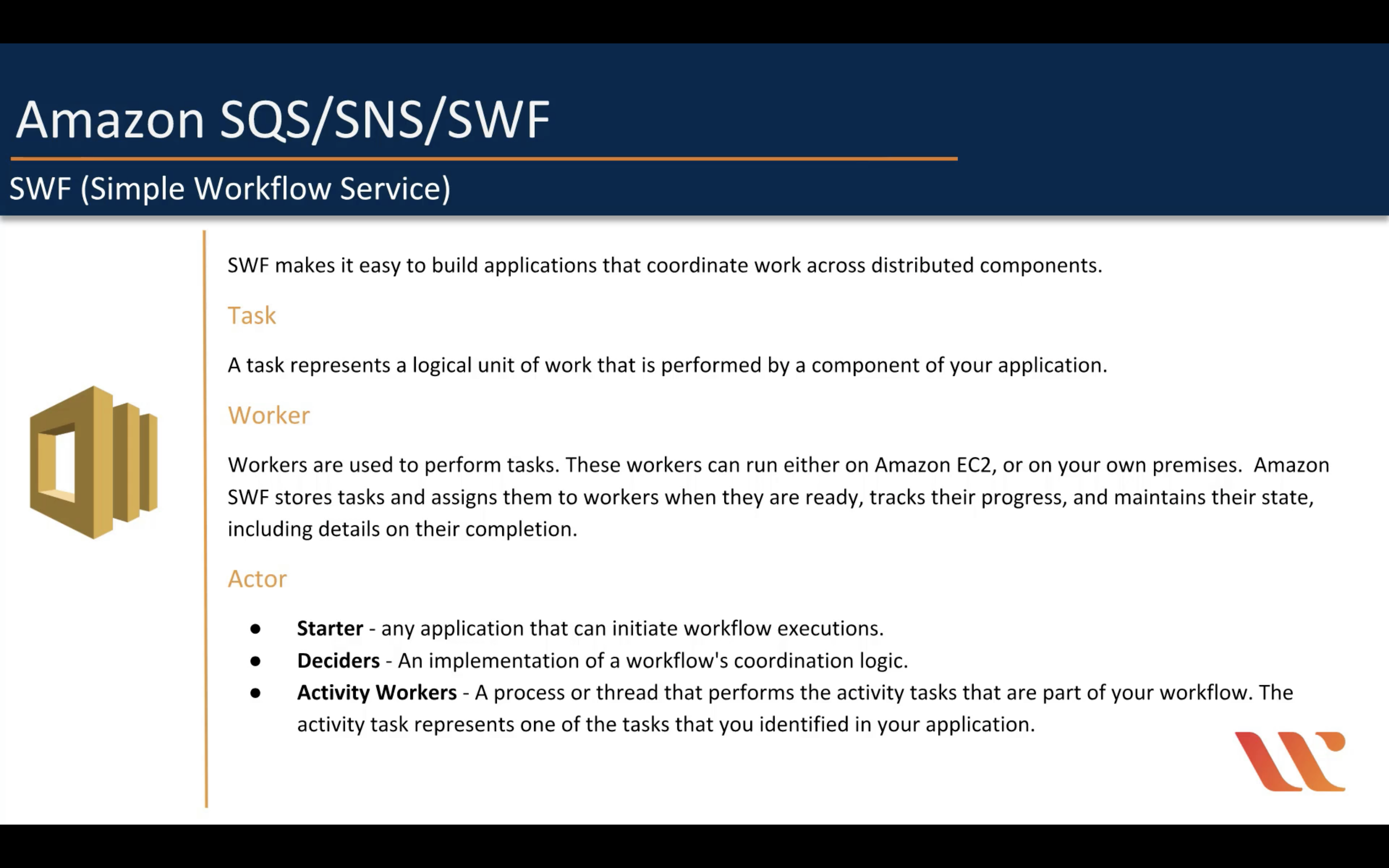

Amazon Simple Workflow (SWF)

Amazon Simple Queue Service (SQS)

Managed message queues

- Standard Queues

- Unlimited Throughput: Standard queues support a nearly unlimited number of transactions per second (TPS) per API action.

- At-Least-Once Delivery: A message is delivered at least once, but occasionally more than one copy of a message is delivered.

- Best-Effort Ordering: Occasionally, messages might be delivered in an order different from which they were sent.

- FIFO Queues

- High Throughput: By default, FIFO queues support up to 300 messages per second (300 send, receive, or delete operations per second). When you batch 10 messages per operation (maximum), FIFO queues can support up to 3,000 messages per second. To request a quota increase, file a support request.

- Exactly-Once Processing: A message is delivered once and remains available until a consumer processes and deletes it. Duplicates aren’t introduced into the queue.

- First-In-First-Out Delivery: The order in which messages are sent and received is strictly preserved (i.e. First-In-First-Out).
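The FIFO throughput figures above are simple arithmetic: operations per second multiplied by messages per batched operation. A small sketch, using only the numbers quoted in the list (the function name is illustrative):

```python
FIFO_OPERATIONS_PER_SECOND = 300  # default quota quoted above
MAX_BATCH_SIZE = 10               # maximum messages per batched operation


def fifo_messages_per_second(batch_size: int = 1) -> int:
    """Messages per second for a FIFO queue at a given batch size."""
    if not 1 <= batch_size <= MAX_BATCH_SIZE:
        raise ValueError("batch_size must be between 1 and 10")
    return FIFO_OPERATIONS_PER_SECOND * batch_size


unbatched = fifo_messages_per_second()
batched = fifo_messages_per_second(10)
print(unbatched, batched)  # 300 3000
```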

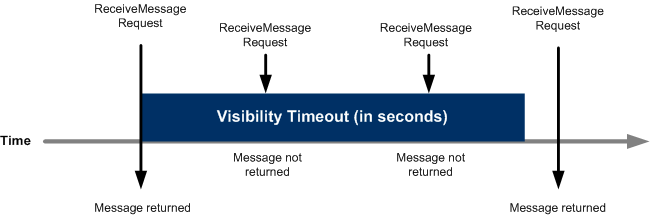

When a consumer receives and processes a message from a queue, the message remains in the queue. Amazon SQS doesn’t automatically delete the message. Because Amazon SQS is a distributed system, there’s no guarantee that the consumer actually receives the message (for example, due to a connectivity issue, or due to an issue in the consumer application). Thus, the consumer must delete the message from the queue after receiving and processing it.
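The receive, process, delete cycle can be illustrated with a toy in-memory queue. This is not the SQS API; `ToyQueue` and its methods are invented for the example, and `release_in_flight` stands in for the visibility timeout expiring, after which an undeleted message becomes receivable again.

```python
class ToyQueue:
    """In-memory stand-in for a queue; not the SQS API."""

    def __init__(self):
        self._messages = {}      # message id -> body
        self._in_flight = set()  # ids received but not yet deleted
        self._next_id = 0

    def send(self, body):
        self._messages[self._next_id] = body
        self._next_id += 1

    def receive(self):
        """Return (id, body) of one visible message, or None if there is none."""
        for msg_id, body in self._messages.items():
            if msg_id not in self._in_flight:
                self._in_flight.add(msg_id)
                return msg_id, body
        return None

    def delete(self, msg_id):
        self._messages.pop(msg_id, None)
        self._in_flight.discard(msg_id)

    def release_in_flight(self):
        """Model the visibility timeout expiring: undeleted messages reappear."""
        self._in_flight.clear()

    def __len__(self):
        return len(self._messages)


queue = ToyQueue()
queue.send("order-1")
queue.send("order-2")

# Consumer A receives and processes a message but never deletes it.
queue.receive()

# Consumer B receives, processes, then deletes explicitly.
msg_id, body = queue.receive()
queue.delete(msg_id)

queue.release_in_flight()
remaining = queue.receive()
print(len(queue), remaining)
```

Only the message that was explicitly deleted is gone; the one that was merely received is delivered again, which is why consumers must delete after processing.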

AWS AppSync

Power your apps with the right data from many sources, at scale

AR & VR

Amazon Sumerian

Build and run AR and VR applications

AWS Cost Management

AWS Cost Explorer

Analyze your AWS cost and usage

AWS Budgets

Set custom cost and usage budgets

AWS Cost and Usage Report

Access comprehensive cost and usage information

Reserved Instance Reporting

Dive deeper into your reserved instances (RIs)

Savings Plans

Save up to 72% on compute usage with flexible pricing

Blockchain

Amazon Managed Blockchain

Create and manage scalable blockchain networks

Amazon Quantum Ledger Database (QLDB)

Fully managed ledger database

Business Applications

Alexa for Business

Empower your organization with Alexa

- Meeting Rooms Experience

- Meeting Room Utilization

- Employee Productivity

- Administration and Management

- Provision Alexa devices

- Enable access to your corporate calendar

- Manage shared devices

- Manage skills

- Configure conference rooms

- Manage user enrollment

- Delete voice & response history via API

- Enable or disable voice recording reviews

- Developer

- Build private skills

- Access Alexa for Business APIs

- Integrate AVS devices with Alexa for Business

- Send Announcements

Amazon Chime

Frustration-free meetings, video calls, and chat

Amazon WorkDocs

Secure enterprise document storage and sharing

Amazon WorkMail

Secure email and calendaring

Compute

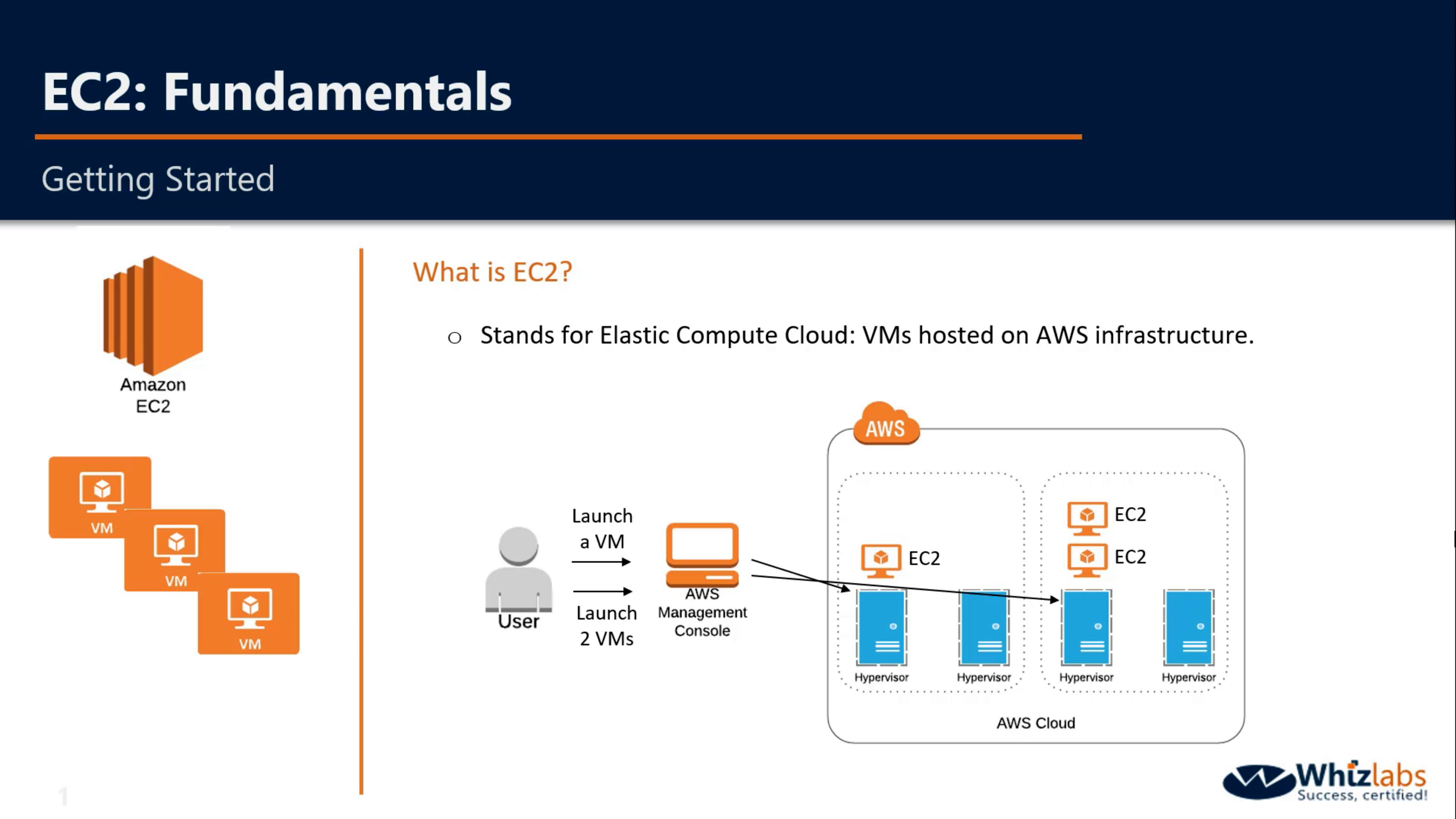

Amazon EC2

Virtual servers in the cloud

- Functionality

- OS

- Amazon Linux

- Windows Server 2012

- CentOS 6.5

- Debian 7.4

- Software

- SAP BusinessObjects

- LAMP Stacks

- Drupal

Summary

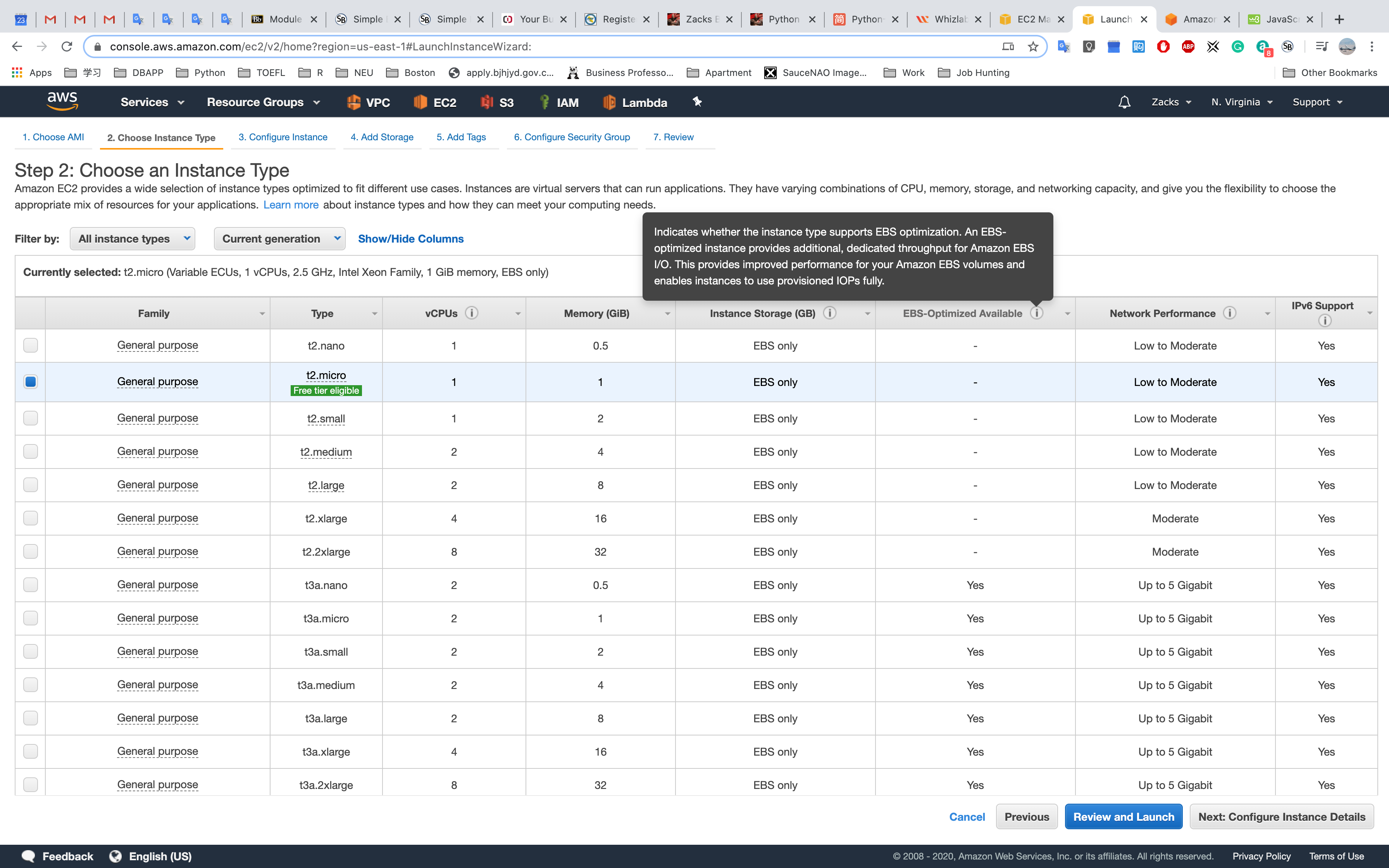

EC2 Fundamentals

- General Purpose - T, M

- Compute Optimized - C

- Memory Optimized - R, X

- Accelerated Computing

- Storage Optimized

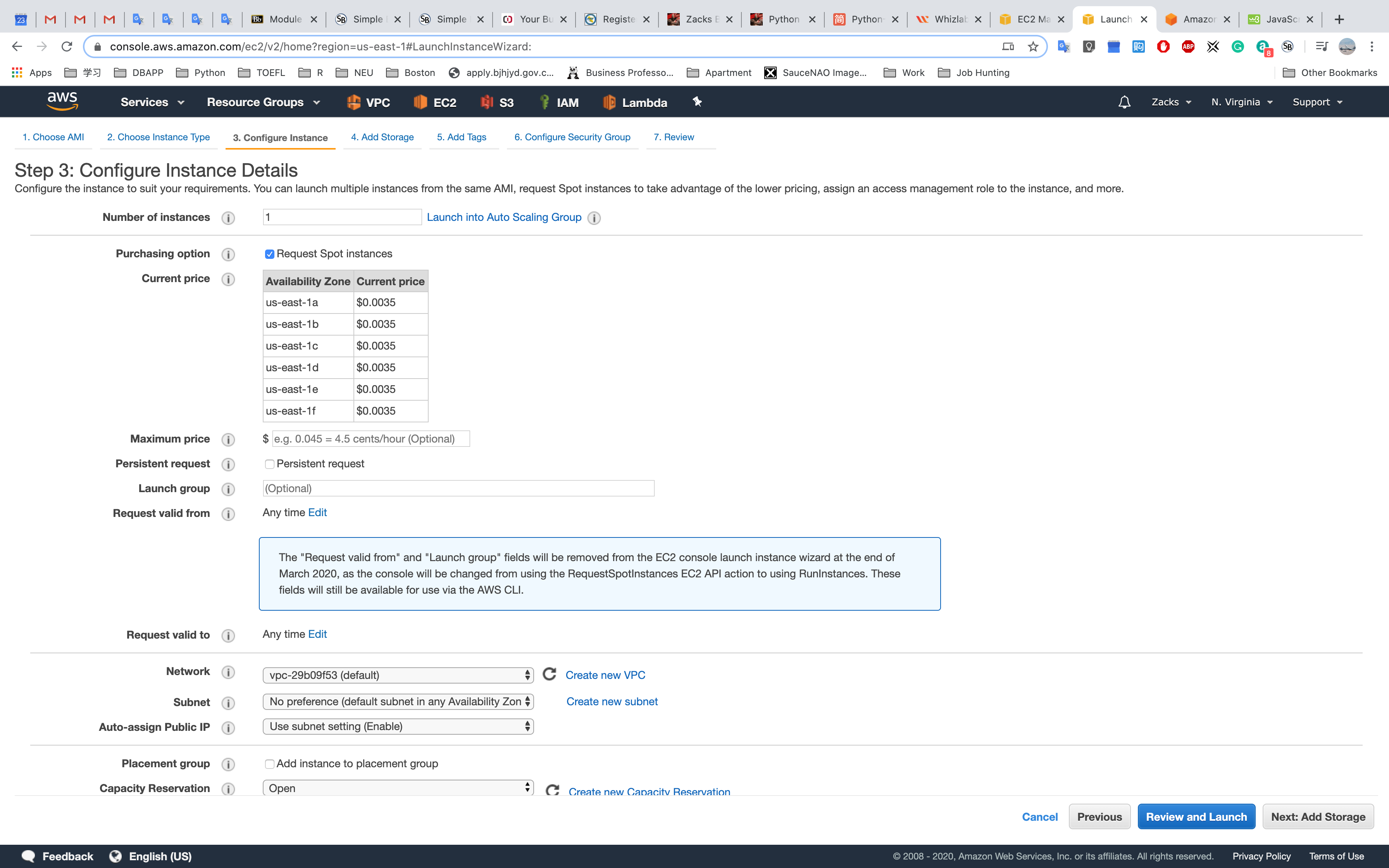

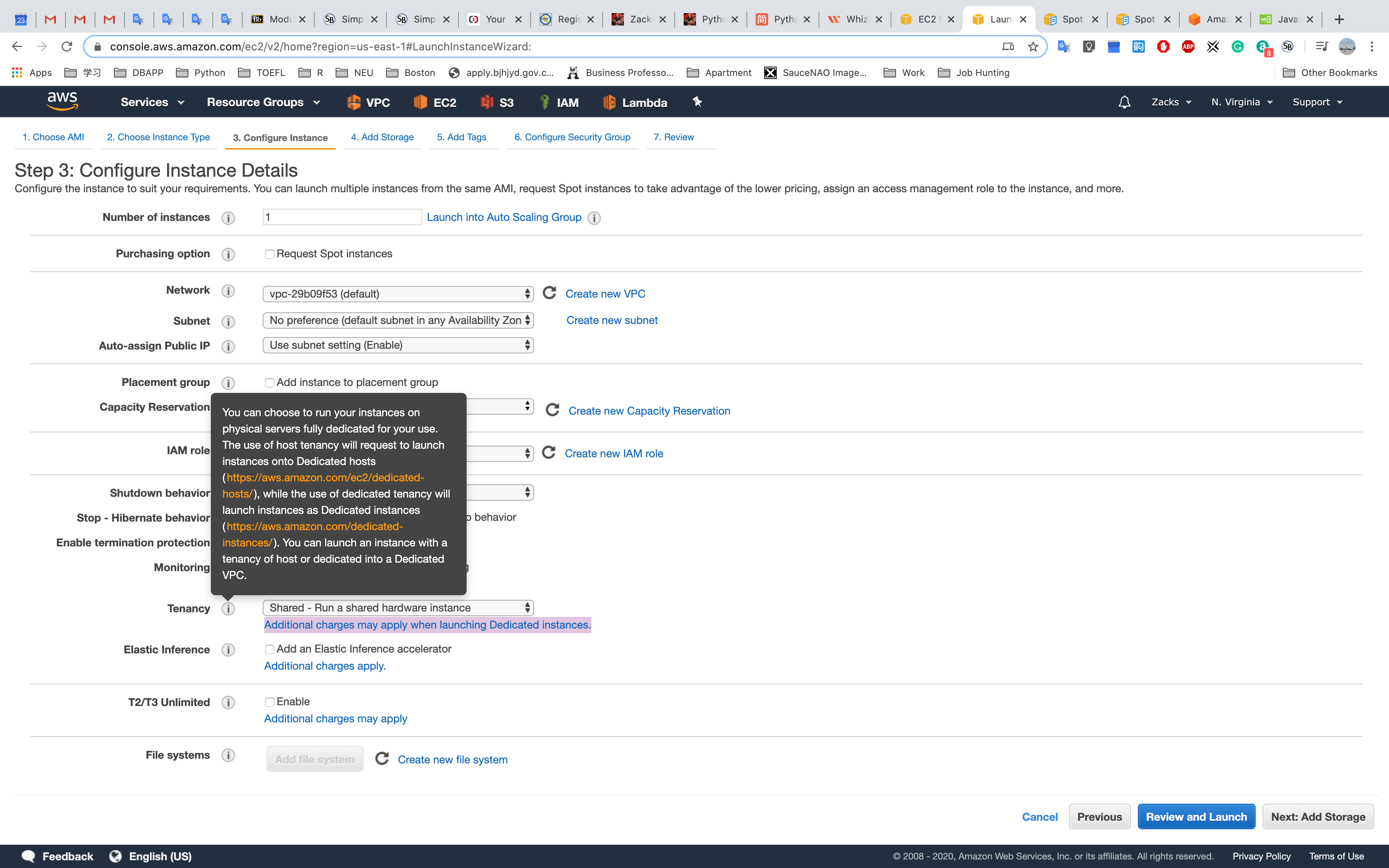

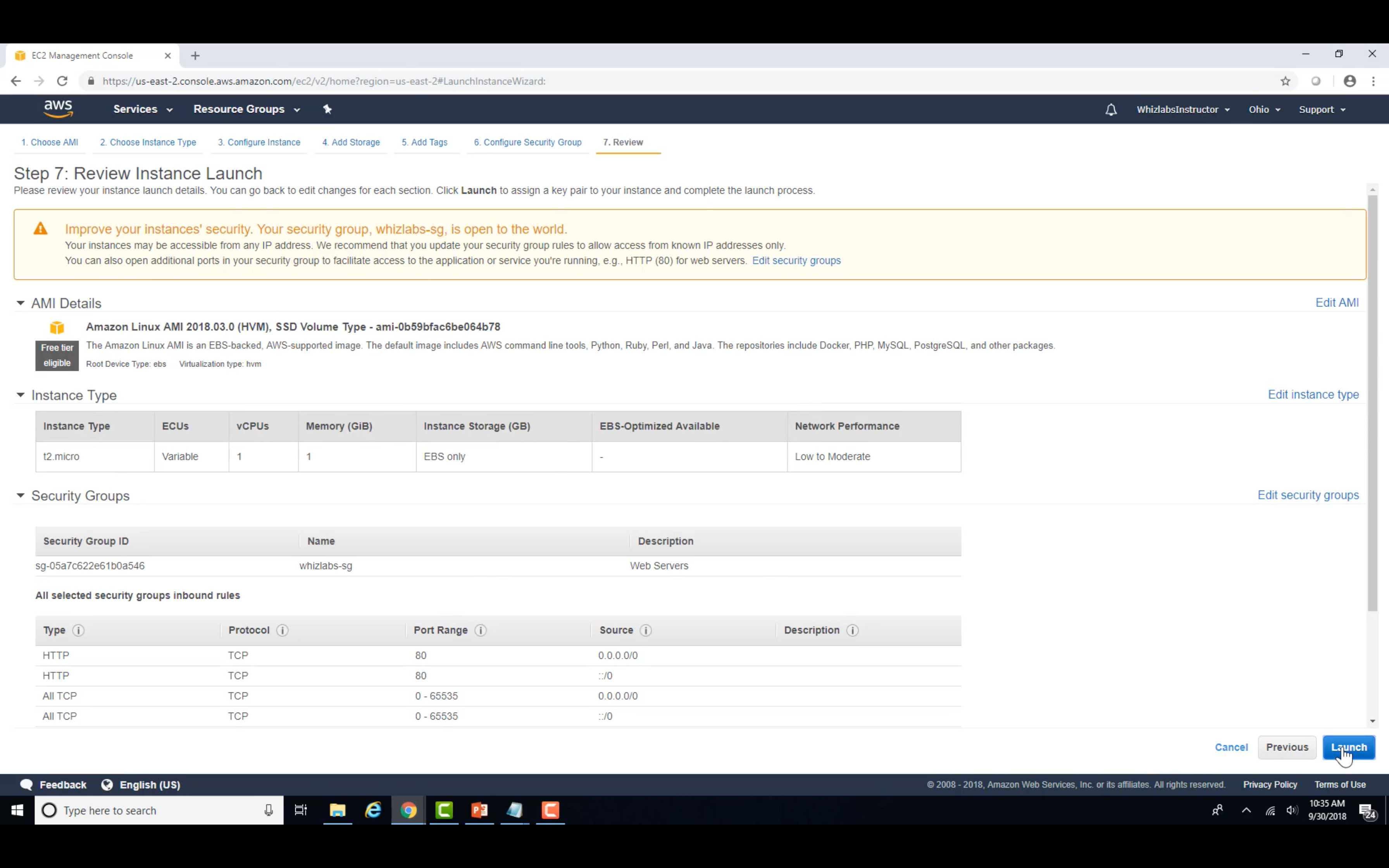

EC2 Launch Details

Number of instances

Purchasing option:

On-Demand

Reserved

Scheduled

Spot Instances

You have the option to request Spot Instances and specify the maximum price you are willing to pay per instance hour. If you bid higher than the current Spot Price, your Spot Instance is launched and will be charged at the current Spot Price. Spot Prices often are significantly lower than On-Demand prices, so using Spot Instances for flexible, interruption-tolerant applications can lower your instance costs by up to 90%.

Dedicated Host

Dedicated Instance

On-Demand

Reserved

Scheduled

Spot

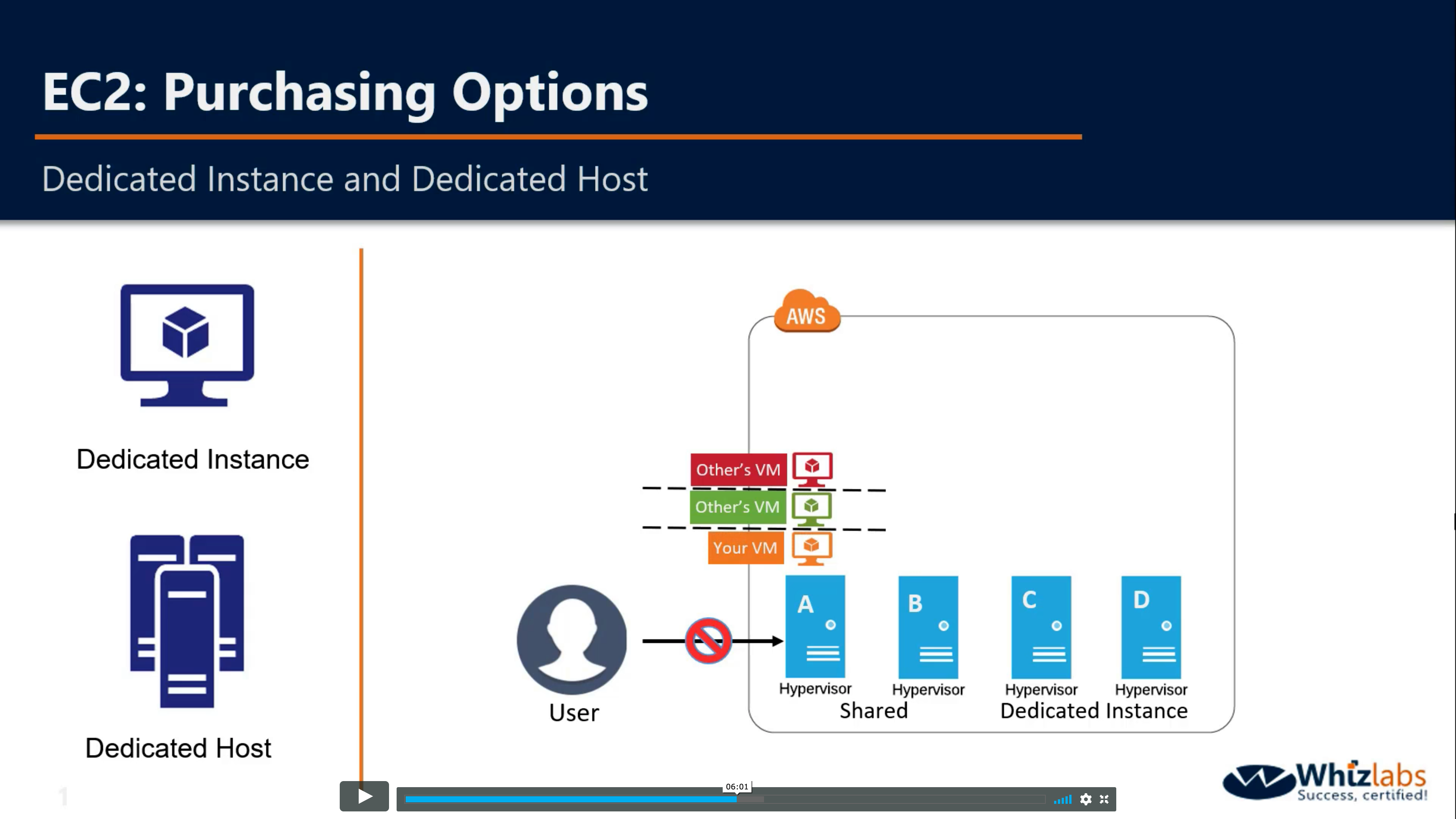

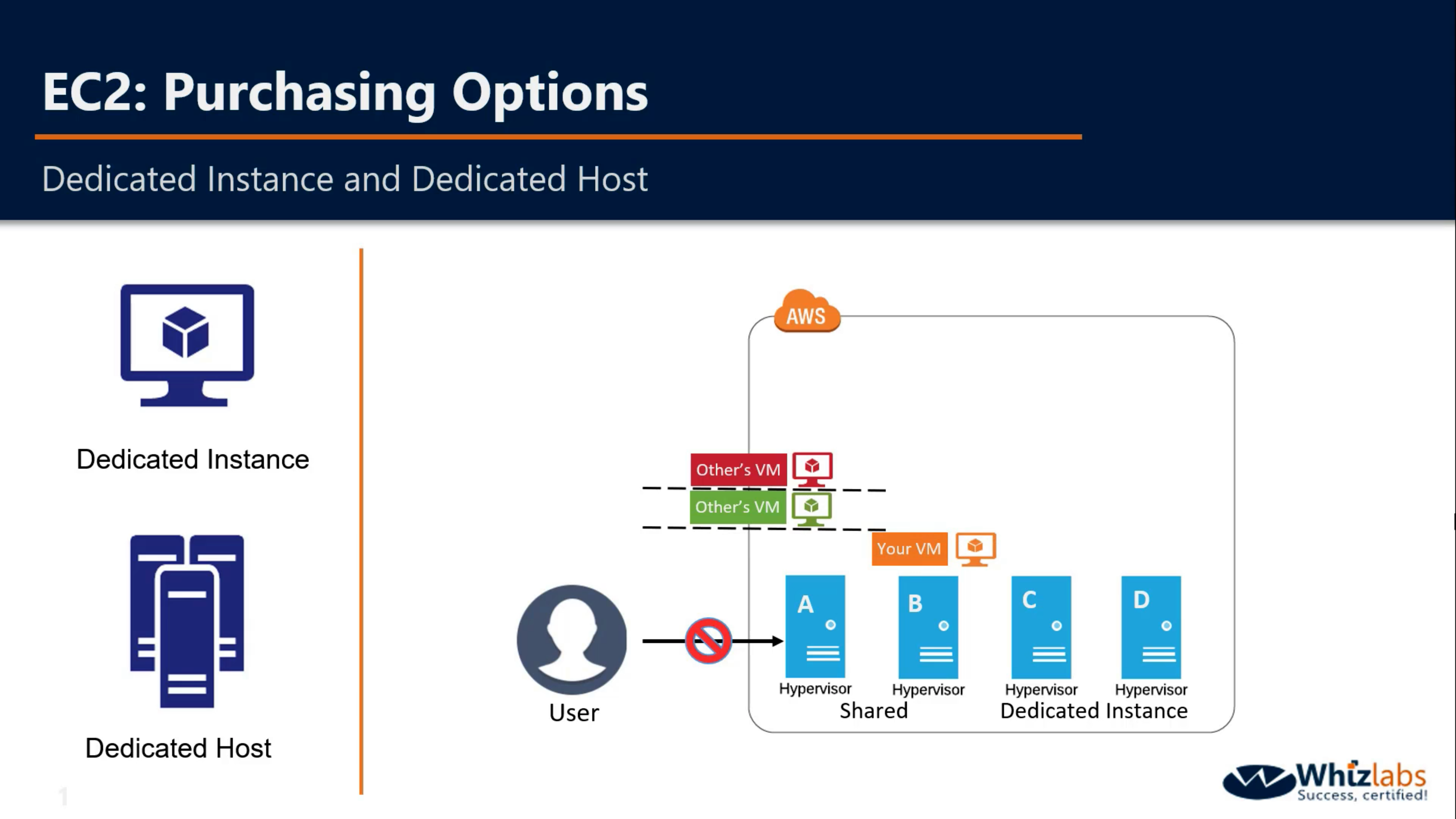

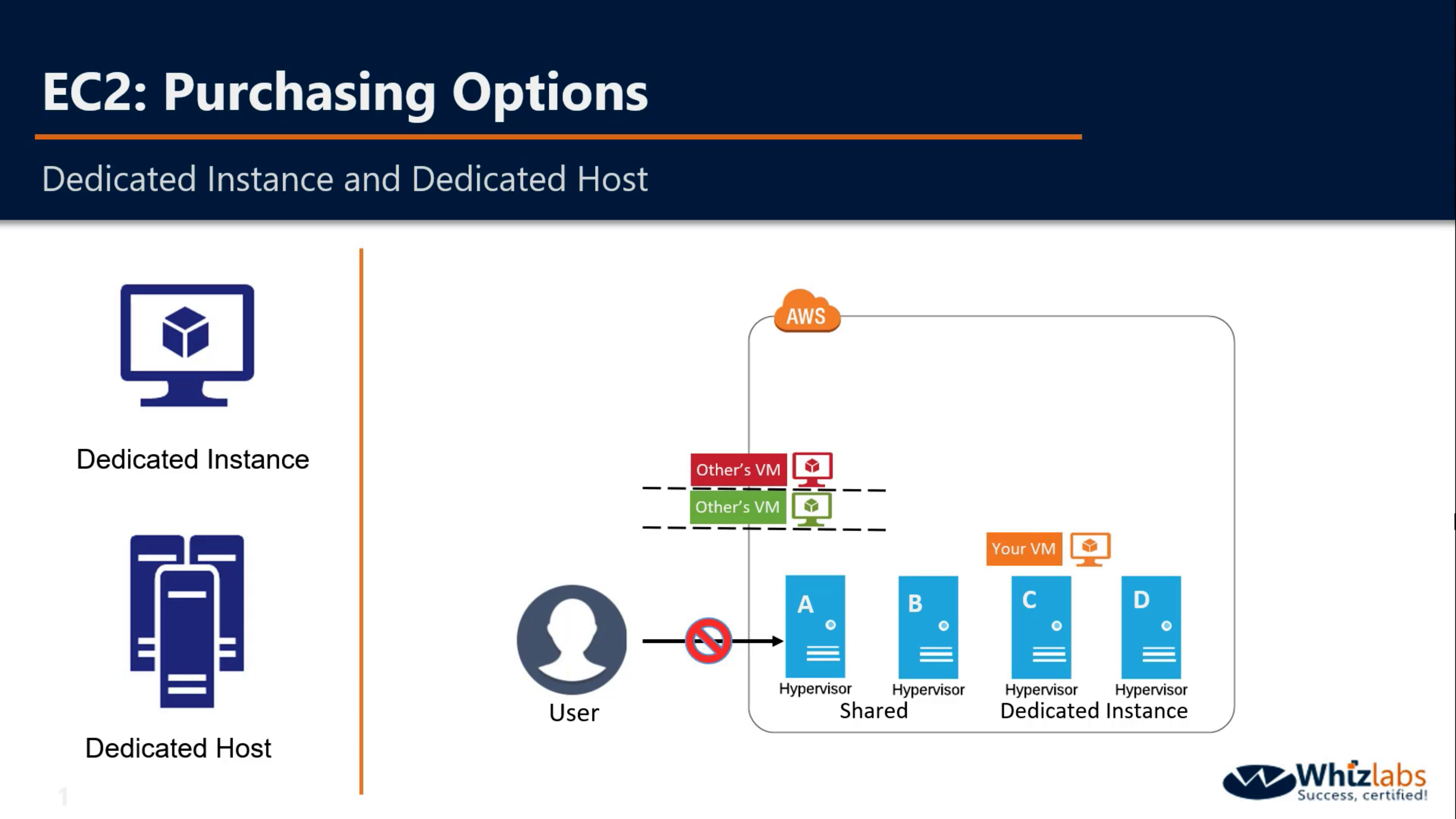

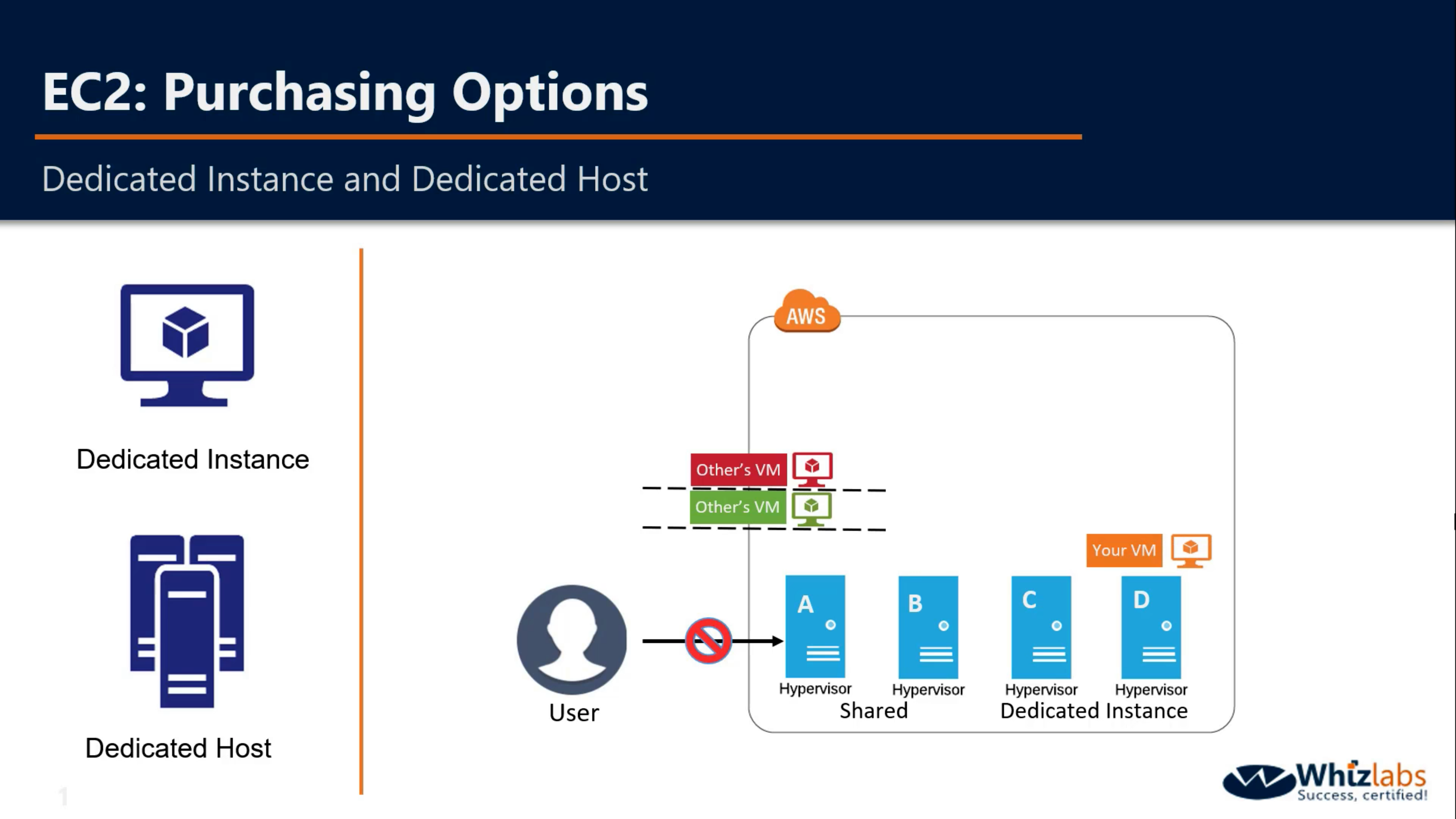

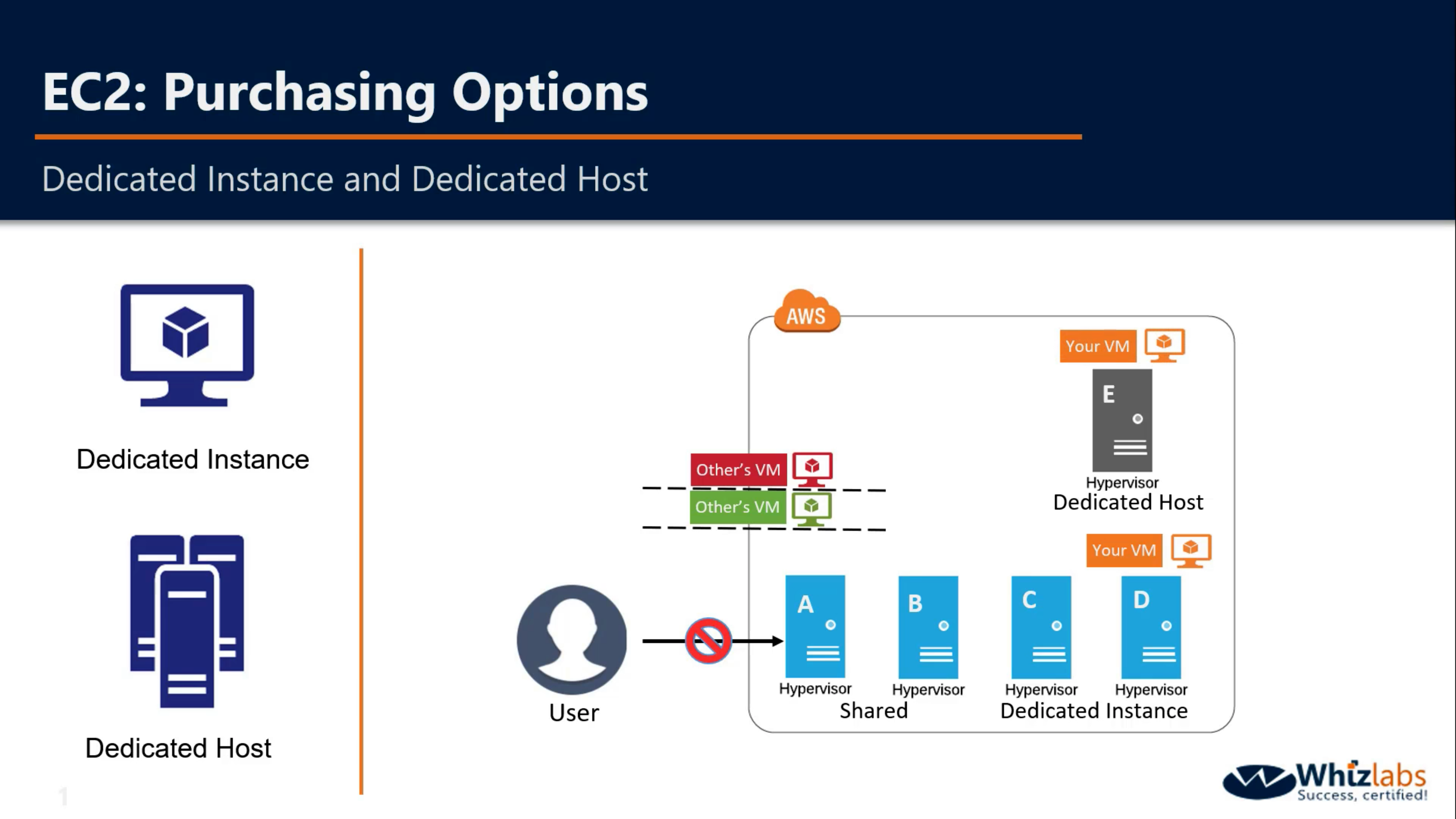

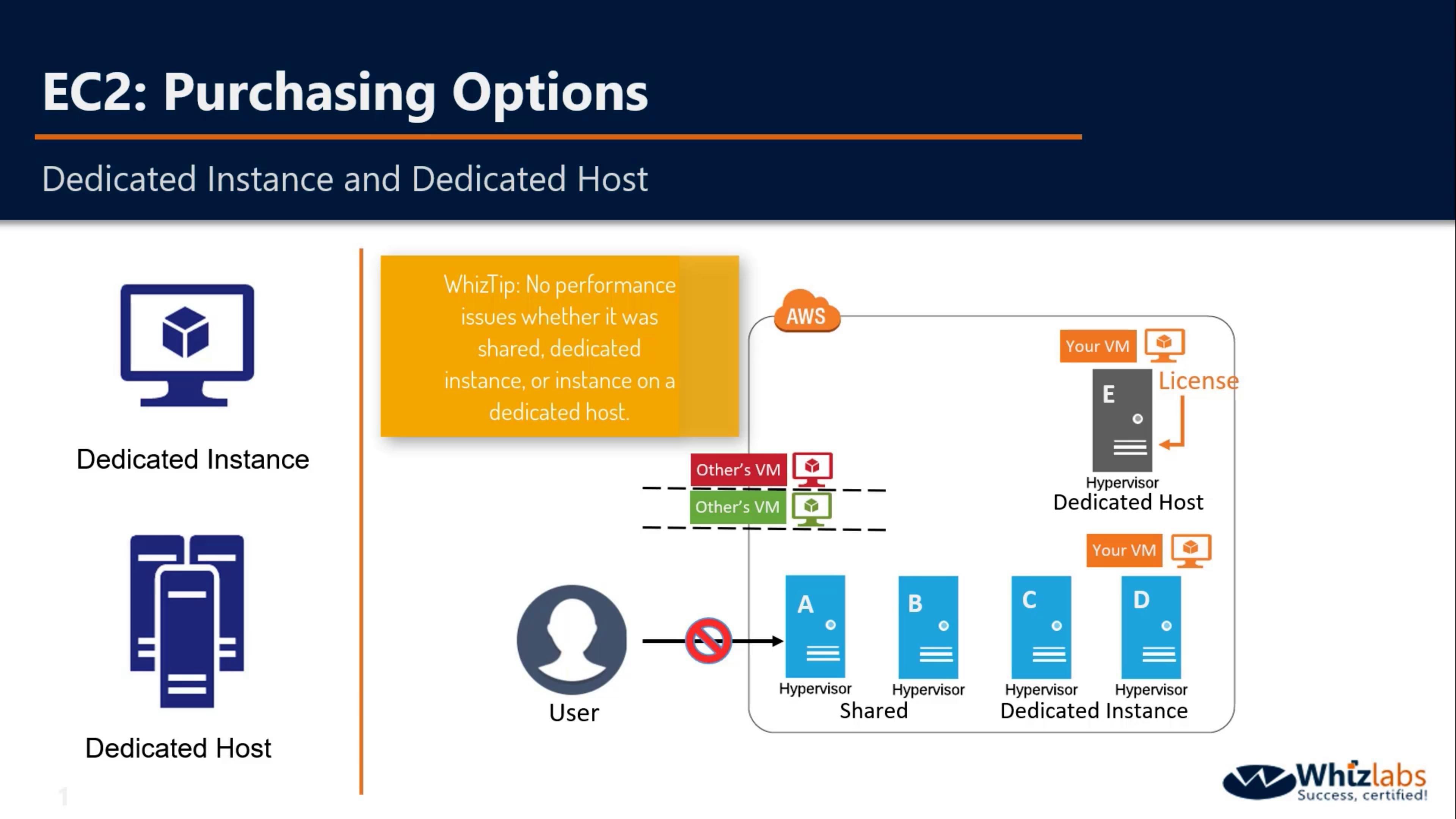

Dedicated Host and Dedicated Instance

You cannot access the hardware directly.

If you reboot your VM, it stays on the same hypervisor.

If you stop and start the same VM, it may be launched on a different shared host.

If you enable Dedicated Instances, your VM is launched on hardware dedicated to you, not on a shared hypervisor.

Dedicated Instances are Amazon EC2 instances that run in a VPC on hardware that’s dedicated to a single customer. Your Dedicated instances are physically isolated at the host hardware level from instances that belong to other AWS accounts. Dedicated instances may share hardware with other instances from the same AWS account that are not Dedicated instances.

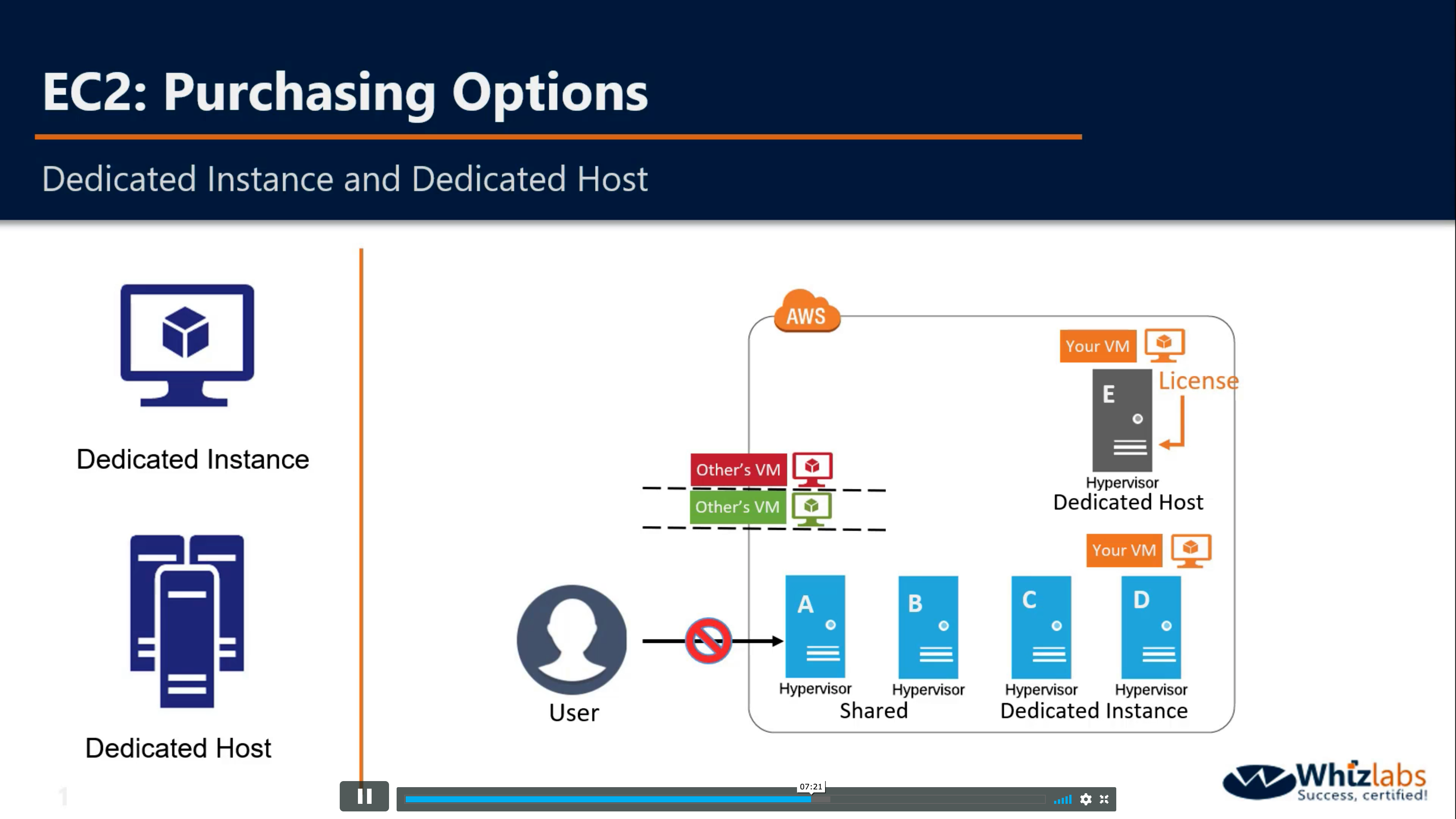

Amazon EC2 Dedicated Hosts allow you to use your eligible software licenses from vendors such as Microsoft and Oracle on Amazon EC2, so that you get the flexibility and cost effectiveness of using your own licenses, but with the resiliency, simplicity and elasticity of AWS. An Amazon EC2 Dedicated Host is a physical server fully dedicated for your use, so you can help address corporate compliance requirements.

Amazon EC2 Dedicated Host is also integrated with AWS License Manager, a service which helps you manage your software licenses, including Microsoft Windows Server and Microsoft SQL Server licenses. In License Manager, you can specify your licensing terms for governing license usage, as well as your Dedicated Host management preferences for host allocation and host capacity utilization. Once setup, AWS takes care of these administrative tasks on your behalf, so that you can seamlessly launch virtual machines (instances) on Dedicated Hosts just like you would launch an EC2 instance with AWS provided licenses.

Hibernate

Hibernation stops your instance and saves the contents of the instance’s RAM to the root volume. You cannot enable hibernation after launch.

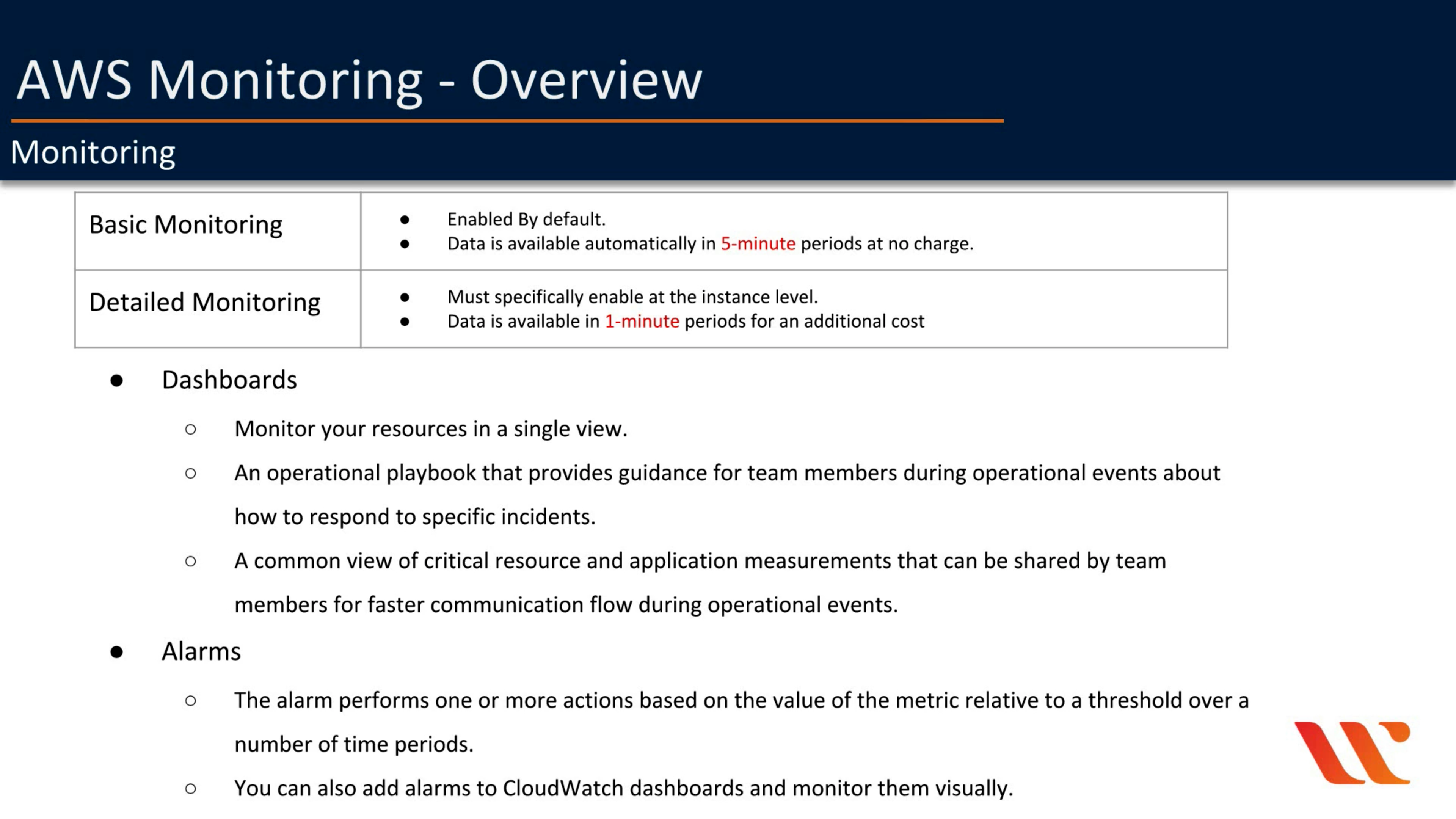

Monitoring

By default, your instance is enabled for basic monitoring. You can optionally enable detailed monitoring. After you enable detailed monitoring, the Amazon EC2 console displays monitoring graphs with a 1-minute period for the instance.

- Basic monitoring

Data is available automatically in 5-minute periods at no charge.

- Detailed monitoring (You can enable it later)

Data is available in 1-minute periods for an additional charge.

To get this level of data, you must specifically enable it for the instance. For the instances where you’ve enabled detailed monitoring, you can also get aggregated data across groups of similar instances.

T2/T3 Unlimited

Enabling T2/T3 Unlimited allows applications to burst beyond the baseline for as long as needed at any time. If the average CPU utilization of the instance is at or below the baseline, the hourly instance price automatically covers all usage. Otherwise, all usage above baseline is billed.

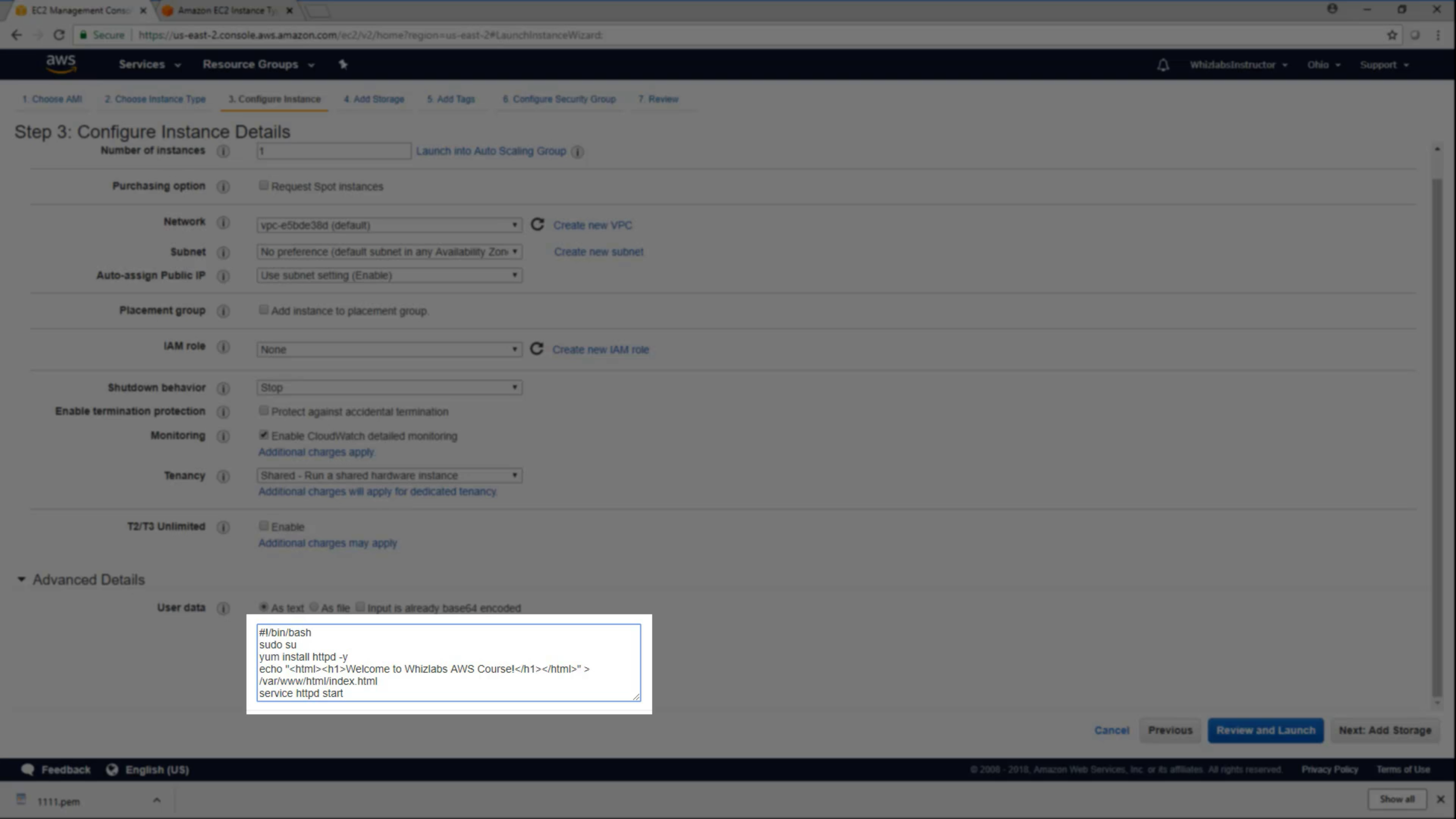

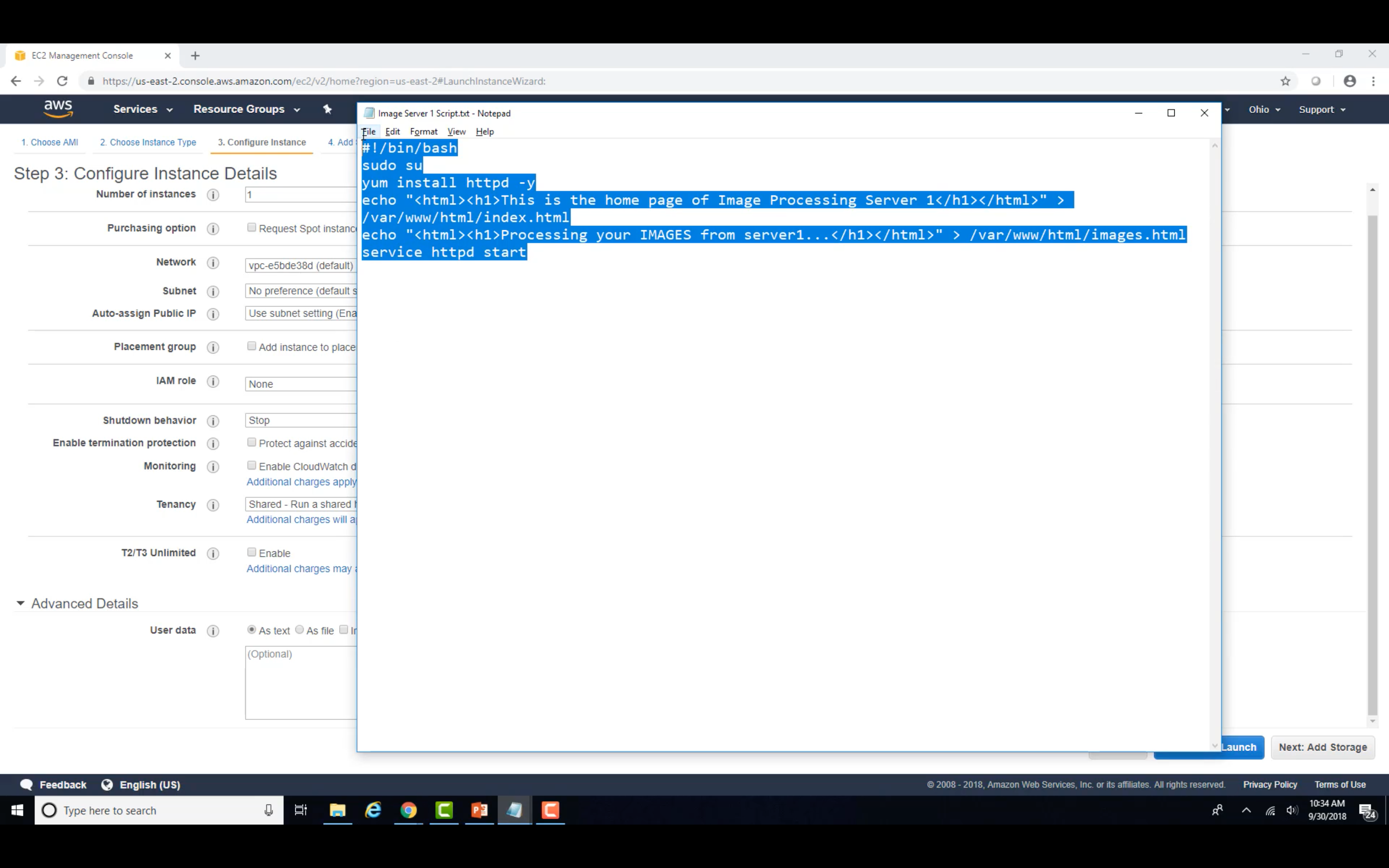

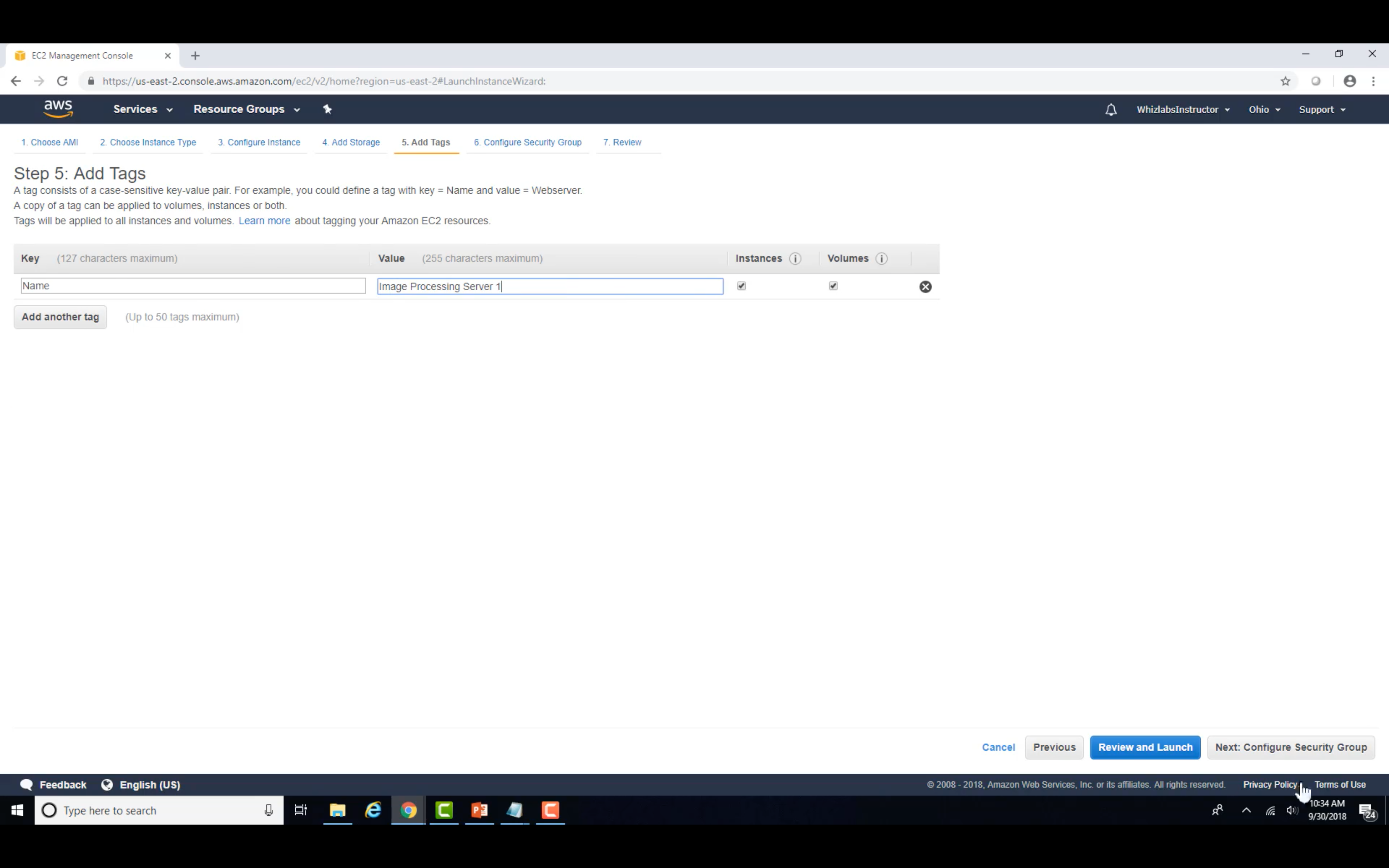

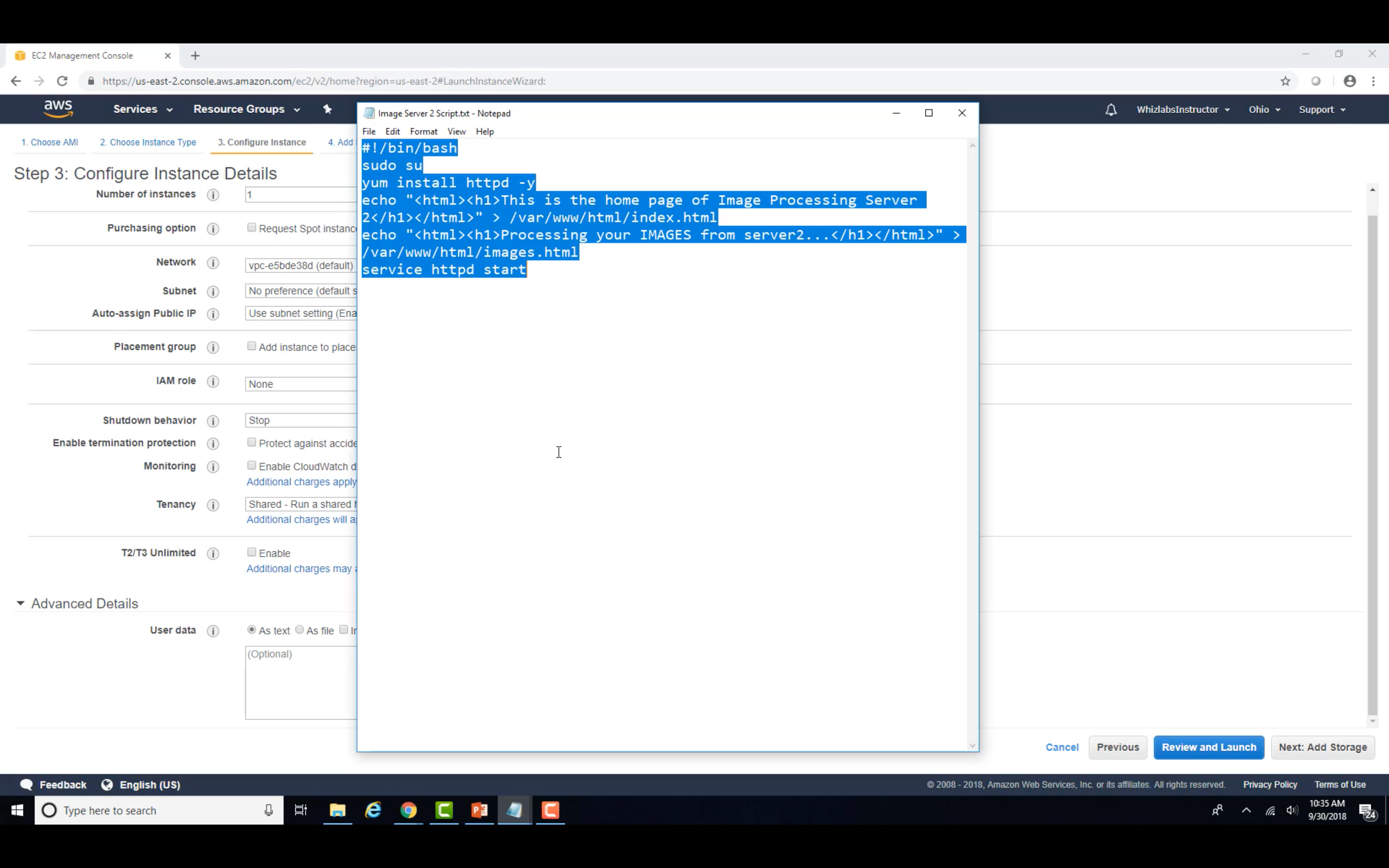

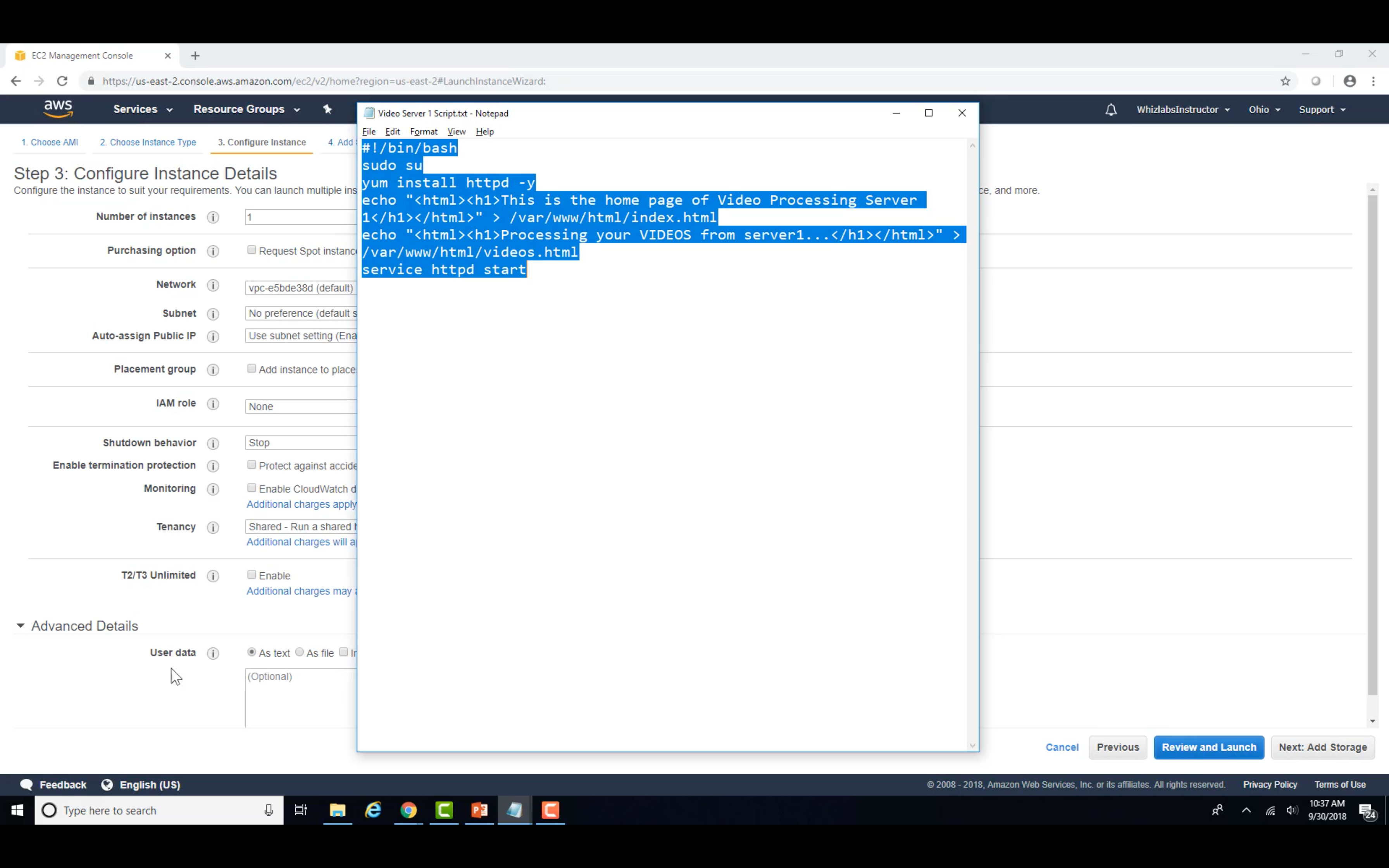

Advanced Details - User data

You can specify user data to configure an instance or run a configuration script during launch. If you launch more than one instance at a time, the user data is available to all the instances in that reservation.
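As a small illustration of user data, the sketch below prepares a bootstrap shell script and base64-encodes it, which is the form the EC2 API expects (SDKs and the console typically do the encoding for you). The script contents are a hypothetical example, not taken from these notes.

```python
import base64

# Hypothetical bootstrap script; the package and commands are placeholders.
user_data_script = """#!/bin/bash
yum update -y
yum install -y httpd
systemctl enable --now httpd
"""

# Encode the script as base64 text, the form the raw EC2 API expects.
encoded = base64.b64encode(user_data_script.encode("utf-8")).decode("ascii")
print(encoded[:40] + "...")
```

Decoding `encoded` gives back the original script, so the same text reaches every instance launched in the reservation.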

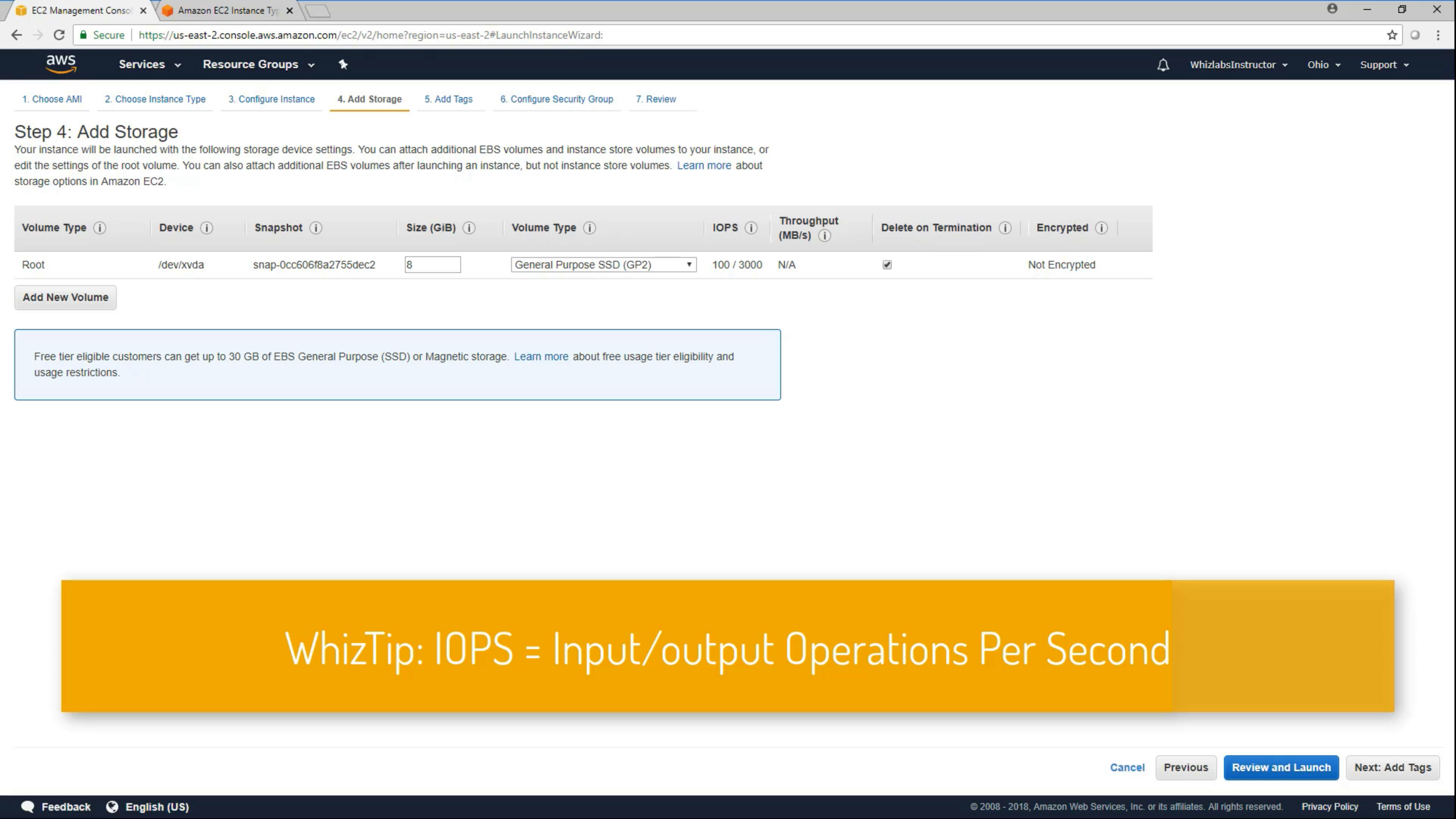

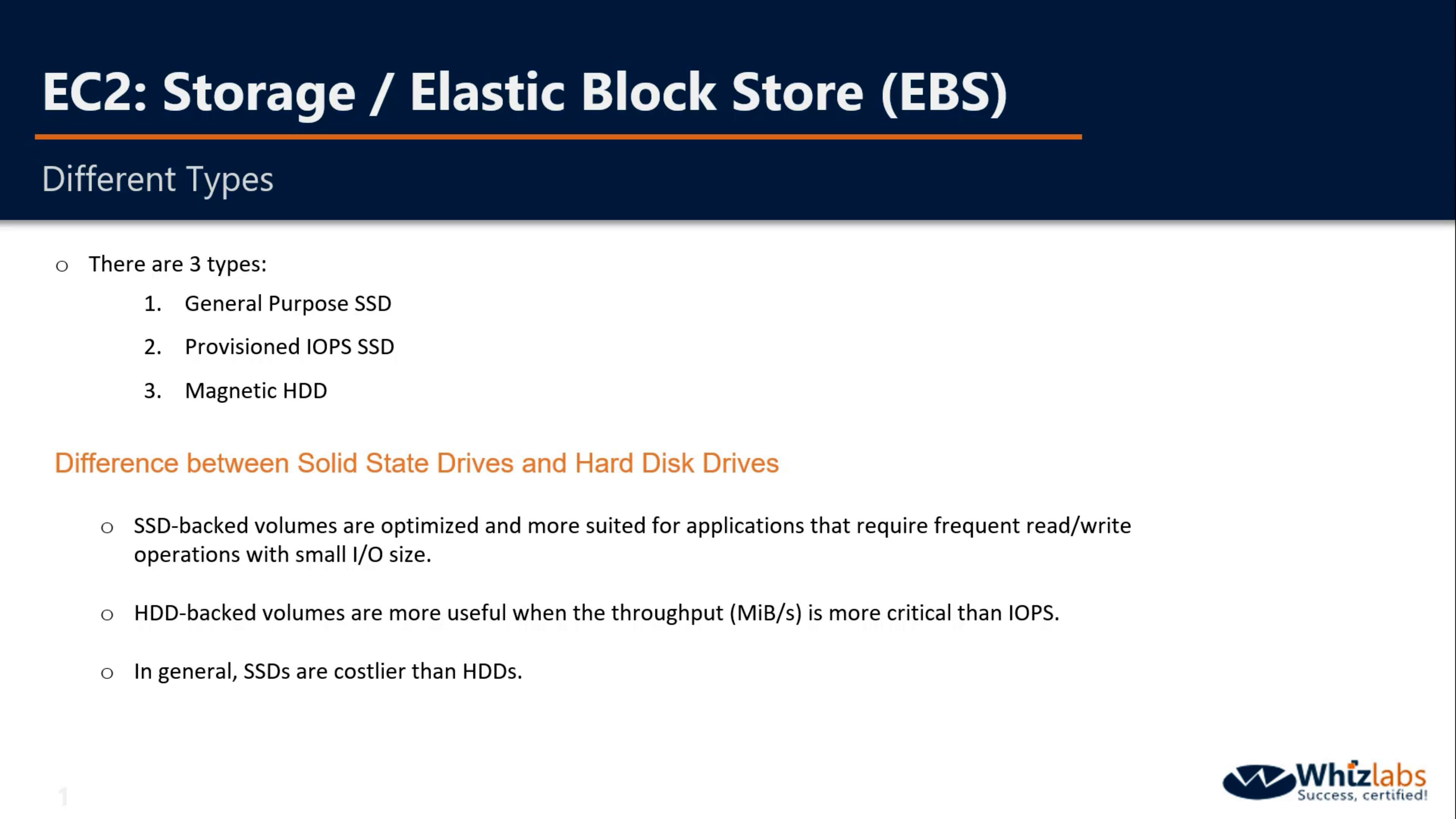

EC2 Storage

- SSD: Small I/O size with highly frequent read/write operations.

- HDD: Throughput is more important than IOPS

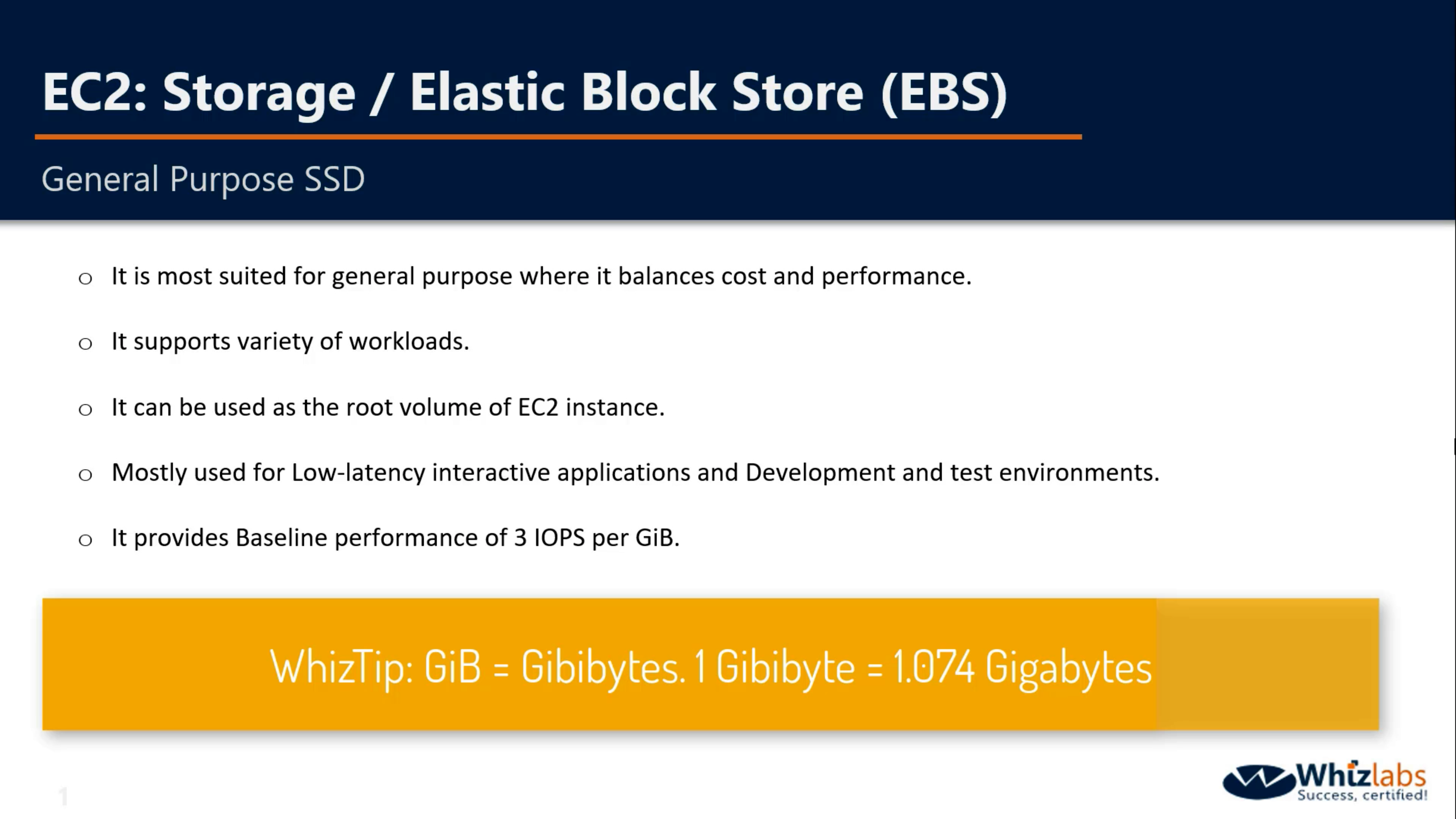

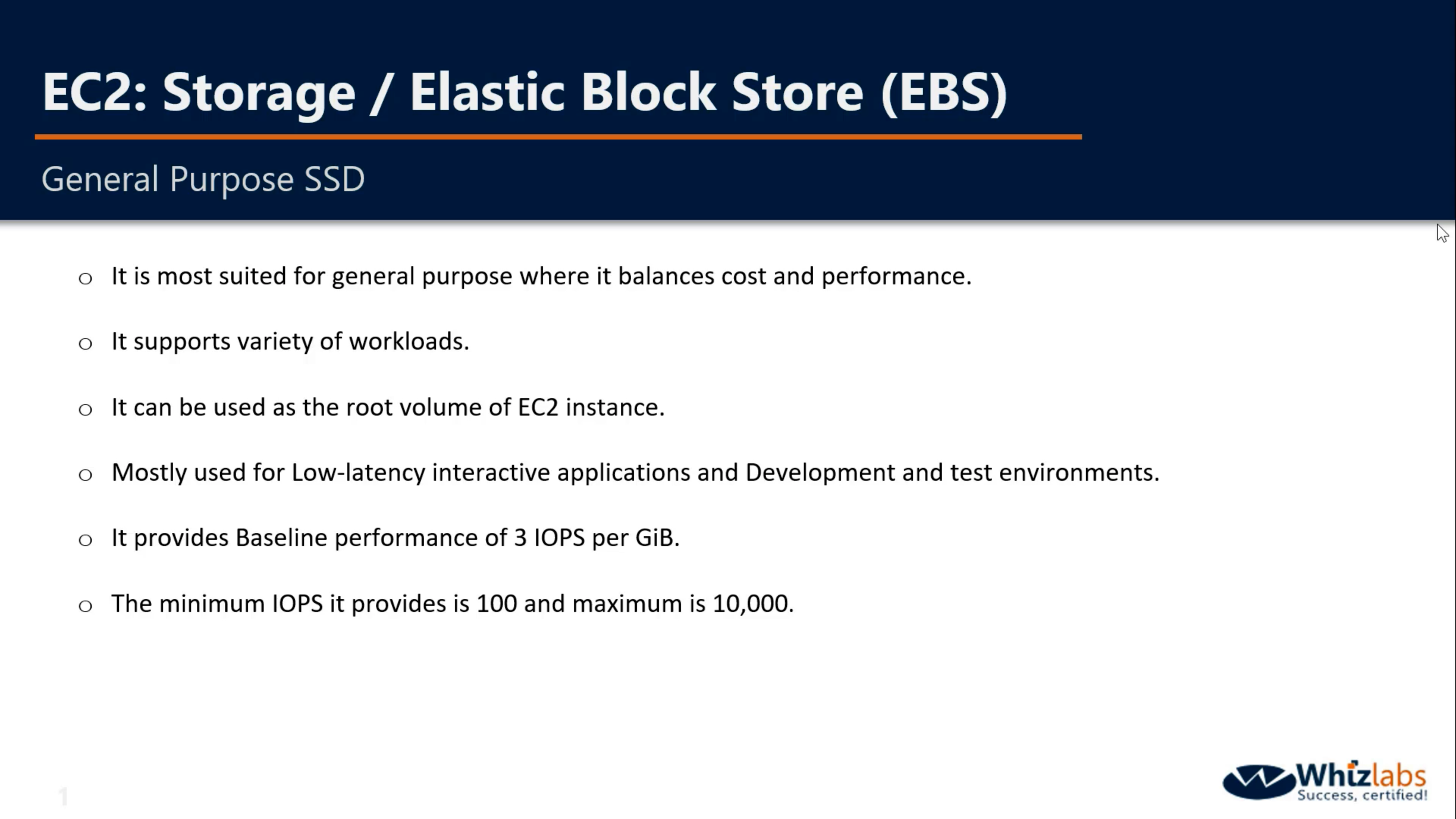

General SSD

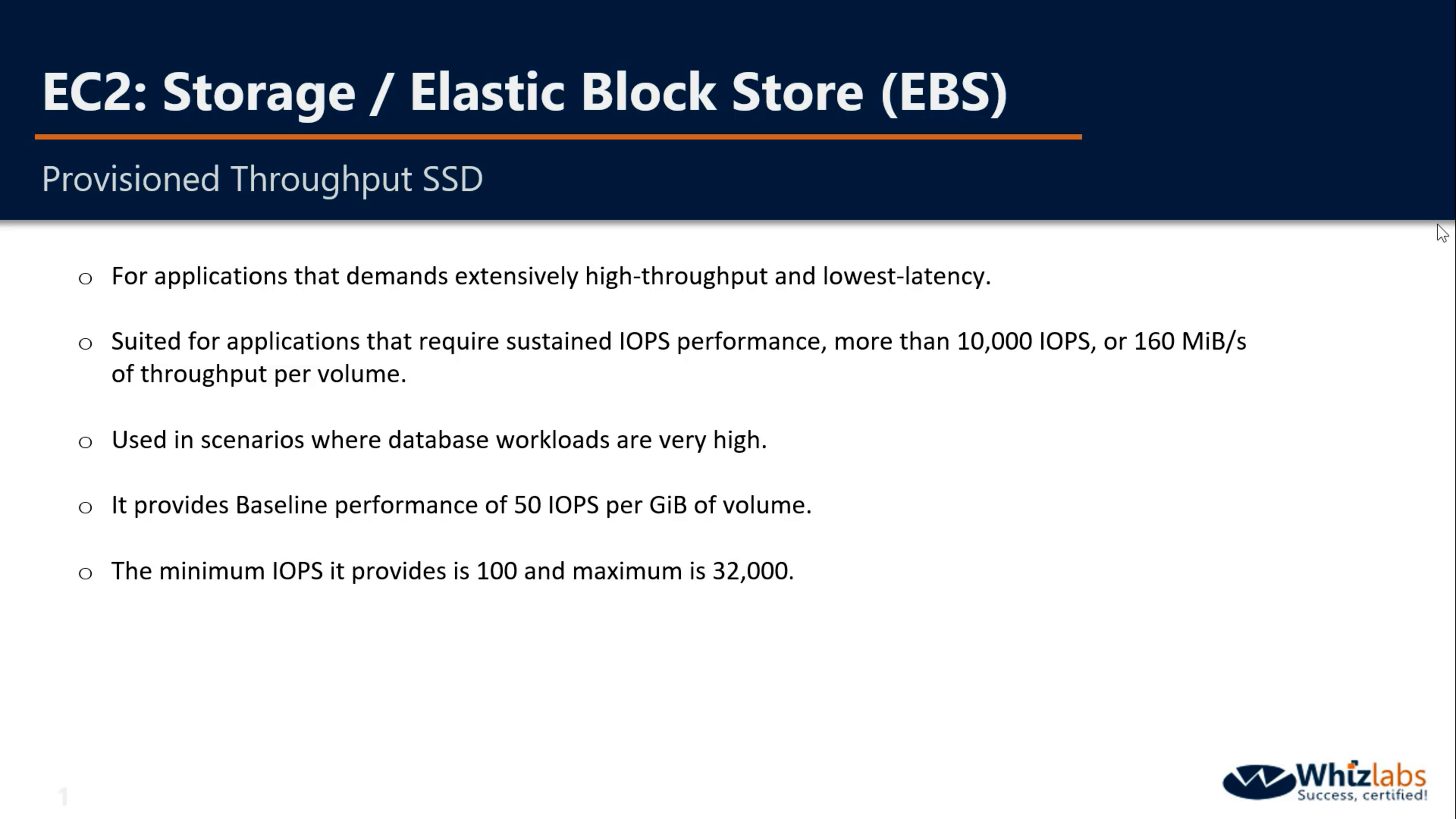

Provisioned IOPS SSD

Magnetic HDD

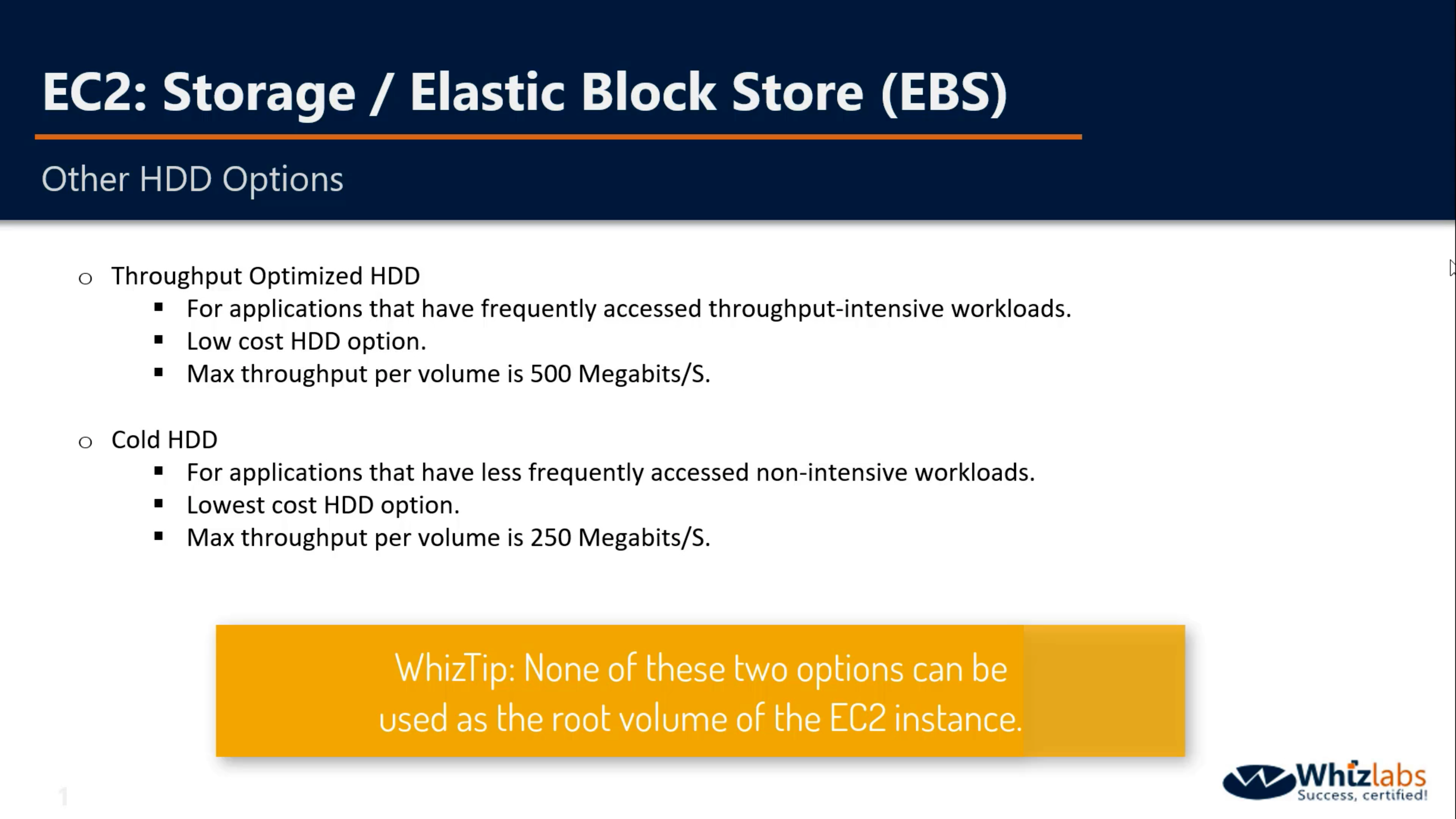

Other HDD & Cold HDD

| Volume Type | EBS Provisioned IOPS SSD (io1) | EBS General Purpose SSD (gp2)* | Throughput Optimized HDD (st1) | Cold HDD (sc1) |
|---|---|---|---|---|
| Drive Type | Solid State Drive (SSD) | Solid State Drive (SSD) | Hard Disk Drive (HDD) | Hard Disk Drive (HDD) |
| Short Description | Highest performance SSD volume designed for latency-sensitive transactional workloads | General Purpose SSD volume that balances price performance for a wide variety of transactional workloads | Low cost HDD volume designed for frequently accessed, throughput intensive workloads | Lowest cost HDD volume designed for less frequently accessed workloads |
| Use Cases | I/O-intensive NoSQL and relational databases | Boot volumes, low-latency interactive apps, dev & test | Big data, data warehouses, log processing | Colder data requiring fewer scans per day |
| API Name | io1 | gp2 | st1 | sc1 |
| Volume Size | 4 GB - 16 TB | 1 GB - 16 TB | 500 GB - 16 TB | 500 GB - 16 TB |
| Max IOPS**/Volume | 64,000 | 16,000 | 500 | 250 |
| Max Throughput***/Volume | 1,000 MB/s | 250 MB/s | 500 MB/s | 250 MB/s |
| Max IOPS/Instance | 80,000 | 80,000 | 80,000 | 80,000 |
| Max Throughput/Instance | 2,375 MB/s | 2,375 MB/s | 2,375 MB/s | 2,375 MB/s |
| Price | $0.125/GB-month, $0.065/provisioned IOPS | $0.10/GB-month | $0.045/GB-month | $0.025/GB-month |
| Dominant Performance Attribute | IOPS | IOPS | MB/s | MB/s |

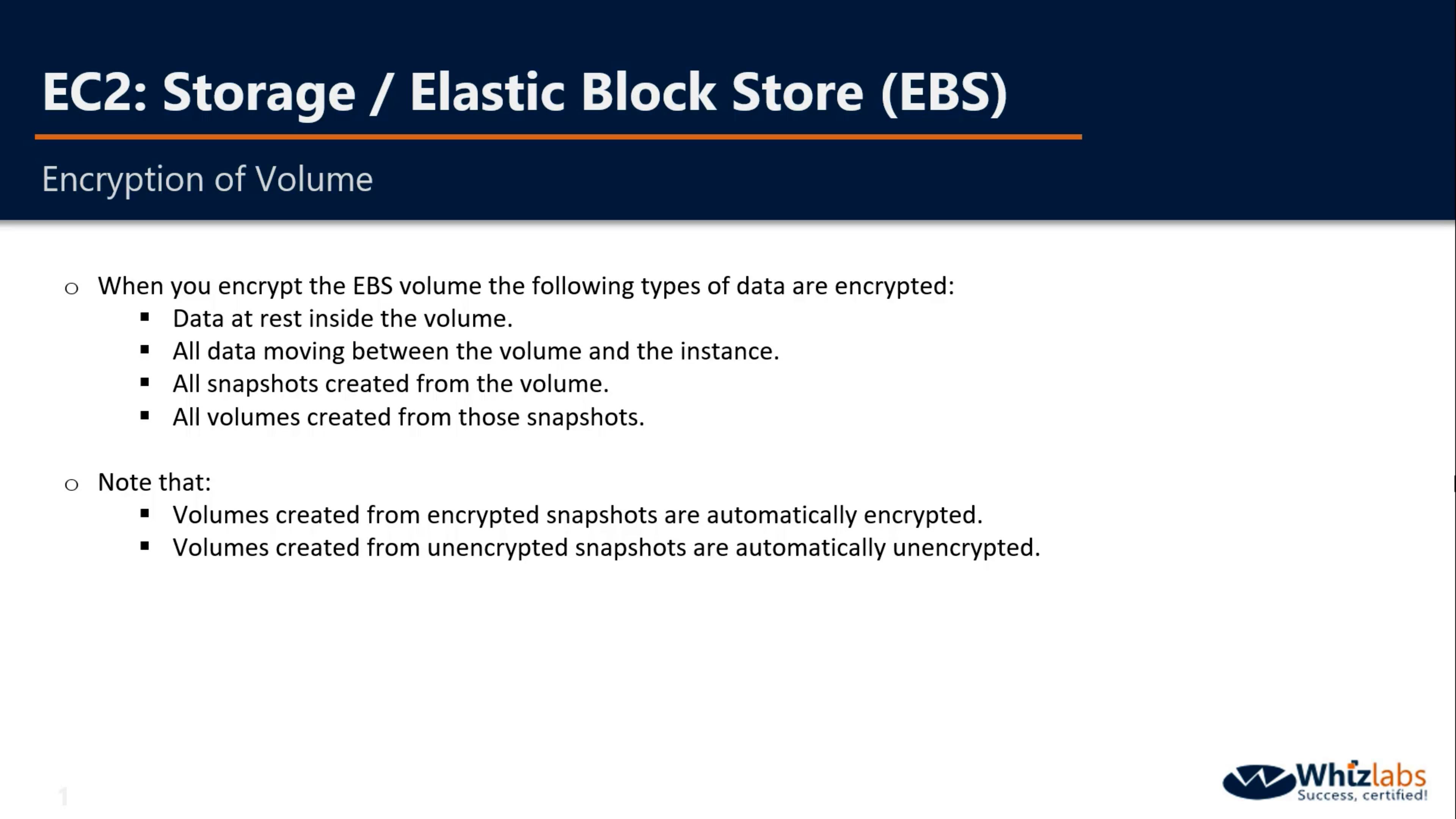

Encryption

Key-Pair

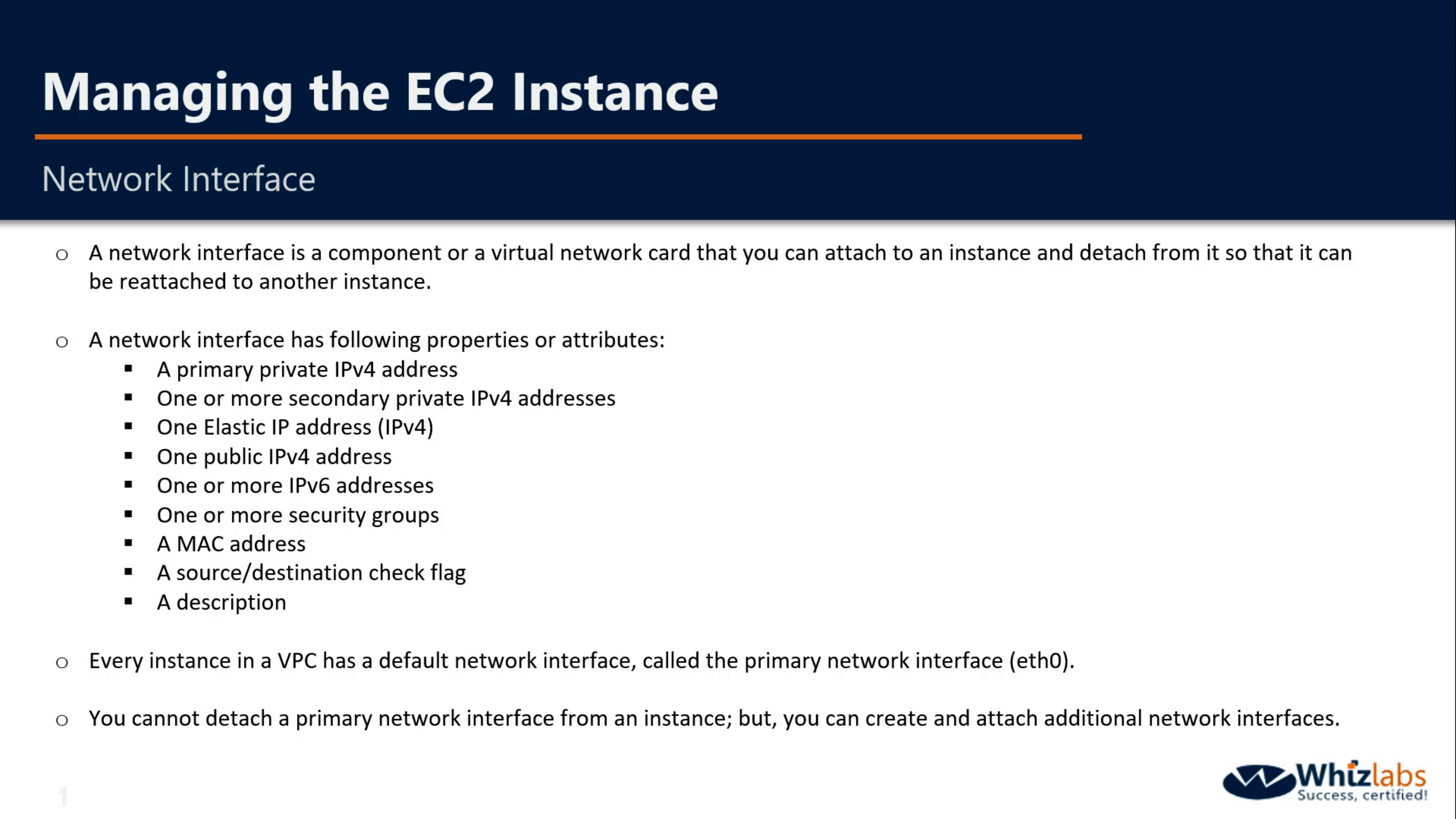

Network Interface

AMIs

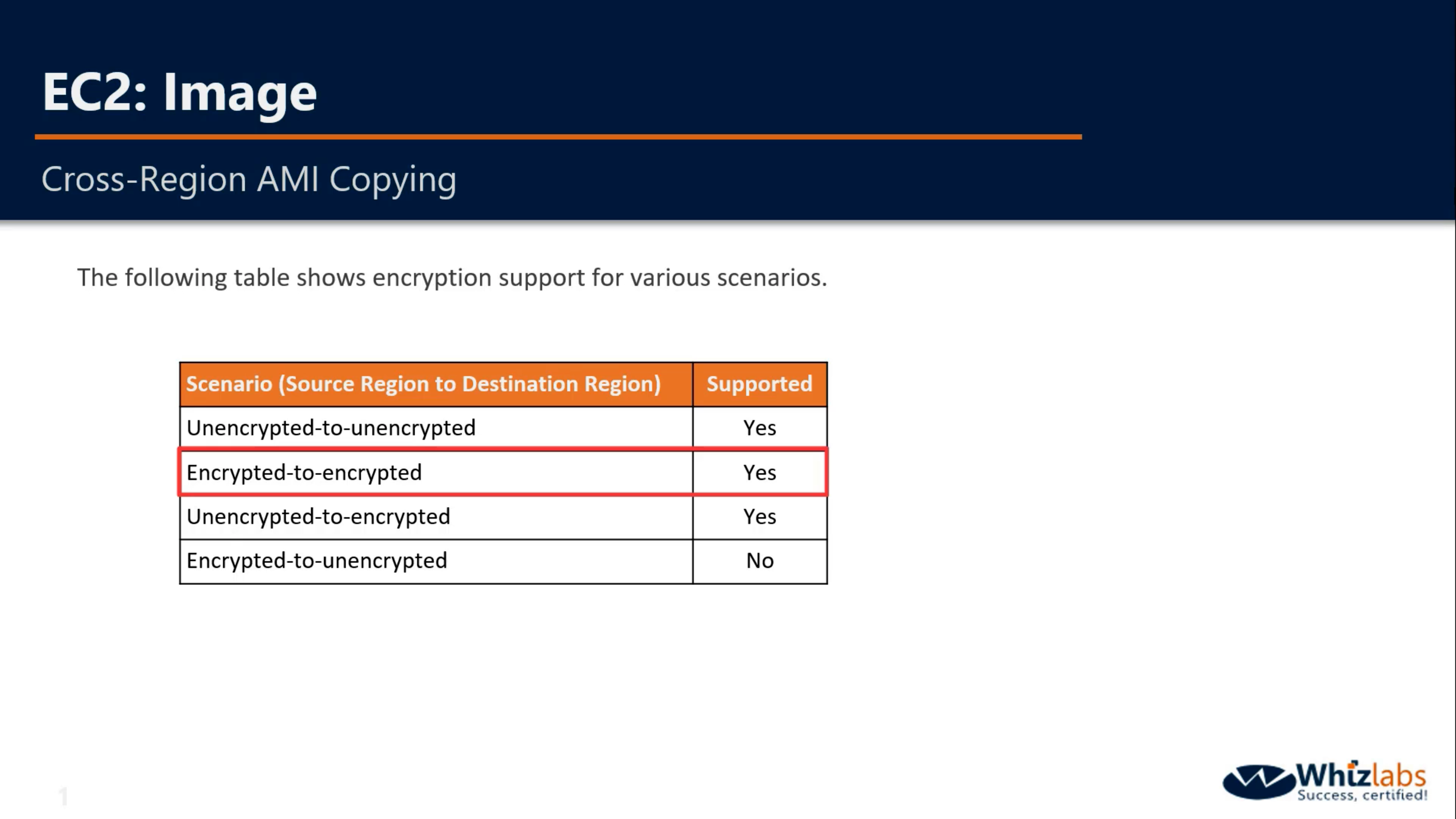

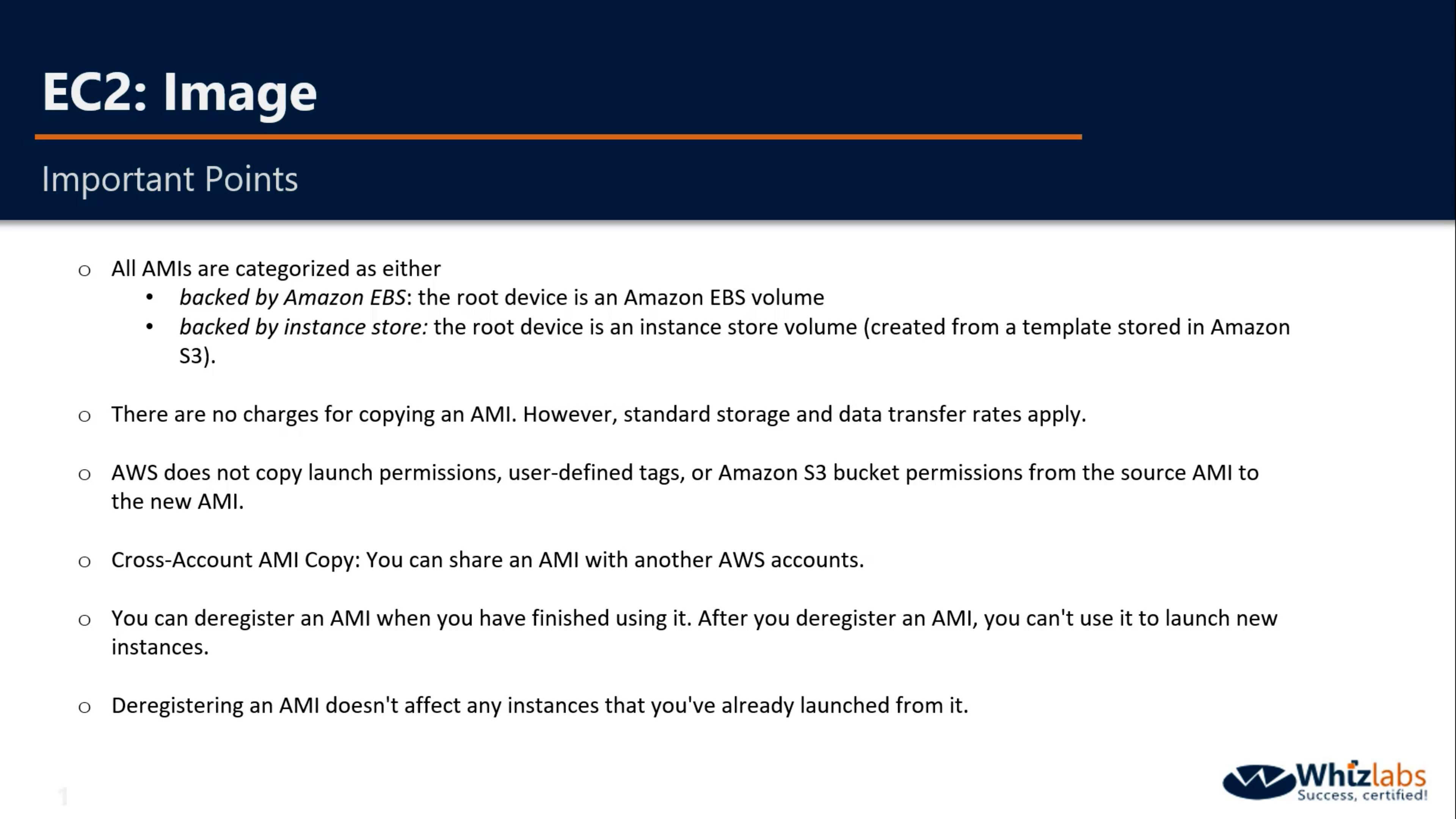

AMIs are regional. To use an AMI in another Region, copy it there; to let other accounts use it, make it public or share it with specific AWS accounts.

When you create an AMI, you cannot remove encryption from an encrypted instance or add encryption to an unencrypted instance. You can, however, encrypt an AMI by copying it.

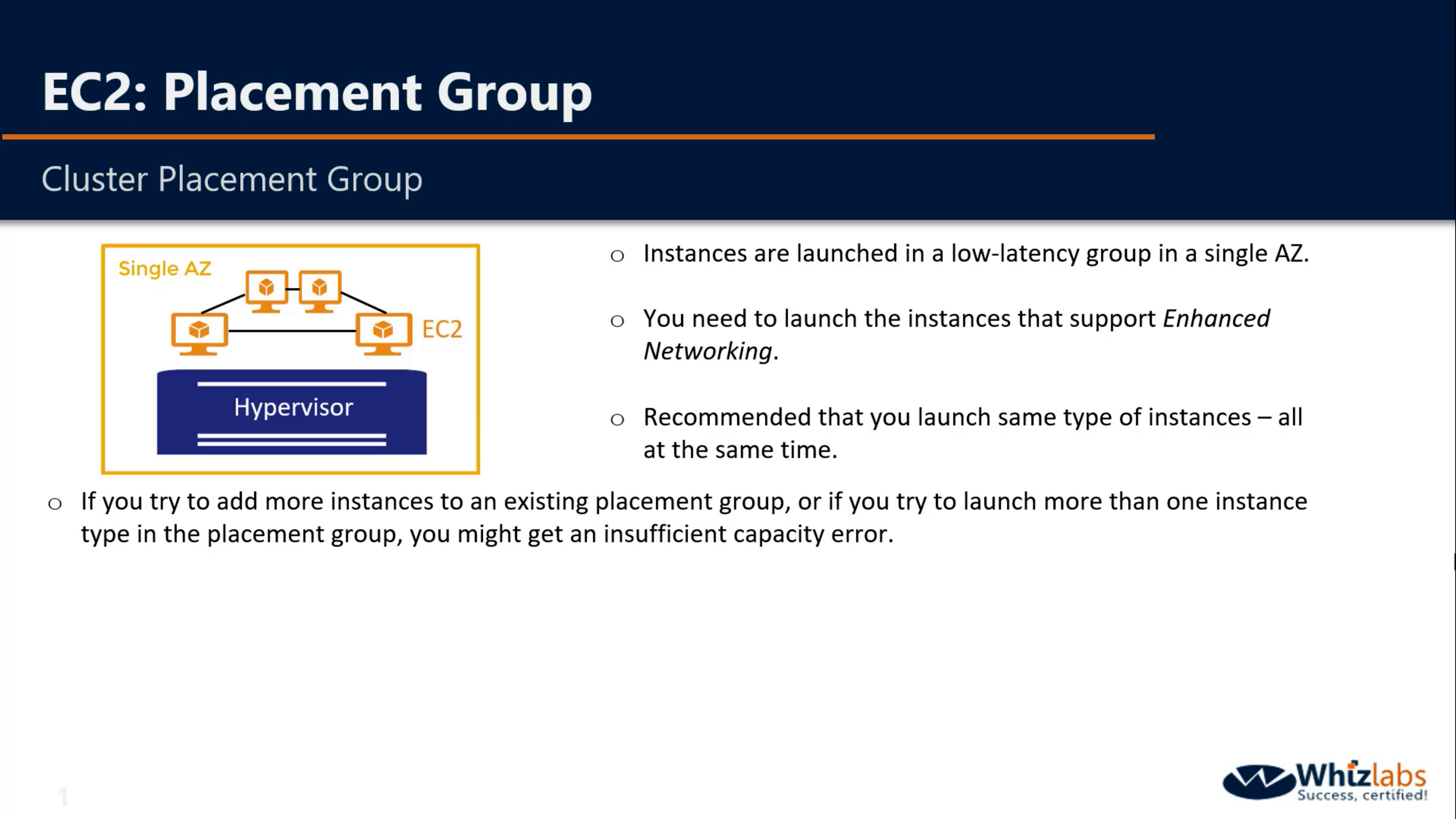

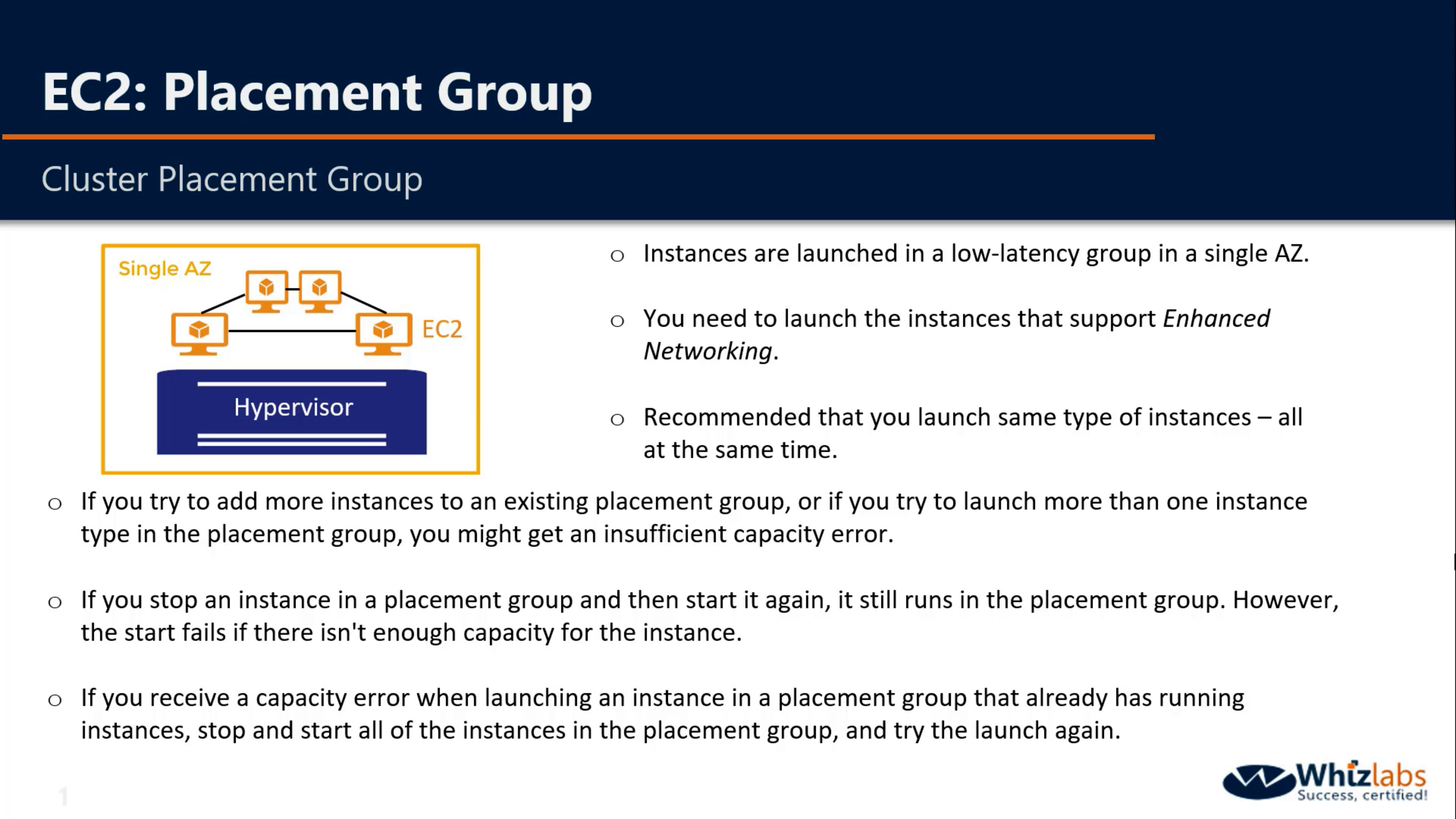

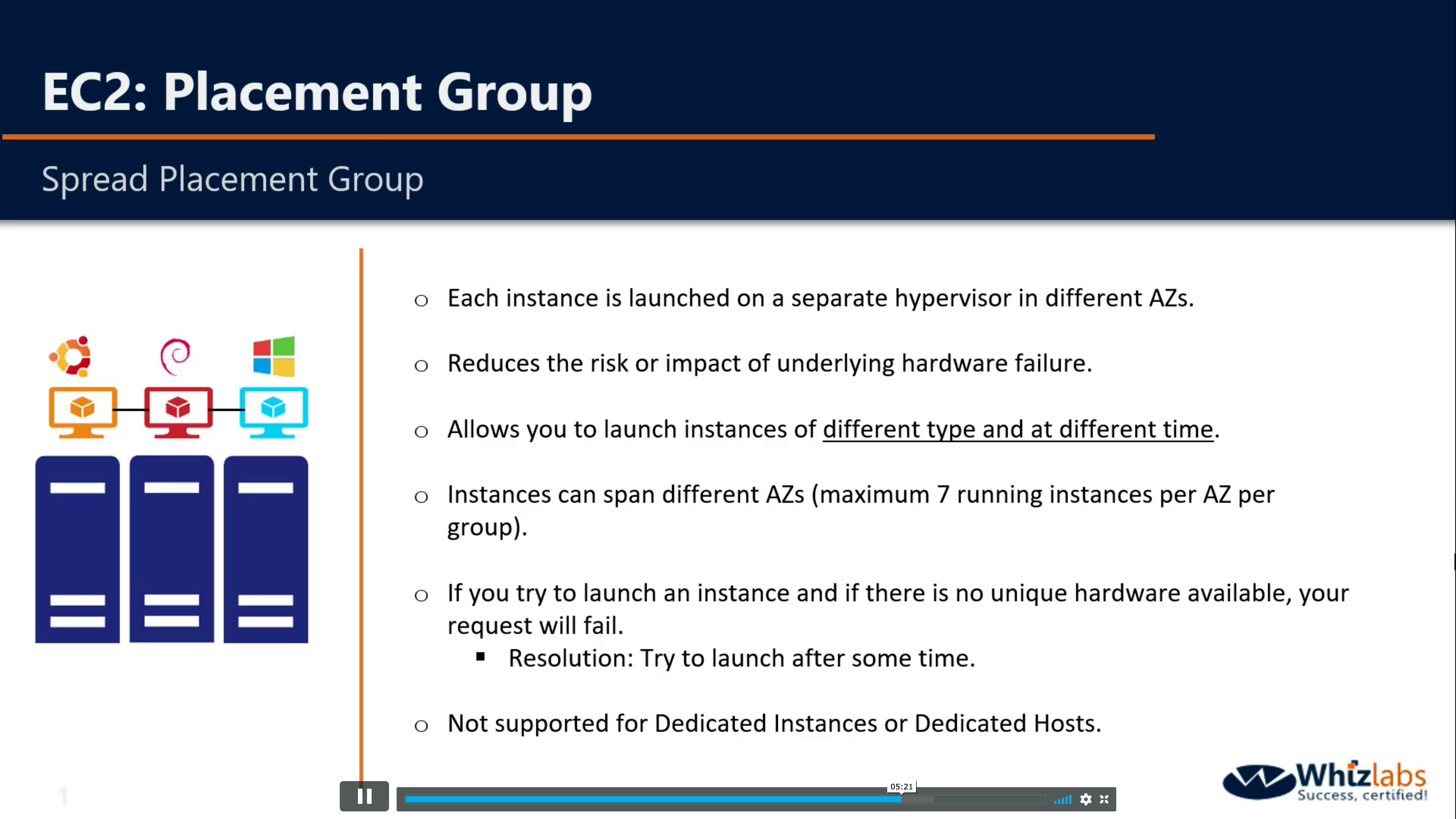

Placement Groups and Instance Store

When you launch a new EC2 instance, the EC2 service attempts to place the instance in such a way that all of your instances are spread out across underlying hardware to minimize correlated failures. You can use placement groups to influence the placement of a group of interdependent instances to meet the needs of your workload. Depending on the type of workload, you can create a placement group using one of the following placement strategies:

- Cluster – packs instances close together inside an Availability Zone. This strategy enables workloads to achieve the low-latency network performance necessary for tightly-coupled node-to-node communication that is typical of HPC applications.

- Partition – spreads your instances across logical partitions such that groups of instances in one partition do not share the underlying hardware with groups of instances in different partitions. This strategy is typically used by large distributed and replicated workloads, such as Hadoop, Cassandra, and Kafka.

- Spread – strictly places a small group of instances across distinct underlying hardware to reduce correlated failures.

There is no charge for creating a placement group.

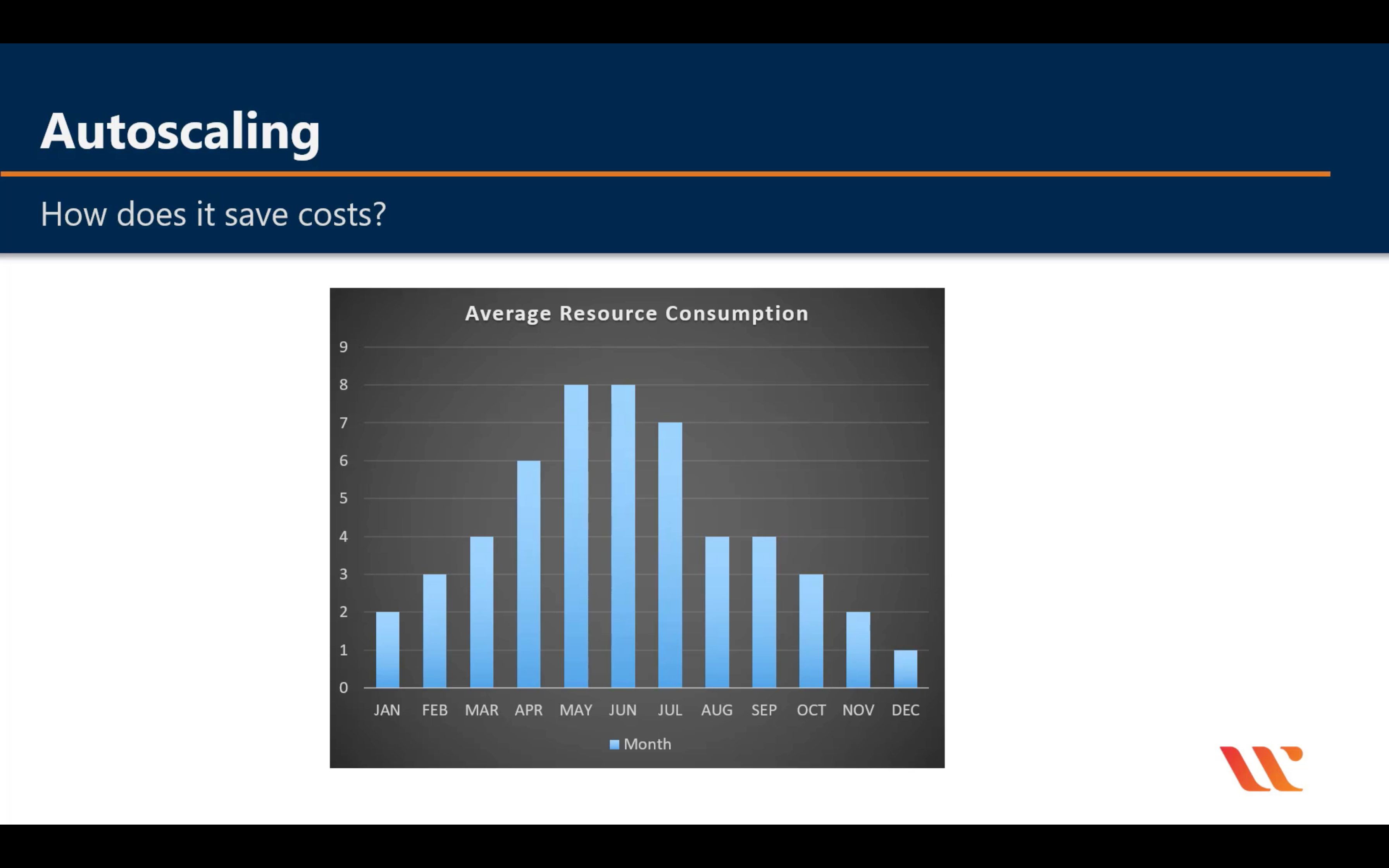

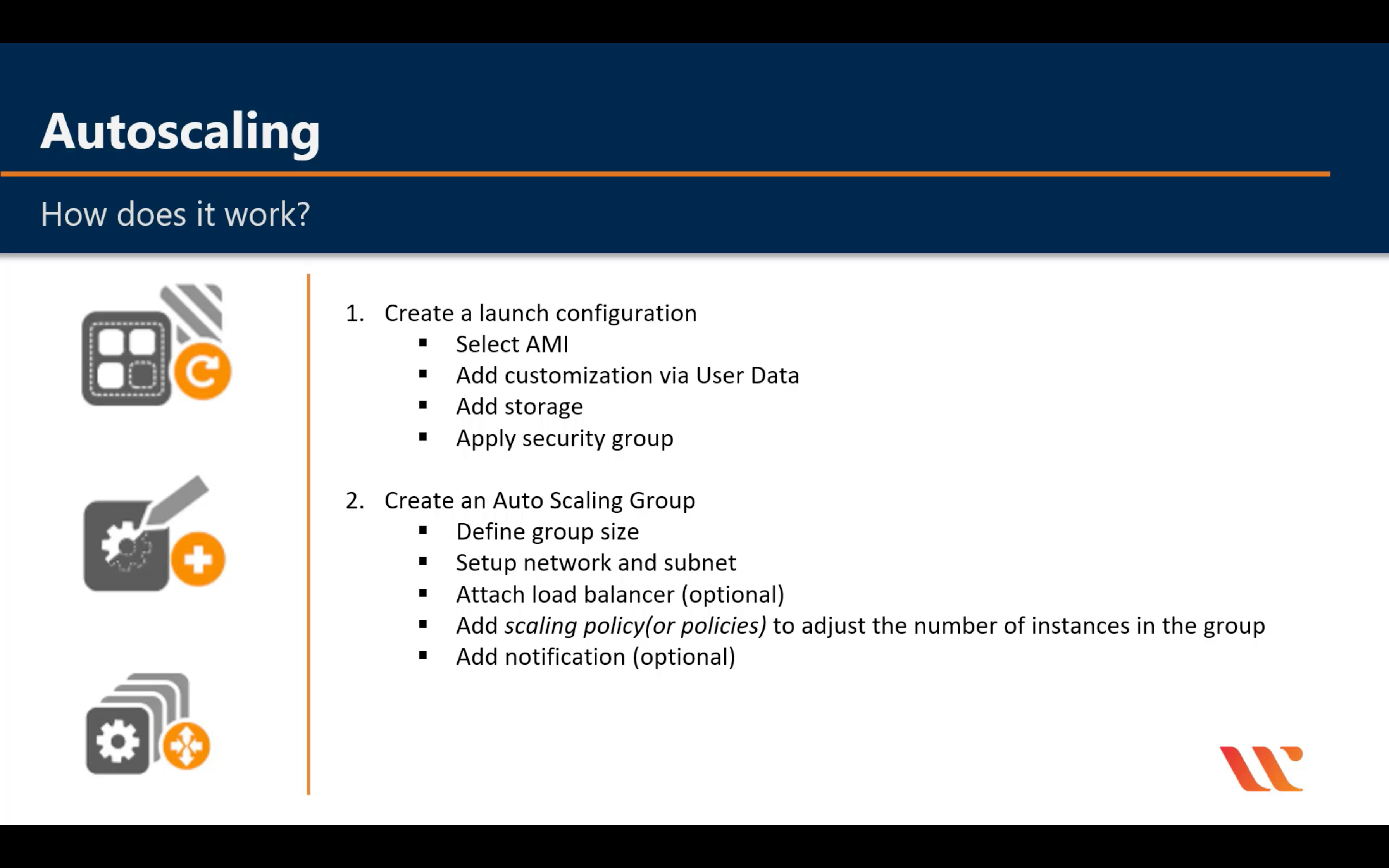

Amazon EC2 Auto Scaling

Scale compute capacity to meet demand

- Automatically scale in and out

- Choose when and how to scale

- Fleet management

- Predictive Scaling

- Support for multiple purchase models, instance types, and AZs

- Included with Amazon EC2

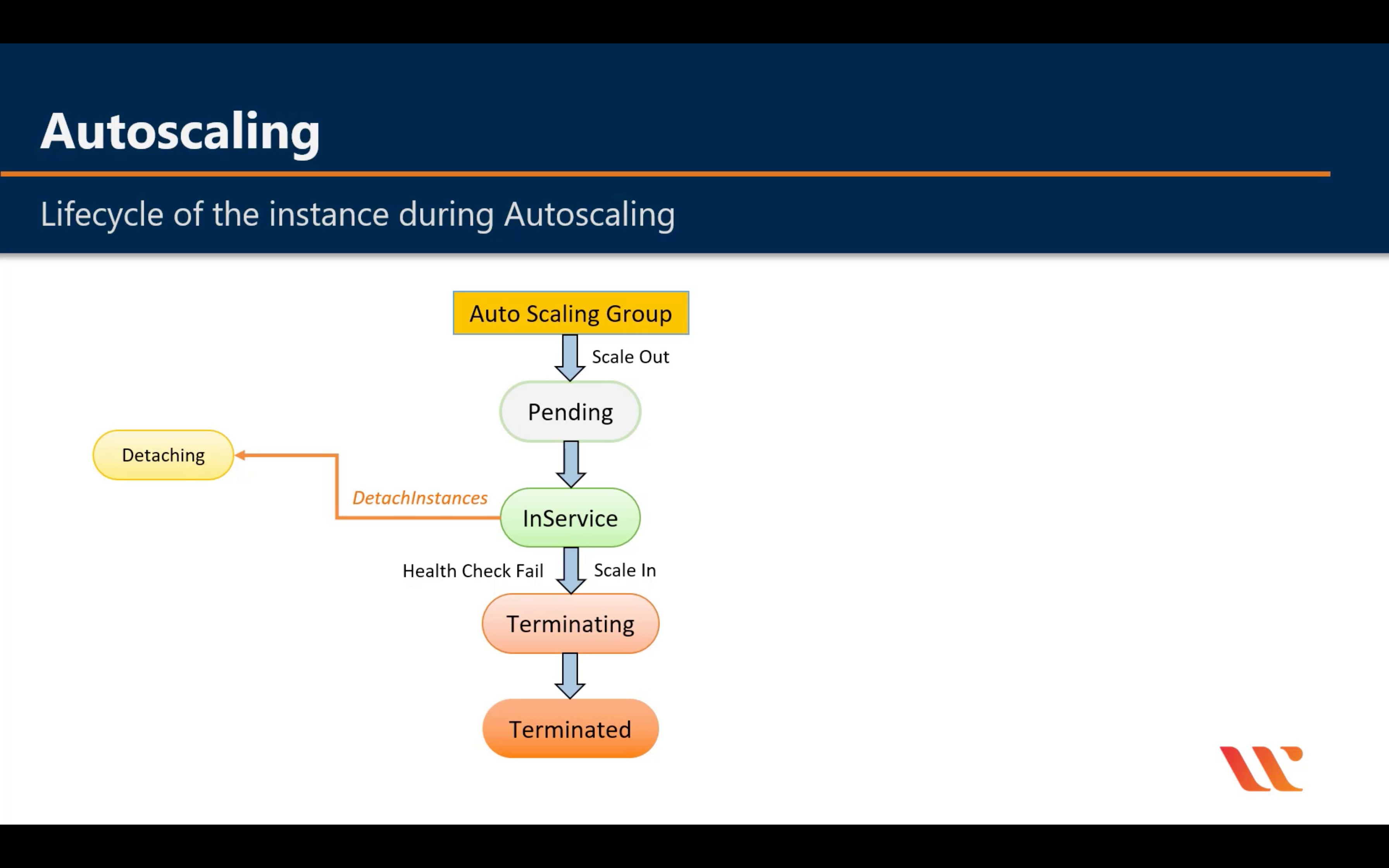

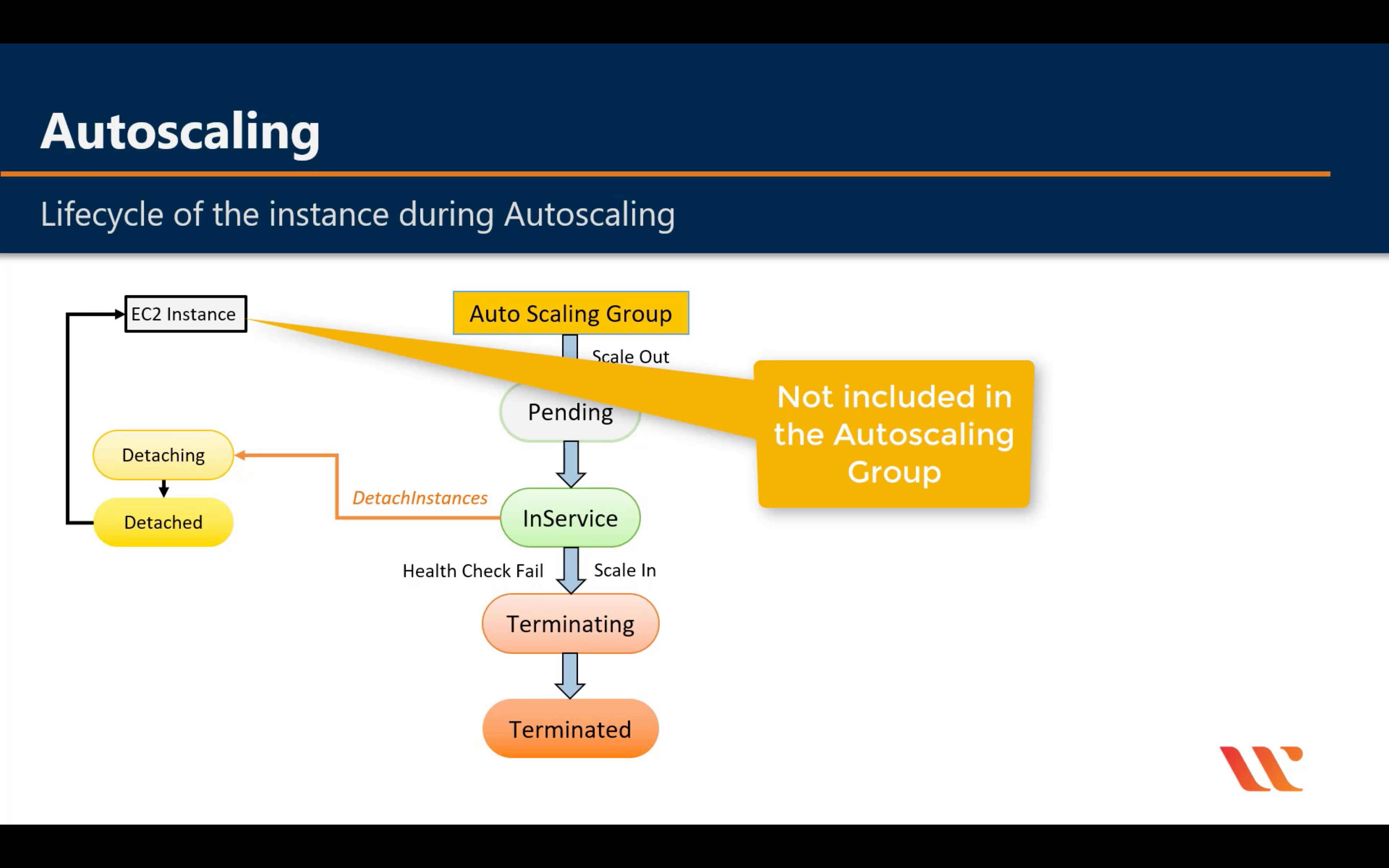

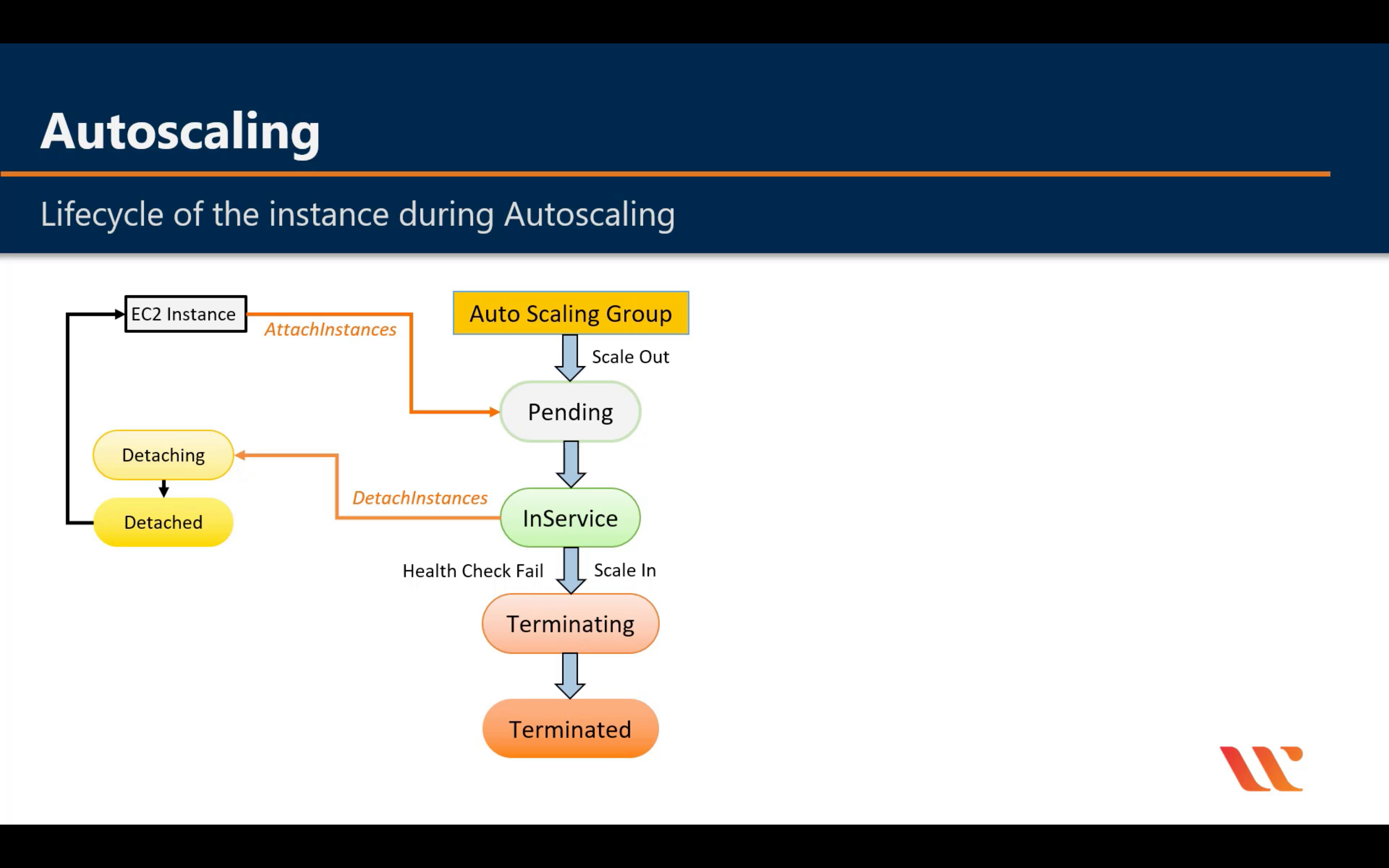

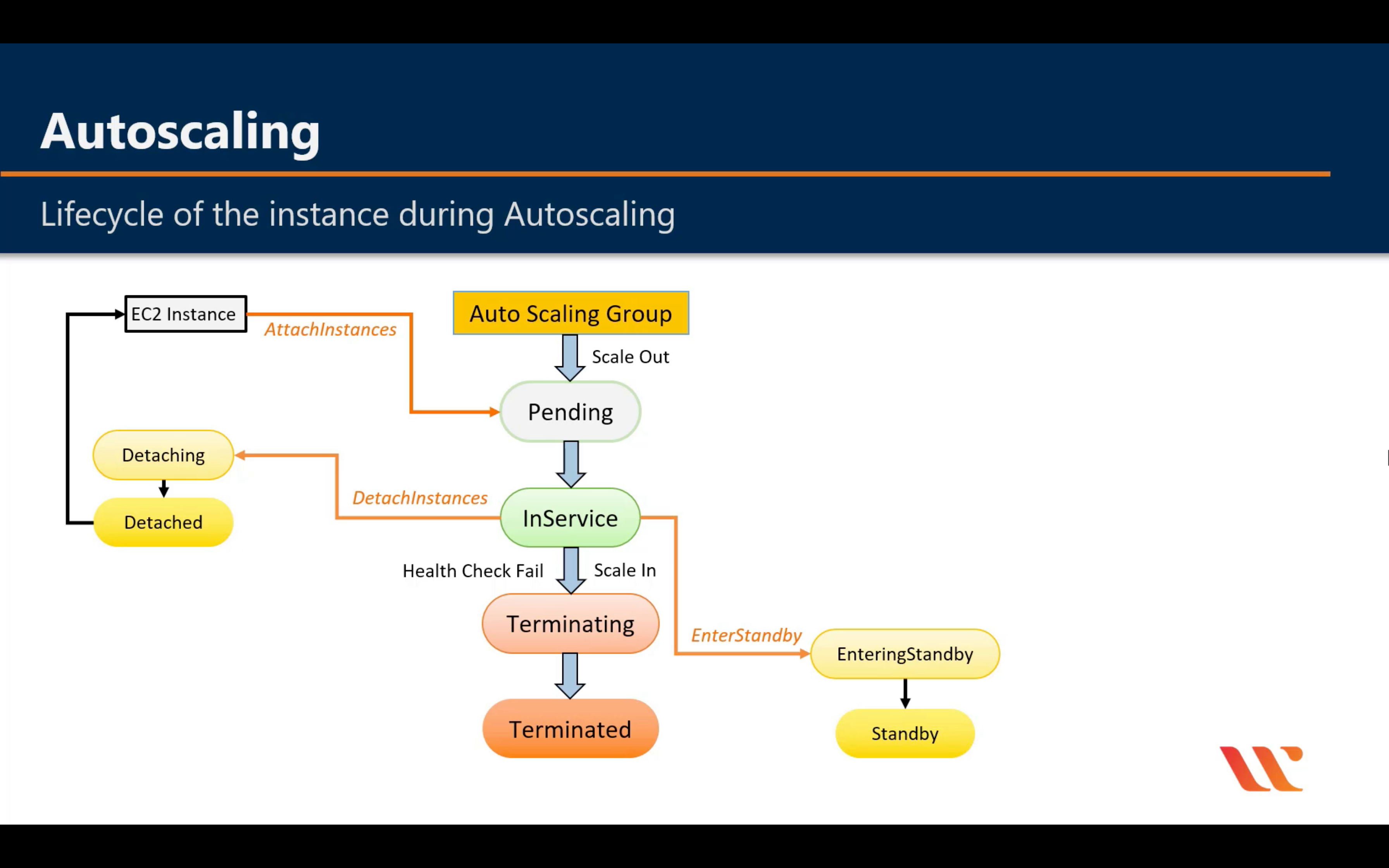

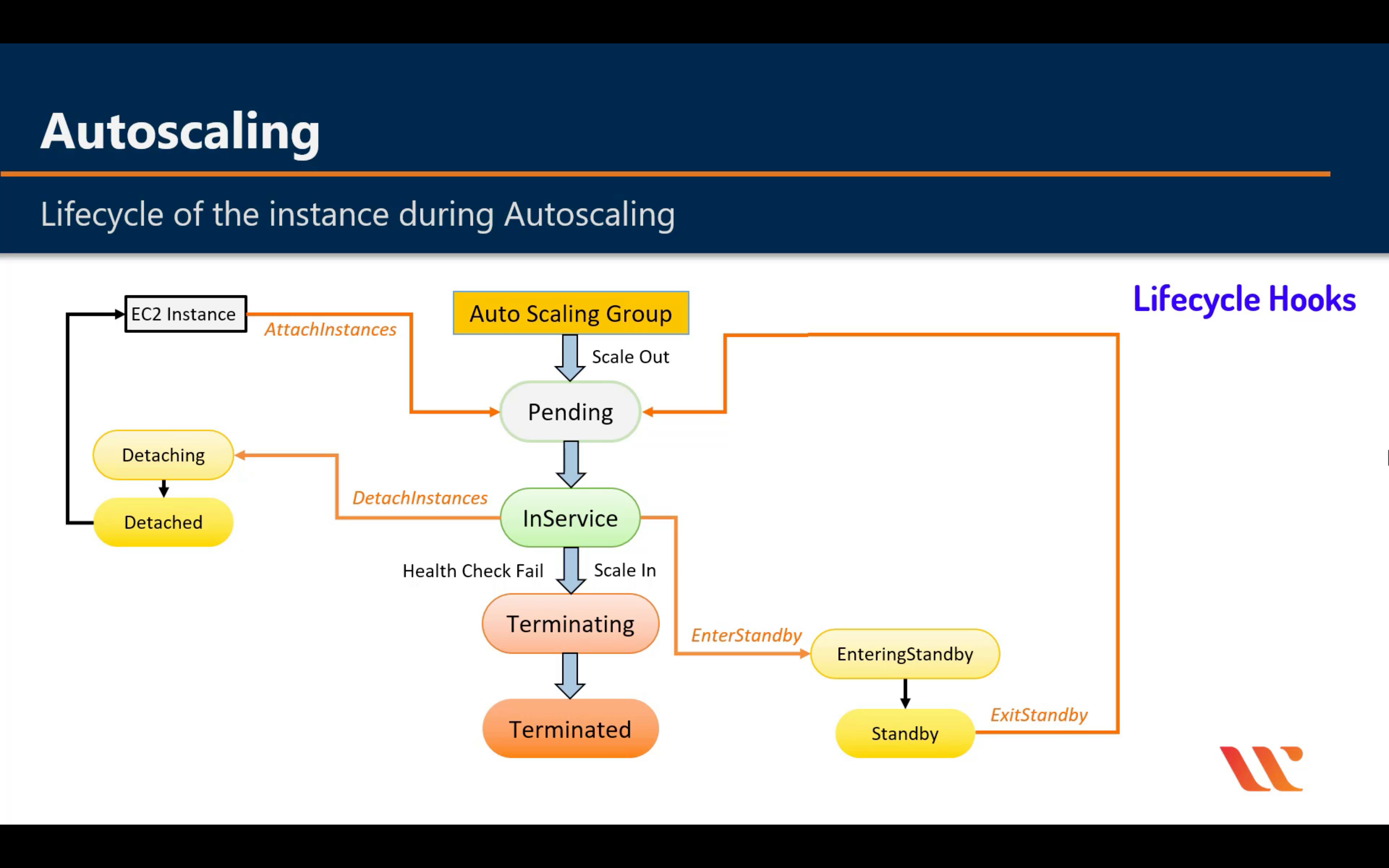

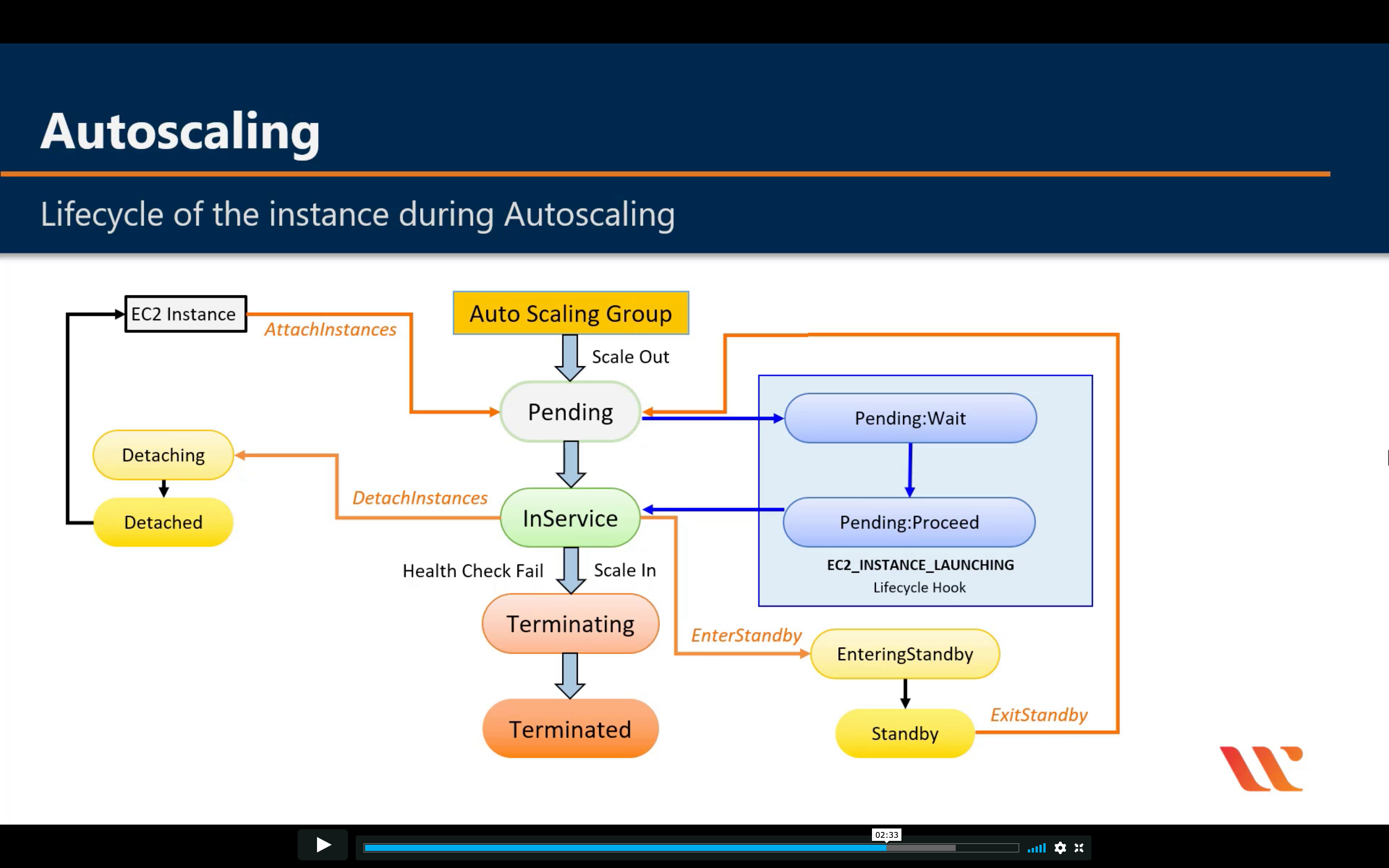

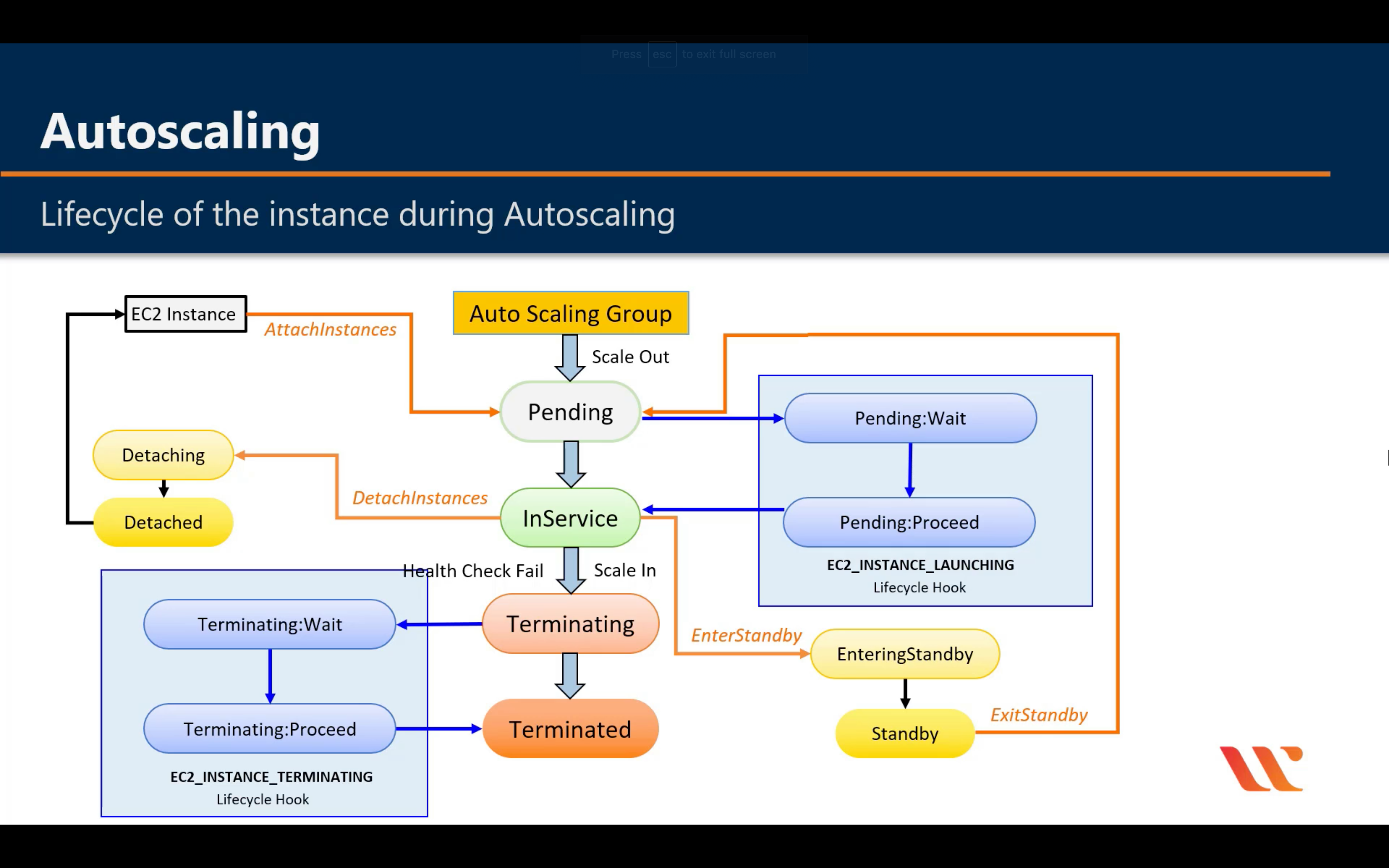

Lifecycle

For big instance

Cooldown Periods for Simple Scaling

When an Auto Scaling group launches or terminates an instance due to a simple scaling policy, a cooldown period takes effect. The cooldown period helps ensure that the Auto Scaling group does not launch or terminate more instances than needed before the effects of previous simple scaling activities are visible. When a lifecycle action occurs, and an instance enters the wait state, scaling activities due to simple scaling policies are paused. When a newly launched instance enters the InService state, the cooldown period starts. For more information, see Scaling Cooldowns for Amazon EC2 Auto Scaling.
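The effect of a cooldown can be sketched with a toy model in which a scaling request is ignored while the cooldown is running. `SimpleScalingGroup` is a made-up class, the 300-second value is assumed as the default, and the model starts the cooldown at request time rather than when the instance reaches InService, which is a simplification of the behavior described above.

```python
DEFAULT_COOLDOWN_SECONDS = 300  # assumed default; configurable per group


class SimpleScalingGroup:
    """Toy model: a scaling request is ignored while the cooldown is active."""

    def __init__(self, capacity, cooldown=DEFAULT_COOLDOWN_SECONDS):
        self.capacity = capacity
        self.cooldown = cooldown
        self._cooldown_ends_at = None  # no cooldown active yet

    def request_scale(self, delta, now):
        """Apply the change unless a cooldown is running; return True if applied."""
        if self._cooldown_ends_at is not None and now < self._cooldown_ends_at:
            return False
        self.capacity += delta
        self._cooldown_ends_at = now + self.cooldown
        return True


group = SimpleScalingGroup(capacity=2)
results = [
    group.request_scale(+1, now=0),    # applied, cooldown runs until t=300
    group.request_scale(+1, now=120),  # ignored, still cooling down
    group.request_scale(+1, now=300),  # applied, cooldown has ended
]
print(results, group.capacity)
```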

Health Check Grace Period

If you add a lifecycle hook, the health check grace period does not start until the lifecycle hook actions complete and the instance enters the InService state.

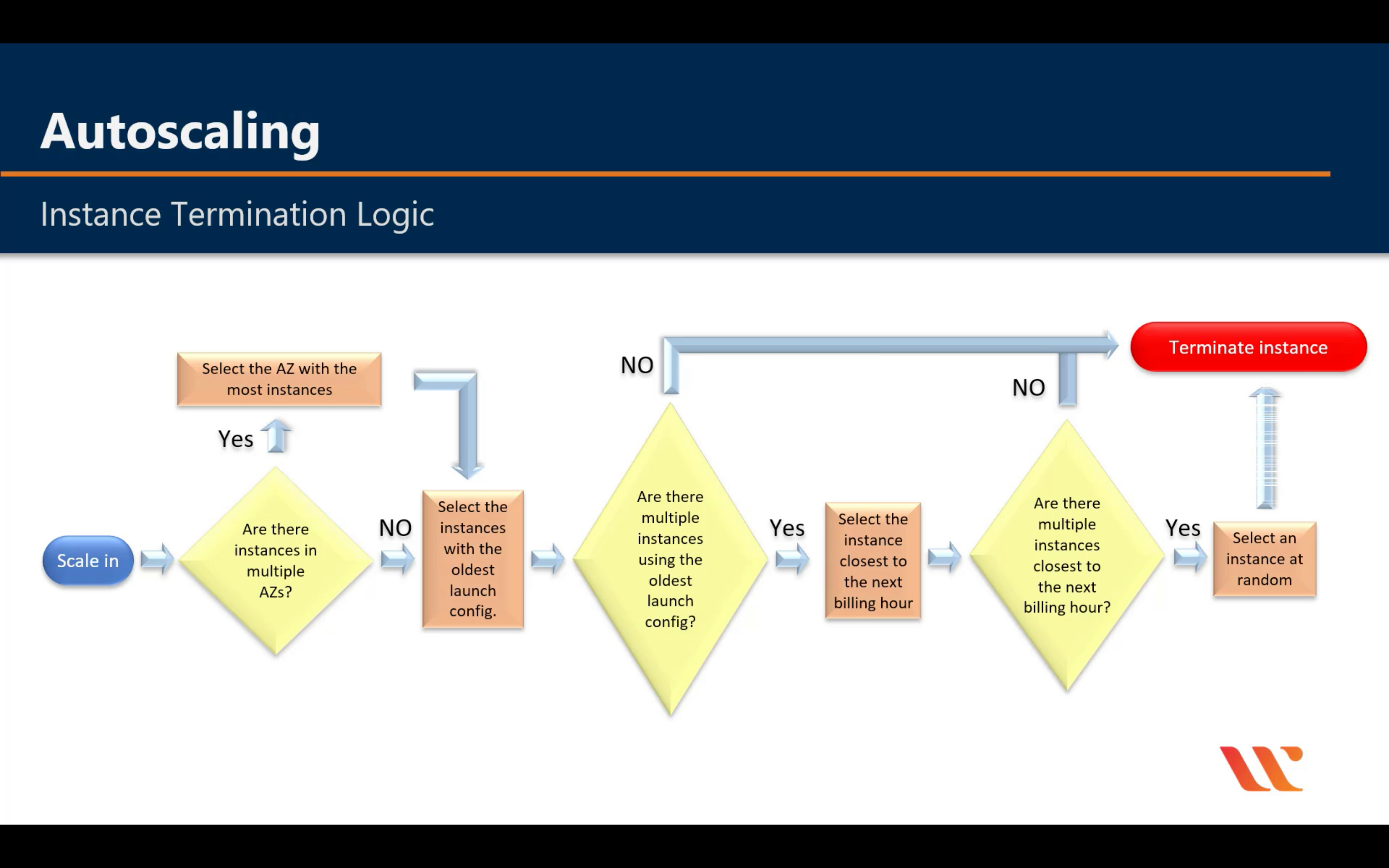

Instance Termination Logic

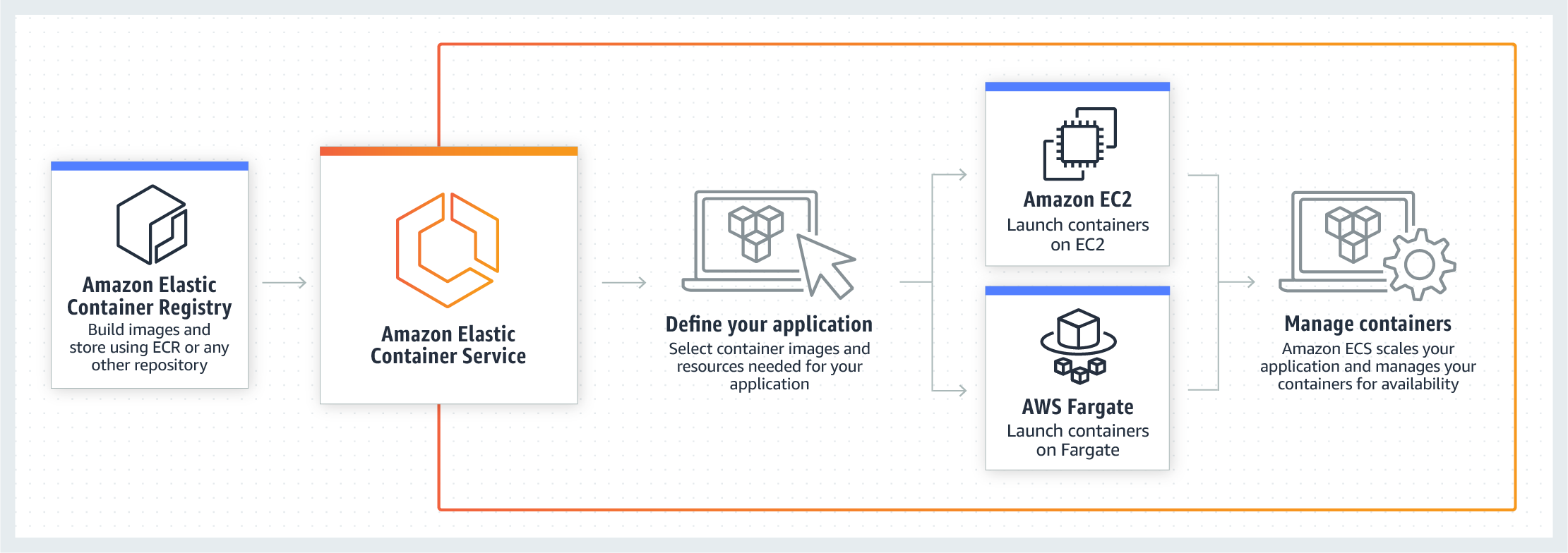

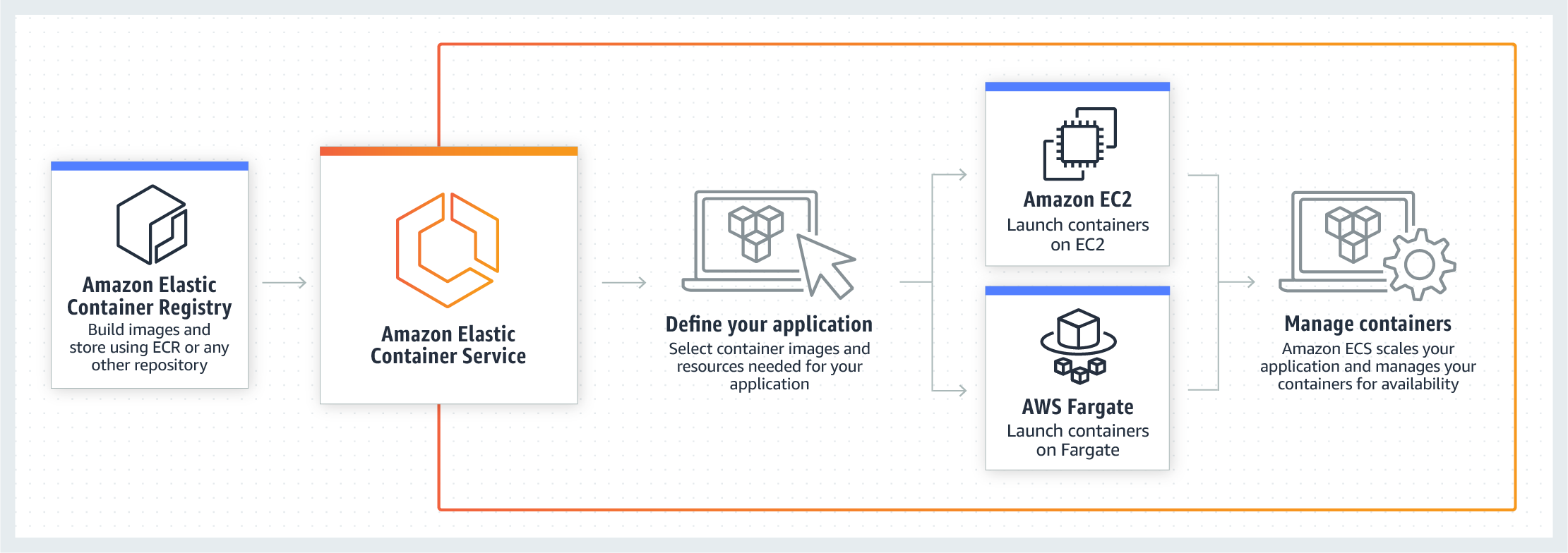

Amazon EC2 Container Registry

Store and retrieve docker images

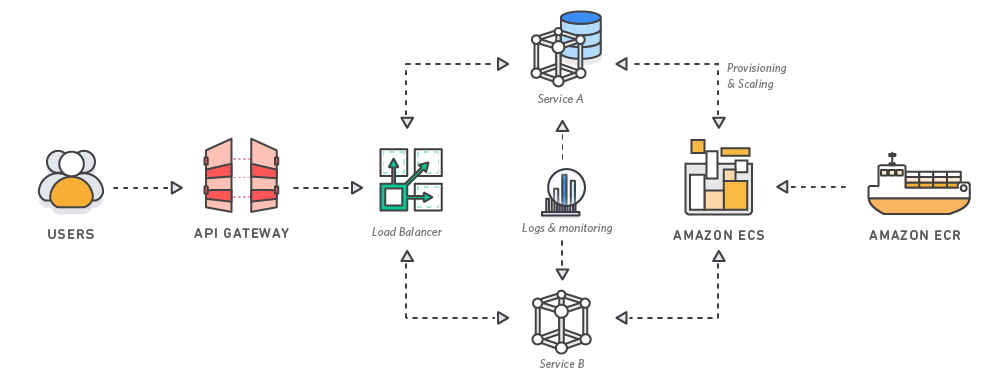

ECS (Amazon Elastic Container Service)

Run and manage docker containers

What is Amazon Elastic Container Service?

Container: define the code, runtime, system tools, system libraries, etc.

Task Definitions:

A task definition is required to run Docker containers in Amazon ECS. Some of the parameters you can specify in a task definition include:

- The Docker image to use with each container in your task

- How much CPU and memory to use with each task or each container within a task

- The launch type to use, which determines the infrastructure on which your tasks are hosted

- The Docker networking mode to use for the containers in your task

- The logging configuration to use for your tasks

- Whether the task should continue to run if the container finishes or fails

- The command the container should run when it is started

- Any data volumes that should be used with the containers in the task

- The IAM role that your tasks should use

Cluster:

- A logical grouping of resources.

- Networking only

- EC2 Linux + Networking

- EC2 Windows + Networking

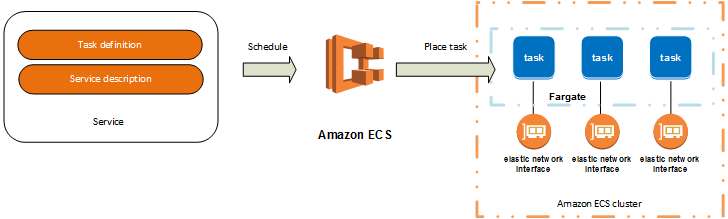

Service:

A service definition defines which task definition to use with your service, how many instantiations of that task to run, and which load balancers (if any) to associate with your tasks.

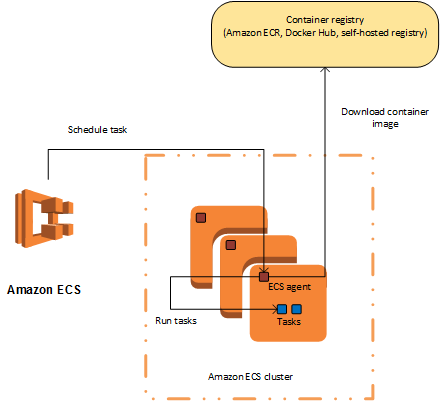

Amazon ECS Container Agent

The Amazon ECS container agent allows container instances to connect to your cluster. The Amazon ECS container agent is included in the Amazon ECS-optimized AMIs, but you can also install it on any Amazon EC2 instance that supports the Amazon ECS specification. The Amazon ECS container agent is only supported on Amazon EC2 instances.

When you launch an Amazon ECS container instance, you have the option of passing user data to the instance. The data can be used to perform common automated configuration tasks and even run scripts when the instance boots. For Amazon ECS, the most common use cases for user data are to pass configuration information to the Docker daemon and the Amazon ECS container agent.

Monitoring

- Use CloudWatch to monitor ECS resources; it does not record ECS API actions.

- Amazon ECS is integrated with AWS CloudTrail, a service that provides a record of actions taken by a user, role, or an AWS service in Amazon ECS. CloudTrail captures all API calls for Amazon ECS as events, including calls from the Amazon ECS console and from code calls to the Amazon ECS APIs.

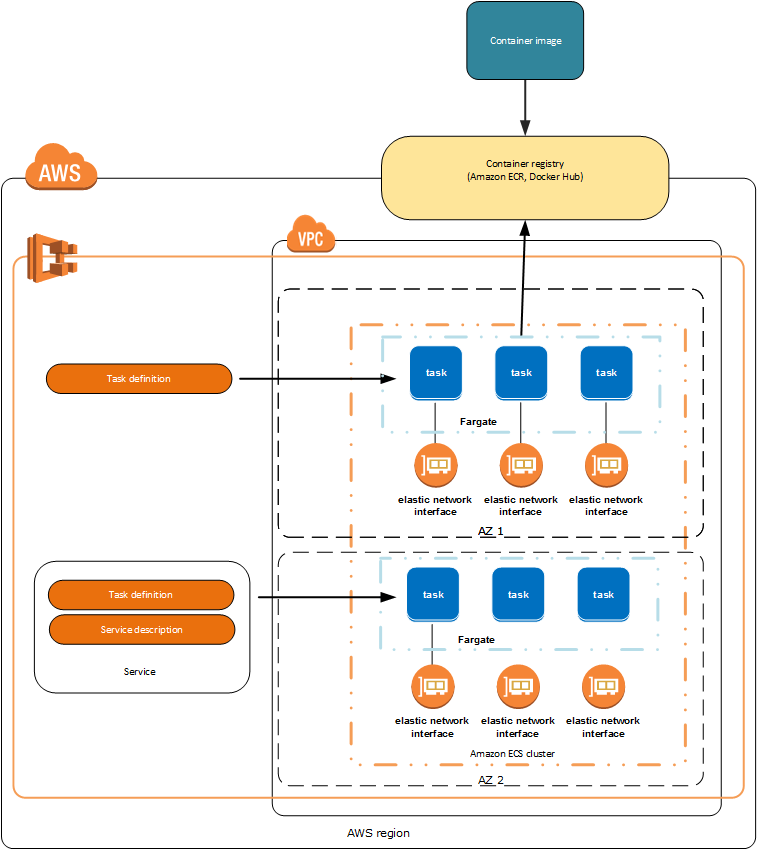

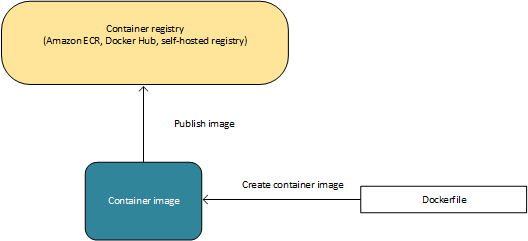

Containers and Images

To deploy applications on Amazon ECS, your application components must be architected to run in containers. A Docker container is a standardized unit of software development, containing everything that your software application needs to run: code, runtime, system tools, system libraries, etc. Containers are created from a read-only template called an image.

Images are typically built from a Dockerfile, a plain text file that specifies all of the components that are included in the container. These images are then stored in a registry from which they can be downloaded and run on your cluster.

Task Definitions

To prepare your application to run on Amazon ECS, you create a task definition. The task definition is a text file, in JSON format, that describes one or more containers, up to a maximum of ten, that form your application. It can be thought of as a blueprint for your application. Task definitions specify various parameters for your application. Examples of task definition parameters are which containers to use, which launch type to use, which ports should be opened for your application, and what data volumes should be used with the containers in the task. The specific parameters available for the task definition depend on which launch type you are using.
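For orientation, here is a minimal sketch of what such a JSON task definition might contain, built in Python and serialized with `json`. The family name, container name, image, and sizes are placeholders chosen for the example; the parameters that actually apply depend on the launch type.

```python
import json

# Hypothetical single-container task definition; names, image and sizes are placeholders.
task_definition = {
    "family": "example-web",
    "networkMode": "awsvpc",
    "requiresCompatibilities": ["FARGATE"],
    "cpu": "256",
    "memory": "512",
    "containerDefinitions": [
        {
            "name": "web",
            "image": "nginx:latest",
            "essential": True,
            "portMappings": [{"containerPort": 80, "protocol": "tcp"}],
        }
    ],
}

MAX_CONTAINERS_PER_TASK = 10  # limit stated in the text above

# Guard against exceeding the per-task container limit before serializing.
assert len(task_definition["containerDefinitions"]) <= MAX_CONTAINERS_PER_TASK
task_definition_json = json.dumps(task_definition, indent=2)
print(task_definition_json)
```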


Tasks and Scheduling

A task is the instantiation of a task definition within a cluster. After you have created a task definition for your application within Amazon ECS, you can specify the number of tasks that will run on your cluster.

Each task that uses the Fargate launch type has its own isolation boundary and does not share the underlying kernel, CPU resources, memory resources, or elastic network interface with another task.

The Amazon ECS task scheduler is responsible for placing tasks within your cluster. There are several different scheduling options available. For example, you can define a service that runs and maintains a specified number of tasks simultaneously.

Clusters

When you run tasks using Amazon ECS, you place them on a cluster, which is a logical grouping of resources. When using the Fargate launch type with tasks within your cluster, Amazon ECS manages your cluster resources. When using the EC2 launch type, then your clusters are a group of container instances you manage. An Amazon ECS container instance is an Amazon EC2 instance that is running the Amazon ECS container agent. Amazon ECS downloads your container images from a registry that you specify, and runs those images within your cluster.

Container Agent

The container agent runs on each infrastructure resource within an Amazon ECS cluster. It sends information about the resource’s current running tasks and resource utilization to Amazon ECS, and starts and stops tasks whenever it receives a request from Amazon ECS.

Amazon Elastic Kubernetes Service

Run managed Kubernetes on AWS

Kubernetes is open source software that allows you to deploy and manage containerized applications at scale. Kubernetes manages clusters of Amazon EC2 compute instances and runs containers on those instances with processes for deployment, maintenance, and scaling. Using Kubernetes, you can run any type of containerized applications using the same toolset on-premises and in the cloud.

Amazon Lightsail

Launch and manage virtual private servers

AWS Batch

Run batch jobs at any scale

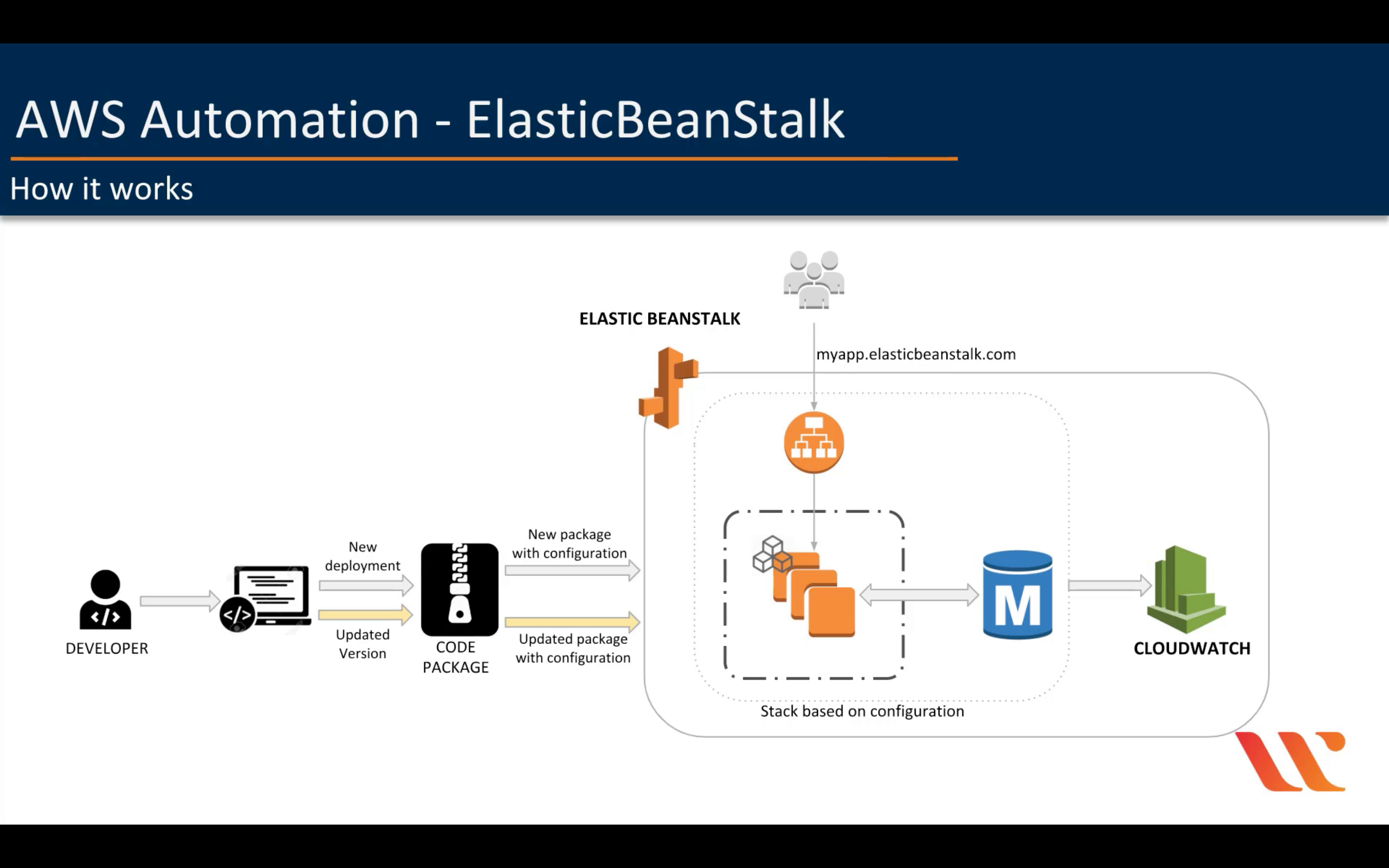

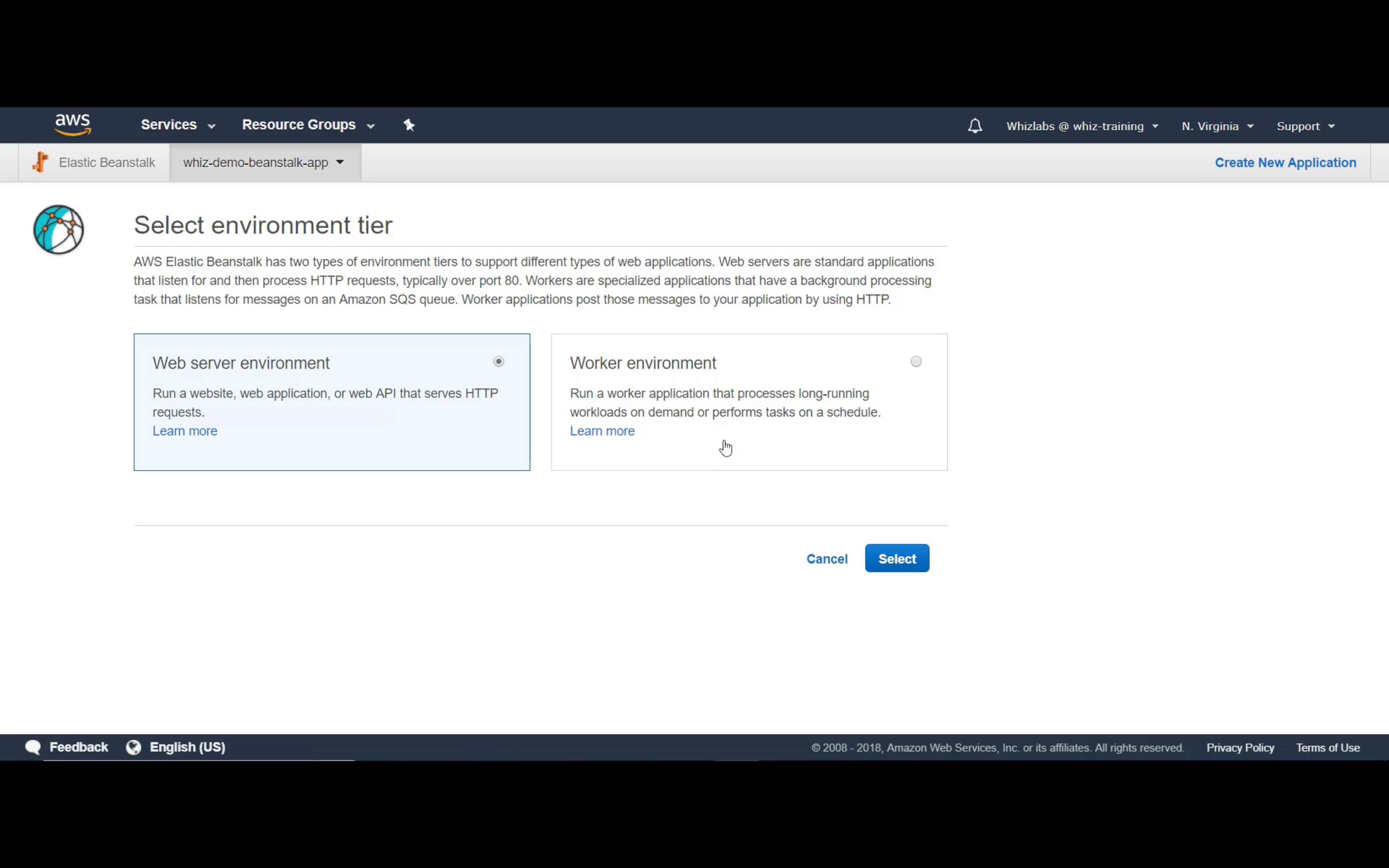

AWS Elastic Beanstalk

Run and manage web apps

With Elastic Beanstalk, you can quickly deploy and manage applications in the AWS Cloud without having to learn about the infrastructure that runs those applications. Elastic Beanstalk reduces management complexity without restricting choice or control. You simply upload your application, and Elastic Beanstalk automatically handles the details of capacity provisioning, load balancing, scaling, and application health monitoring.

AWS Fargate

Run containers without managing servers or clusters

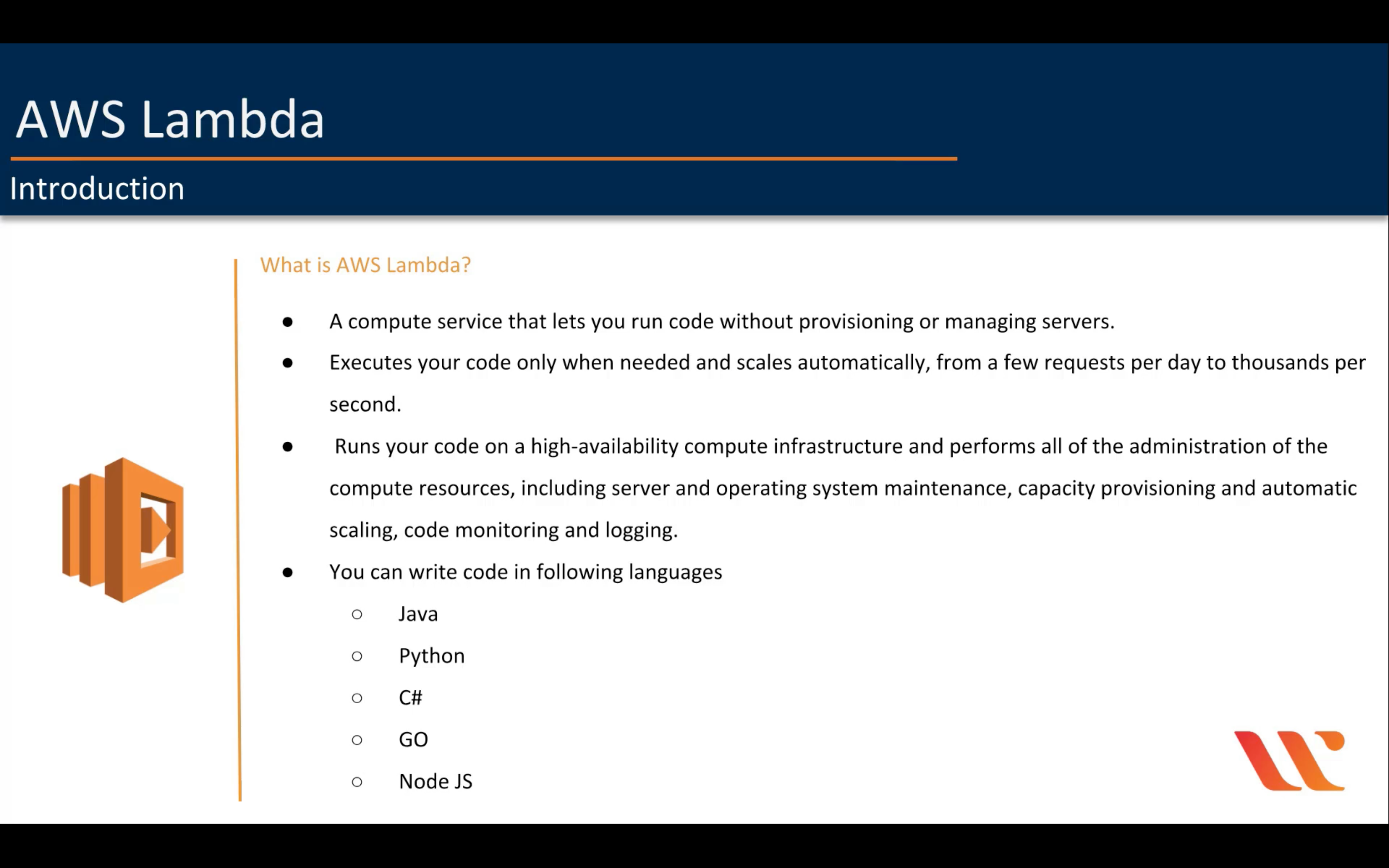

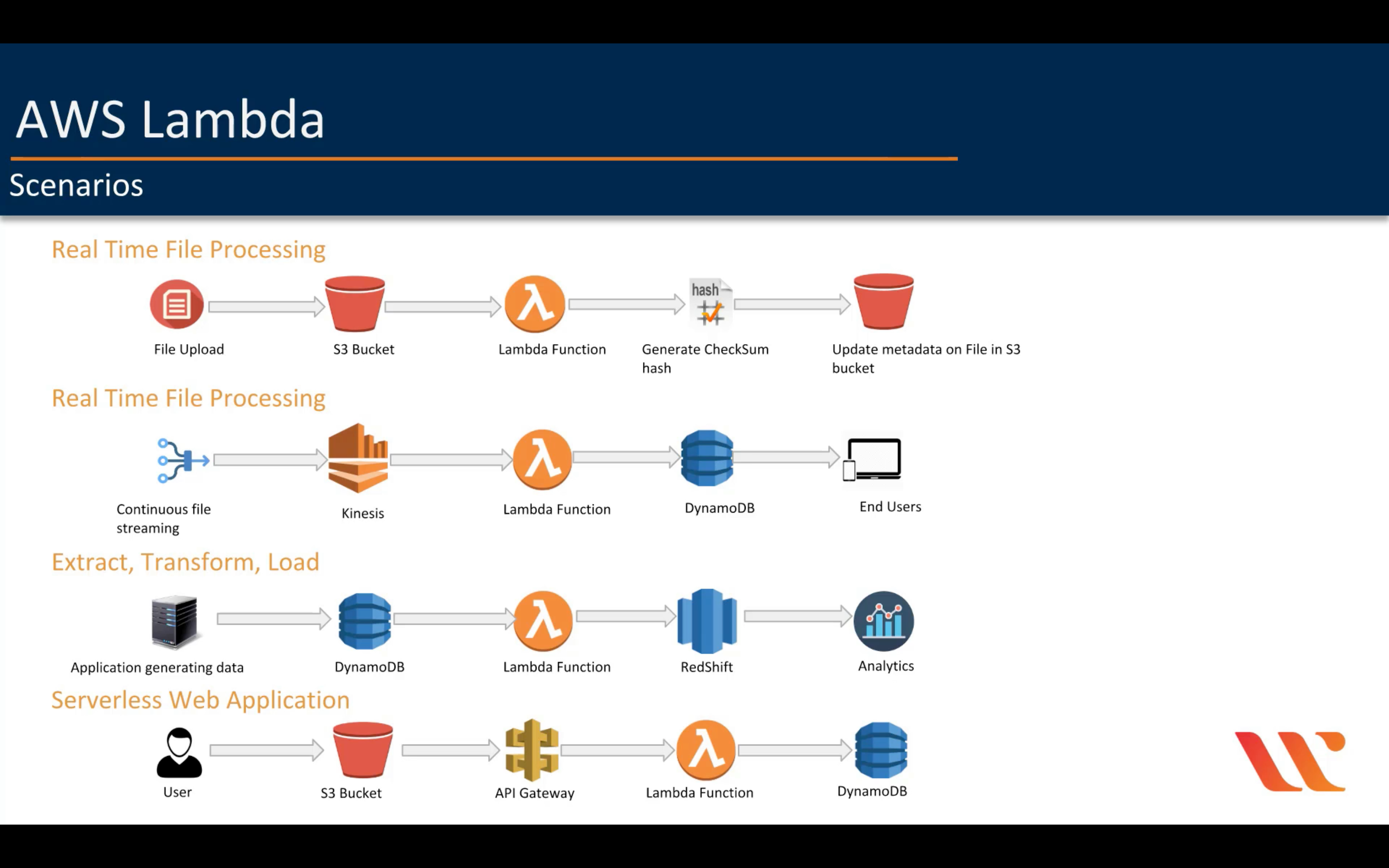

AWS Lambda

Run code without thinking about servers

- Extend other AWS services with custom logic

- Build custom back-end services

- Bring your own code

- Completely automated administration

- Built-in fault tolerance

- Automatic scaling

- Connect to relational databases

- Fine grained control over performance

- Run code in response to Amazon CloudFront requests

- Orchestrate multiple functions

- Integrated security model

- Only pay for what you use

- Flexible resource model

Timeout

- A Lambda function in a private subnet with no NAT gateway or VPC endpoint cannot reach the internet or AWS service endpoints, so those calls time out

Services that invoke Lambda functions Synchronously

- ELB

- Cognito

- Lex

- Alexa

- API Gateway

- CloudFront

- Kinesis Data Firehose

- Step Functions

- S3 Batch

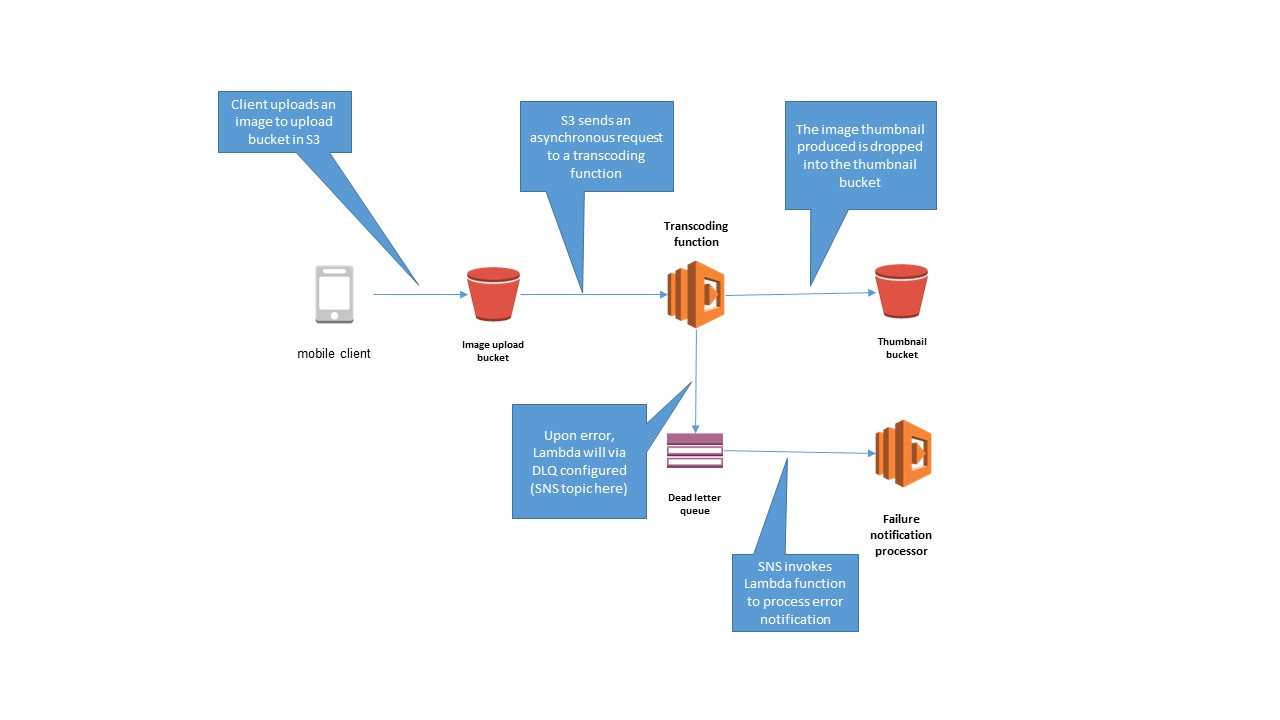

Services that invoke Lambda functions Asynchronously

- S3

- SNS

- Simple Email Service

- CloudFormation

- CloudWatch Logs

- CloudWatch Events

- CodeCommit

- Config

- IoT

- IoT Events

- CodePipeline

Dead-letter queue (DLQ): a message may be moved to a DLQ when:

- The message is sent to a queue that does not exist.

- Queue length limit exceeded.

- Message length limit exceeded.

- Message is rejected by another queue exchange.

- Message reaches a threshold read counter number, because it is not consumed. Sometimes this is called a “back out queue”.

Use SNS & SQS to analyze DLQ failures

no-VPC mode will deny the connection from Lambda function to any private VPC.

- /tmp limitation: 512 MB

- timeout: 900s

- Memory: 128 MB to 3,008 MB, in 64 MB increments.

- Function Layers: 5

SQS queue as a Lambda event source: Lambda polls with ReceiveMessage and receives up to 10 messages per batch

CloudFront Events That Can Trigger a Lambda Function

- Viewer Request

- Origin Request

- Origin Response

- Viewer Response

Changing code: for a brief window (under 1 minute) after an update, invocations may run either the old or the new code.

Poll-based service

- Kinesis

- SQS

- DynamoDB

PROD: use an alias (and its ARN) to point to a specific function version

Function policy should be edited through CLI

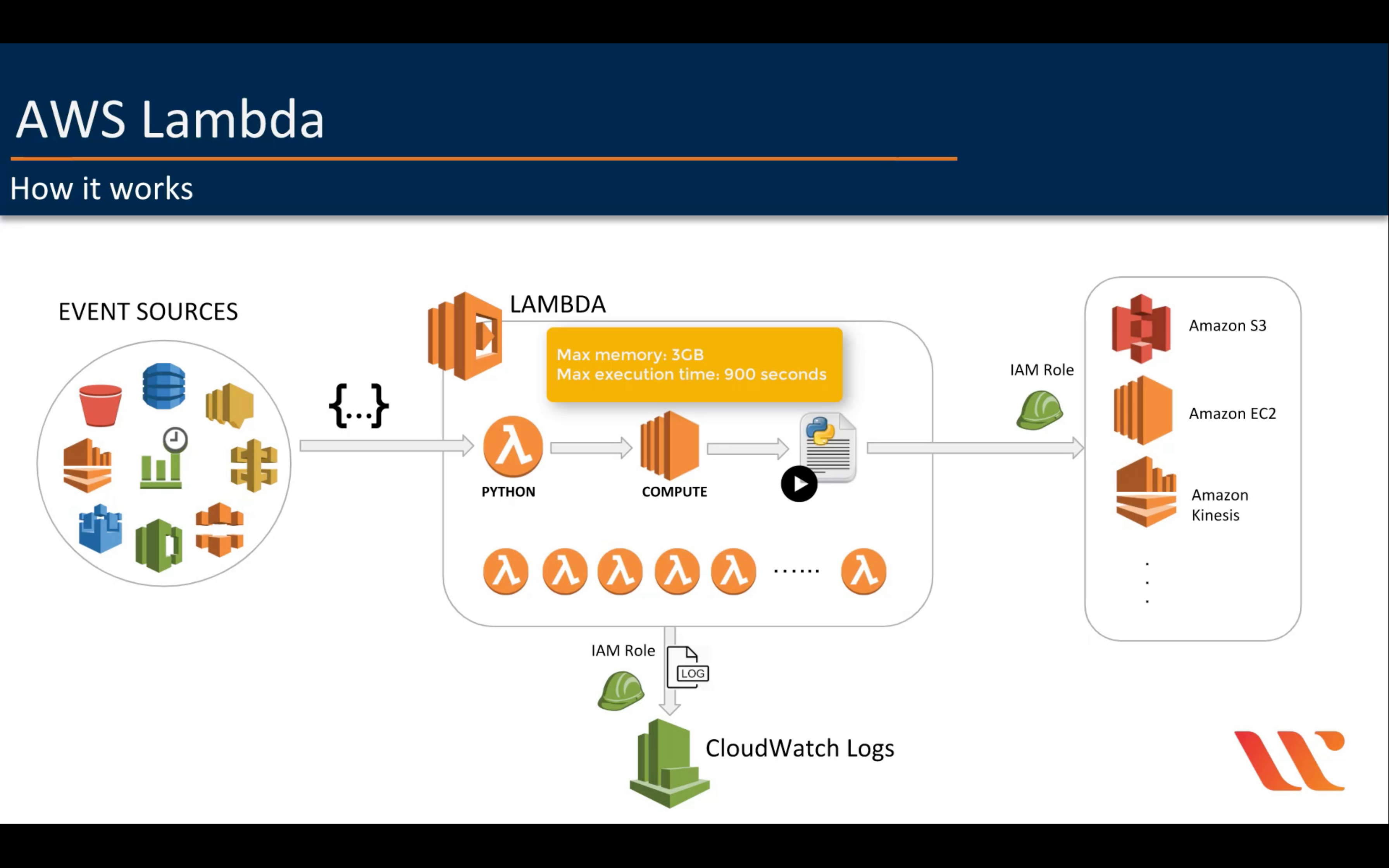

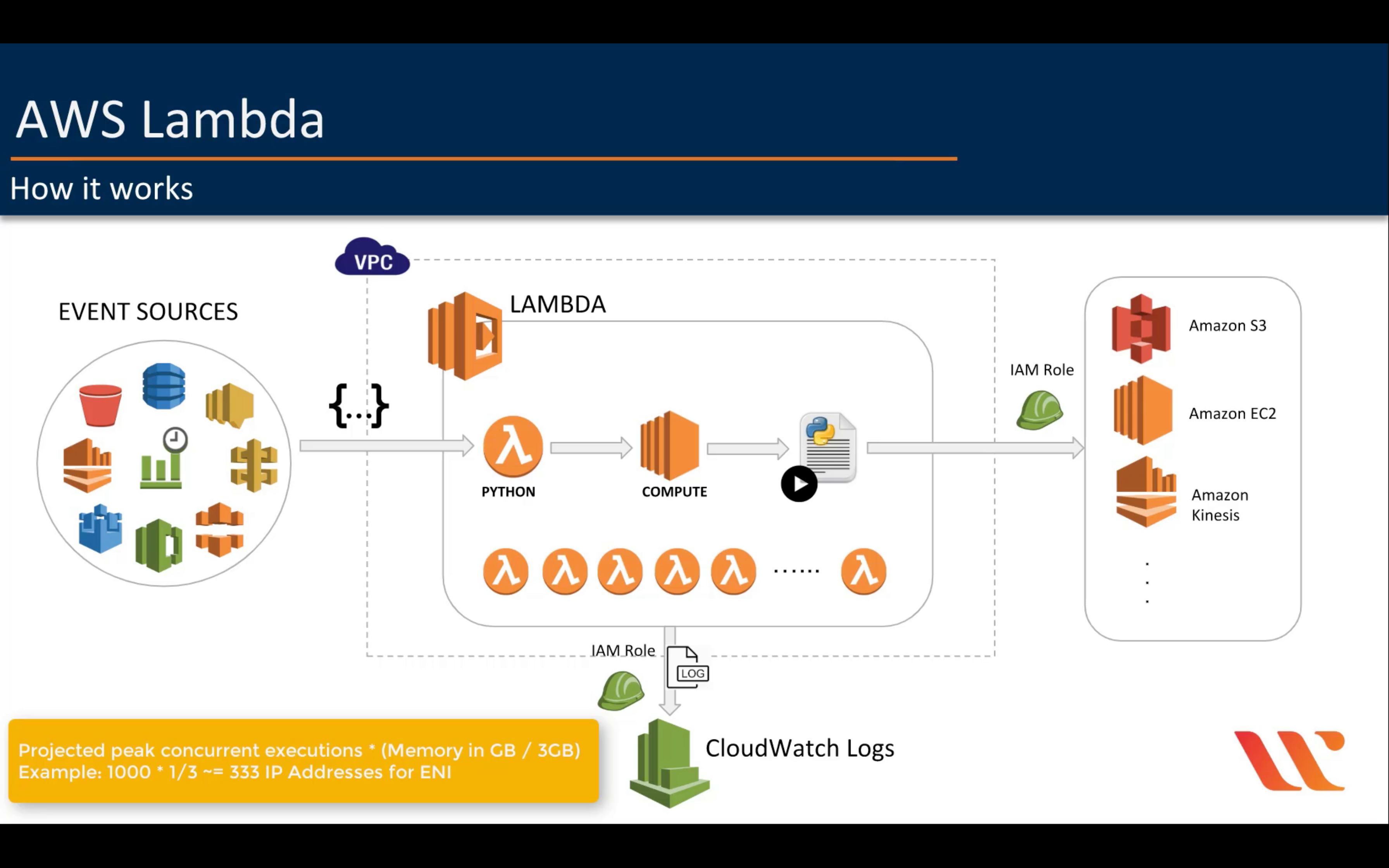

Projected peak concurrent executions * (Memory in GB / 3 GB)

A step-by-step guide to ensure your function is optimally configured for scalability:

Calculate your Peak Concurrent Executions with this formula:

Peak Concurrent Executions = Peak Requests per Second * Average Function Duration (in seconds)

Now calculate your Required ENI Capacity:

Required ENI Capacity = Projected peak concurrent executions * (Function Memory Allocation in GB / 3GB)

If Peak Concurrent Executions > Account Level Concurrent Execution Limit (default=1,000), then you will need to ask AWS to increase this limit.

Configure your function to use all the subnets available inside the VPC that have access to the resource that your function needs to connect to. This both maximizes Actual ENI Capacity and provides higher availability (assuming subnets are spread across 2+ availability zones).

Calculate your Actual ENI Capacity using these steps.

If Required ENI Capacity > Actual ENI Capacity, then you will need to do one or more of the following:

Decrease your function’s memory allocation to decrease your Required ENI Capacity.

Refactor any time-consuming code which doesn’t require VPC access into a separate Lambda function.

Implement throttle-handling logic in your app (e.g. by building retries into a client).

If Required ENI Capacity > your EC2 Network Interfaces per region account limit then you will need to request that AWS increase this limit.

Consider configuring a function-level concurrency limit to ensure your function doesn’t hit the ENI Capacity limit and also if you wish to force throttling at a certain limit due to downstream architectural limitations.

Monitor the concurrency levels of your functions in production using CloudWatch metrics so you know if invocations are being throttled or erroring out due to insufficient ENI capacity.

If your Lambda function communicates with a connection-based backend service such as RDS, ensure that the maximum number of connections configured for your database is at least your Peak Concurrent Executions; otherwise, your functions will fail with connection errors.
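The two formulas in the guide above can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the function names and the sample numbers (200 requests per second, 0.5 s duration, 1.5 GB memory) are assumptions, and the default limit of 1,000 is the figure quoted above.

```python
# Sketch of the capacity formulas from the guide above.
# All function names and sample inputs are illustrative assumptions.

DEFAULT_ACCOUNT_CONCURRENCY_LIMIT = 1000  # default quoted in the notes


def peak_concurrent_executions(peak_requests_per_second: float,
                               avg_duration_seconds: float) -> float:
    """Peak Concurrent Executions = peak RPS * average duration (seconds)."""
    return peak_requests_per_second * avg_duration_seconds


def required_eni_capacity(peak_concurrency: float, memory_gb: float) -> float:
    """Required ENI Capacity = peak concurrency * (memory in GB / 3 GB)."""
    return peak_concurrency * (memory_gb / 3.0)


def needs_concurrency_limit_increase(
        peak_concurrency: float,
        account_limit: int = DEFAULT_ACCOUNT_CONCURRENCY_LIMIT) -> bool:
    """True when the projected peak exceeds the account-level limit."""
    return peak_concurrency > account_limit


if __name__ == "__main__":
    peak = peak_concurrent_executions(200, 0.5)
    print("peak concurrent executions:", peak)
    print("required ENI capacity:", required_eni_capacity(peak, 1.5))
    print("limit increase needed:", needs_concurrency_limit_increase(peak))
```

Comparing the required ENI capacity with the actual capacity of your subnets is left out, because it depends on the free IP addresses in each subnet.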

Permissions for a Lambda function to write logs to CloudWatch

- logs:CreateLogGroup

- logs:CreateLogStream

- logs:PutLogEvents
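As a minimal sketch, the three actions above could be placed in an IAM policy document like this. The helper name `build_logging_policy` is hypothetical, and `"Resource": "*"` is an assumption made for brevity; in practice you would probably narrow it to the ARN of the function's log group.

```python
import json


def build_logging_policy(resource: str = "*") -> dict:
    """Build a policy document that lets a function write CloudWatch logs.

    The default resource "*" is an assumption for illustration only.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                "Resource": resource,
            }
        ],
    }


# Print the JSON you would attach to the function's execution role.
print(json.dumps(build_logging_policy(), indent=2))
```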

Working with AWS Lambda function metrics

- Invocations

- Errors

- DeadLetterErrors

- DestinationDeliveryFailures

- Throttles

- ProvisionedConcurrencyInvocations

- ProvisionedConcurrencySpilloverInvocations

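Get the function version from the Context object (getFunctionVersion) or from the environment variable:

AWS_LAMBDA_FUNCTION_VERSION

The Invoke API returns status code 200 even when the function itself returns an error.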

AWS Outposts

Run AWS infrastructure on-premises

AWS Serverless Application Repository

Discover, deploy, and publish serverless applications

AWS Wavelength

Deliver ultra-low latency applications for 5G devices

VMware Cloud on AWS

Build a hybrid cloud without custom hardware

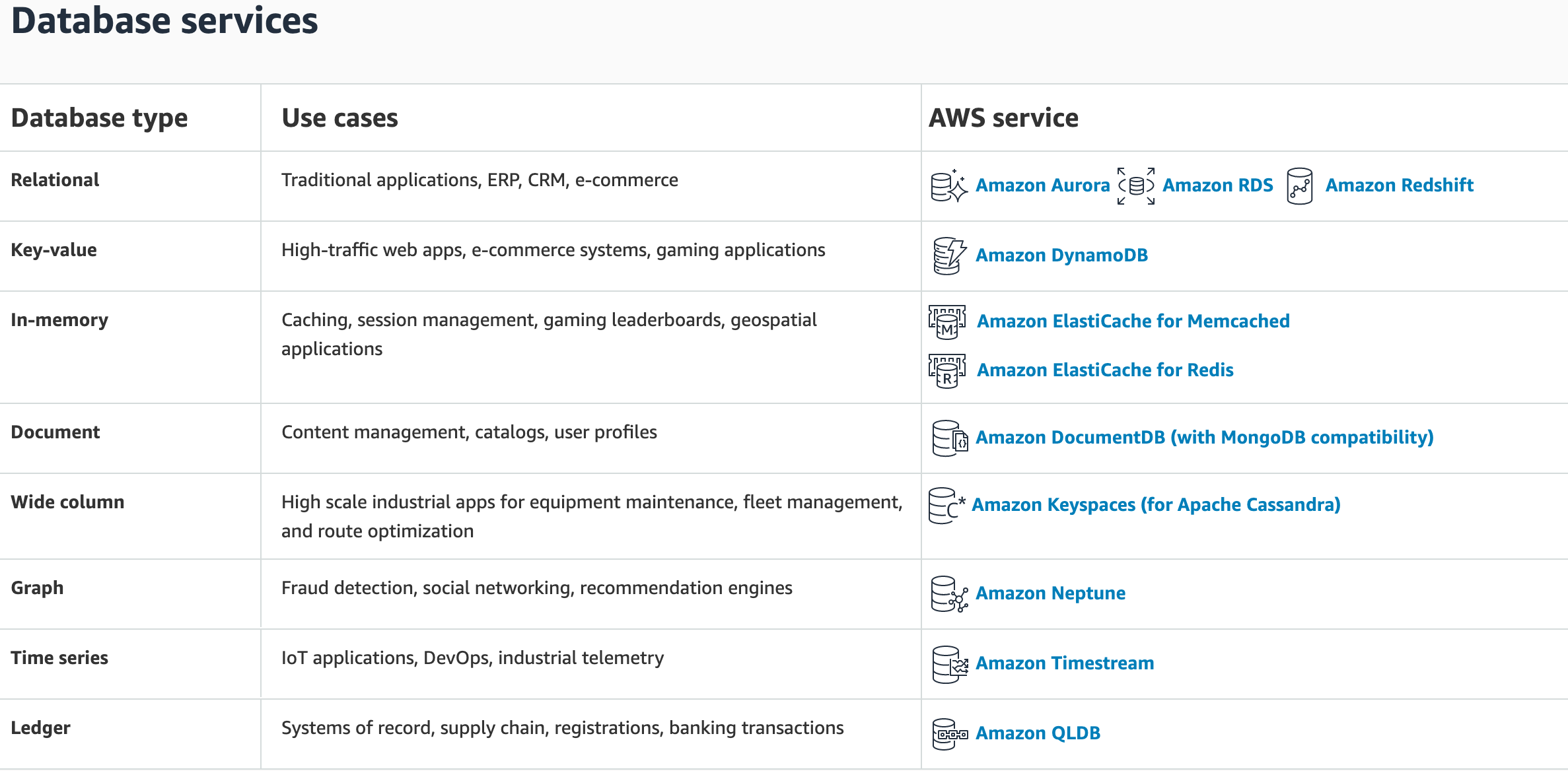

Database

Amazon Aurora

High performance managed relational database

- High Performance and Scalability

- High Availability and Durability

- Highly Secure

- MySQL and PostgreSQL Compatible

- Fully Managed

- Migration Support

Amazon DocumentDB (with MongoDB compatibility)

Fully managed document database

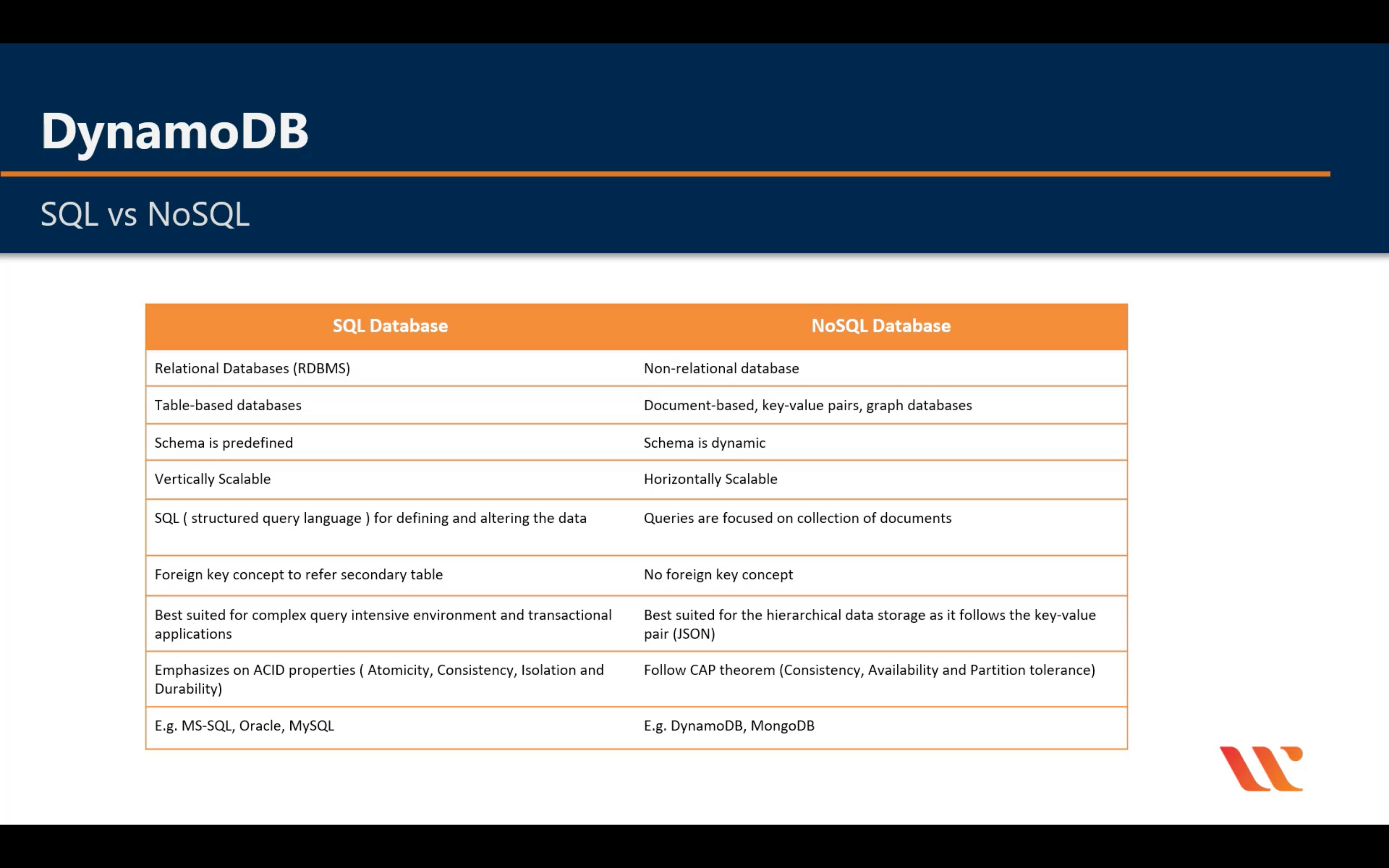

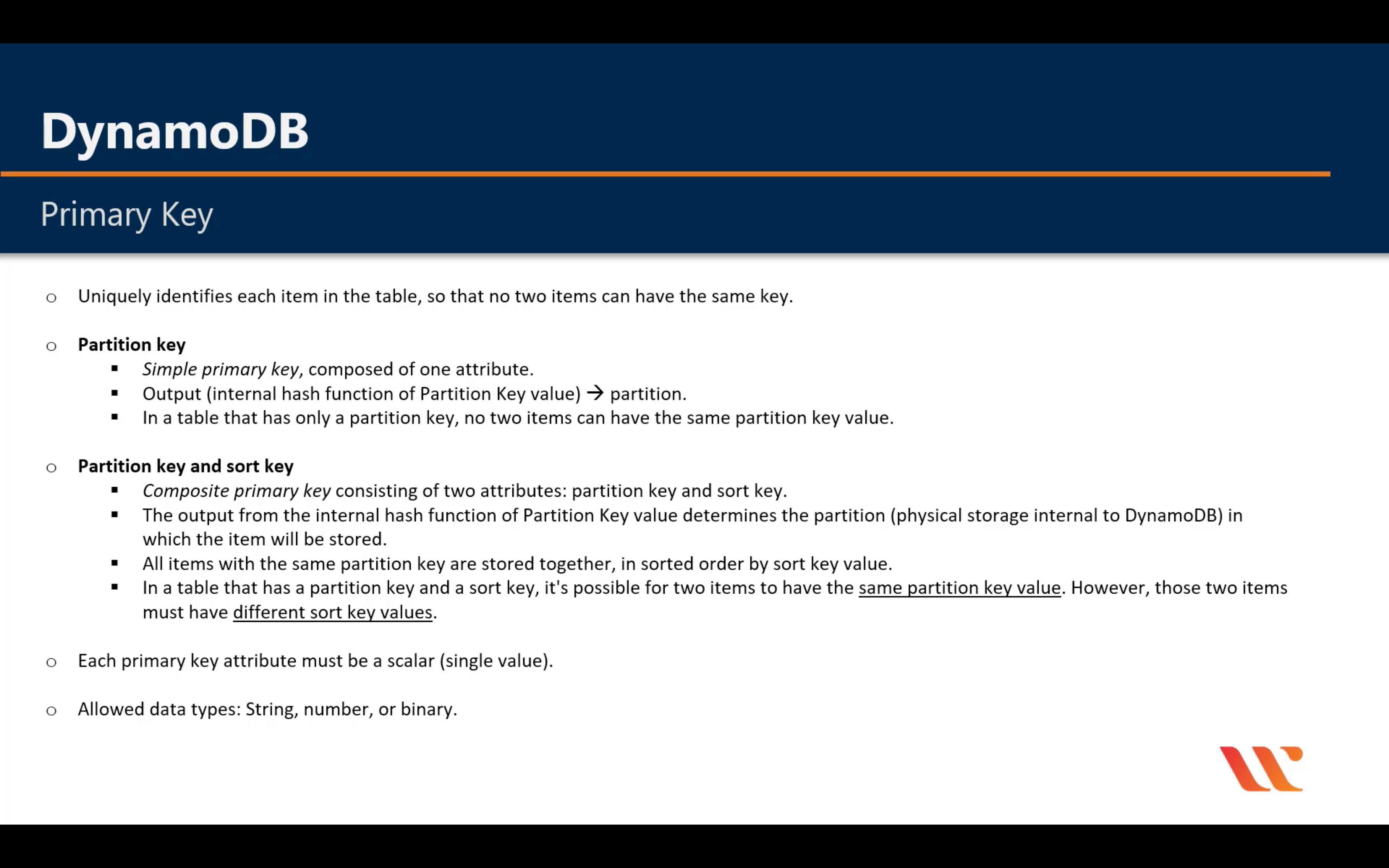

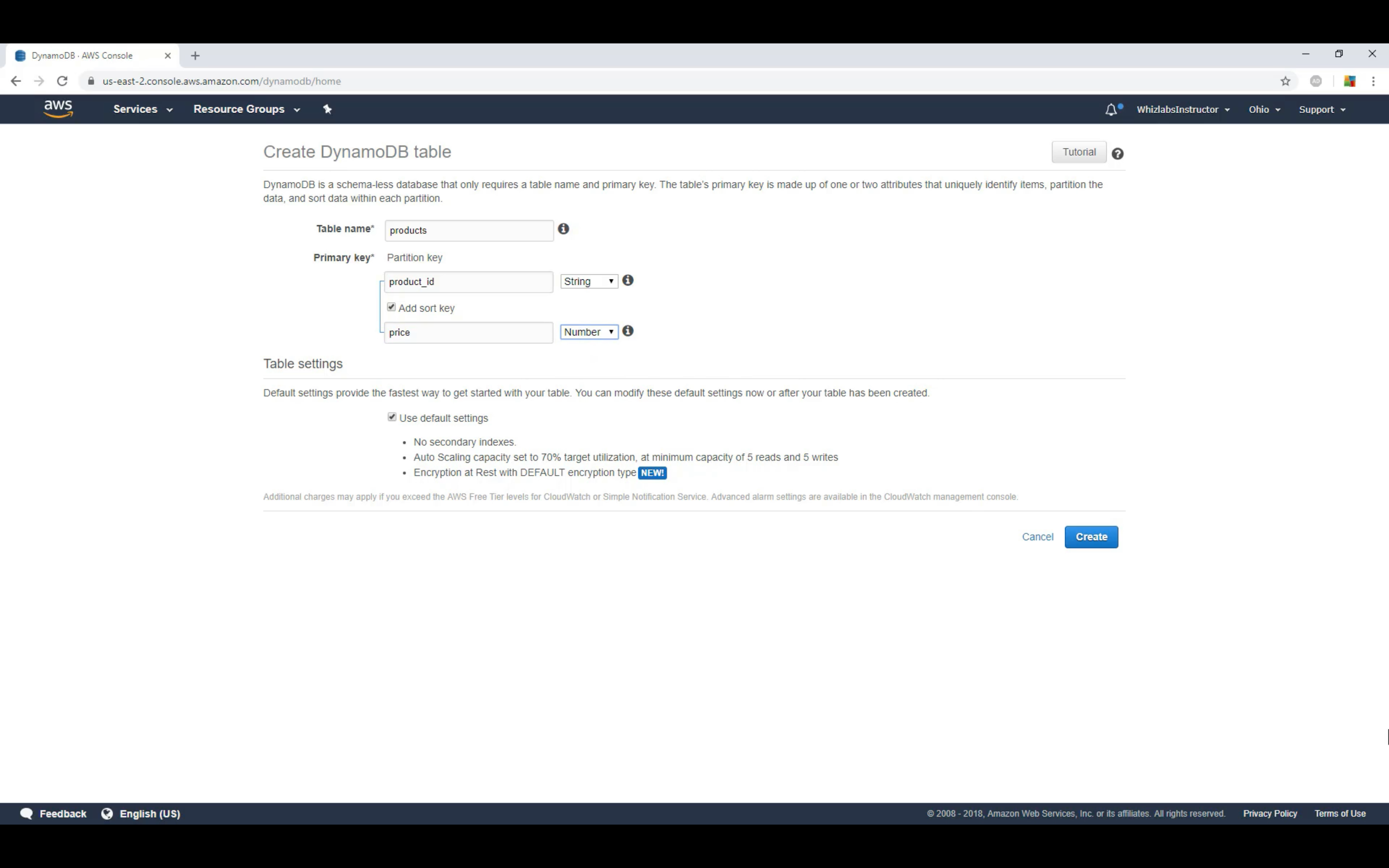

Amazon DynamoDB

Managed NoSQL database

SQL vs. NoSQL

Introduction

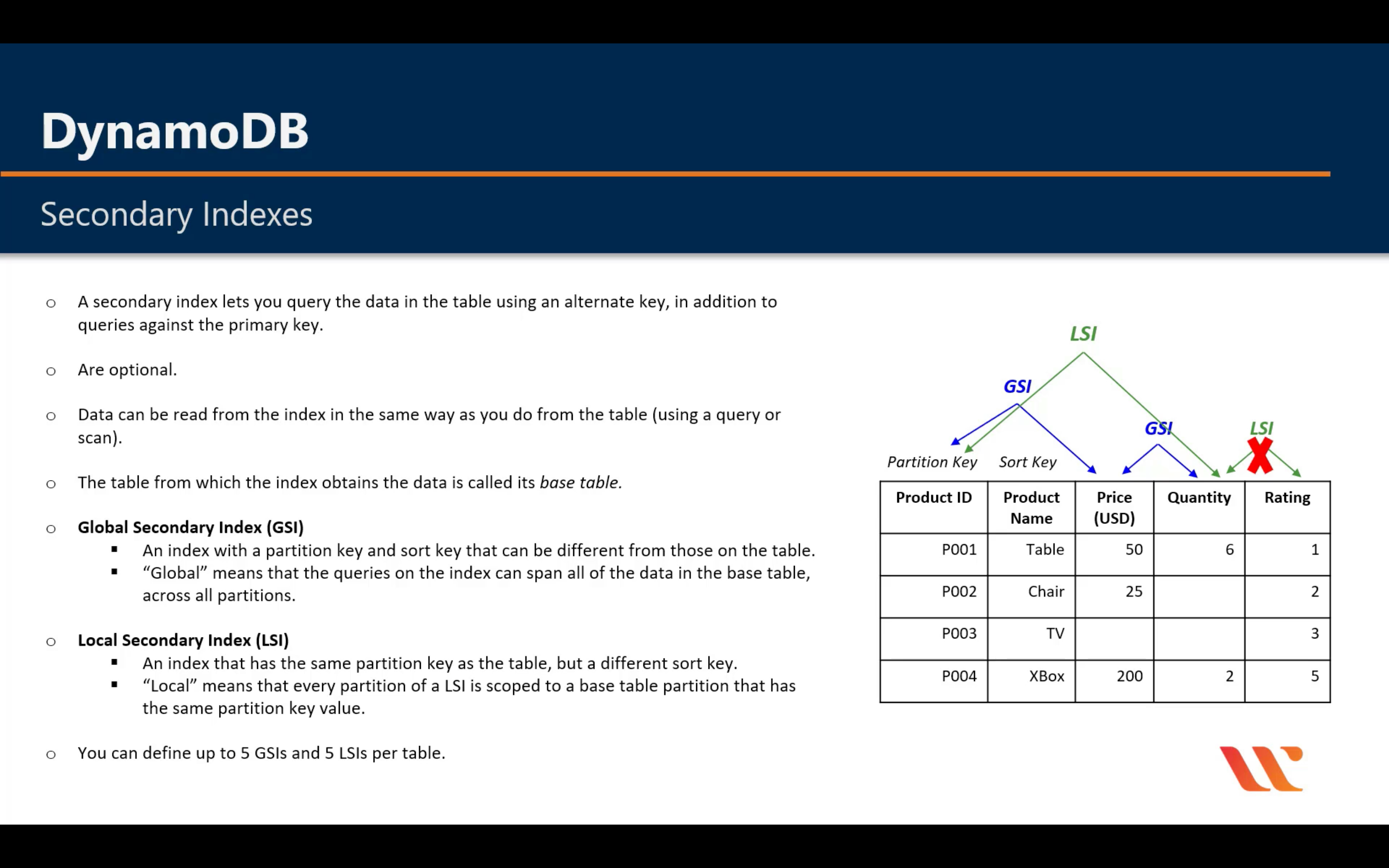

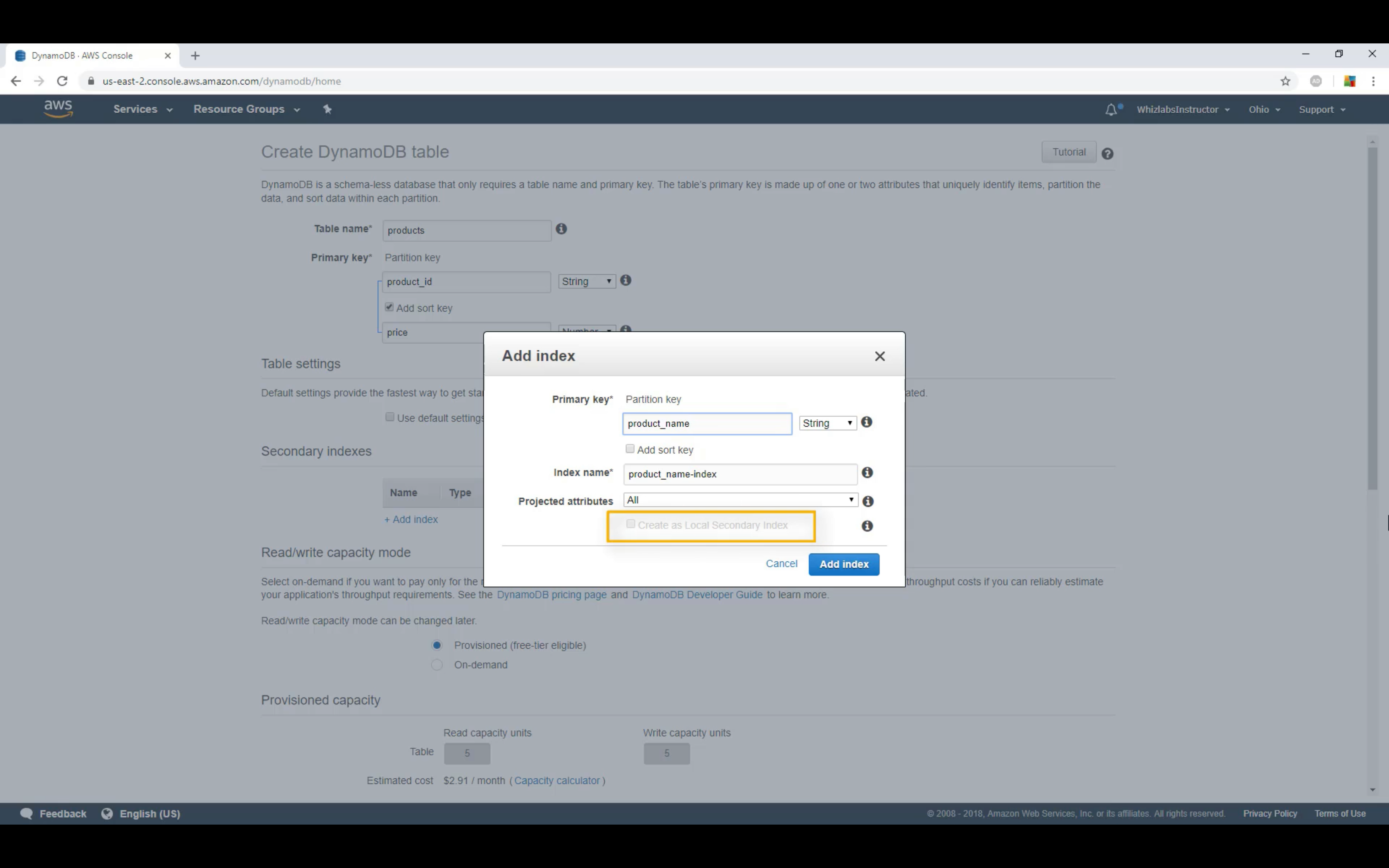

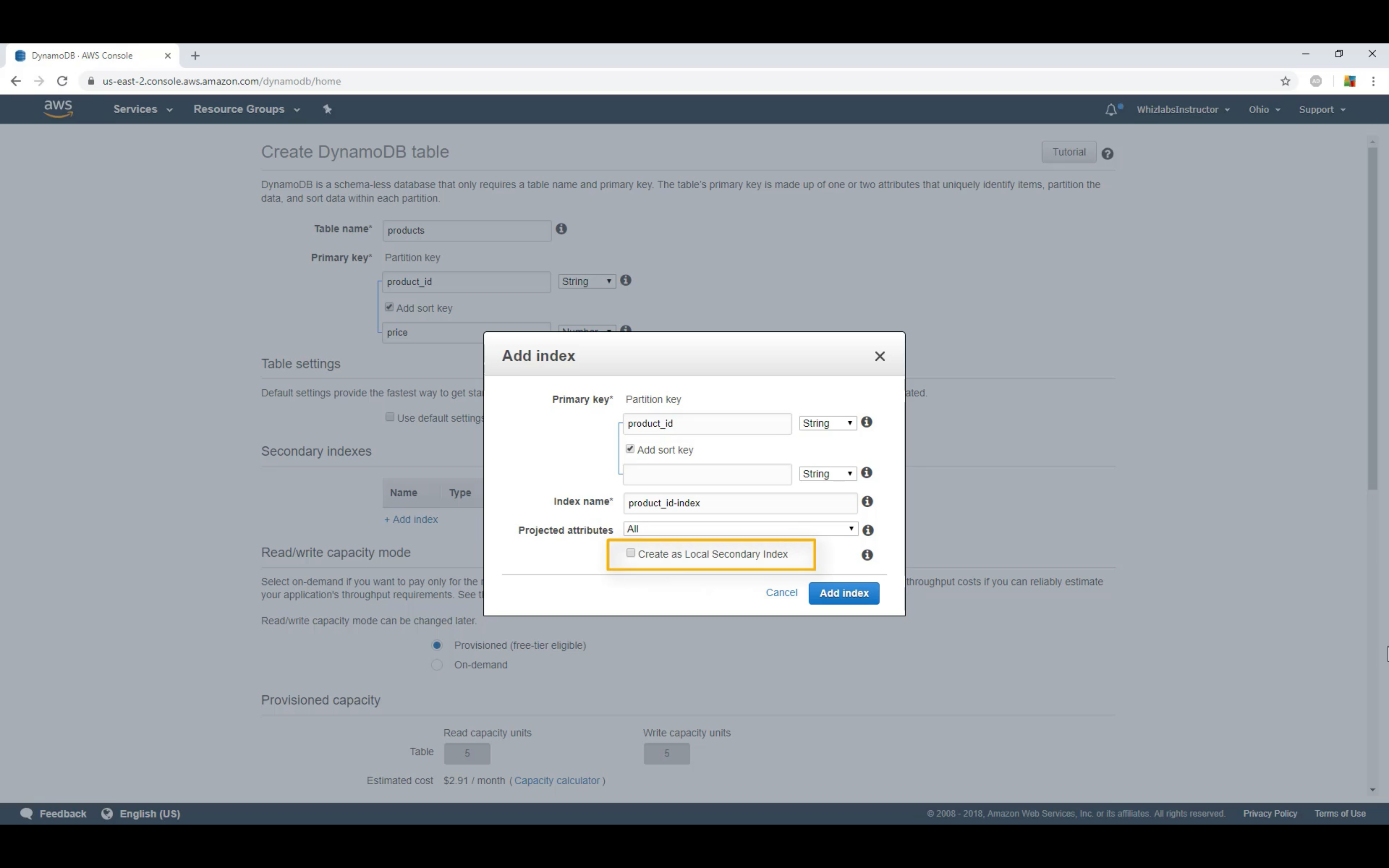

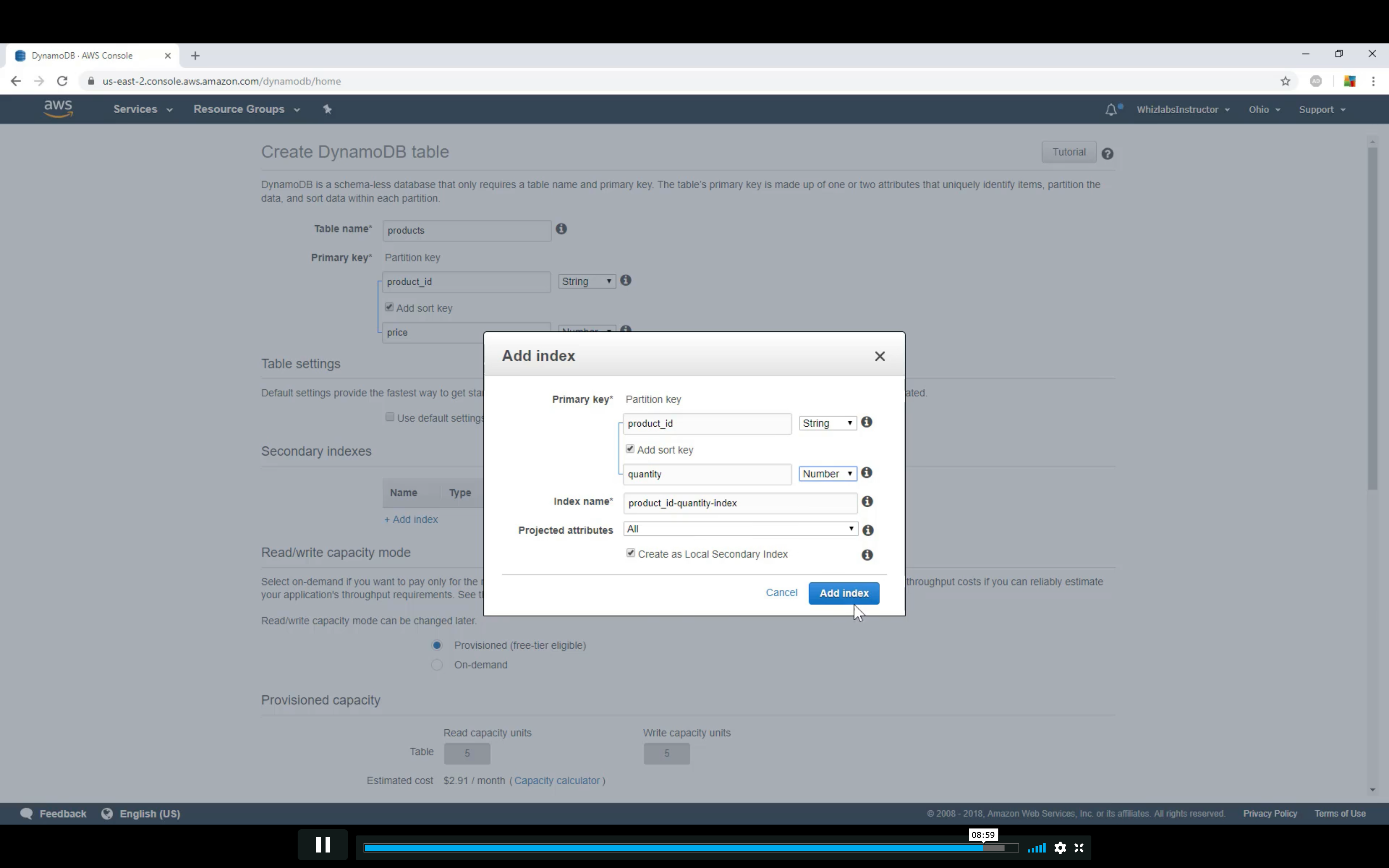

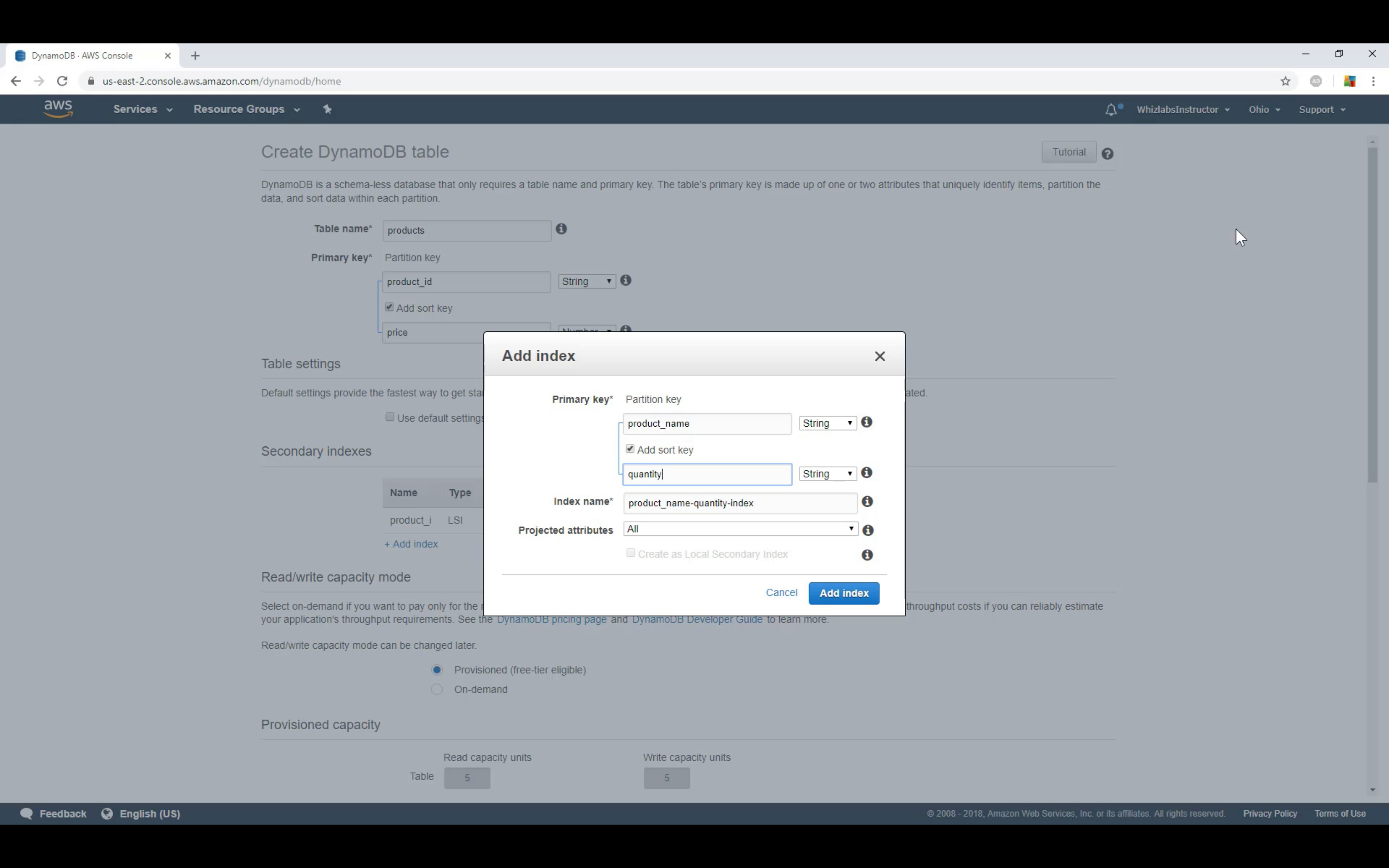

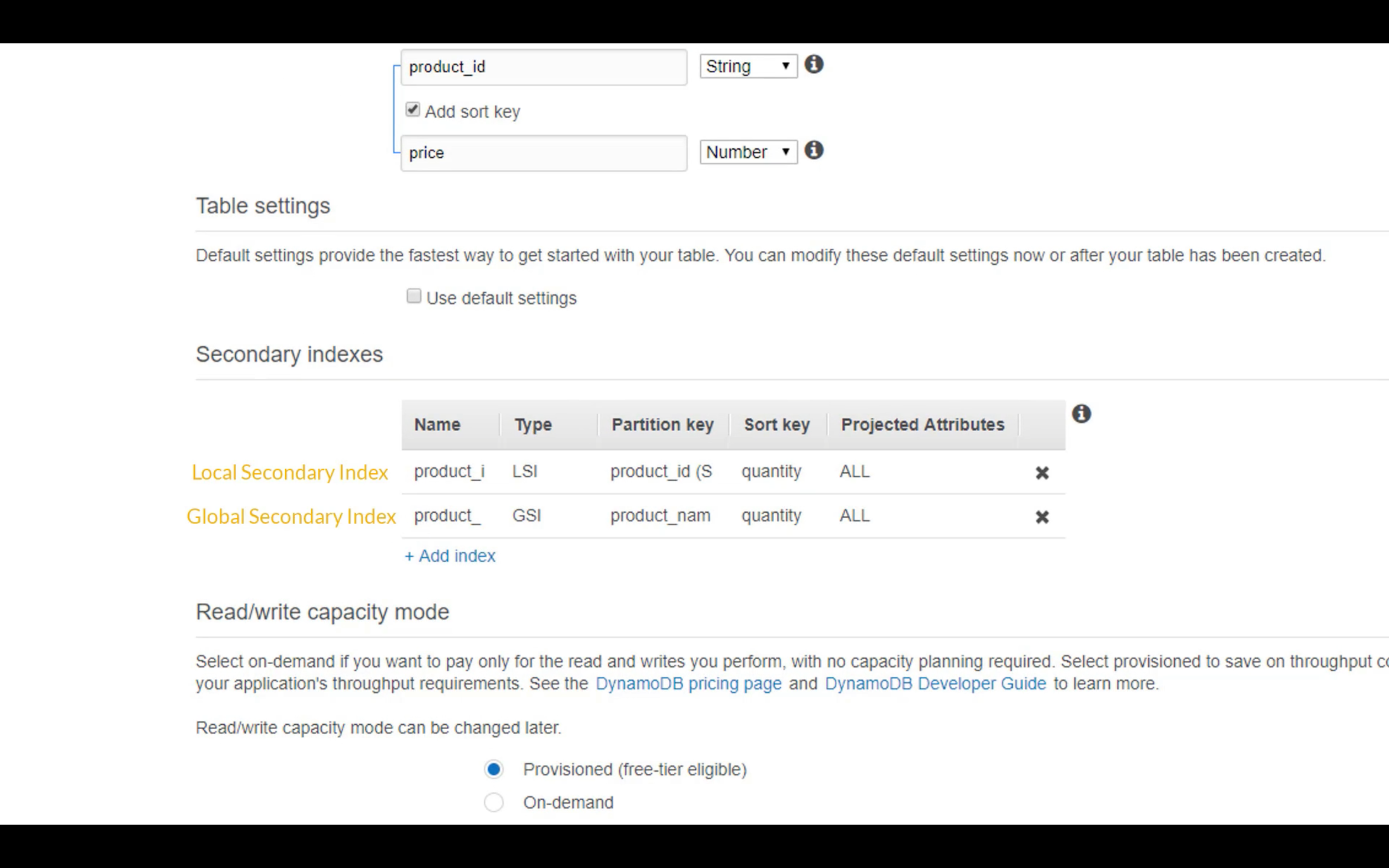

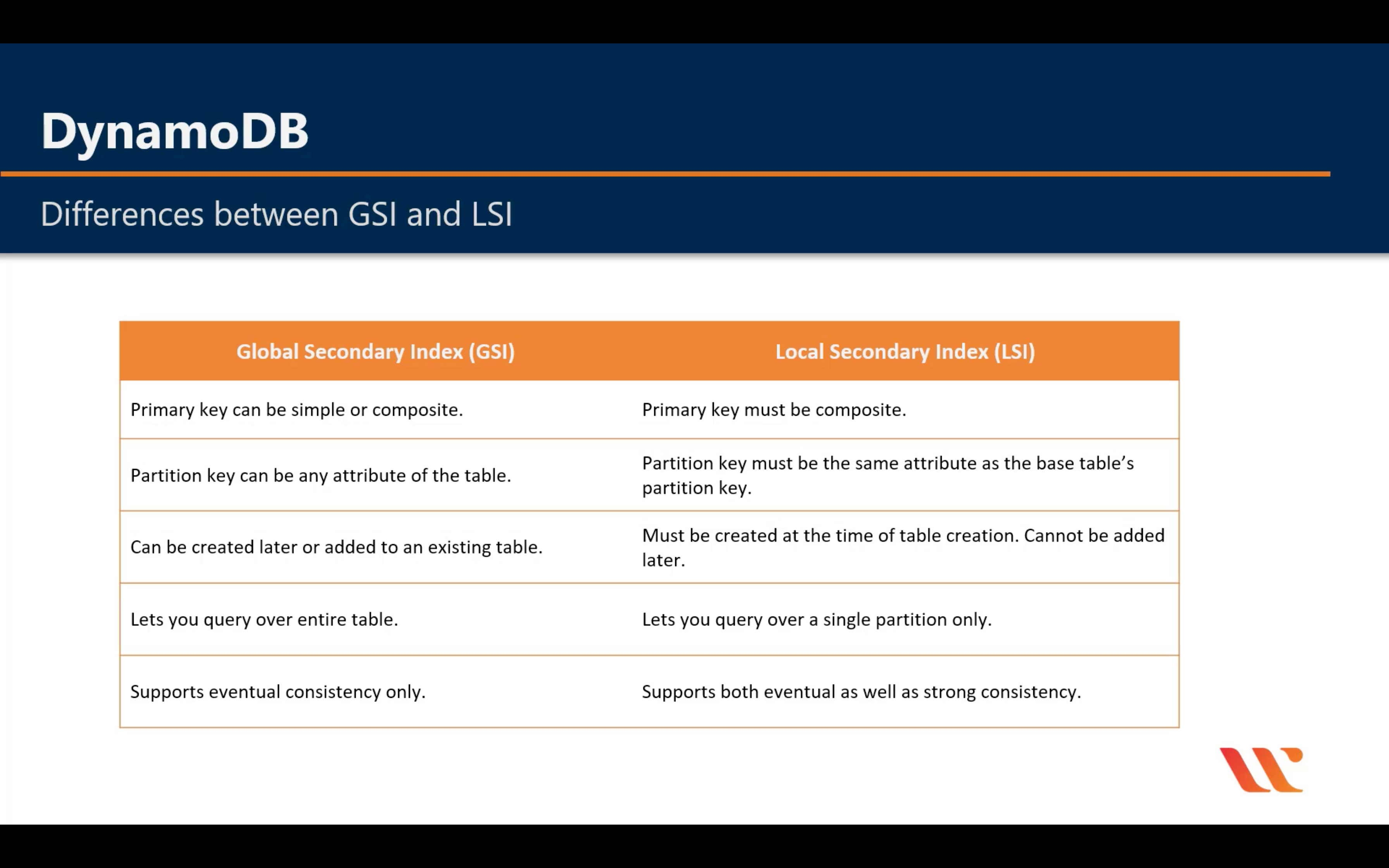

LSI: Must be composite with the partition key

GSI: Could be single or composite

GSI vs LSI

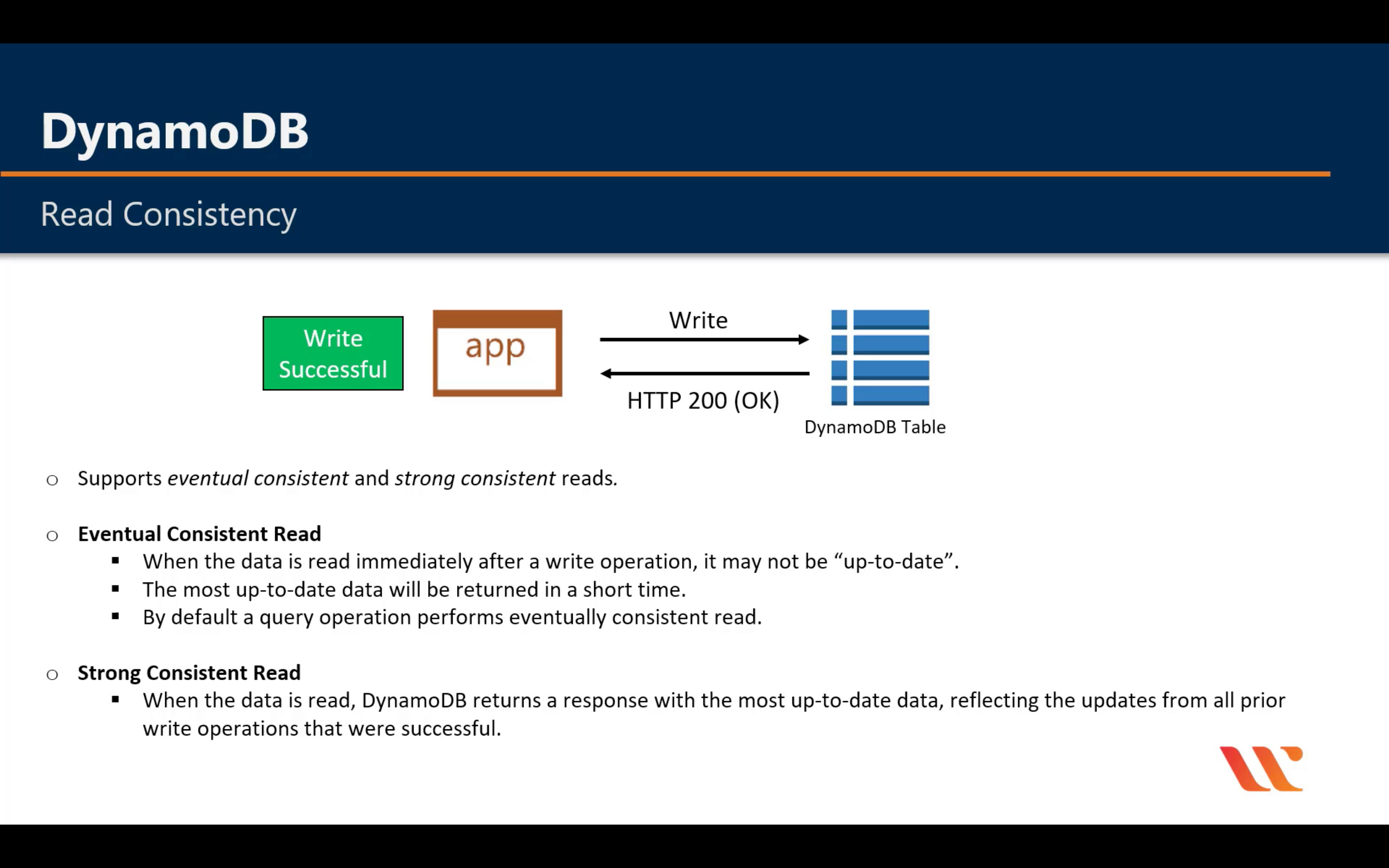

Read Consistency

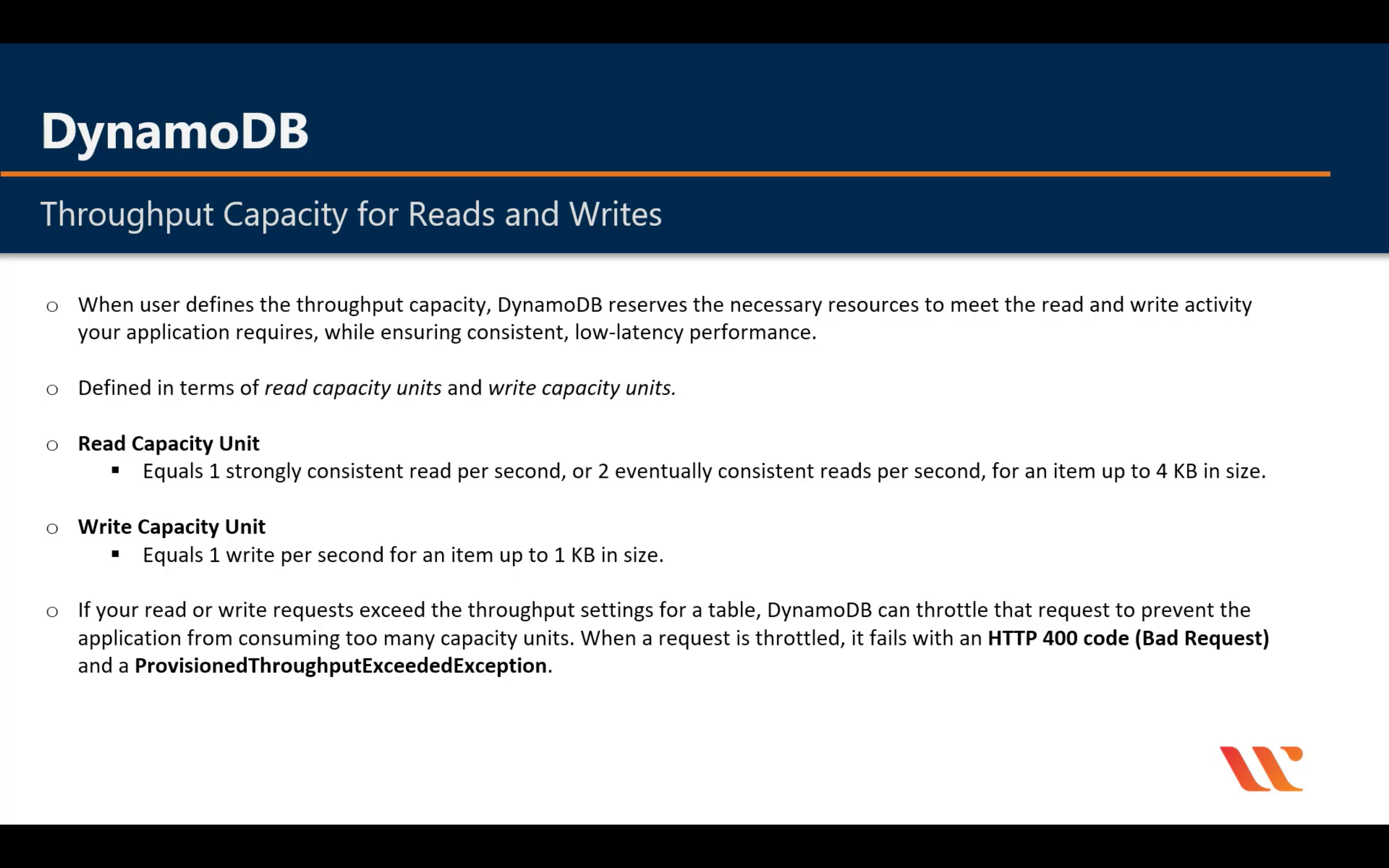

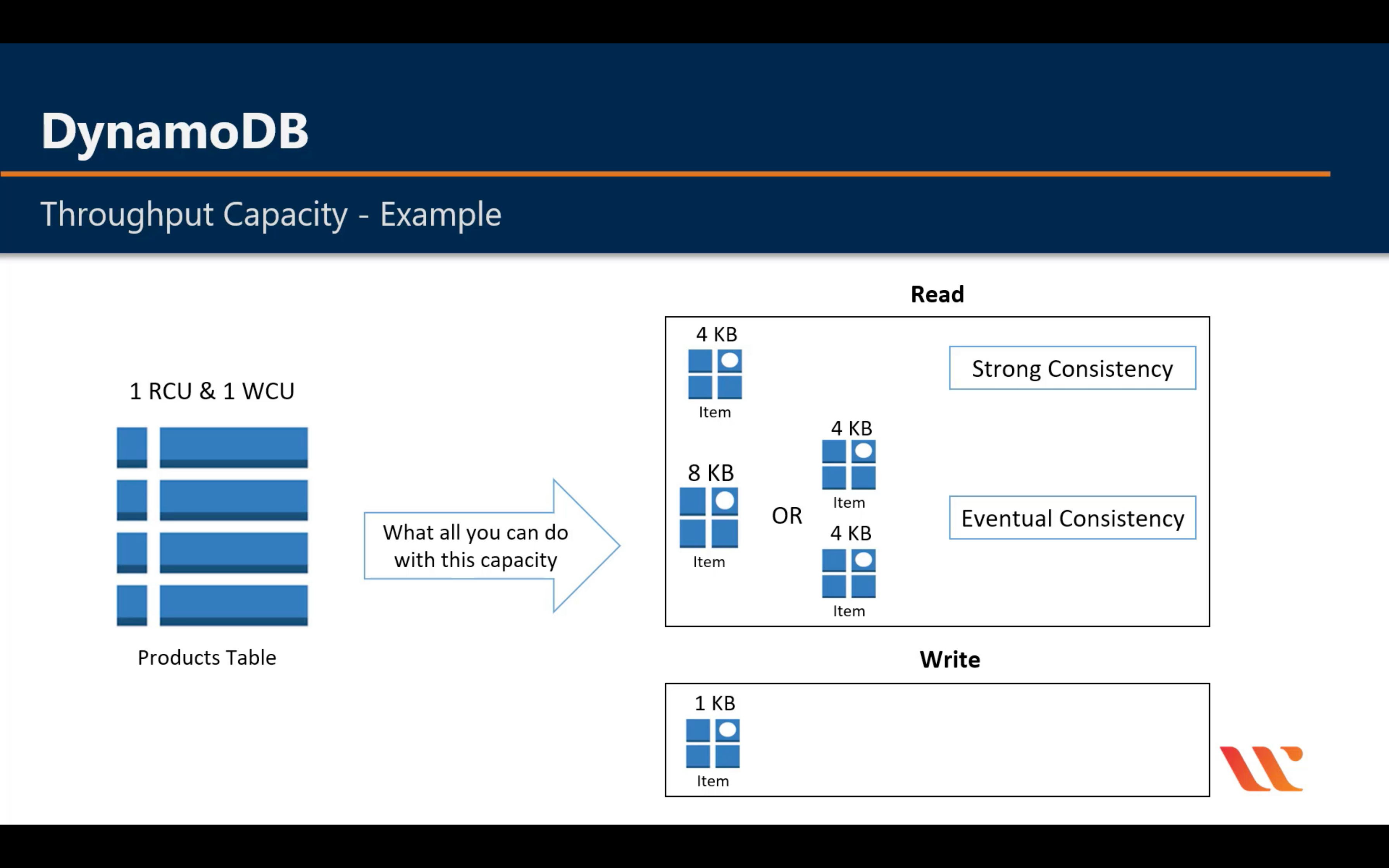

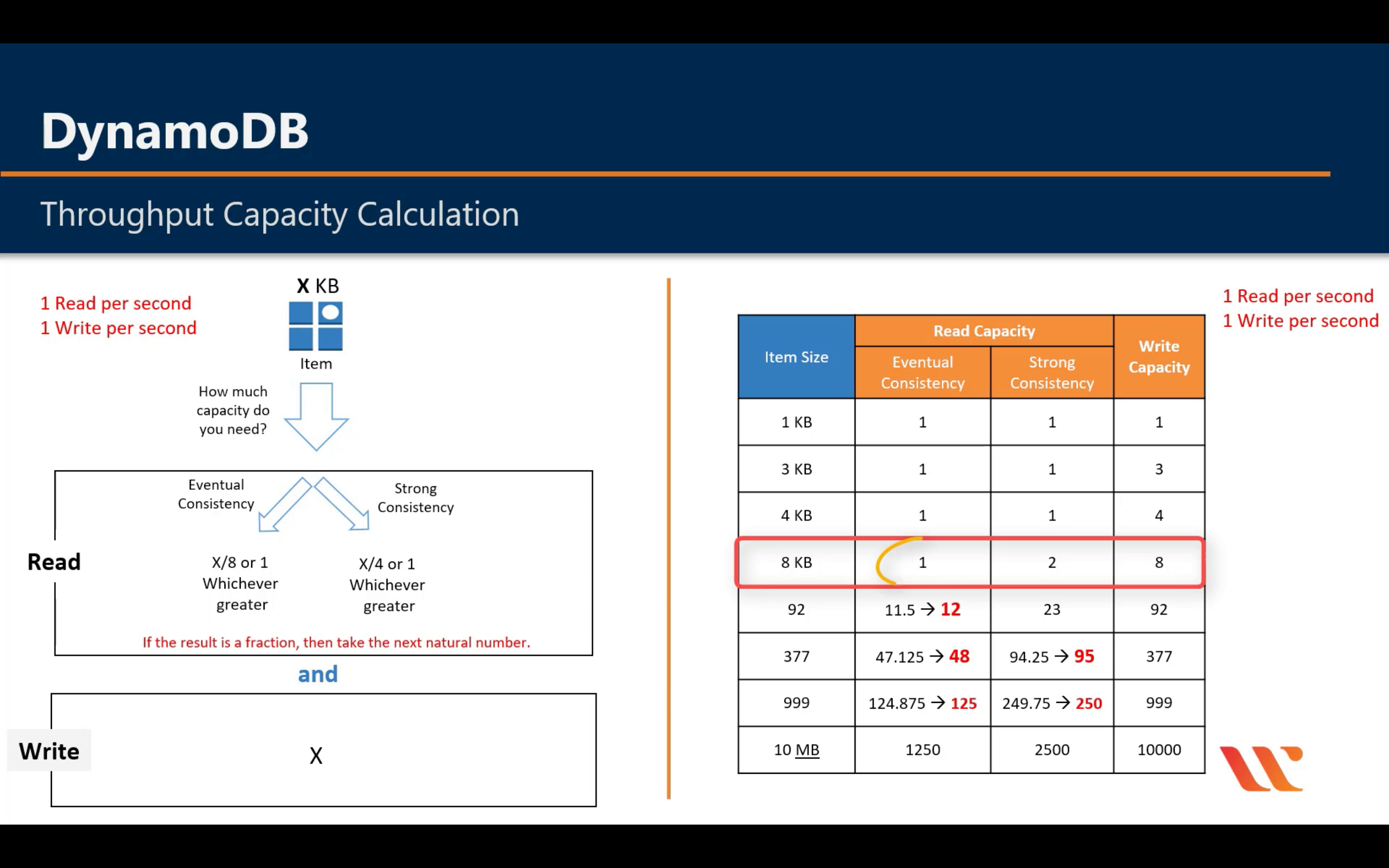

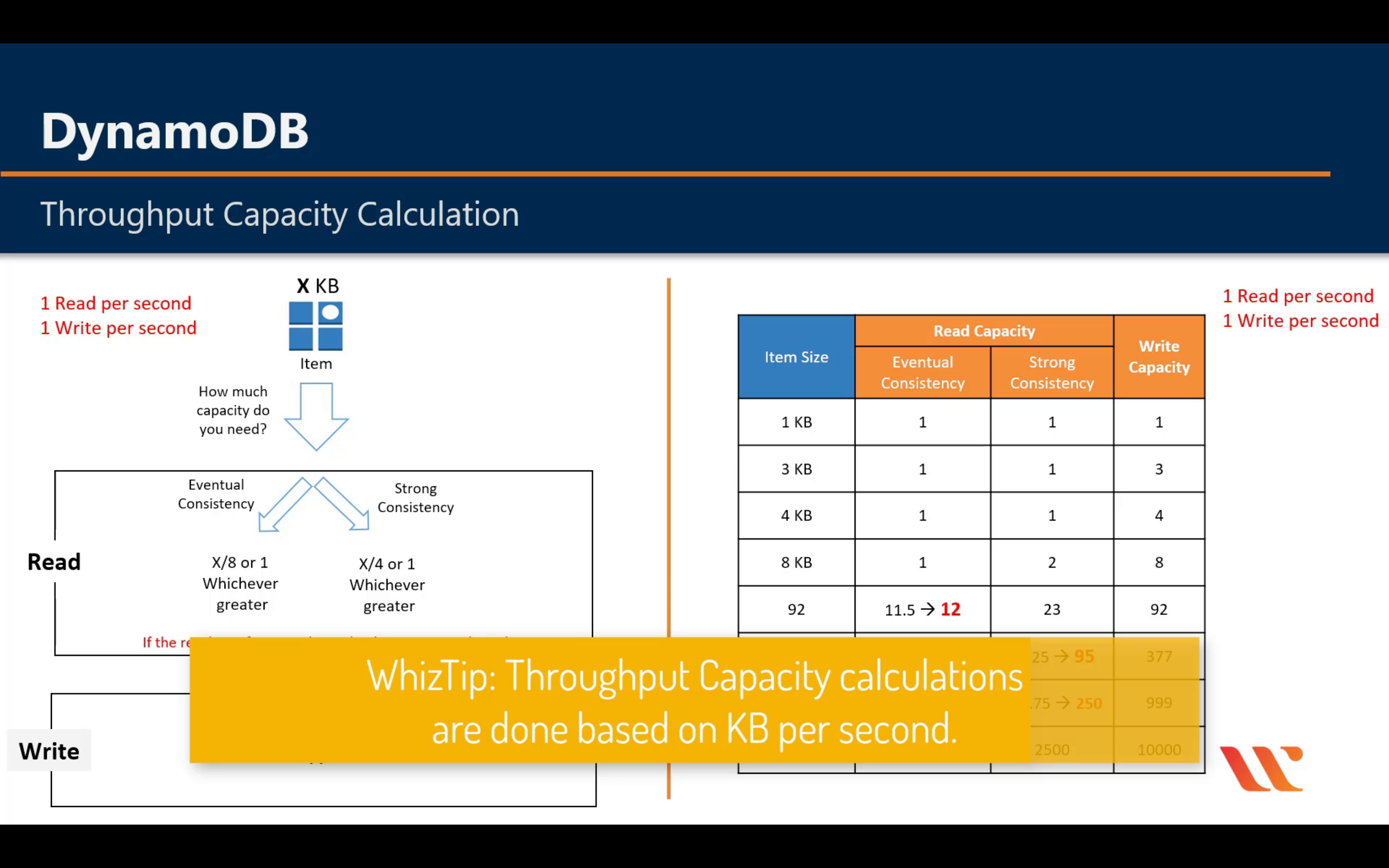

Throughput Capacity

1MB = 1,000 KB

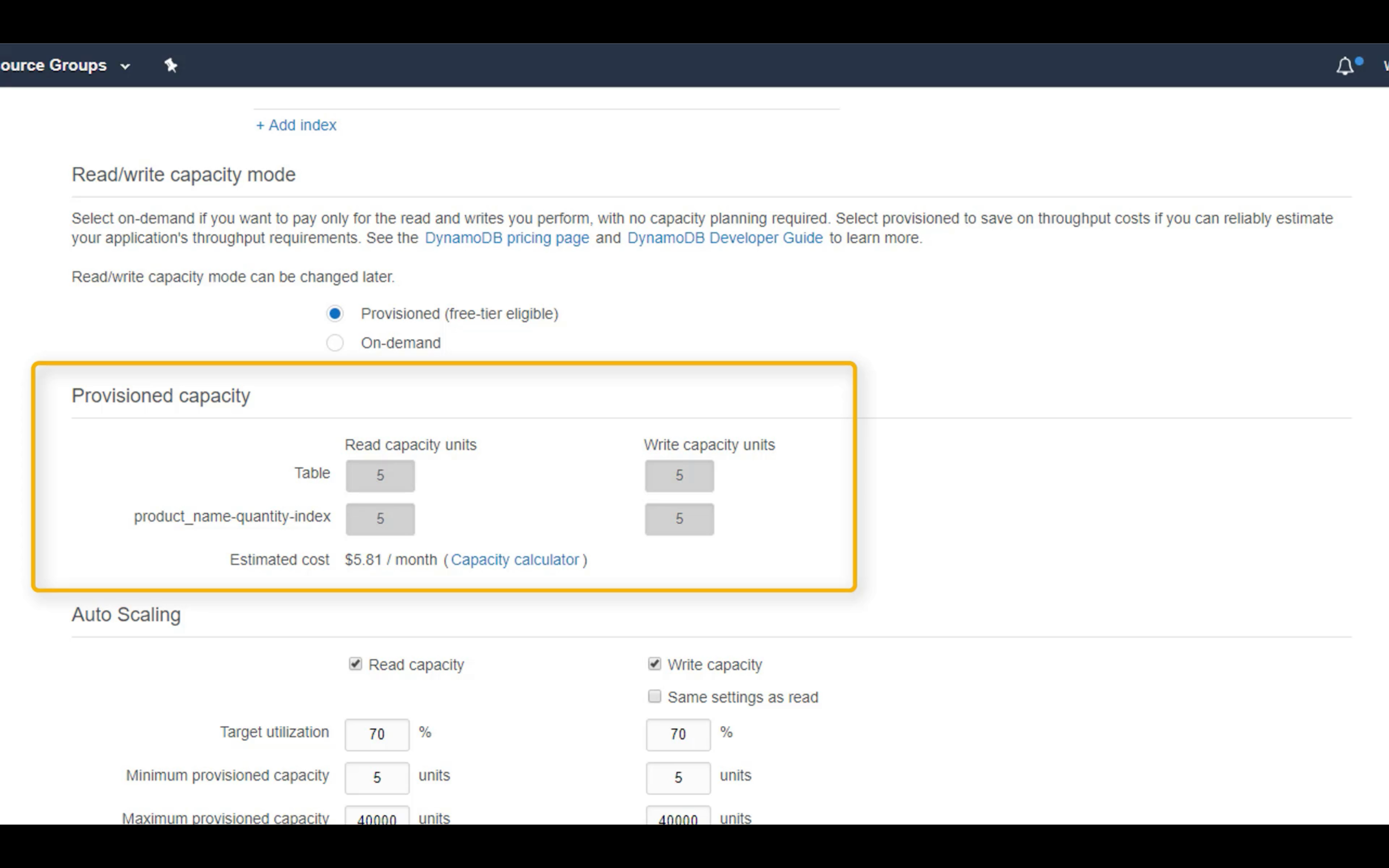

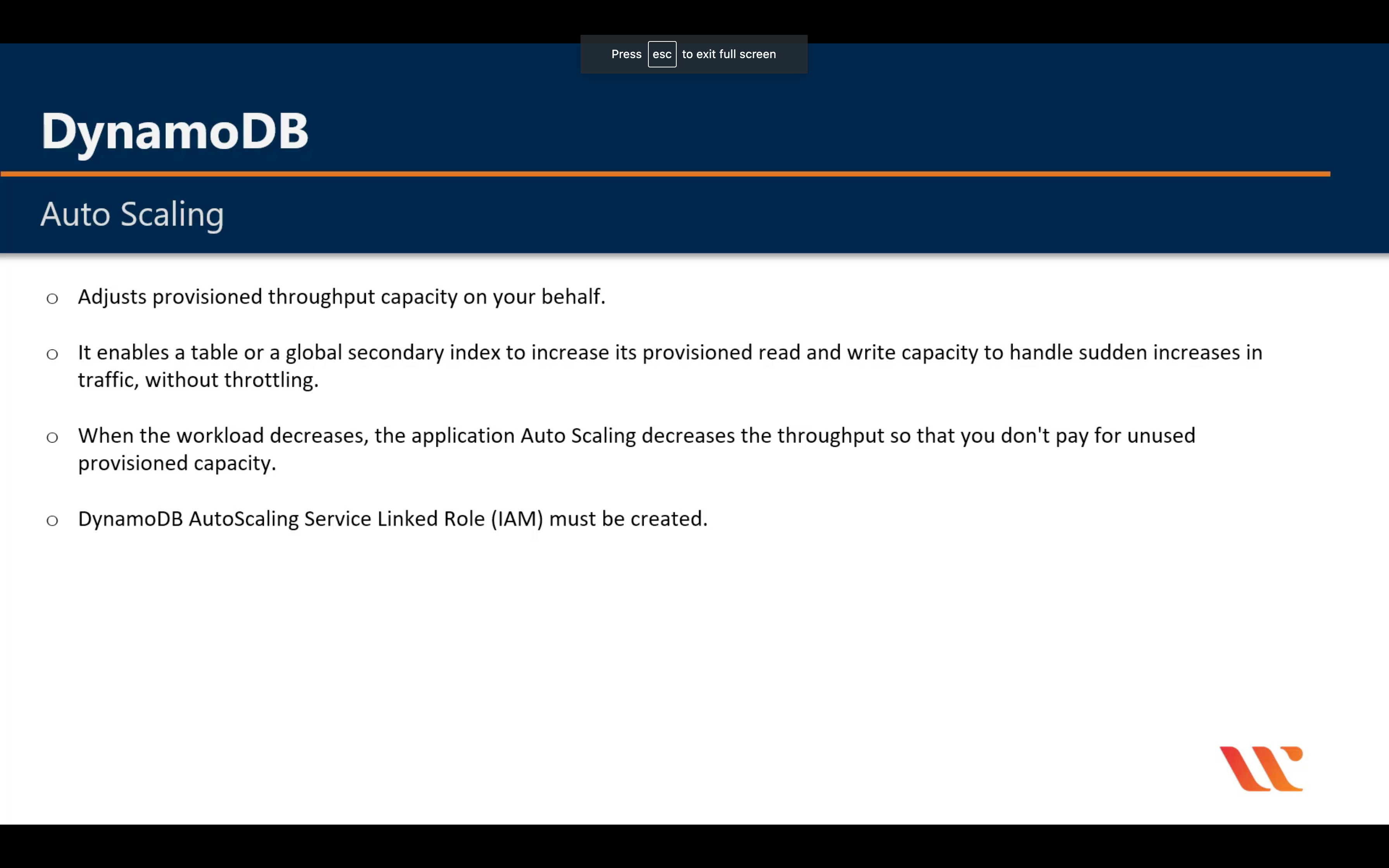

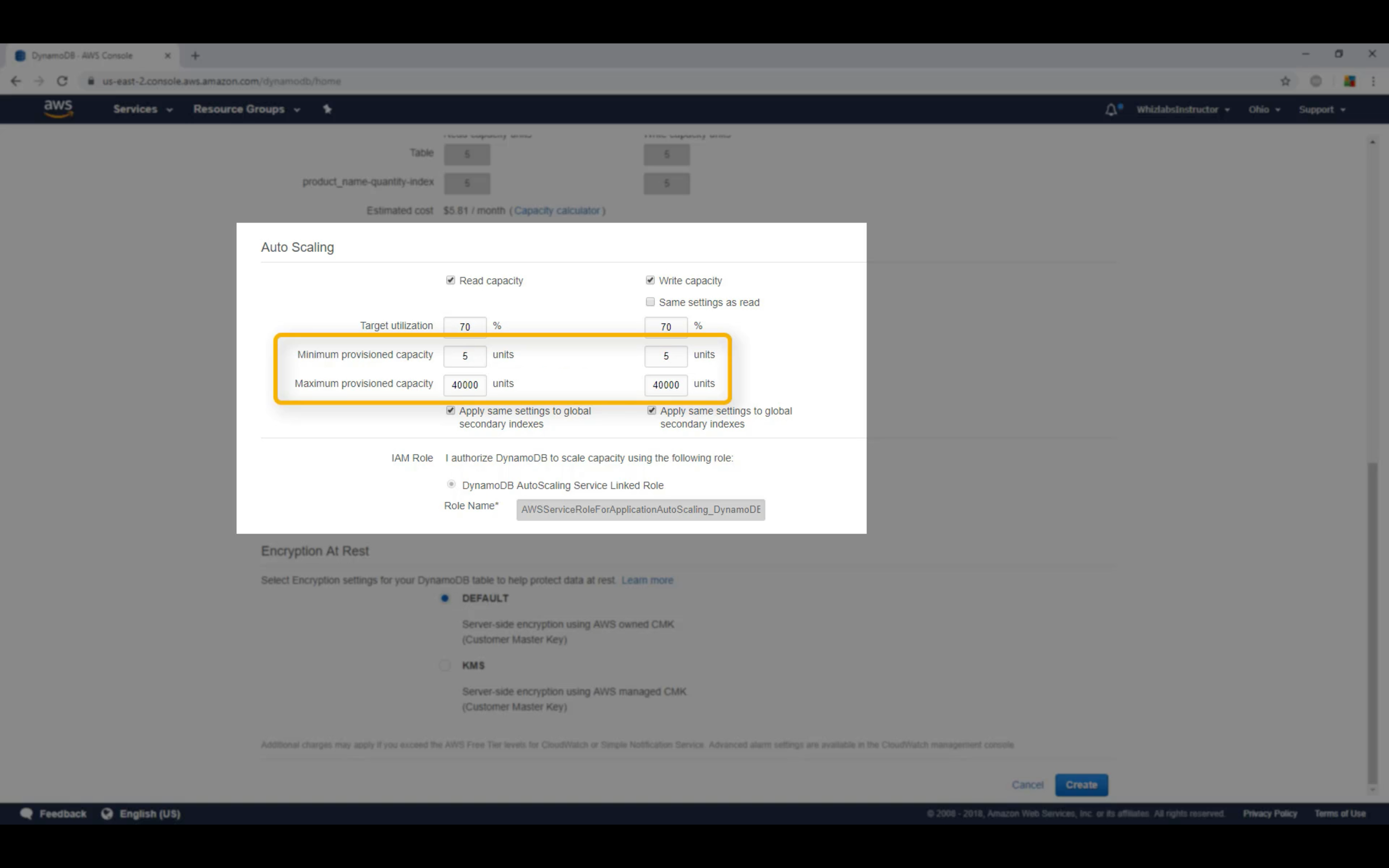

Auto Scaling

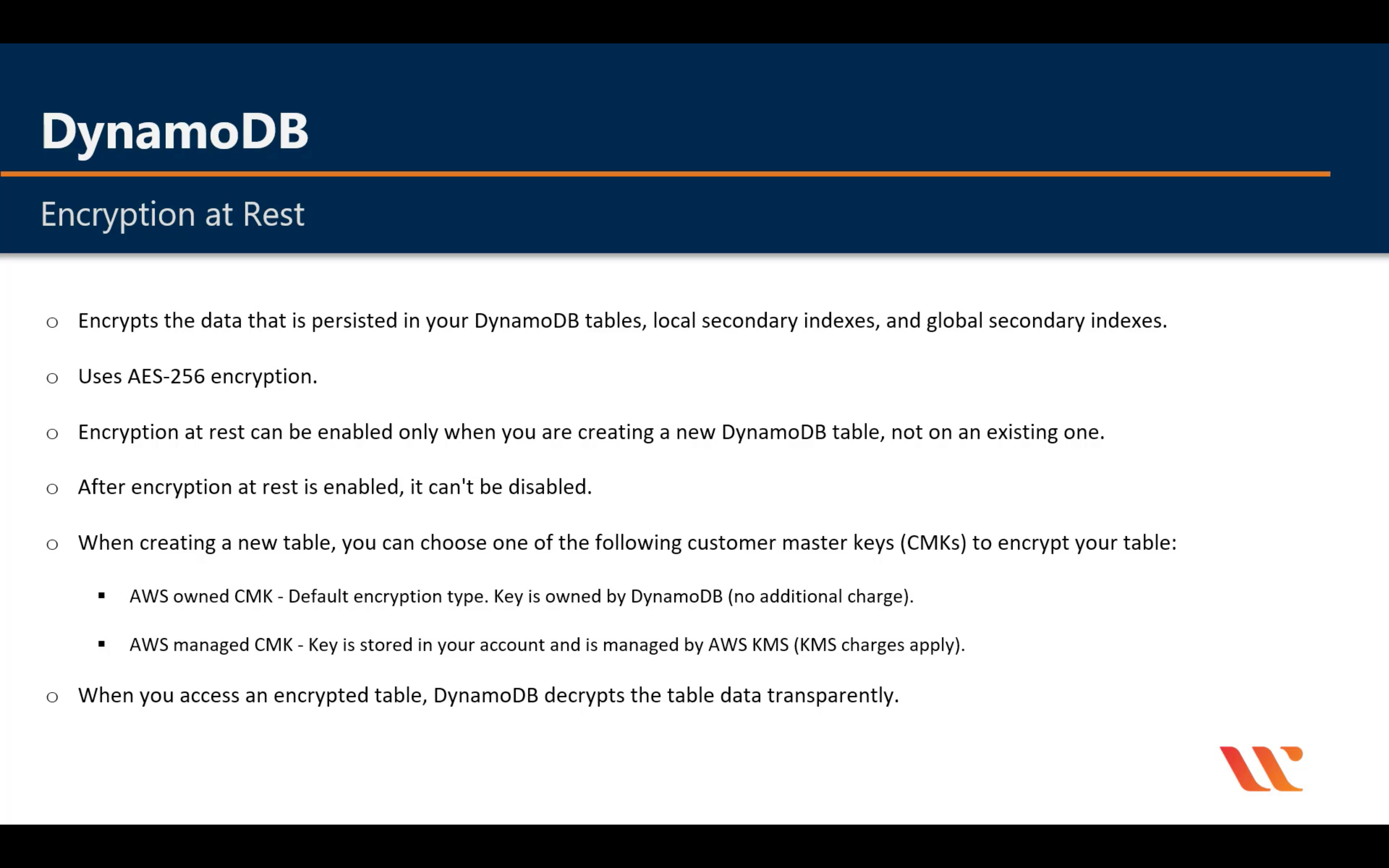

Encryption

Query vs Scan

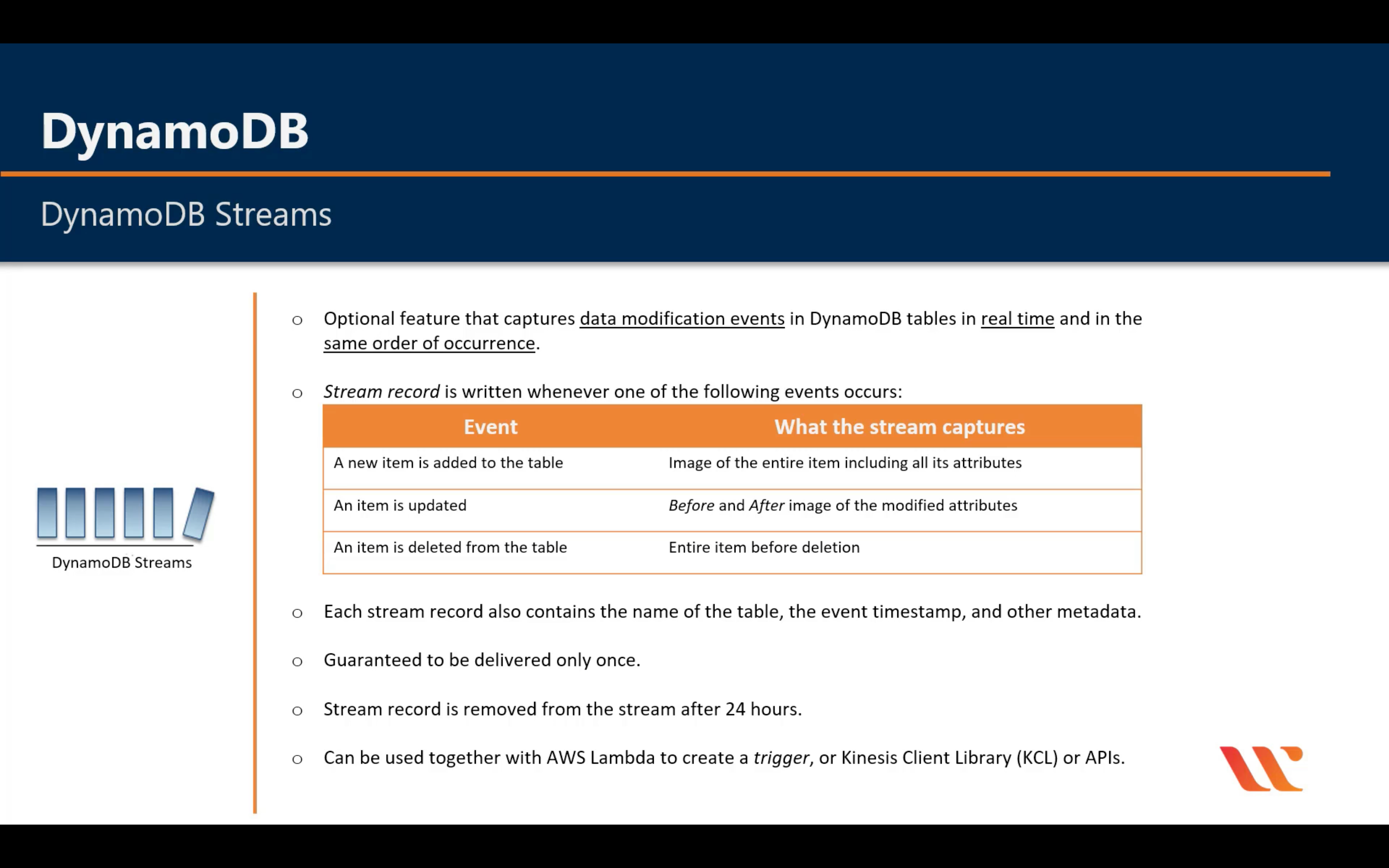

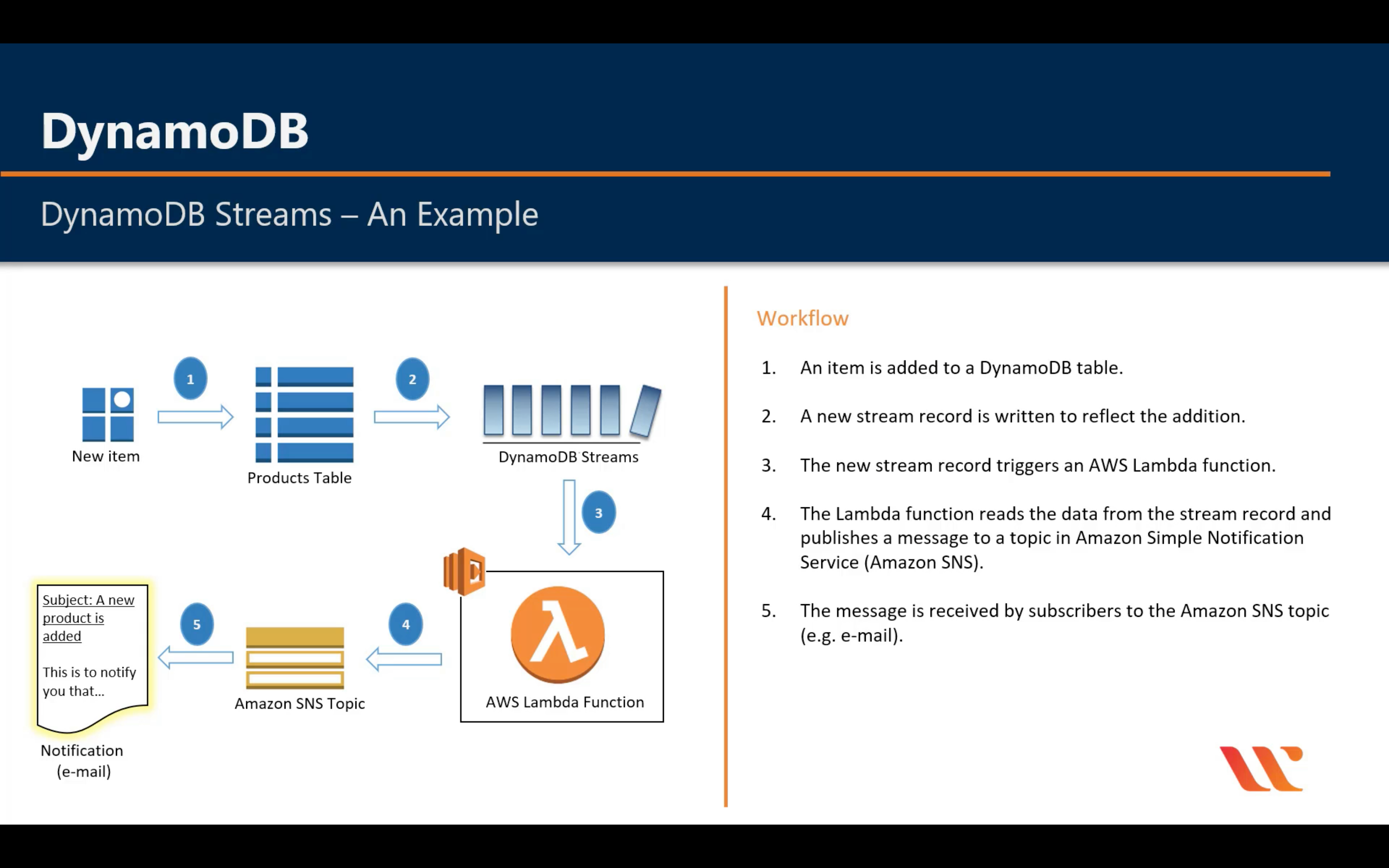

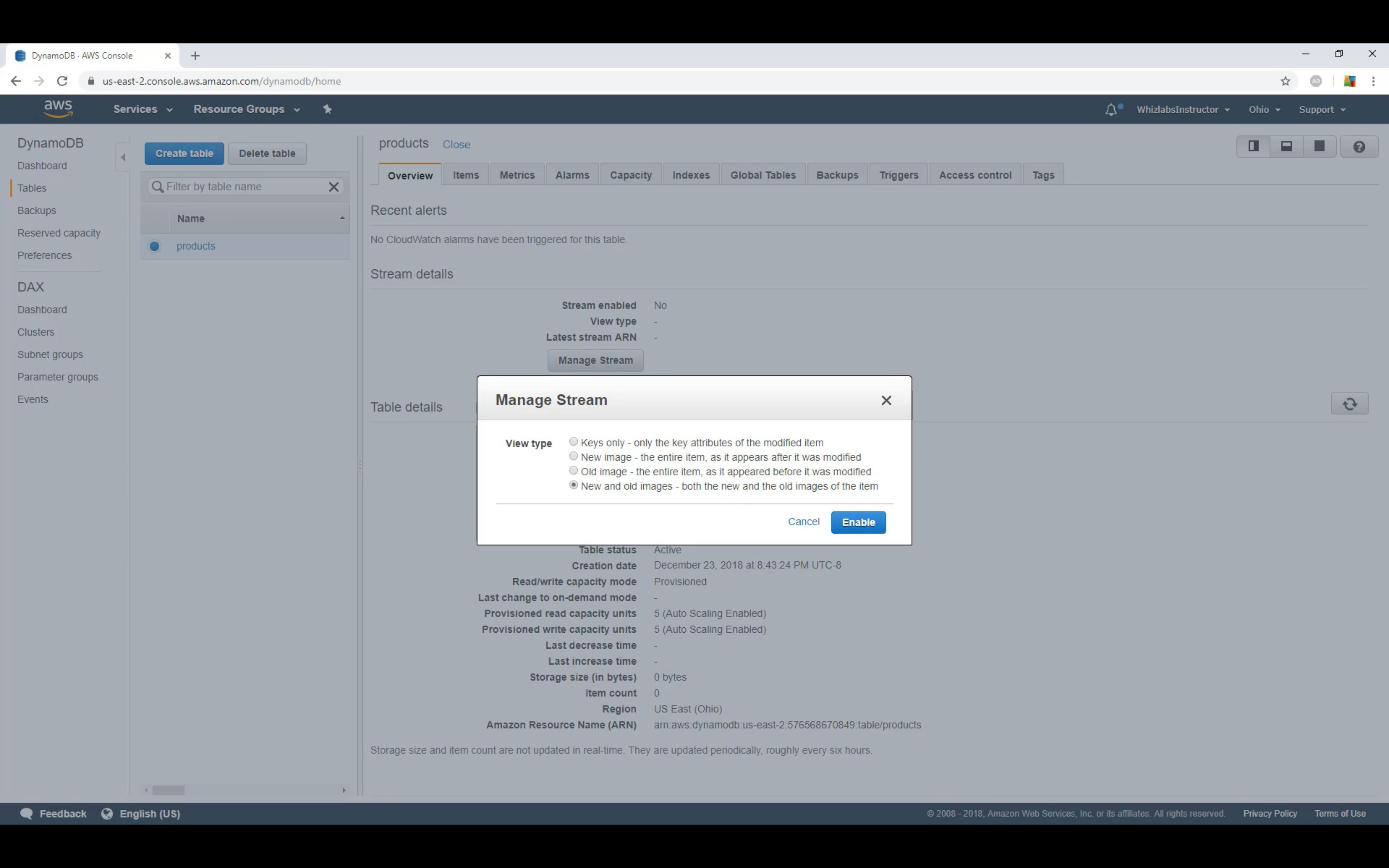

DynamoDB Stream

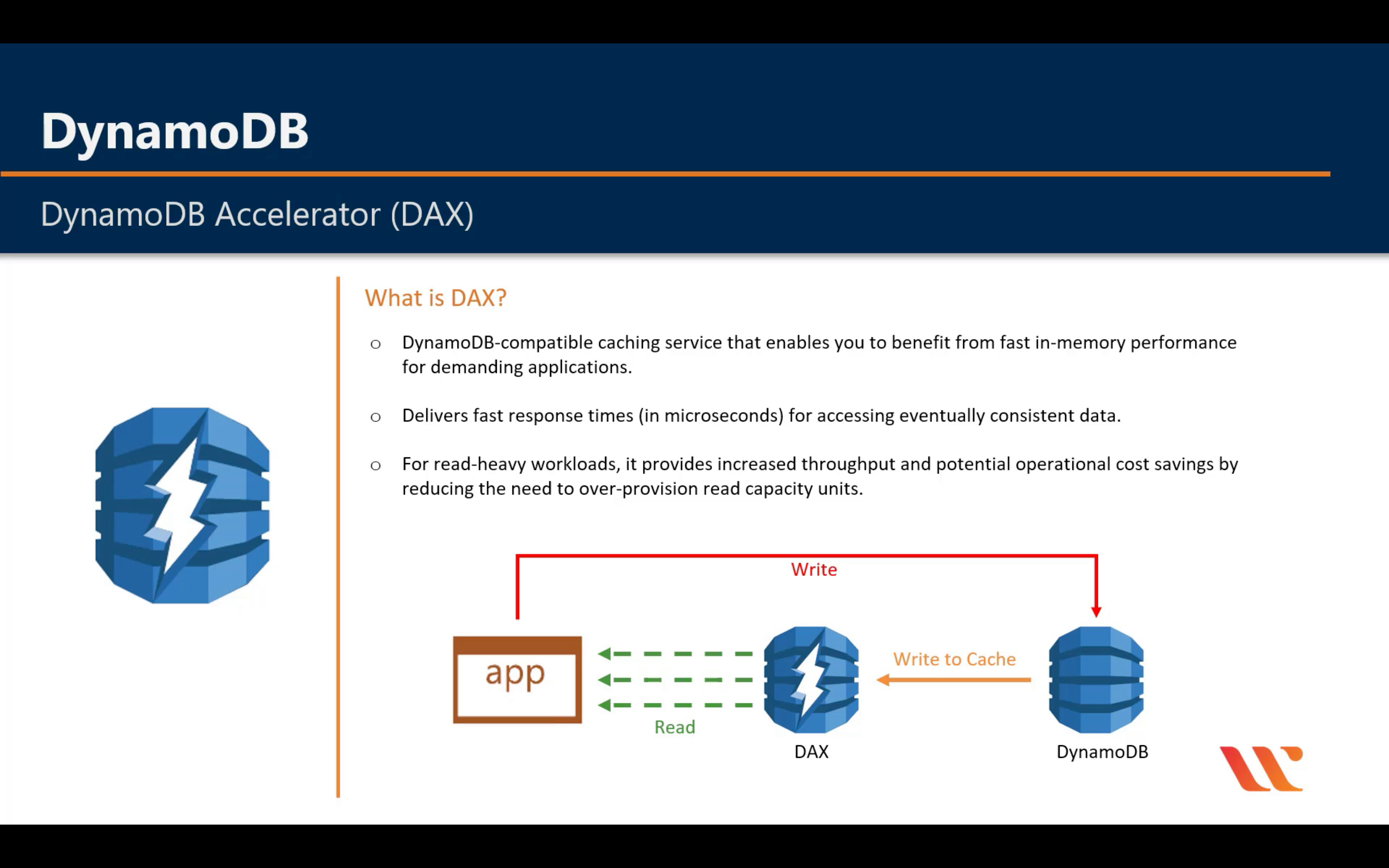

DAX (DynamoDB Accelerator)

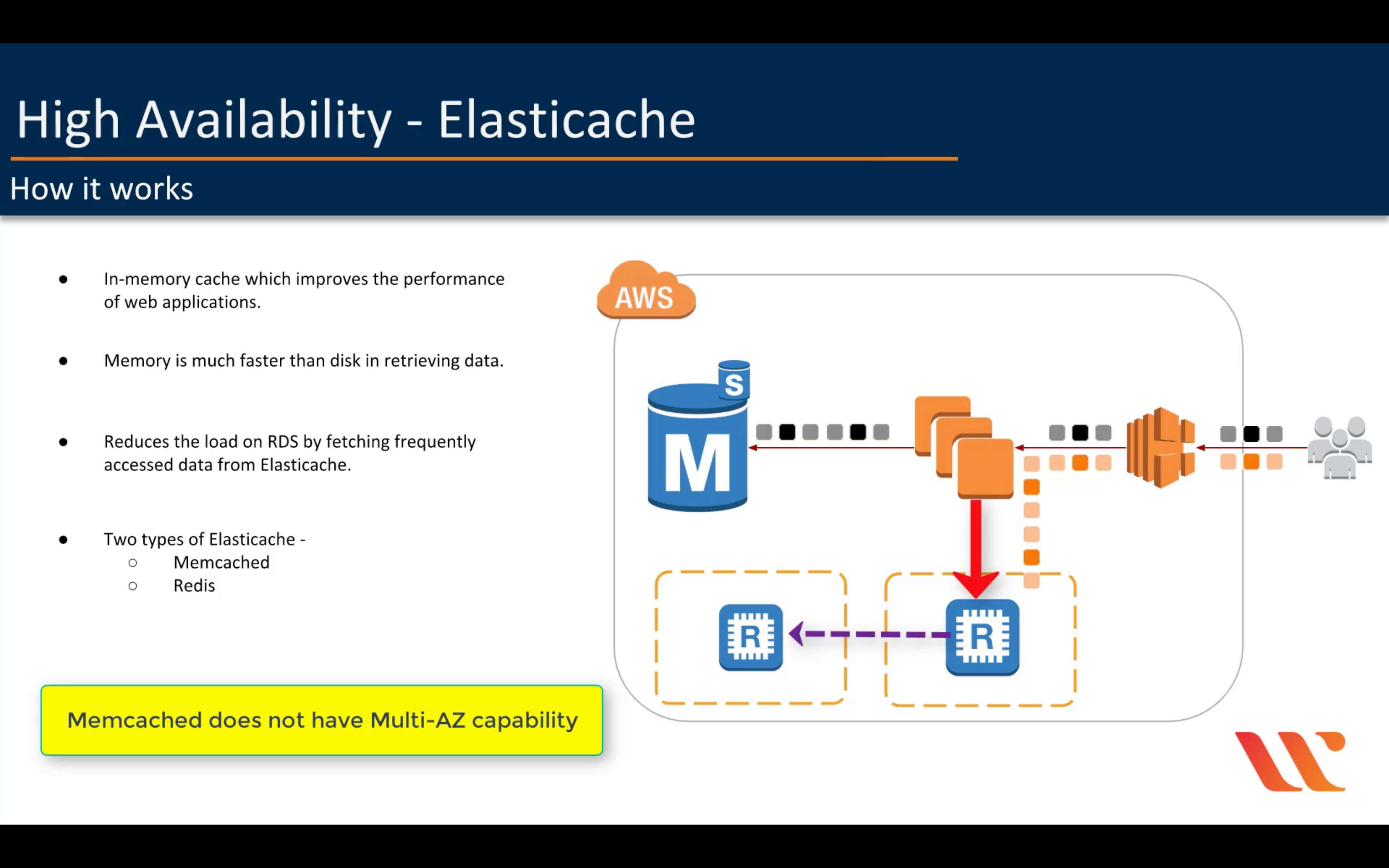

Amazon ElastiCache

In-memory caching service

Amazon Managed Apache Cassandra Service

Managed Cassandra-compatible database

Amazon Neptune

Fully managed graph database service

Amazon Quantum Ledger Database (QLDB)

Fully managed ledger database

Amazon RDS

Managed relational database service for MySQL, PostgreSQL, Oracle, SQL Server, and MariaDB

- Lower administrative burden

- Performance

- Scalability

- Availability and durability

- Security

- Manageability

- Cost-effectiveness

Introduction & Configuration

Automated Backup

Manual Backup & DB Snapshots

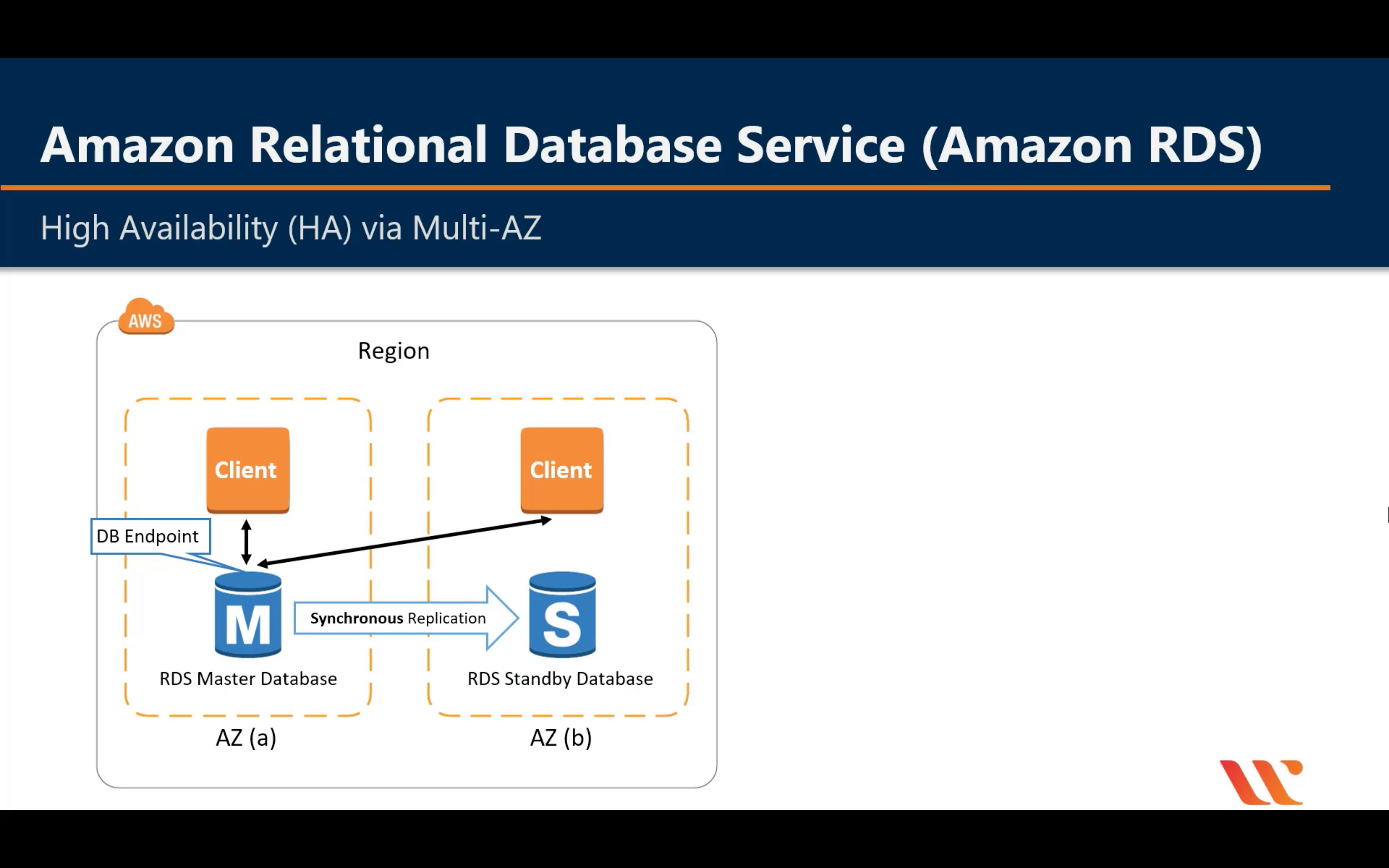

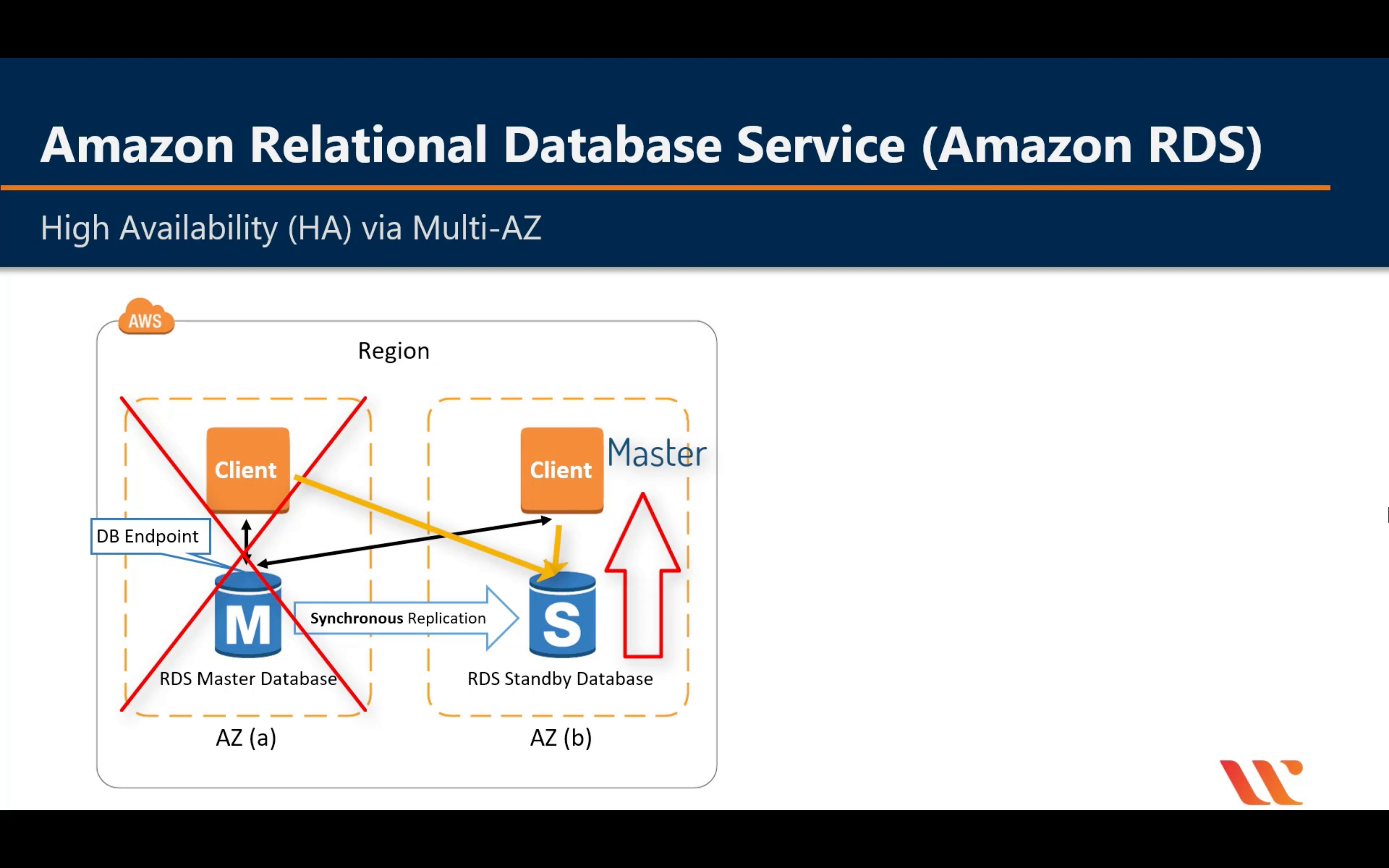

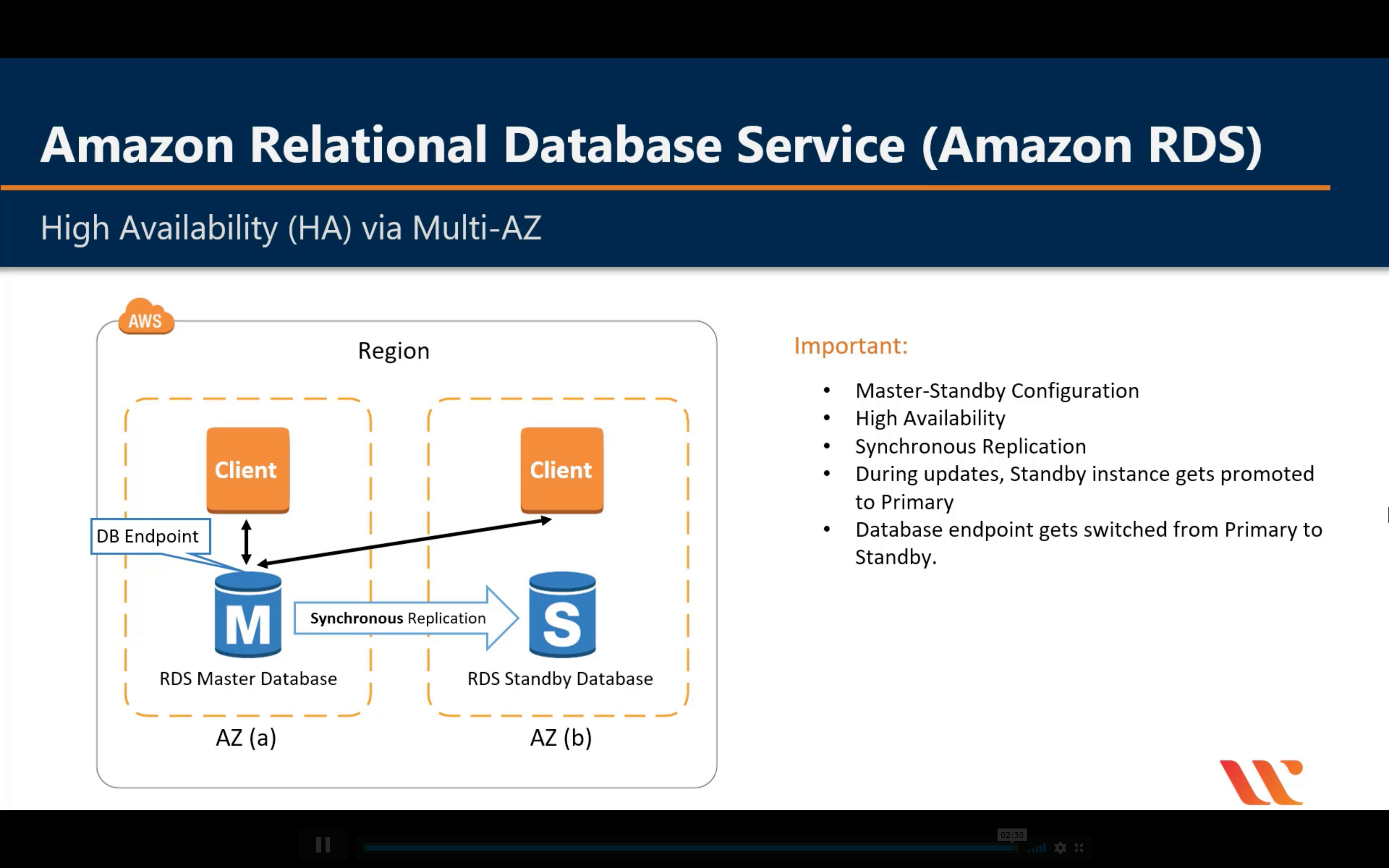

Multi-AZ Deployment

The standby DB does not serve any read or write traffic until the master DB goes down.

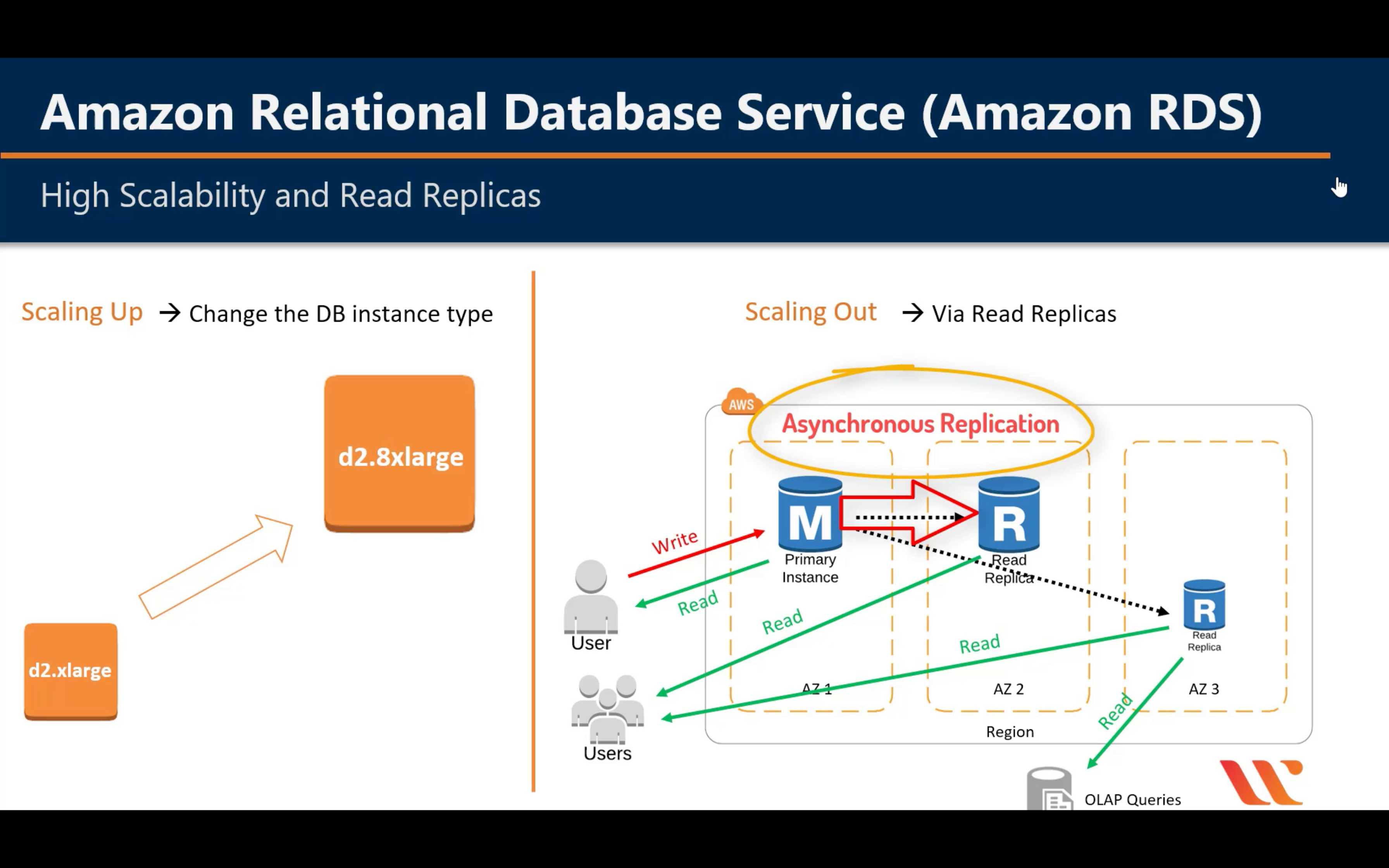

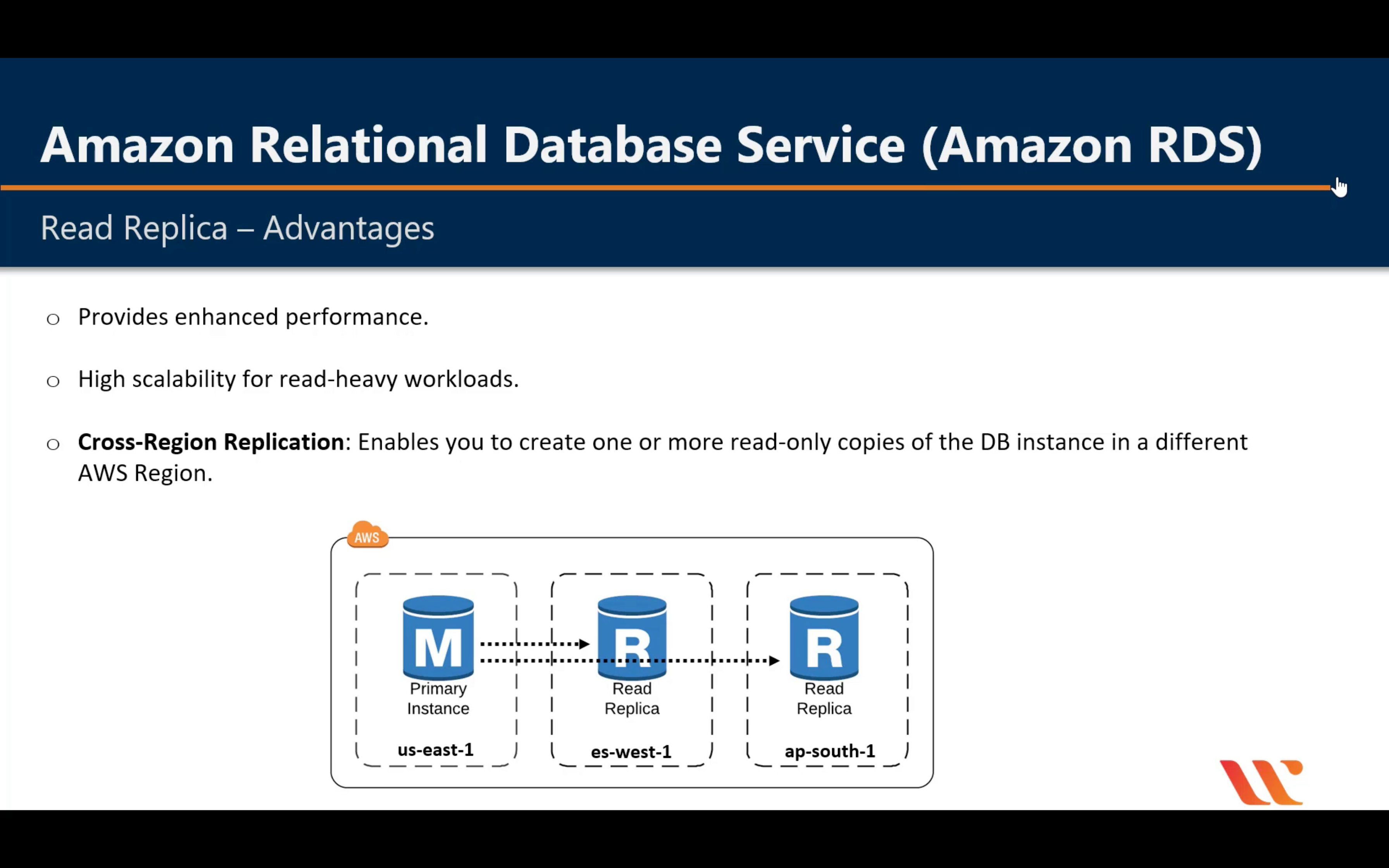

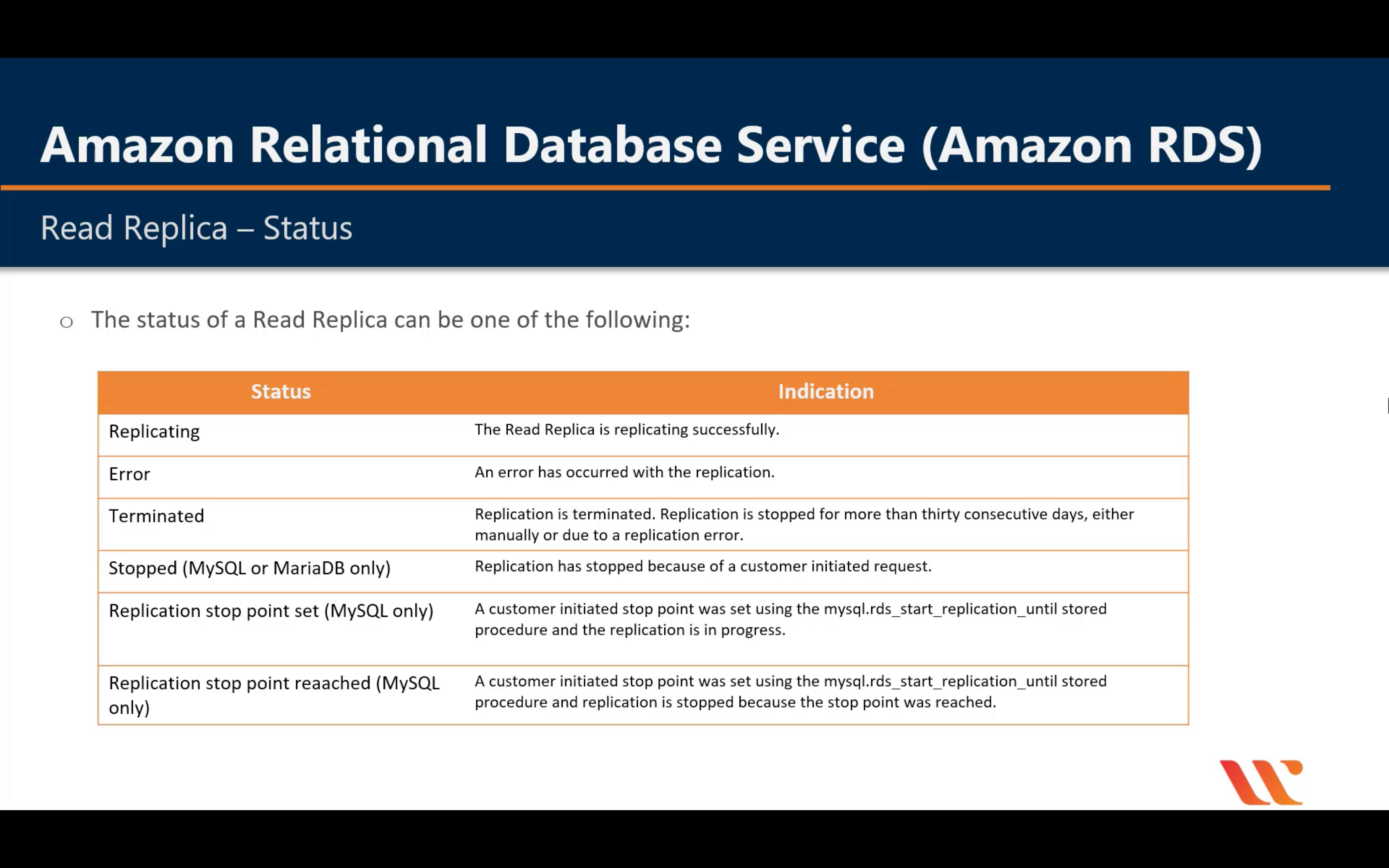

Read Replicas

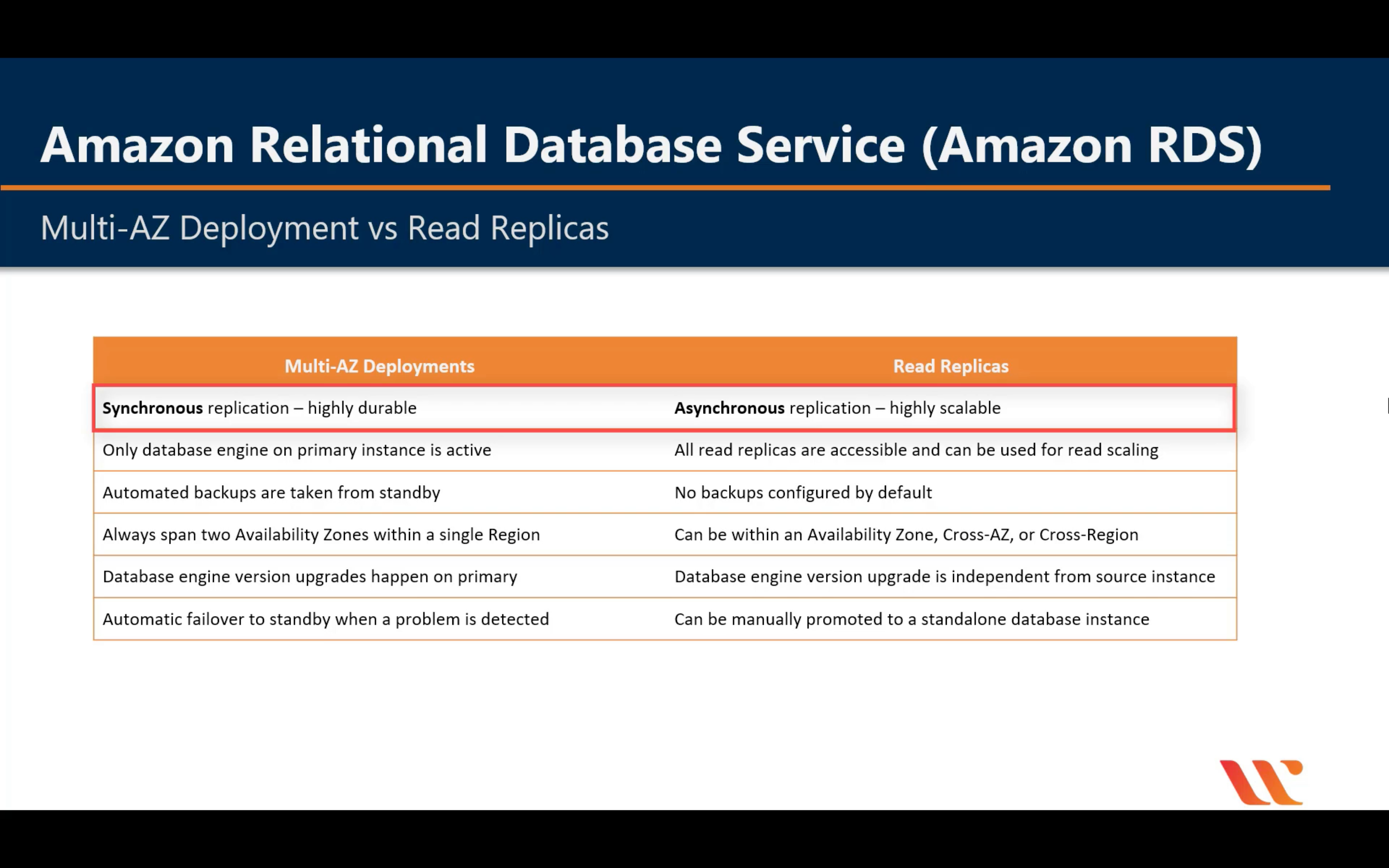

Multi-AZ vs. Read Replica

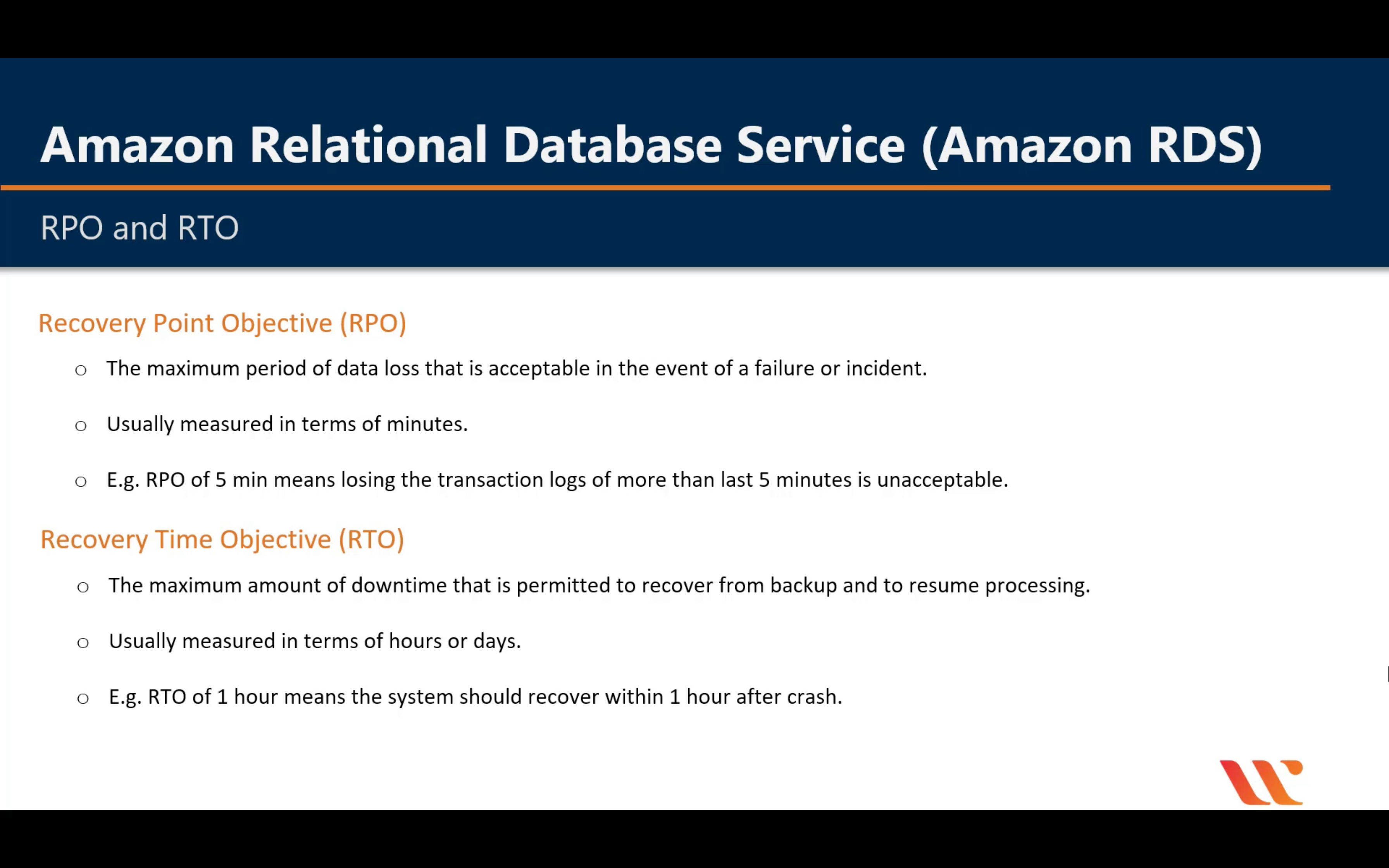

RPO & RTO

Minimal RTO and RPO

Unlike snapshot-based solutions that update target locations at distinct, infrequent intervals, CloudEndure uses Continuous Data Protection, enabling sub-second Recovery Point Objectives (RPOs). Highly automated machine conversion and orchestration enable Recovery Time Objectives (RTOs) of minutes.

Amazon RDS on VMware

Automate on-premises database management

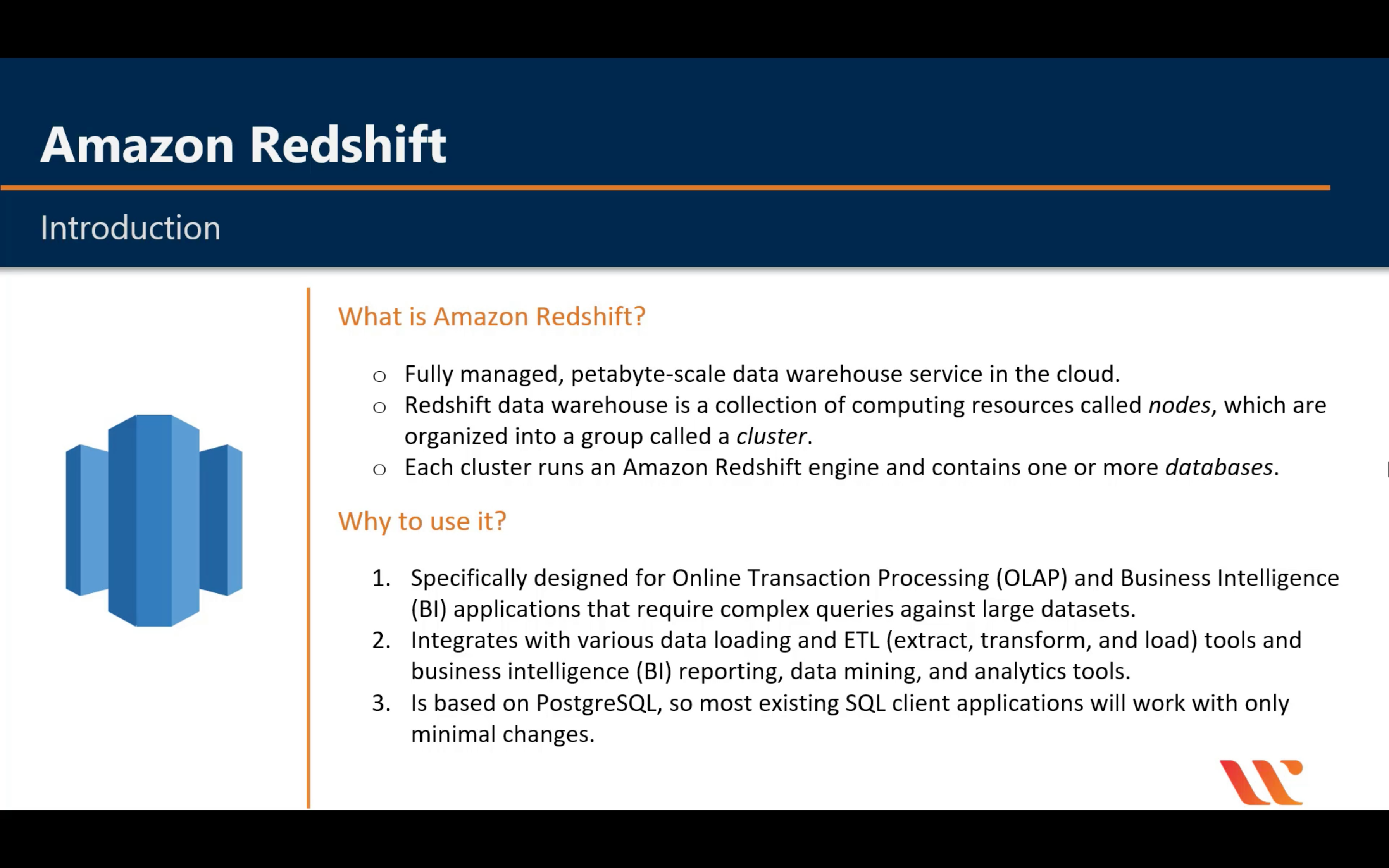

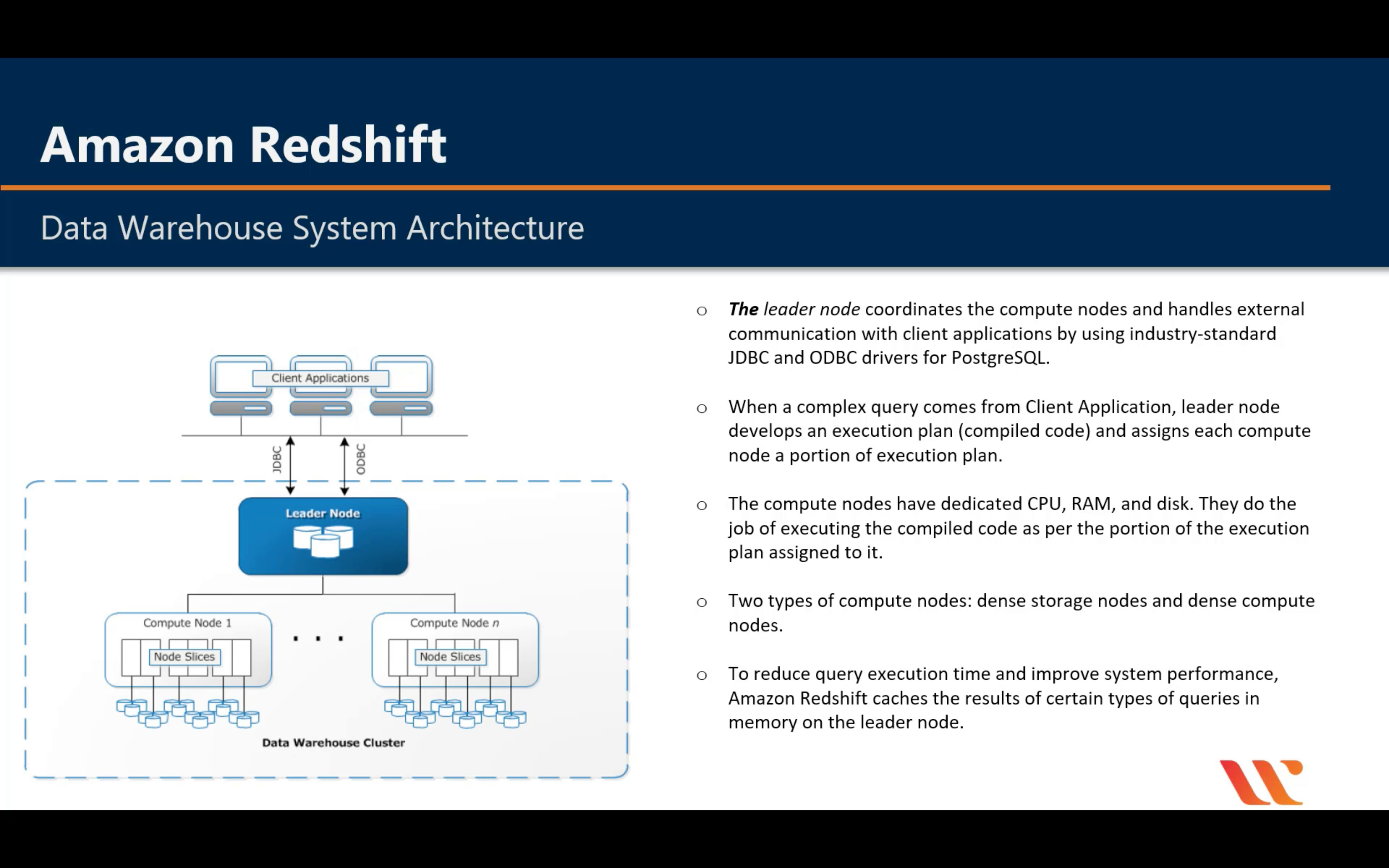

Amazon Redshift

Fast, simple, cost-effective data warehousing

- Deepest integration with your data lake and AWS services

- Query and export data to and from your data lake

- Federated Query (preview)

- AWS analytics ecosystem

- Best performance

Introduction

Amazon Timestream

Fully managed time series database

AWS Database Migration Service

Migrate databases with minimal downtime

- Create a Replication instance to manage the migration process.

- Using public transfer or through VPN.

- Schedule.

- Create source endpoint and target endpoint.

- Create migration task.

Machine Learning

Amazon SageMaker

Build, train, and deploy machine learning models at scale

Amazon Augmented AI

Easily implement human review of ML predictions

Amazon CodeGuru (Preview)

Automate code reviews and identify expensive lines of code

Amazon Comprehend

Discover insights and relationships in text

- Keyphrase Extraction

- Sentiment Analysis

- Syntax Analysis

- Entity Recognition

- Comprehend Medical

- Medical Named Entity and Relationship Extraction (NERe)

- Medical Ontology Linking

- Custom Entities

- Language Detection

- Custom Classification

- Topic Modeling

- Multiple language support

Amazon Elastic Inference

Deep learning inference acceleration

Amazon Forecast

Increase forecast accuracy using machine learning

Amazon Fraud Detector (Preview)

Detect more online fraud faster

Amazon Kendra

Reinvent enterprise search with ML

Amazon Lex

Build voice and text chatbots

Amazon Personalize

Build real-time recommendations into your applications

Amazon Polly

Turn text into life-like speech

- Simple-to-Use API

- Wide Selection of Voices and Languages

- Synchronize Speech for an Enhanced Visual Experience

- Optimize Your Streaming Audio

- Adjust Speaking Style, Speech Rate, Pitch, and Loudness

- Newscaster Speaking Style

- Conversational Speaking Style

- Adjust the Maximum Duration of Speech

- Platform and Programming Language Support

- Speech Synthesis via API, Console, or Command Line

- Custom Lexicons

Amazon Rekognition

Analyze image and video

- Video

- REAL-TIME ANALYSIS OF STREAMING VIDEO

- PERSON IDENTIFICATION AND PATHING

- FACE RECOGNITION

- FACIAL ANALYSIS

- OBJECTS, SCENES AND ACTIVITIES DETECTION

- INAPPROPRIATE VIDEO DETECTION

- CELEBRITY RECOGNITION

- ADMINISTRATION VIA API, CONSOLE, OR COMMAND LINE

- ADMINISTRATIVE SECURITY

- Image

- OBJECT AND SCENE DETECTION

- FACIAL RECOGNITION

- FACIAL ANALYSIS

- FACE COMPARISON

- UNSAFE IMAGE DETECTION

- CELEBRITY RECOGNITION

- TEXT IN IMAGE

- ADMINISTRATION VIA API, CONSOLE, OR COMMAND LINE

- ADMINISTRATIVE SECURITY

- Label

- ACCURATELY MEASURE BRAND COVERAGE

- DISCOVER CONTENT FOR SYNDICATION

- IMPROVE OPERATIONAL EFFICIENCY

- SIMPLIFY DATA LABELING

- AUTOMATED MACHINE LEARNING

- SIMPLIFIED MODEL EVALUATION, INFERENCE AND FEEDBACK

Amazon SageMaker Ground Truth

Build accurate ML training datasets

Amazon Textract

Extract text and data from documents

Amazon Translate

Natural and fluent language translation

Amazon Transcribe

Automatic speech recognition

AWS Deep Learning AMIs

Deep learning on Amazon EC2

AWS Deep Learning Containers

Docker images for deep learning

AWS DeepComposer

ML enabled musical keyboard

AWS DeepLens

Deep learning enabled video camera

AWS DeepRacer

Autonomous 1/18th scale race car, driven by ML

AWS Inferentia

Machine learning inference chip

Apache MXNet on AWS

Scalable, open-source deep learning framework

TensorFlow on AWS

Open-source machine intelligence library

Management & Governance

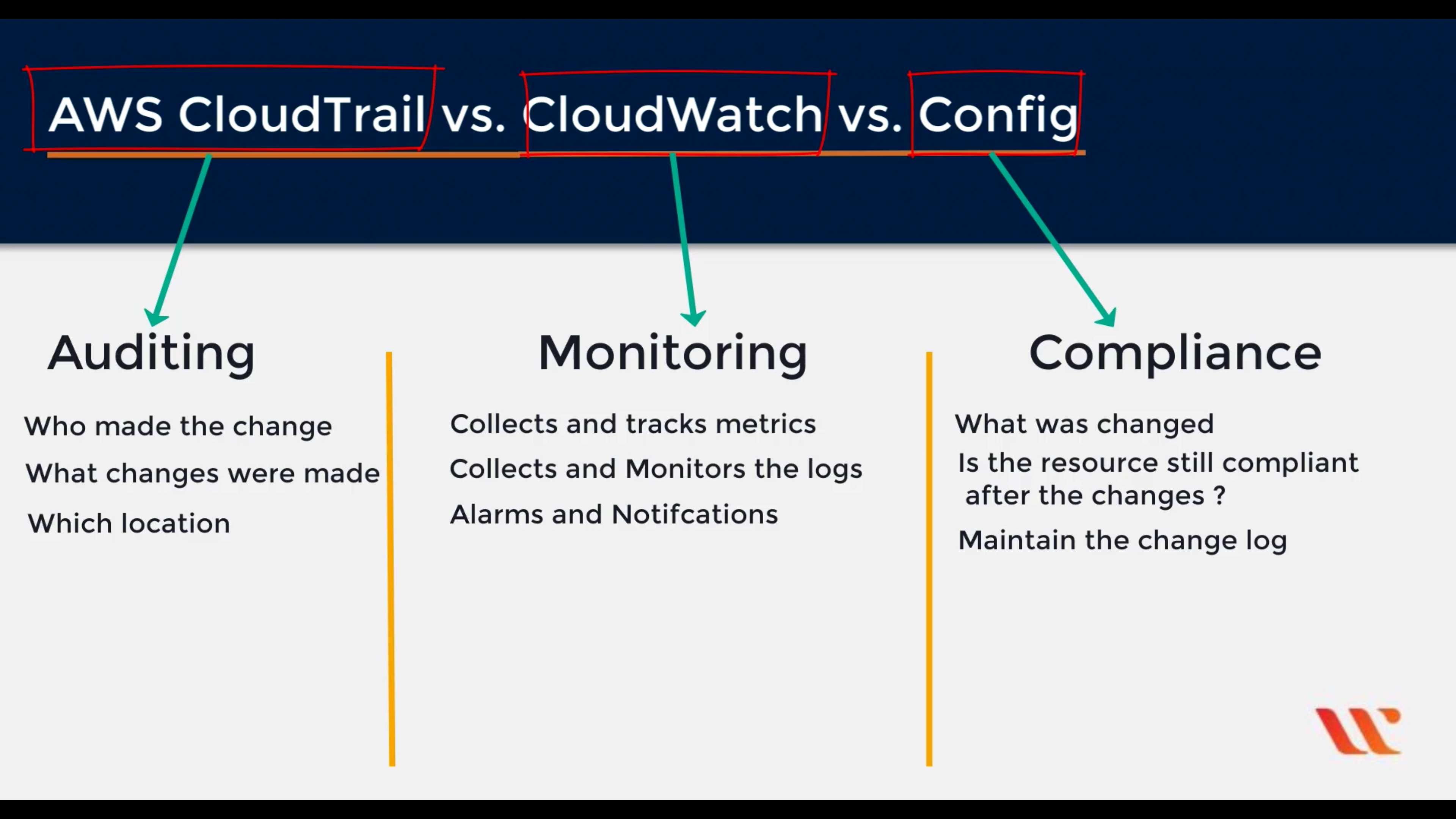

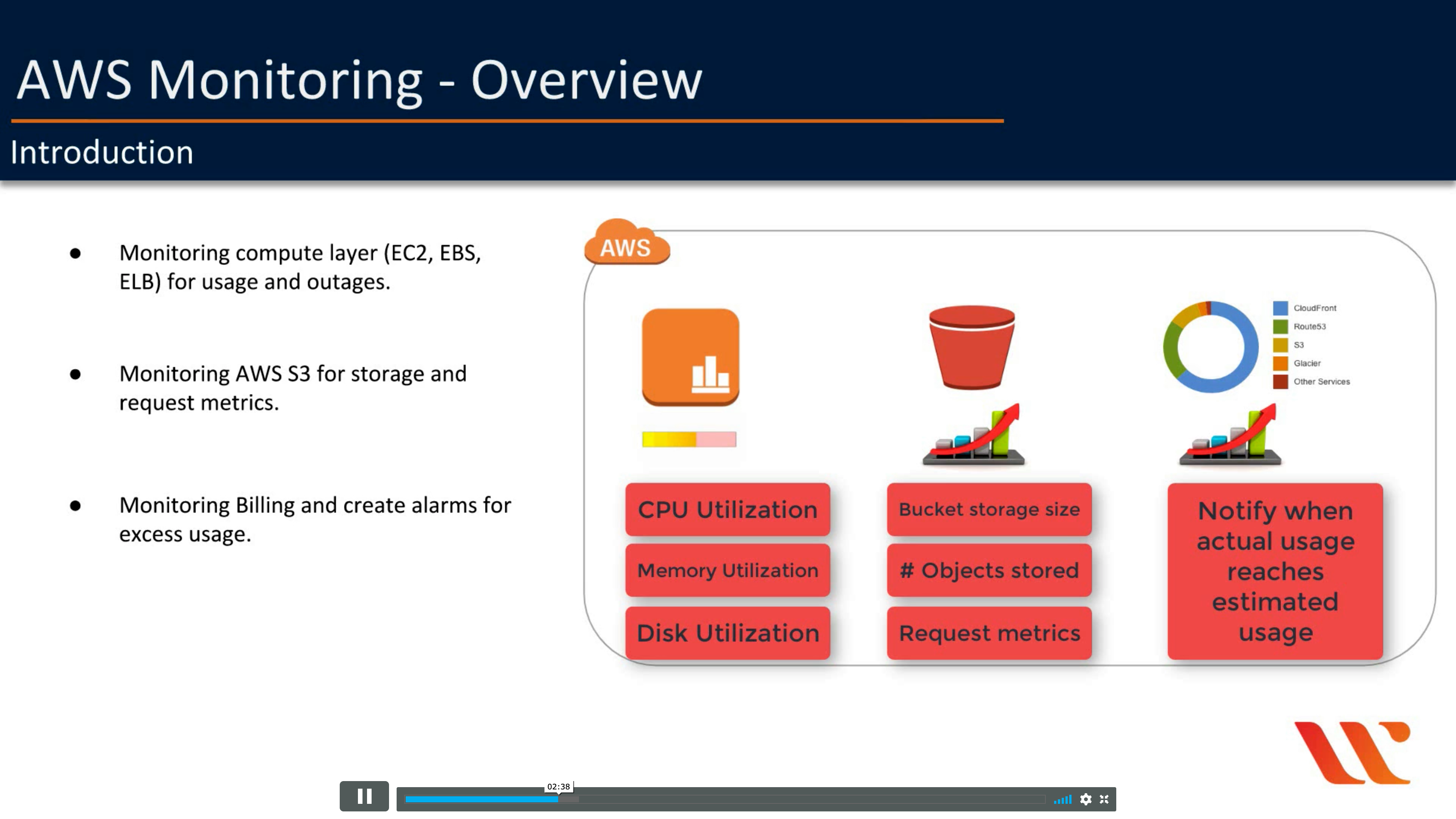

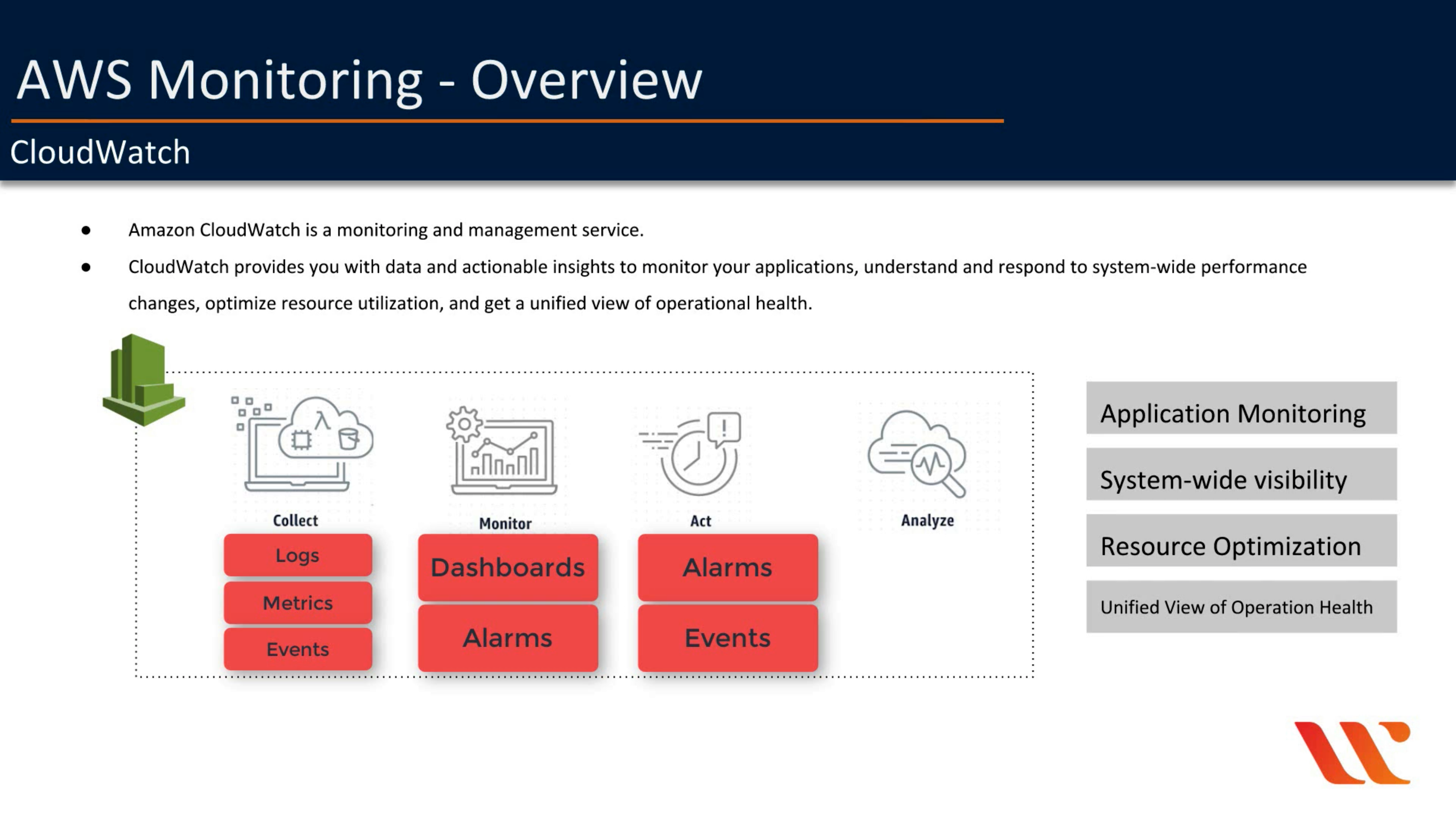

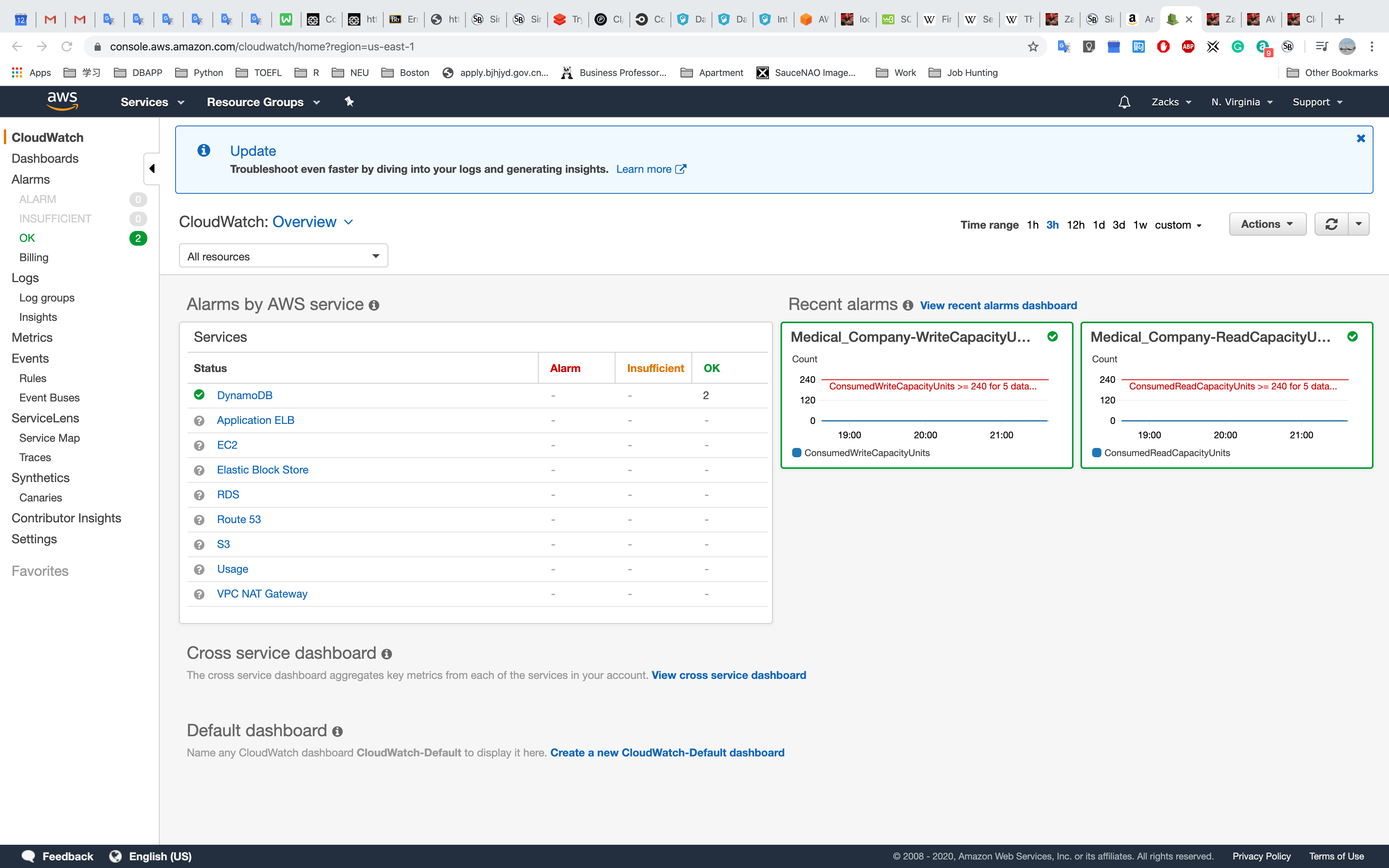

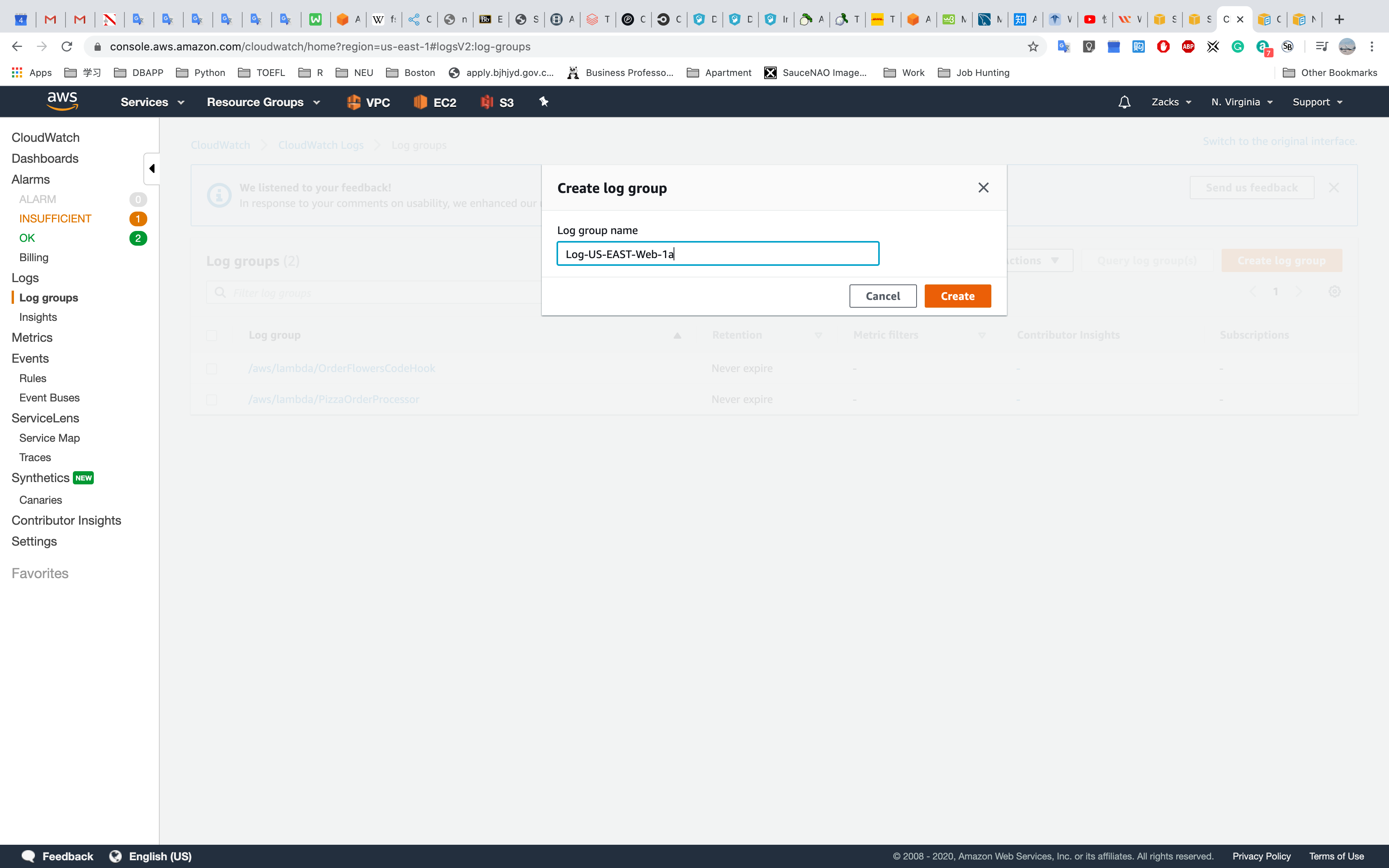

Amazon CloudWatch

Monitor resources and applications

- Collect

- Monitor

- Act

- Analyze

- Compliance and Security

Introduction

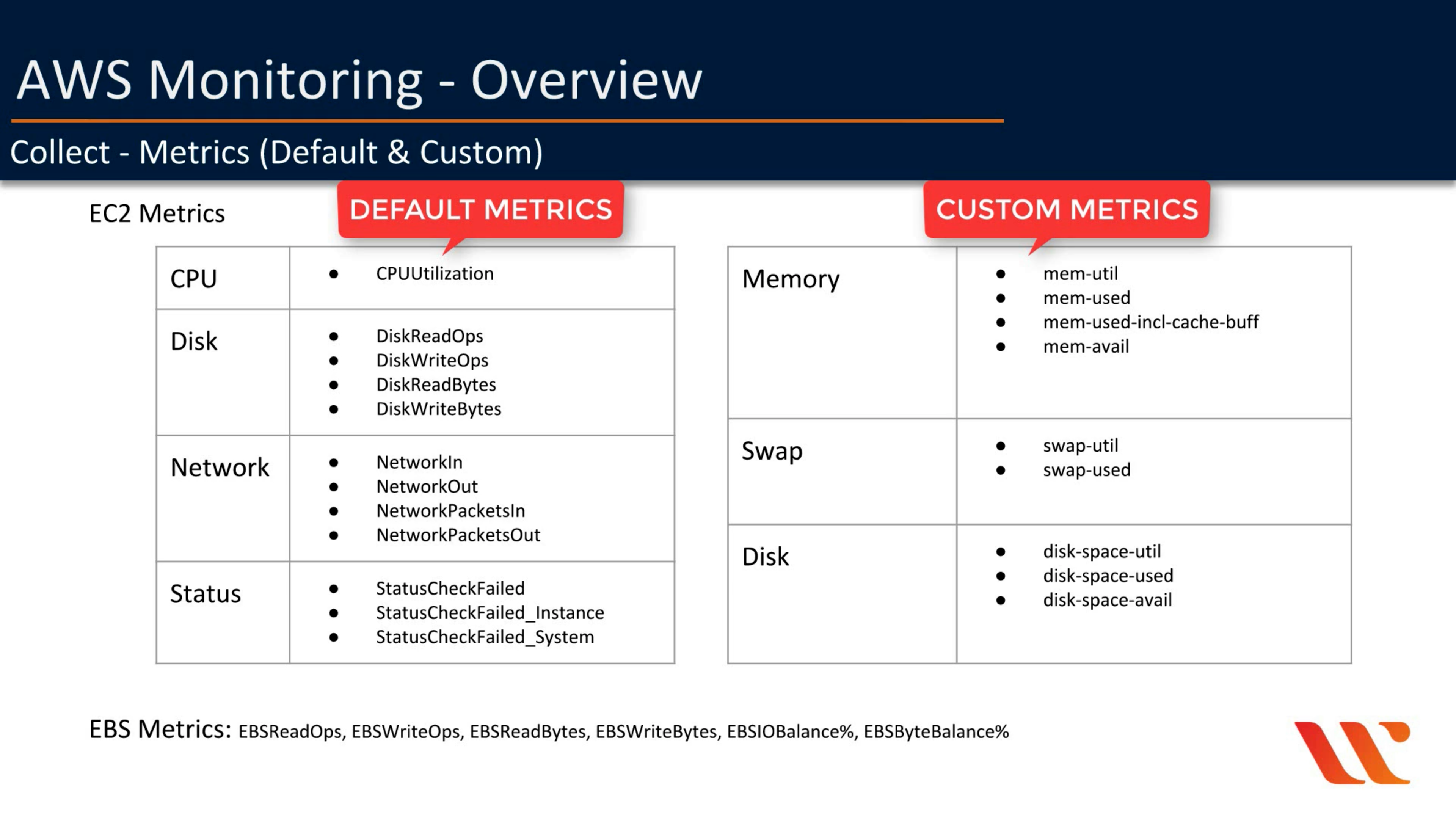

Collect

Memory is not a default metric!

Monitoring

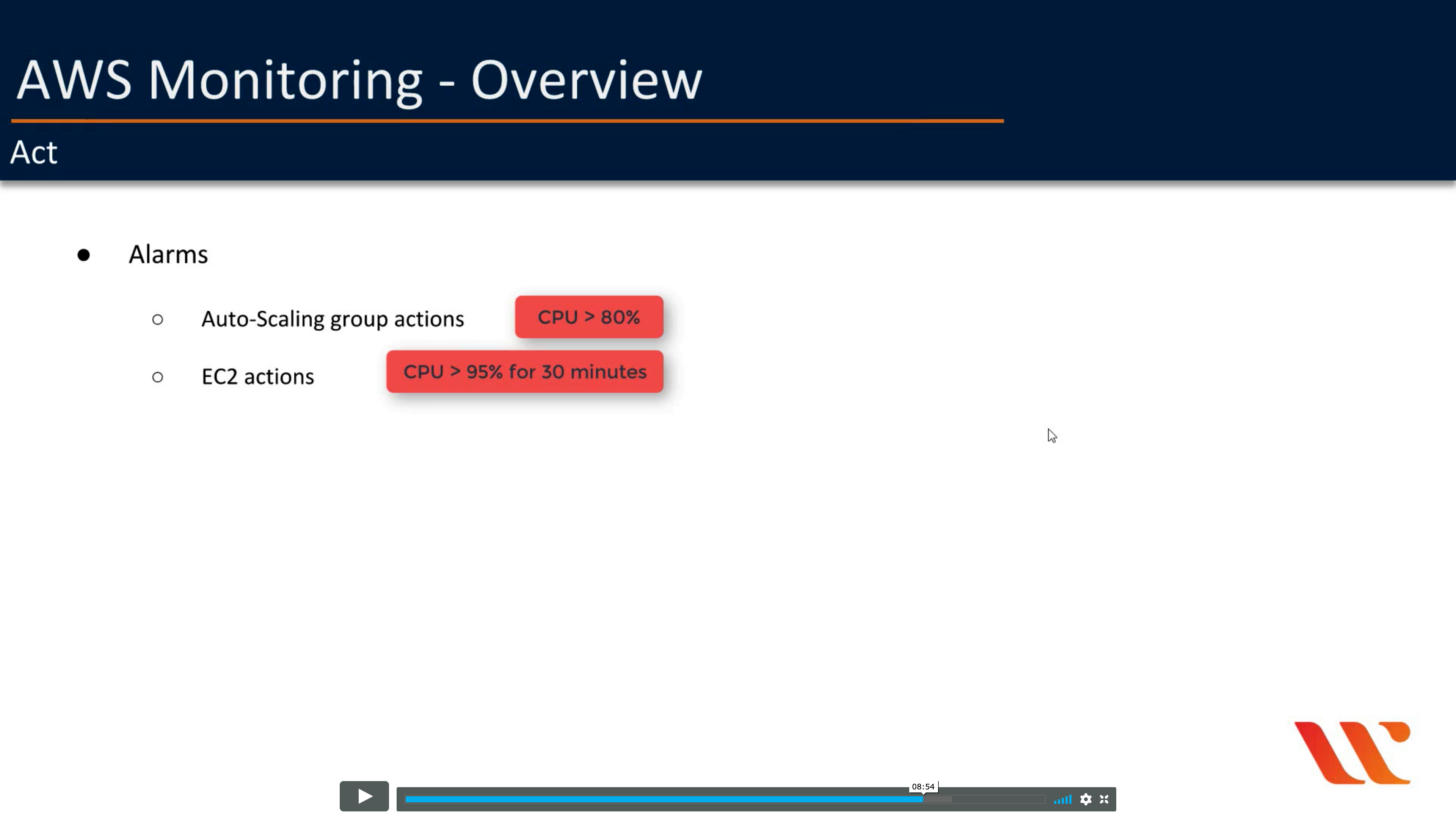

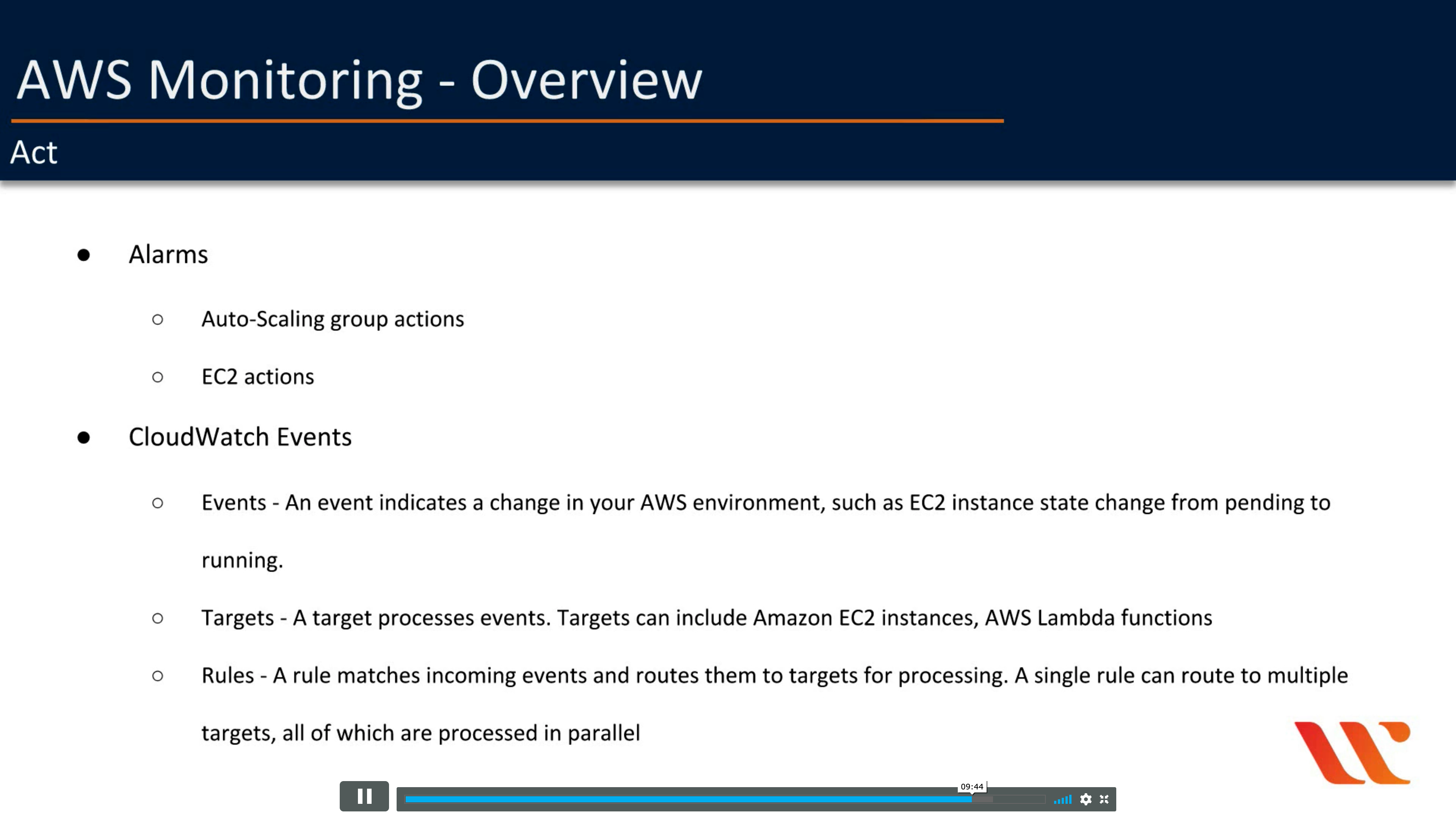

Act

For example

Analyze

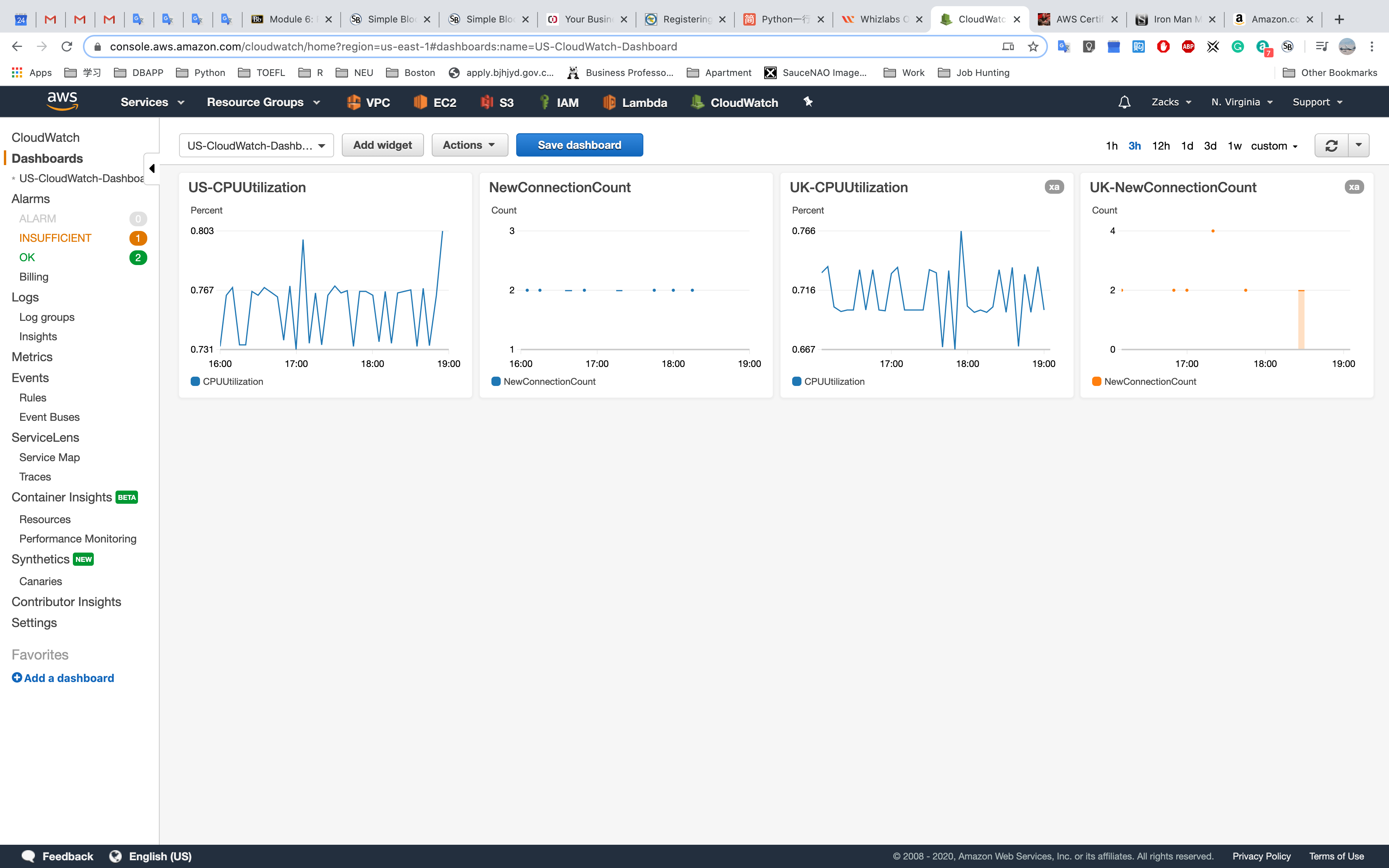

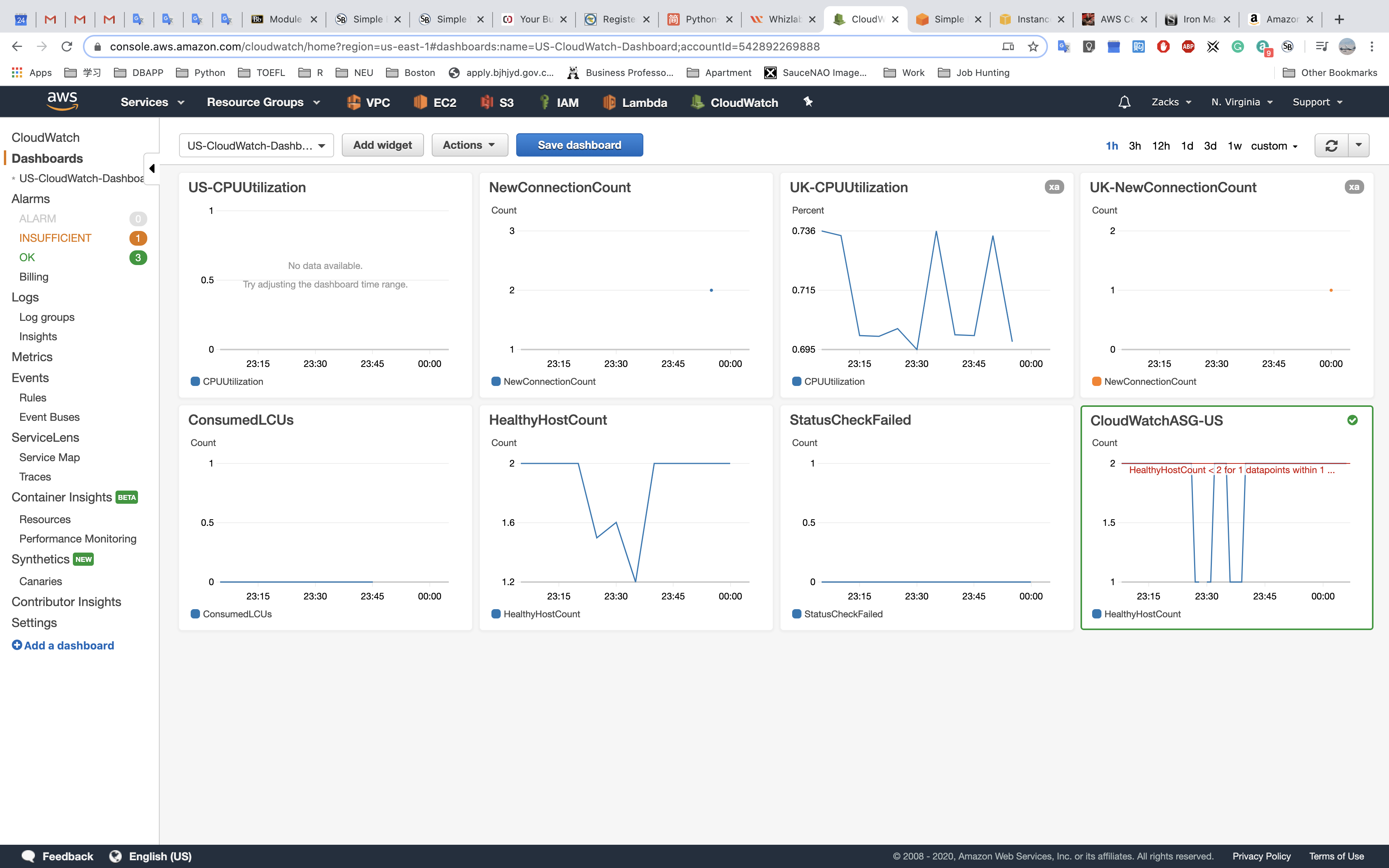

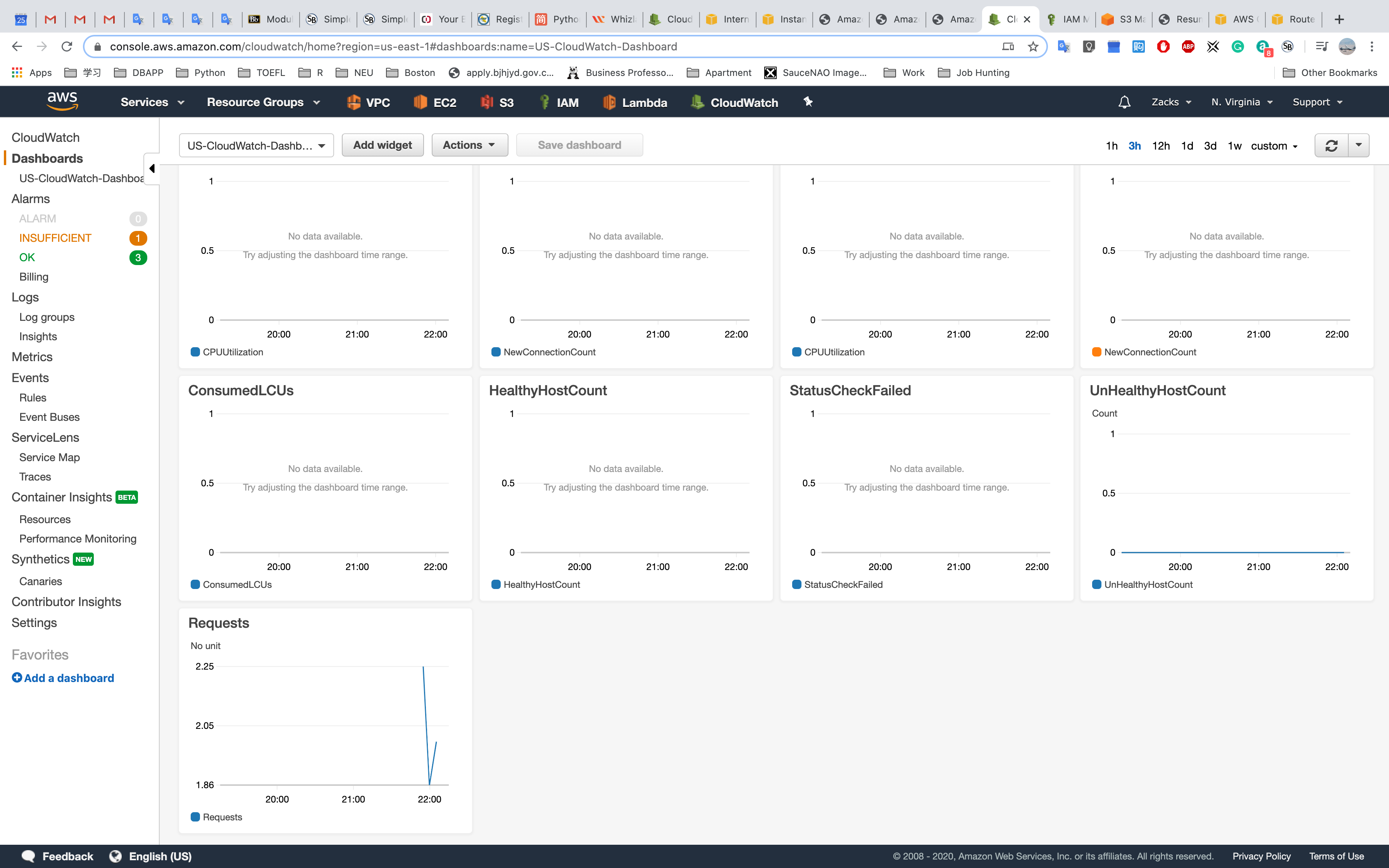

Dashboards and Alarms

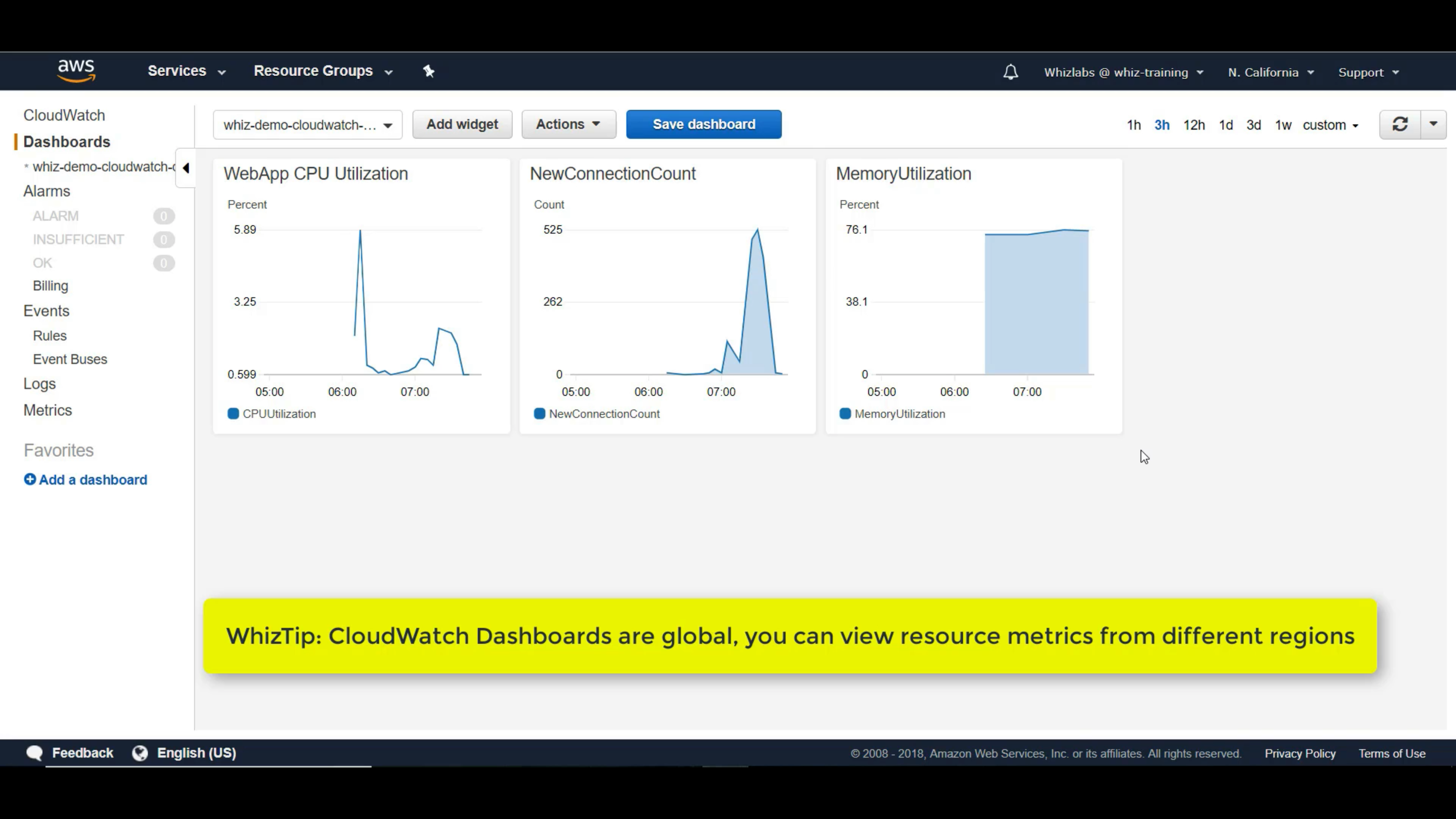

Remember to save the dashboard.

"xa" at the top right of a widget means cross-region.

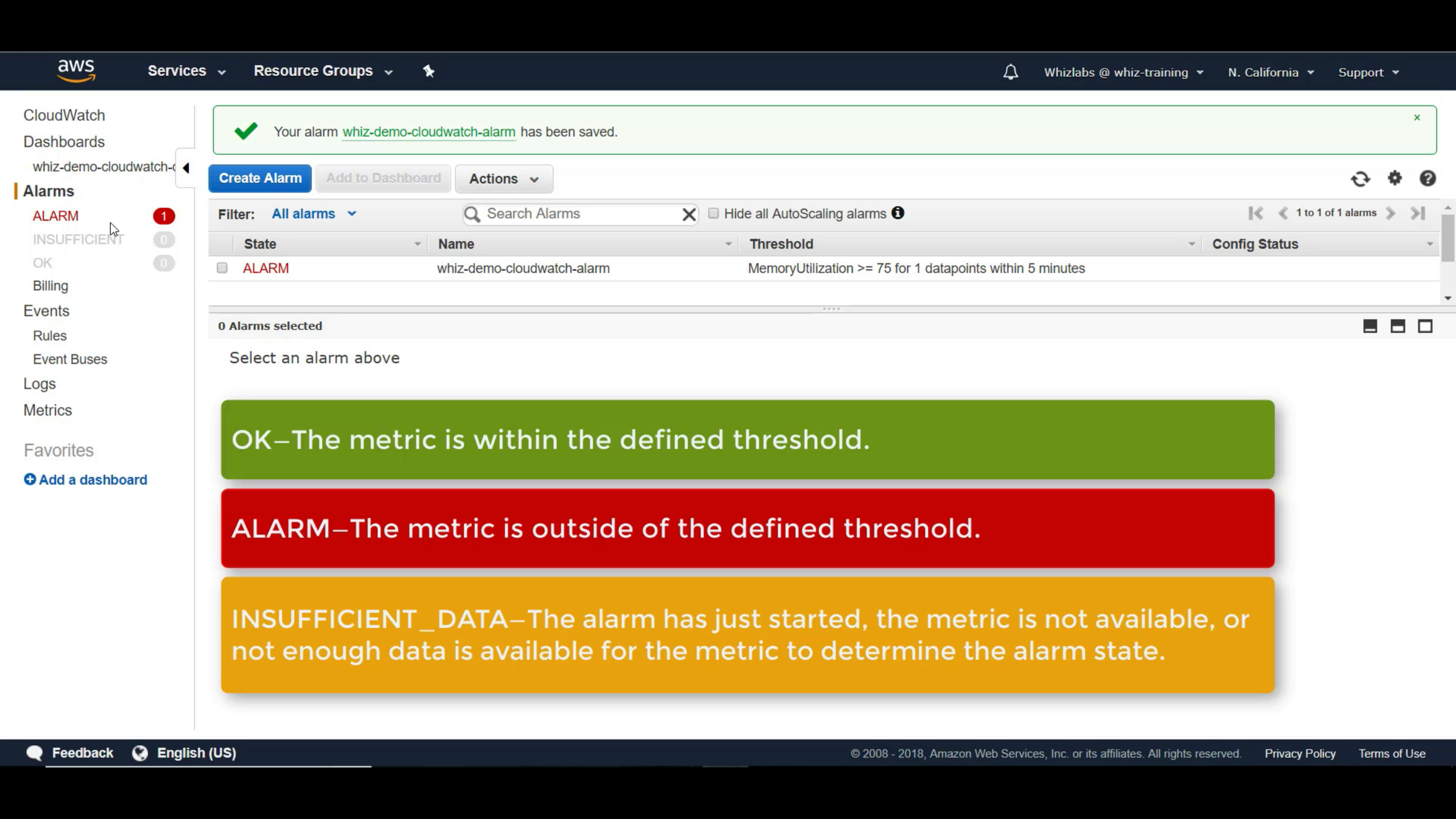

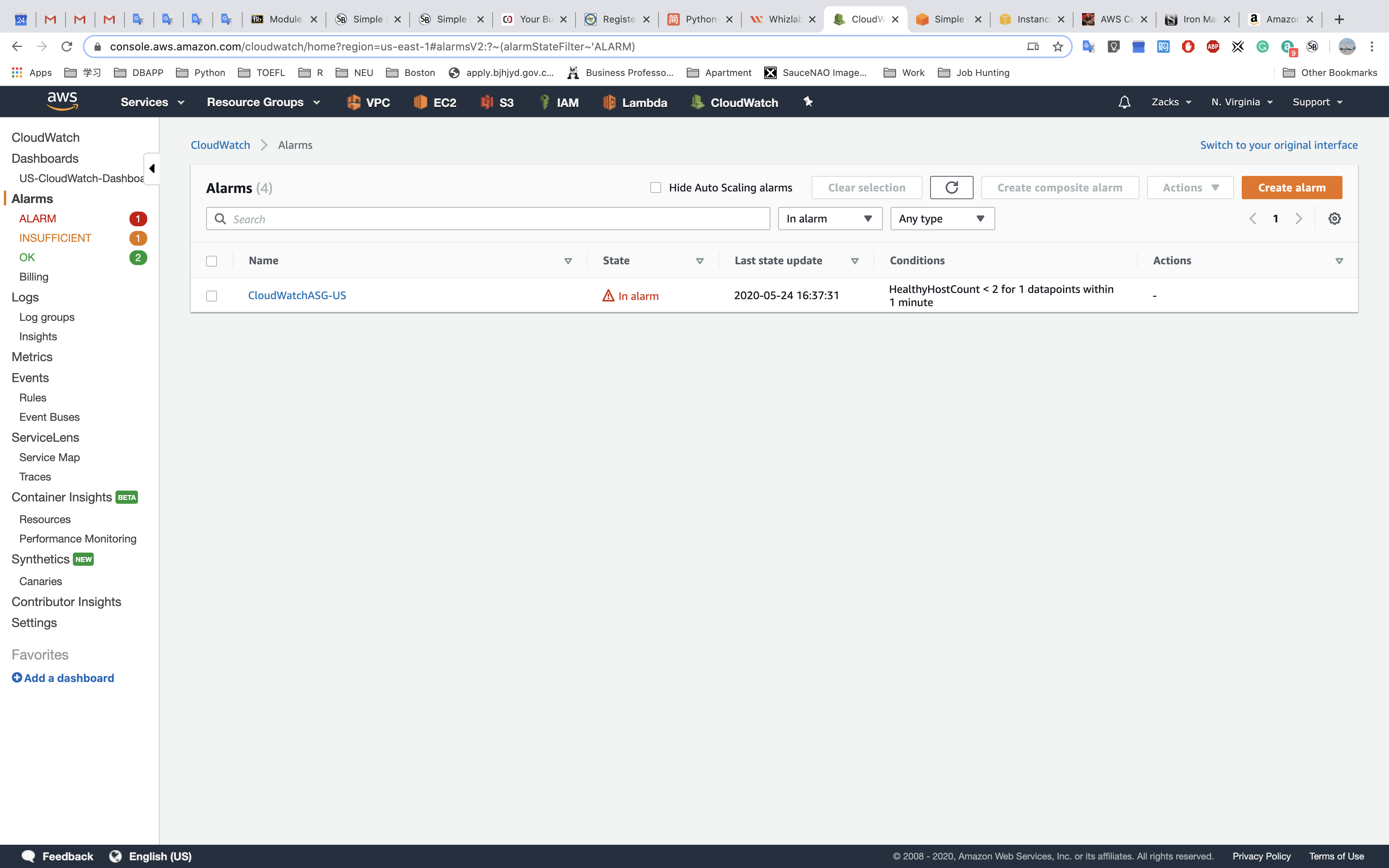

Create a CloudWatch Alarm -> Attach it to SNS (optional) -> Add an action (optional)

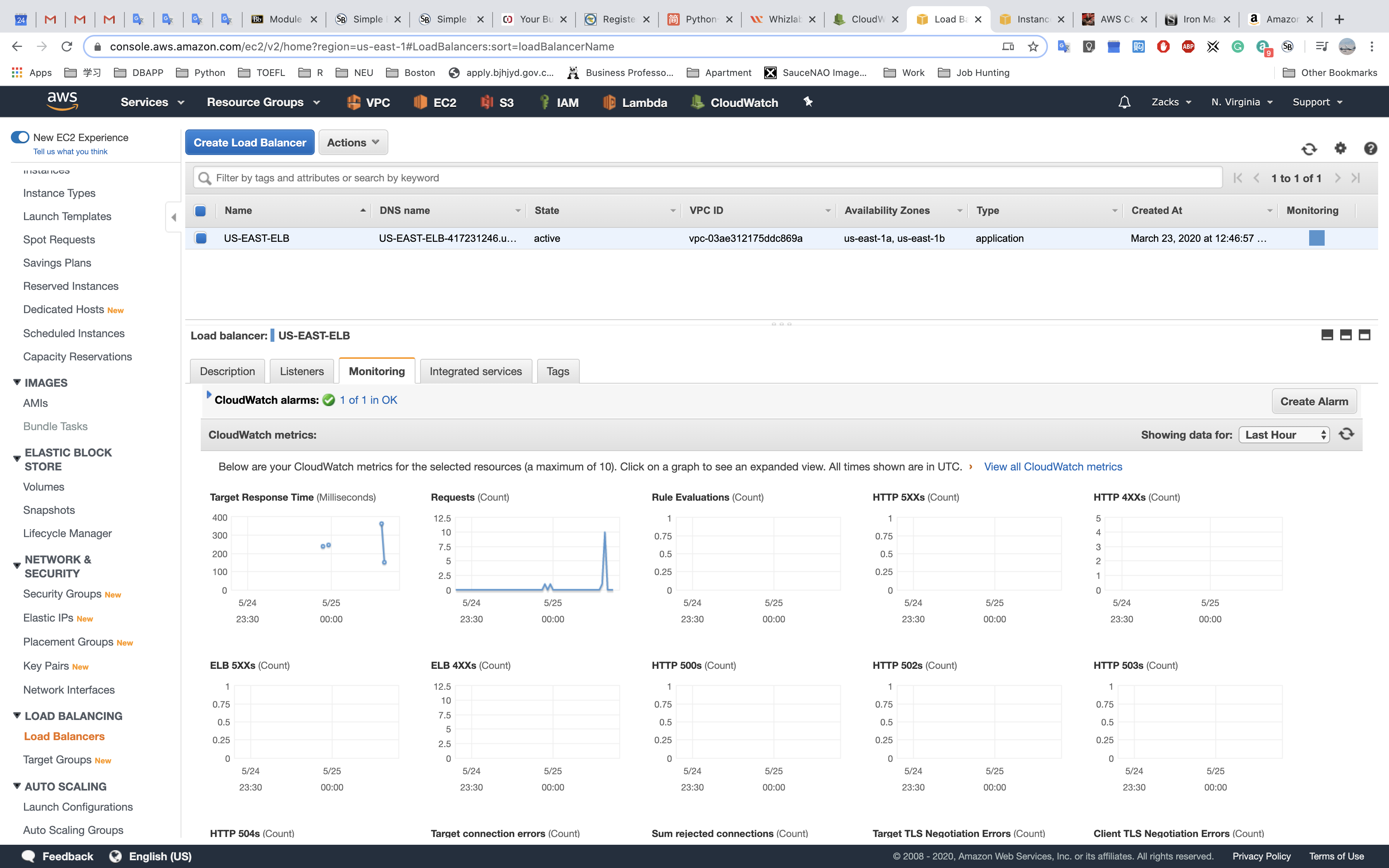

Your EC2 - Load Balancers page will also show that you have a (CloudWatch) alarm.

If you add an alarm to a dashboard through Action, there will be a red or green frame around the widget.

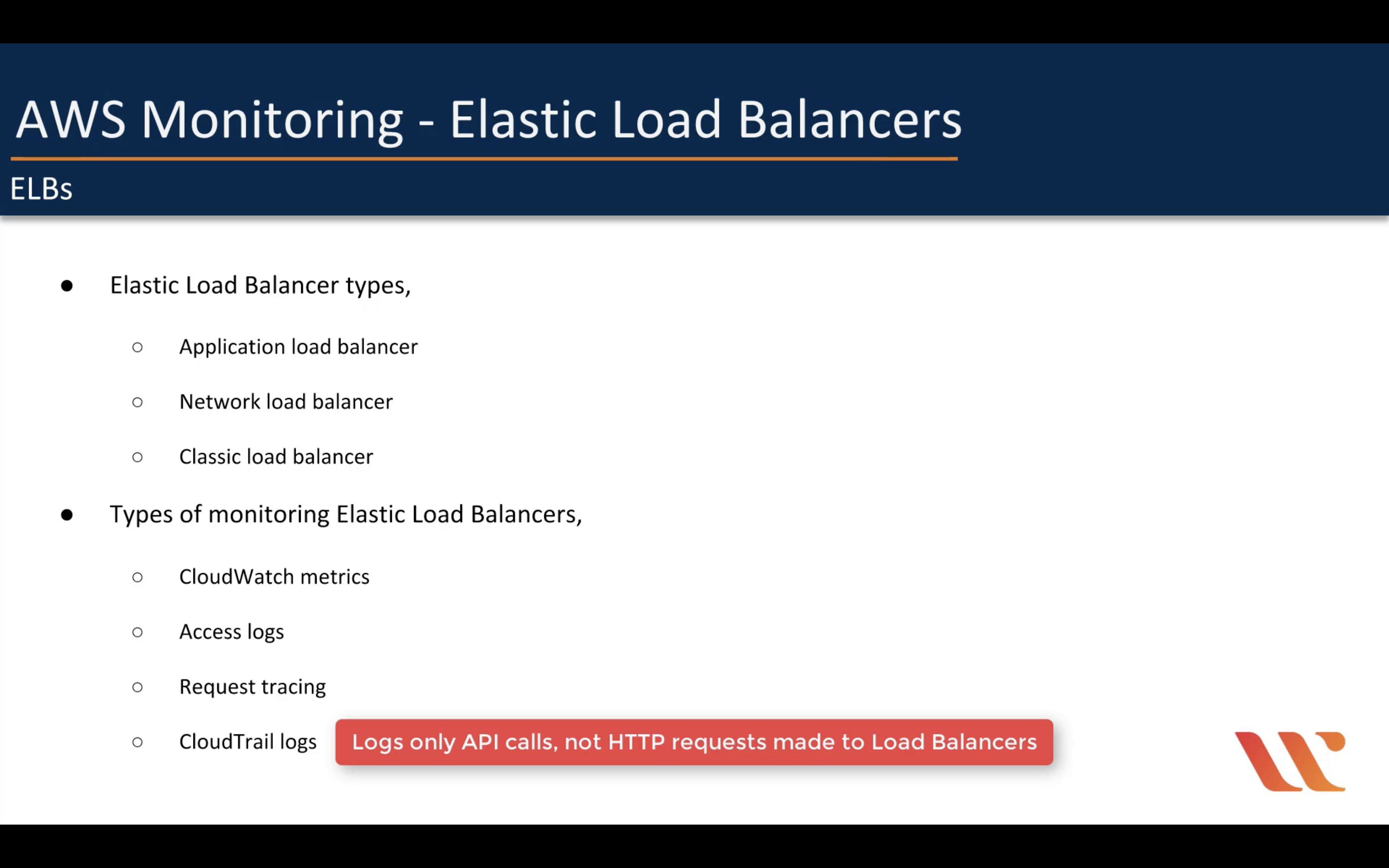

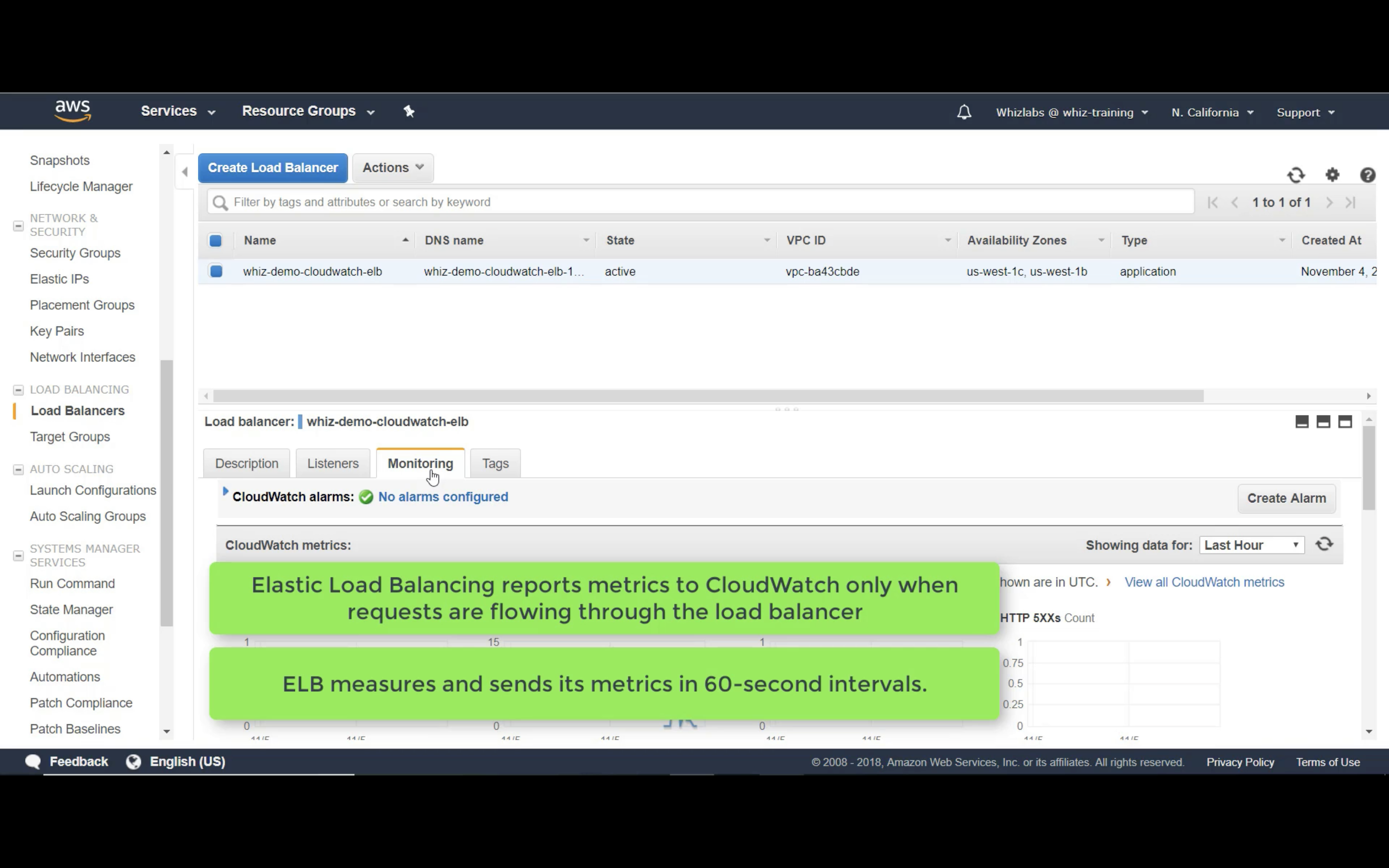

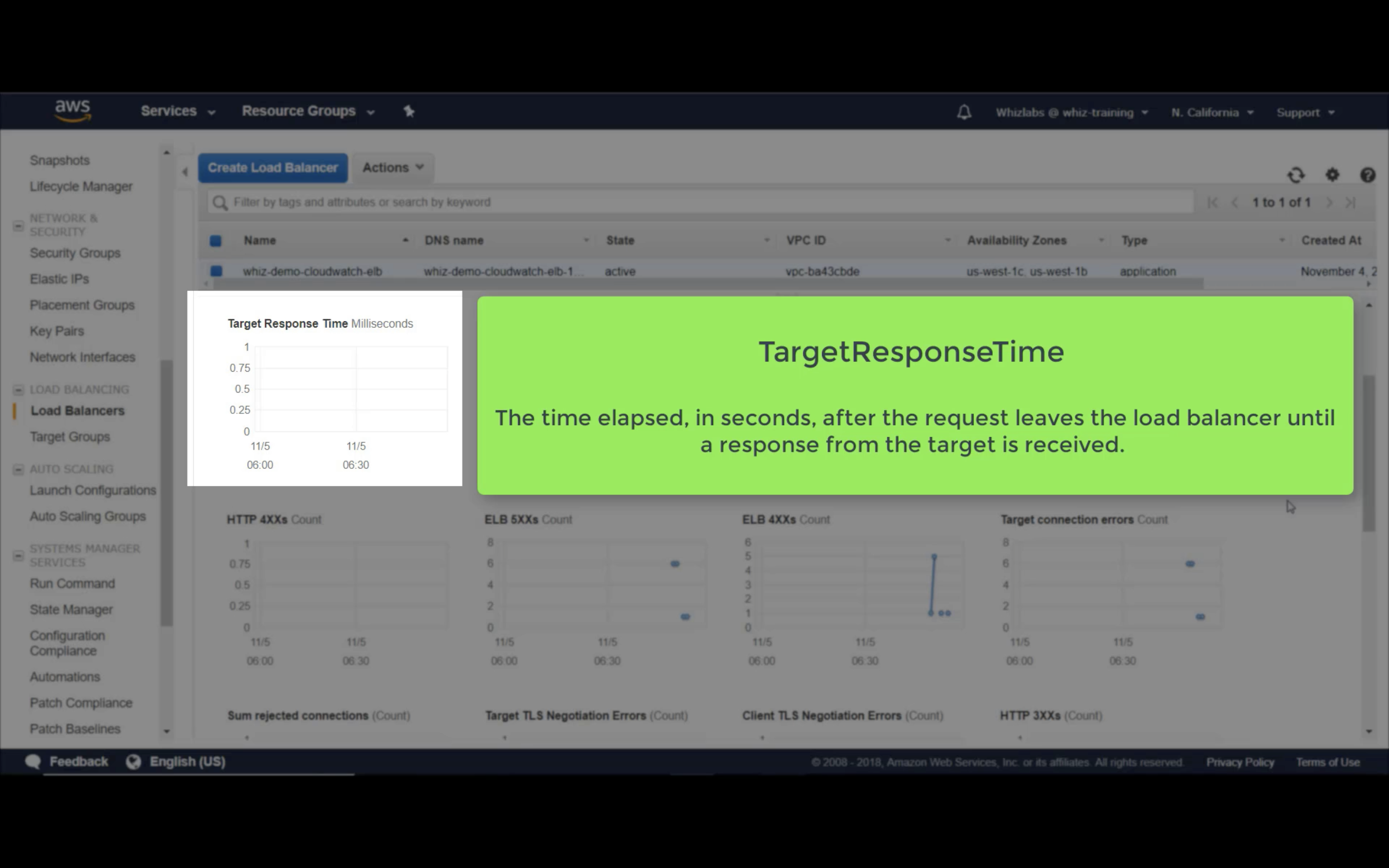

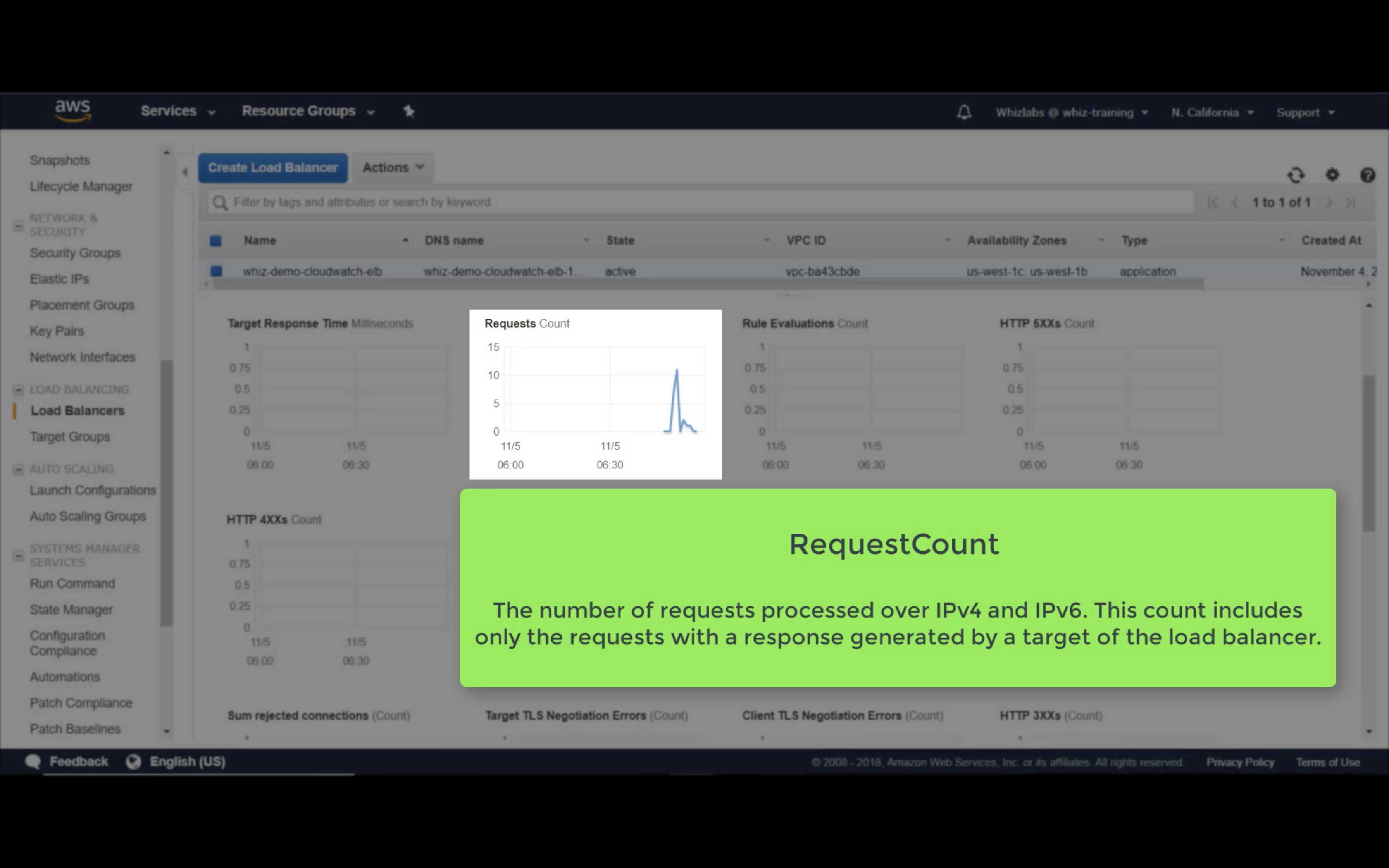

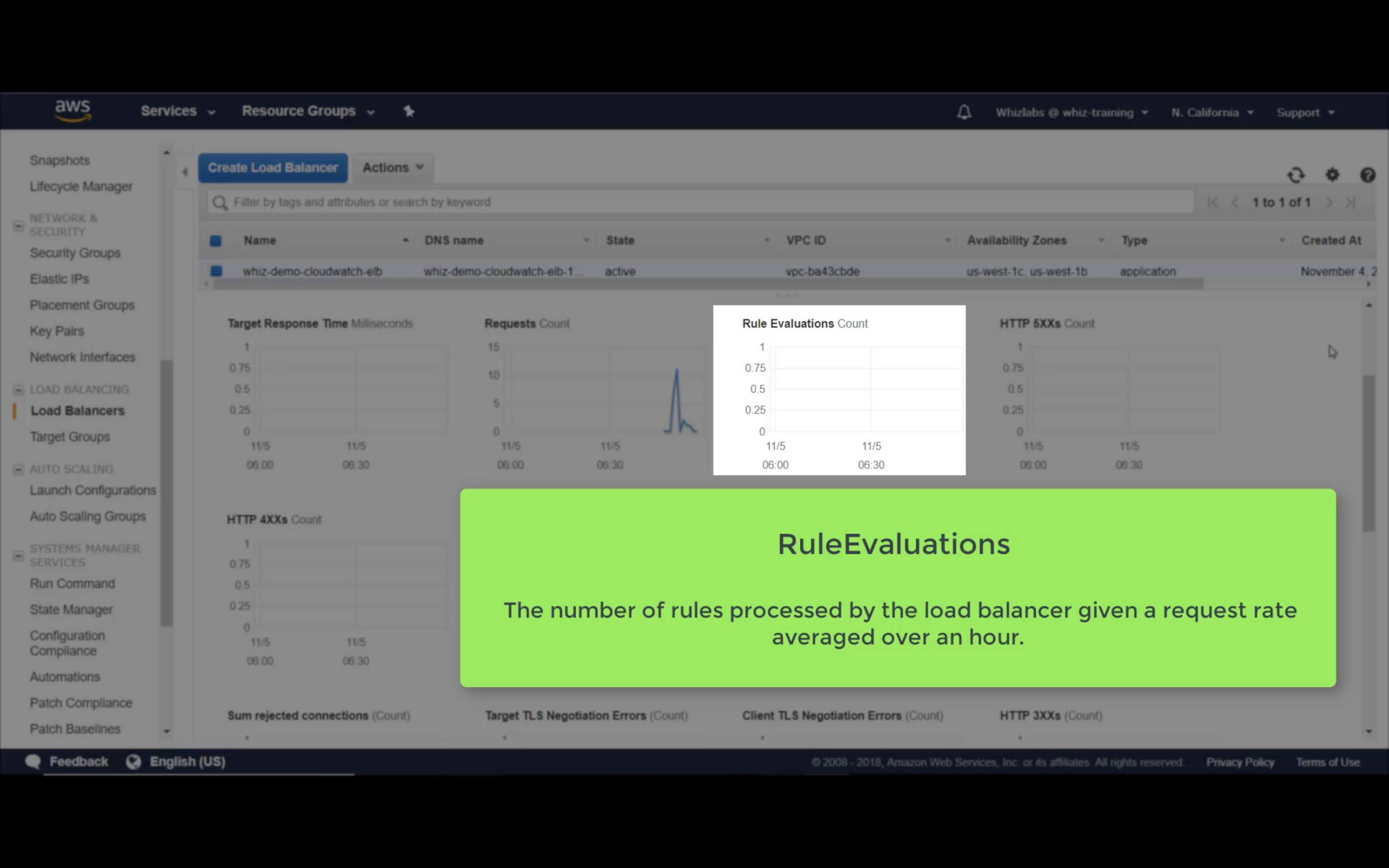

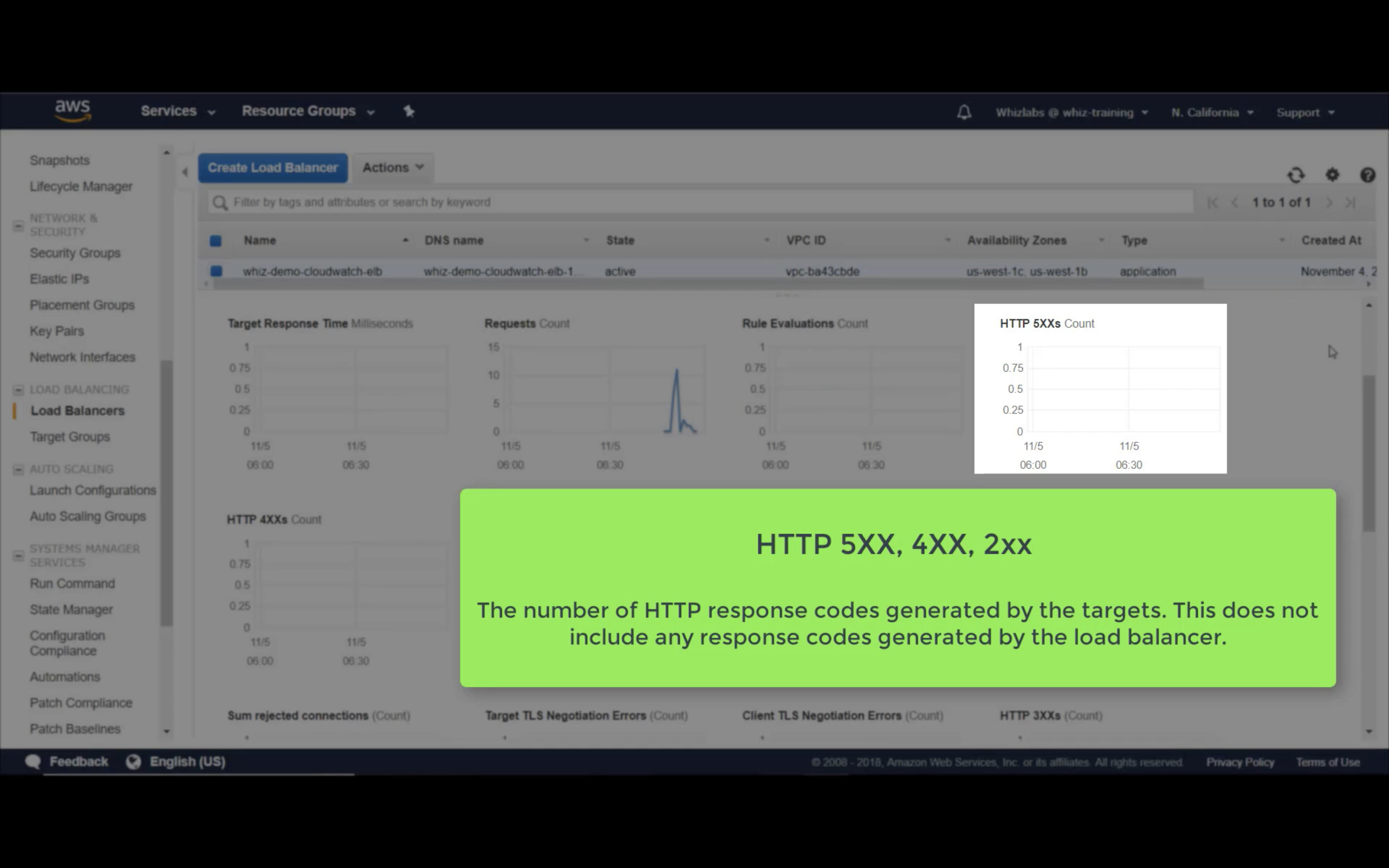

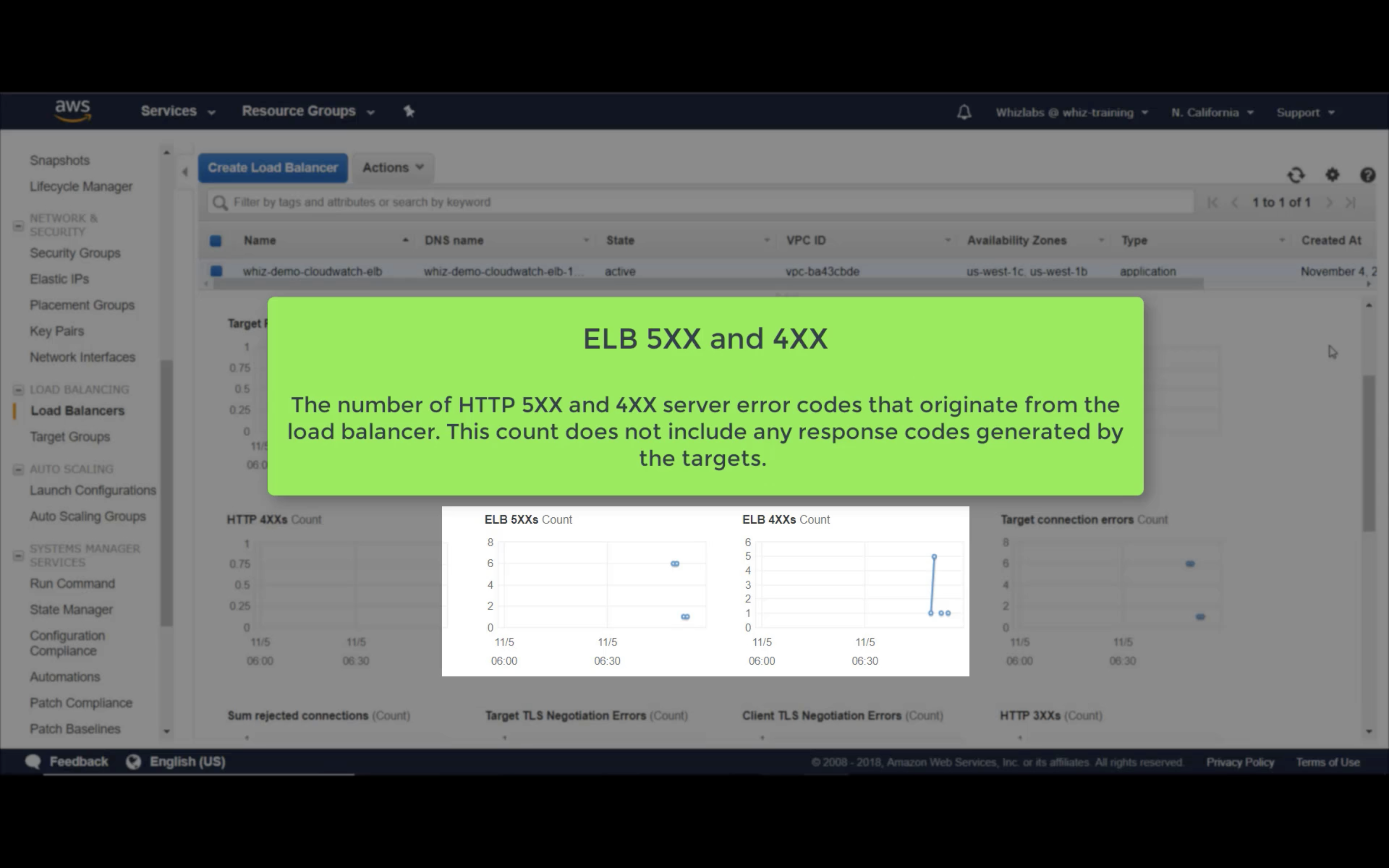

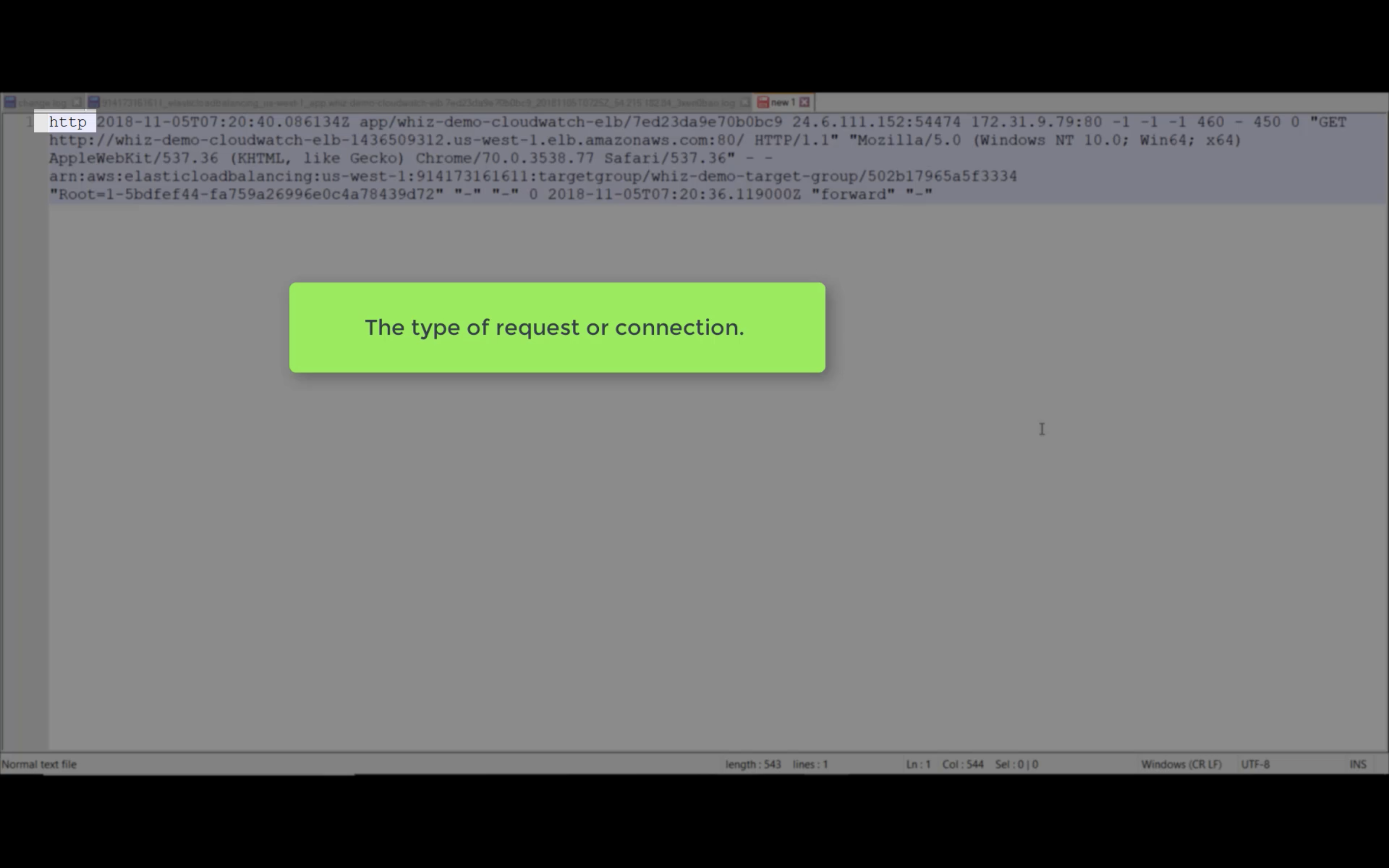

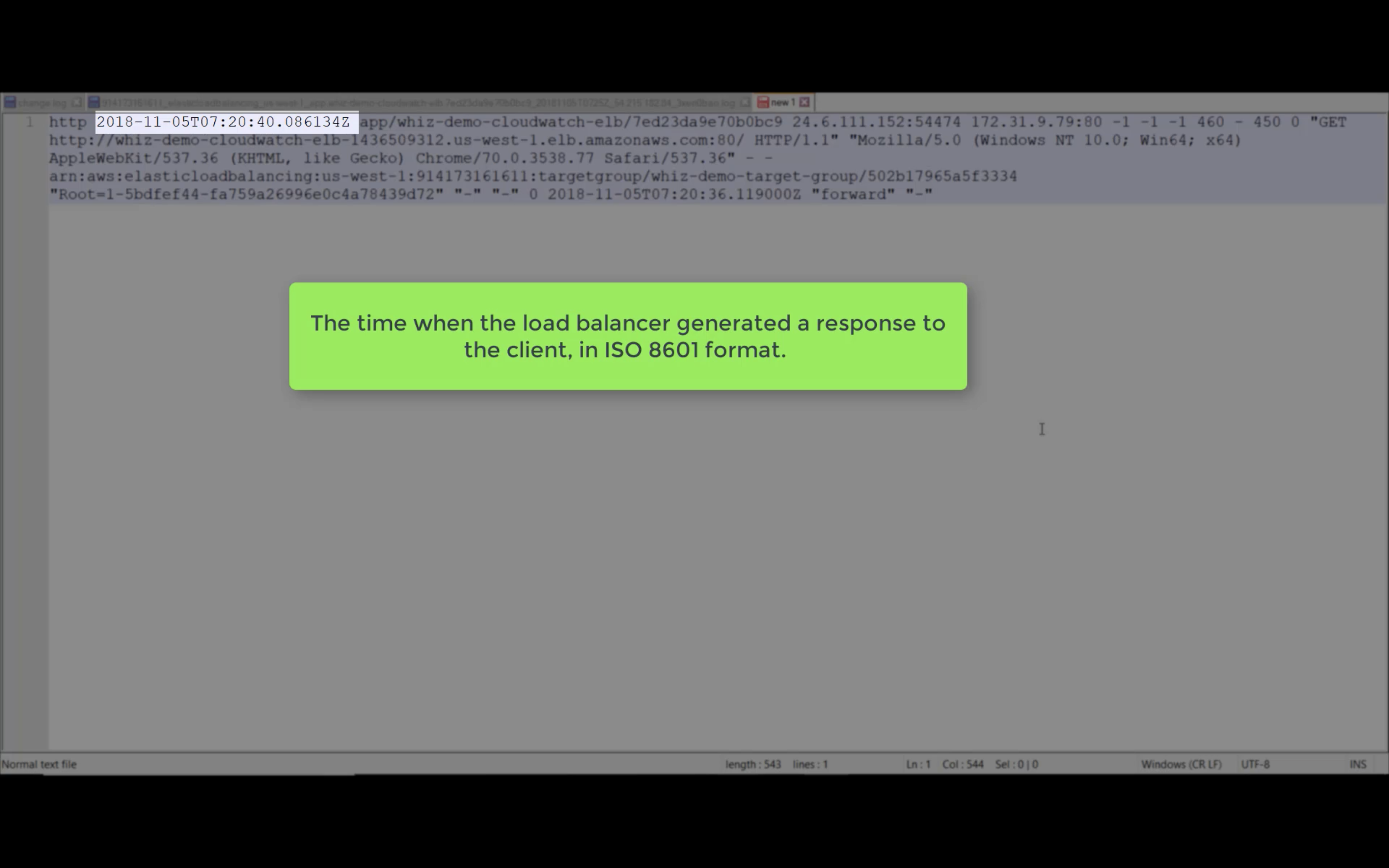

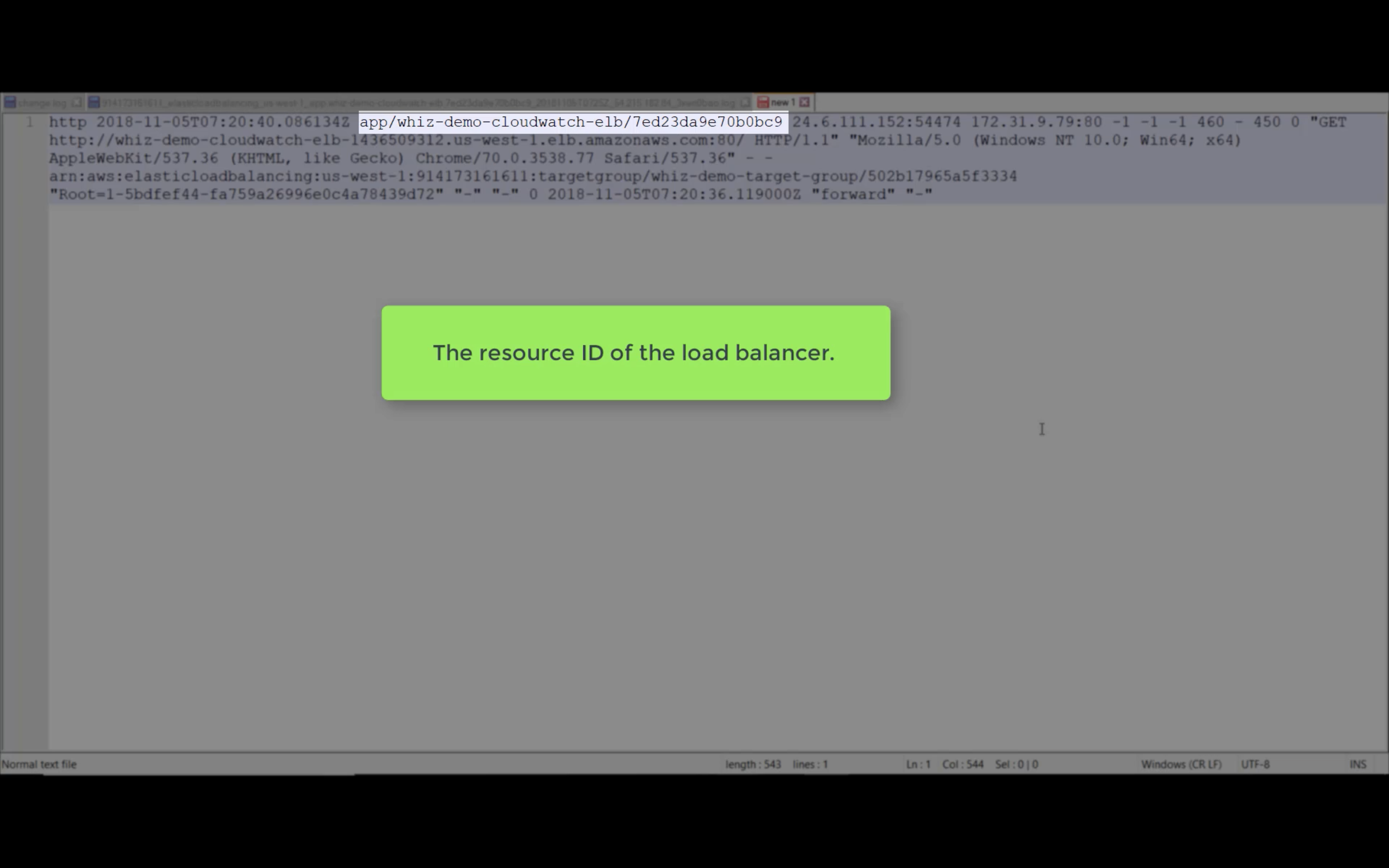

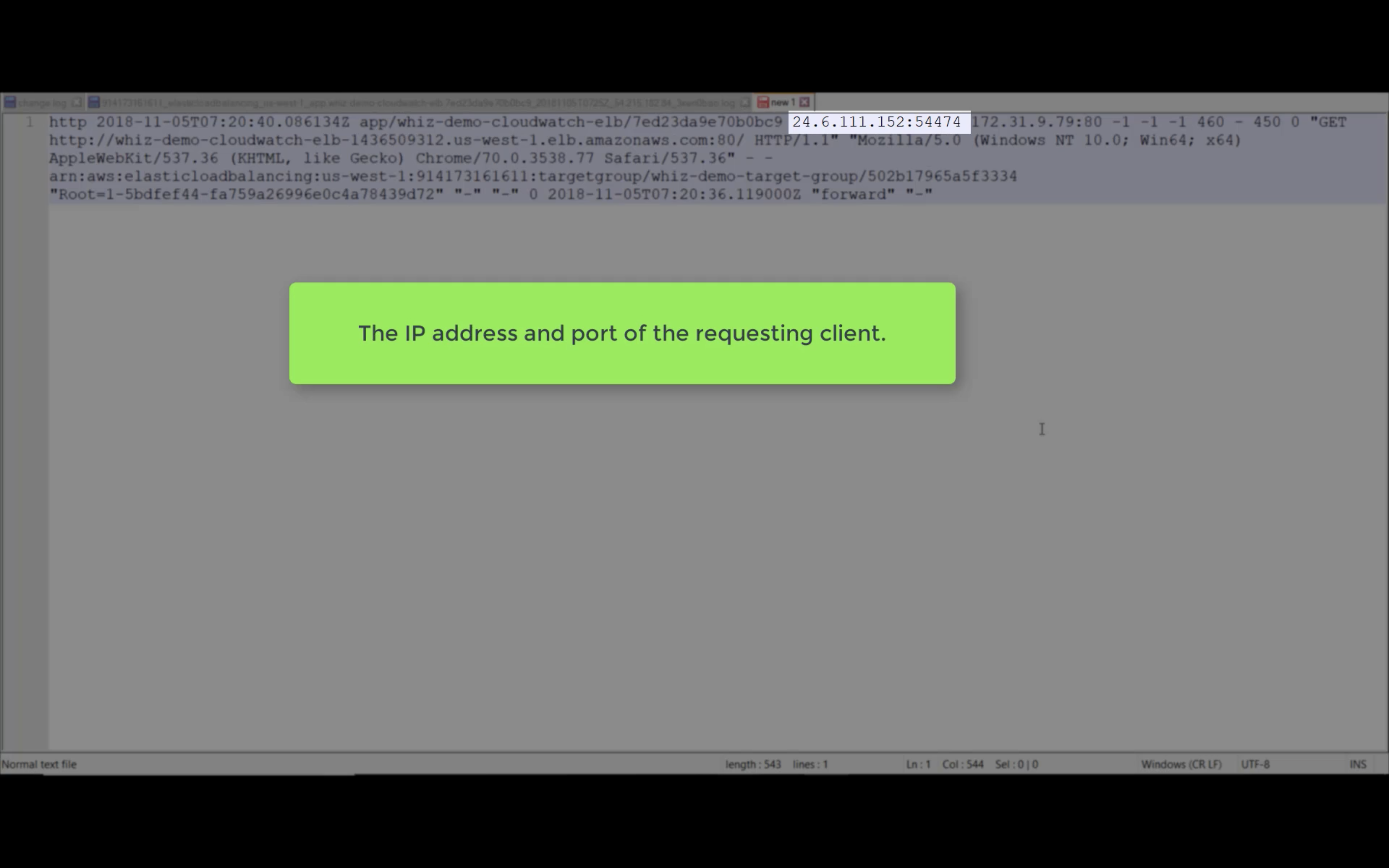

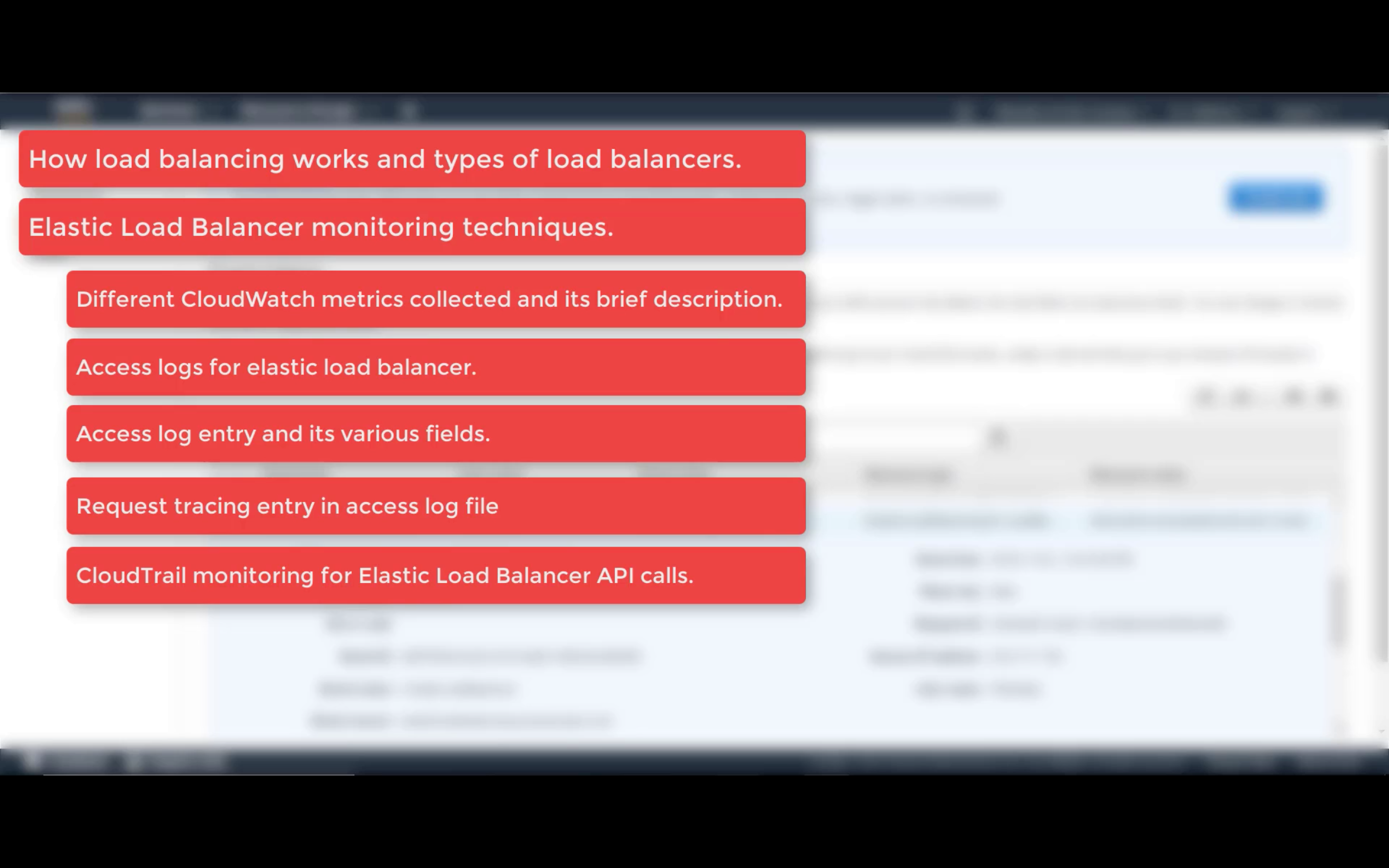

ELB Monitoring

Monitoring

Target Response Time

Requests (Count)

Rule Evaluations (Count)

HTTP 5XX, 4XX, 2XX (Count)

ELB 5XX, 4XX, 2XX (Count)

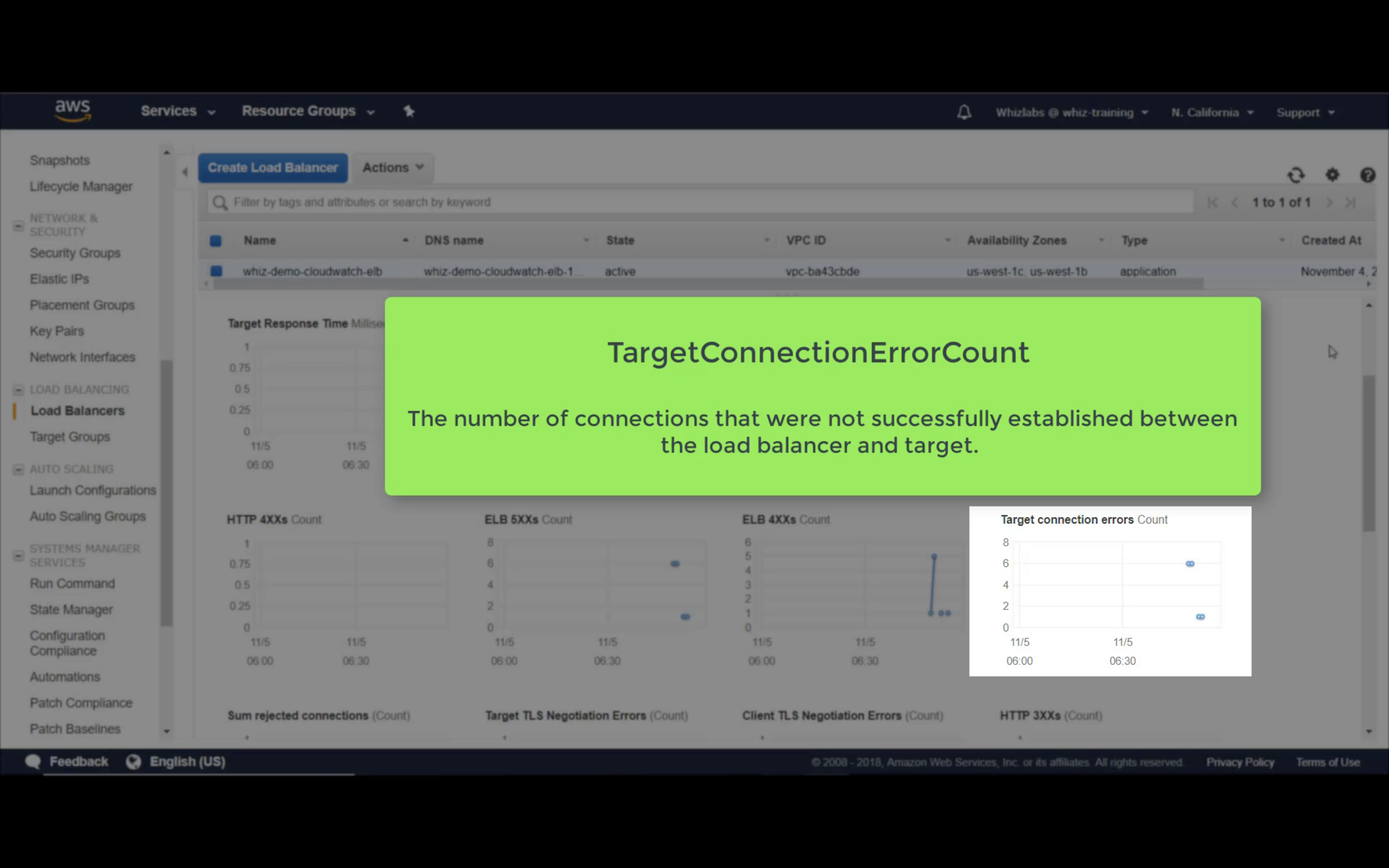

Target Connection Error (Count)

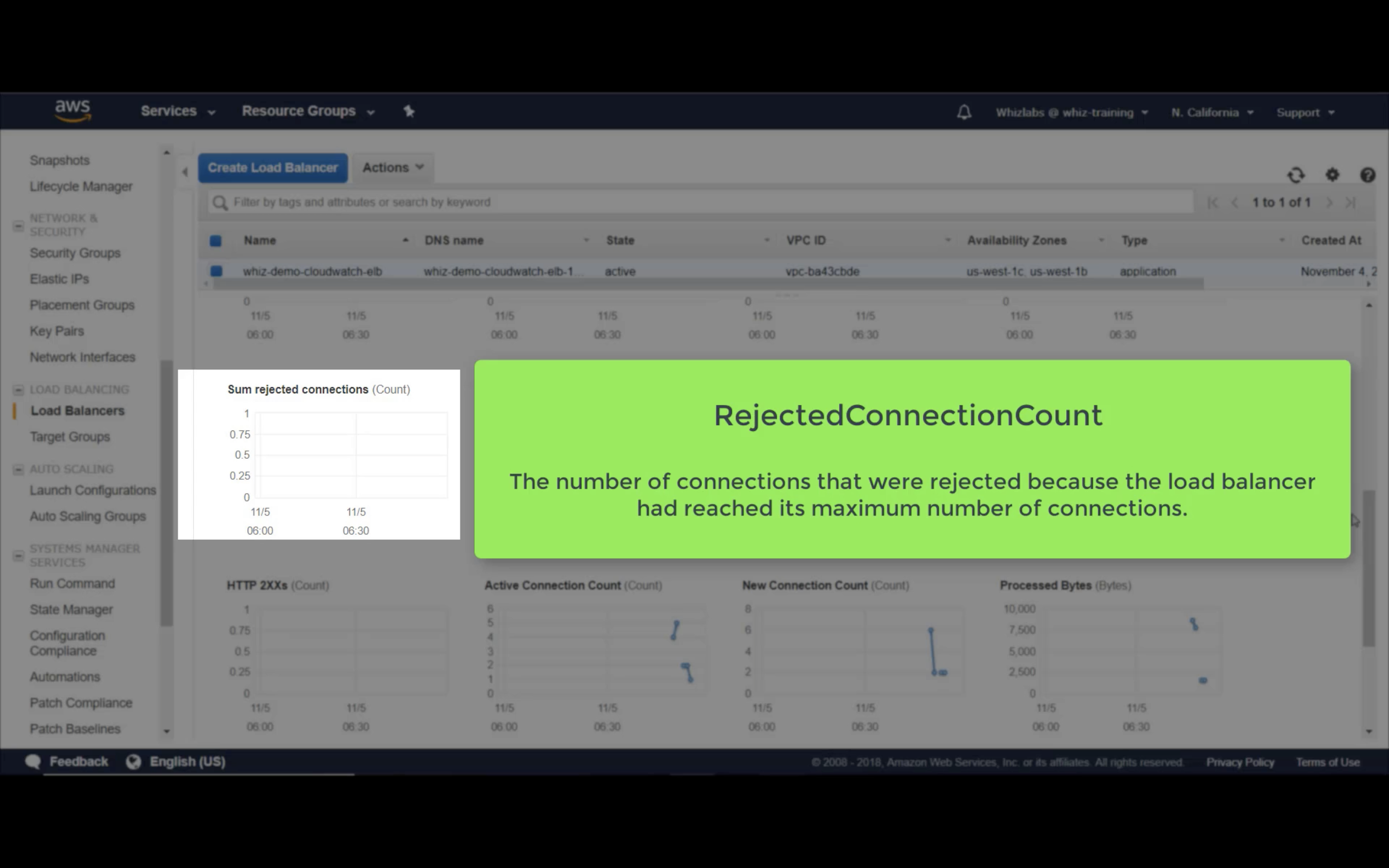

Sum Rejected Connection (Count)

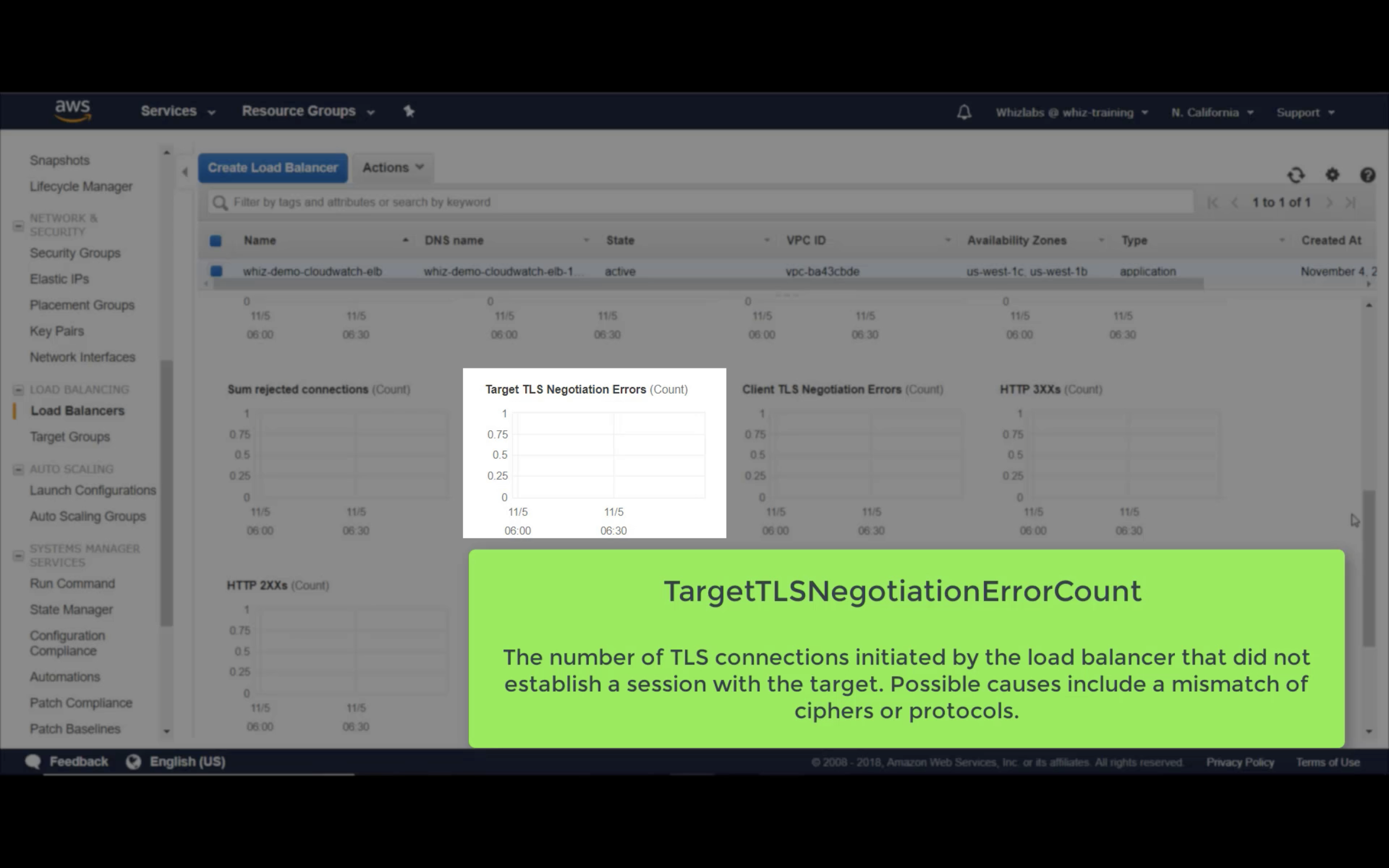

Target TLS Negotiation Error (Count)

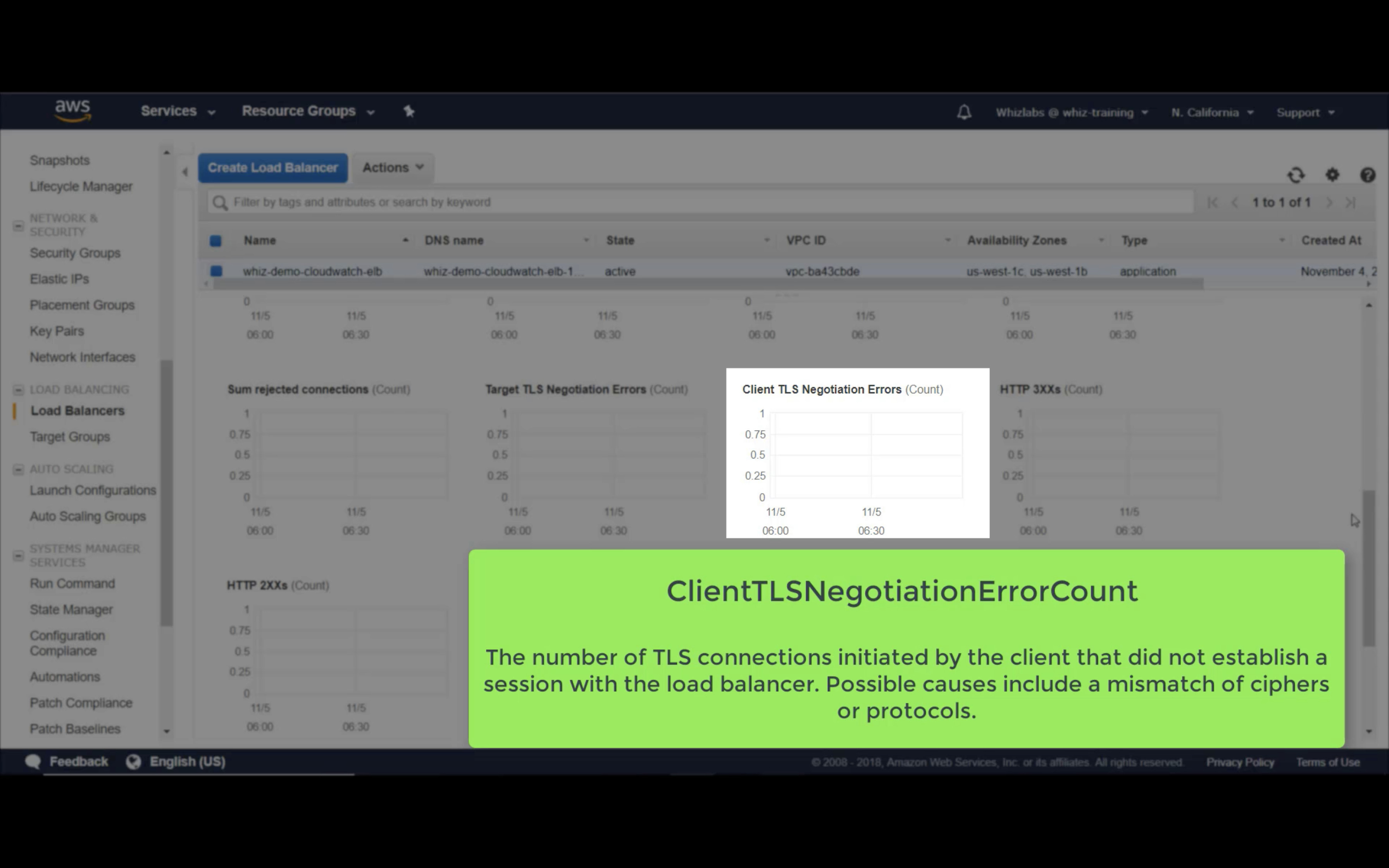

Client TLS Negotiation Error (Count)

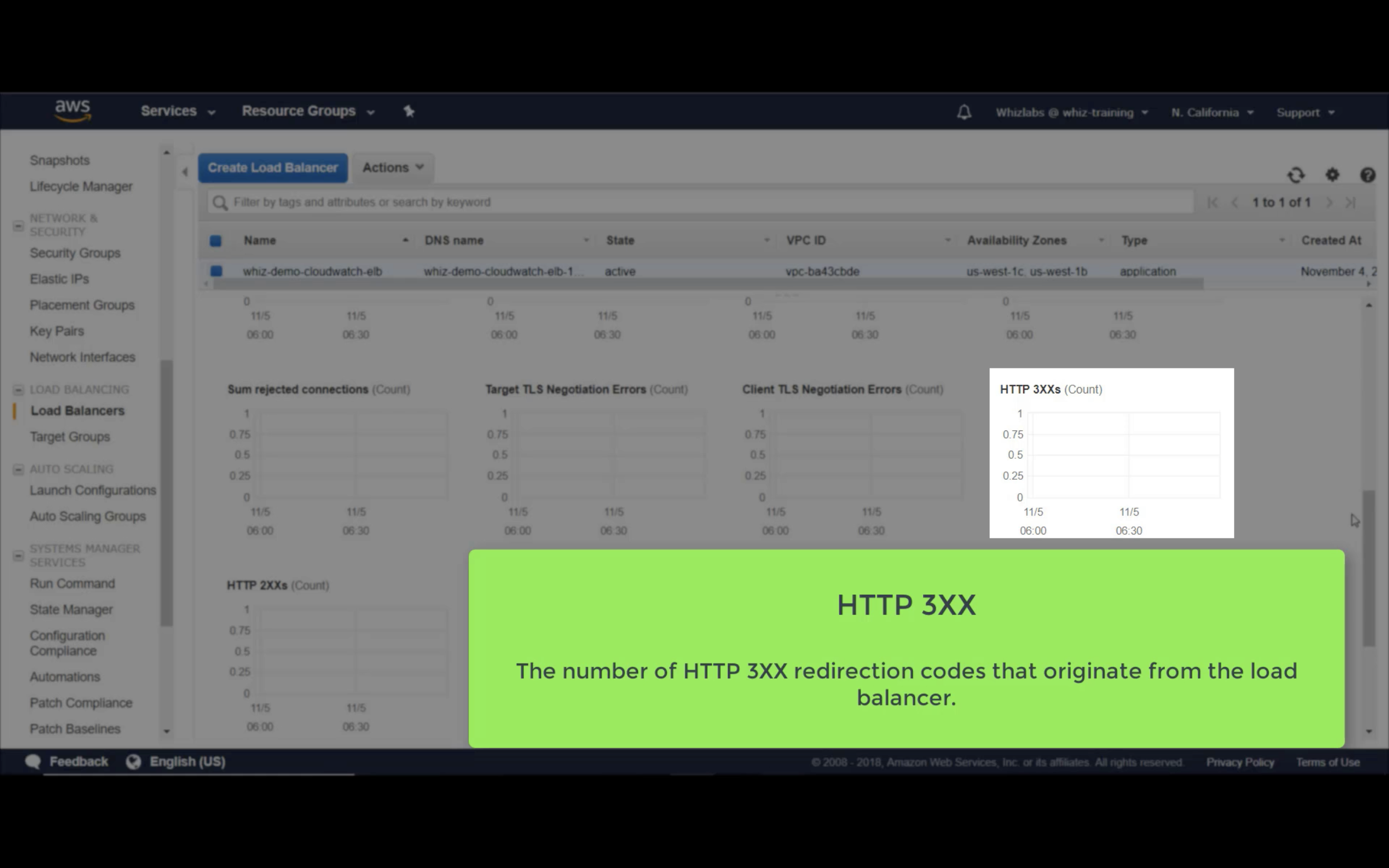

HTTP 3XX (Count)

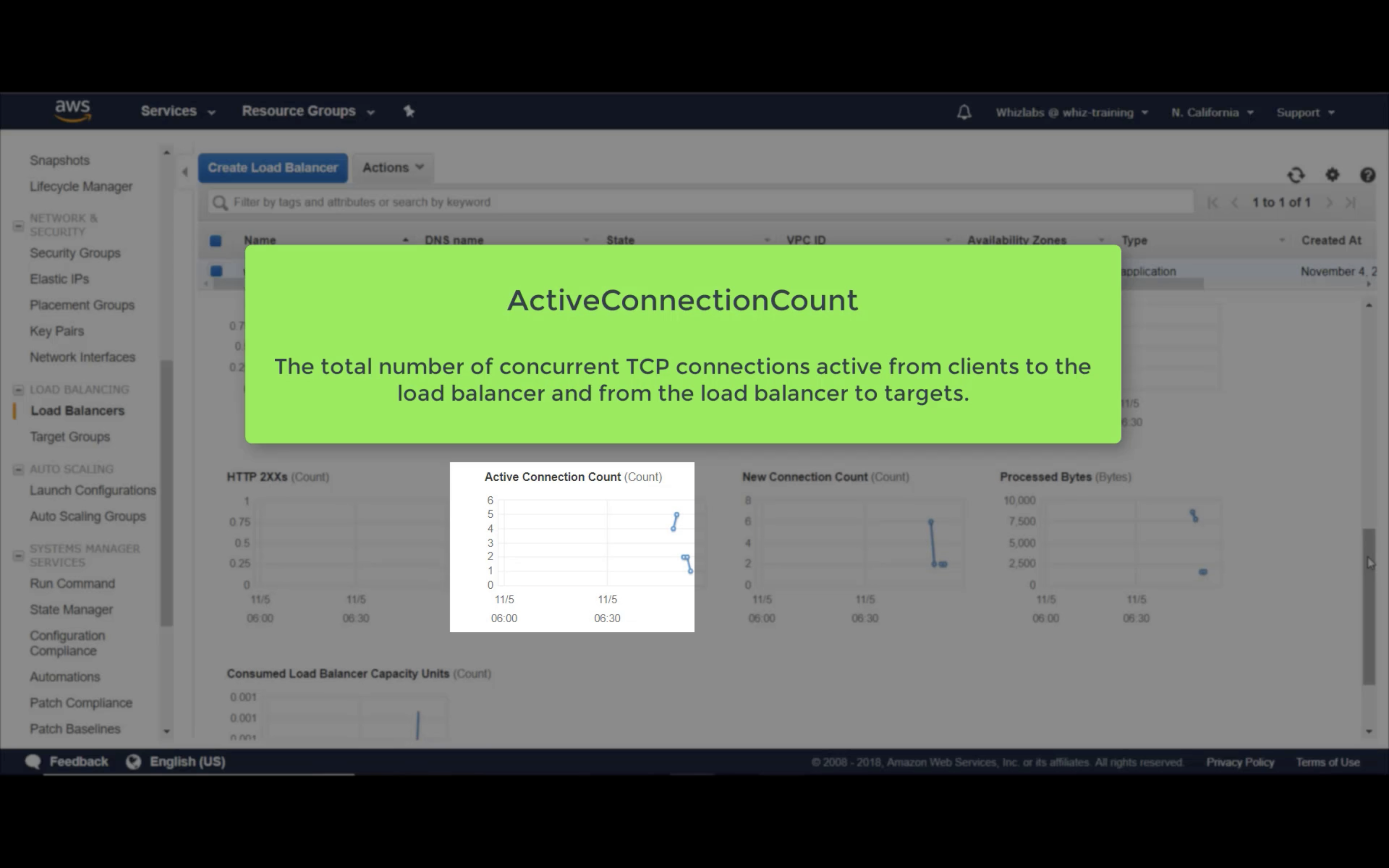

Active Connection Count (Count)

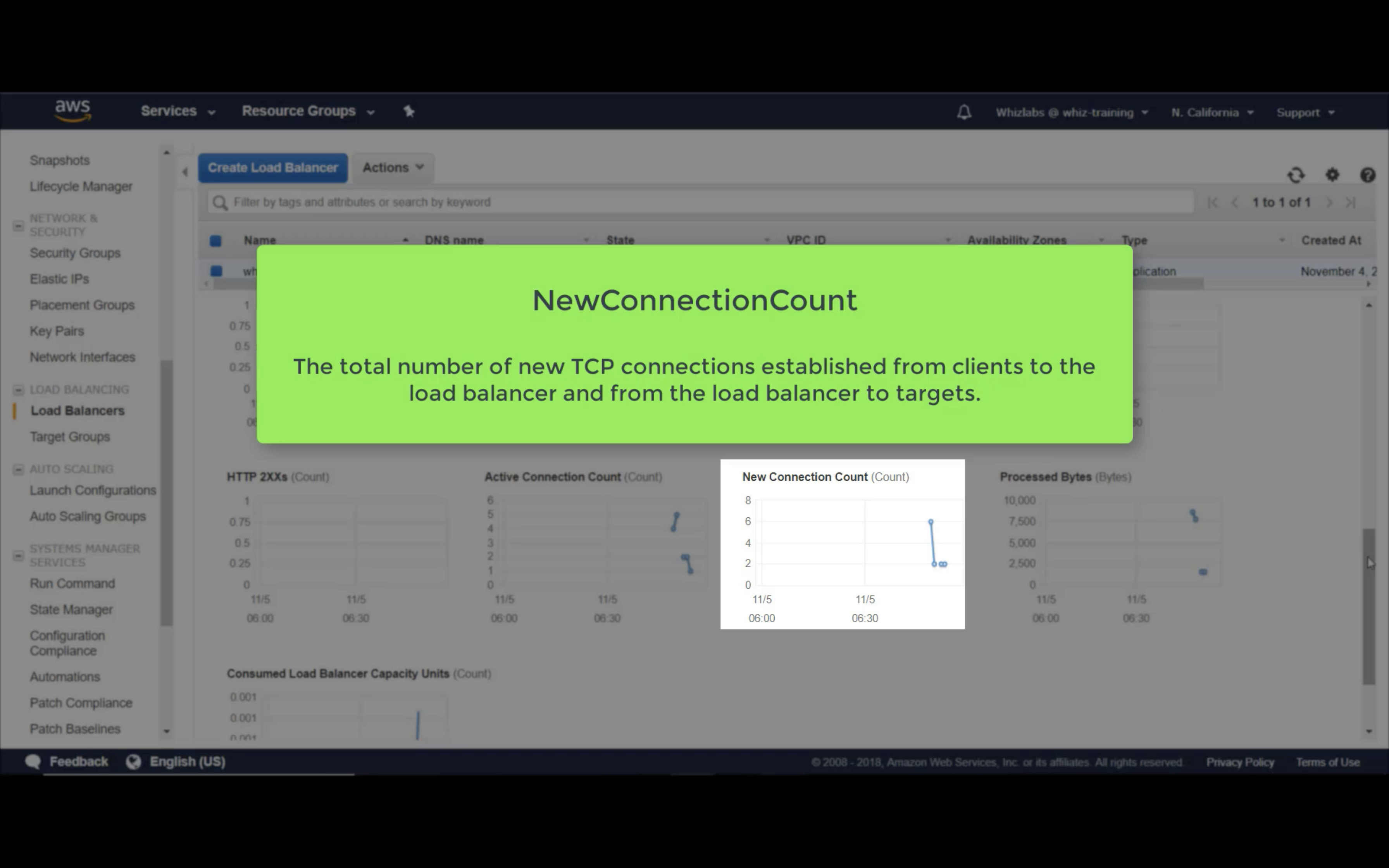

New Connection Count (Count)

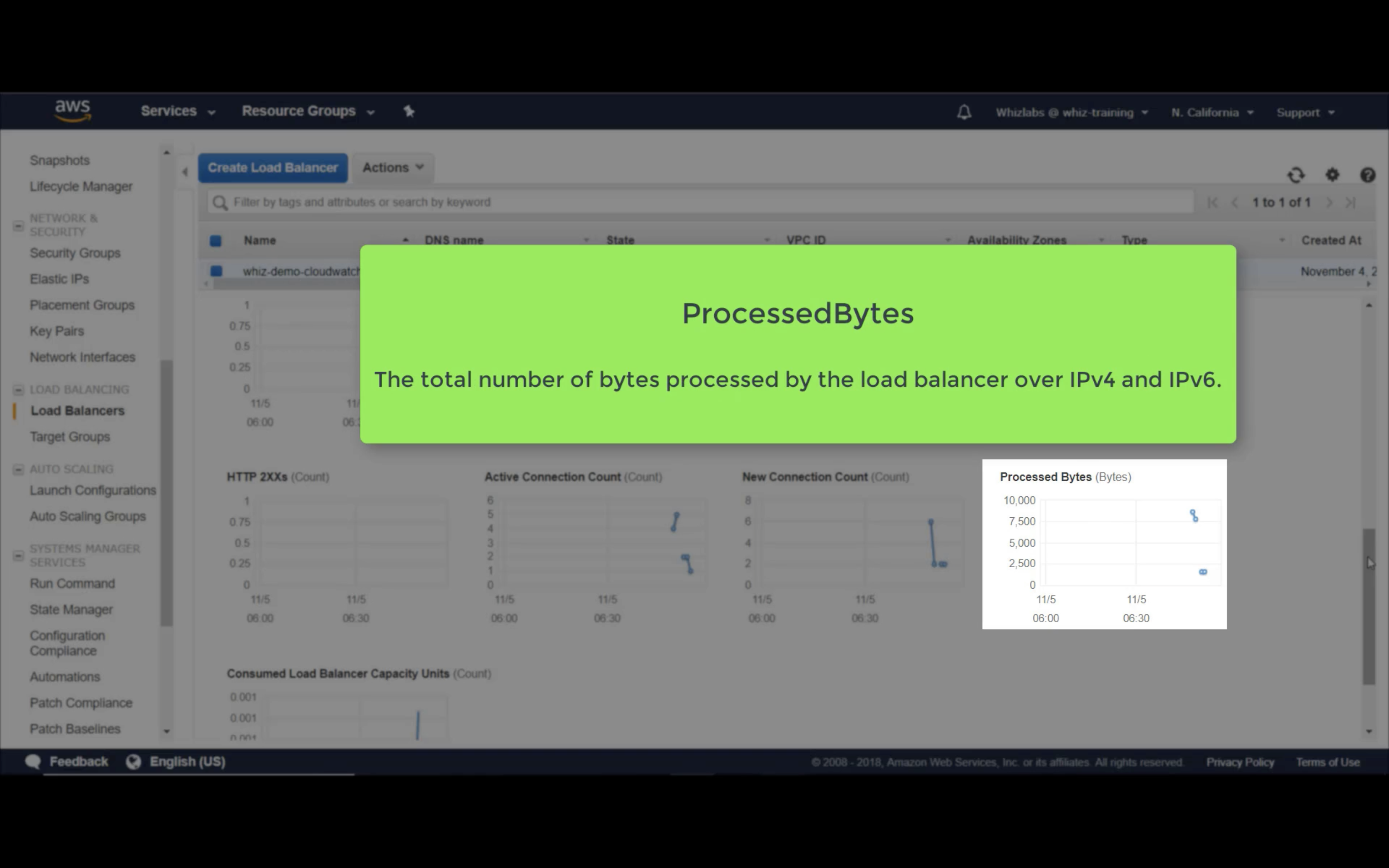

Processed Bytes

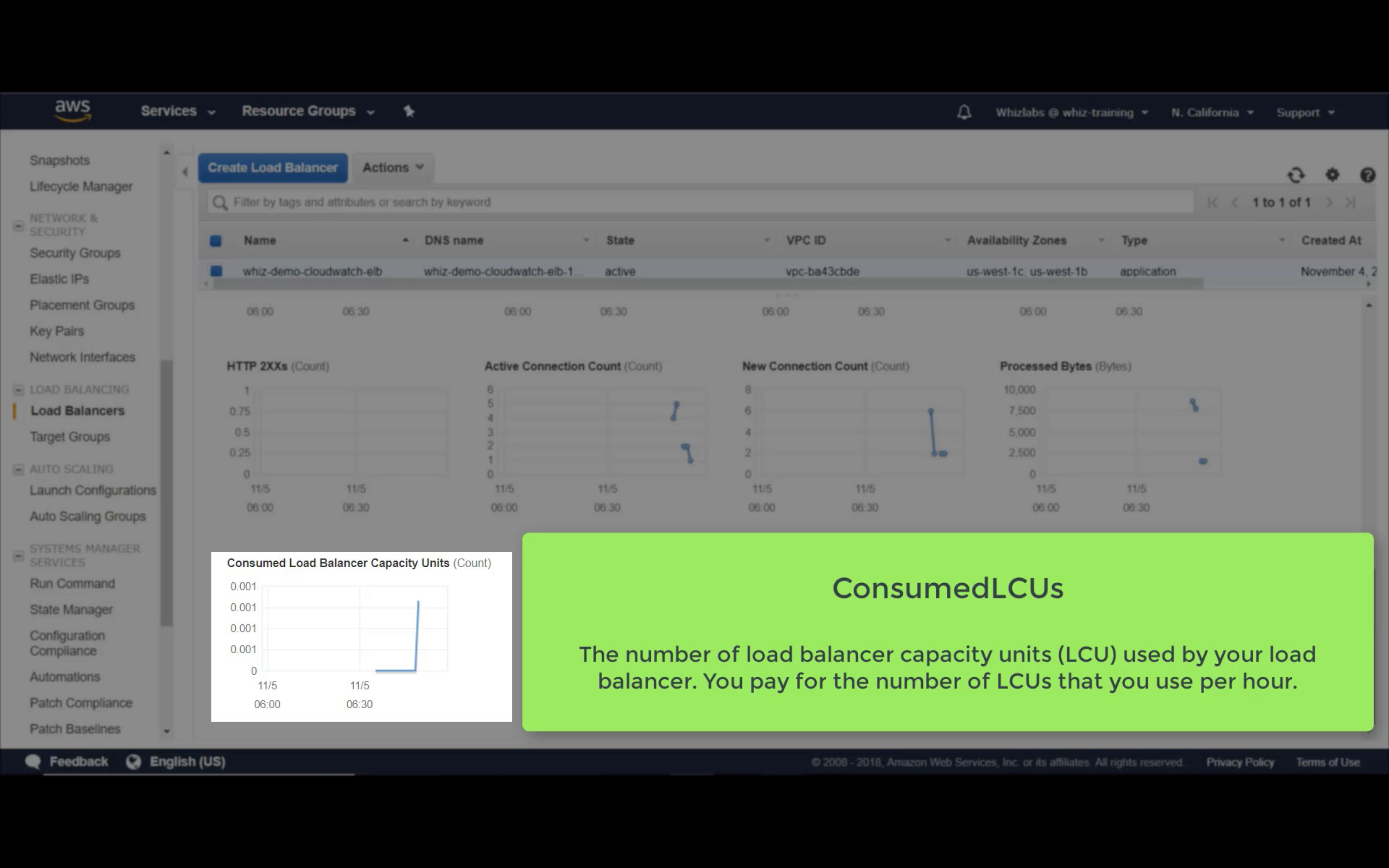

Consumed LCUs

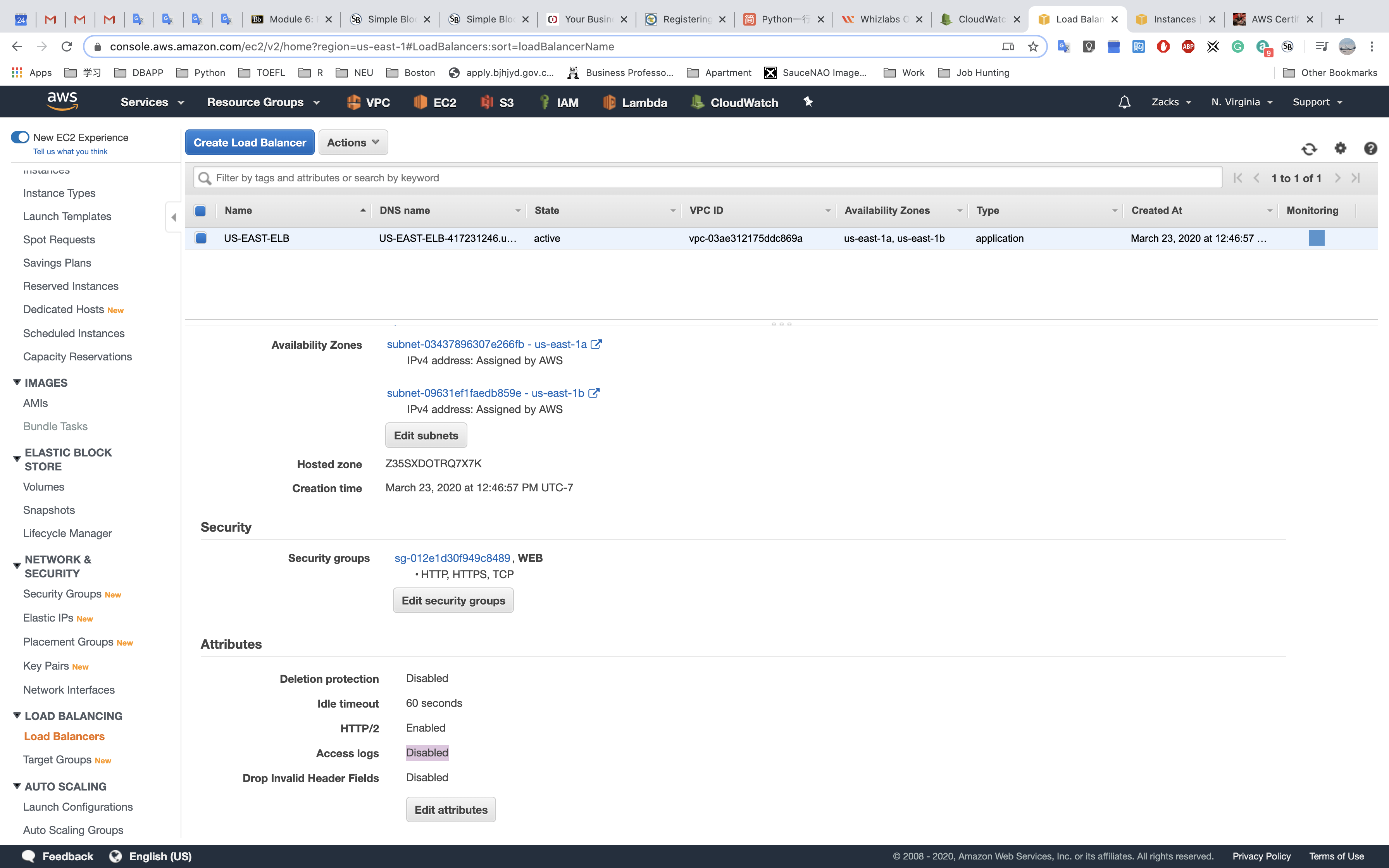

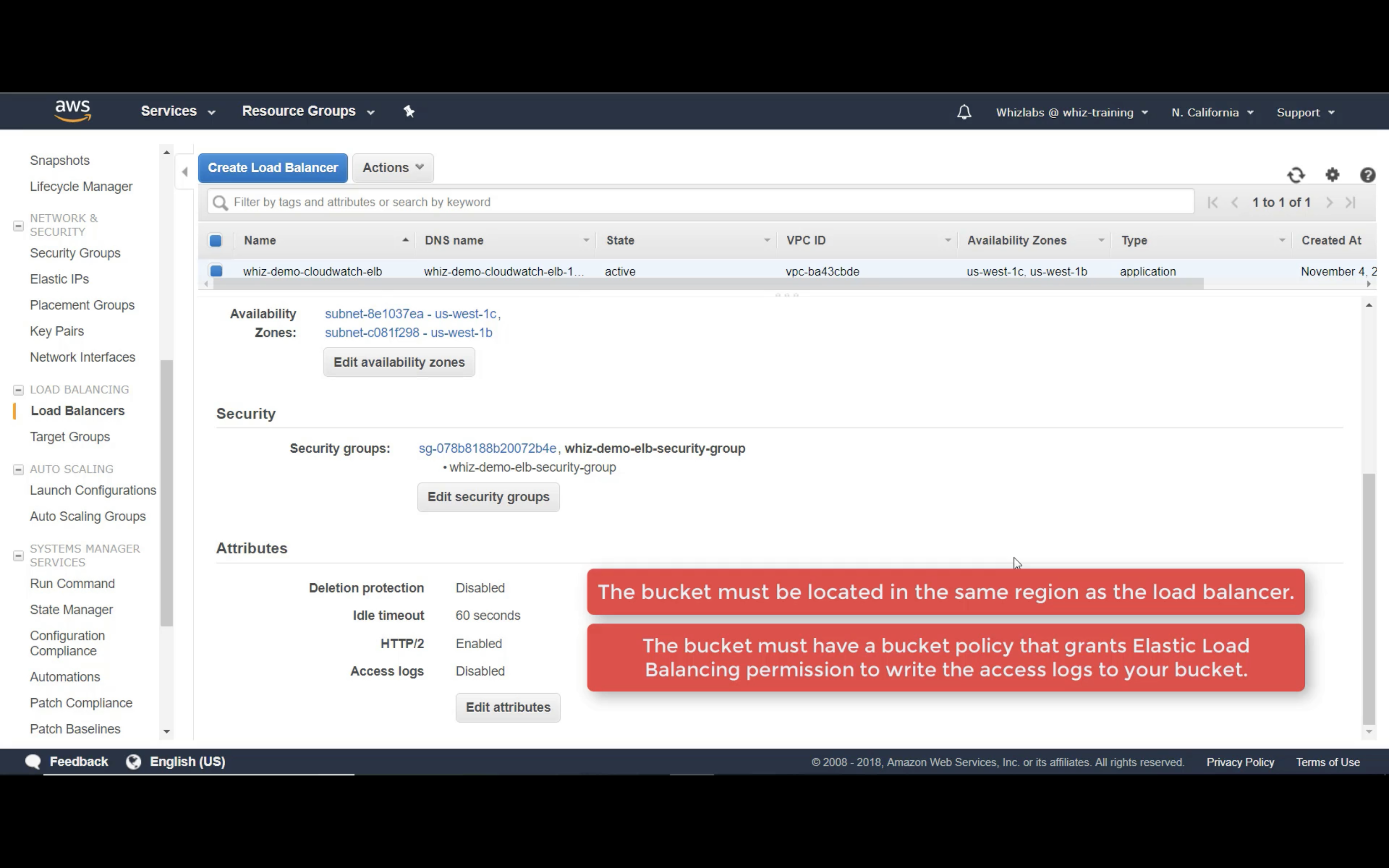

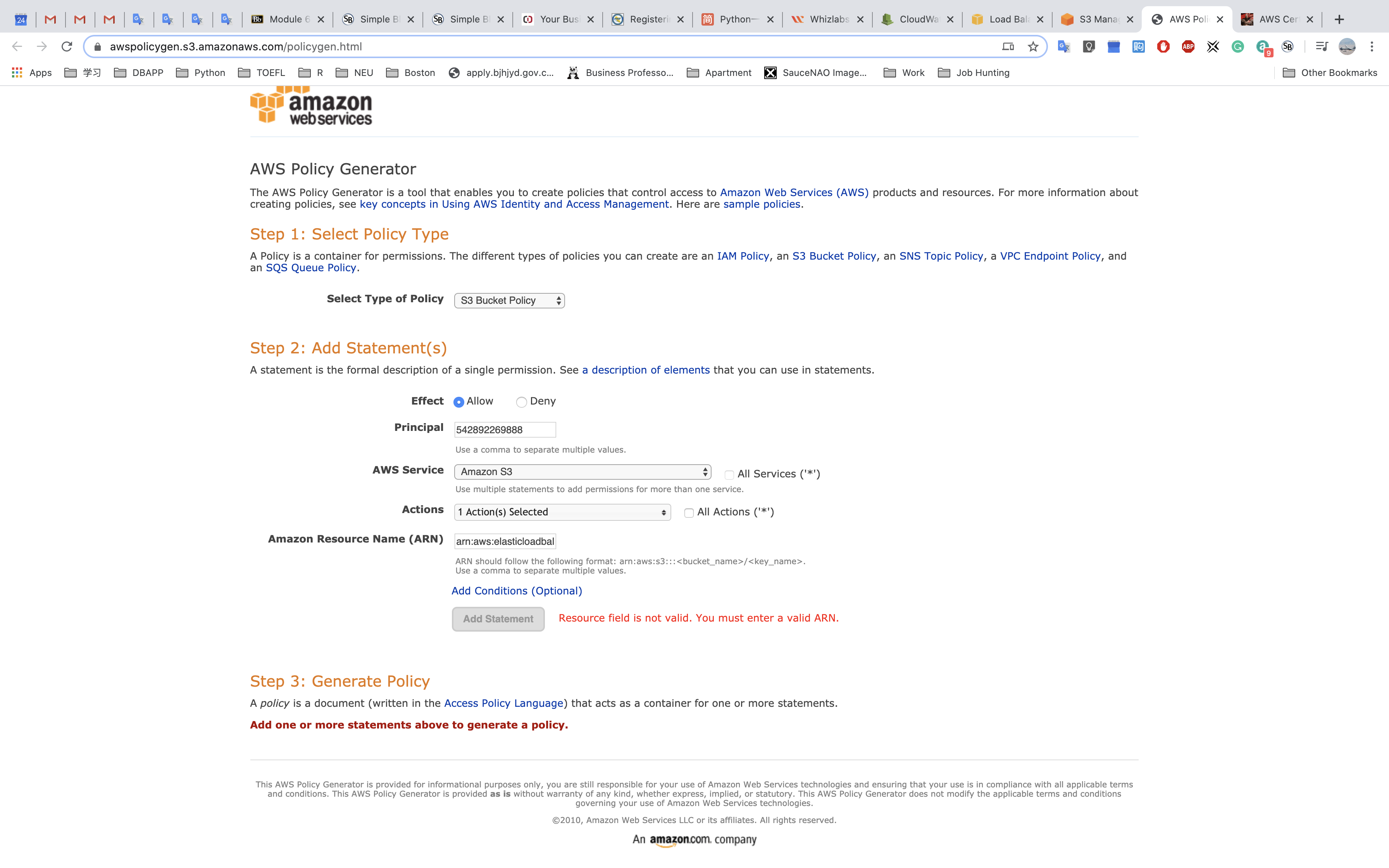

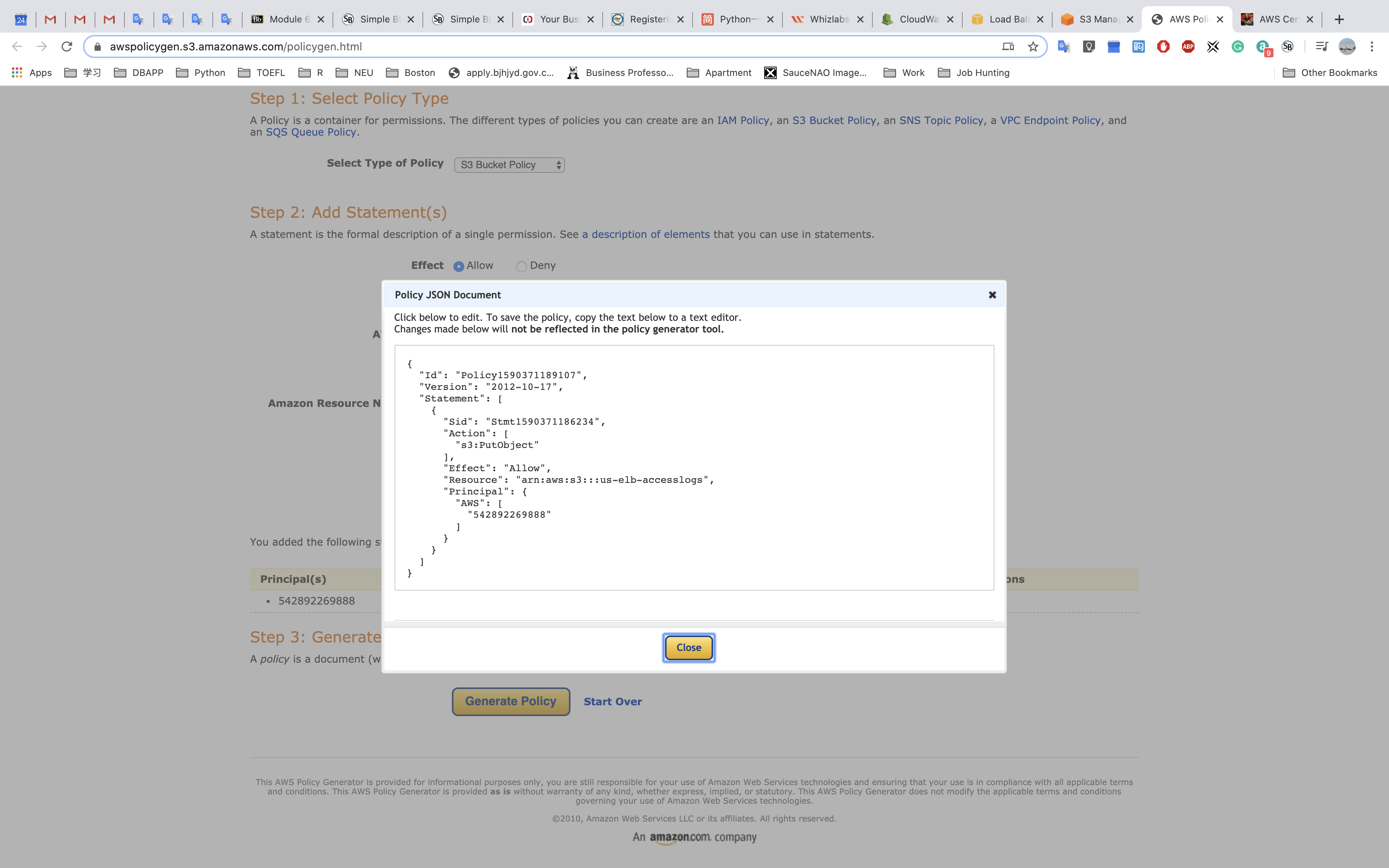

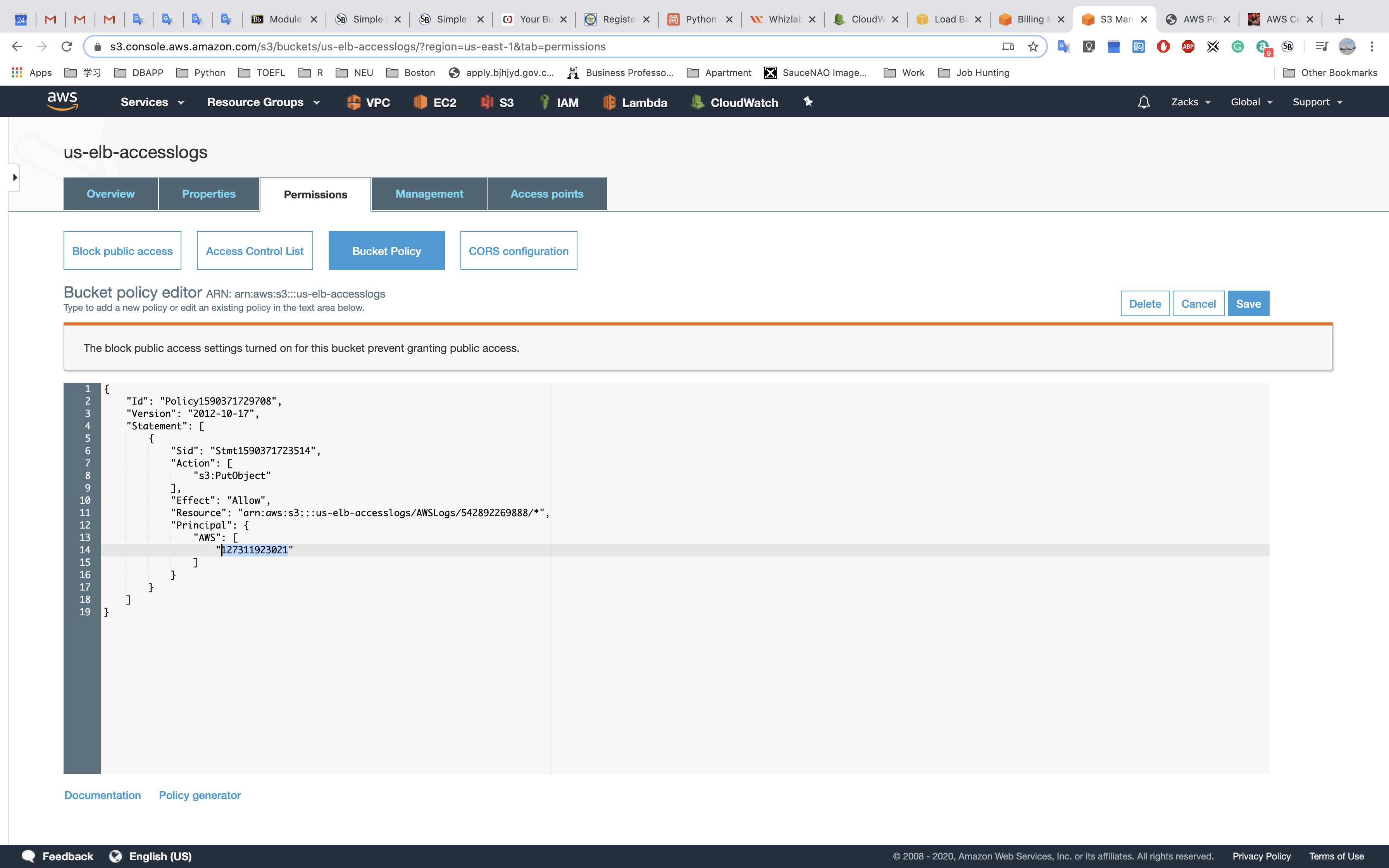

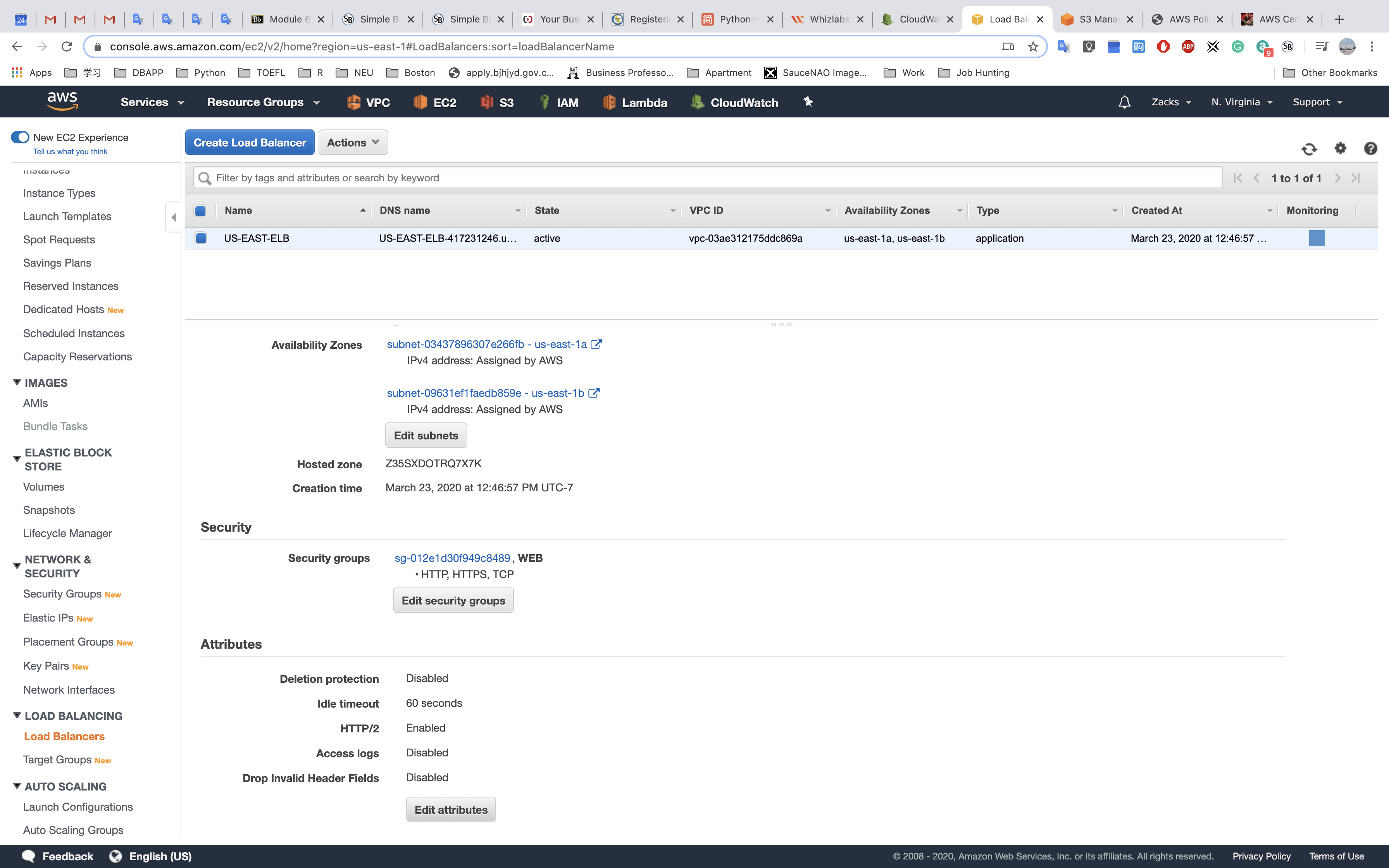

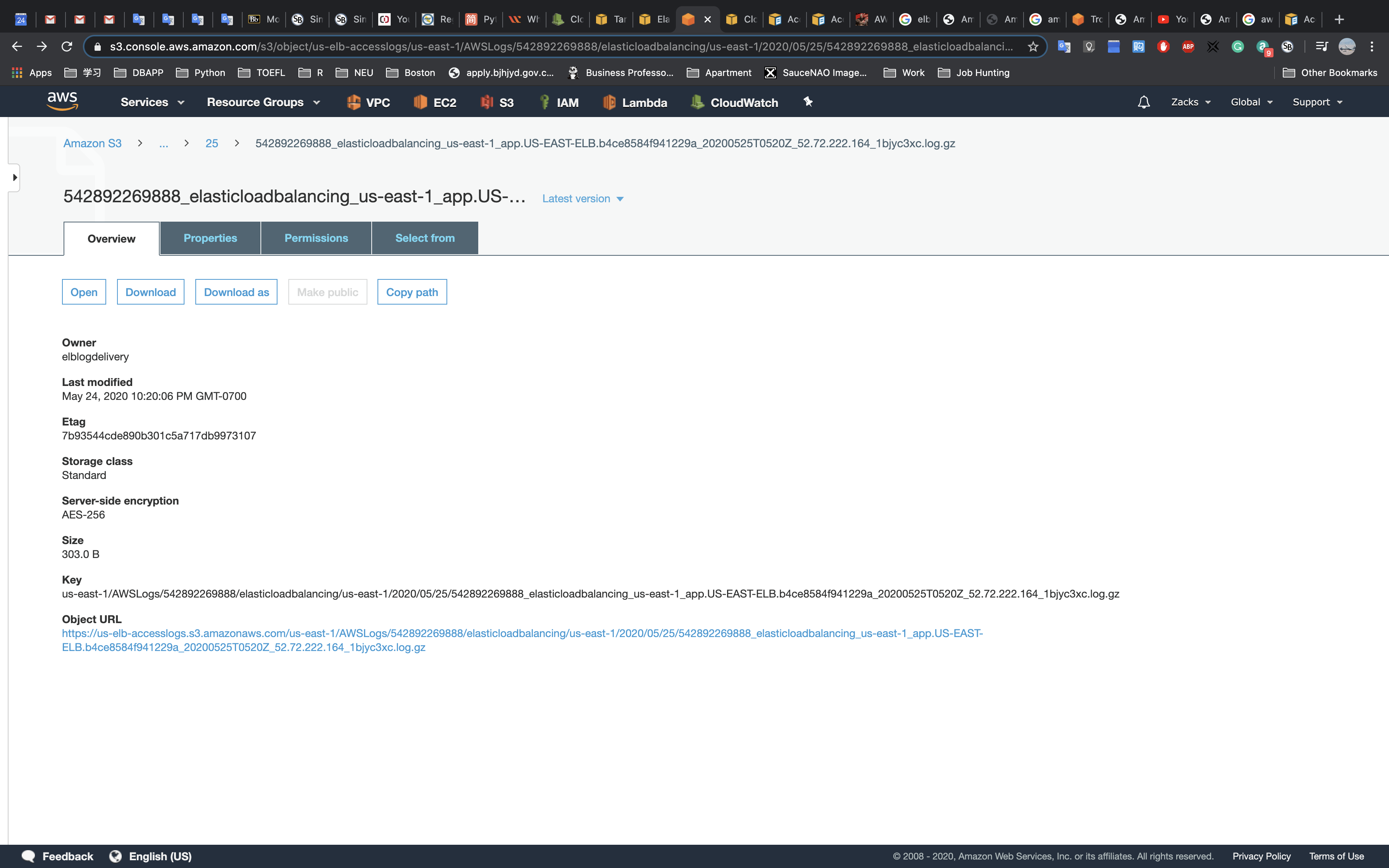

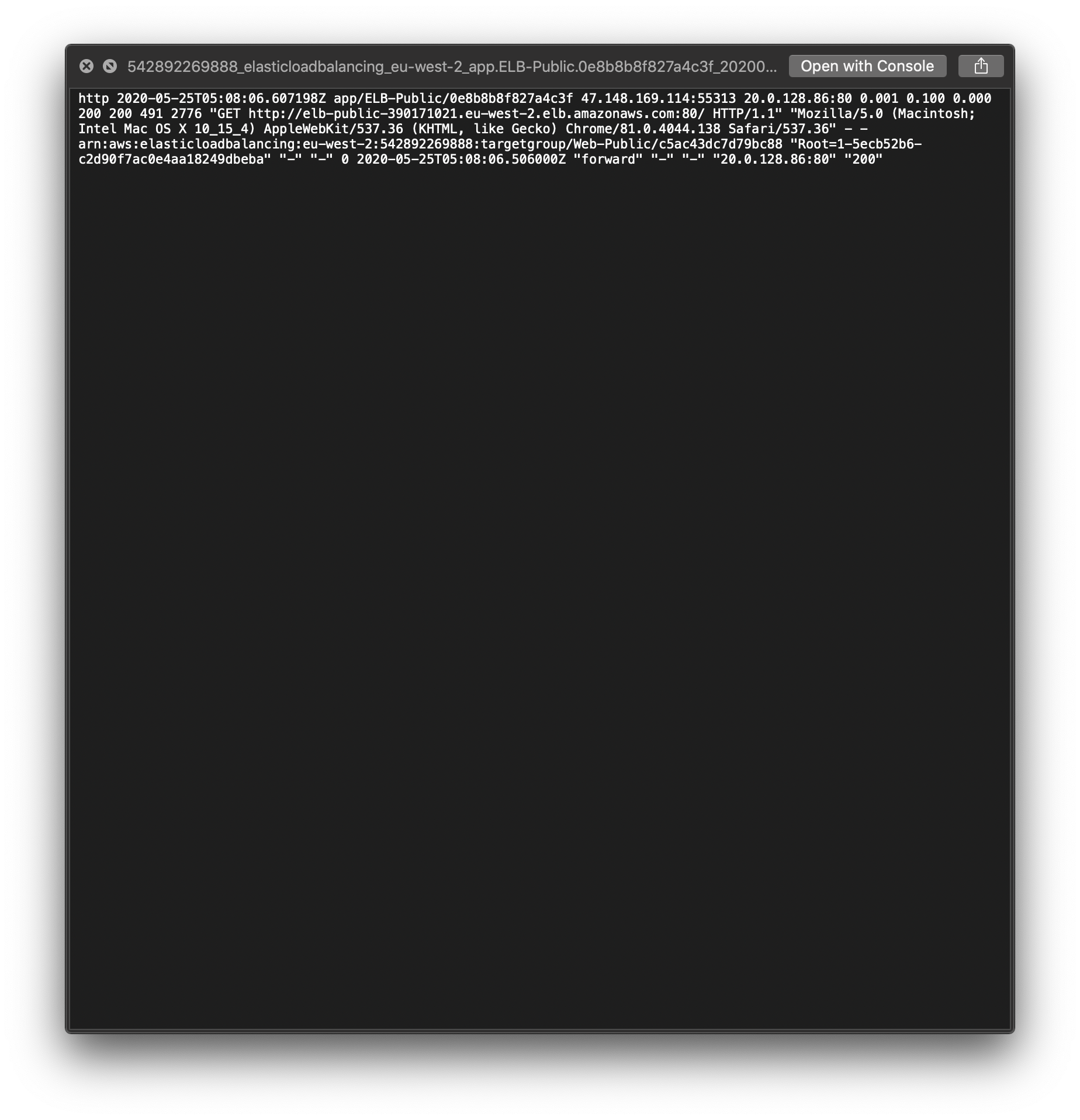

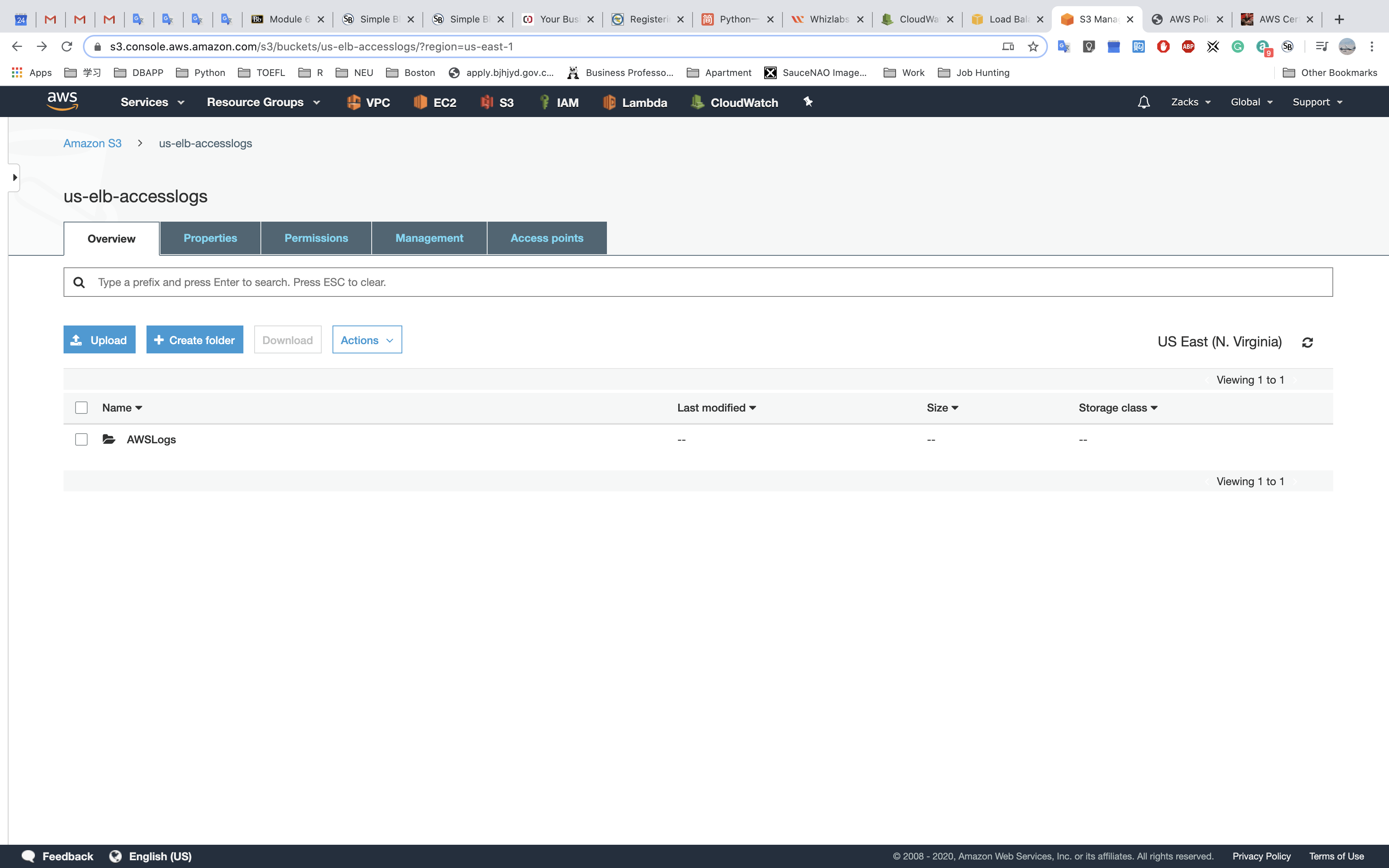

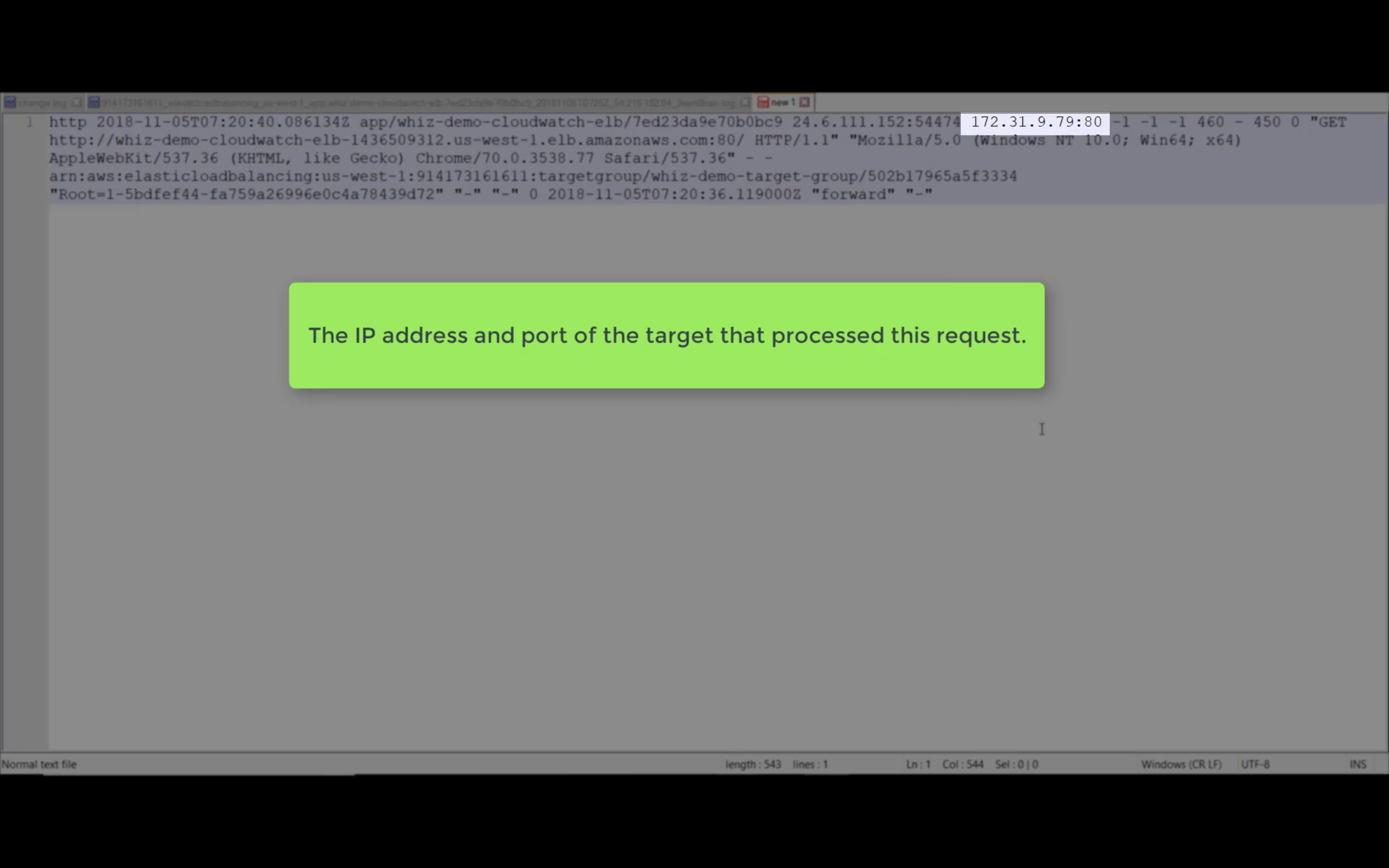

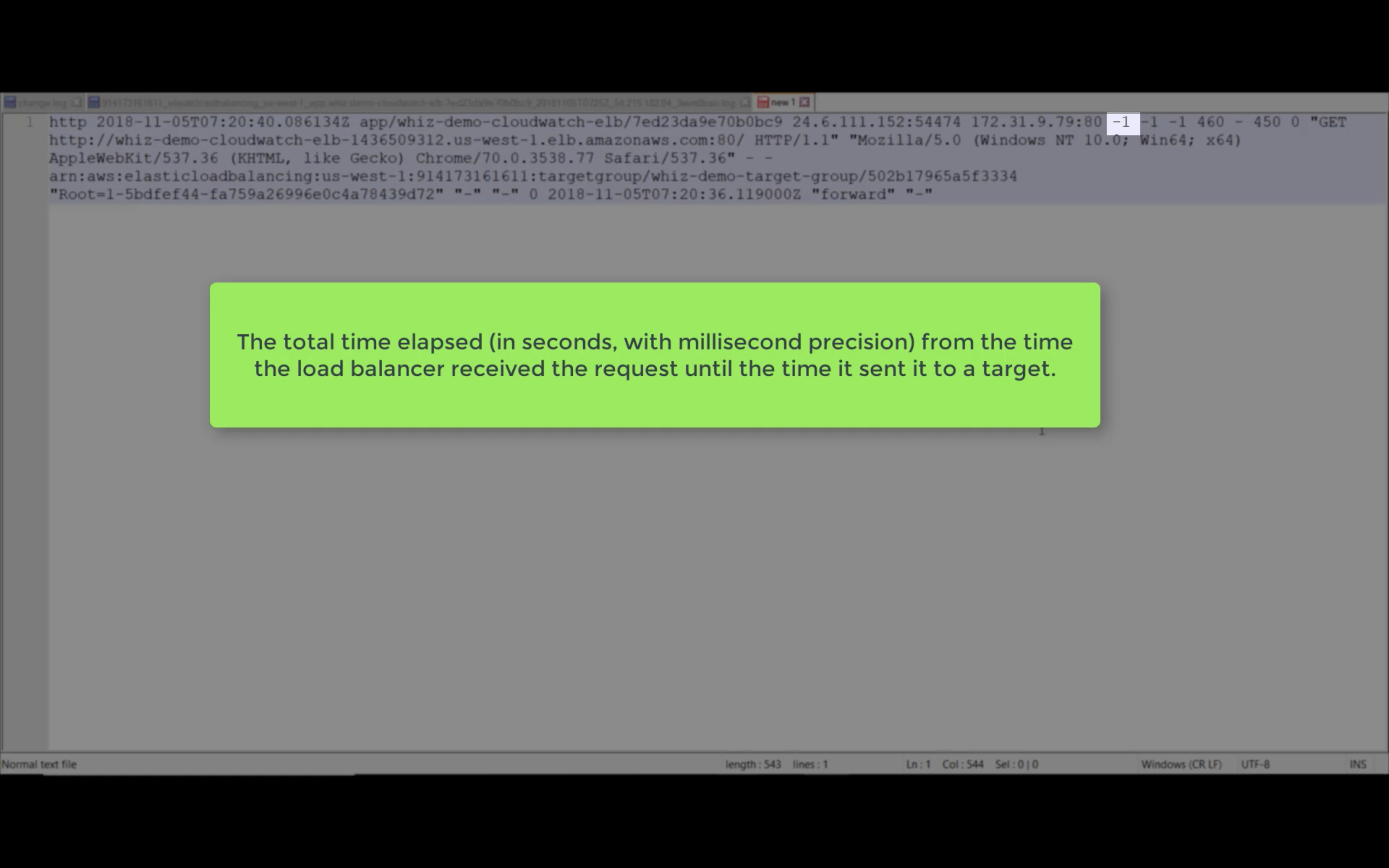

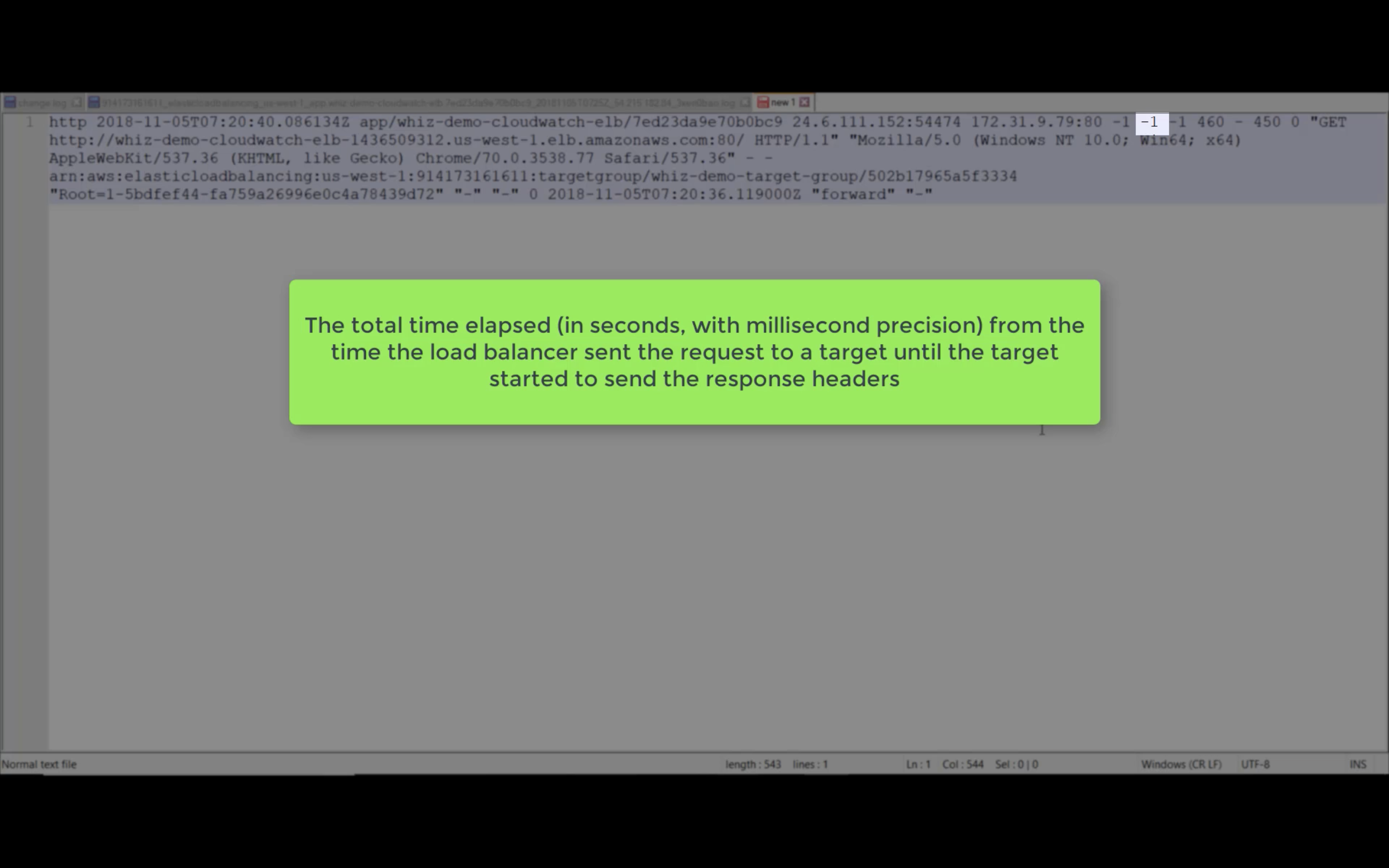

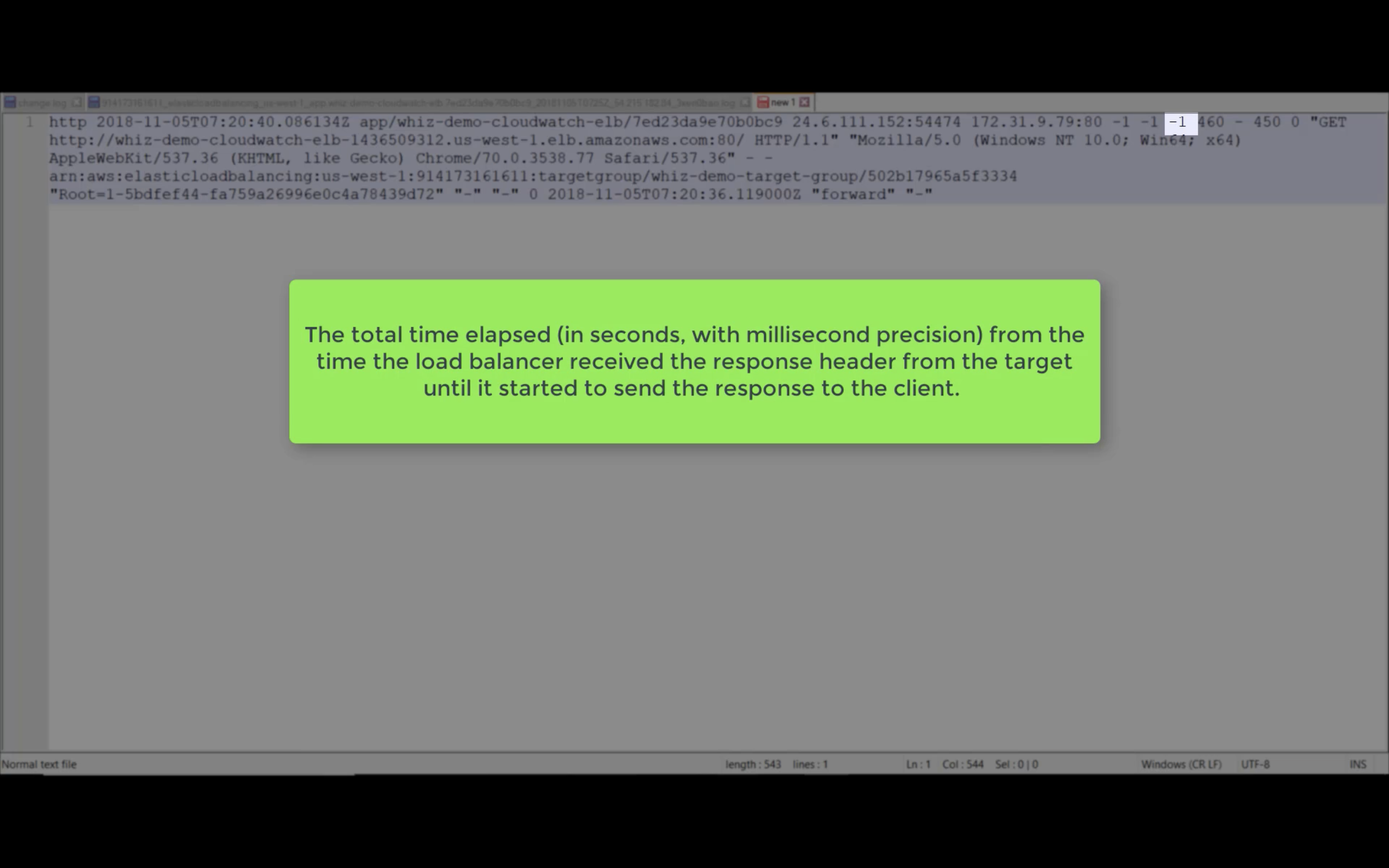

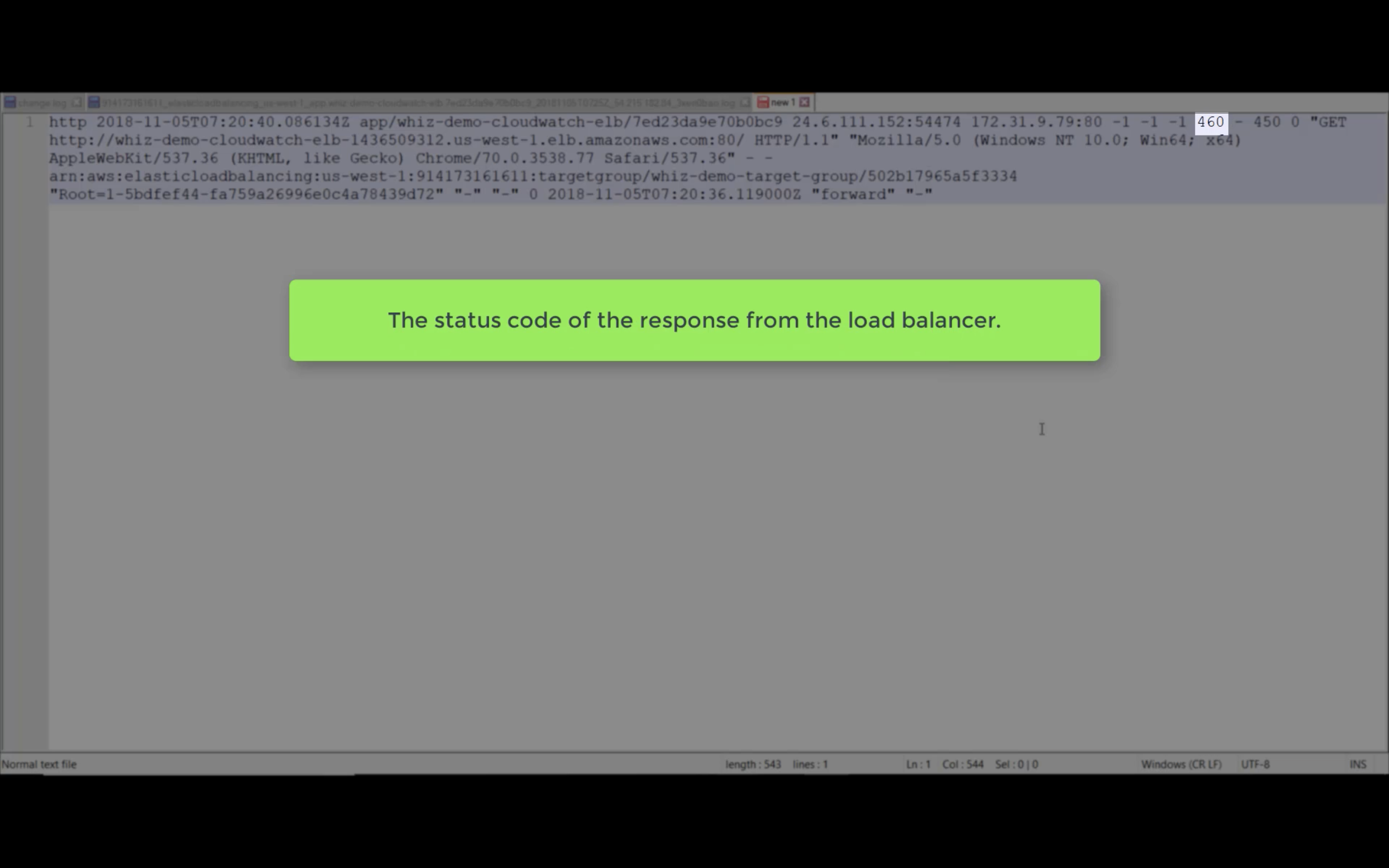

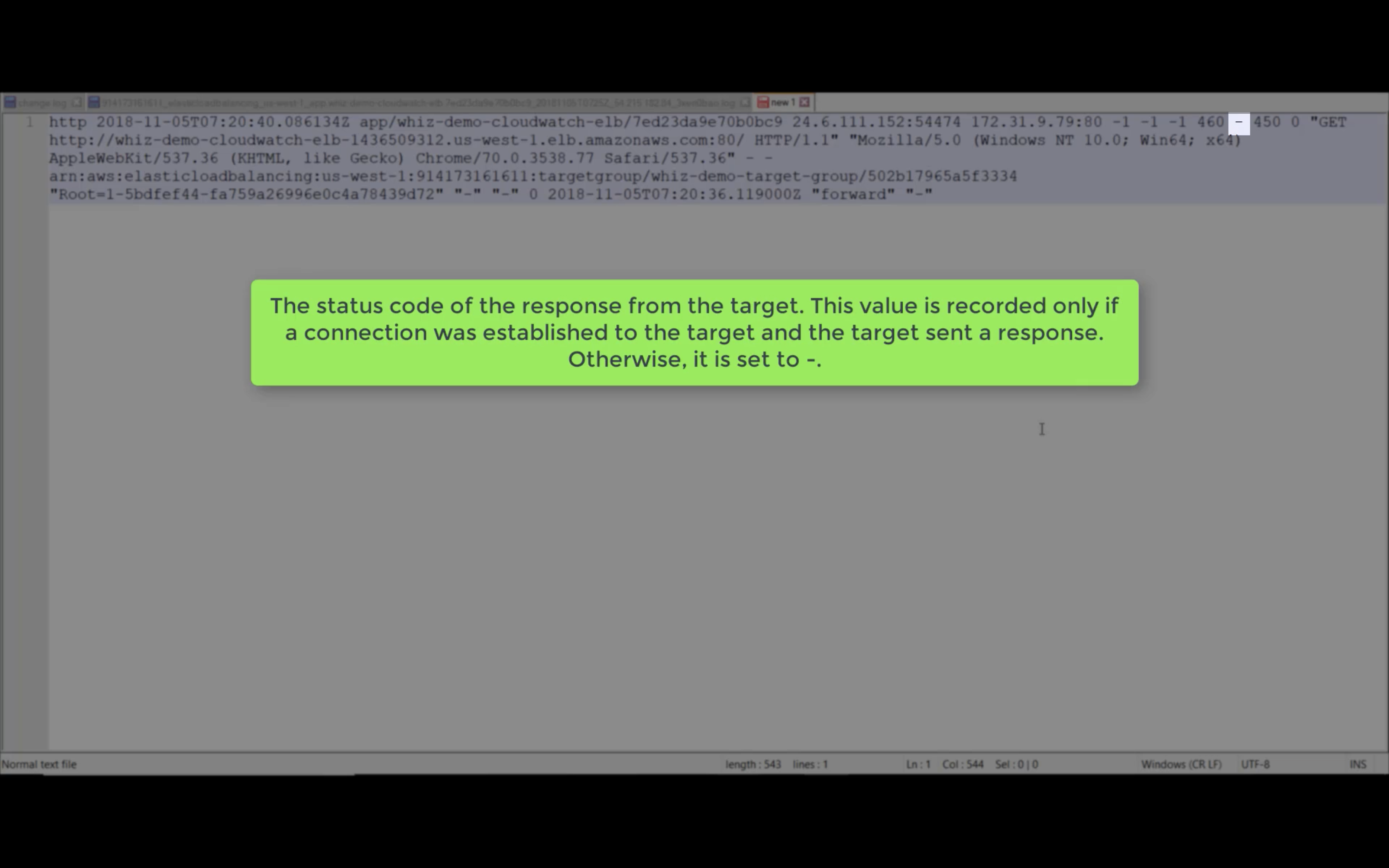

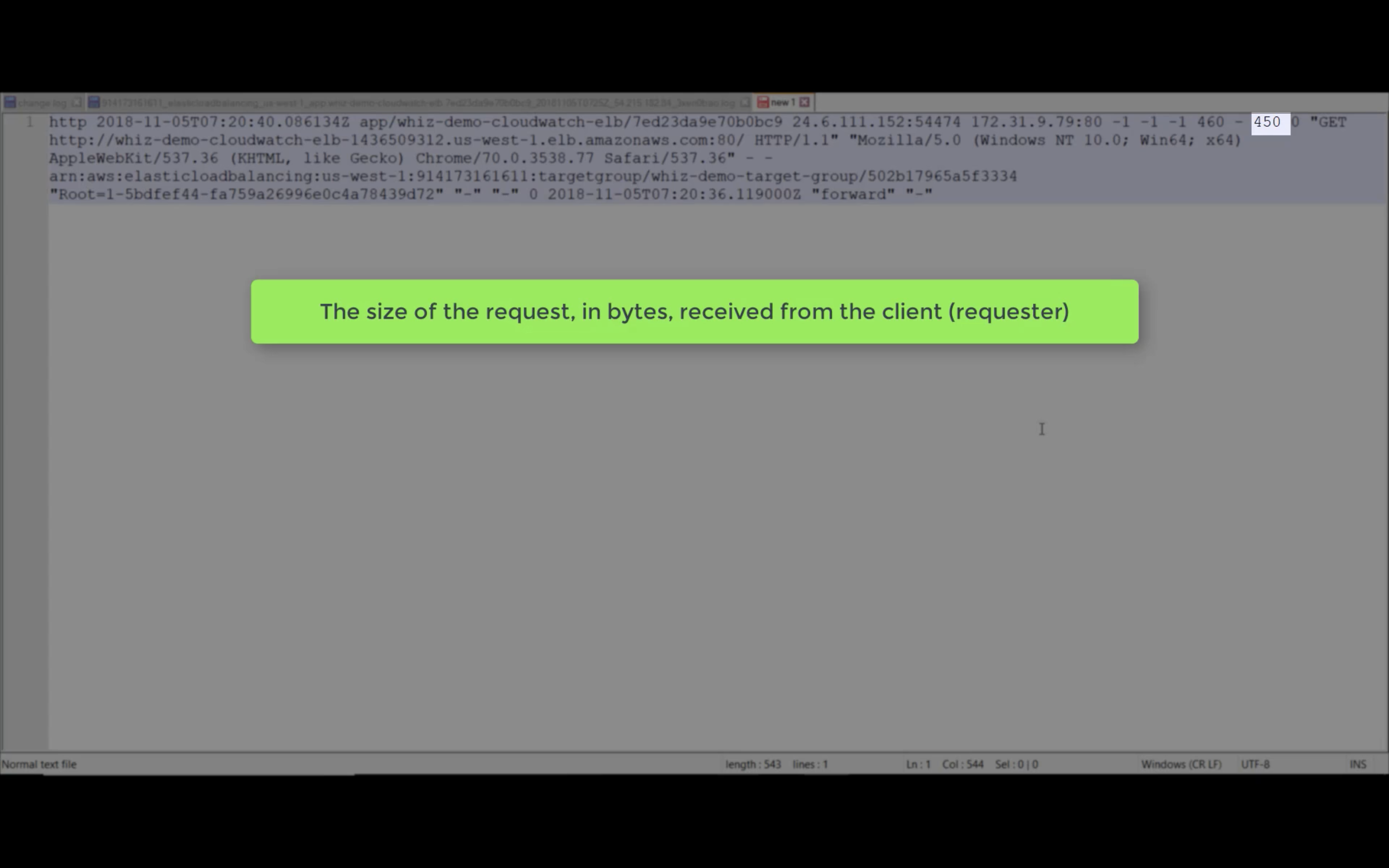

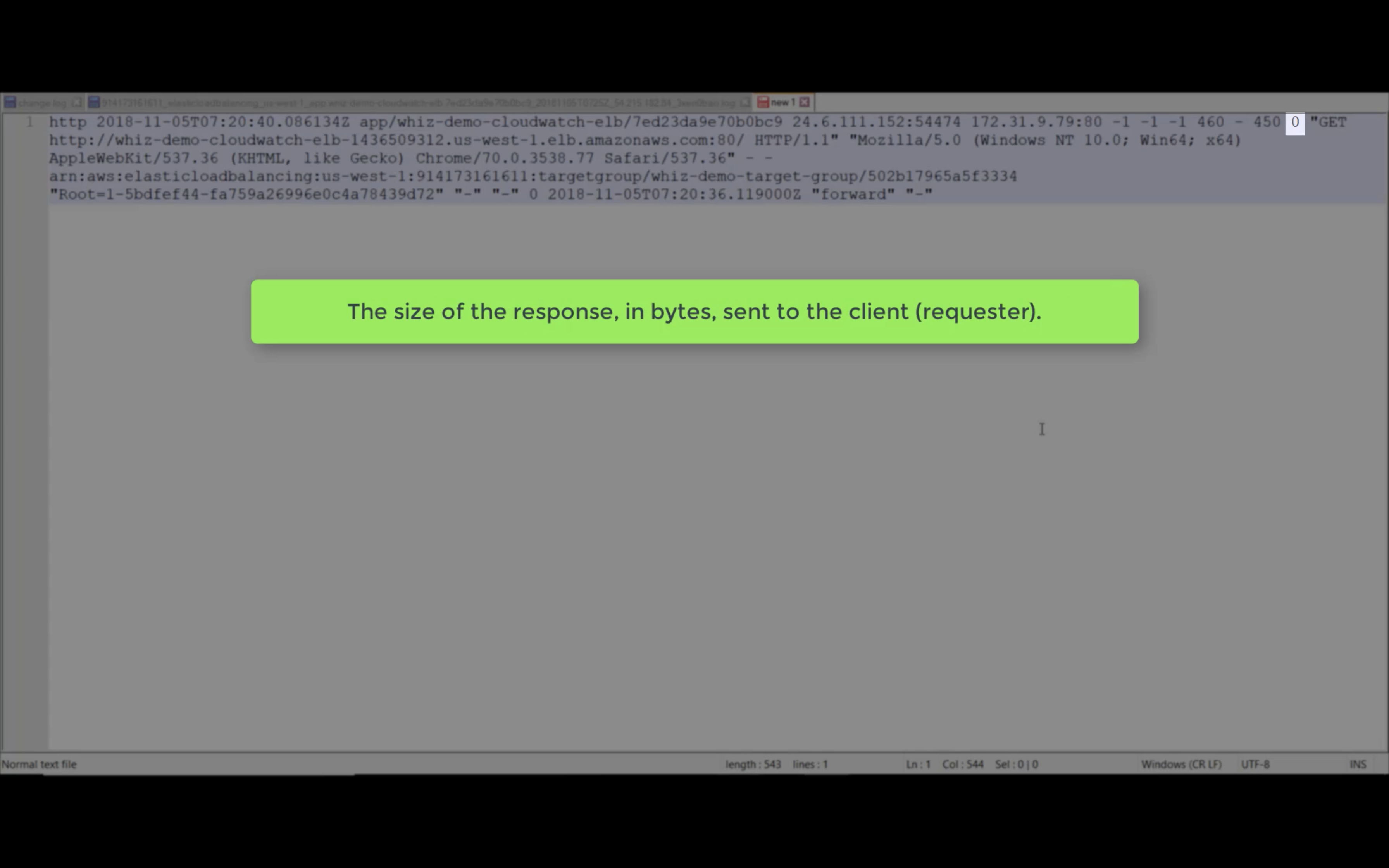

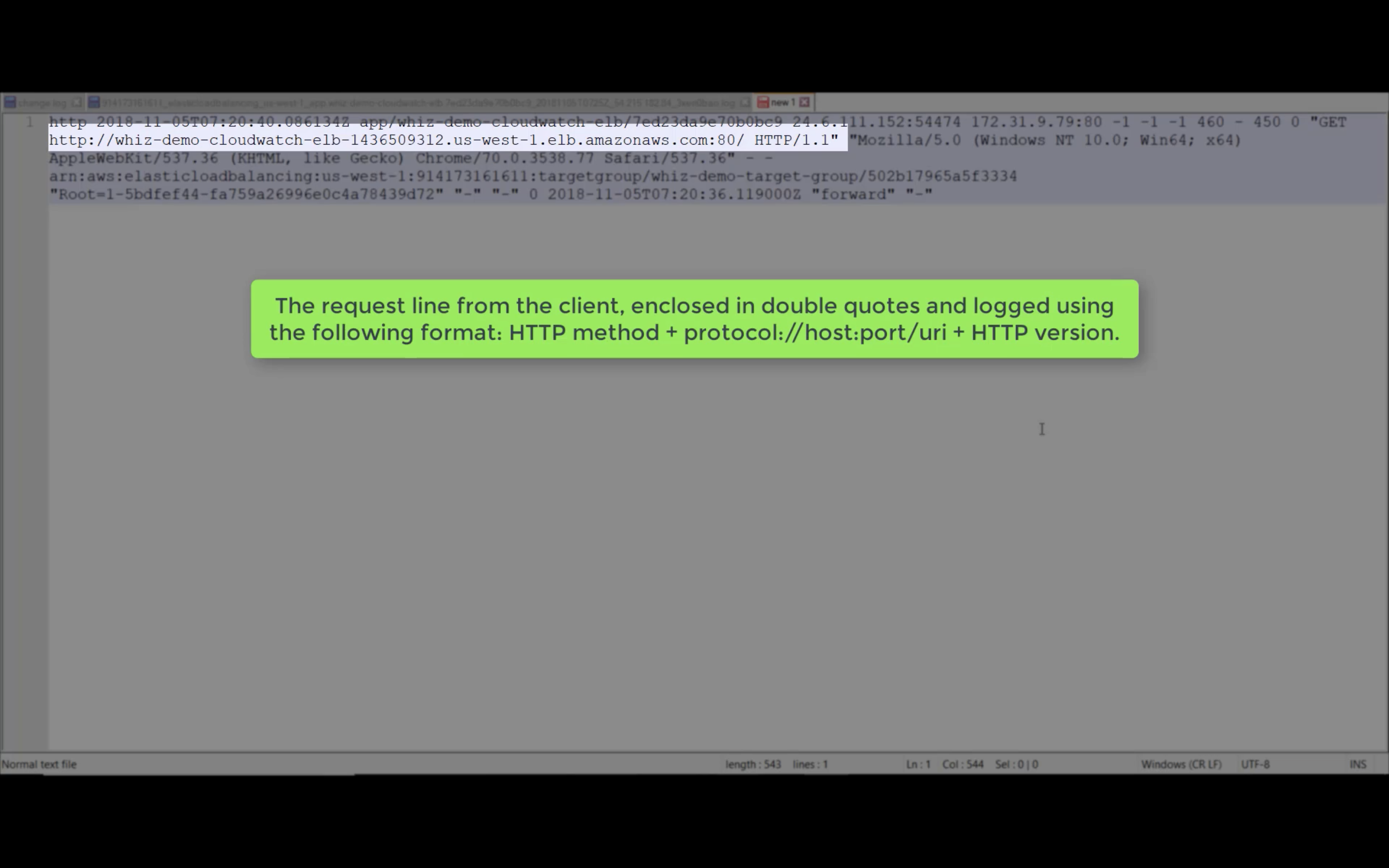

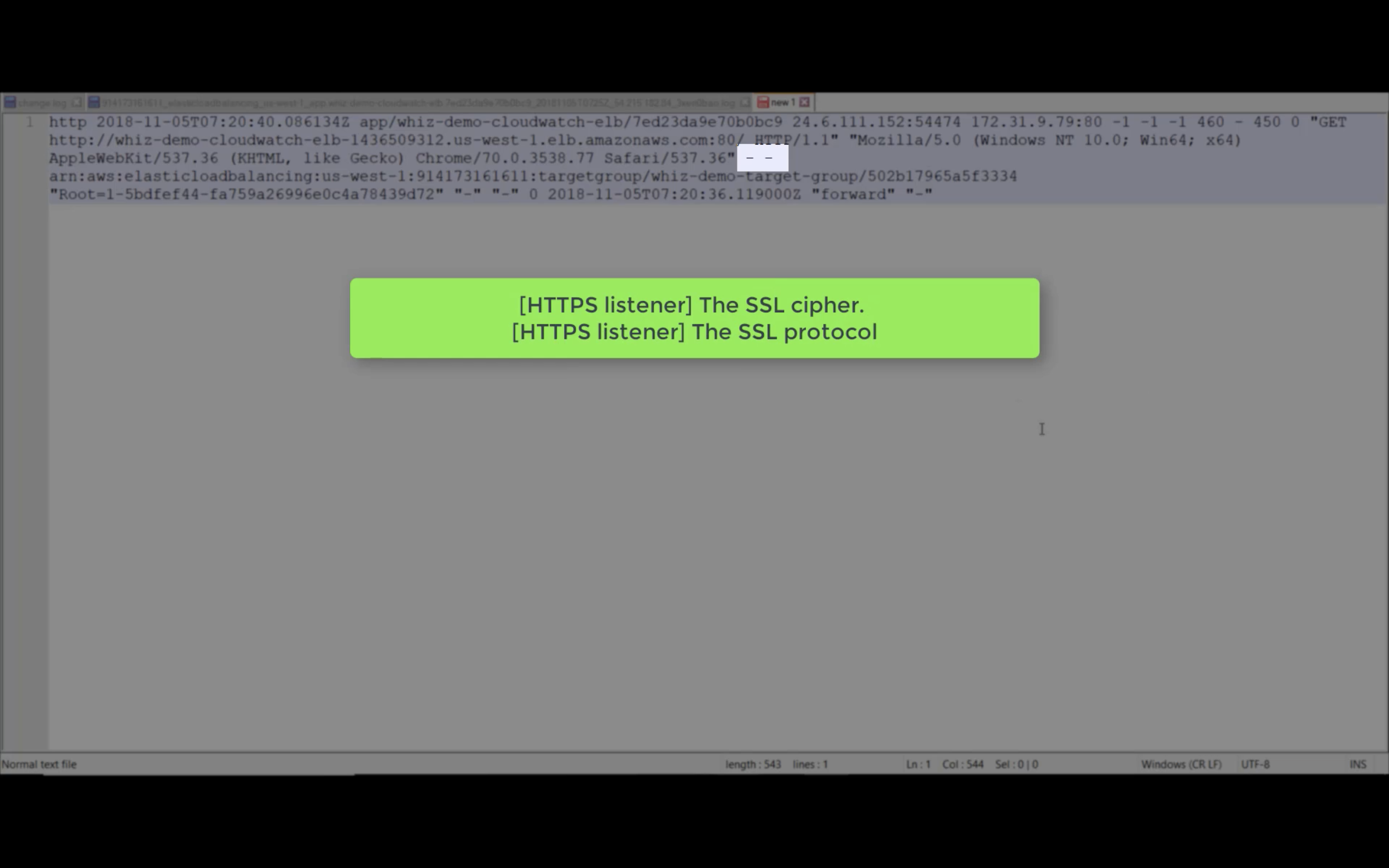

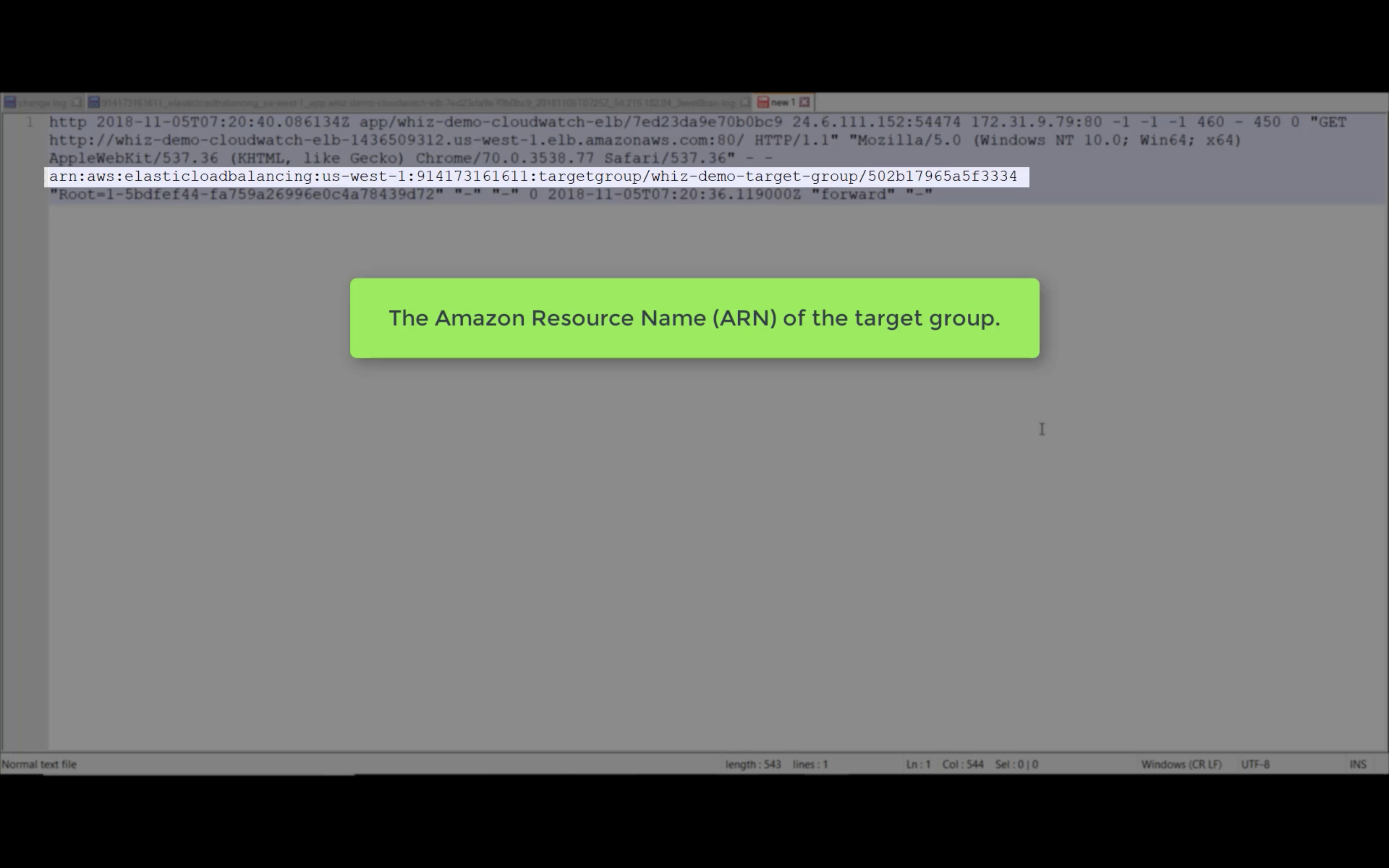

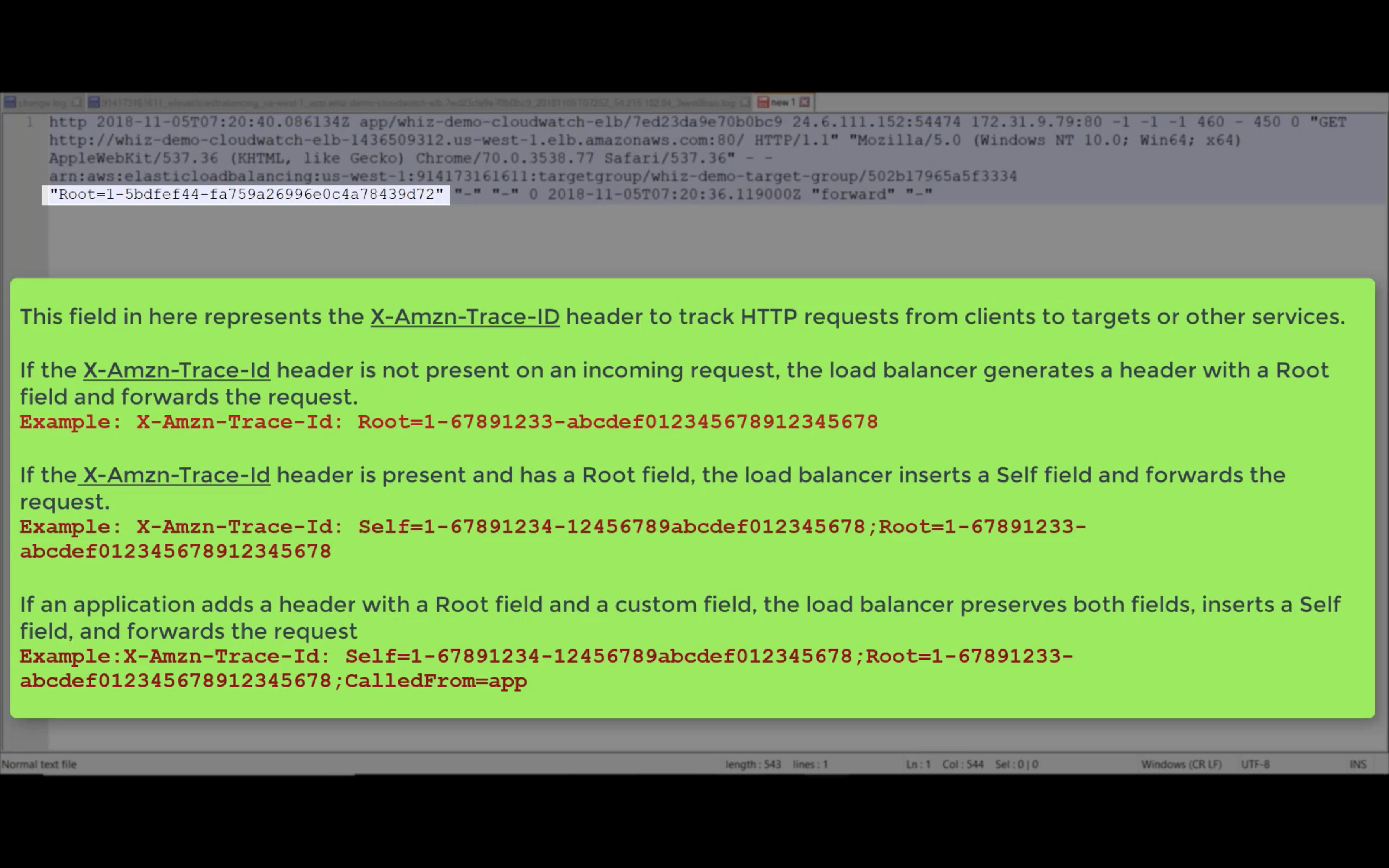

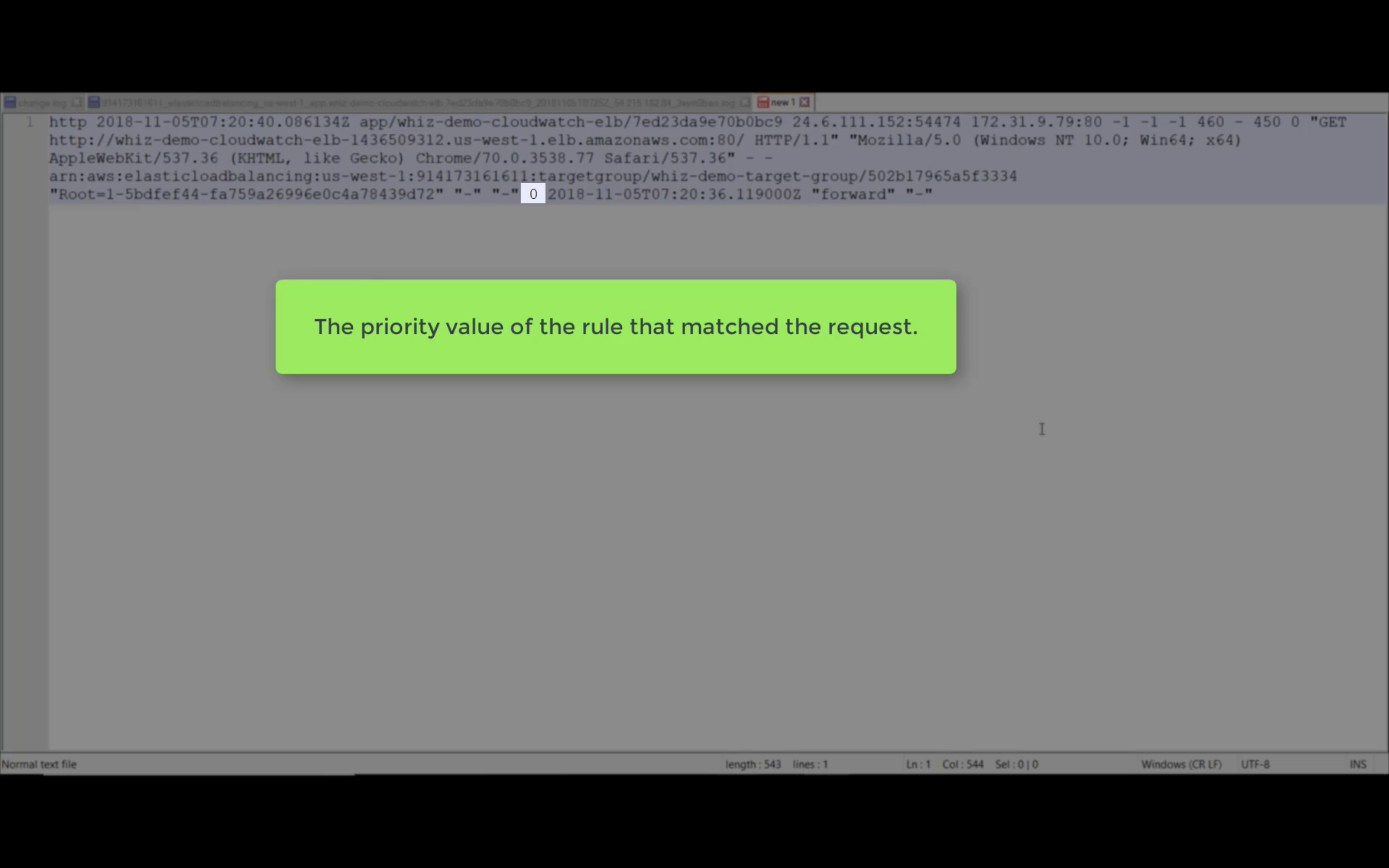

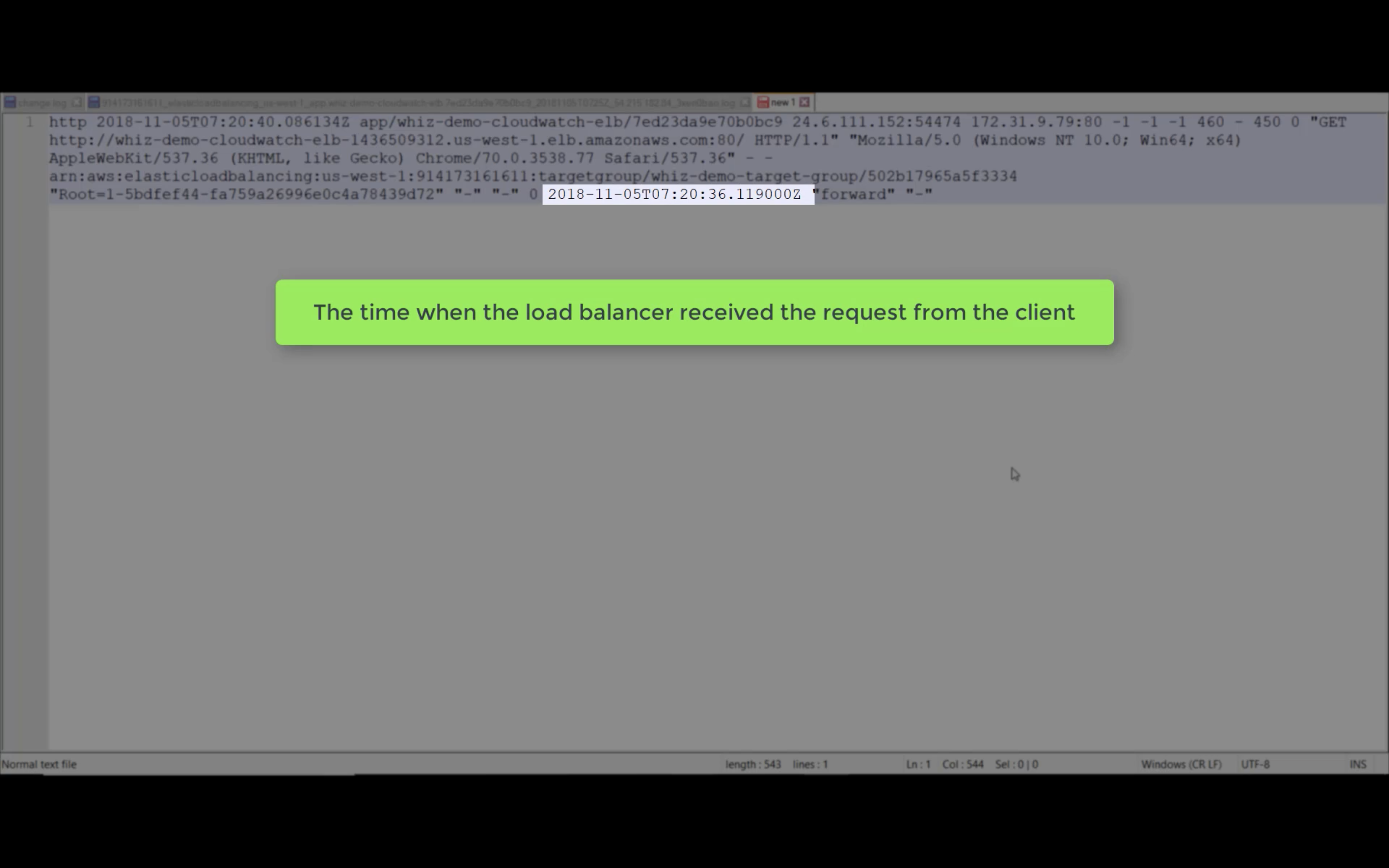

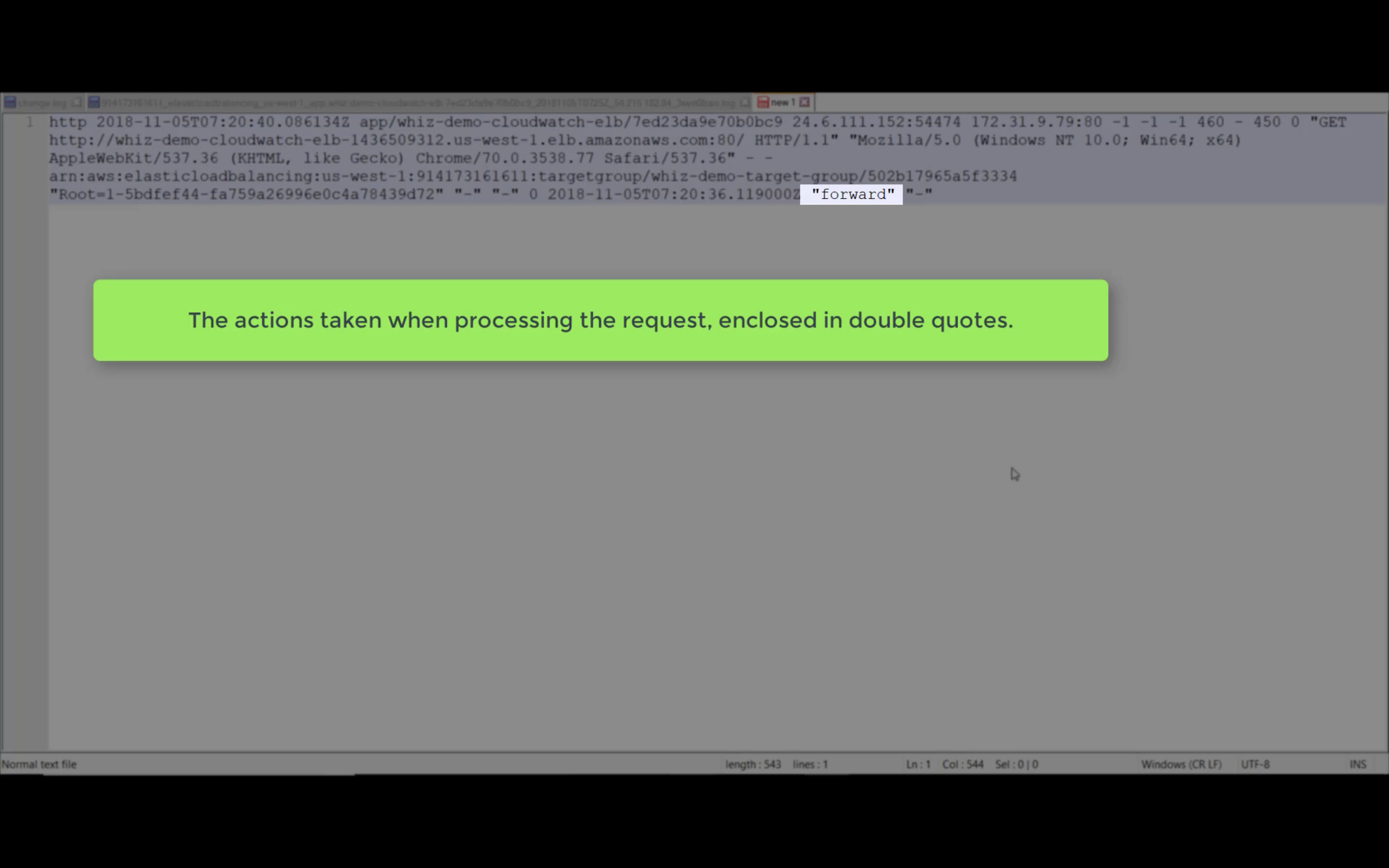

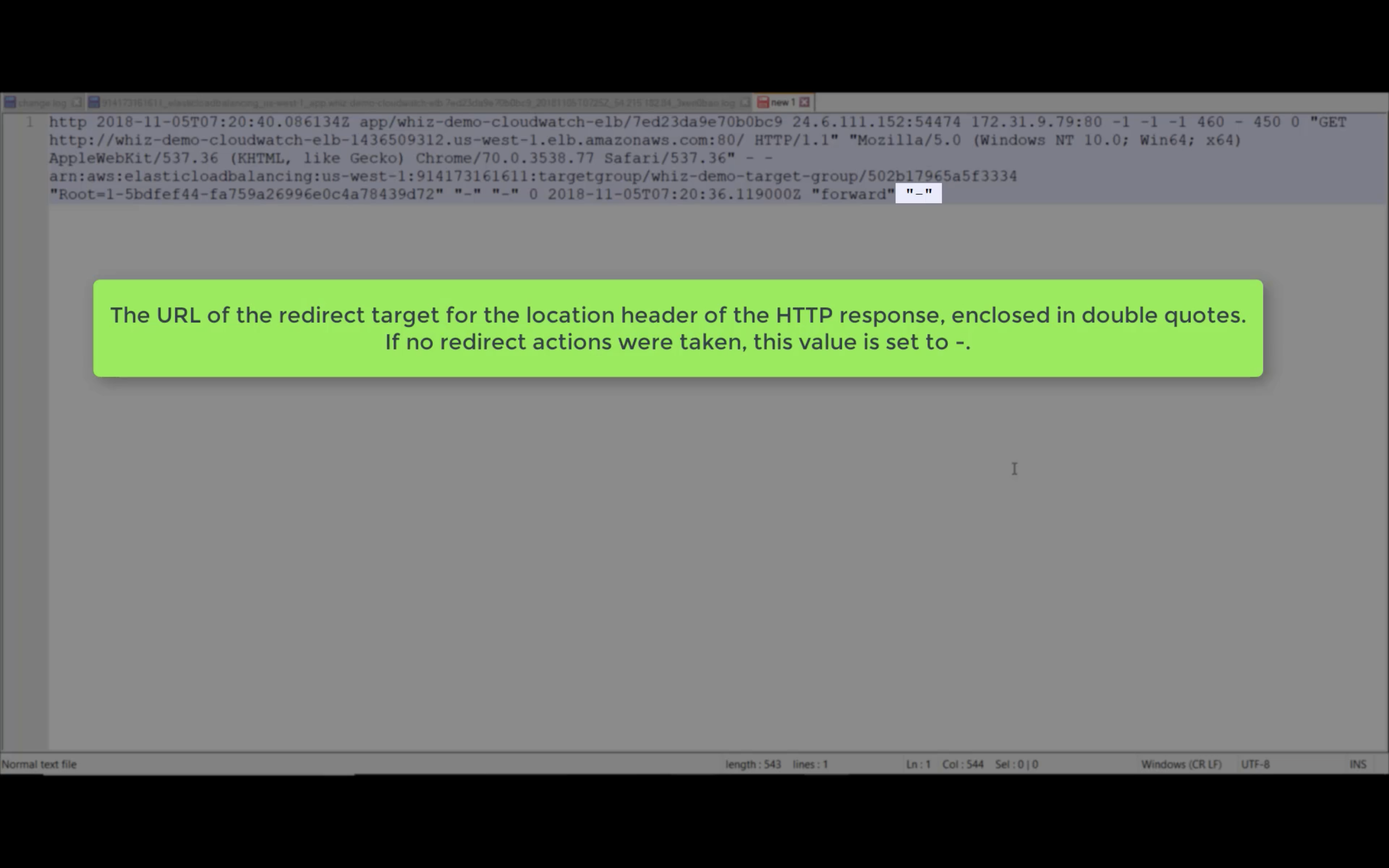

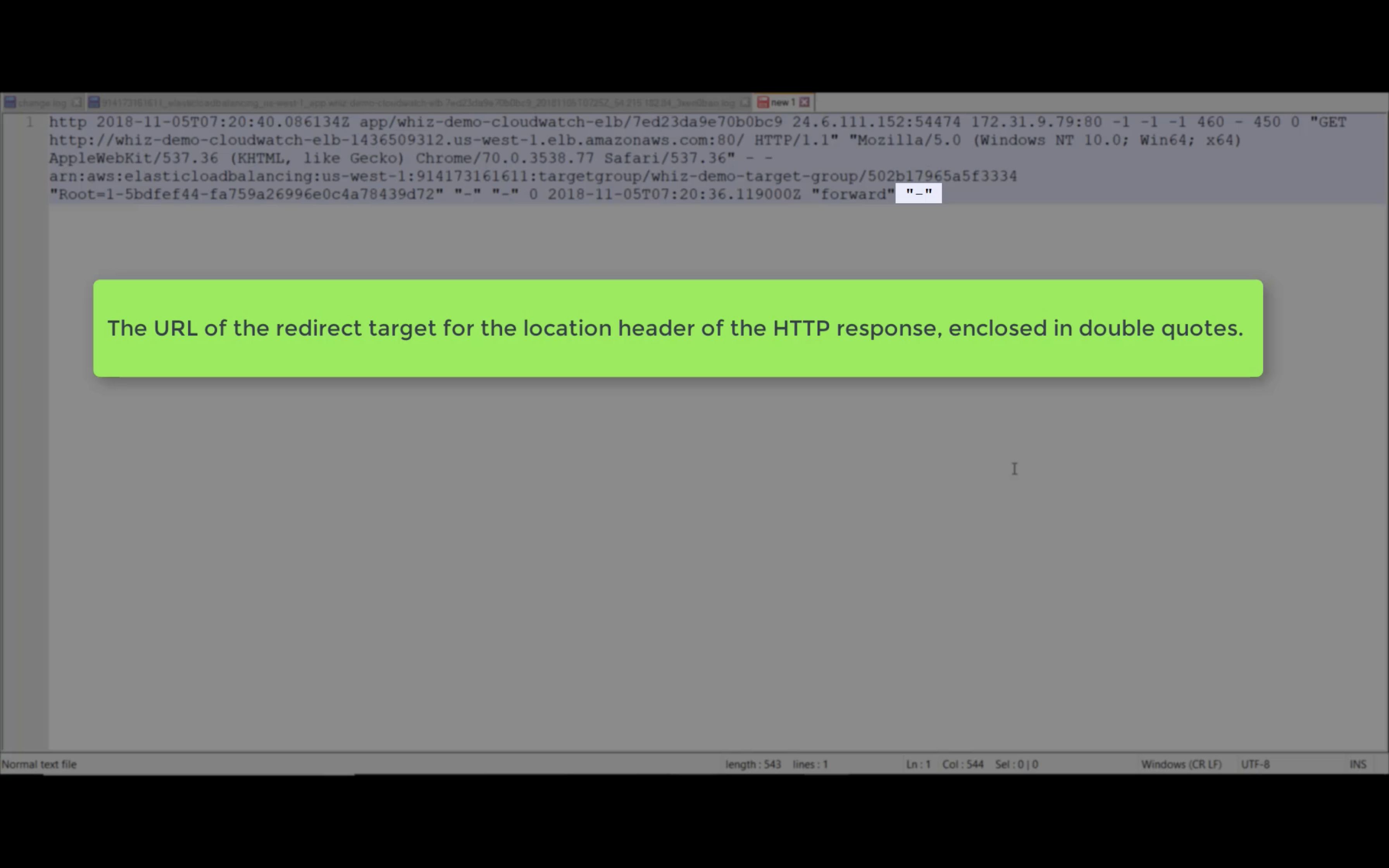

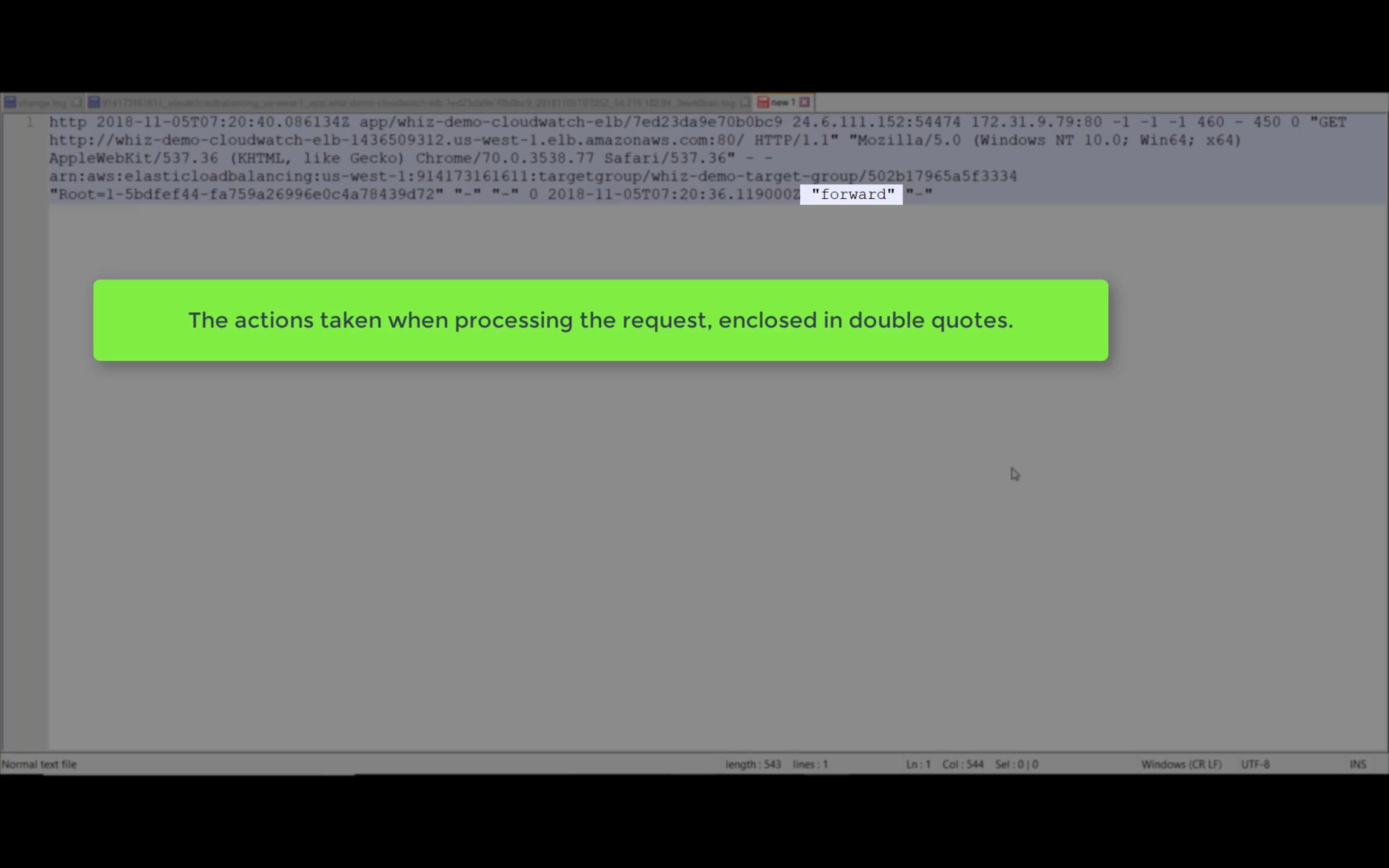

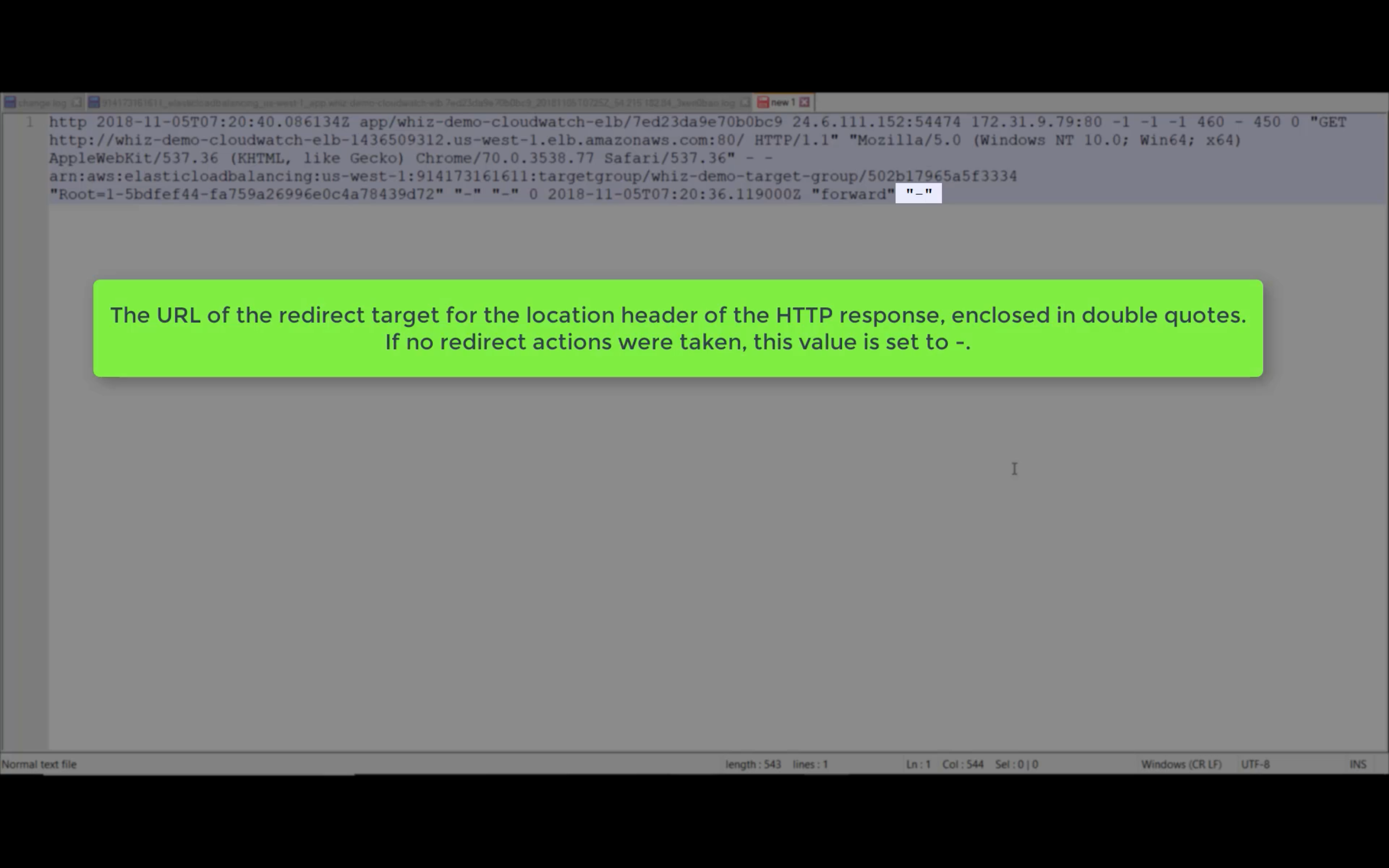

Access Logs

Write ELB access logs to S3 bucket.

Using bucket policy generator.

Resource is the target resource.

The account ID in the Resource ARN is your AWS account ID, for example:

"Resource": "arn:aws:sqs:us-east-2:account-ID-without-hyphens:queue1"

"Resource": "arn:aws:iam::account-ID-without-hyphens:user/Bob"
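To see where the account ID sits inside a Resource ARN, the ARN can be split on the general layout `arn:partition:service:region:account:resource`. This is a small sketch rather than an official parser: the `Arn` type and `parse_arn` helper are hypothetical names, and `123456789012` is a placeholder account ID.

```python
from typing import NamedTuple


class Arn(NamedTuple):
    partition: str
    service: str
    region: str
    account: str
    resource: str


def parse_arn(arn: str) -> Arn:
    """Split an ARN into its five fields after the leading "arn" prefix.

    The split is limited to 5 so that colons inside the resource part survive.
    """
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn":
        raise ValueError(f"not a valid ARN: {arn!r}")
    return Arn(*parts[1:])


# SQS ARNs carry a region; IAM is global, so its region field is empty.
print(parse_arn("arn:aws:sqs:us-east-2:123456789012:queue1"))
print(parse_arn("arn:aws:iam::123456789012:user/Bob"))
```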

Principal: in this experiment, I use the Elastic Load Balancing account ID for us-east-1 as the principal.

See AWS Services That Work with IAM

Use the Principal element in a policy to specify the principal that is allowed or denied access to a resource. You cannot use the Principal element in an IAM identity-based policy. You can use it in the trust policies for IAM roles and in resource-based policies. Resource-based policies are policies that you embed directly in an IAM resource. For example, you can embed policies in an Amazon S3 bucket or an AWS KMS customer master key (CMK).

IAM JSON Policy Elements Reference

Enable Access Logs for Your Classic Load Balancer

| Region | Region Name | Elastic Load Balancing Account ID |
|---|---|---|
| us-east-1 | US East (N. Virginia) | 127311923021 |
| us-east-2 | US East (Ohio) | 033677994240 |
| us-west-1 | US West (N. California) | 027434742980 |
| us-west-2 | US West (Oregon) | 797873946194 |
| af-south-1 | Africa (Cape Town) | 098369216593 |
| ca-central-1 | Canada (Central) | 985666609251 |
| eu-central-1 | Europe (Frankfurt) | 054676820928 |
| eu-west-1 | Europe (Ireland) | 156460612806 |
| eu-west-2 | Europe (London) | 652711504416 |
| eu-south-1 | Europe (Milan) | 635631232127 |
| eu-west-3 | Europe (Paris) | 009996457667 |
| eu-north-1 | Europe (Stockholm) | 897822967062 |
| ap-east-1 | Asia Pacific (Hong Kong) | 754344448648 |
| ap-northeast-1 | Asia Pacific (Tokyo) | 582318560864 |
| ap-northeast-2 | Asia Pacific (Seoul) | 600734575887 |
| ap-northeast-3 | Asia Pacific (Osaka-Local) | 383597477331 |
| ap-southeast-1 | Asia Pacific (Singapore) | 114774131450 |
| ap-southeast-2 | Asia Pacific (Sydney) | 783225319266 |
| ap-south-1 | Asia Pacific (Mumbai) | 718504428378 |
| me-south-1 | Middle East (Bahrain) | 076674570225 |
| sa-east-1 | South America (São Paulo) | 507241528517 |
| us-gov-west-1* | AWS GovCloud (US-West) | 048591011584 |
| us-gov-east-1* | AWS GovCloud (US-East) | 190560391635 |
| cn-north-1* | China (Beijing) | 638102146993 |
| cn-northwest-1* | China (Ningxia) | 037604701340 |
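A bucket policy for access logs typically grants `s3:PutObject` to the Elastic Load Balancing account of the load balancer's region. The sketch below builds such a policy under stated assumptions: the bucket name, prefix, and your account ID (`123456789012`) are placeholders, the helper name is hypothetical, and only three regions from the table above are included.

```python
import json

# A few Elastic Load Balancing account IDs copied from the table above.
ELB_ACCOUNT_IDS = {
    "us-east-1": "127311923021",
    "us-east-2": "033677994240",
    "us-west-2": "797873946194",
}


def build_access_log_policy(bucket: str, prefix: str,
                            account_id: str, region: str) -> dict:
    """Build a bucket policy that lets the regional ELB account write logs.

    bucket, prefix, and account_id are placeholders supplied by the caller.
    """
    elb_account = ELB_ACCOUNT_IDS[region]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{elb_account}:root"},
                "Action": "s3:PutObject",
                "Resource": (
                    f"arn:aws:s3:::{bucket}/{prefix}/AWSLogs/{account_id}/*"
                ),
            }
        ],
    }


policy = build_access_log_policy(
    "my-elb-logs", "my-app", "123456789012", "us-east-1")
print(json.dumps(policy, indent=2))
```

Note that the Principal is the ELB account for the region, while the account ID in the Resource path is your own.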


Edit the attribute and input your S3 bucket.

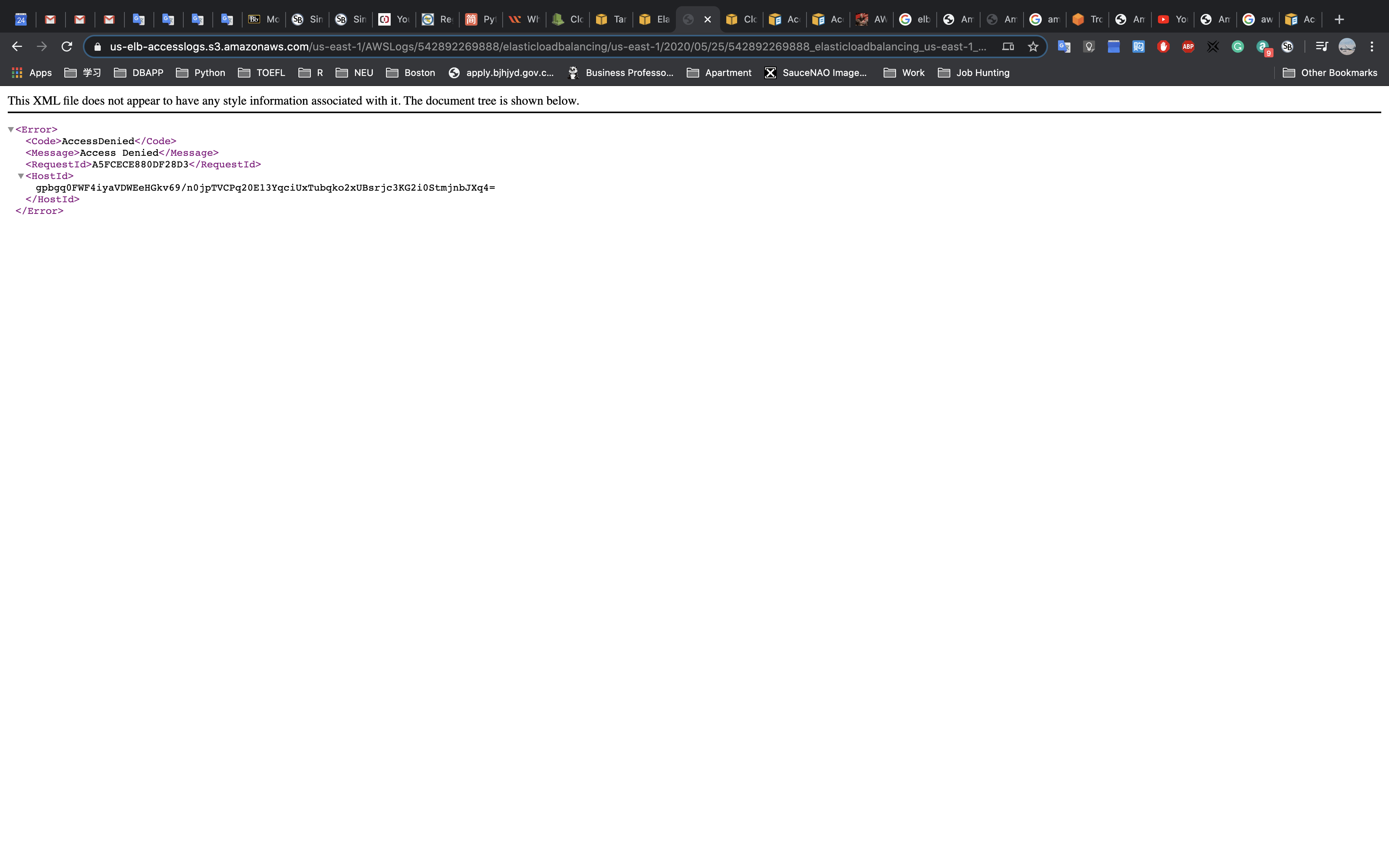

If you get an AccessDenied warning, try downloading the log and opening it locally.

Wait several minutes for the log to be generated.

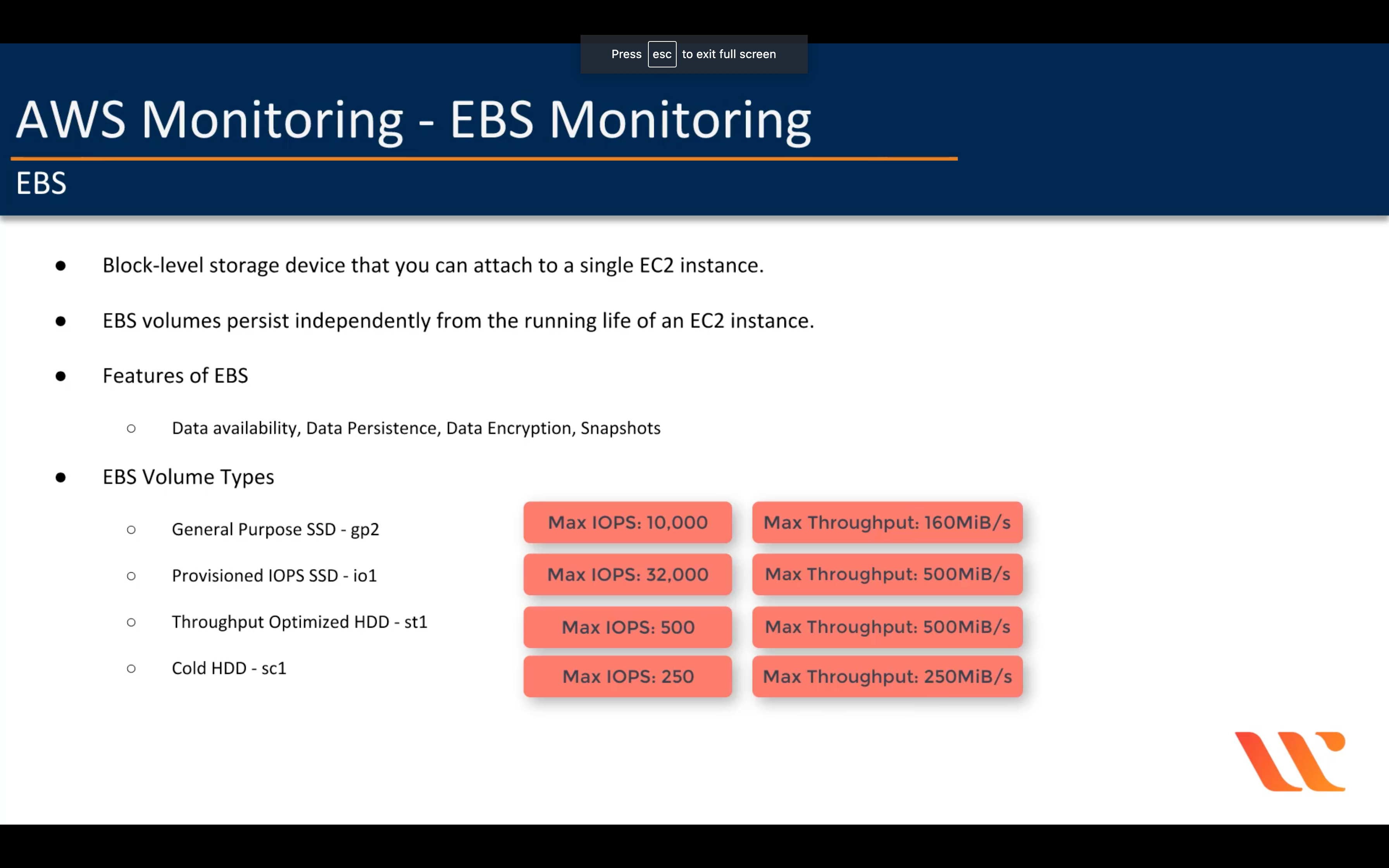

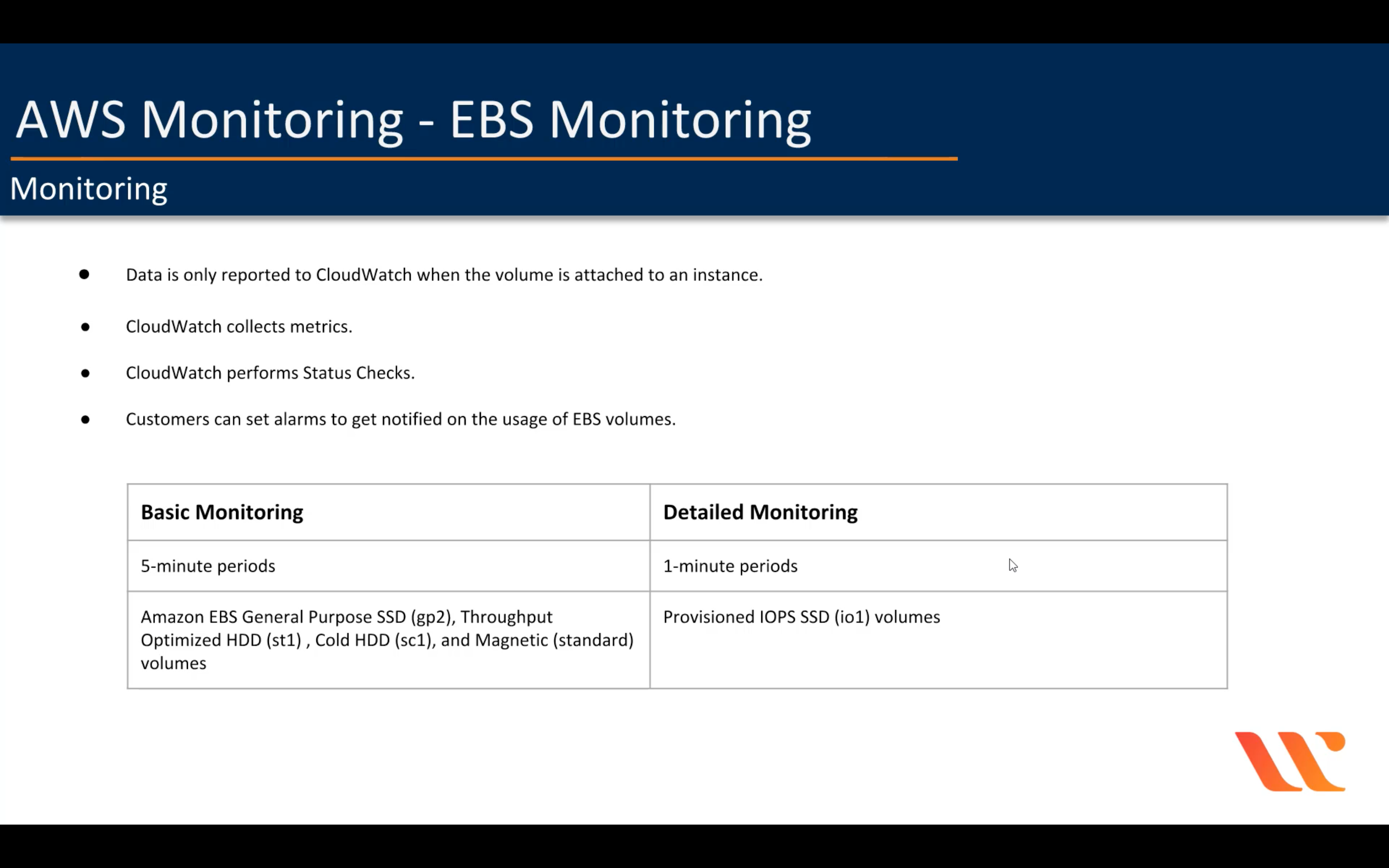

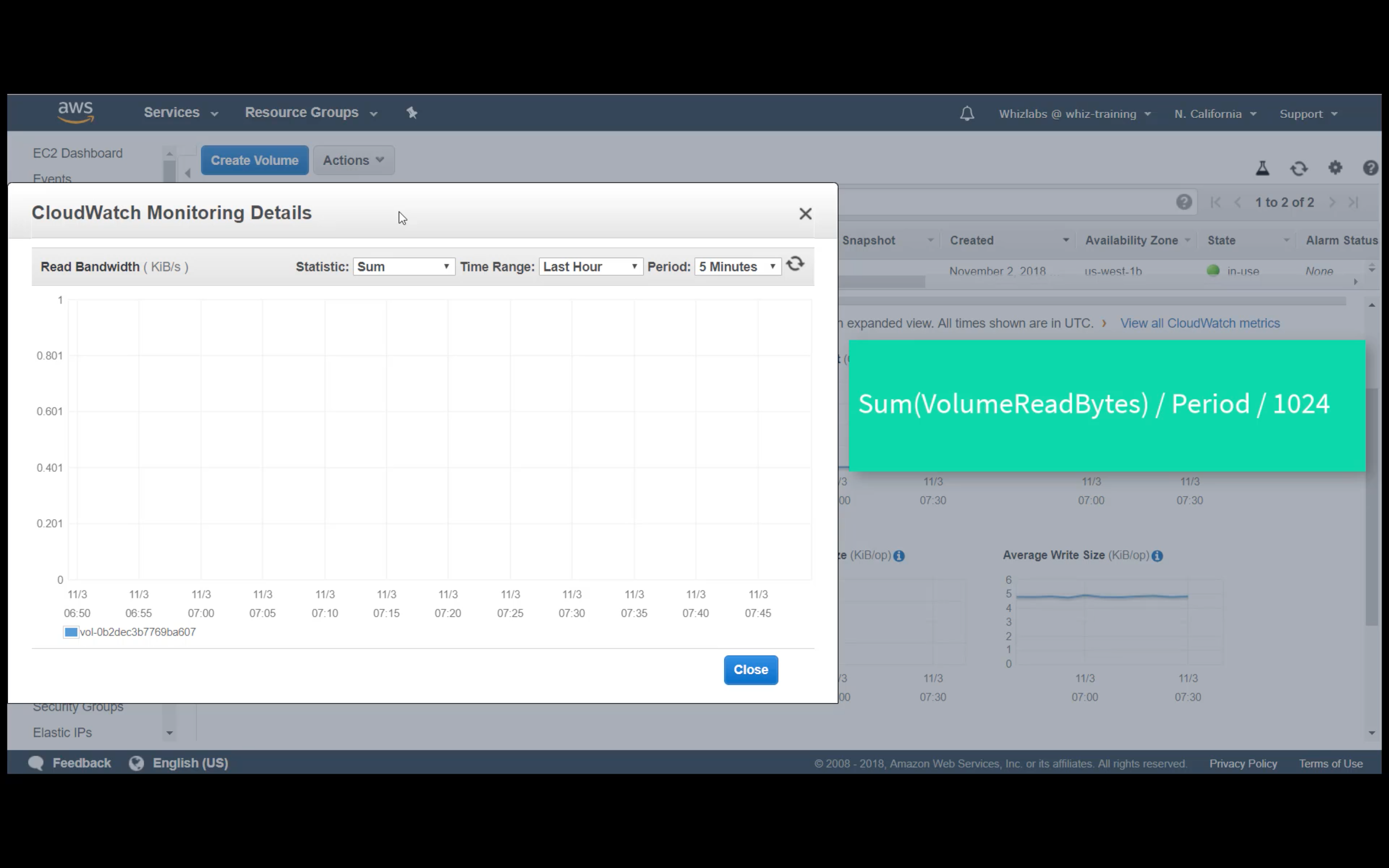

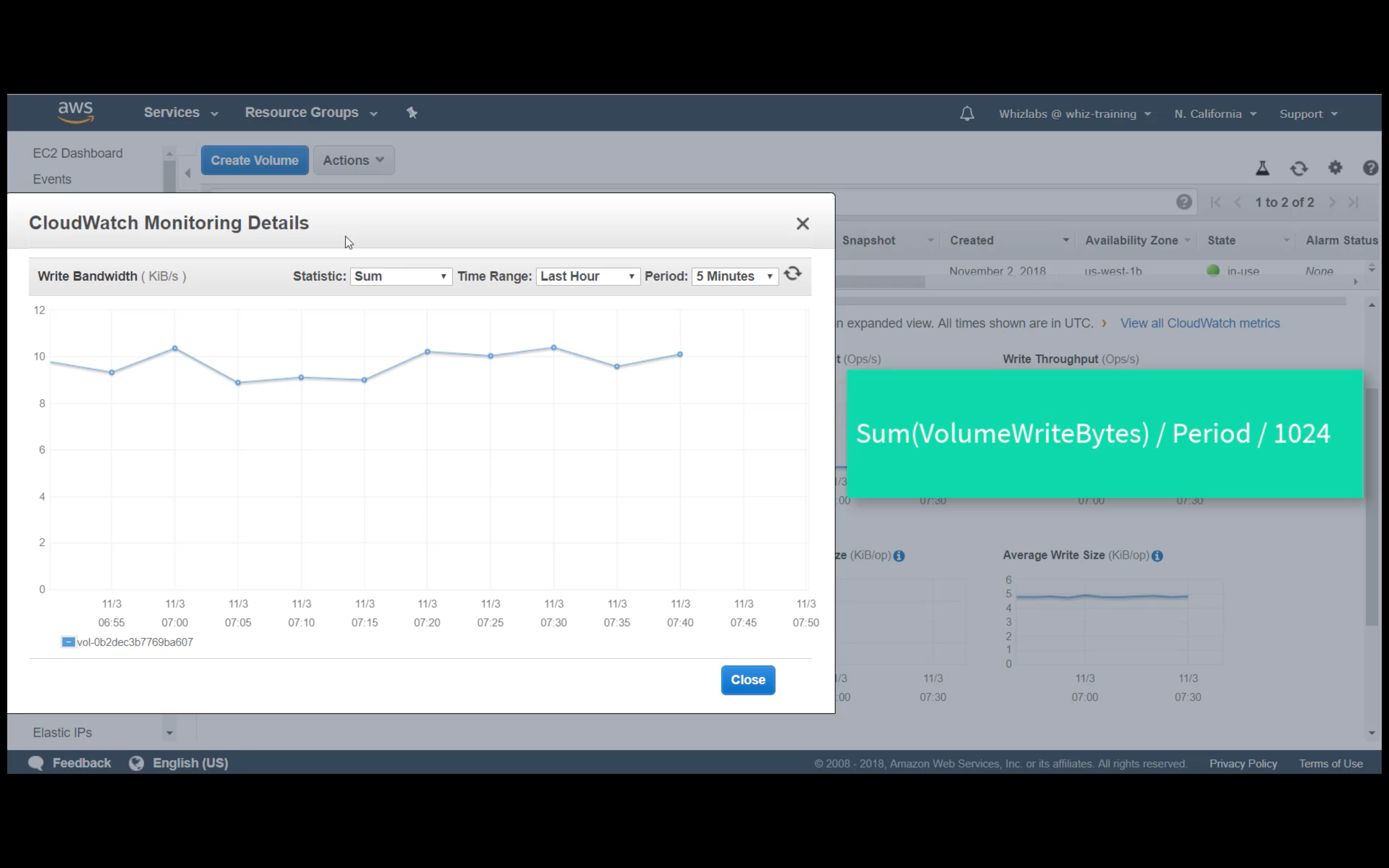

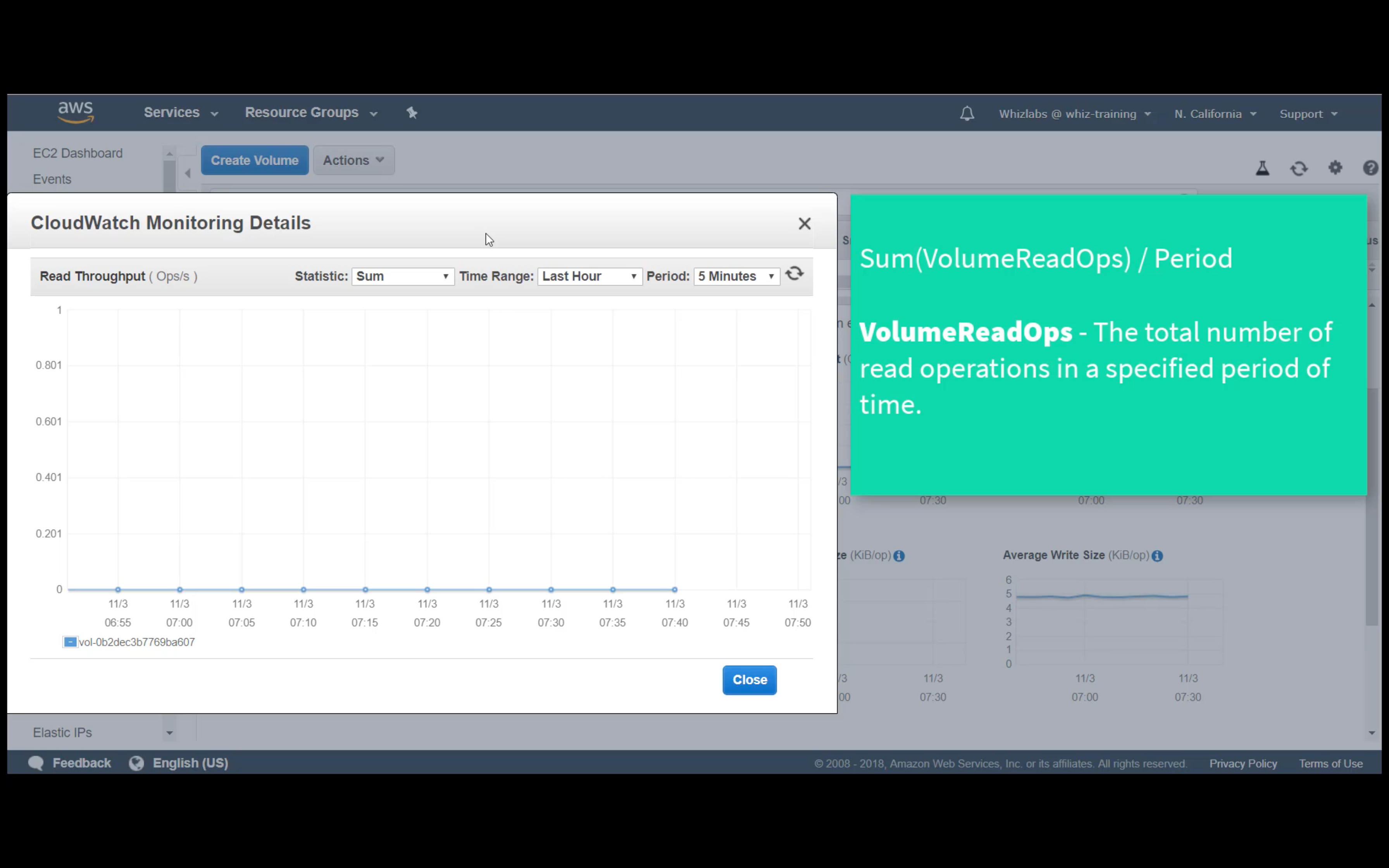

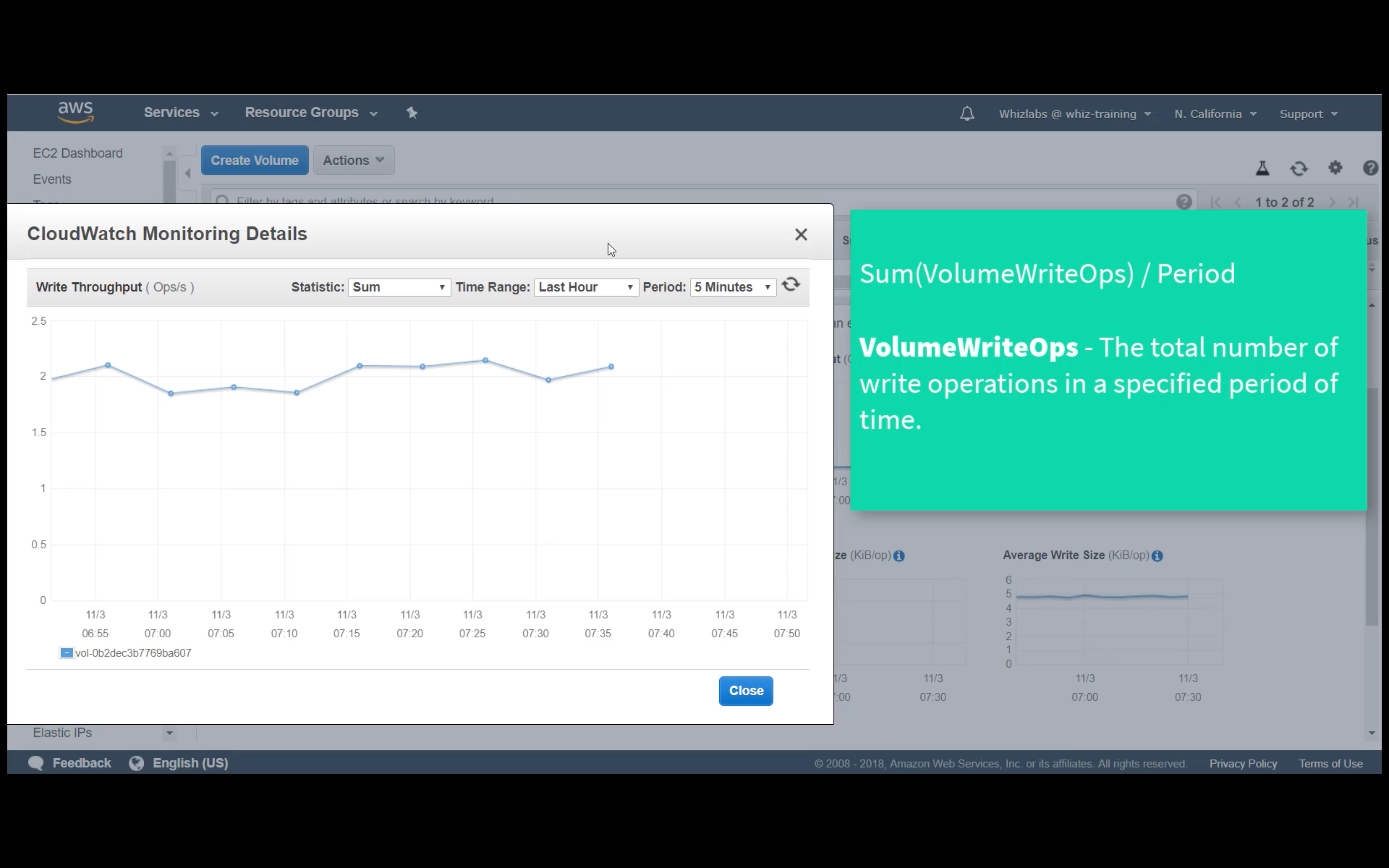

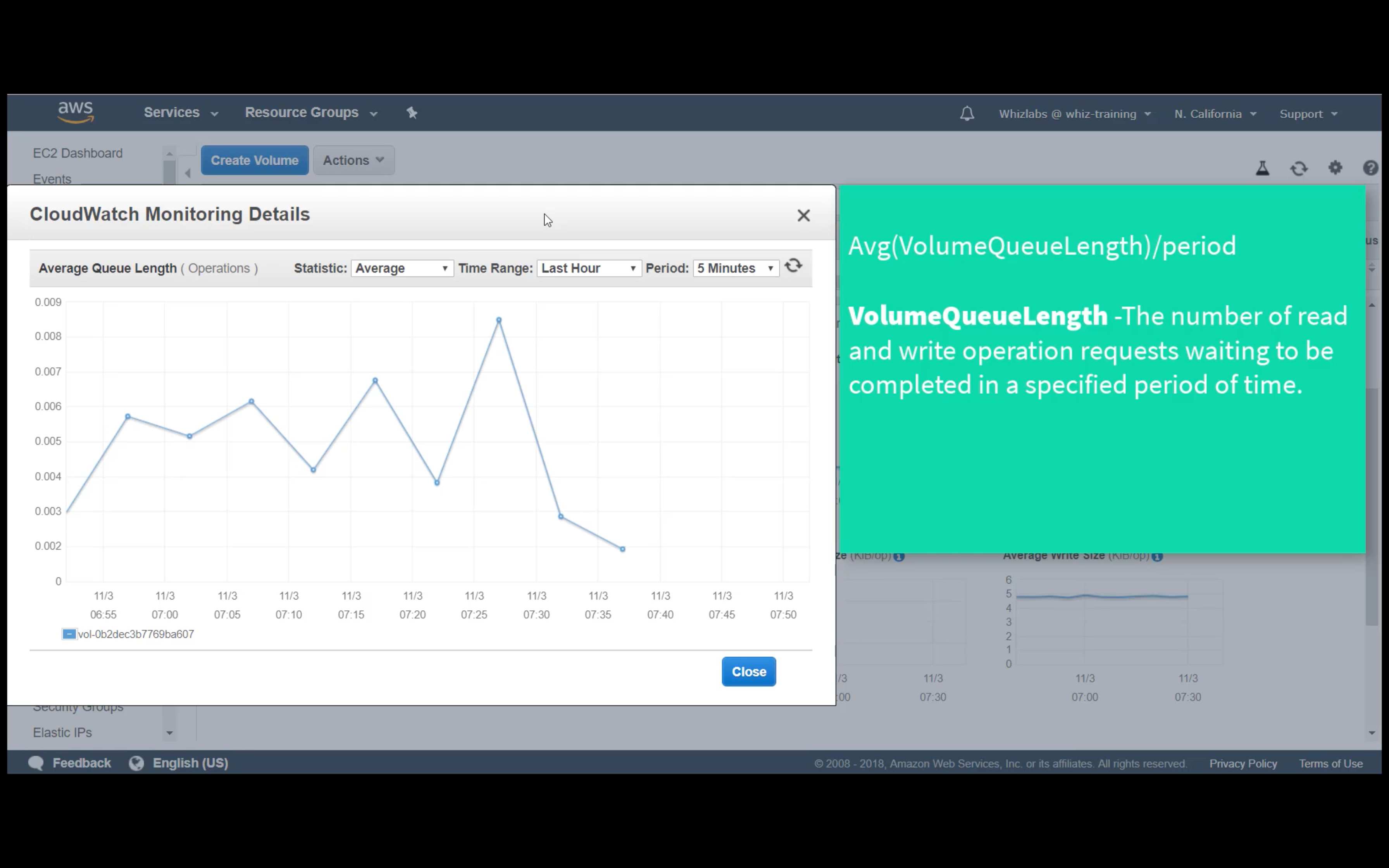

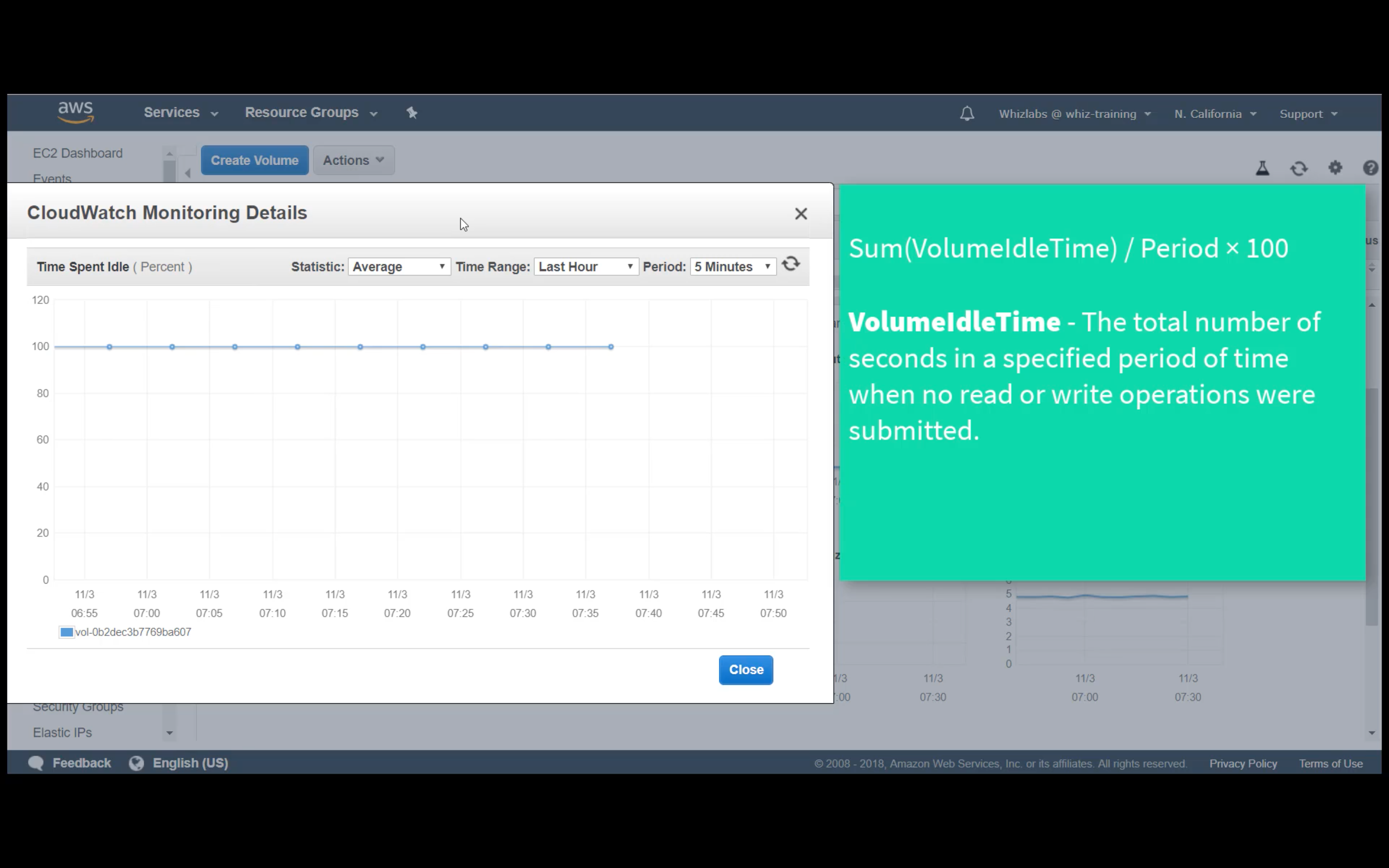

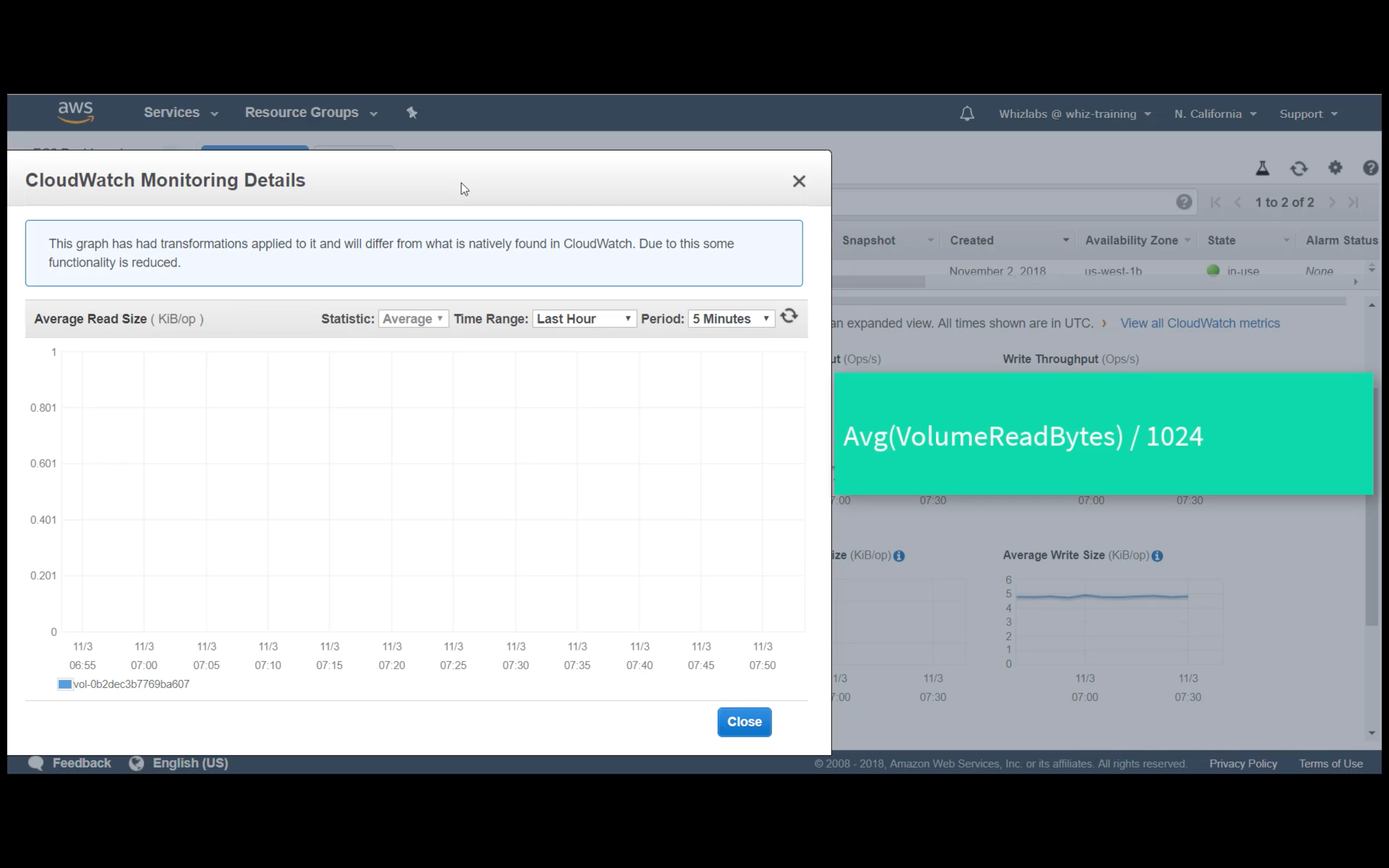

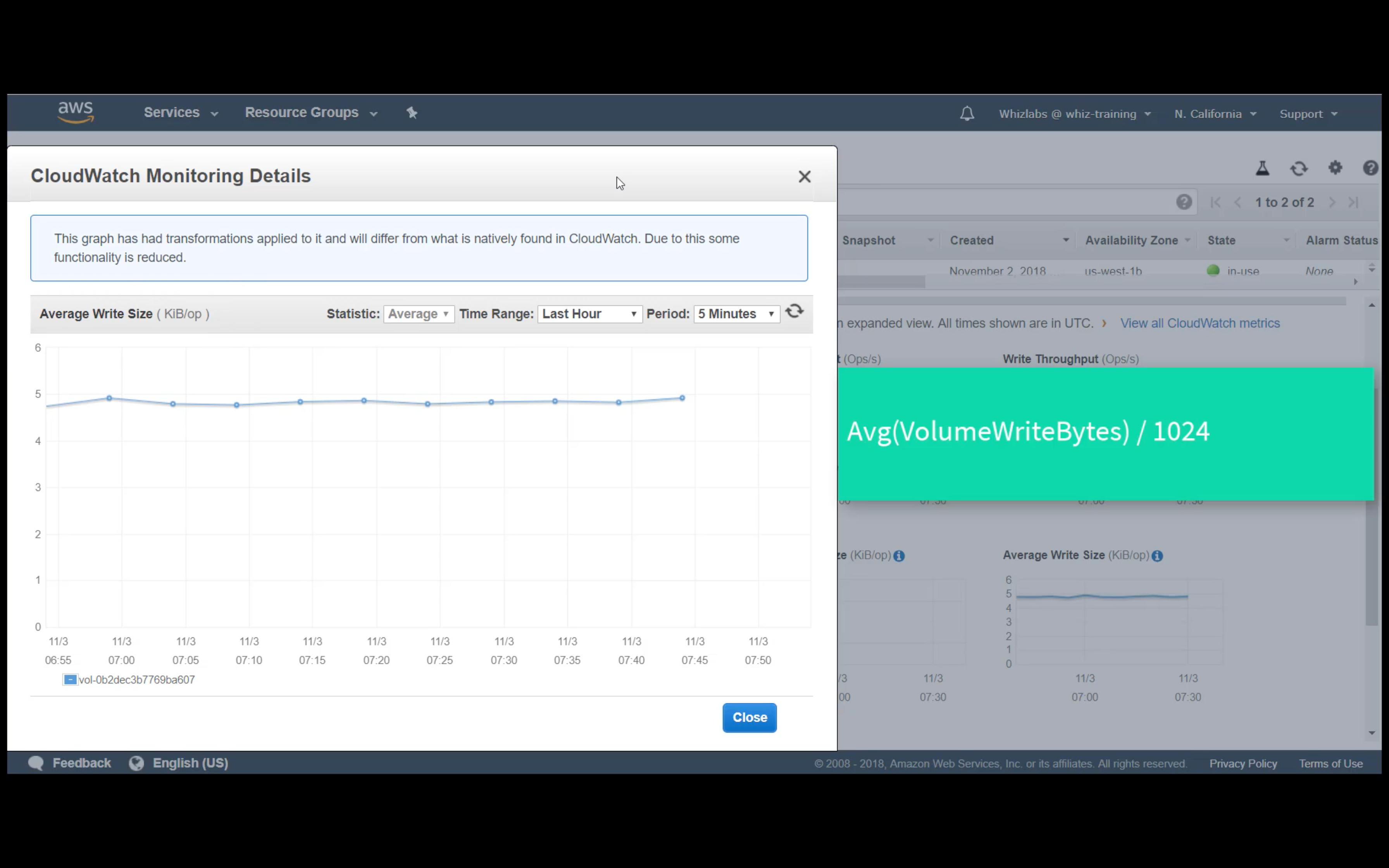

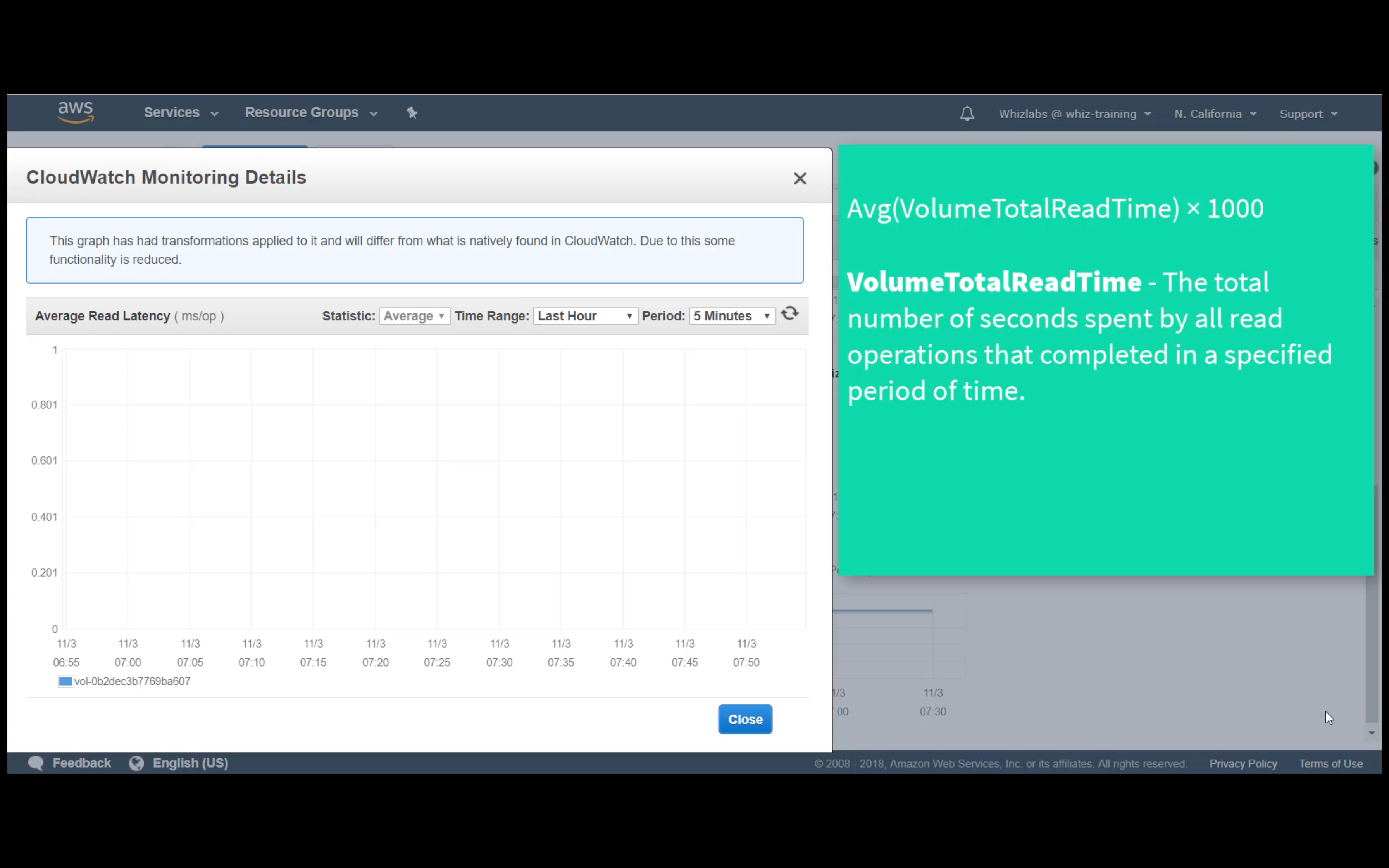

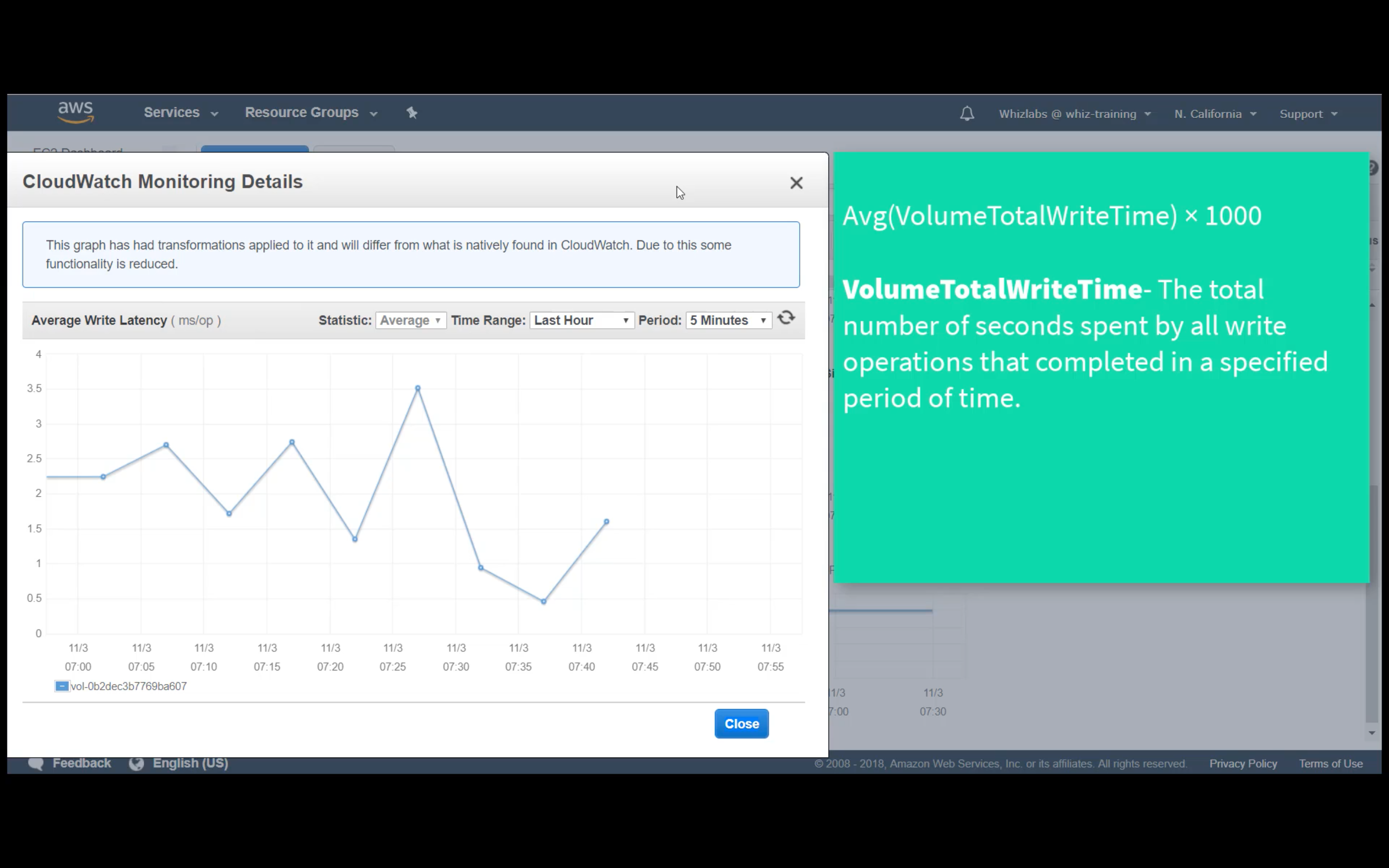

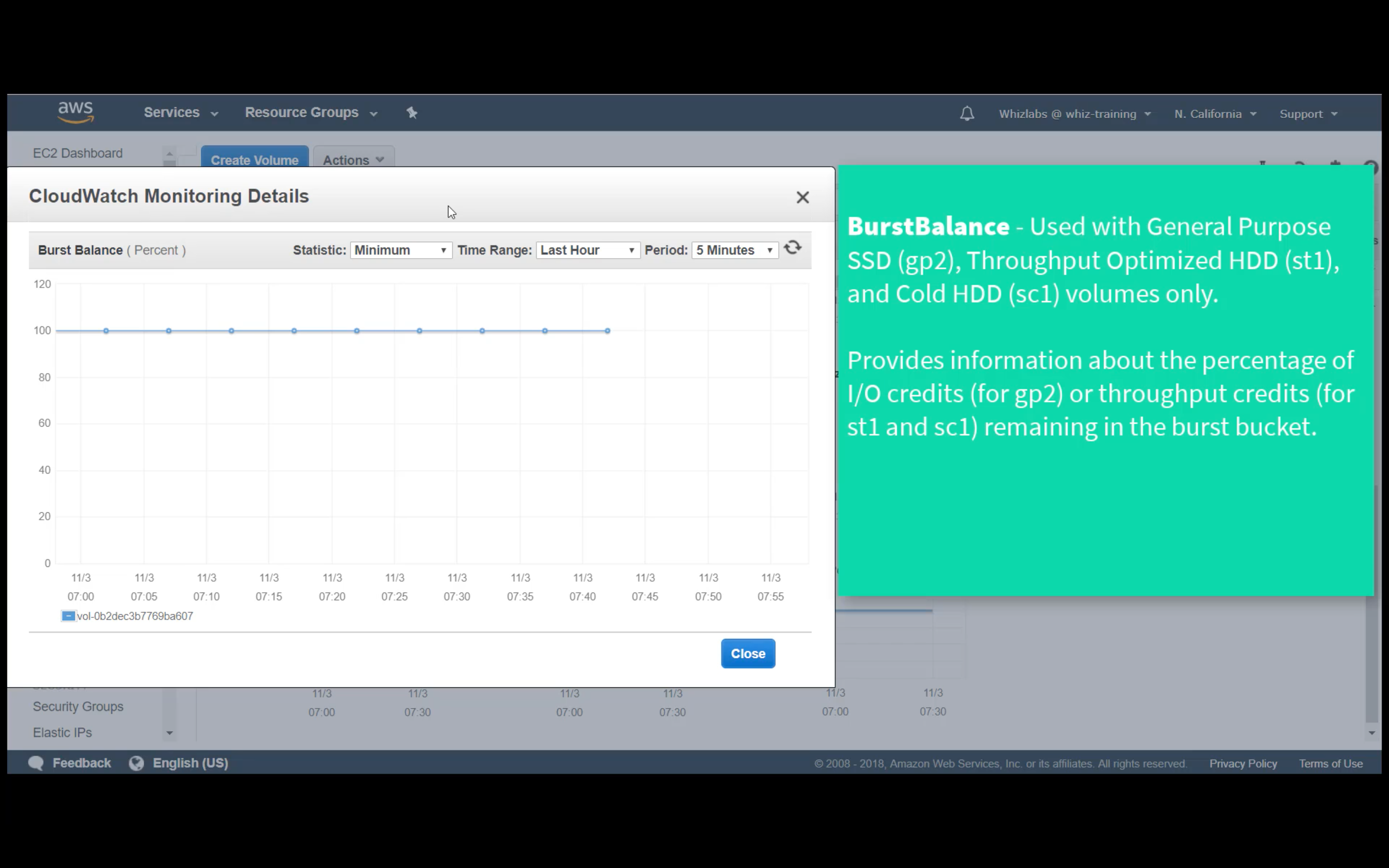

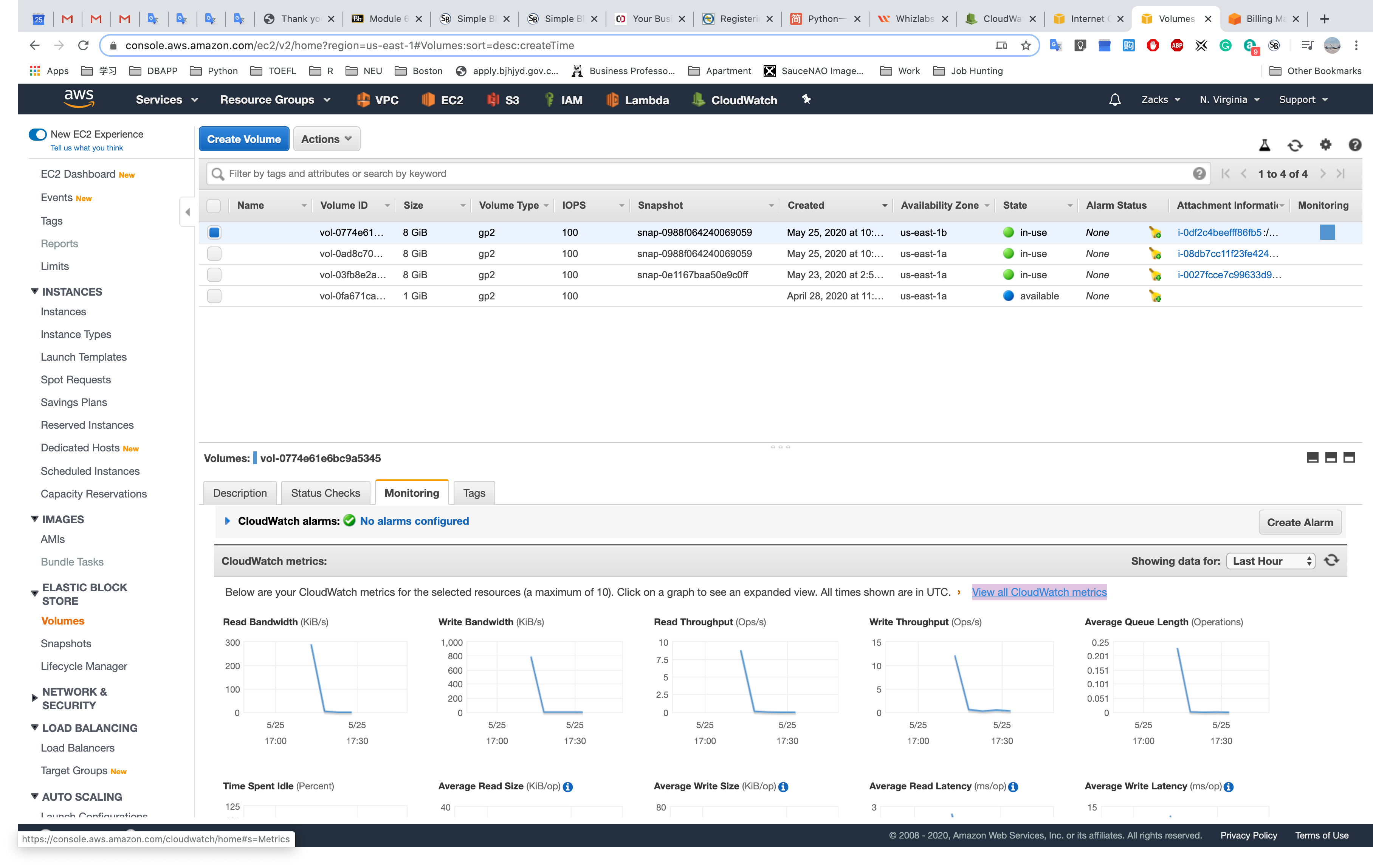

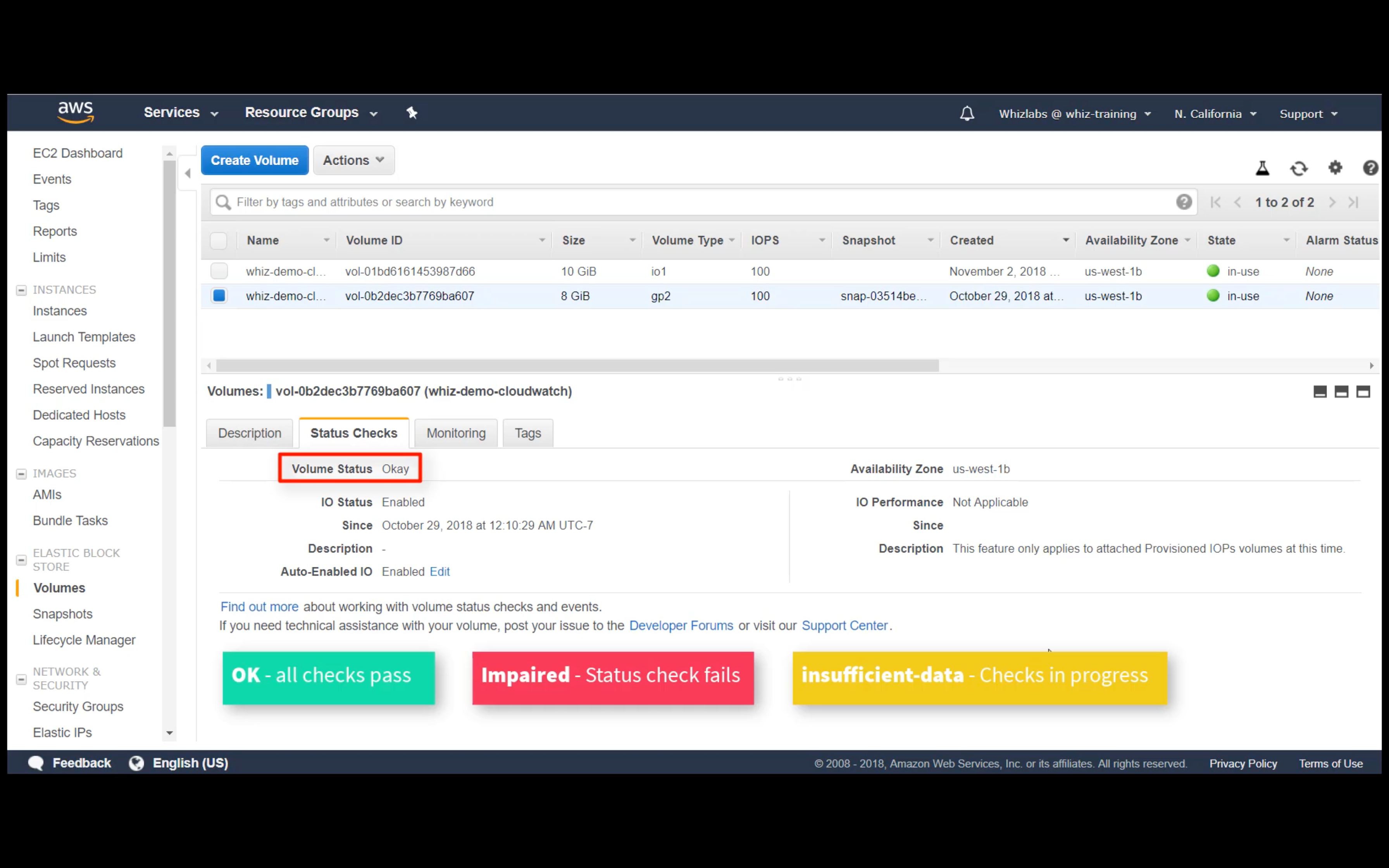

EBS Monitoring

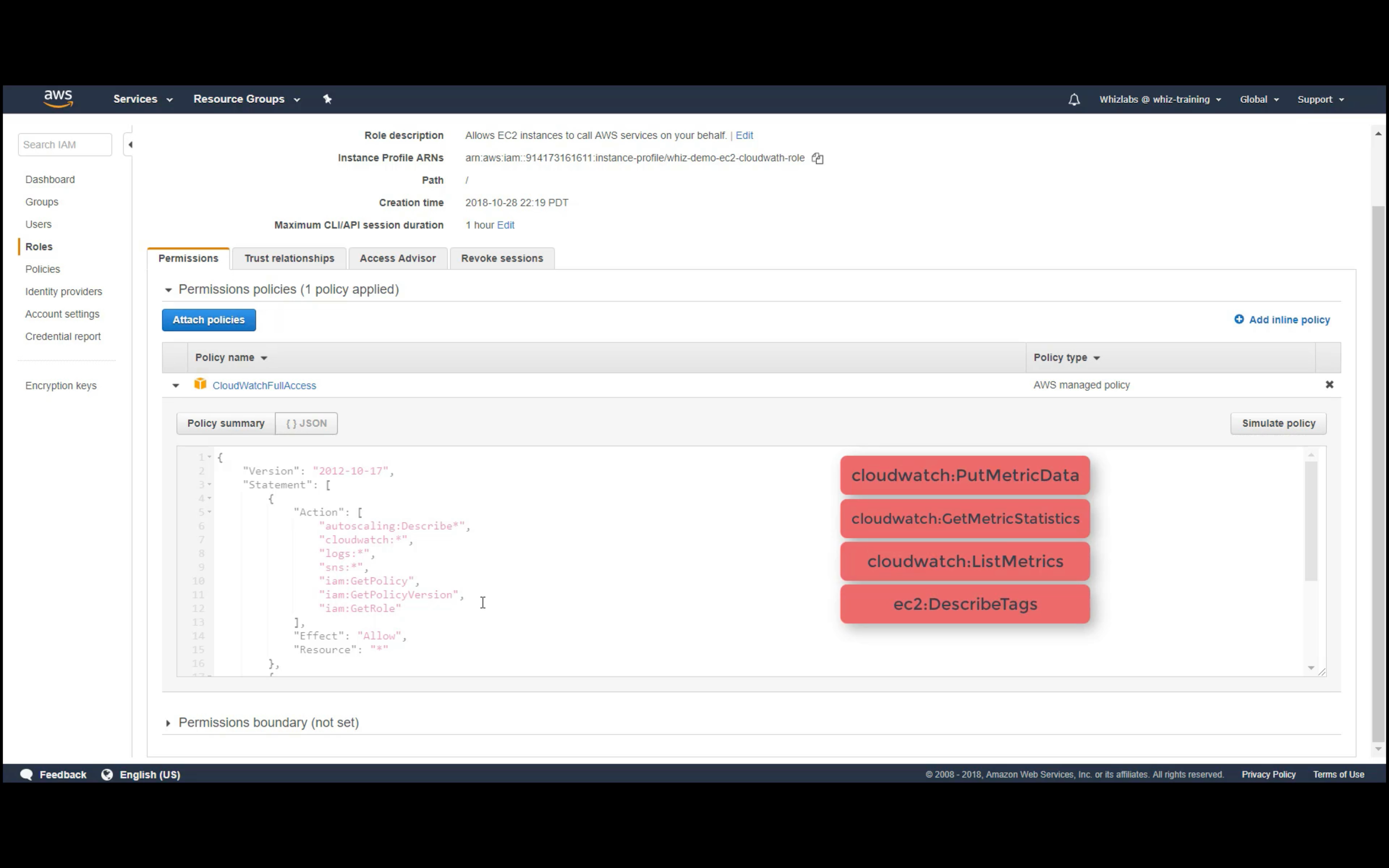

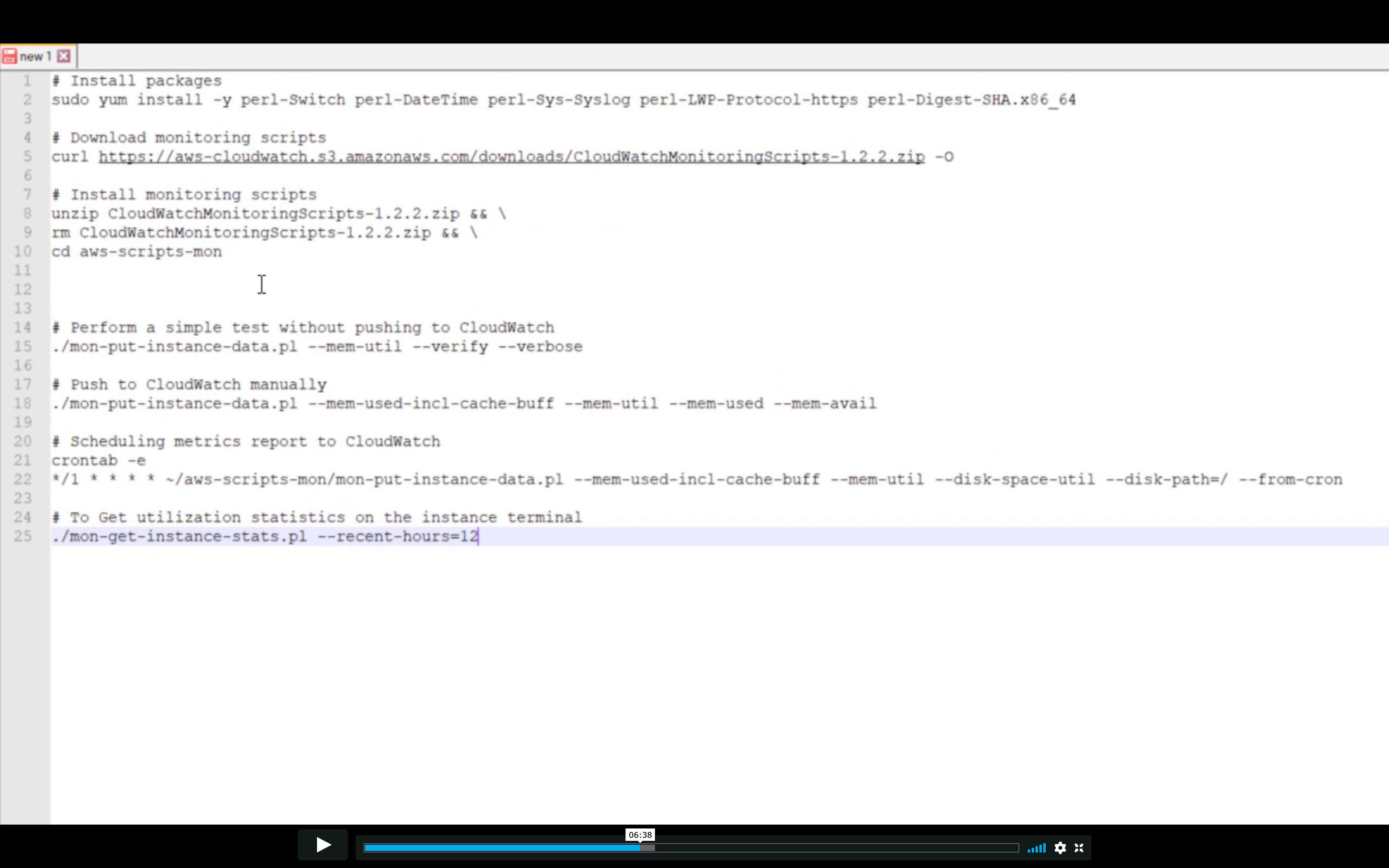

Custom Metrics Monitoring

IAM Role required

Extra program required

Monitoring memory and disk metrics for Amazon EC2 Linux instances

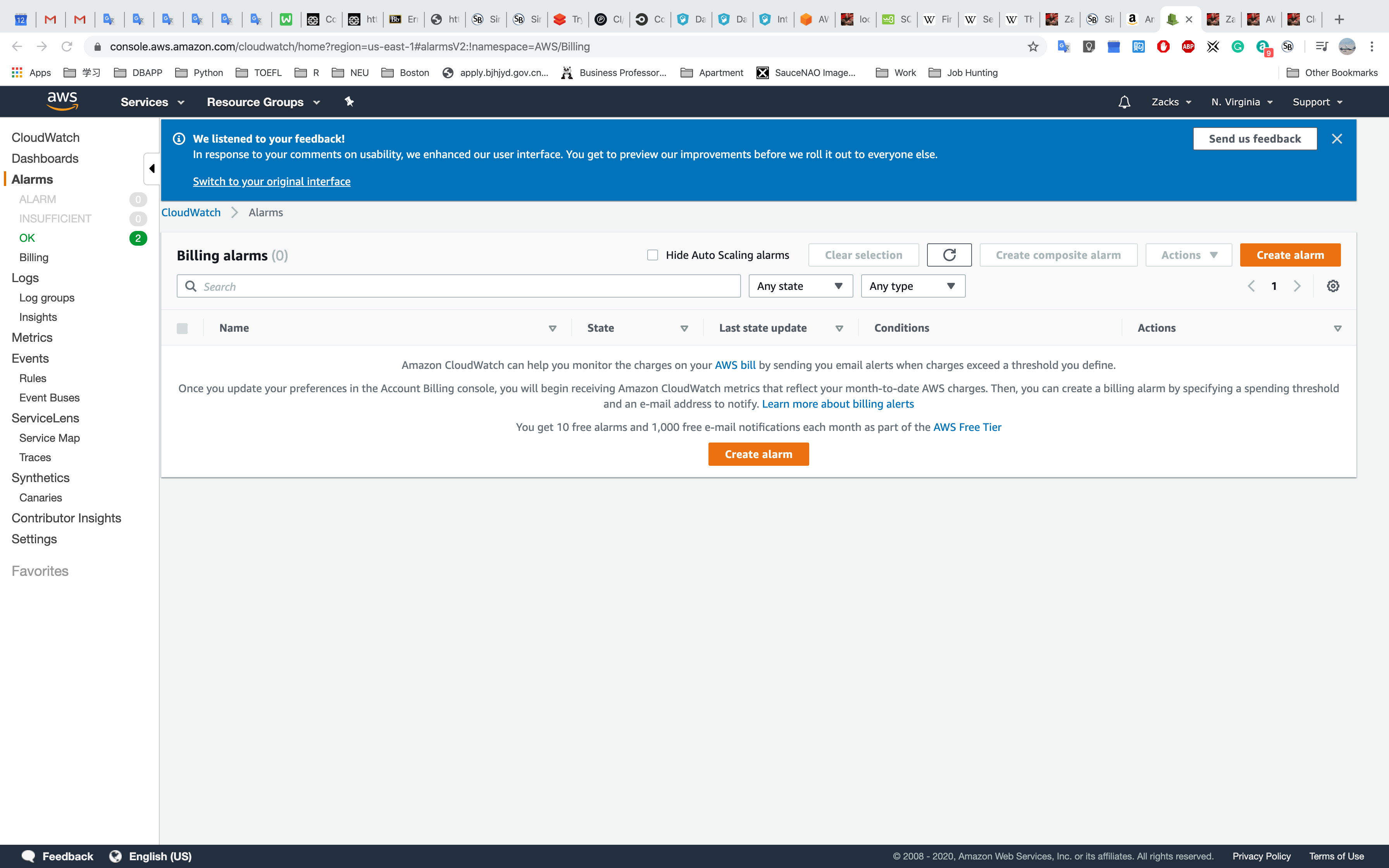

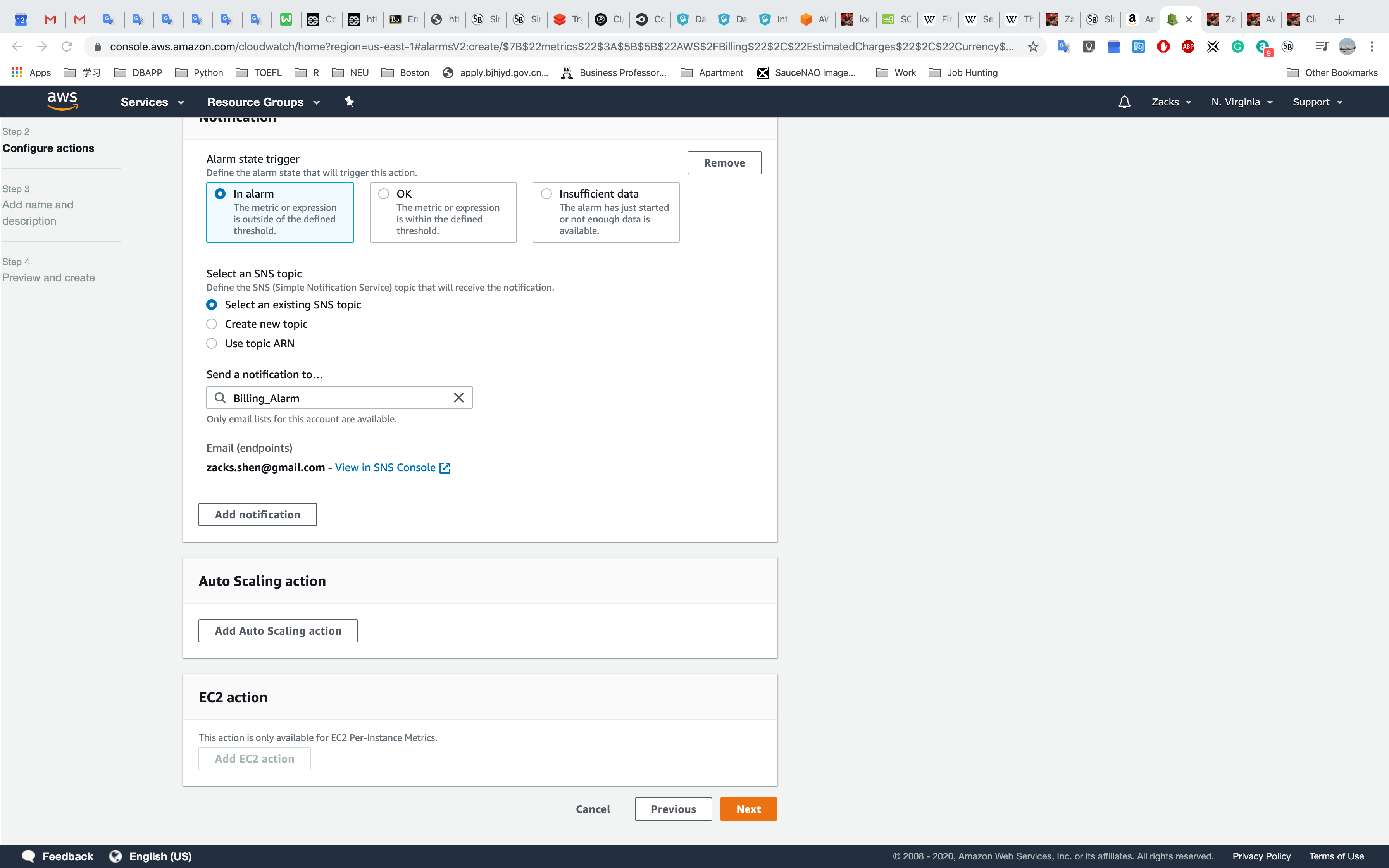

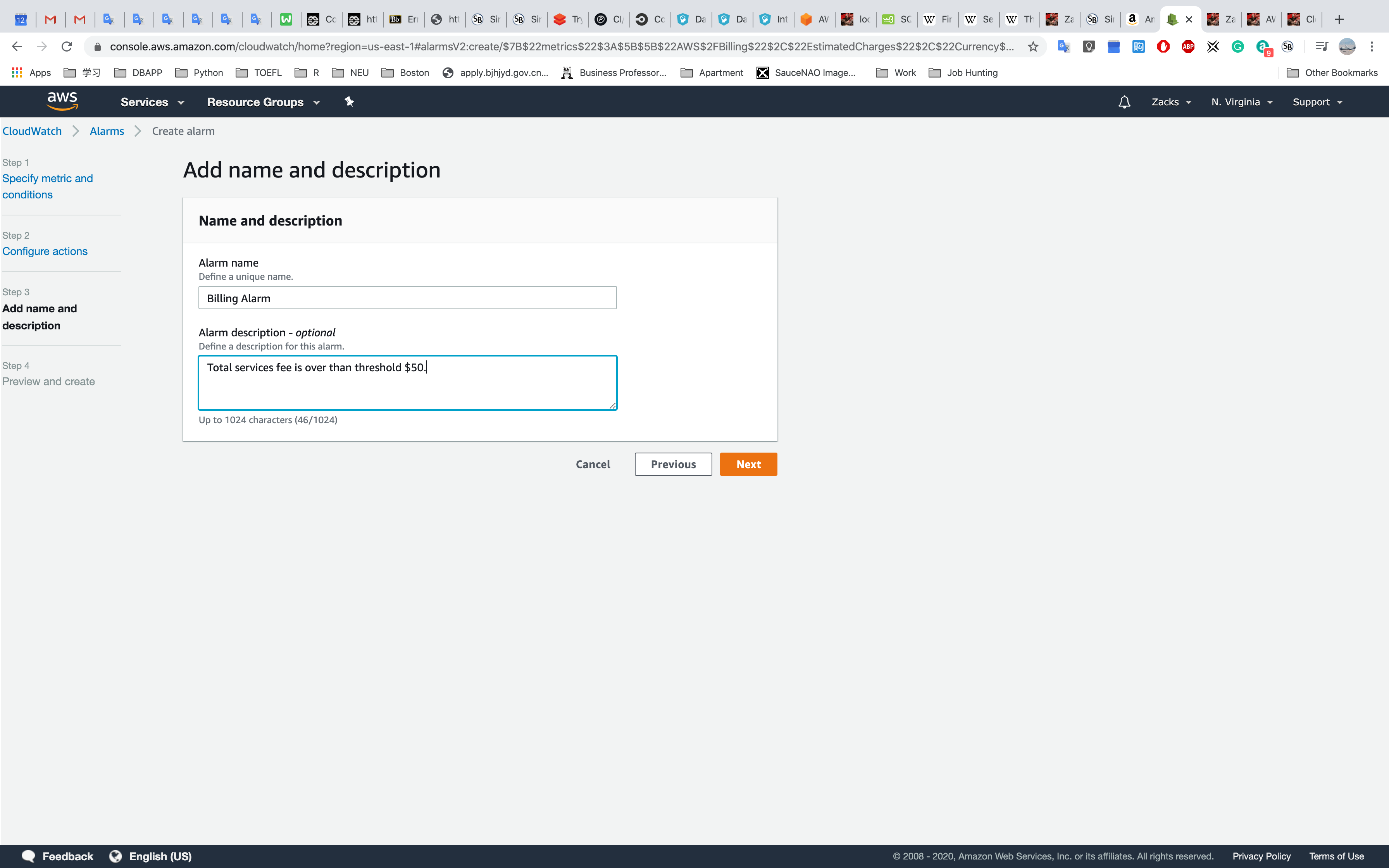

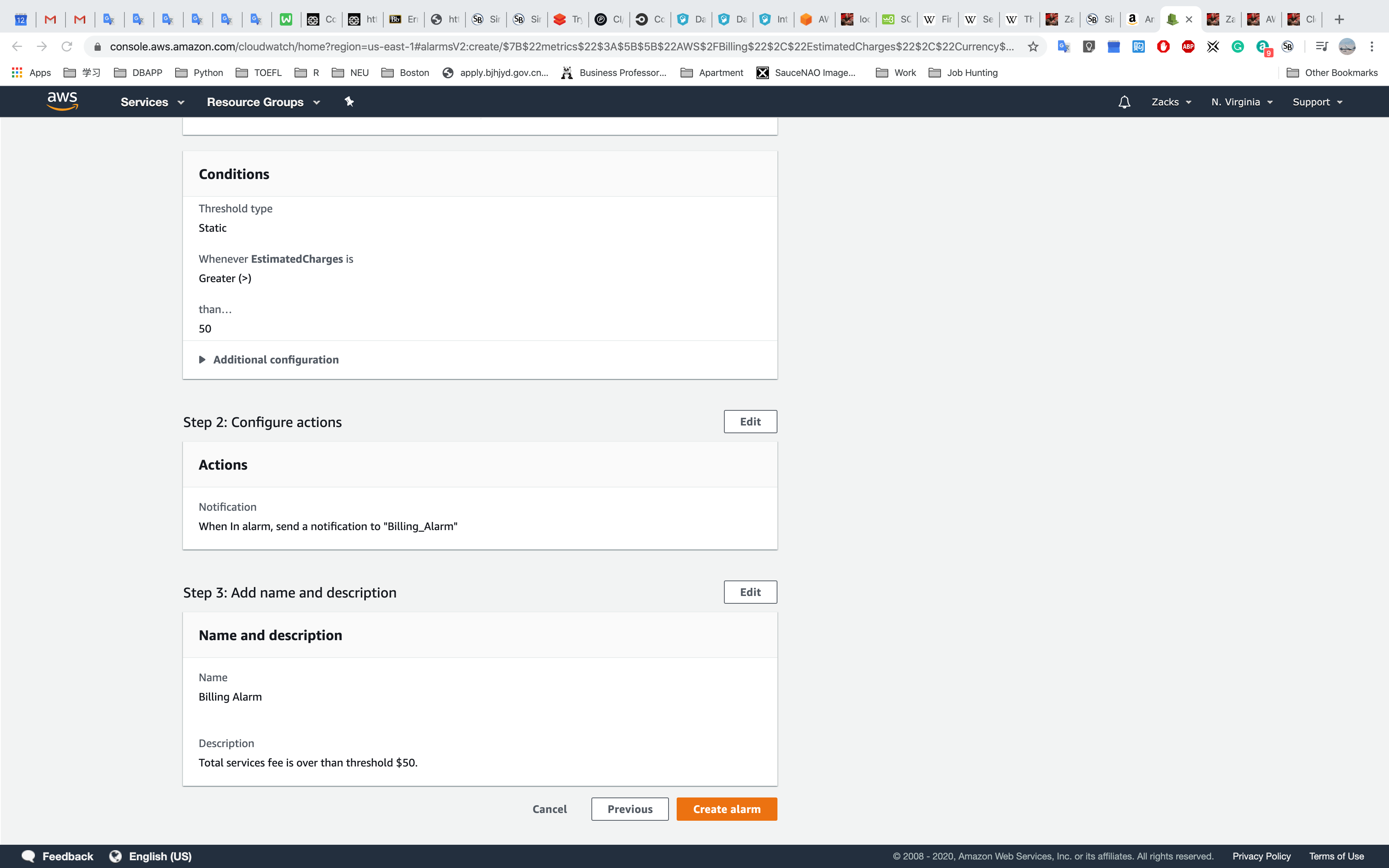

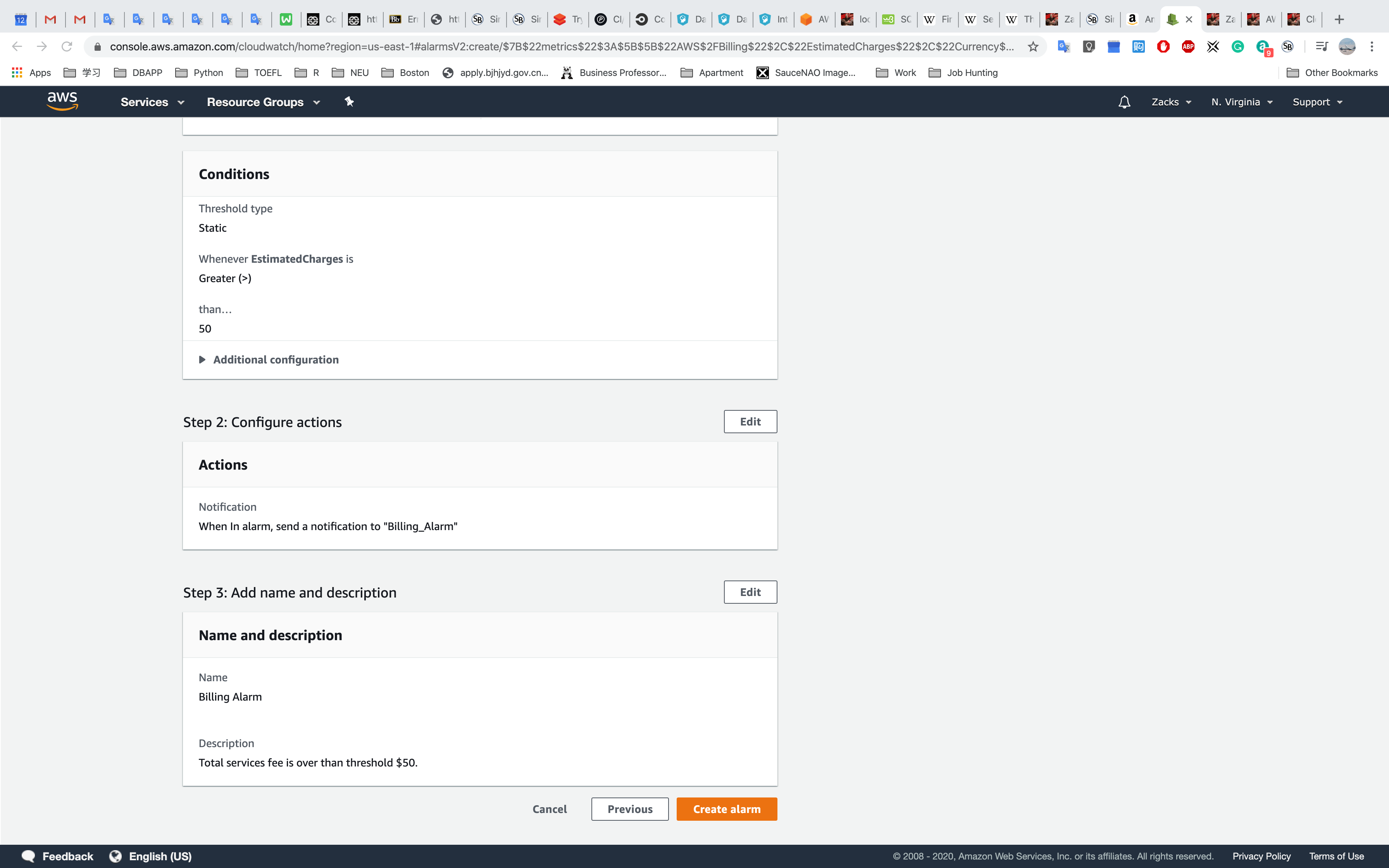

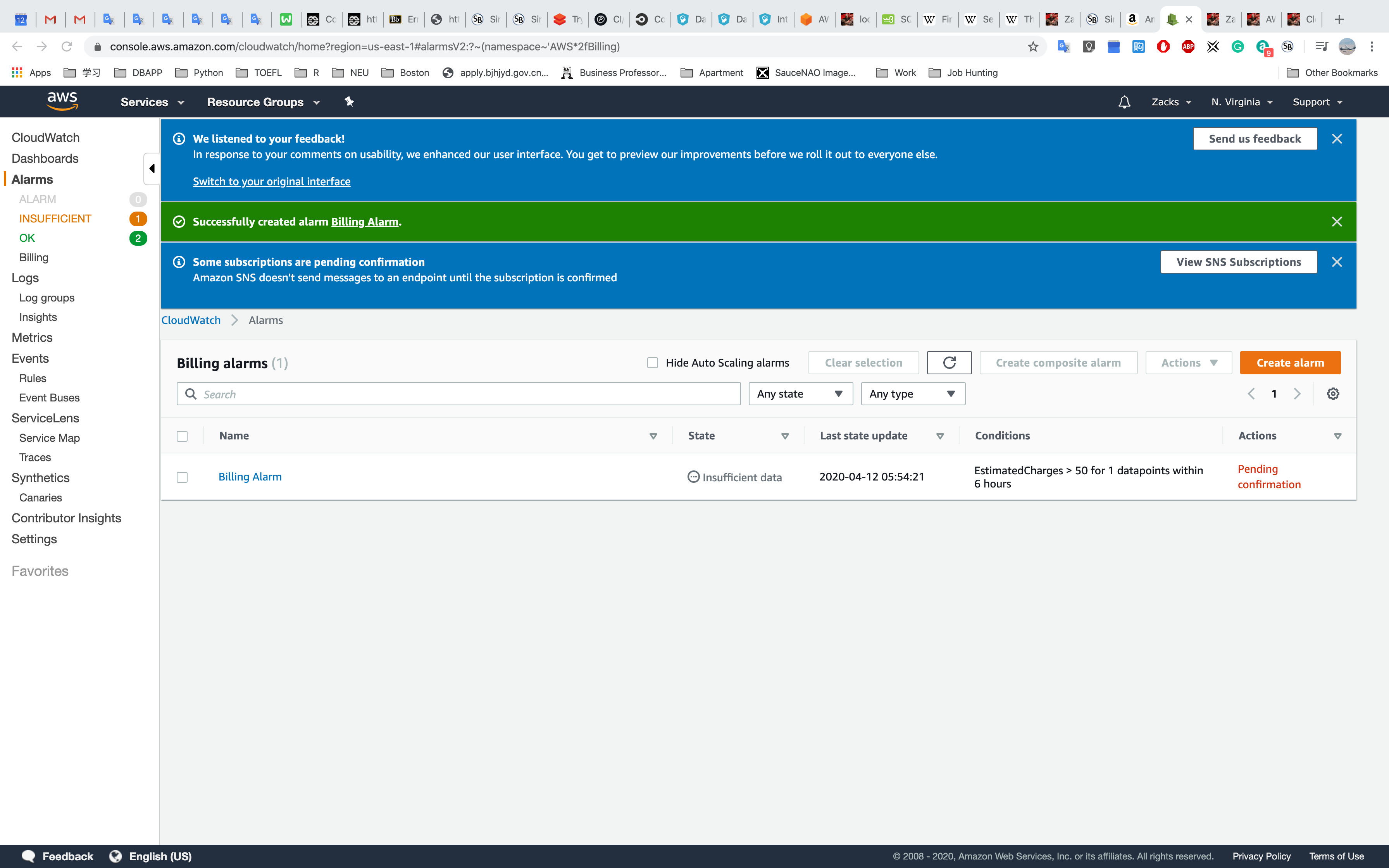

CloudWatch - Billing Alarm

Click Billing on the left pane

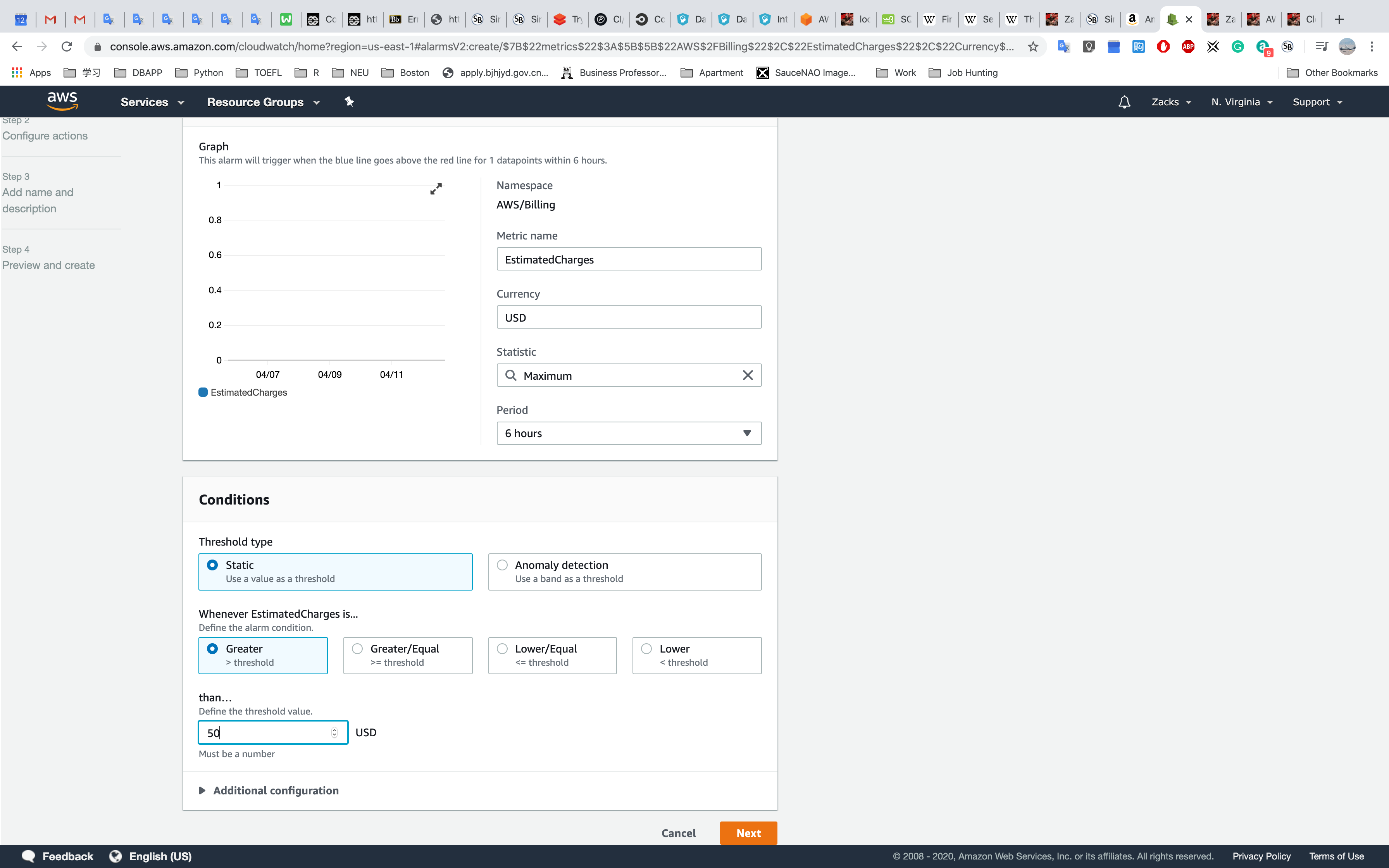

Click Create Alarm

Set a threshold and hit Next

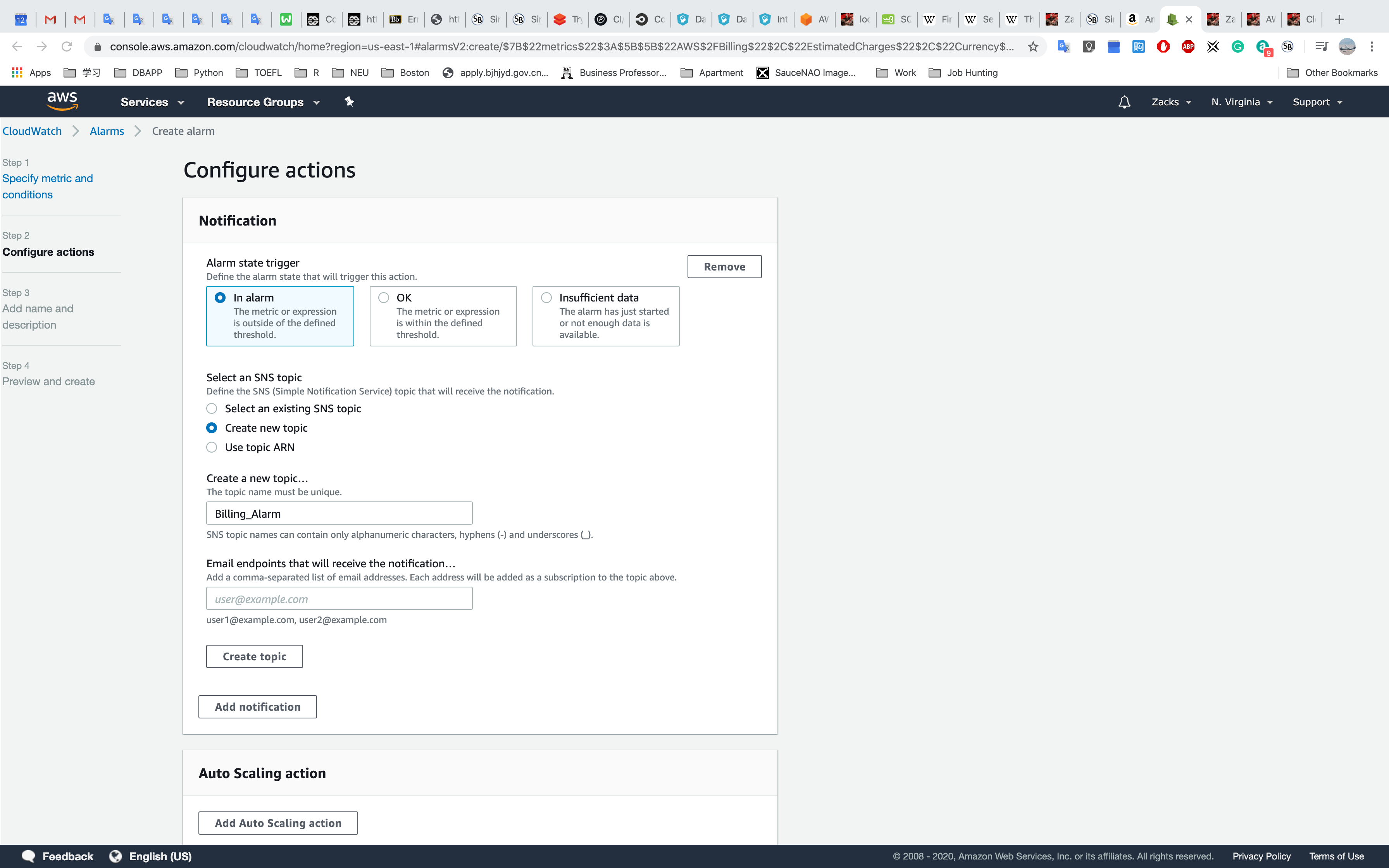

Select Create new topic, fill it, and Click Create Topic

Then Click Next

Name it and Click Next

Scroll down and Click Create Alarm

Click Create Alarm

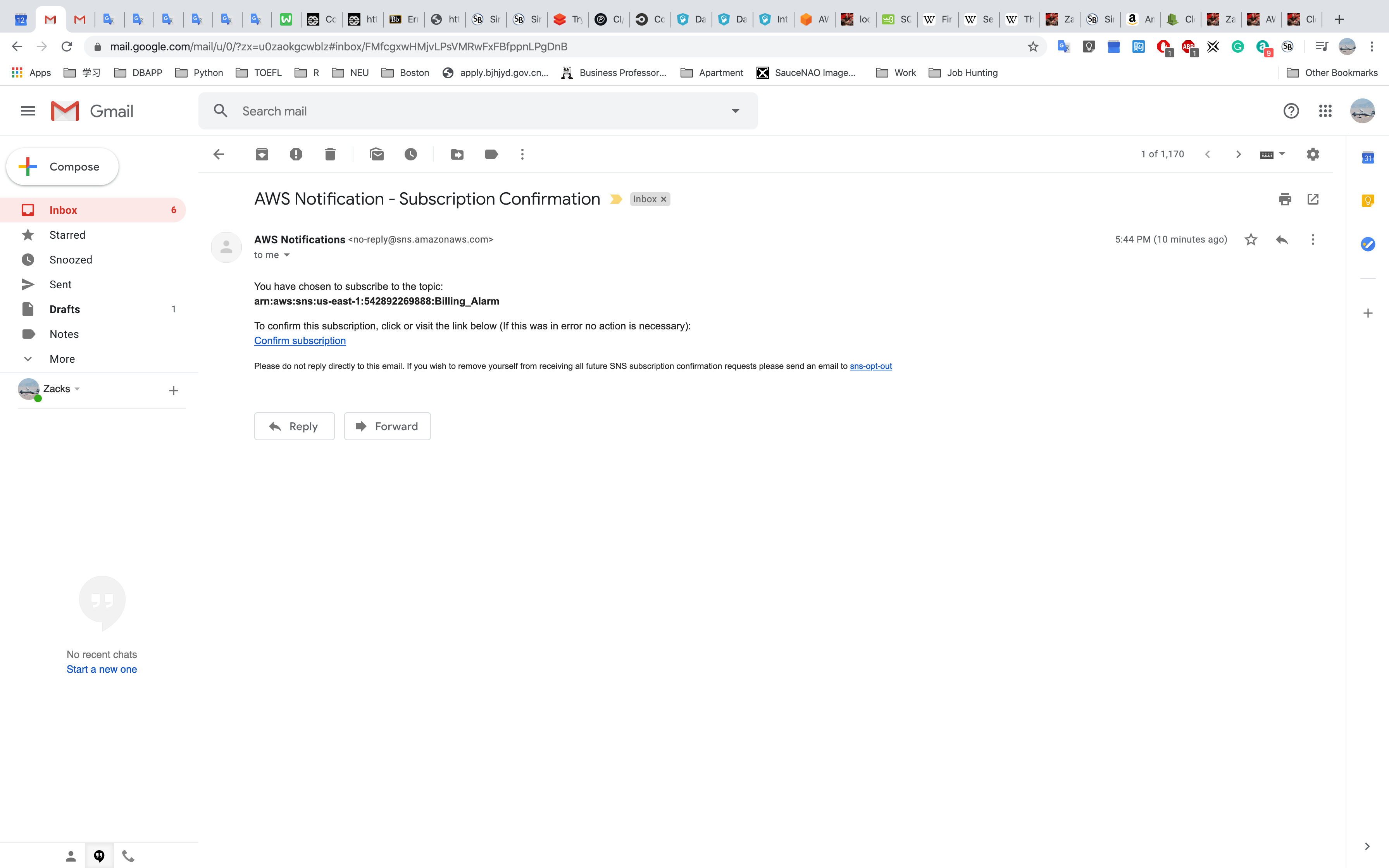

Notice the Pending confirmation

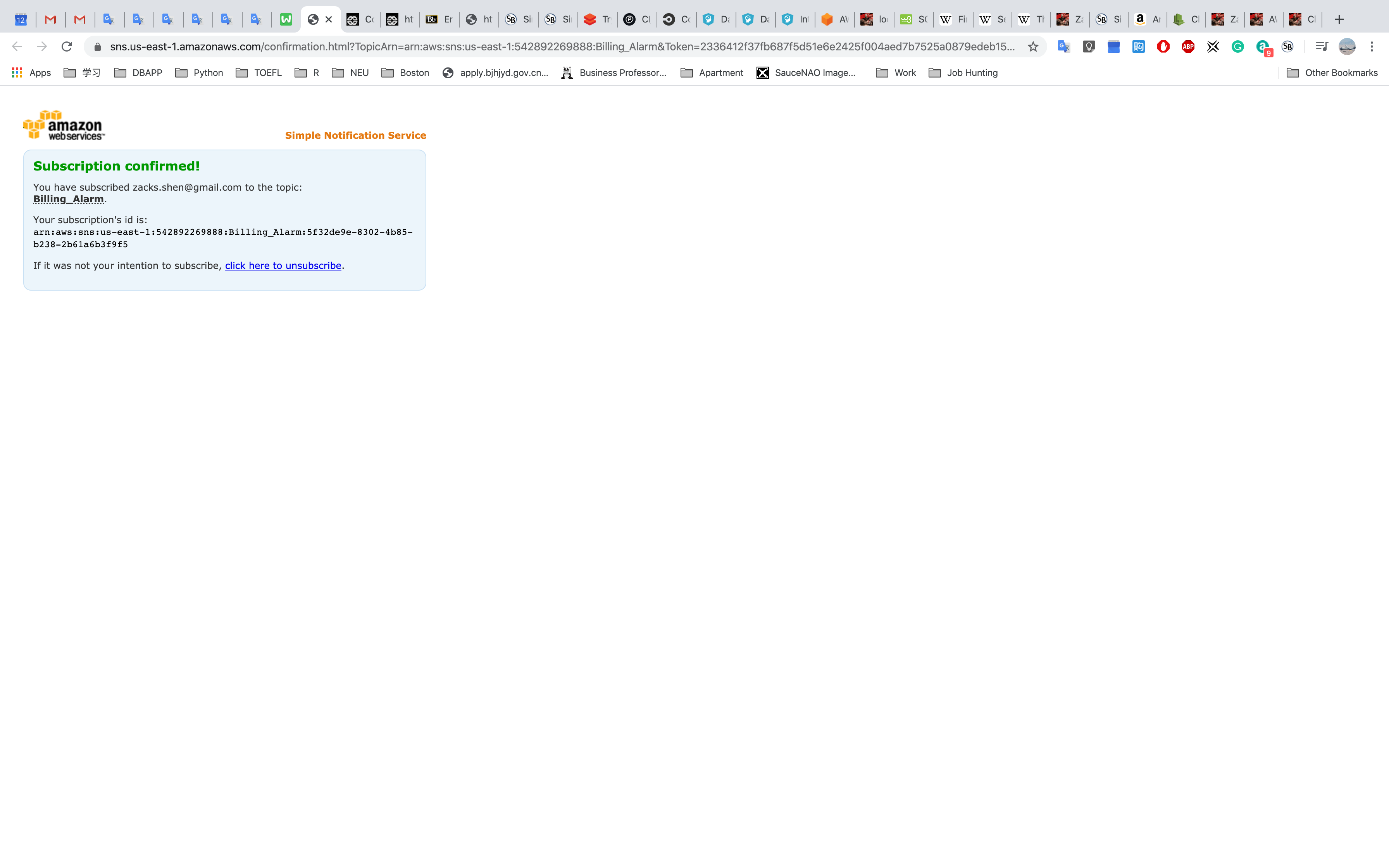

Open your email and Click Confirm subscription

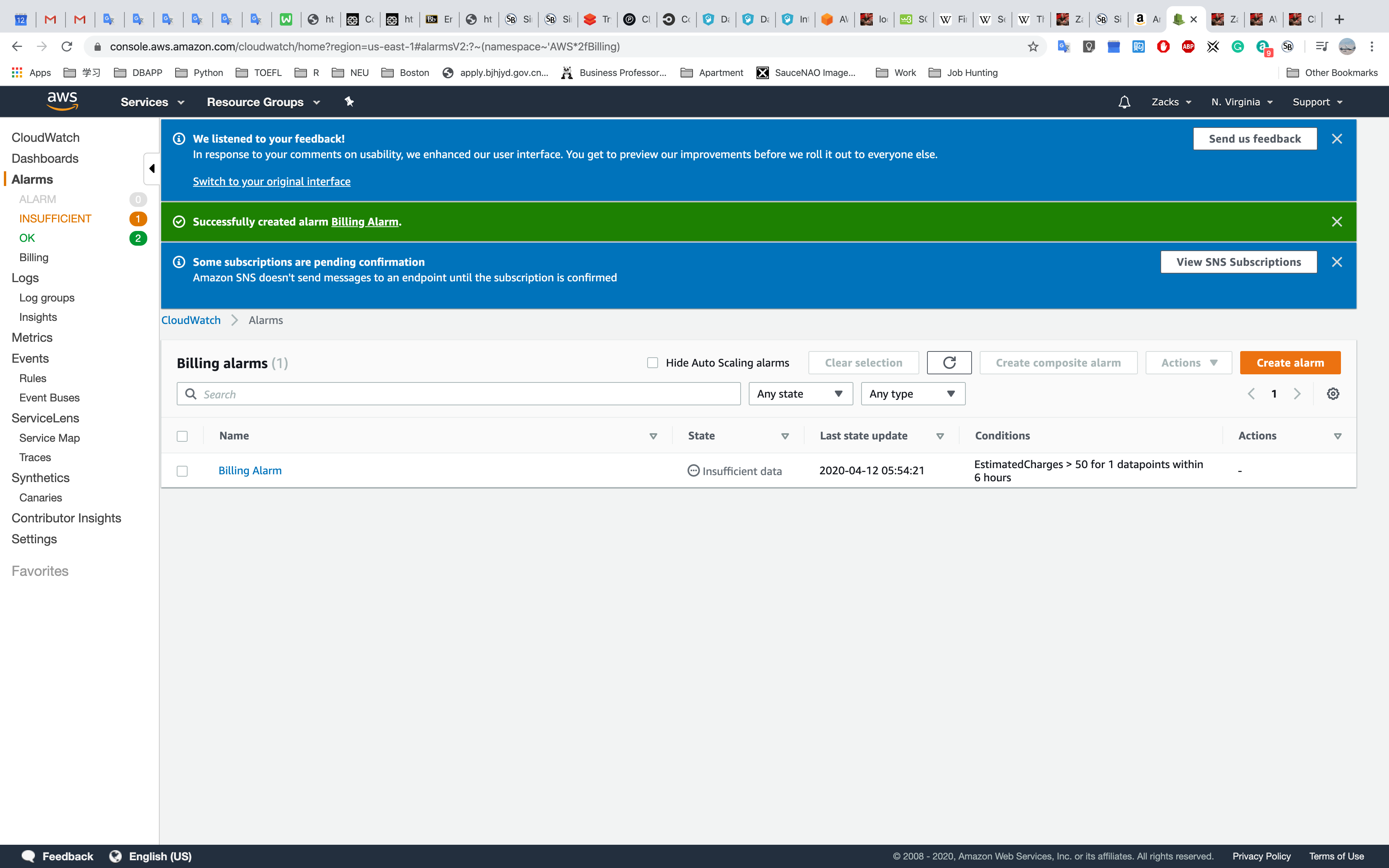

Go back to AWS Management Console and Refresh the page

AWS Auto Scaling

Scale multiple resources to meet demand

AWS Chatbot

ChatOps for AWS

- Receive notifications

- Retrieve diagnostic information

- Pre-defined AWS Identity and Access Management policy templates

- Supports Slack and Amazon Chime

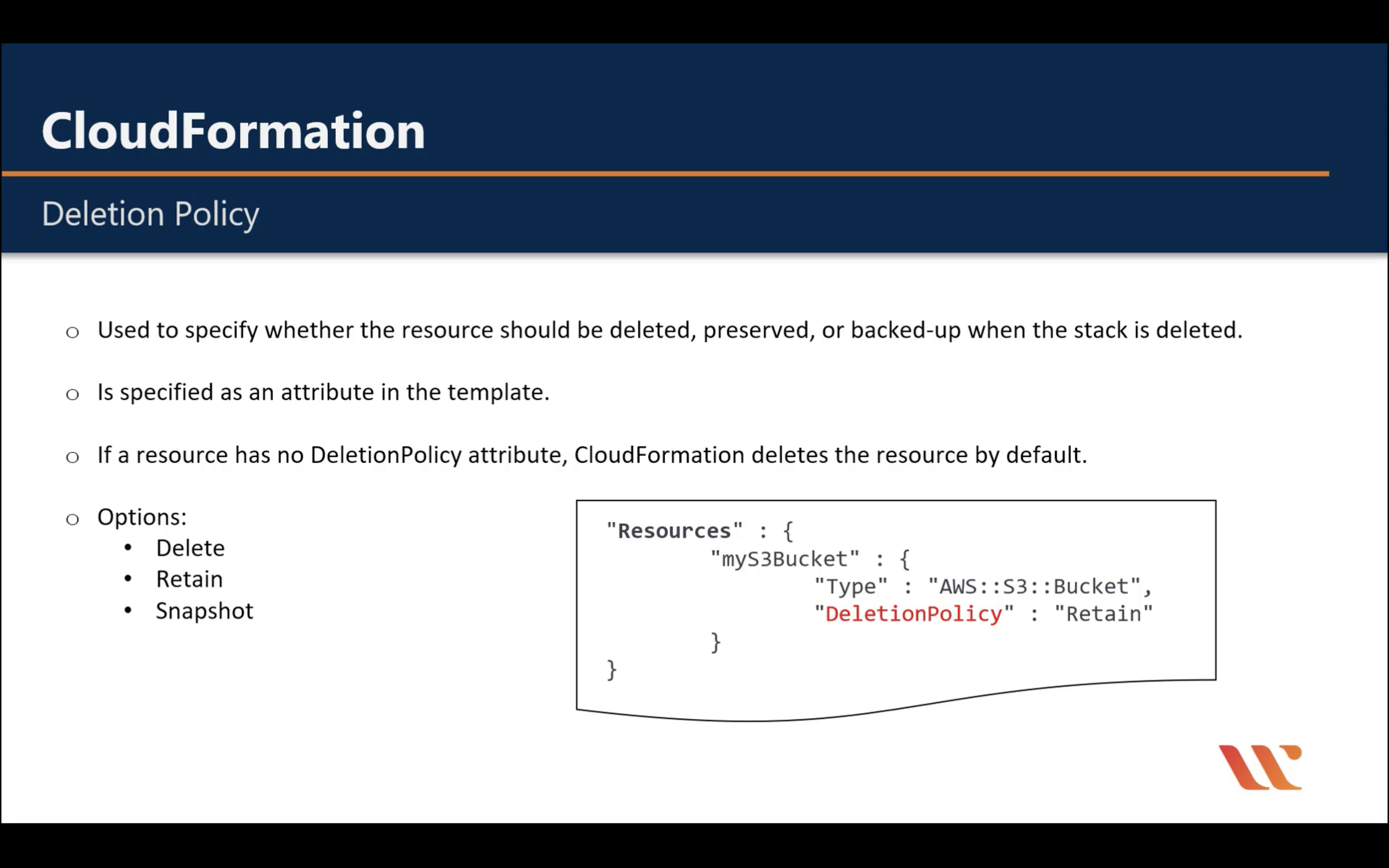

AWS CloudFormation

Create and manage resources with templates

AWS CloudFormation Drift Detection can be used to detect changes made to AWS resources outside the CloudFormation Templates. AWS CloudFormation Drift Detection only checks property values that are explicitly set by stack templates or by specifying template parameters. It does not determine drift for property values that are set by default. To determine drift for these resources, you can explicitly set property values which can be the same as that of the default value.

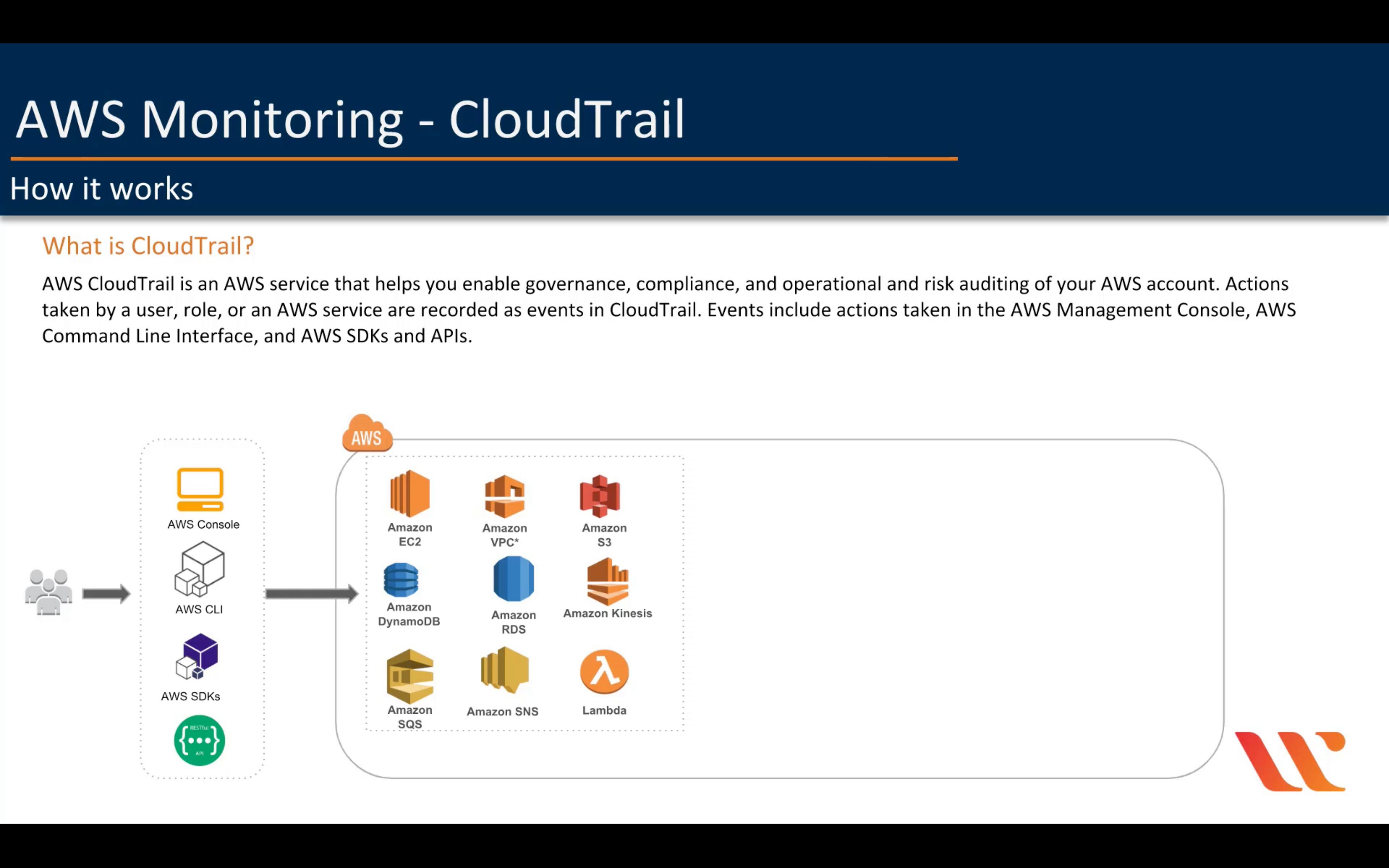

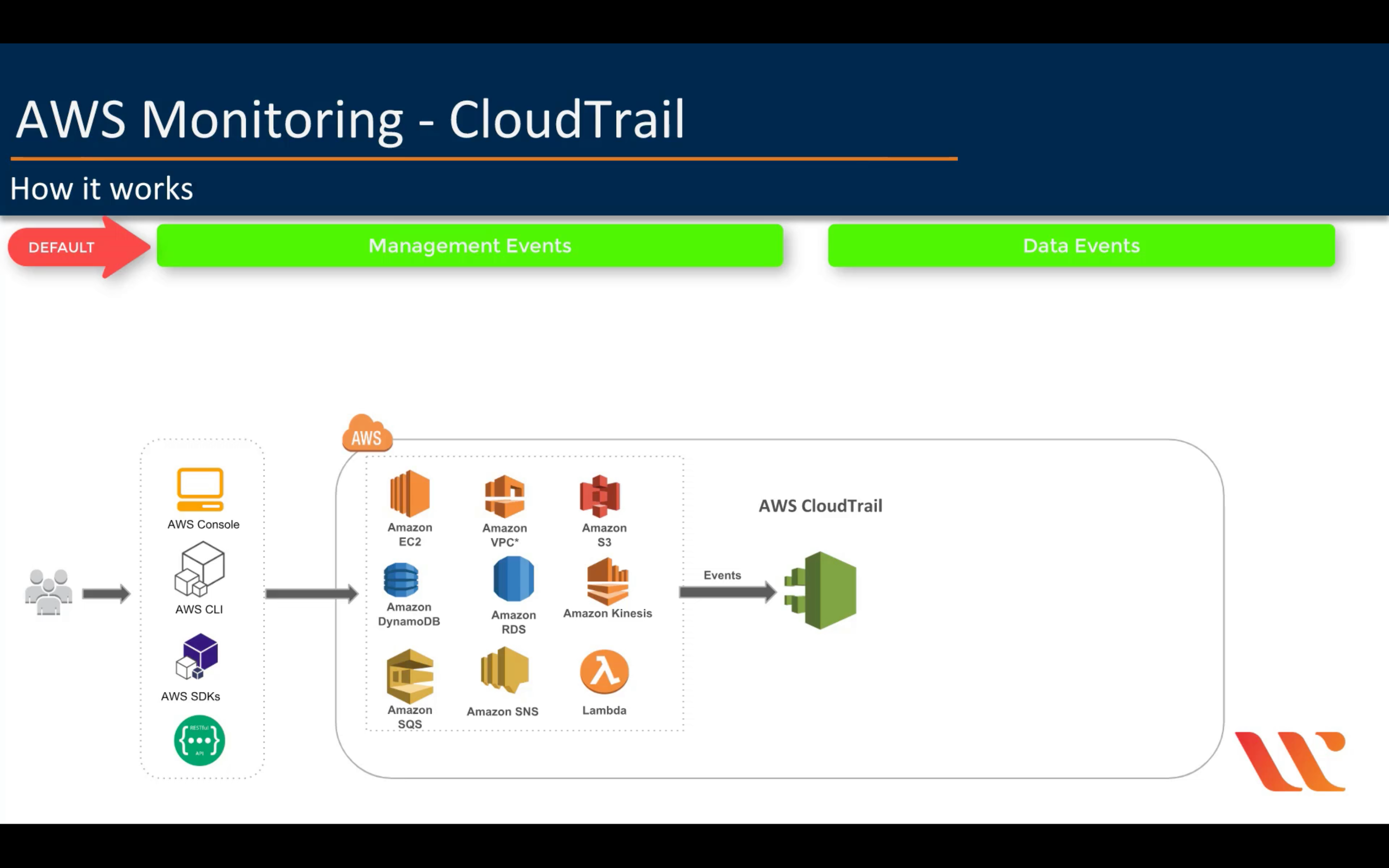

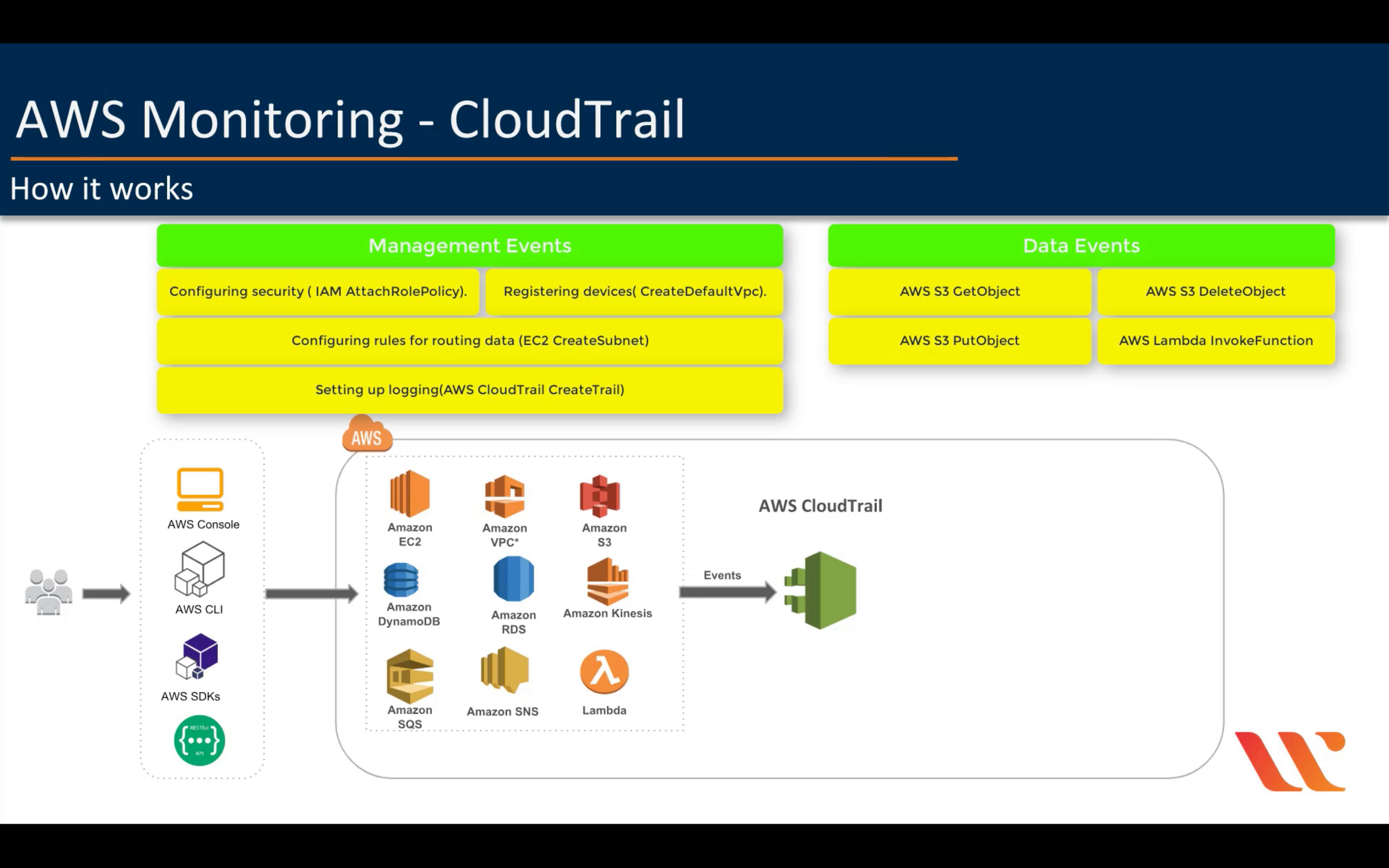

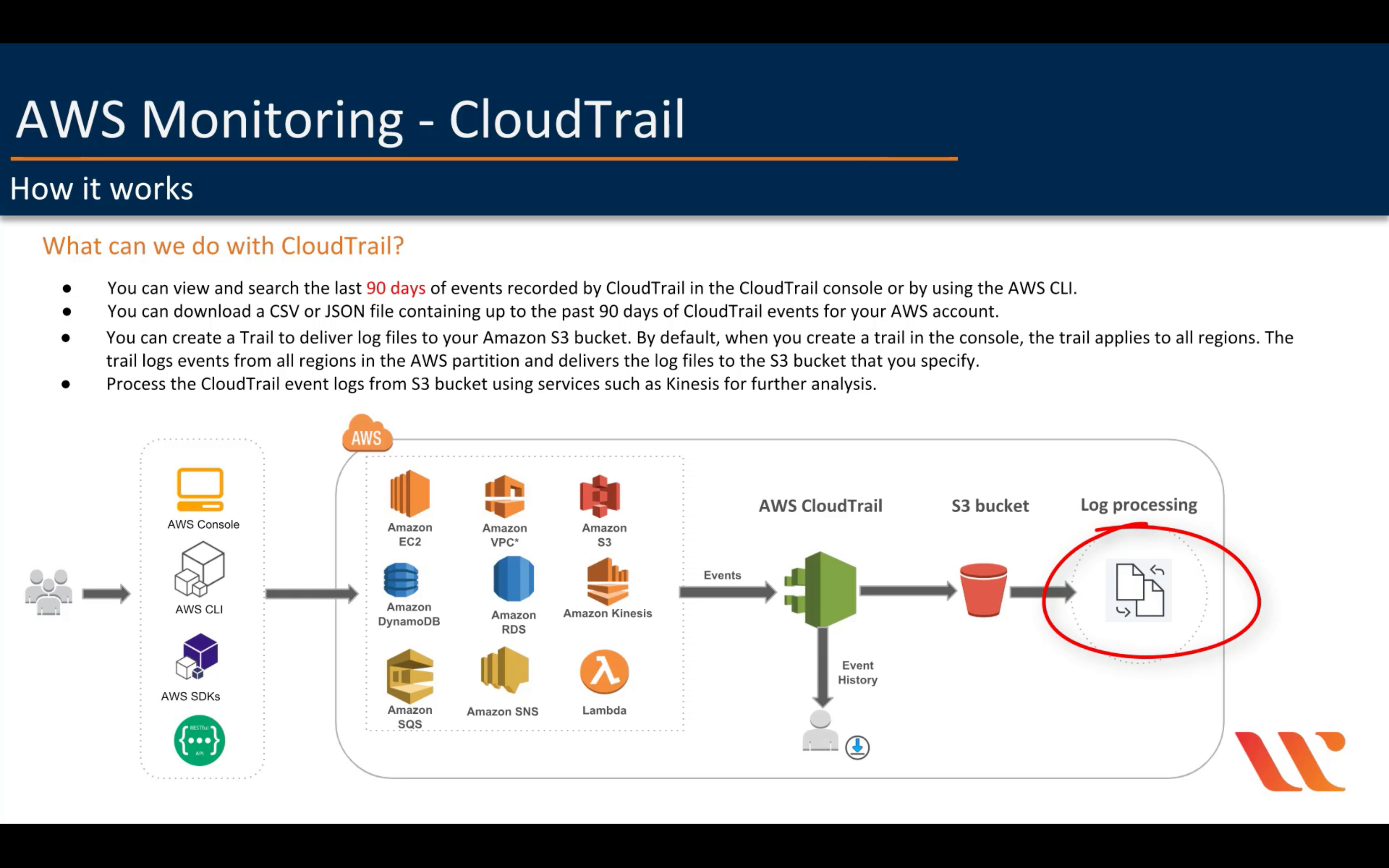

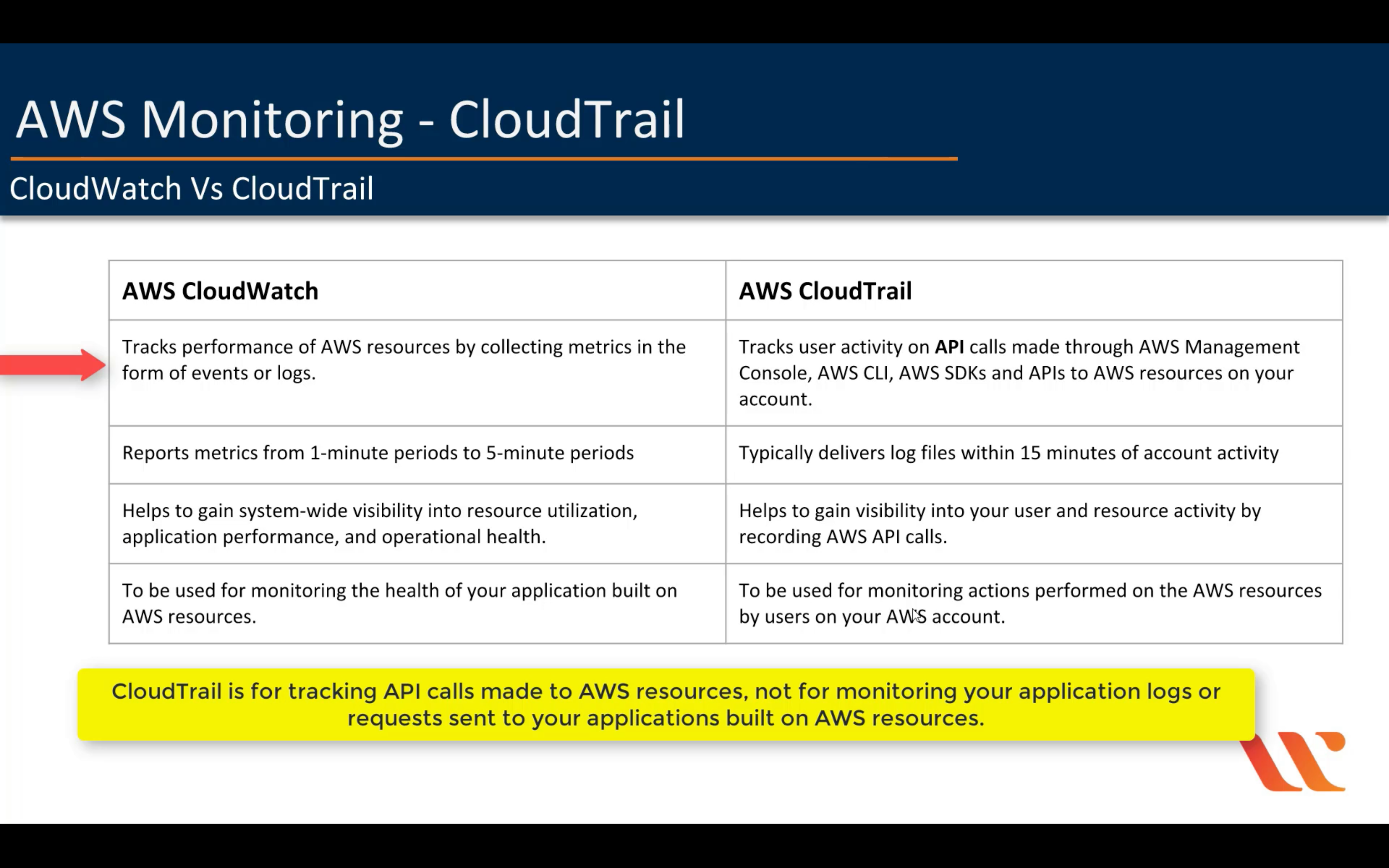

AWS CloudTrail

Track user activity and API usage

By default, CloudTrail logs management events, not data events.

AWS Command Line Interface

Unified tool to manage AWS services

AWS Compute Optimizer

Identify optimal AWS Compute resources

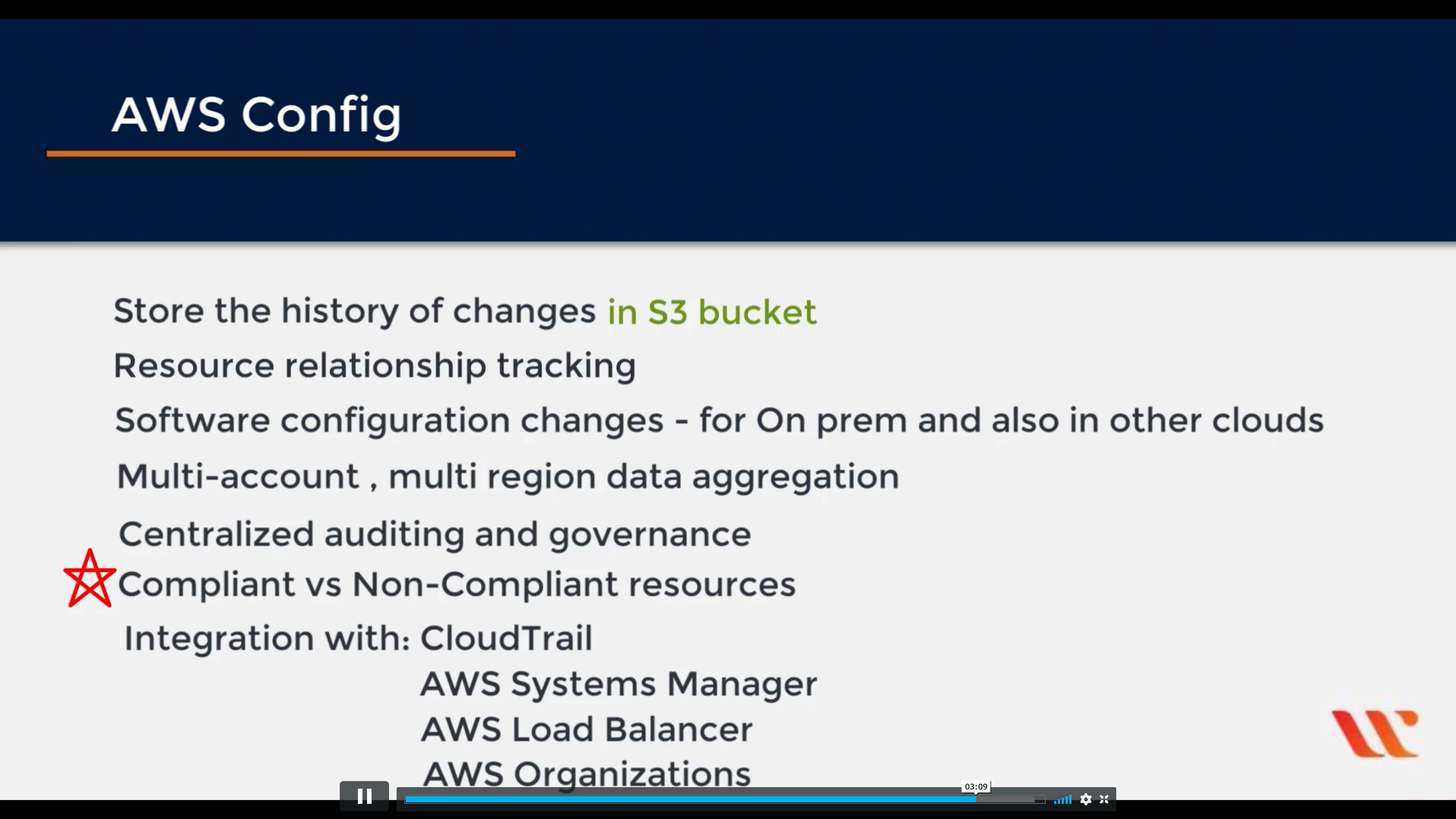

AWS Config

Track resources inventory and changes

AWS Control Tower

Set up and govern a secure, compliant multi-account environment

AWS Console Mobile Application

Access resources on the go

AWS License Manager

Track, manage, and control licenses

AWS Management Console

Web-based user interface

AWS Managed Services

Infrastructure operations management for AWS

AWS OpsWorks

Automate operations with Chef and Puppet

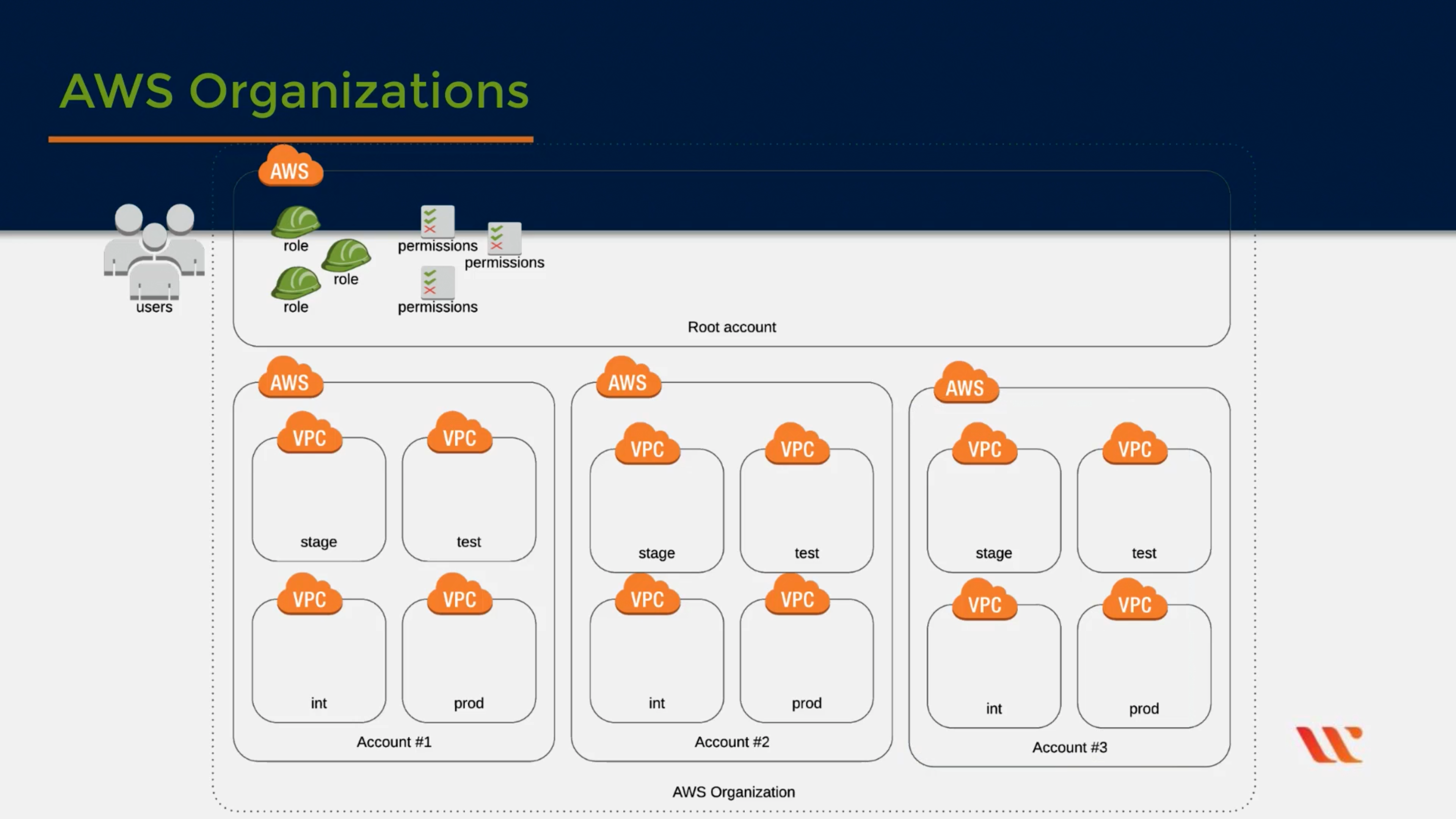

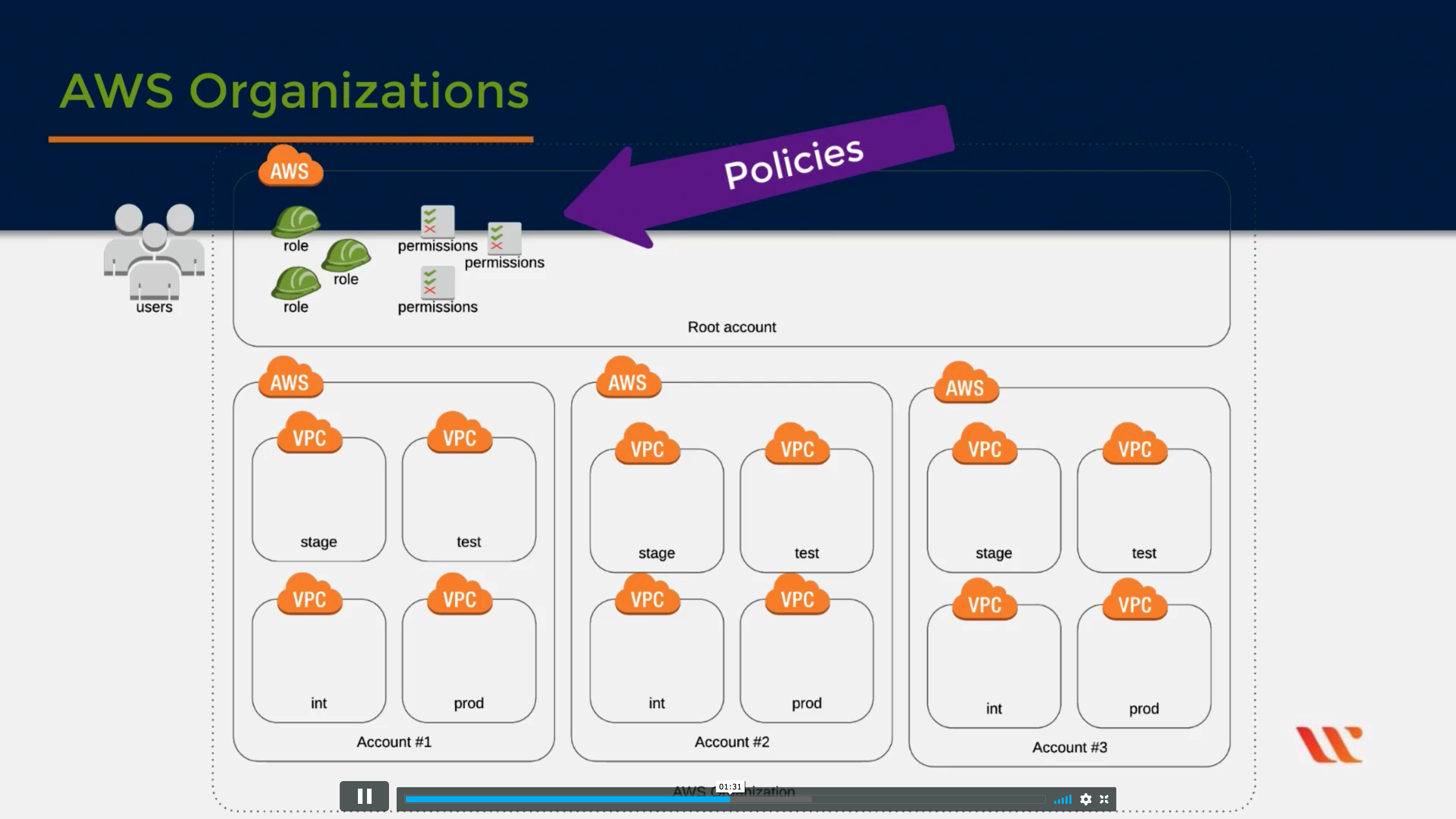

AWS Organizations

Central governance and management across AWS accounts

Service control policies

AWS Personal Health Dashboard

Personalized view of AWS service health

AWS Service Catalog

Create and use standardized products

AWS Systems Manager

Gain operational insights and take action

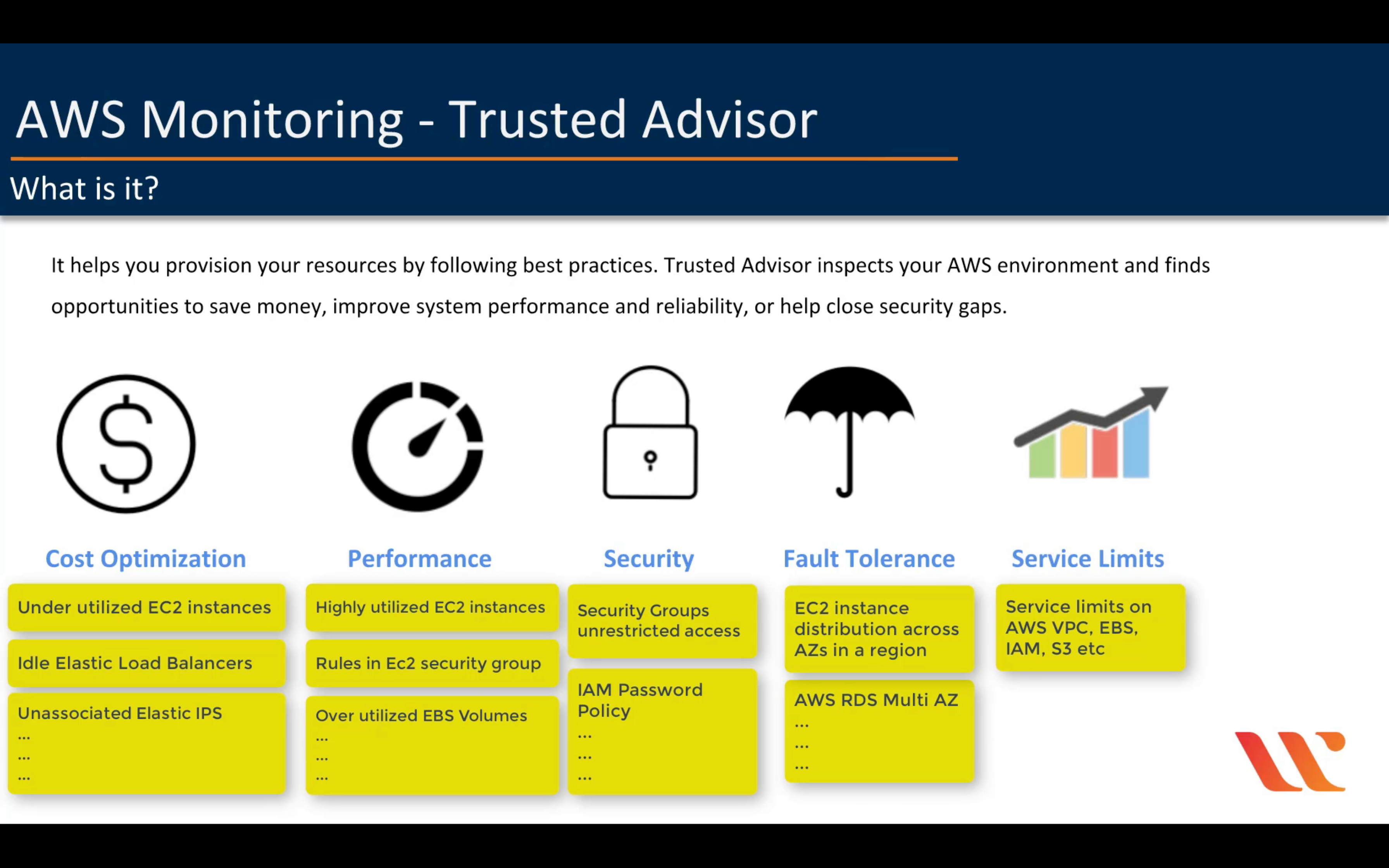

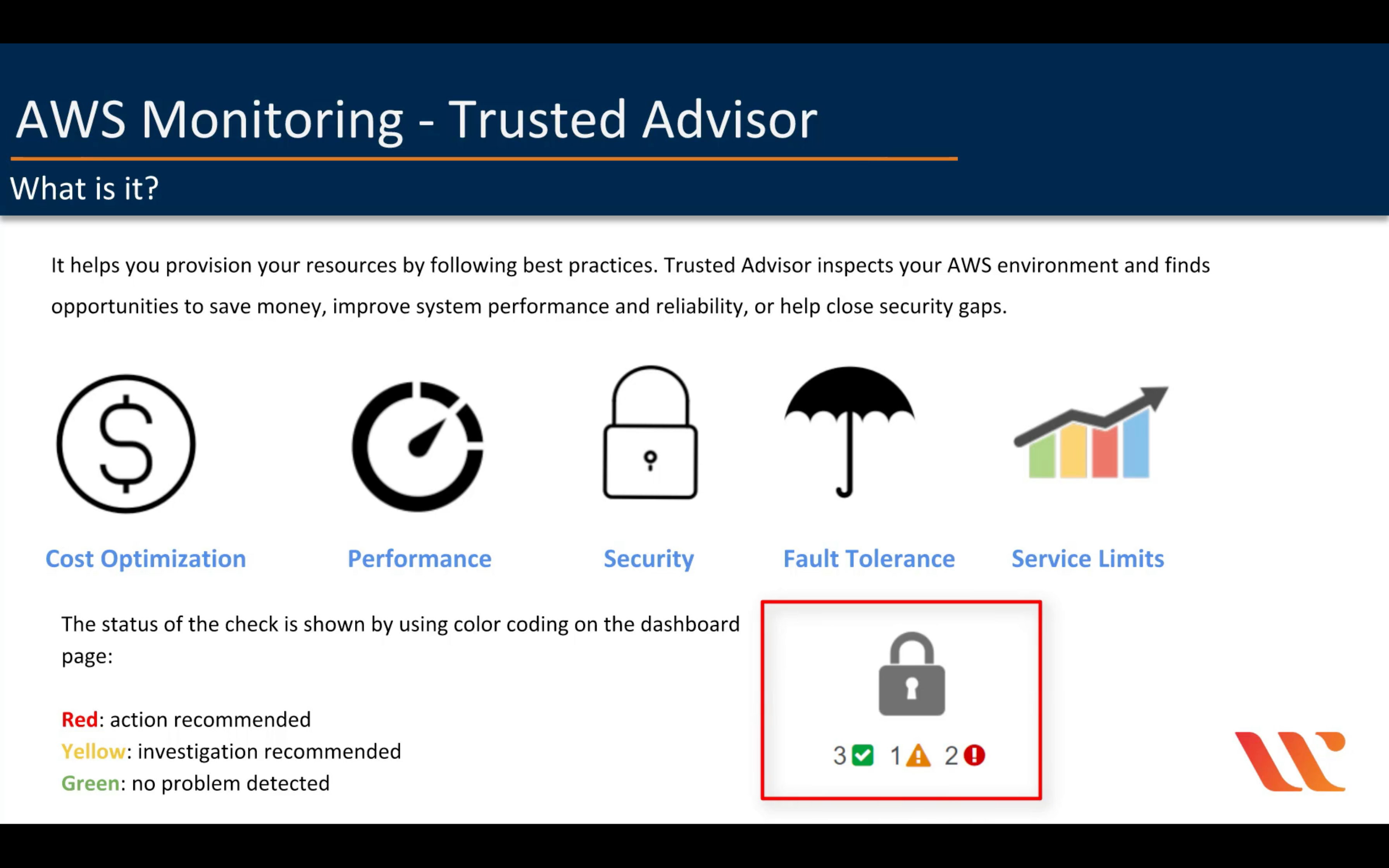

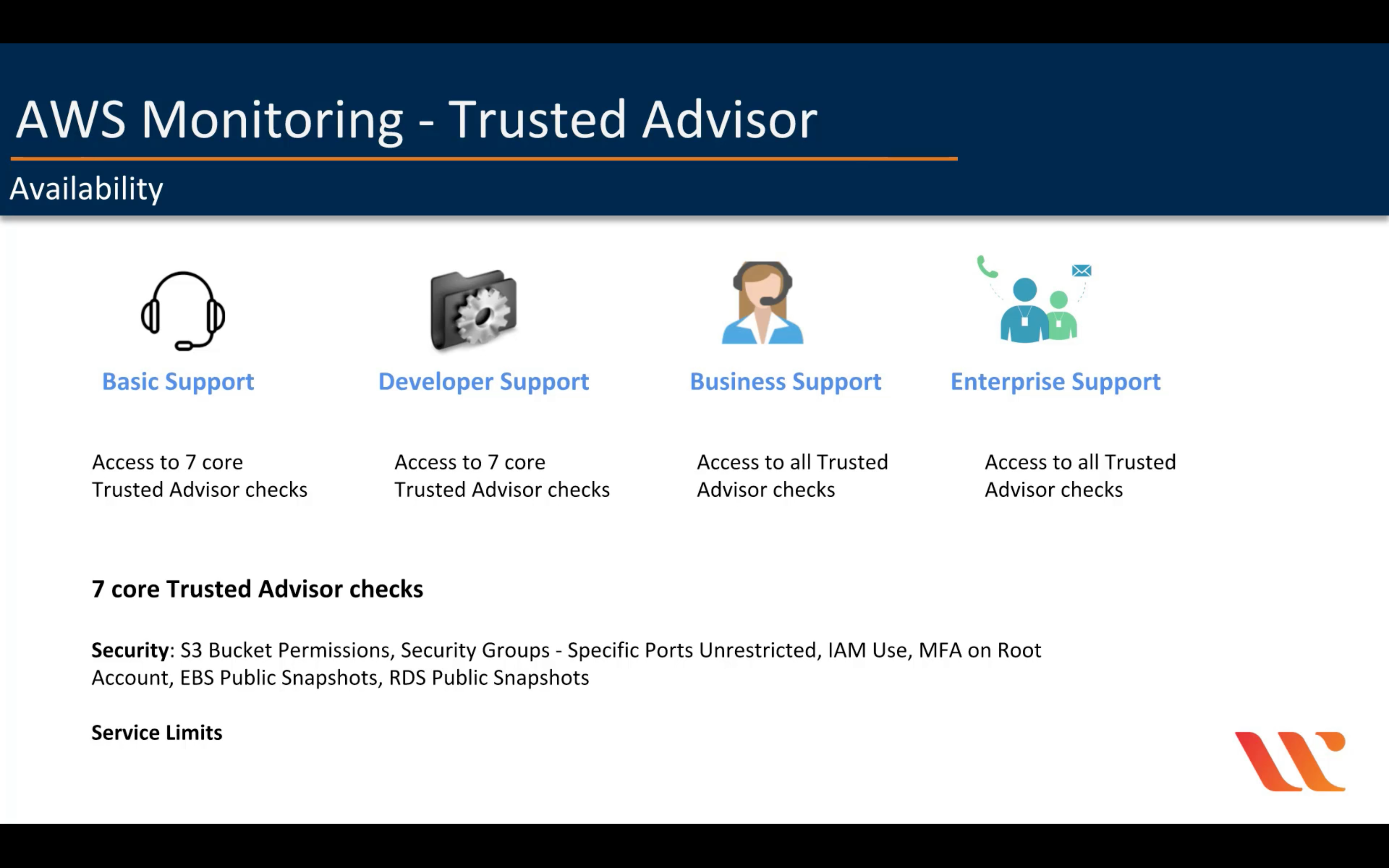

AWS Trusted Advisor

Optimize performance and security

AWS Well-Architected Tool

Review and improve your workloads

Security, Identity, & Compliance

AWS Identity and Access Management (IAM)

Securely manage access to services and resources

Enhanced security

Granular control

Temporary credentials

Analyze access

Flexible security credential management

Leverage external identity systems

Seamlessly integrated into AWS services

Centralized control of your AWS account

Shared Access to your AWS account

Granular Permissions

Identity Federation (including Active Directory, Facebook, LinkedIn etc)

Multifactor Authentication

Provide temporary access for users/devices and services where necessary

Allows you to set up your own password rotation policy

Integrated with many different AWS services

Supports PCI DSS Compliance

Amazon Cognito

Identity management for your apps

Amazon Detective

Investigate potential security issues

Amazon GuardDuty

Managed threat detection service

Amazon Inspector

Analyze application security

Amazon Macie

Discover, classify, and protect your data

AWS Artifact

On-demand access to AWS’ compliance reports

AWS Certificate Manager

Provision, manage, and deploy SSL/TLS certificates

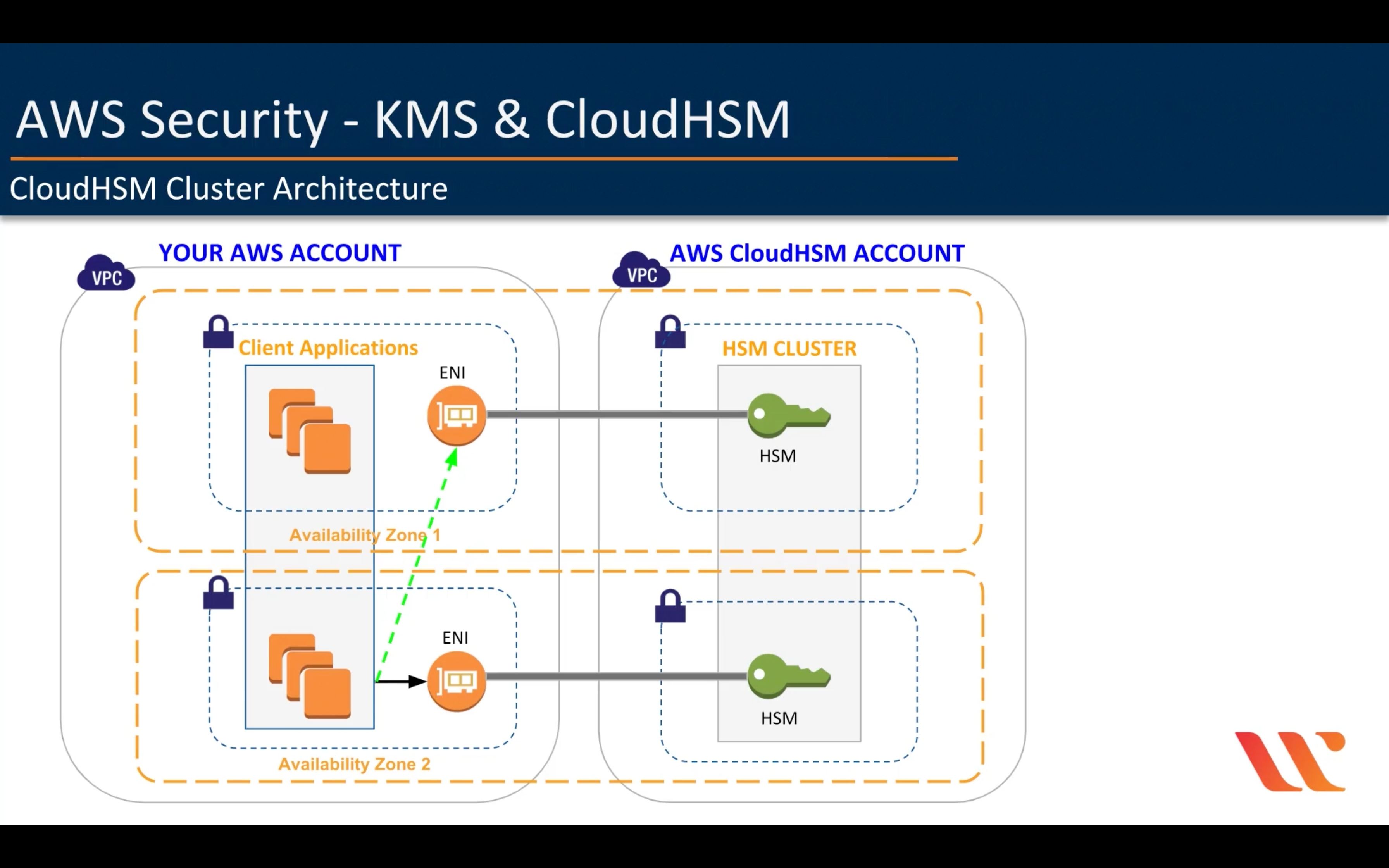

AWS CloudHSM

Hardware-based key storage for regulatory compliance

AWS Directory Service

Host and manage Active Directory

AWS Firewall Manager

Central management of firewall rules

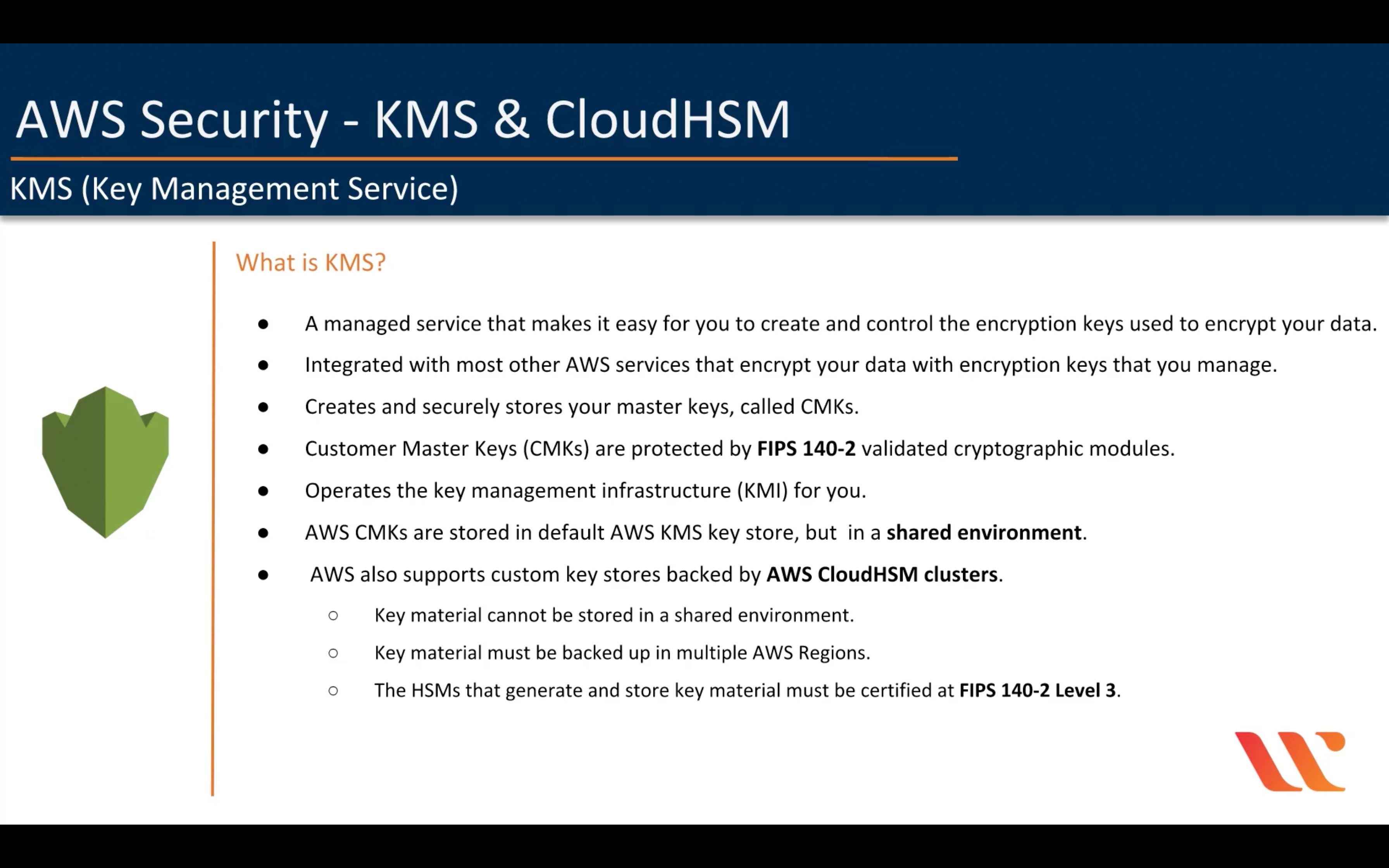

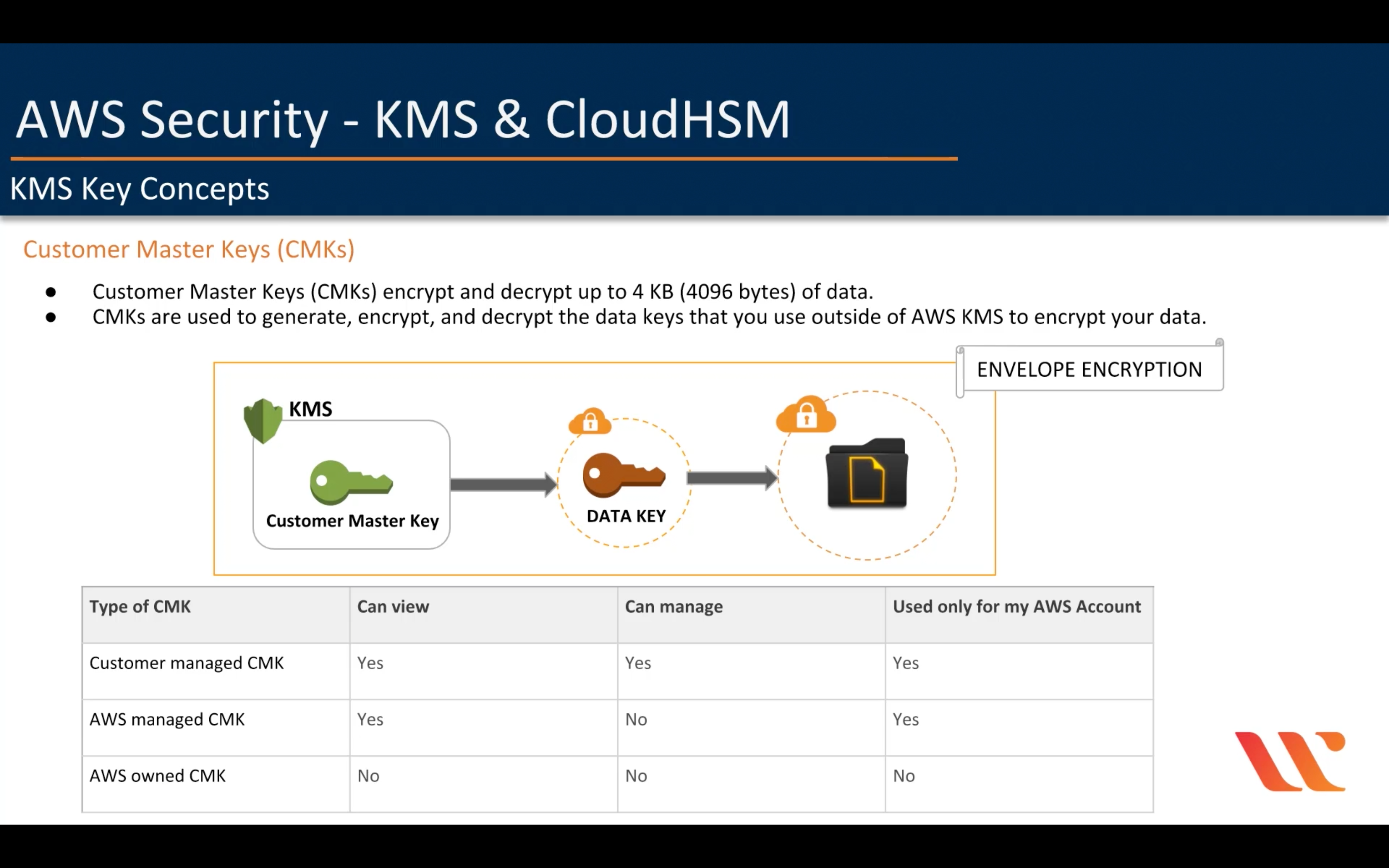

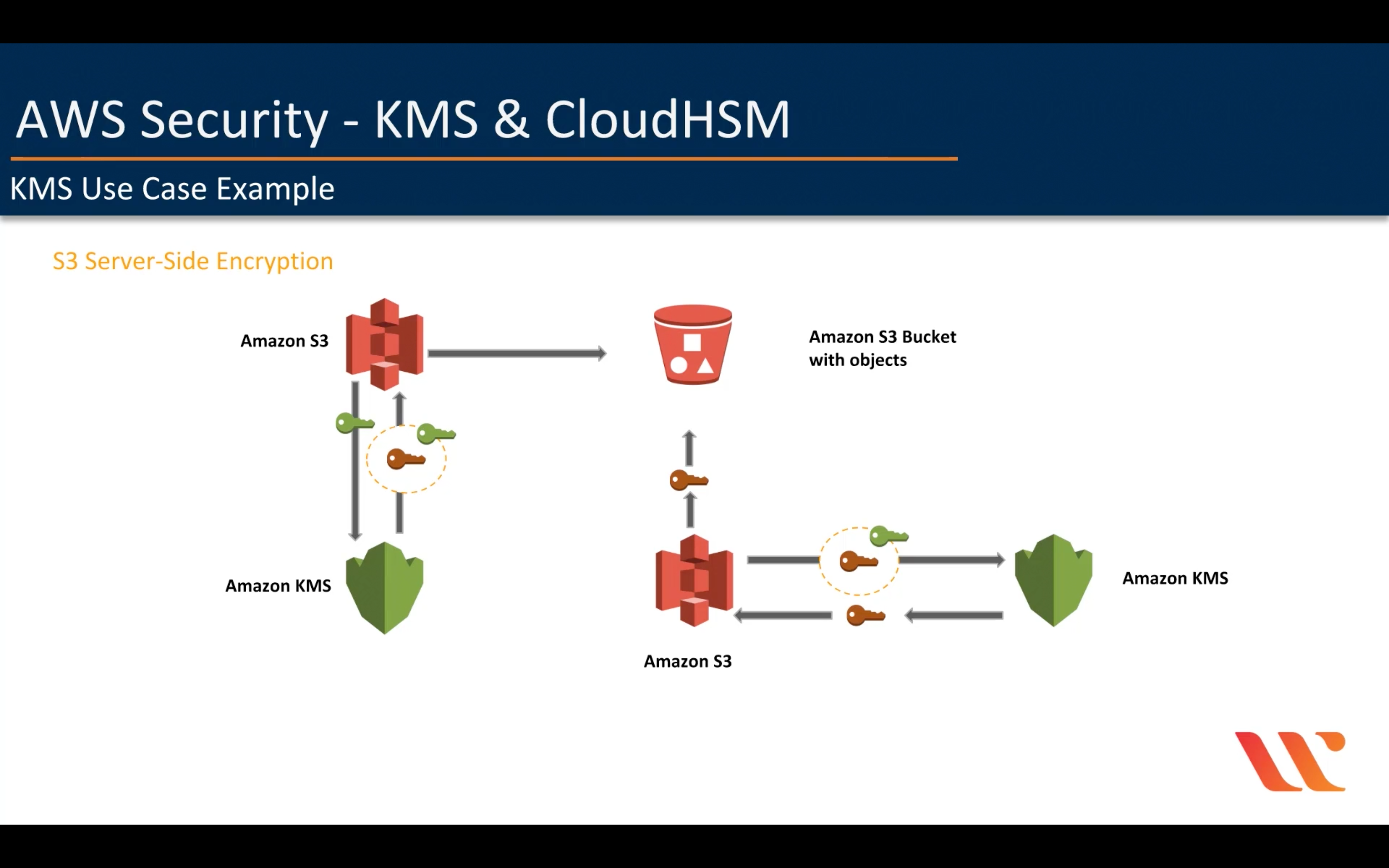

AWS KMS (Key Management Service)

Managed creation and control of encryption keys

- Fully managed

- Centralized key management

- Manage encryption for AWS services

- Encrypt data in your applications

- Digitally sign data

- Low cost

- Secure

- Compliance

- Built-in auditing

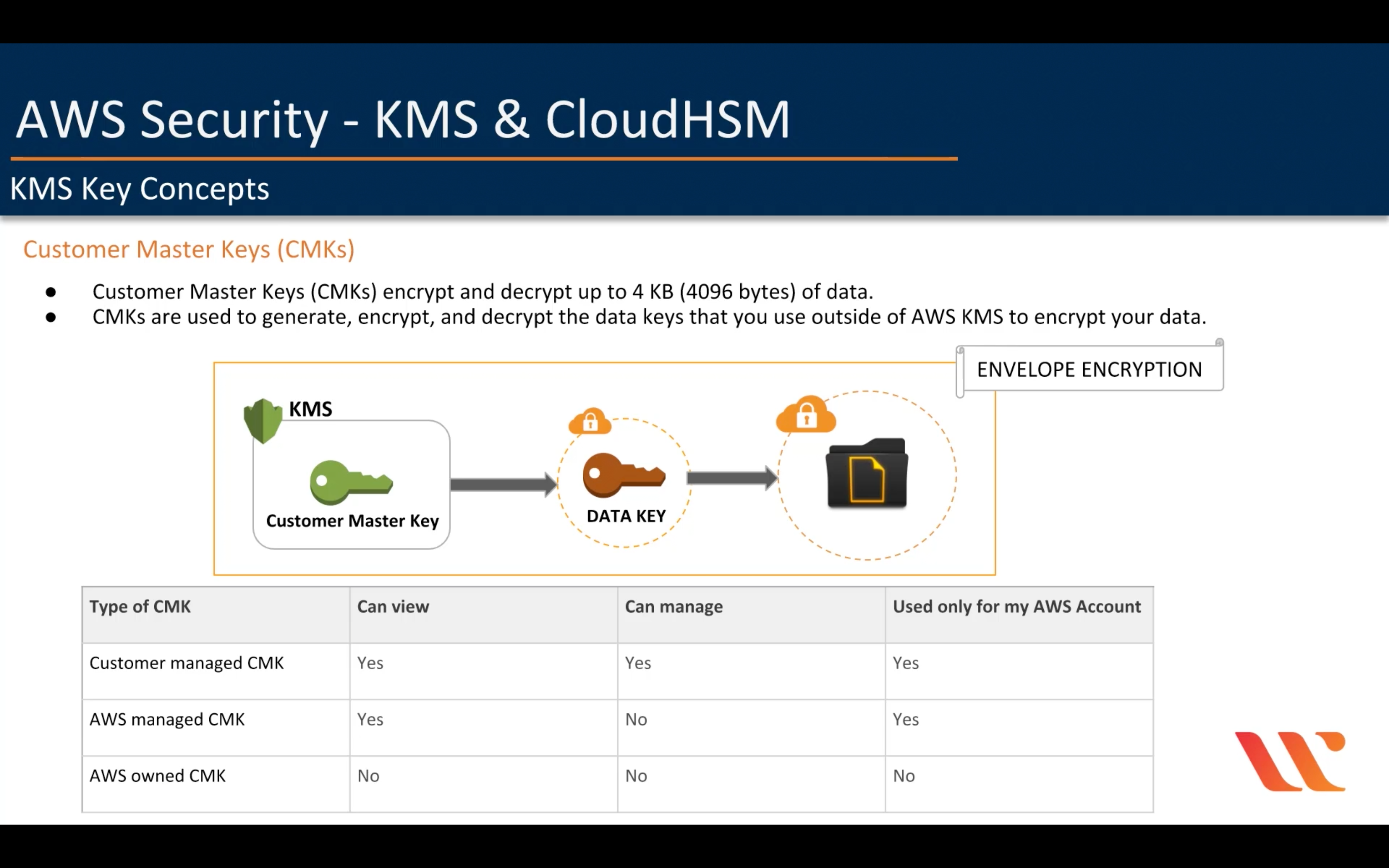

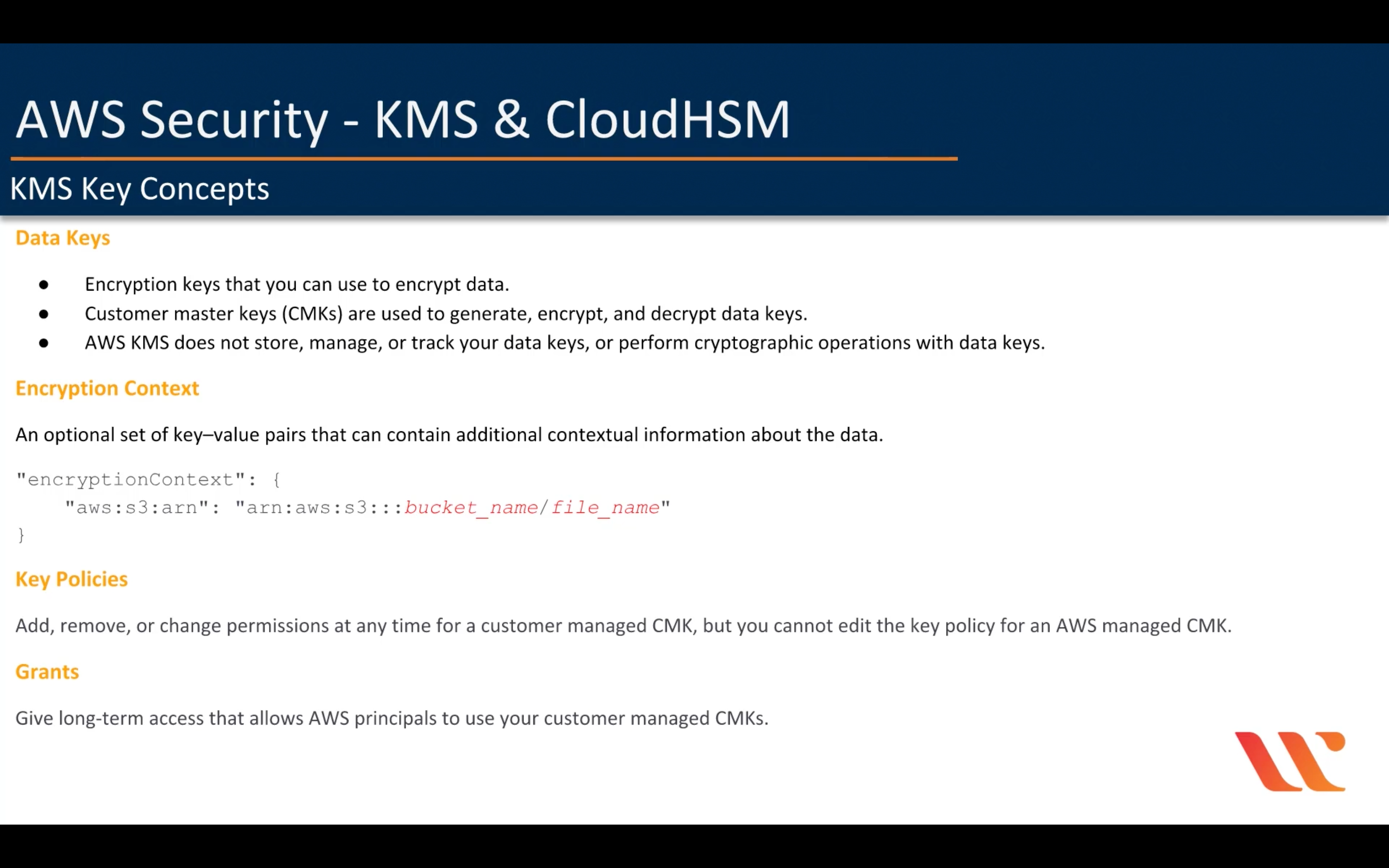

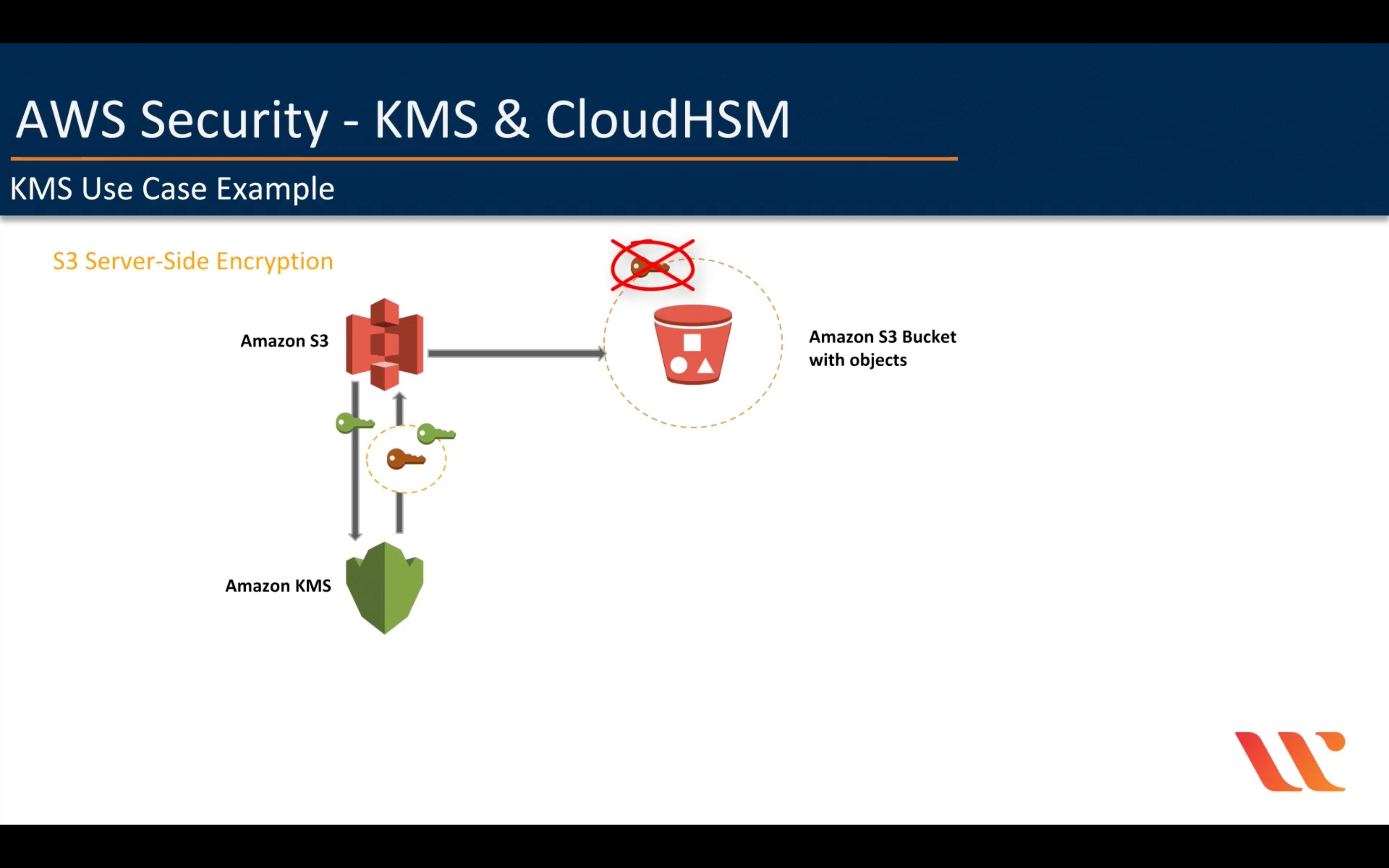

KMS Key Concepts

S3 sends a request to KMS that identifies the CMK you chose.

S3 discards the data key after it finishes the encryption or decryption process, which means S3 must request a data key from KMS every time.

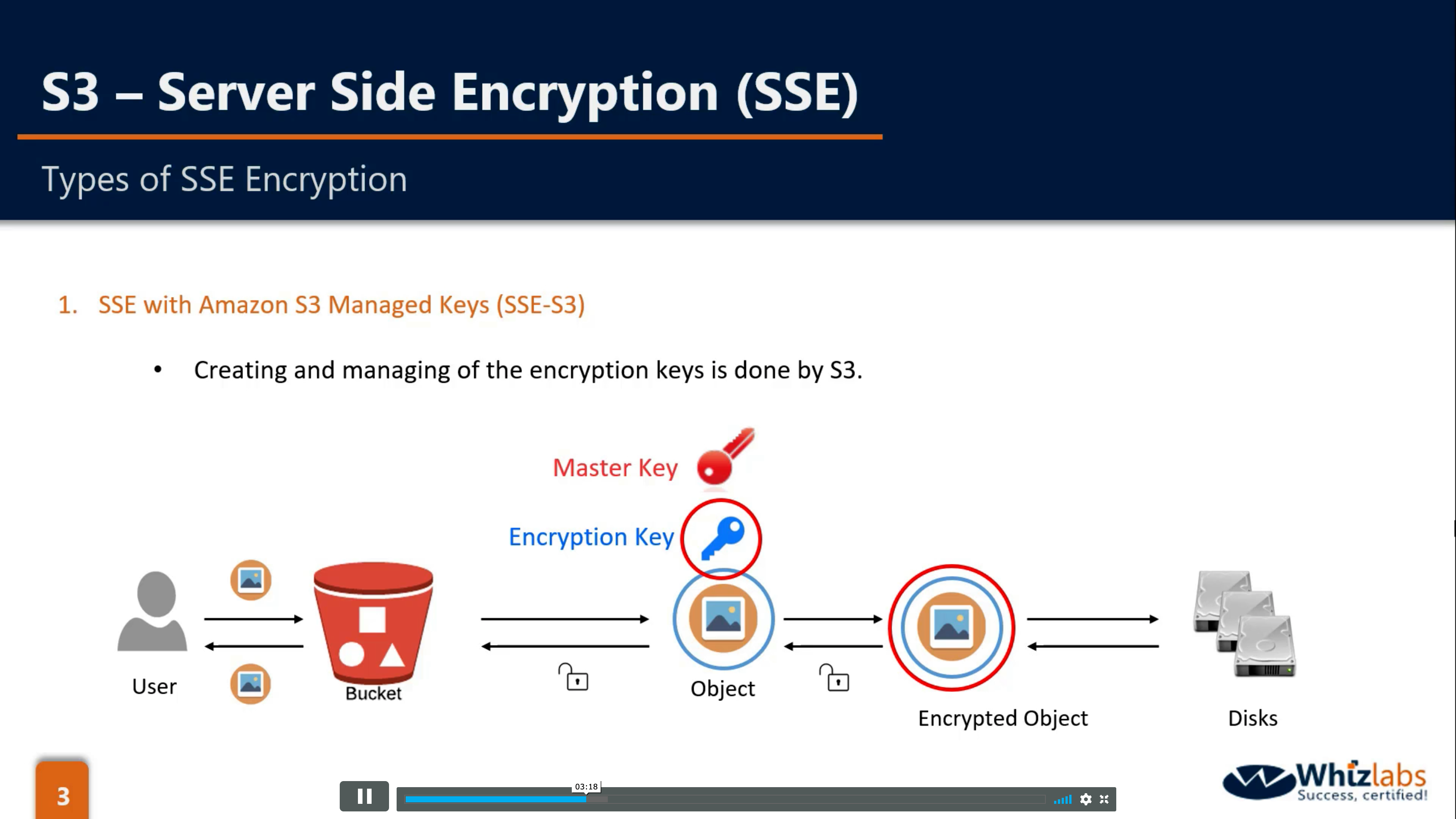

| # | Option | Key Generation | Key Storage | Key Usage |

|---|---|---|---|---|

| 1 | SSE-S3-Managed Keys | S3 | S3 | Server-side (S3) |

| 2(a) | SSE-KMS-Managed keys | KMS | KMS | Server-side (S3) |

| 2(b) | SSE-KMS-Managed Keys | Customer | KMS | Server-side (S3) |

| 3 | SSE-Customer-Provided Keys | Customer | Customer | Server-side (S3) |

| 4(a) | CSE KMS-Managed CMK | KMS | KMS | Client-side (customer application) |

| 4(b) | CSE KMS-Managed CMK | Customer | KMS | Client-side (customer application) |

| 5 | CSE Client-side Master Key | Customer | Customer | Client-side (customer application) |

CloudHSM

AWS Resource Access Manager

Simple, secure service to share AWS resources

AWS Secrets Manager

Rotate, manage, and retrieve secrets

AWS Security Hub

Unified security and compliance center

AWS Shield

DDoS protection

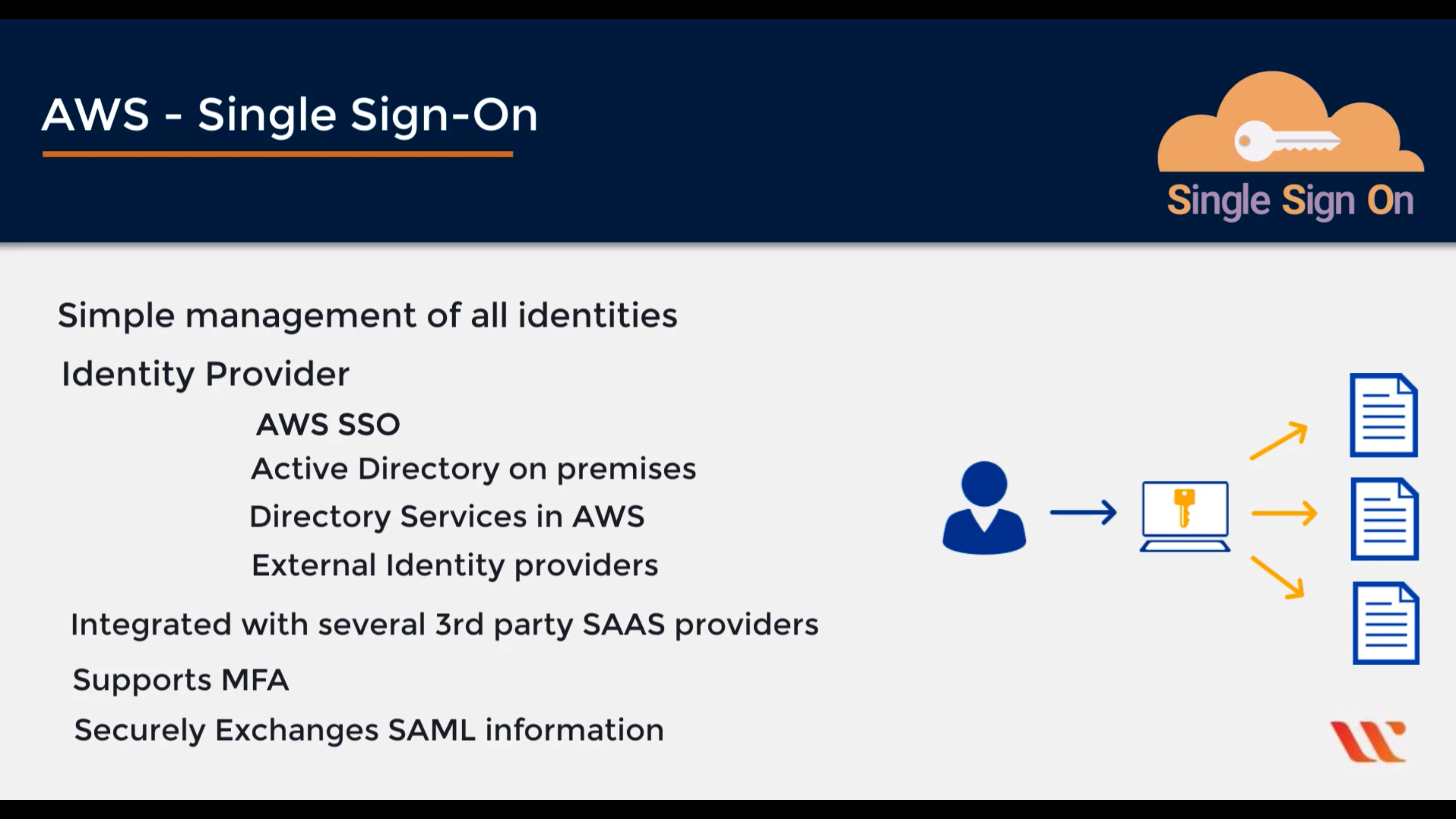

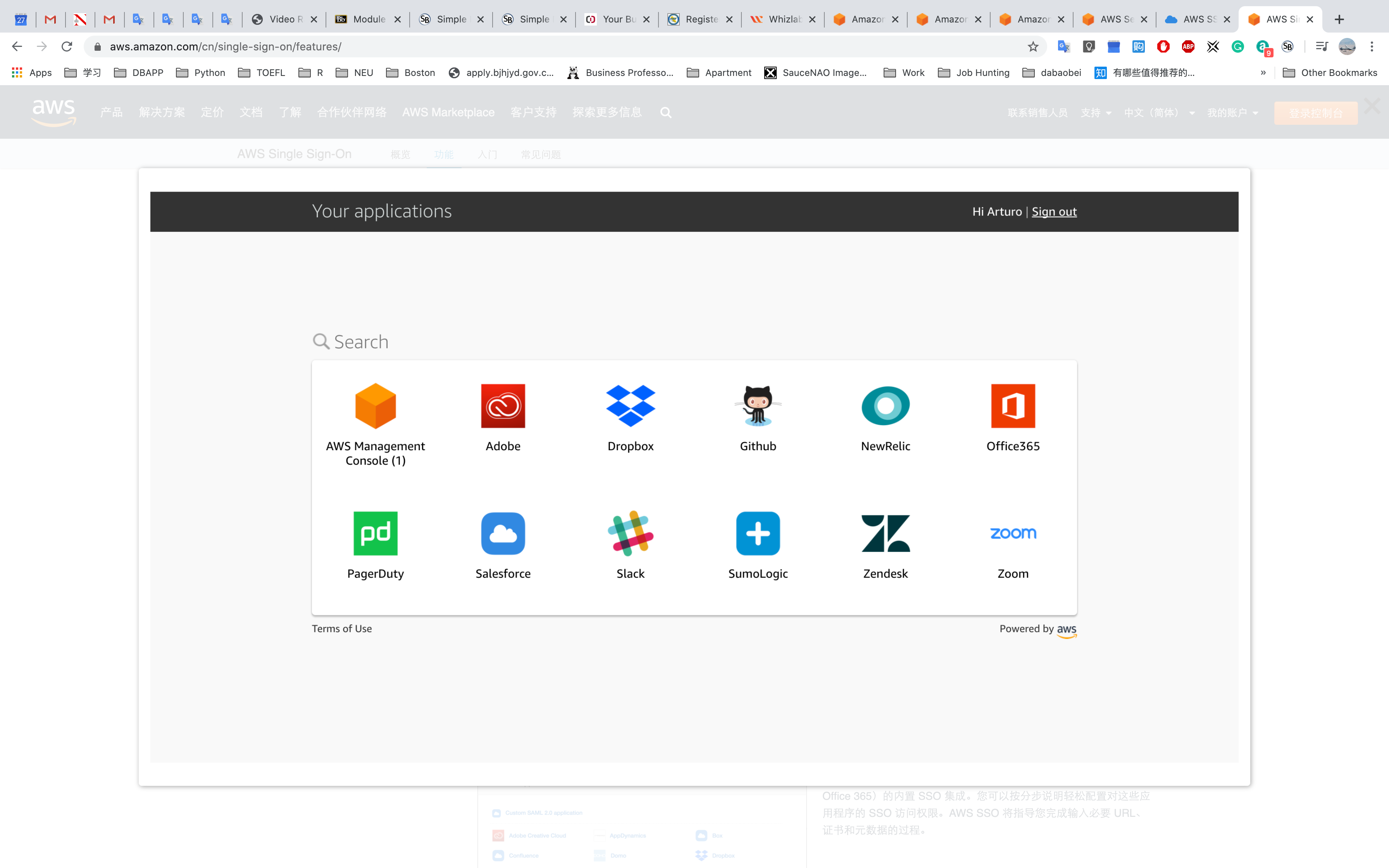

AWS Single Sign-On

Cloud single sign-on (SSO) service

AWS Single Sign-On (SSO) makes it easy to centrally manage access to multiple AWS accounts and business applications and provide users with single sign-on access to all their assigned accounts and applications from one place.

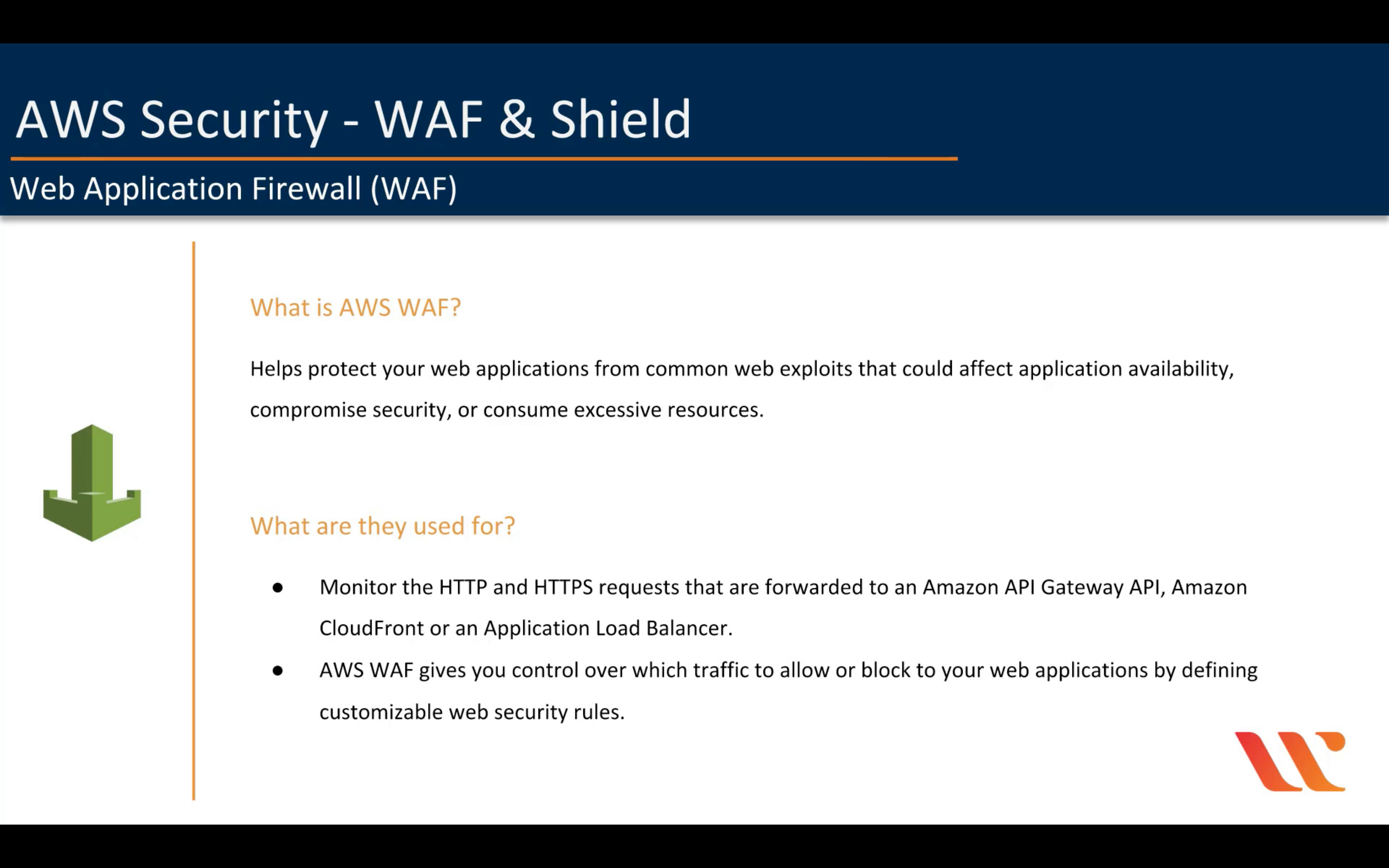

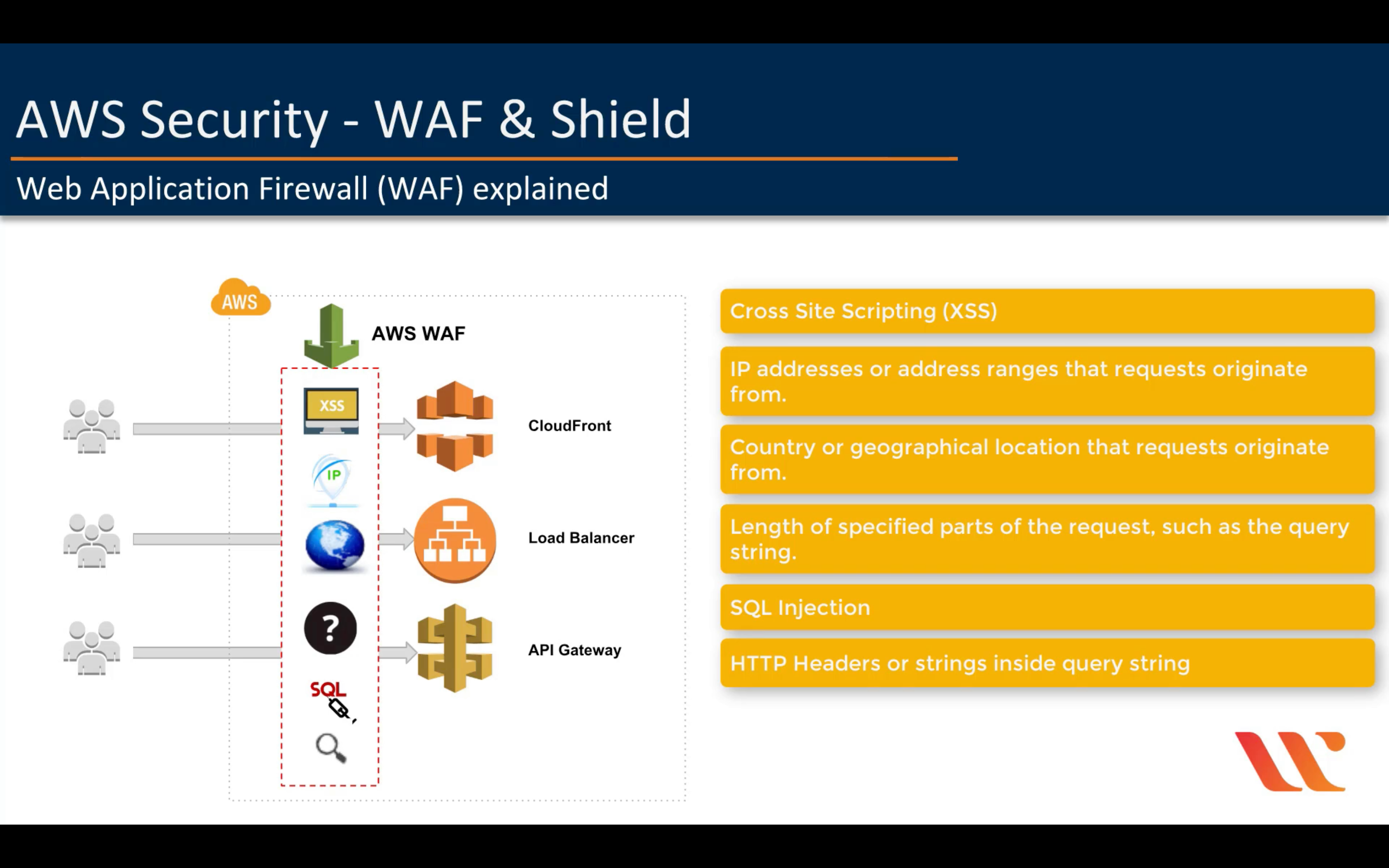

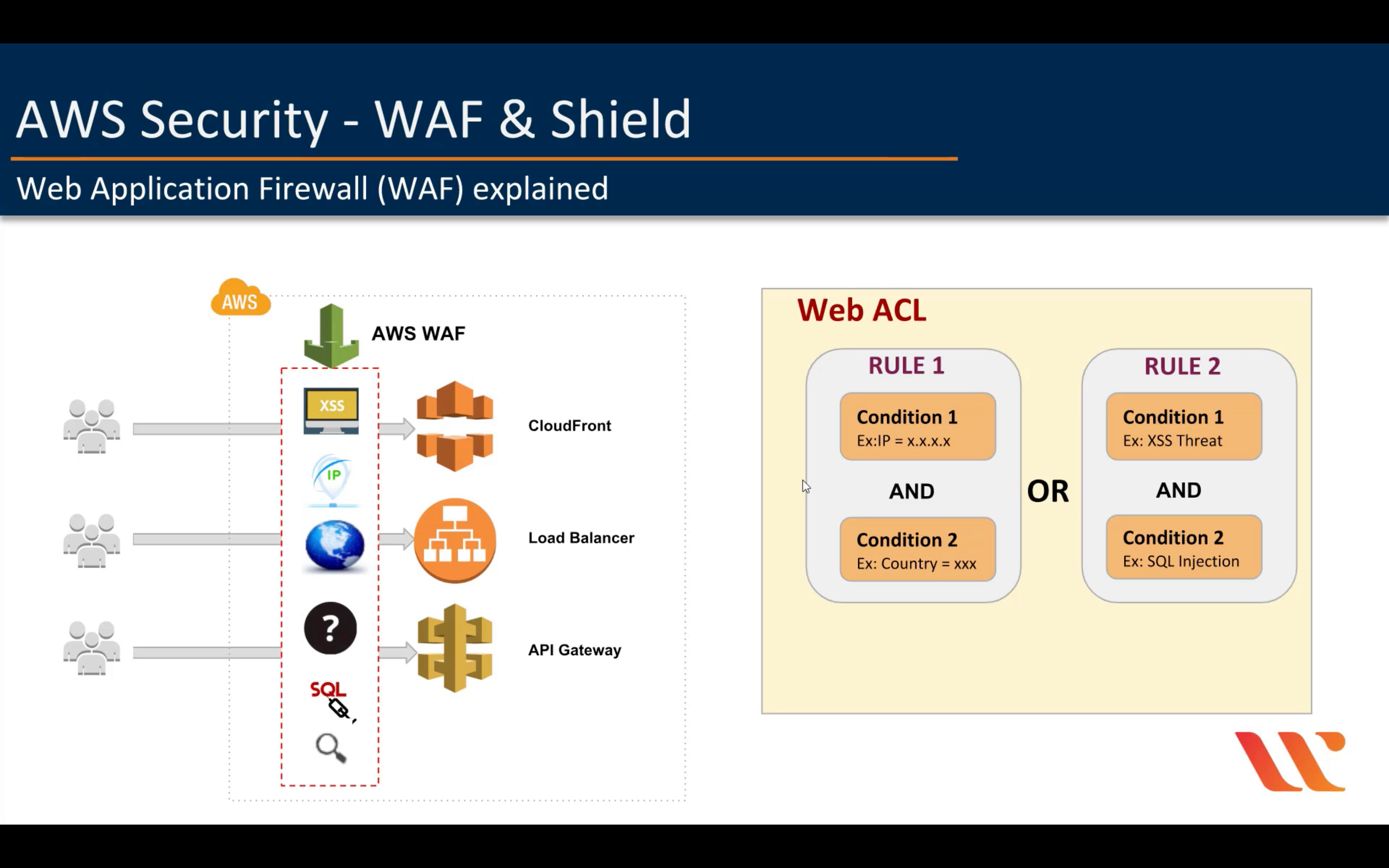

AWS WAF

Filter malicious web traffic

Migration & Transfer

AWS Migration Hub

Track migrations from a single place

AWS Application Discovery Service

Discover on-premises applications to streamline migration

AWS Database Migration Service

Migrate databases with minimal downtime

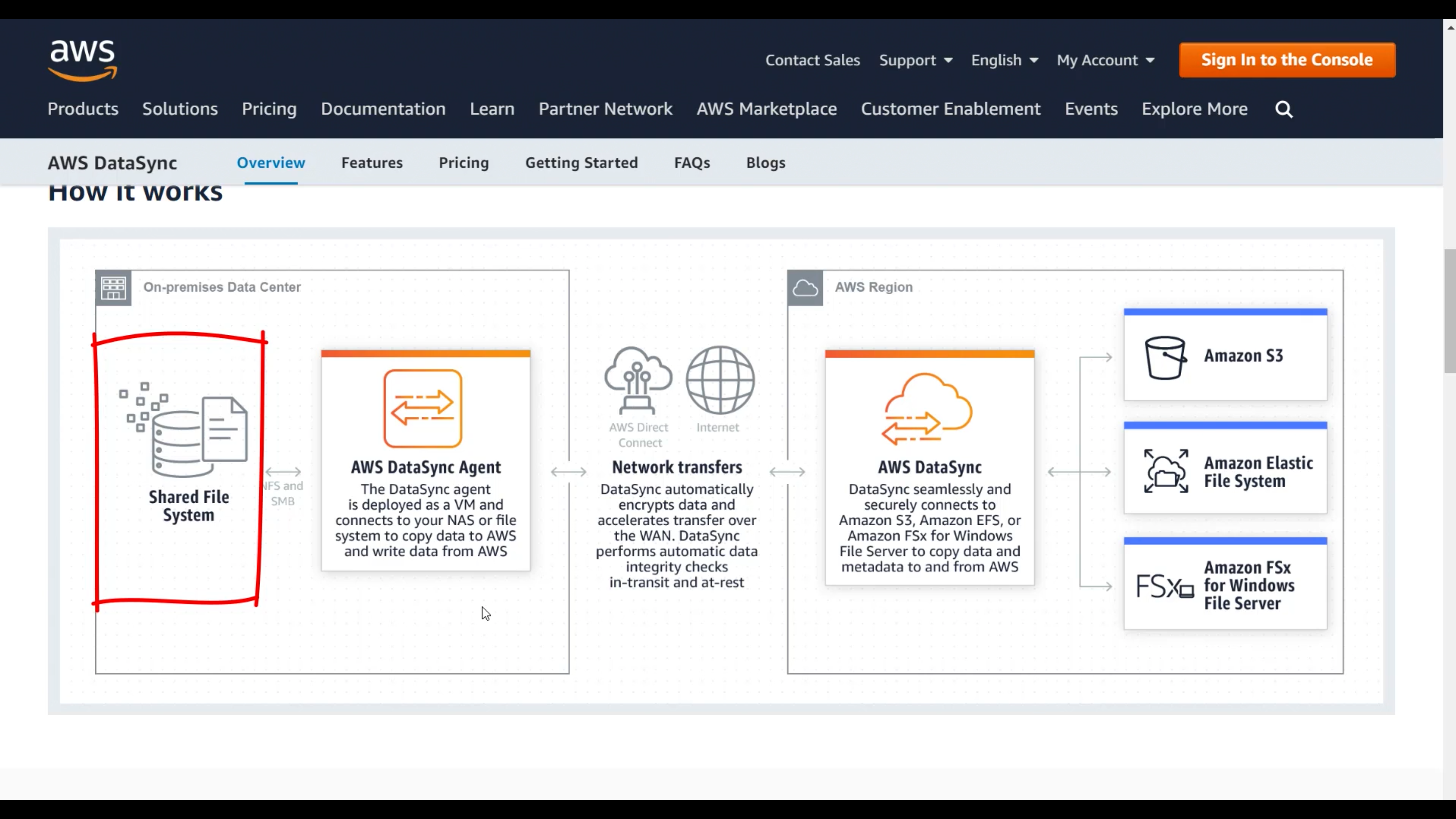

AWS DataSync

Simple, fast, online data transfer

Synchronize data (not database)

AWS Server Migration Service

Migrate on-premises servers to AWS

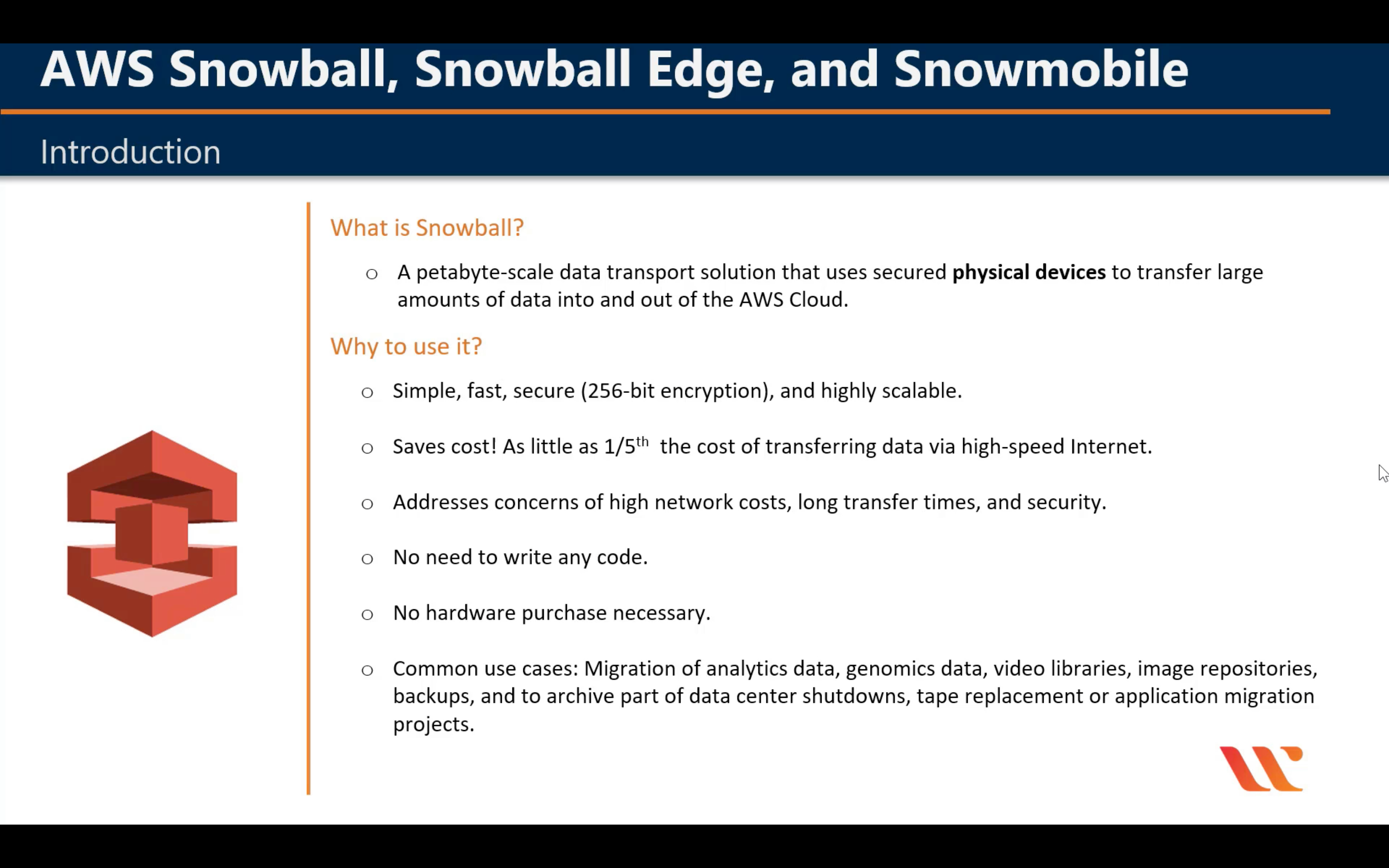

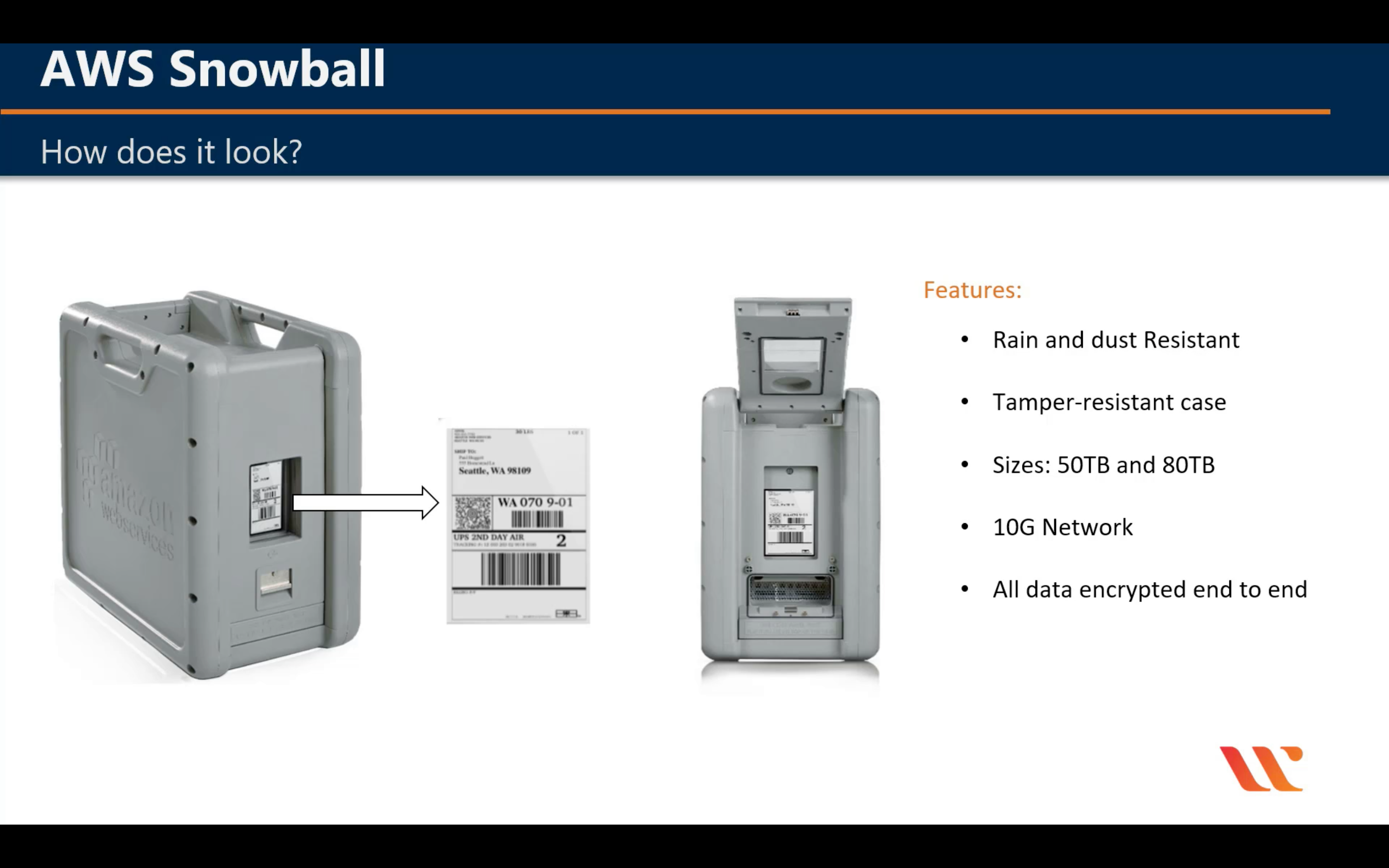

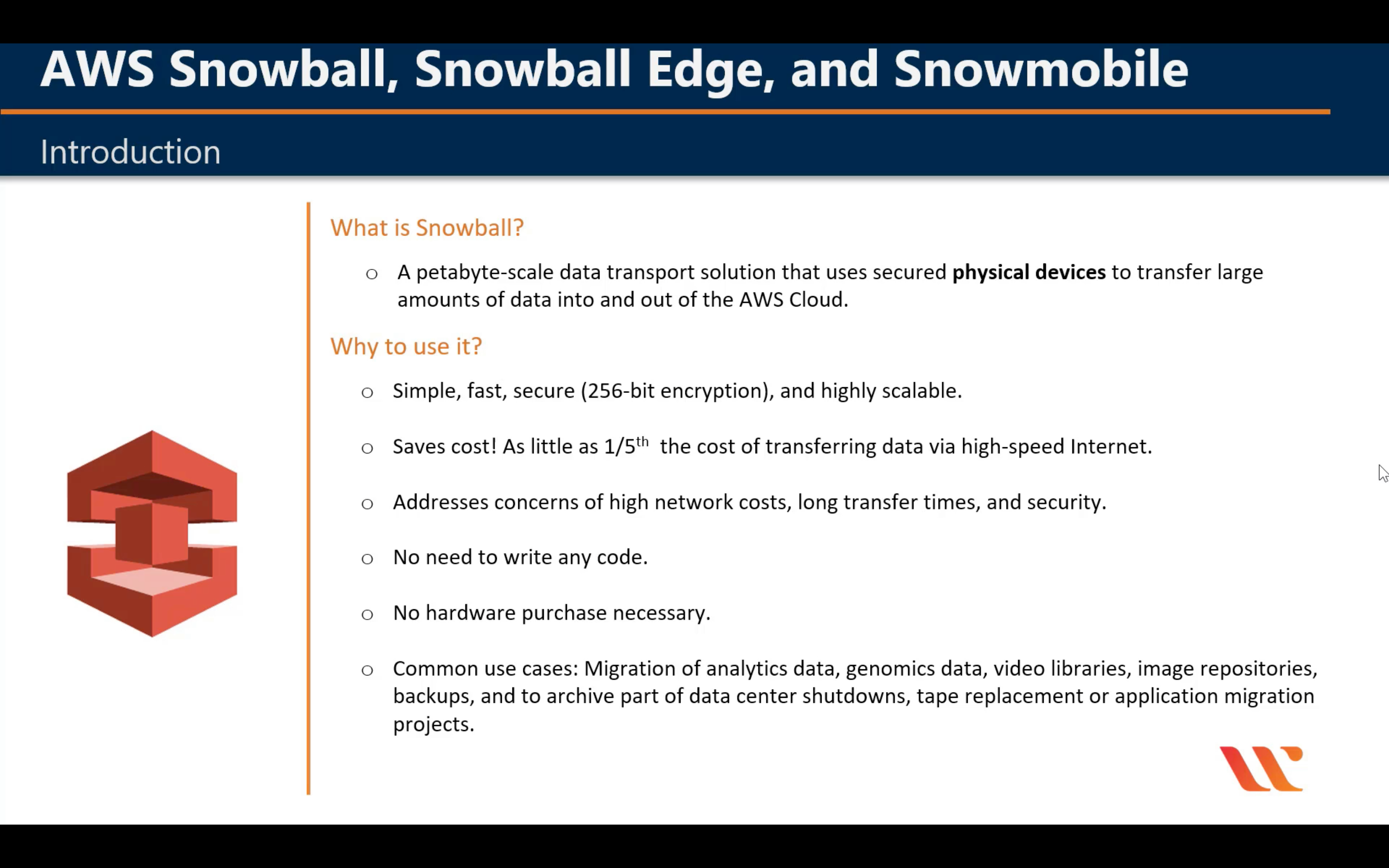

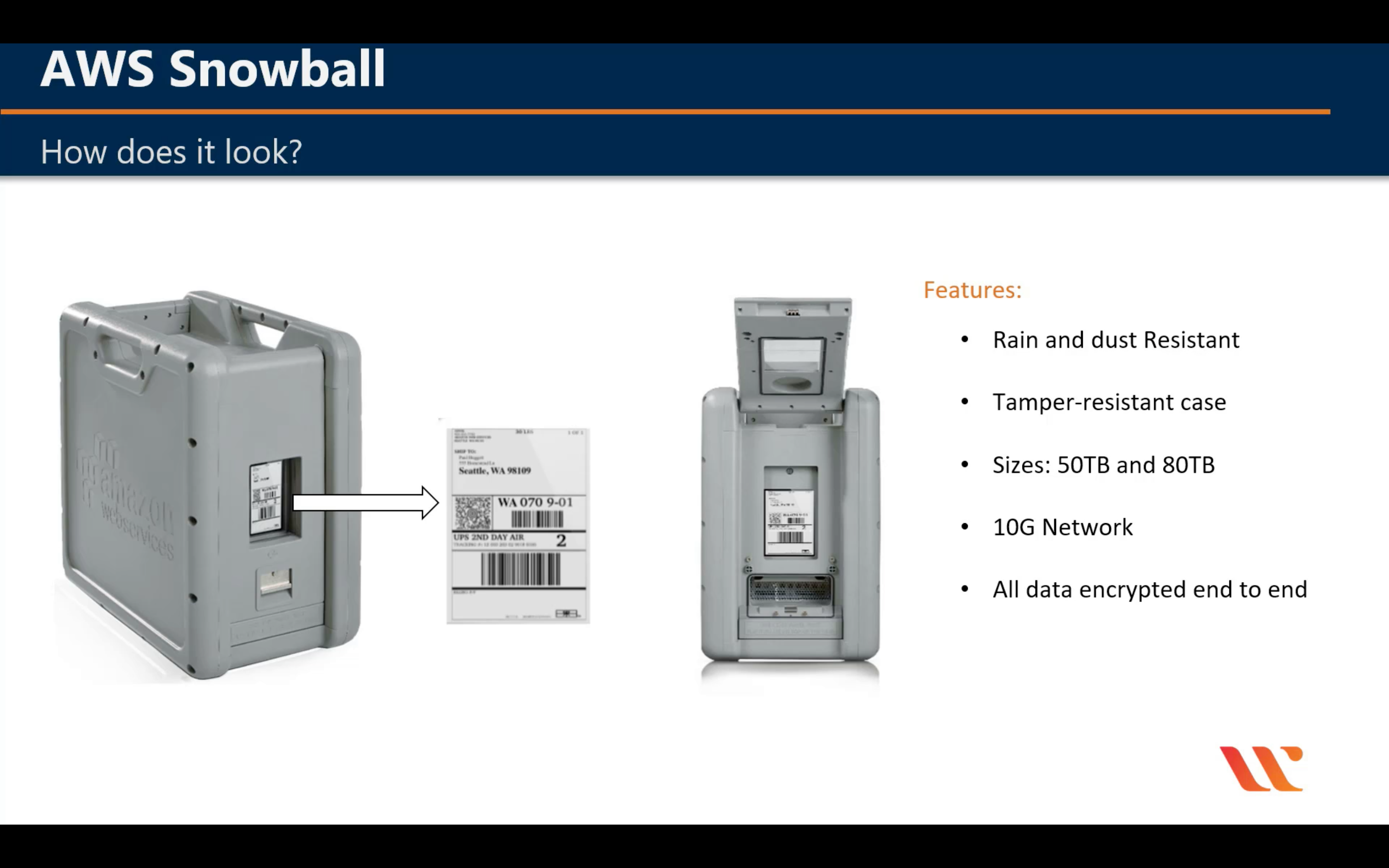

AWS Snow Family

Physical devices to migrate data into and out of AWS

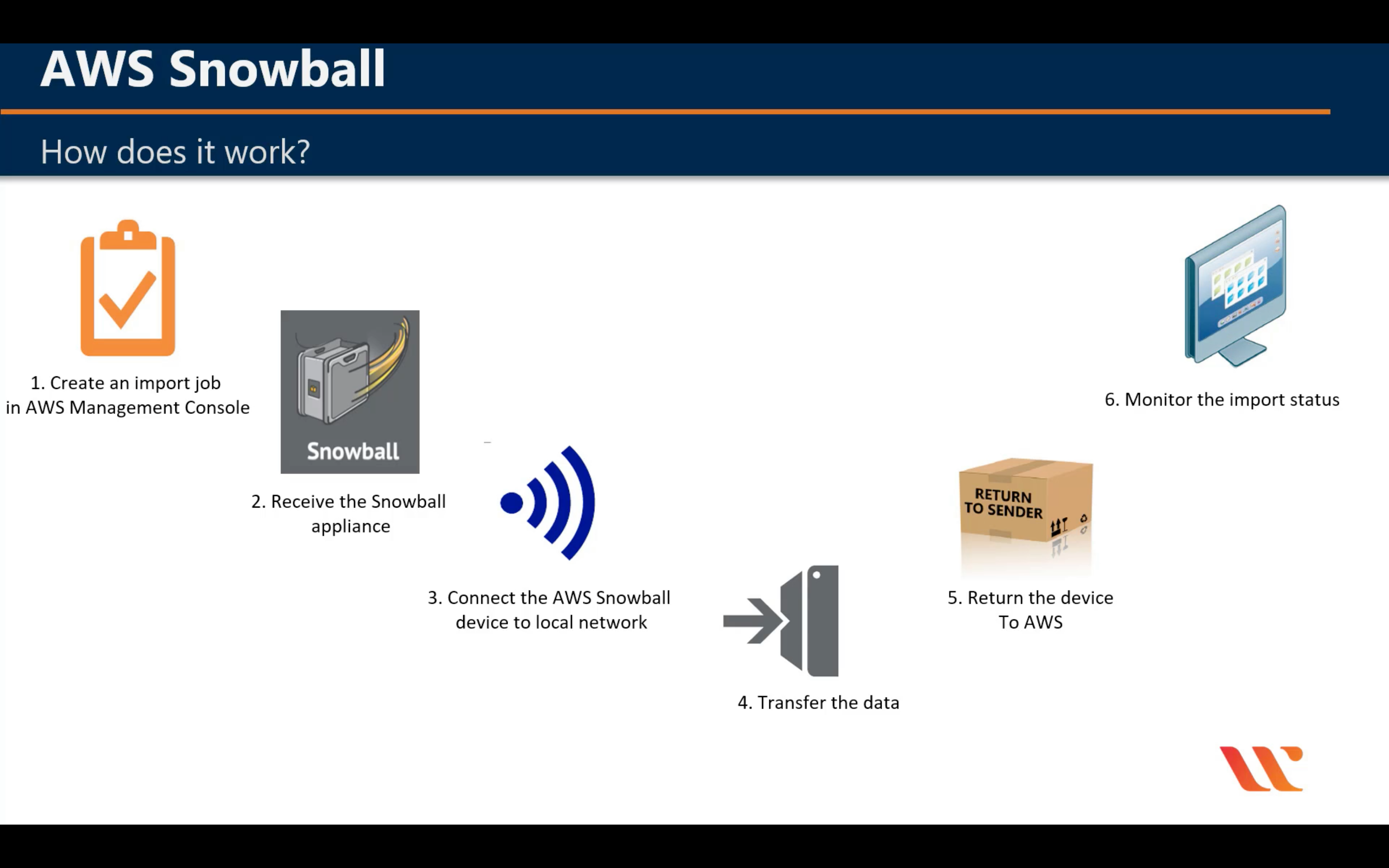

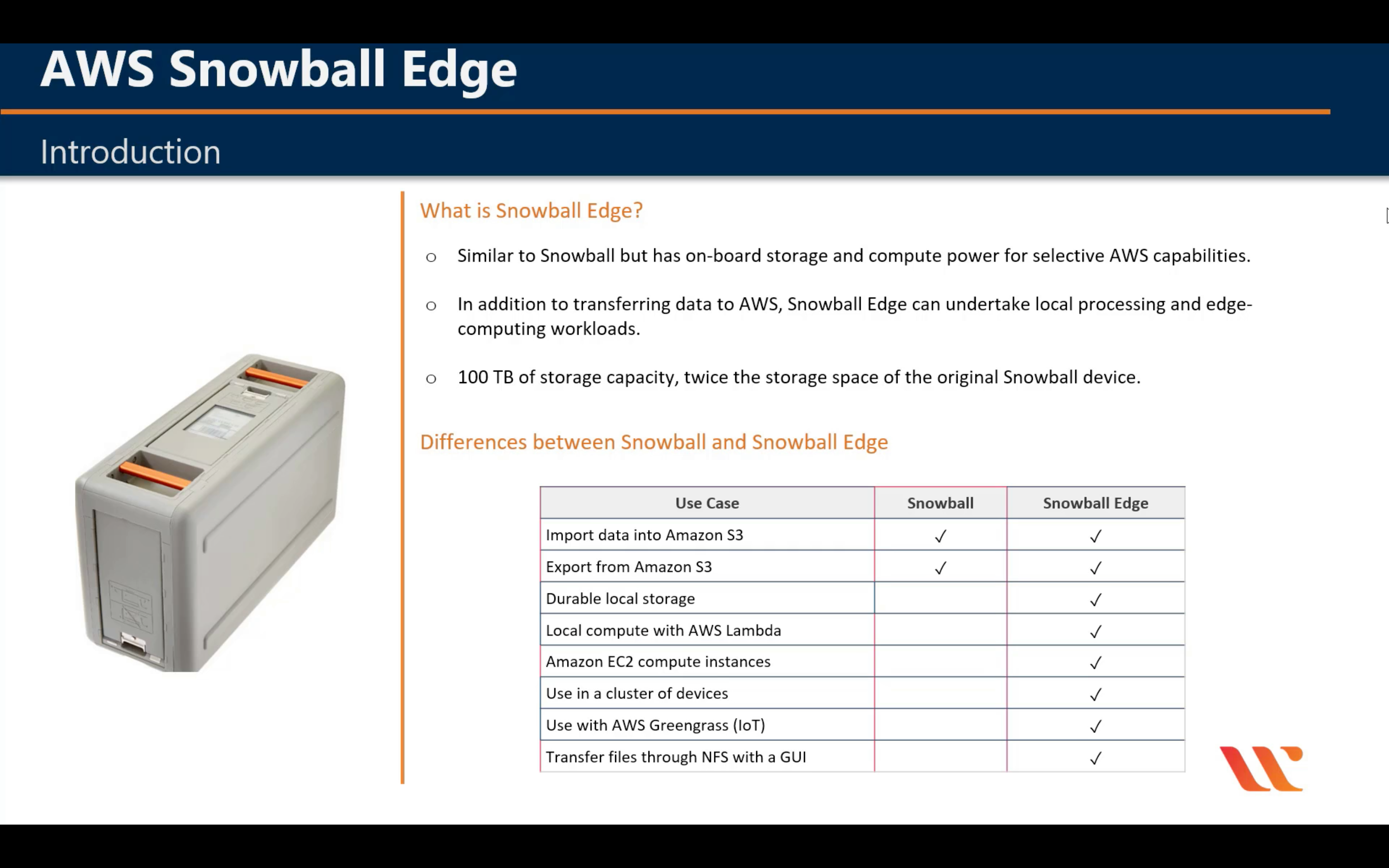

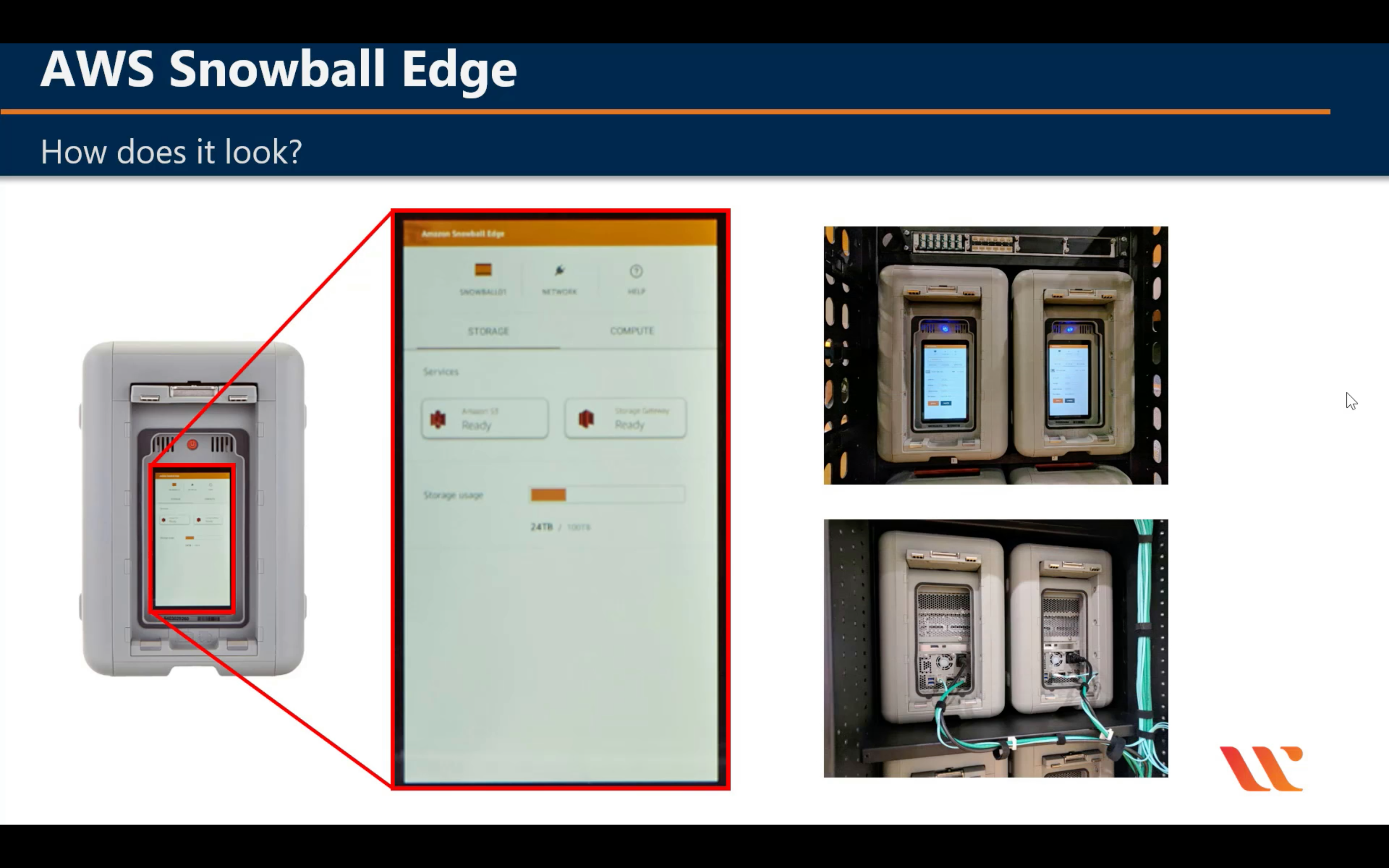

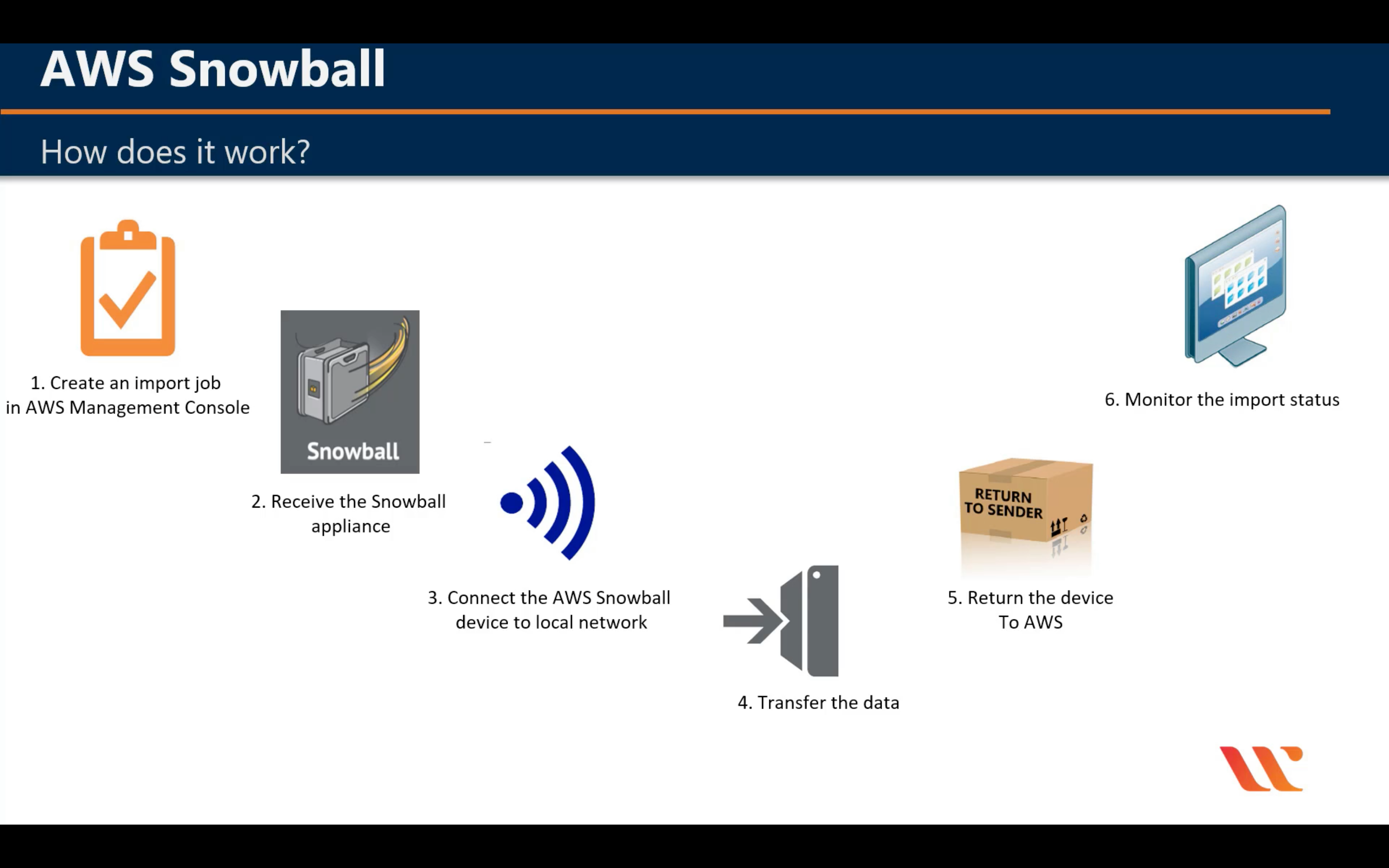

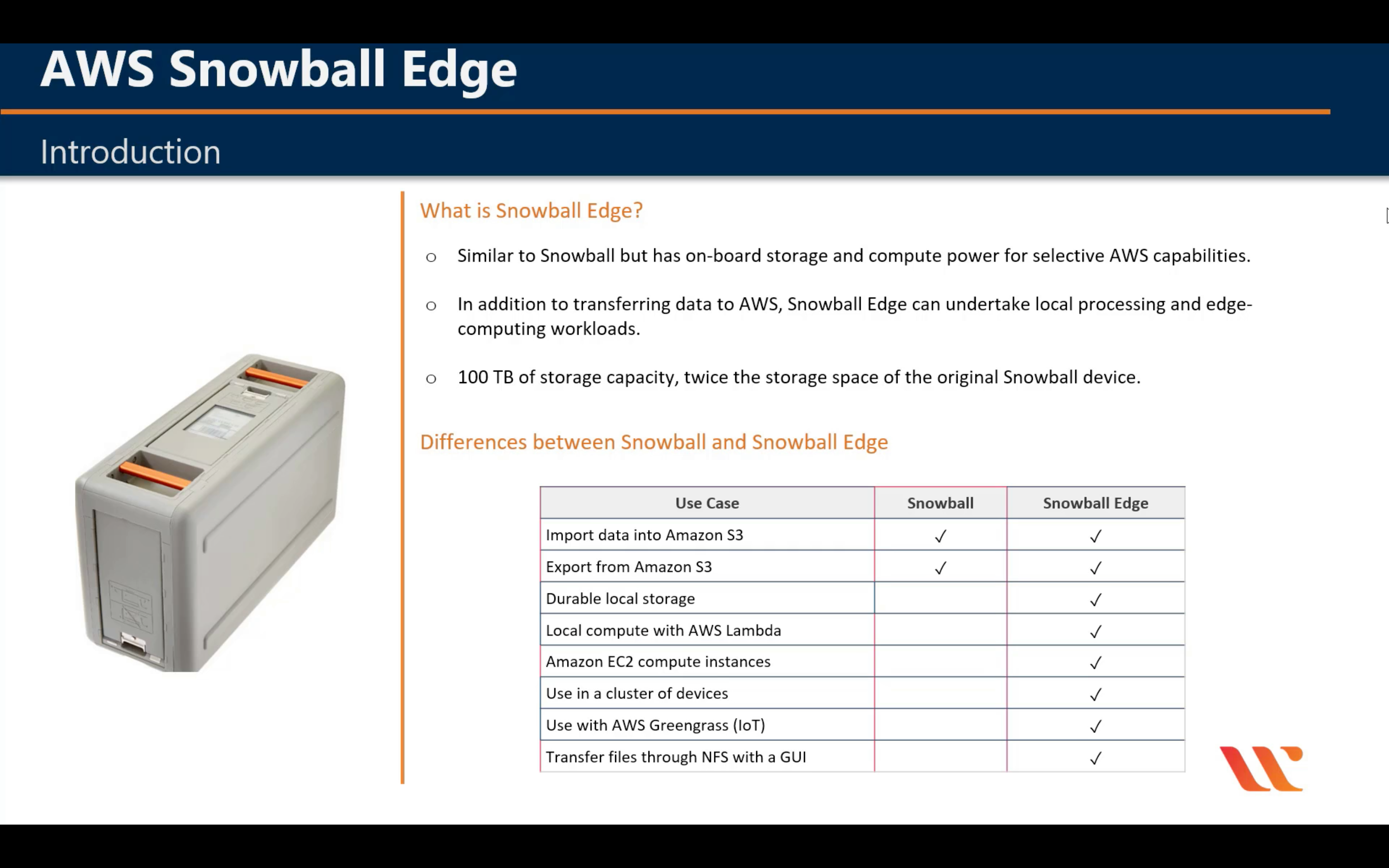

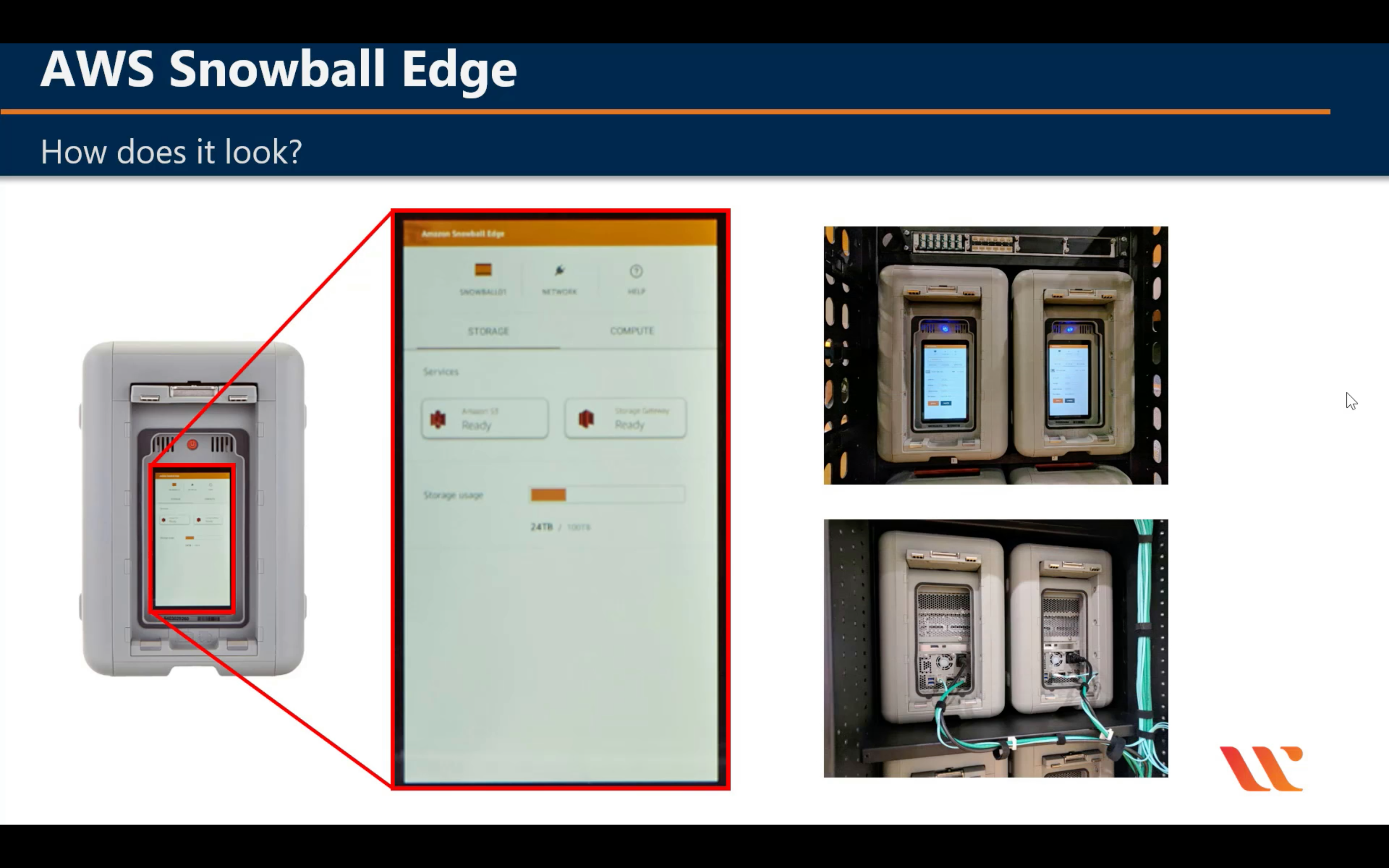

AWS Snowball

Snowball

Snowball

Snowmobile

AWS Transfer Family

Fully managed SFTP, FTPS, and FTP service

CloudEndure Migration

Automate your mass migration to the AWS cloud

Networking & Content Delivery

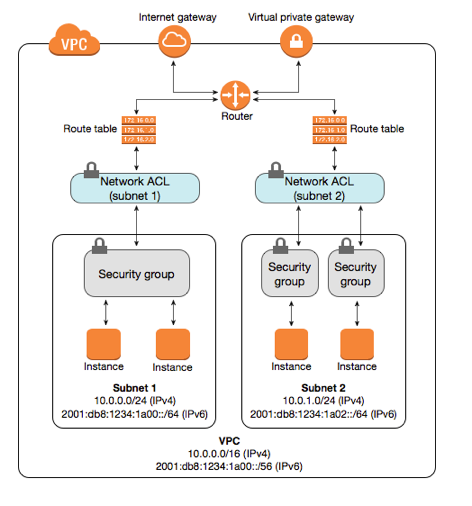

Amazon VPC

Isolated cloud resources

https://docs.aws.amazon.com/vpc/latest/userguide/vpc-dns.html

By default, custom VPCs do not have DNS hostnames enabled, so when you launch an EC2 instance in a custom VPC, it does not get a public DNS name. Go to VPC Actions -> Edit DNS Hostnames and enable the setting so that resources within the VPC get DNS hostnames.

As per AWS docs “When you launch an instance into a nondefault VPC, we provide the instance with a private DNS hostname and we might provide a public DNS hostname, depending on the DNS attributes you specify for the VPC and if your instance has a public IPv4 address.”

In order to use AWS VPN Cloud Hub, one must create a virtual private gateway with multiple customer gateways, each with unique Border Gateway Protocol (BGP) Autonomous System Number (ASN).

Egress-Only Internet Gateways

An egress-only Internet gateway is a horizontally scaled, redundant, and highly available VPC component that allows outbound communication over IPv6 from instances in your VPC to the Internet, and prevents the Internet from initiating an IPv6 connection with your instances.

Note

An egress-only Internet gateway is for use with IPv6 traffic only. To enable outbound-only Internet communication over IPv4, use a NAT gateway instead. For more information, see NAT Gateways.

As per AWS documentation,

Instances in Private Subnet Cannot Access internet

Check that the NAT gateway is in the Available state. In the Amazon VPC console, go to the NAT Gateways page and view the status information in the details pane. If the NAT gateway is in a failed state, there may have been an error when it was created.

Check that you’ve configured your route tables correctly:

The NAT gateway must be in a public subnet with a routing table that routes internet traffic to an internet gateway.

Your instance must be in a private subnet with a routing table that routes internet traffic to the NAT gateway.

Check that there are no other route table entries that route all or part of the internet traffic to another device instead of the NAT gateway.

The NAT gateway itself allows all outbound traffic and traffic received in response to an outbound request (it is therefore stateful).

Please refer to the following links for more information.

https://aws.amazon.com/premiumsupport/knowledge-center/nat-gateway-vpc-private-subnet/

https://docs.aws.amazon.com/AmazonVPC/latest/UserGuide/vpc-nat-gateway.html

https://docs.aws.amazon.com/vpc/latest/userguide/VPC_Security.html

| Security group | Network ACL |

|---|---|

| Operates at the instance level | Operates at the subnet level |

| Supports allow rules only | Supports allow rules and deny rules |

| Is stateful: Return traffic is automatically allowed, regardless of any rules | Is stateless: Return traffic must be explicitly allowed by rules |

| We evaluate all rules before deciding whether to allow traffic | We process rules in number order when deciding whether to allow traffic |

| Applies to an instance only if someone specifies the security group when launching the instance, or associates the security group with the instance later on | Automatically applies to all instances in the subnets that it’s associated with (therefore, it provides an additional layer of defense if the security group rules are too permissive) |
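The difference in rule evaluation from the table above can be sketched with a toy model. This is a simplification that matches on port only and ignores statefulness, protocols, and CIDR ranges; the `AclRule` type and both function names are assumptions made for illustration.

```python
from typing import List, NamedTuple


class AclRule(NamedTuple):
    number: int   # rule number; lower numbers are processed first
    port: int
    allow: bool   # True = allow rule, False = deny rule


def security_group_allows(allowed_ports: List[int], port: int) -> bool:
    """Security groups hold allow rules only: any matching rule permits."""
    return port in allowed_ports


def network_acl_allows(rules: List[AclRule], port: int) -> bool:
    """Network ACLs process rules in number order; the first match decides."""
    for rule in sorted(rules, key=lambda r: r.number):
        if rule.port == port:
            return rule.allow
    return False  # nothing matched: traffic is denied


rules = [
    AclRule(200, 22, True),    # allow SSH, but numbered after the deny
    AclRule(100, 22, False),   # deny SSH, processed first
    AclRule(300, 80, True),
]
print(network_acl_allows(rules, 22))         # deny rule 100 matches first
print(security_group_allows([22, 80], 22))   # any allow rule is enough
```

Because rule 100 is processed before rule 200, the network ACL denies port 22 even though a later rule would allow it.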

https://docs.aws.amazon.com/vpc/latest/userguide/VPC_Subnets.html#VPC_Sizing

The CIDR block of a subnet can be the same as the CIDR block for the VPC (for a single subnet in the VPC), or a subset of the CIDR block for the VPC (for multiple subnets). The allowed block size is between a /28 netmask and /16 netmask. If you create more than one subnet in a VPC, the CIDR blocks of the subnets cannot overlap.

For example, if you create a VPC with CIDR block 10.0.0.0/24, it supports 256 IP addresses. You can break this CIDR block into two subnets, each supporting 128 IP addresses. One subnet uses CIDR block 10.0.0.0/25 (for addresses 10.0.0.0 - 10.0.0.127) and the other uses CIDR block 10.0.0.128/25 (for addresses 10.0.0.128 - 10.0.0.255).

There are many tools available to help you calculate subnet CIDR blocks; for example, see http://www.subnet-calculator.com/cidr.php. Also, your network engineering group can help you determine the CIDR blocks to specify for your subnets.

The first four IP addresses and the last IP address in each subnet CIDR block are not available for you to use, and cannot be assigned to an instance. For example, in a subnet with CIDR block 10.0.0.0/24, the following five IP addresses are reserved:

- 10.0.0.0: Network address.

- 10.0.0.1: Reserved by AWS for the VPC router.

- 10.0.0.2: Reserved by AWS. The IP address of the DNS server is the base of the VPC network range plus two. For VPCs with multiple CIDR blocks, the IP address of the DNS server is located in the primary CIDR. We also reserve the base of each subnet range plus two for all CIDR blocks in the VPC. For more information, see Amazon DNS Server.

- 10.0.0.3: Reserved by AWS for future use.

- 10.0.0.255: Network broadcast address. We do not support broadcast in a VPC, therefore we reserve this address.
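The subnet arithmetic above can be checked with Python's standard `ipaddress` module. The helper names `reserved_addresses` and `usable_address_count` are hypothetical; the rule they encode (first four addresses plus the last one are reserved) is the one described above.

```python
import ipaddress
from typing import List


def reserved_addresses(cidr: str) -> List[ipaddress.IPv4Address]:
    """Return the five addresses reserved in a subnet CIDR block."""
    net = ipaddress.ip_network(cidr)
    first_four = [net.network_address + i for i in range(4)]
    return first_four + [net.broadcast_address]


def usable_address_count(cidr: str) -> int:
    """Addresses left for instances after removing the five reserved ones."""
    return ipaddress.ip_network(cidr).num_addresses - 5


# Split the /24 VPC block into two /25 subnets, as in the example above.
vpc = ipaddress.ip_network("10.0.0.0/24")
for subnet in vpc.subnets(prefixlen_diff=1):
    print(subnet, subnet.num_addresses)

print([str(a) for a in reserved_addresses("10.0.0.0/24")])
print(usable_address_count("10.0.0.0/24"))
```

So a /24 subnet leaves 251 assignable addresses, and the smallest allowed block, a /28, leaves 11.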

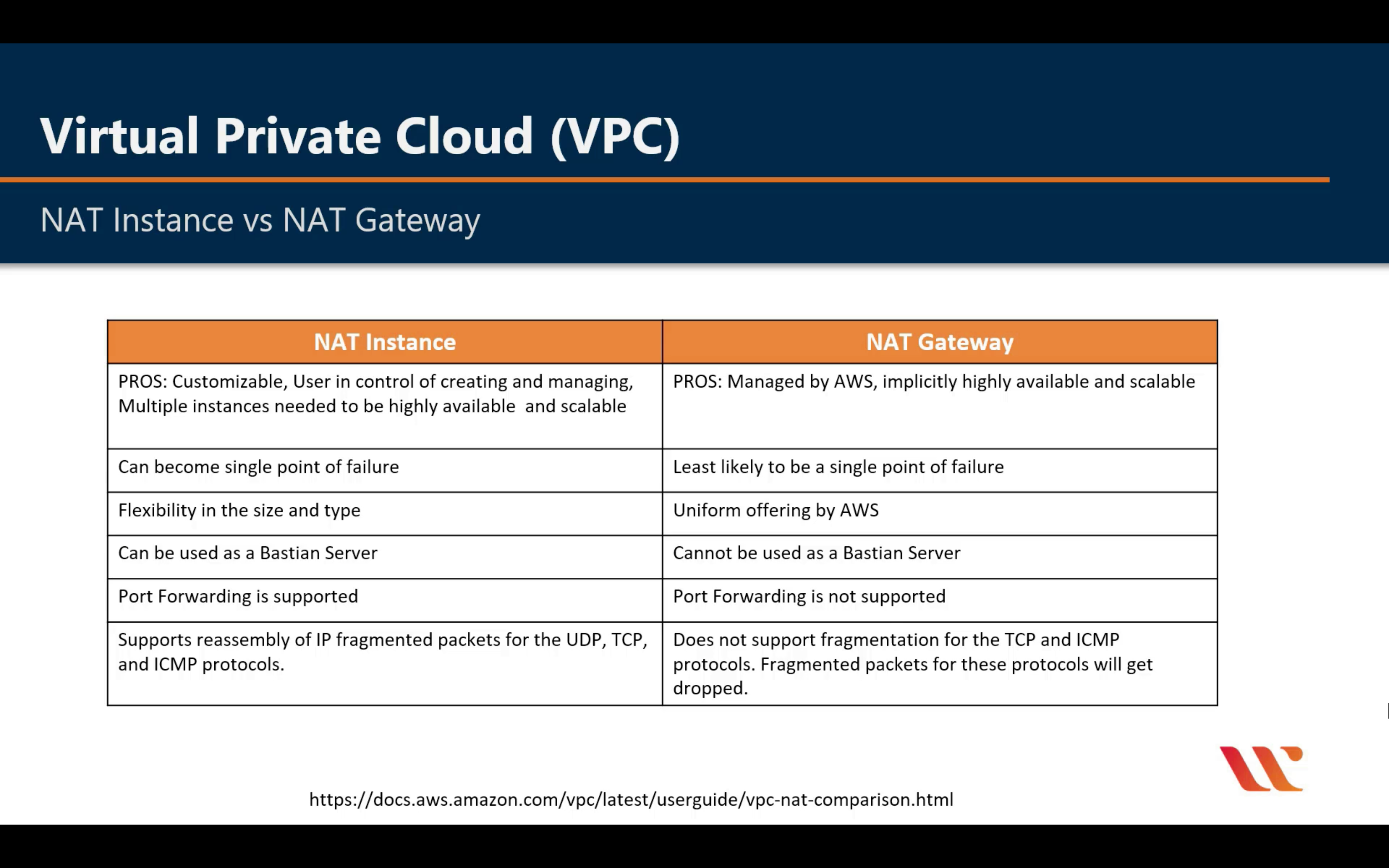

Comparison of NAT Instances and NAT Gateways

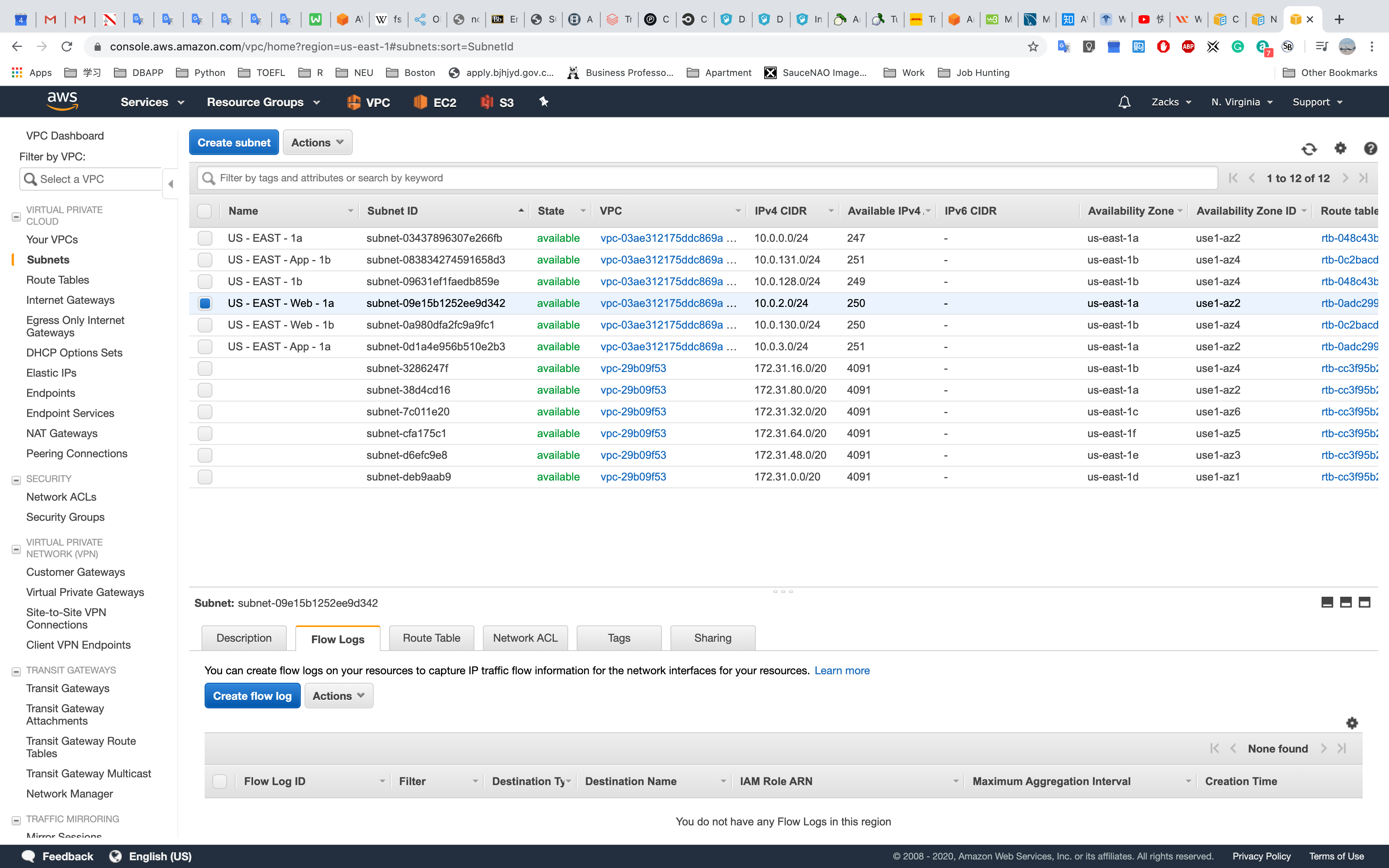

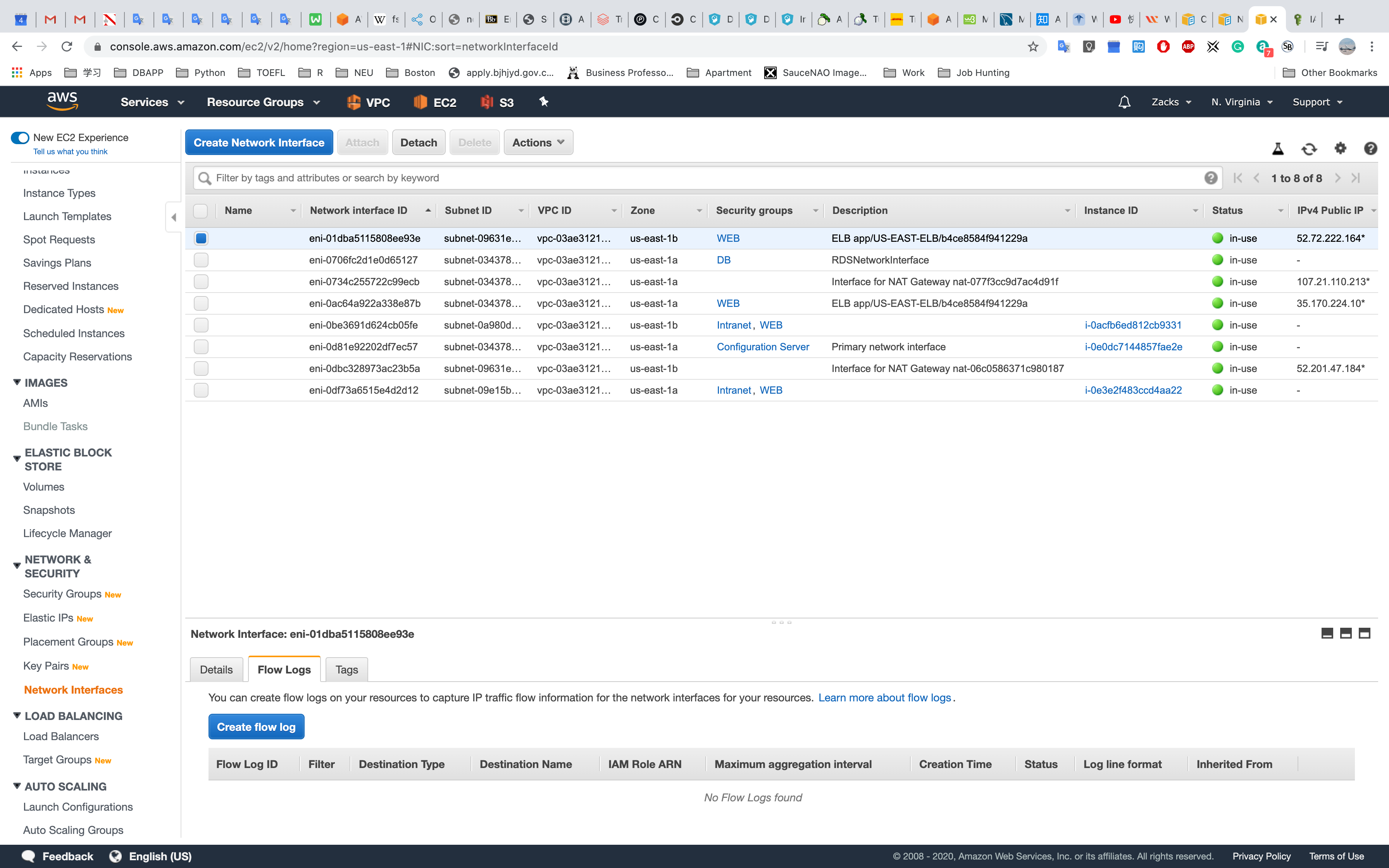

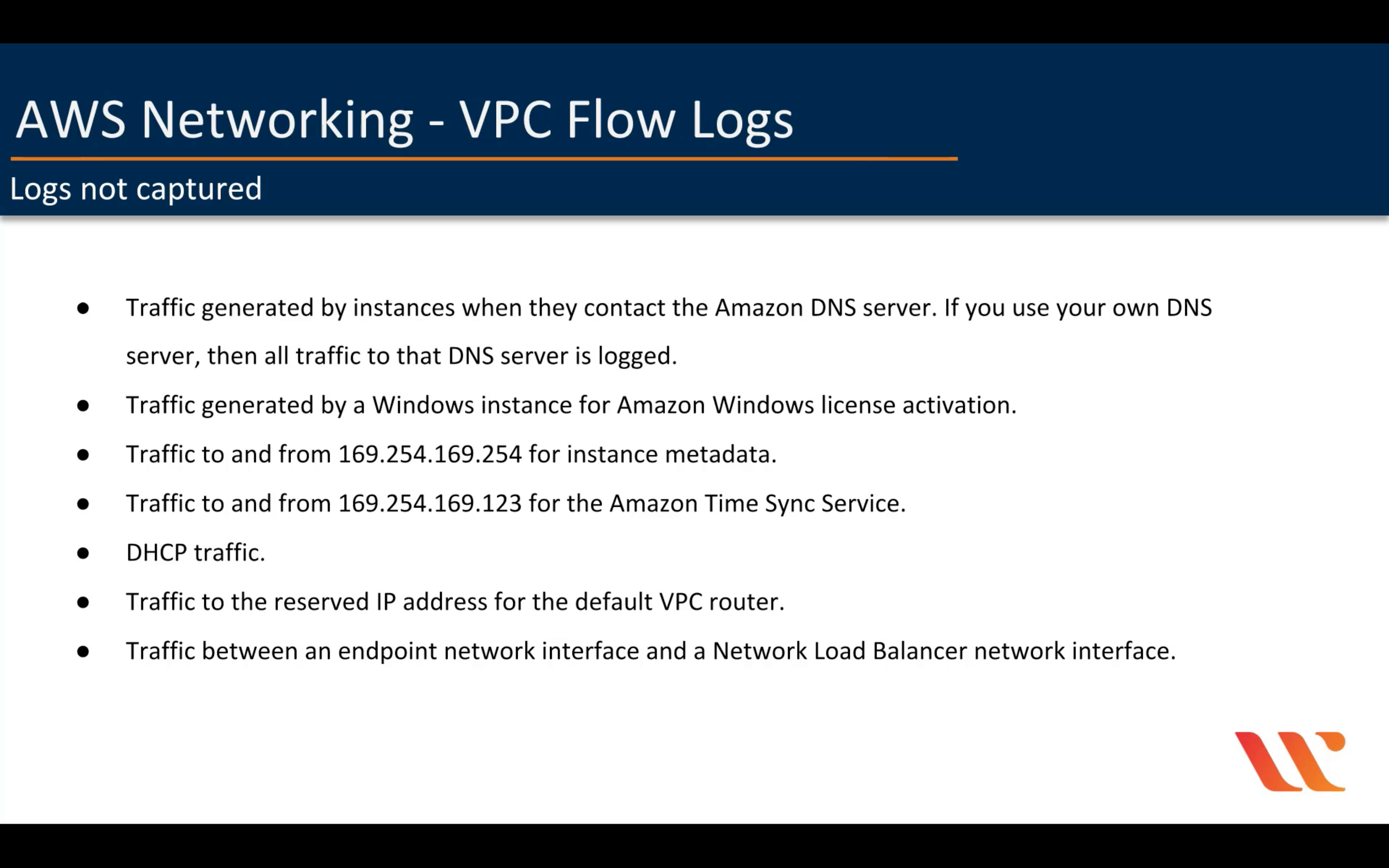

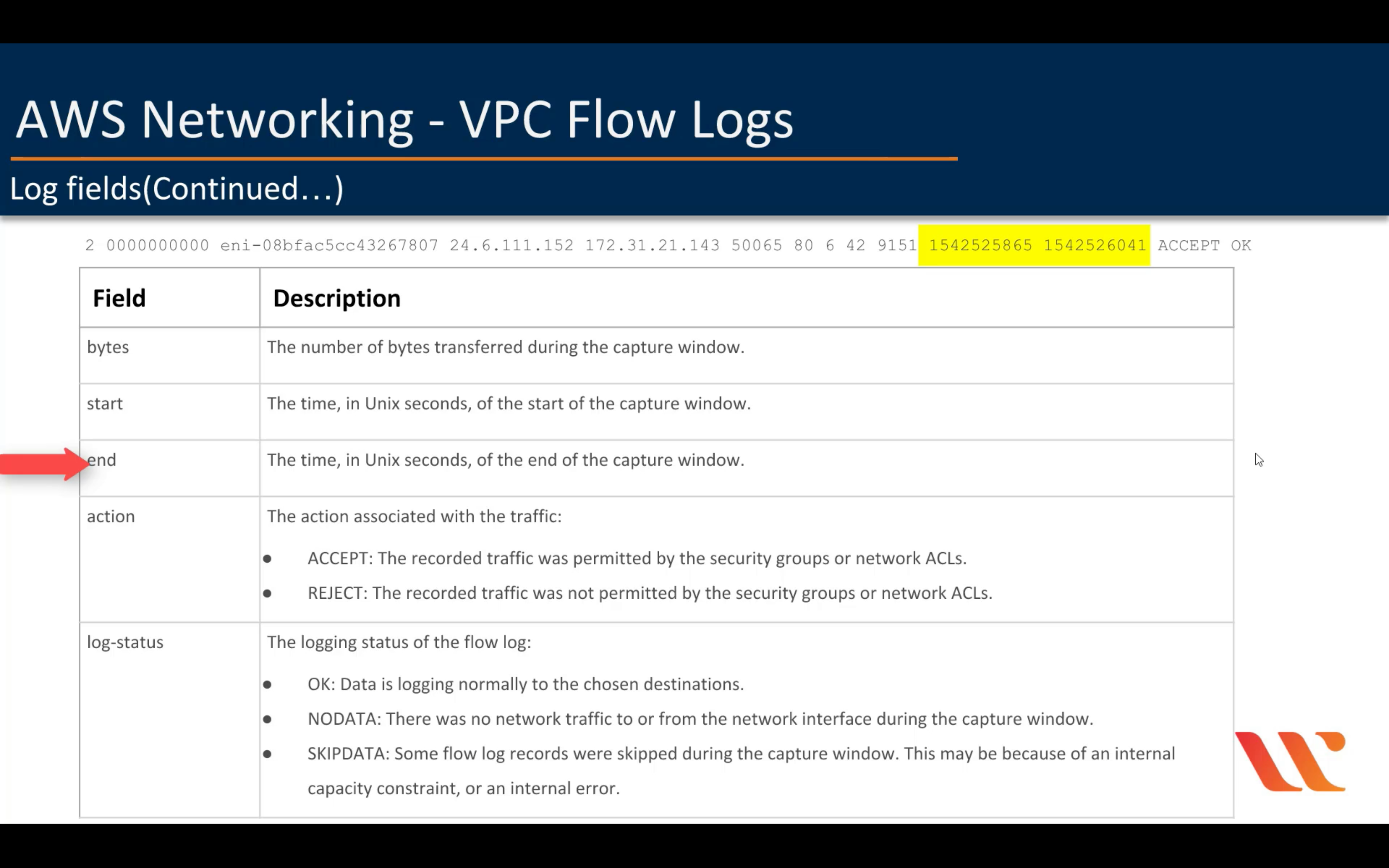

VPC Flow Log

CloudWatch

VPC:

- VPC

- Subnet

EC2:

- Network Interfaces

IAM: Flow Log permission

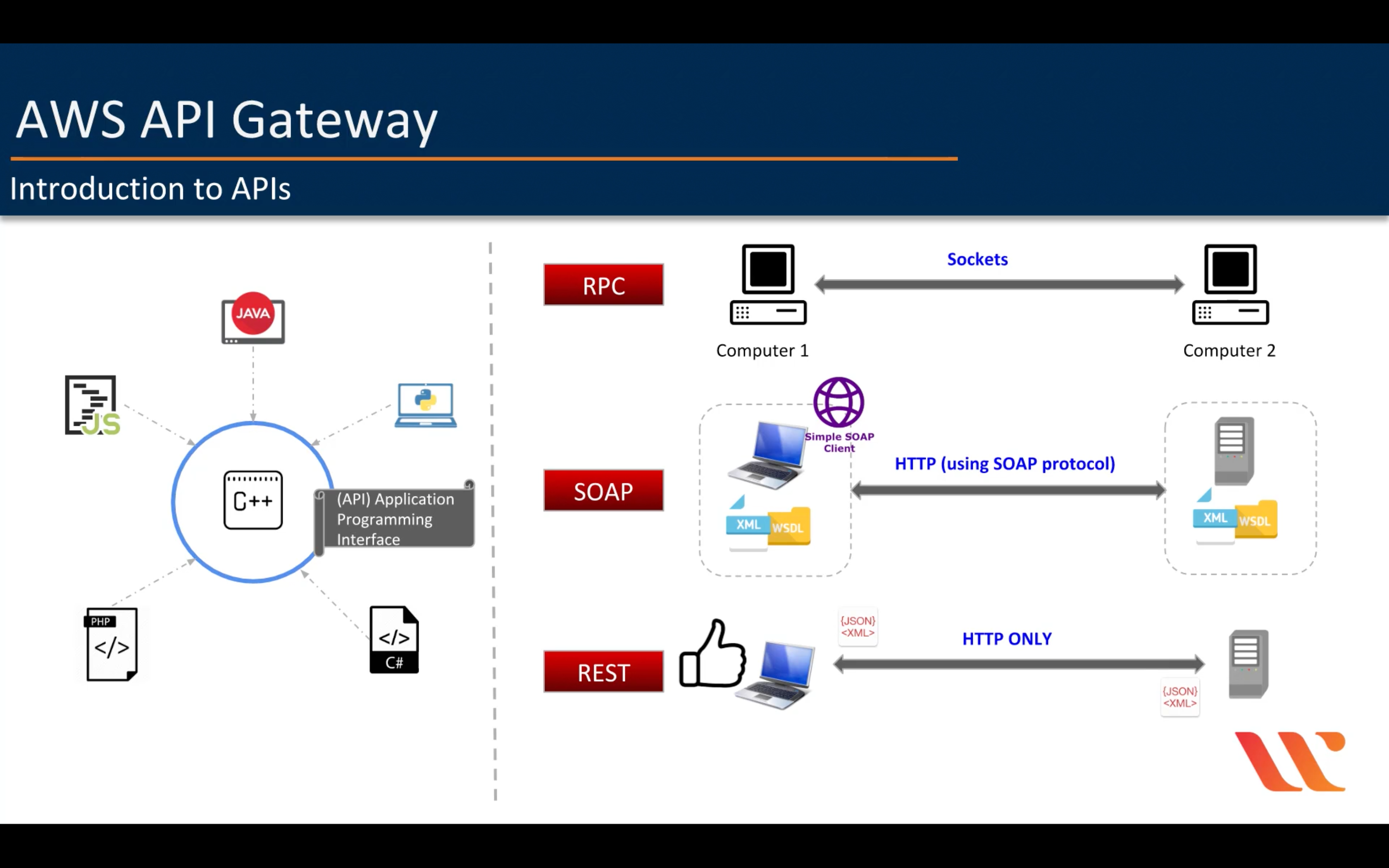

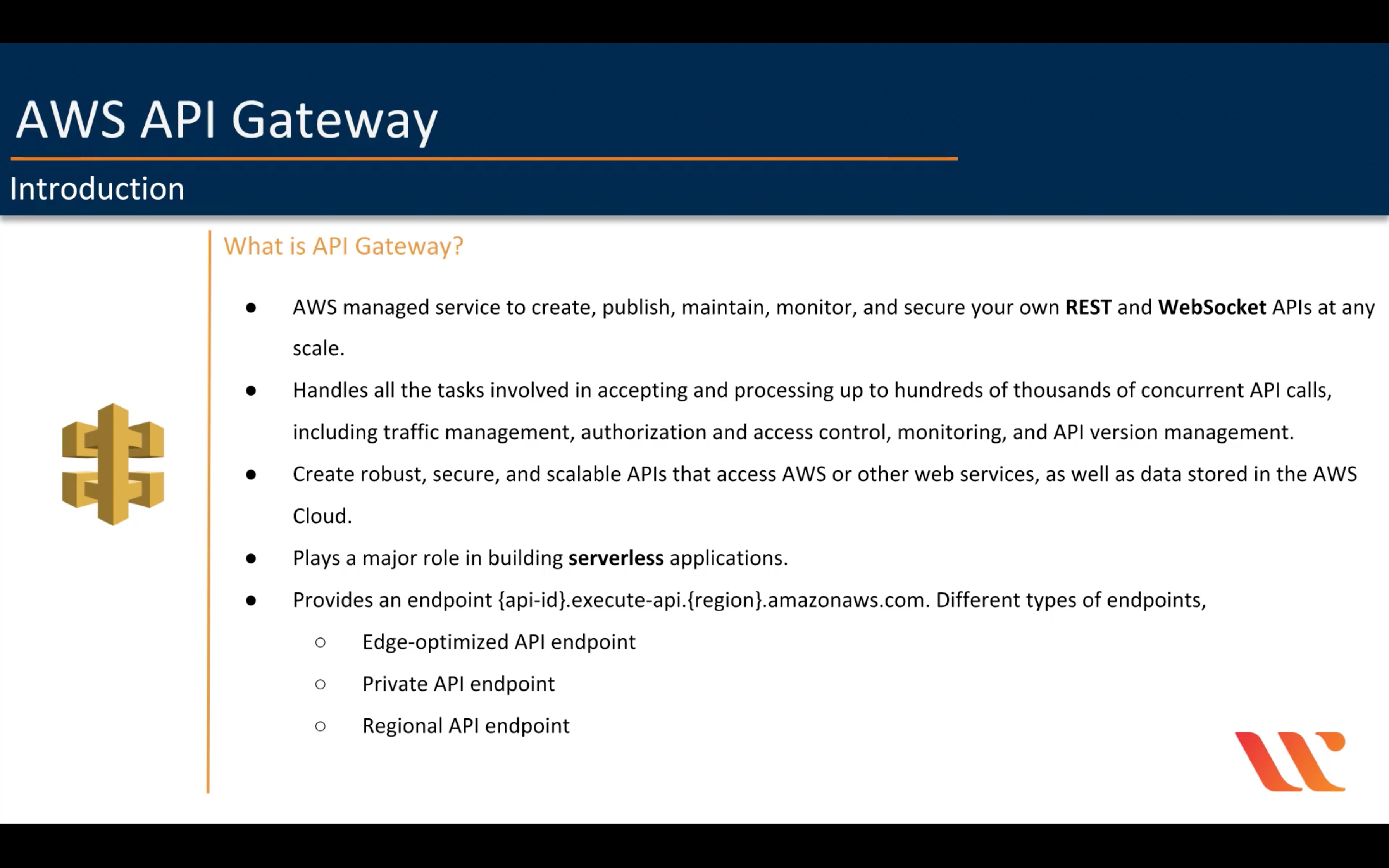

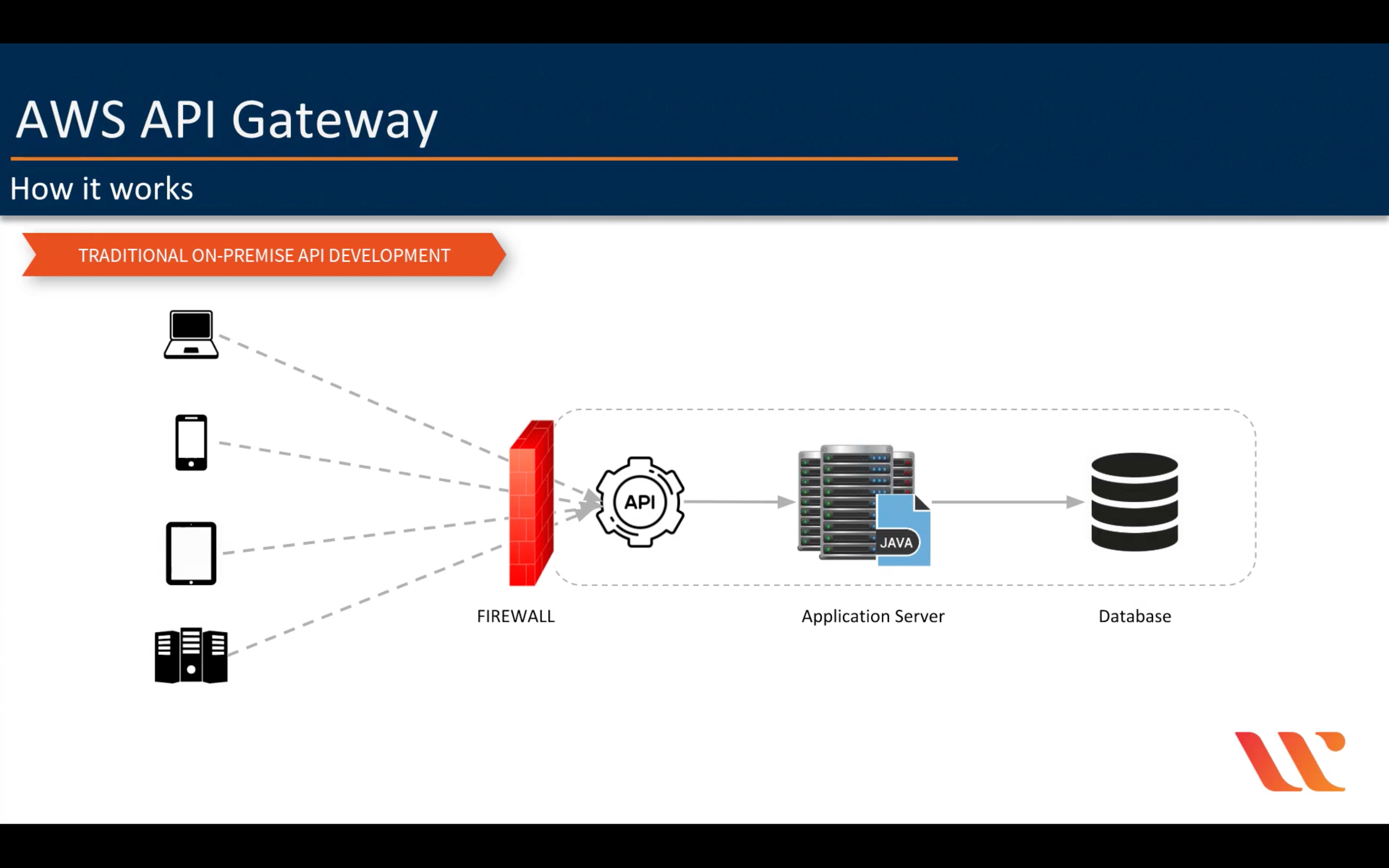

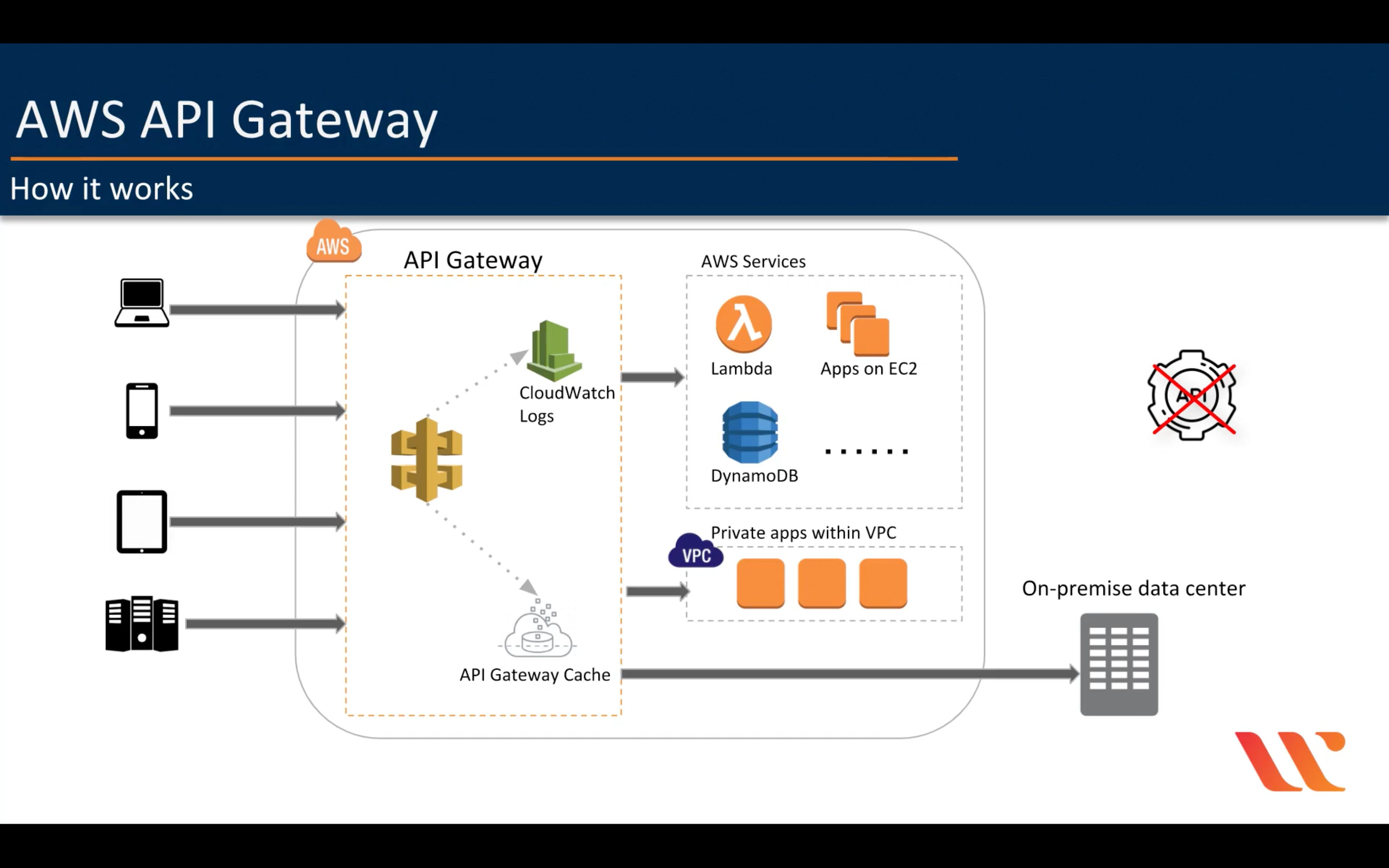

Amazon API Gateway

Build, deploy, and manage APIs

- Support for RESTful APIs and WebSocket APIs

- Private integrations with AWS ELB & AWS Cloud Map

- Resiliency

- Easy API Creation and Deployment

- API Operations Monitoring

- AWS Authorization

- API Keys for Third-Party Developers

- SDK Generation

- API Lifecycle Management

AWS SAM supports several mechanisms for controlling access to your API Gateway APIs:

- Lambda authorizers.

- Amazon Cognito user pools.

- IAM permissions.

- API keys.

- Resource policies.

Amazon API Gateway can execute AWS Lambda functions in your account, start AWS Step Functions state machines, or call HTTP endpoints hosted on AWS Elastic Beanstalk, Amazon EC2, and also non-AWS hosted HTTP based operations that are accessible via the public Internet. API Gateway also allows you to specify a mapping template to generate static content to be returned, helping you mock your APIs before the backend is ready. You can also integrate API Gateway with other AWS services directly – for example, you could expose an API method in API Gateway that sends data directly to Amazon Kinesis.

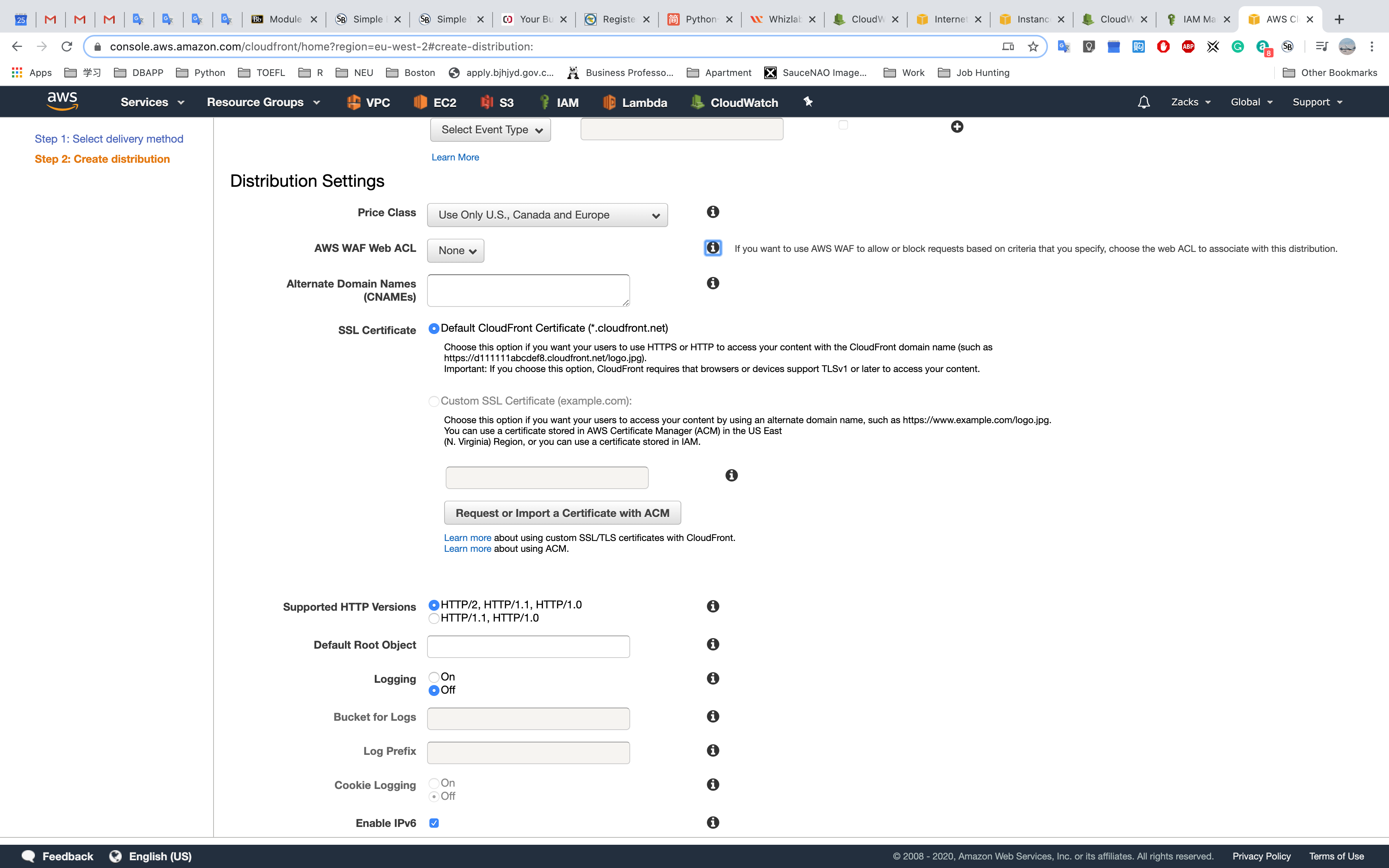

Amazon CloudFront

Global content delivery network

- Amazon CloudFront Infrastructure: The Amazon CloudFront Global Edge Network

- Security

- Performance

- Programmable and DevOps Friendly

- Lambda@Edge

- Cost Effective

Request without CDN

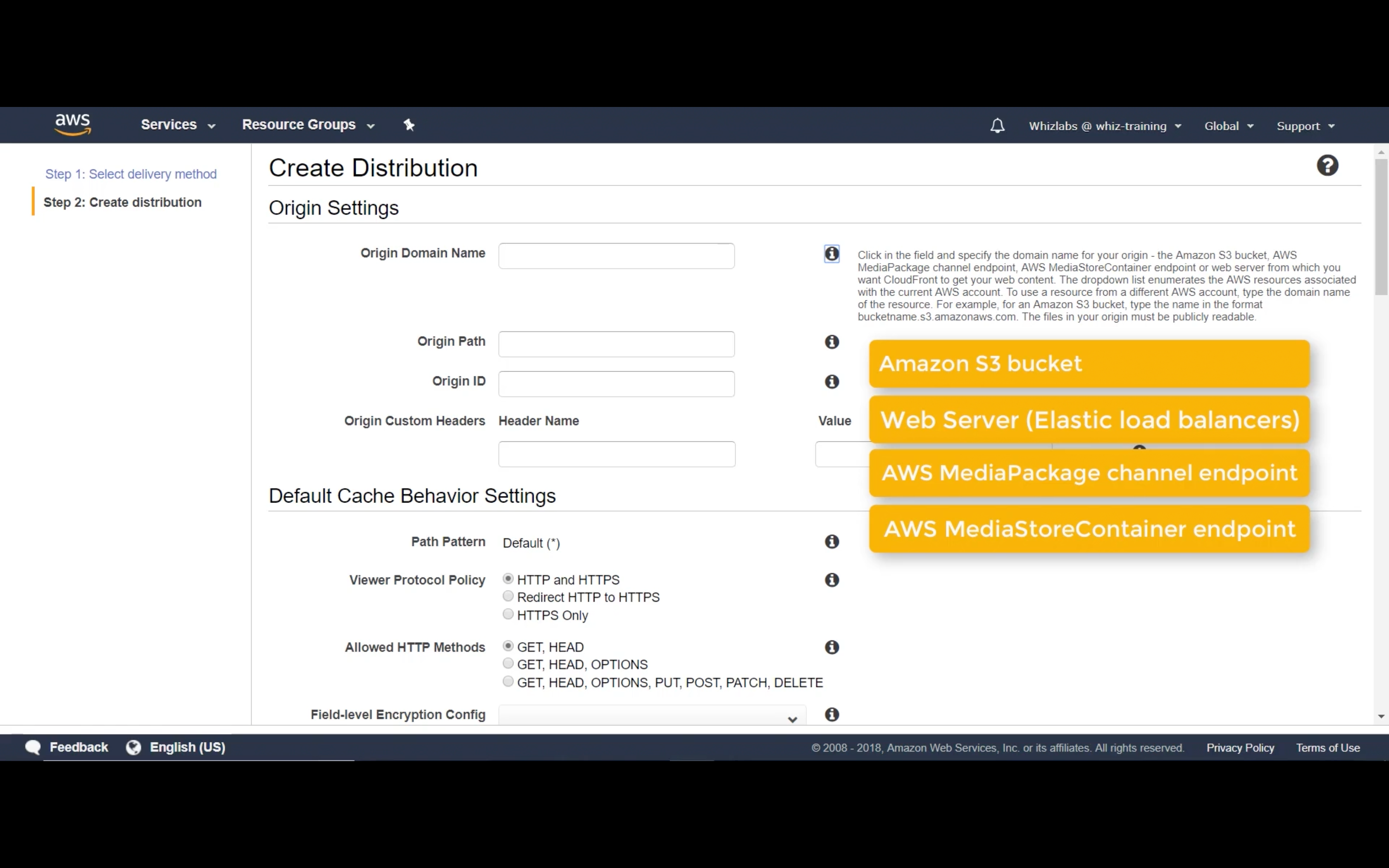

Labs - S3

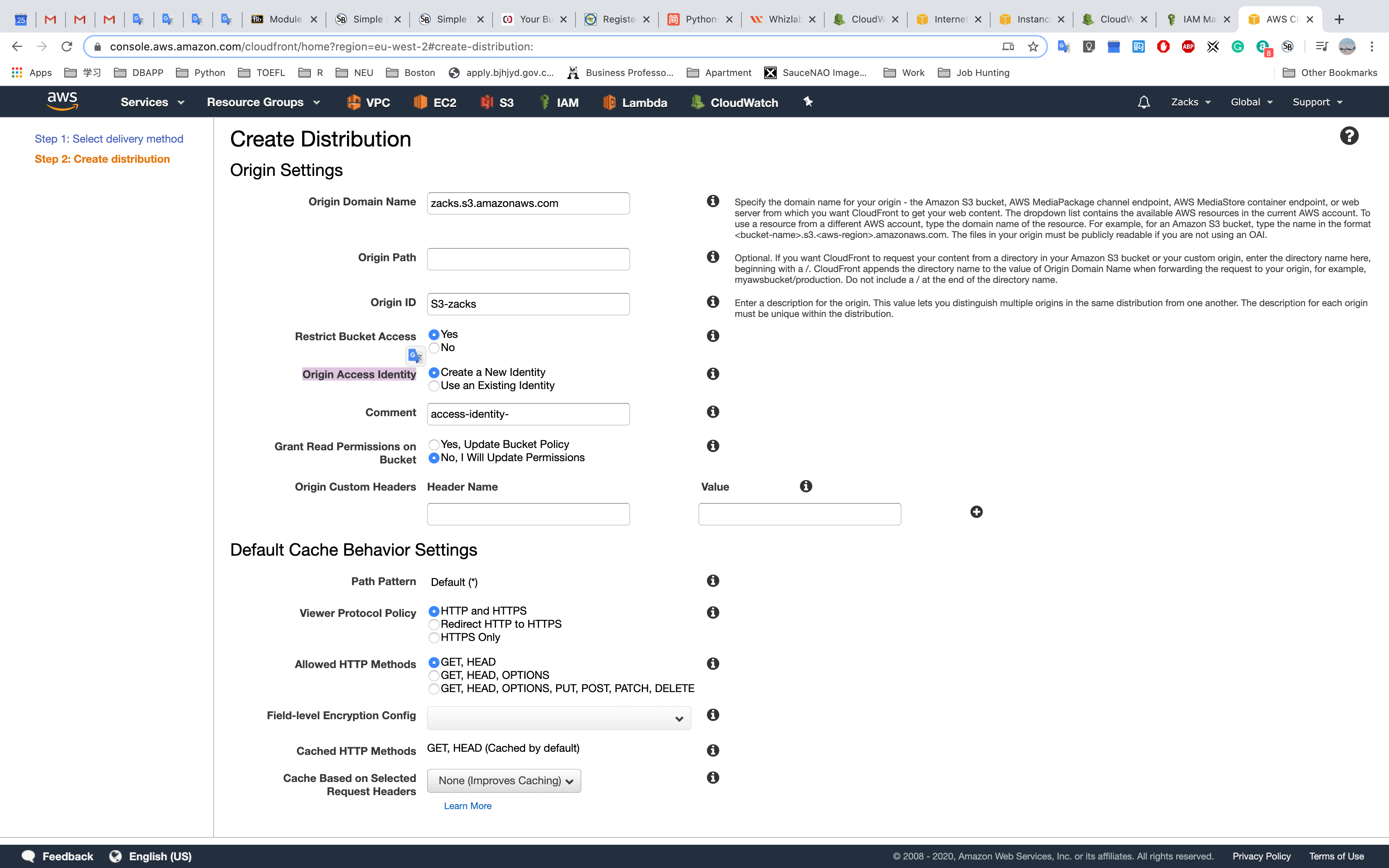

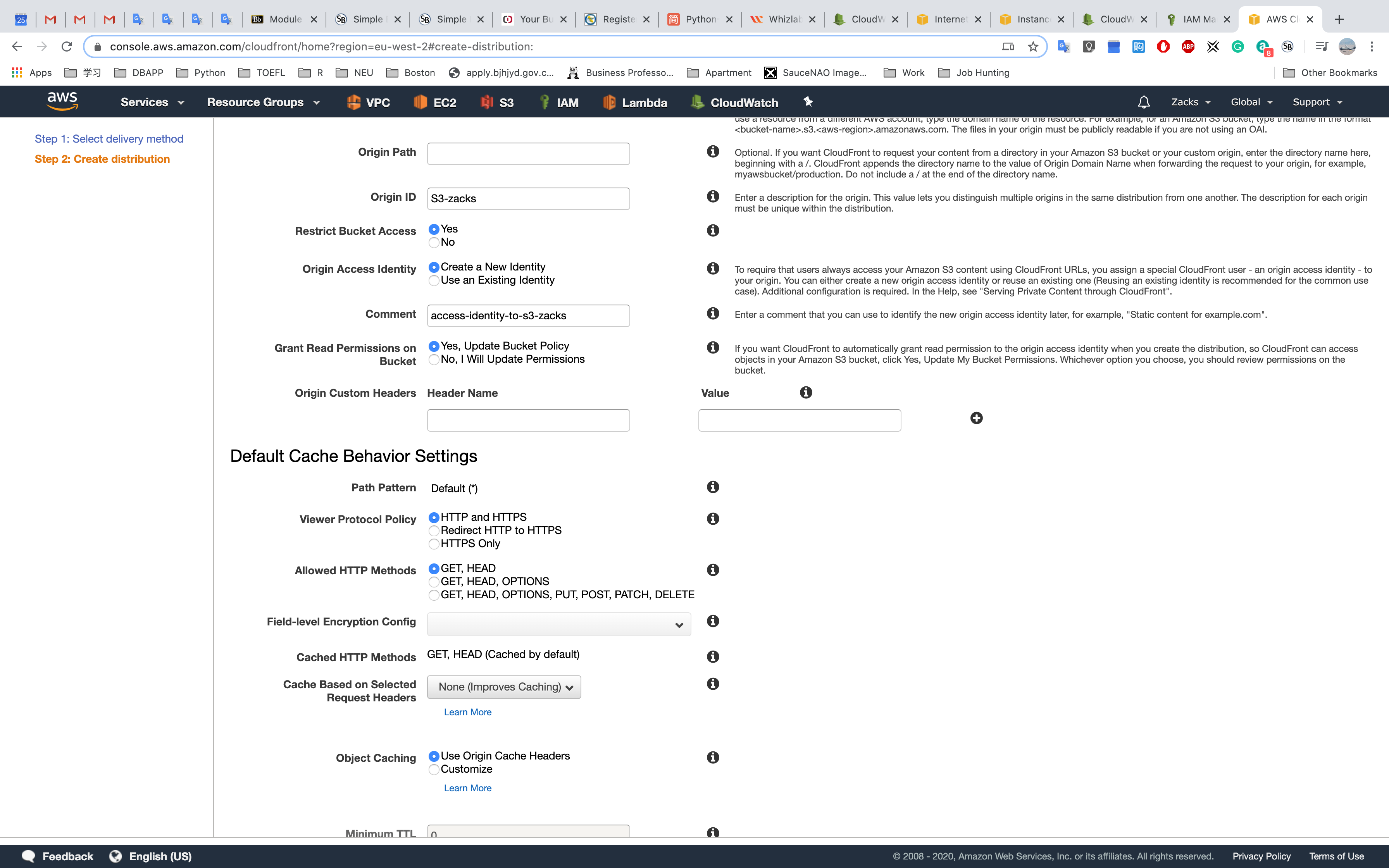

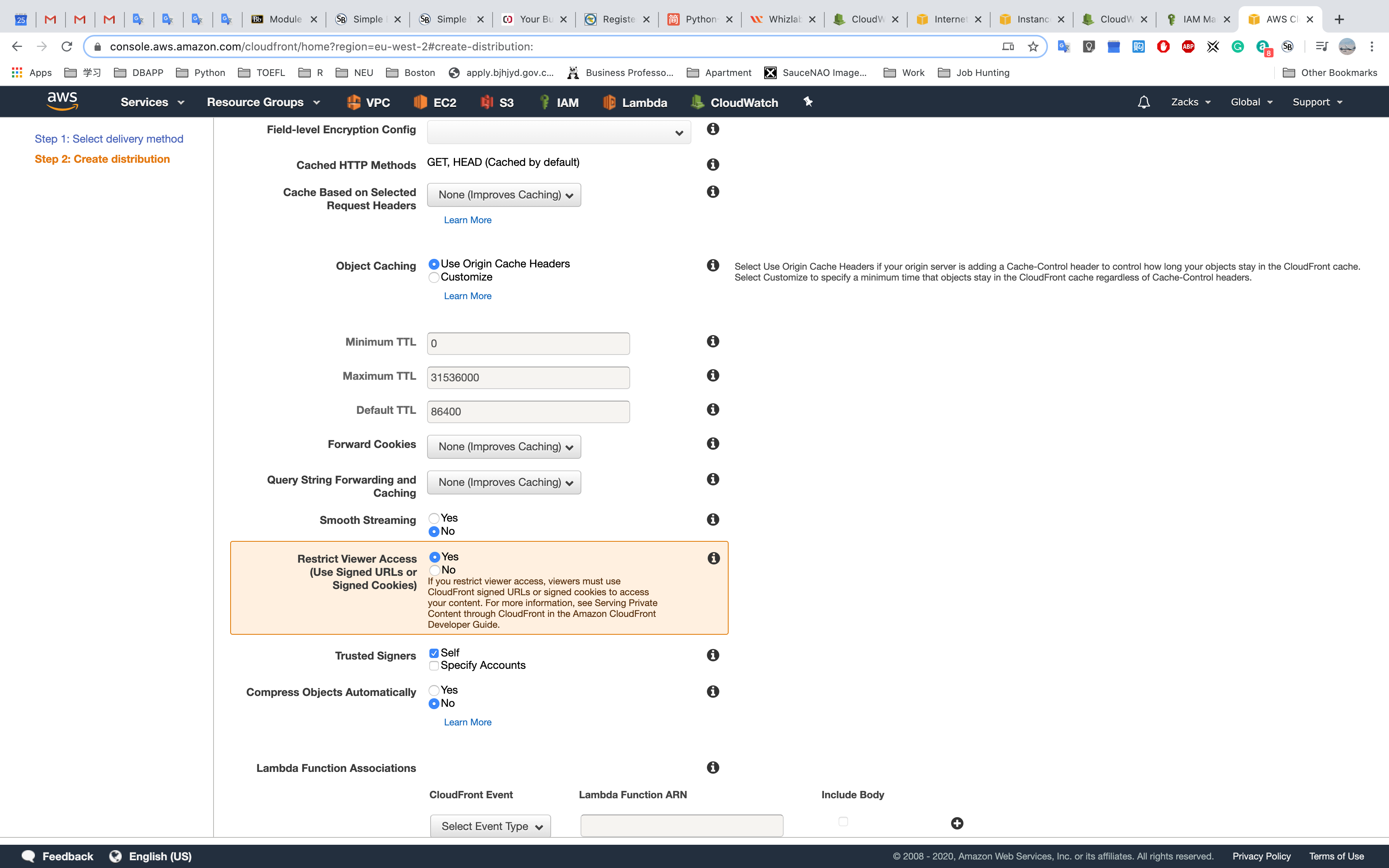

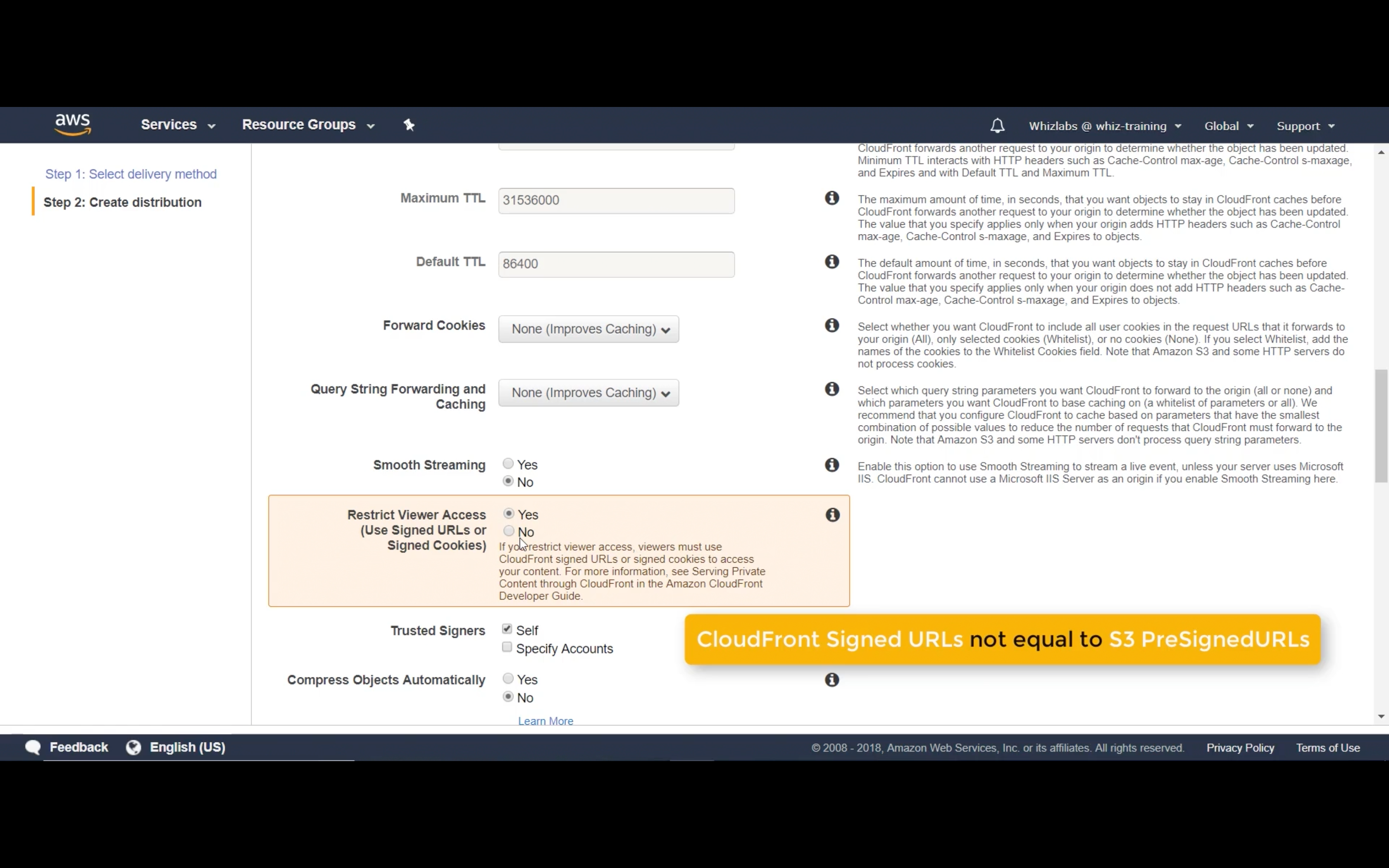

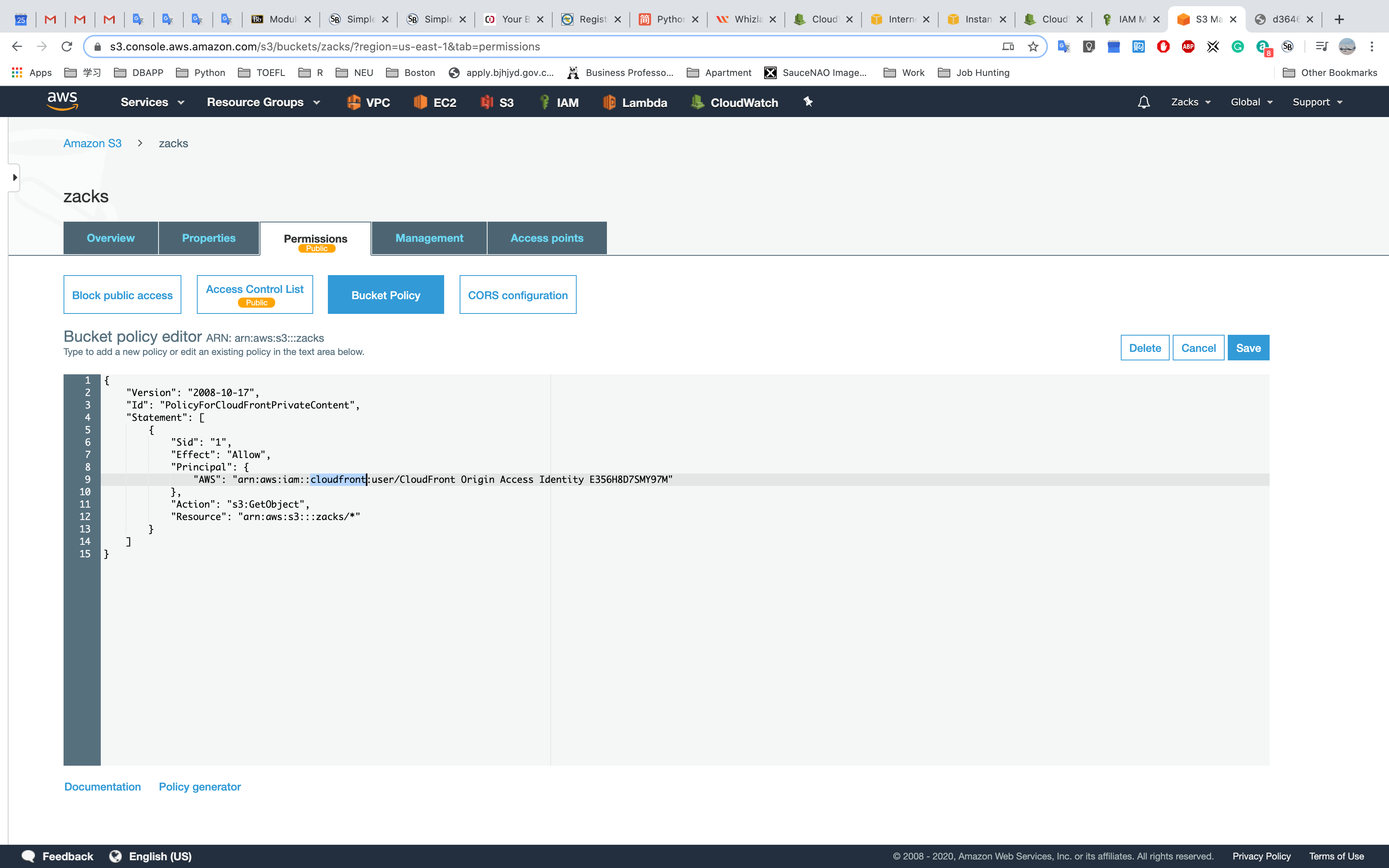

OAI

To require that users always access your Amazon S3 content using CloudFront URLs, you assign a special CloudFront user - an origin access identity - to your origin. You can either create a new origin access identity or reuse an existing one (Reusing an existing identity is recommended for the common use case). Additional configuration is required. In the Help, see “Serving Private Content through CloudFront”.

Restricted Viewer Access (Disabled)

Open S3 bucket - permissions - bucket policy

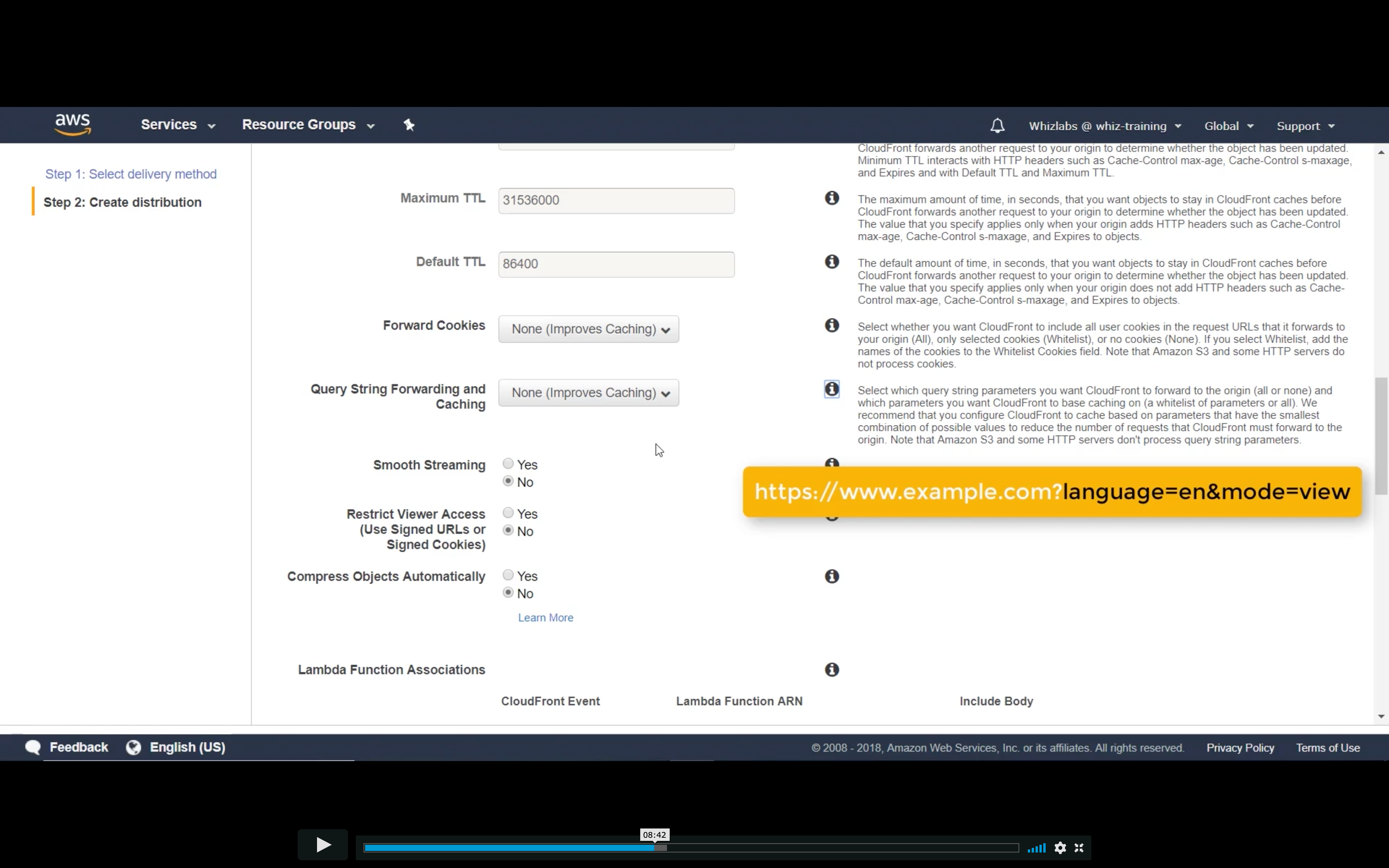

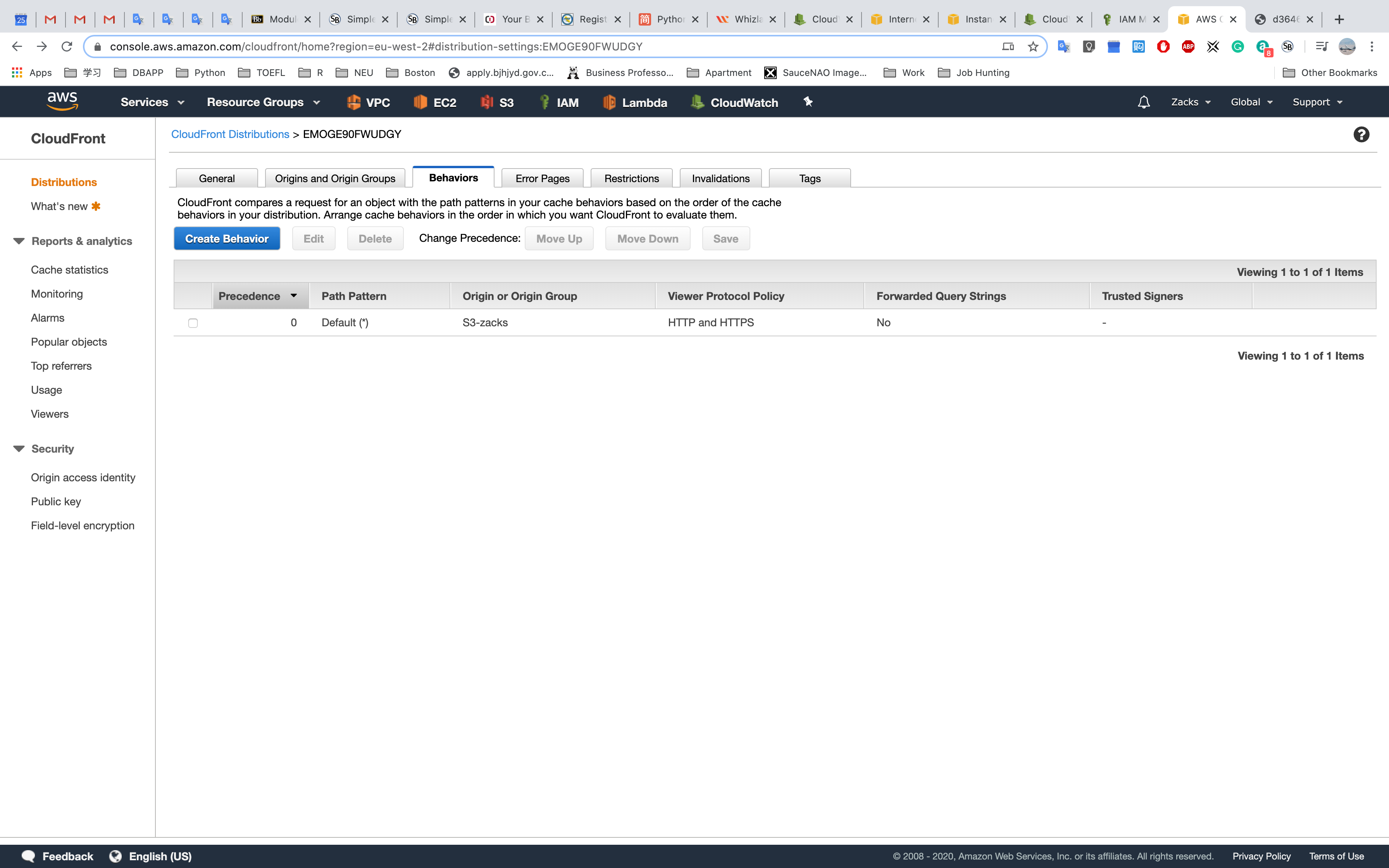

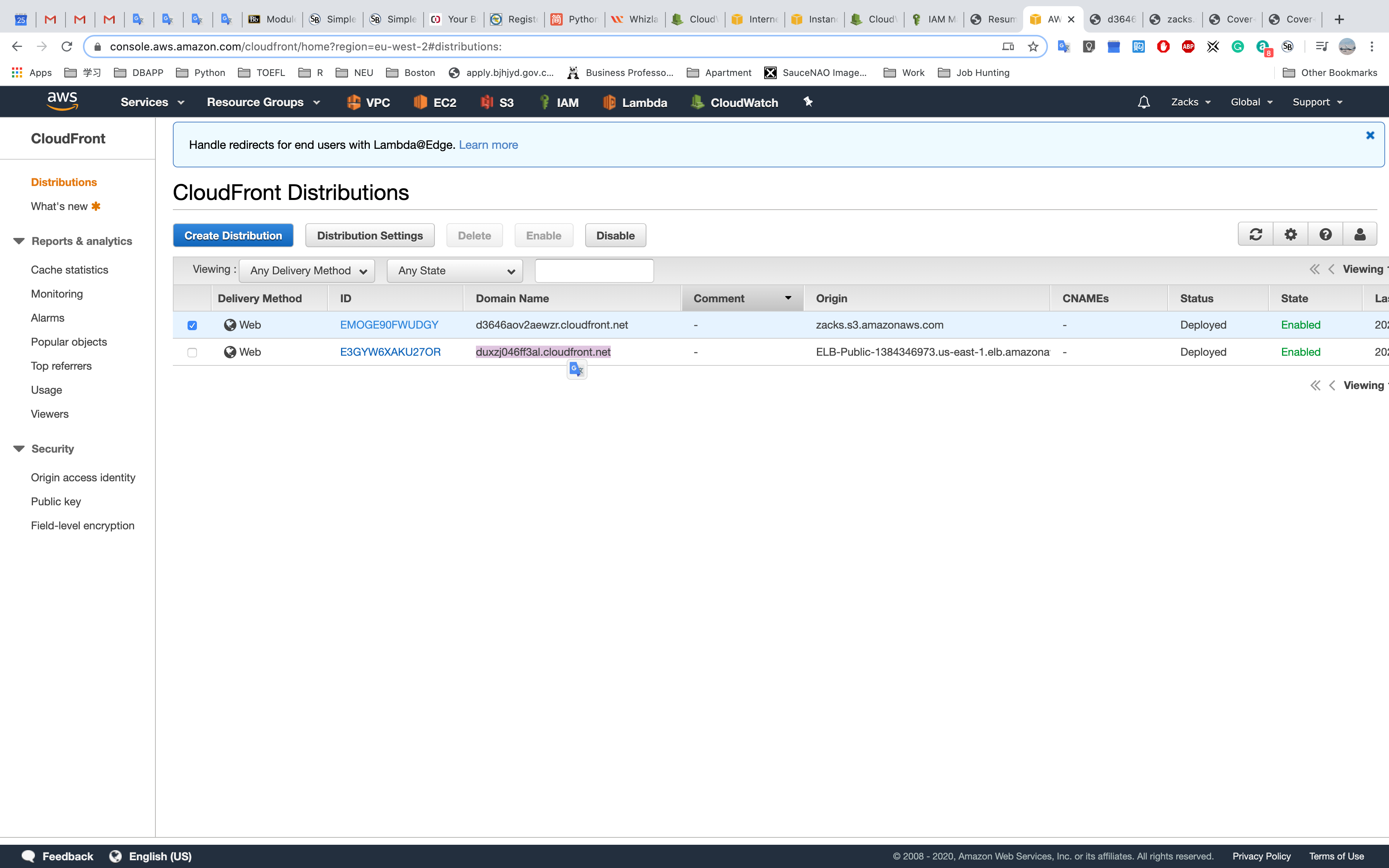

Distribute the content based on behaviors

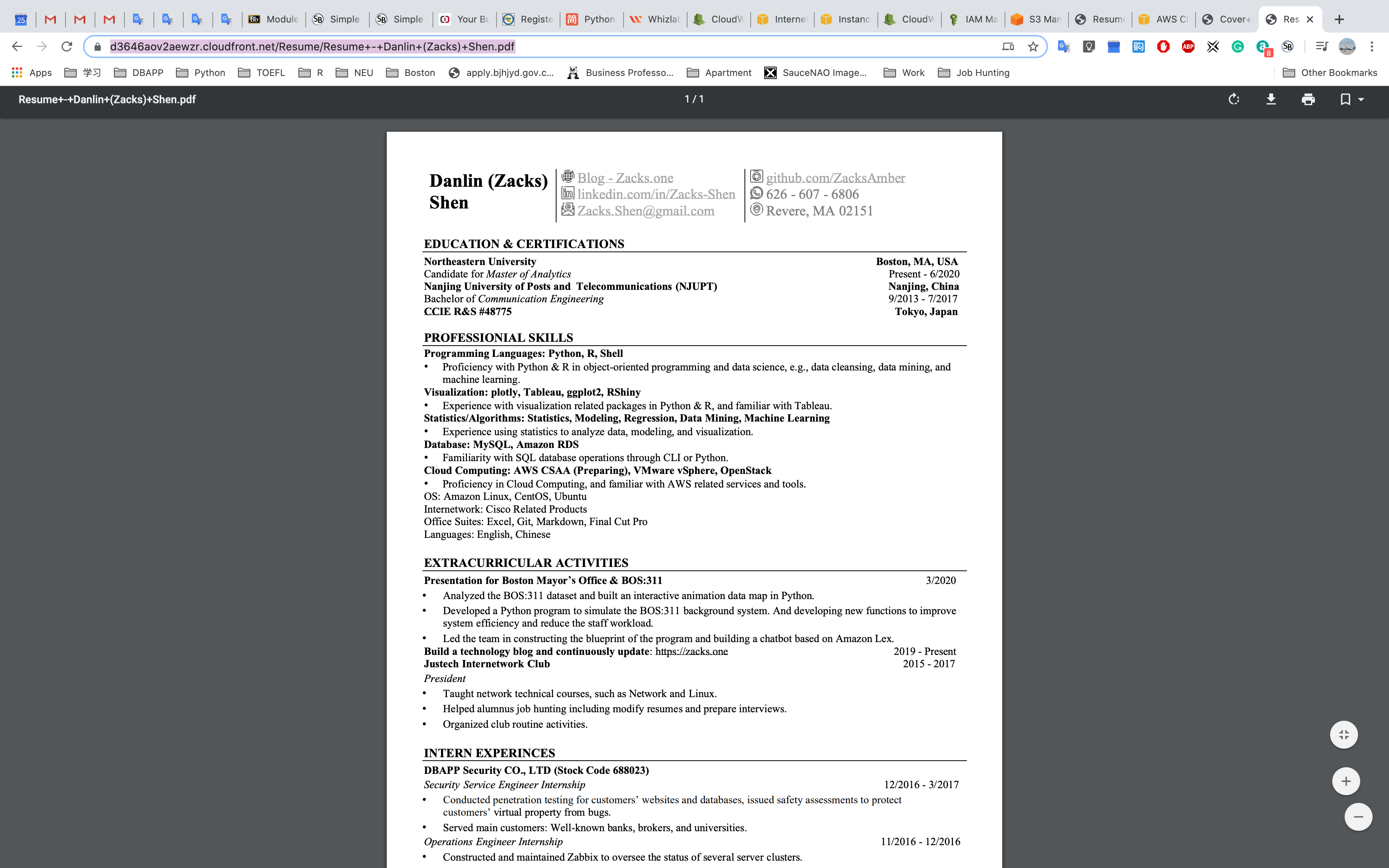

For an S3 bucket origin (not an S3 static website), you can replace the origin domain name in the URL with the CDN domain name. For example,

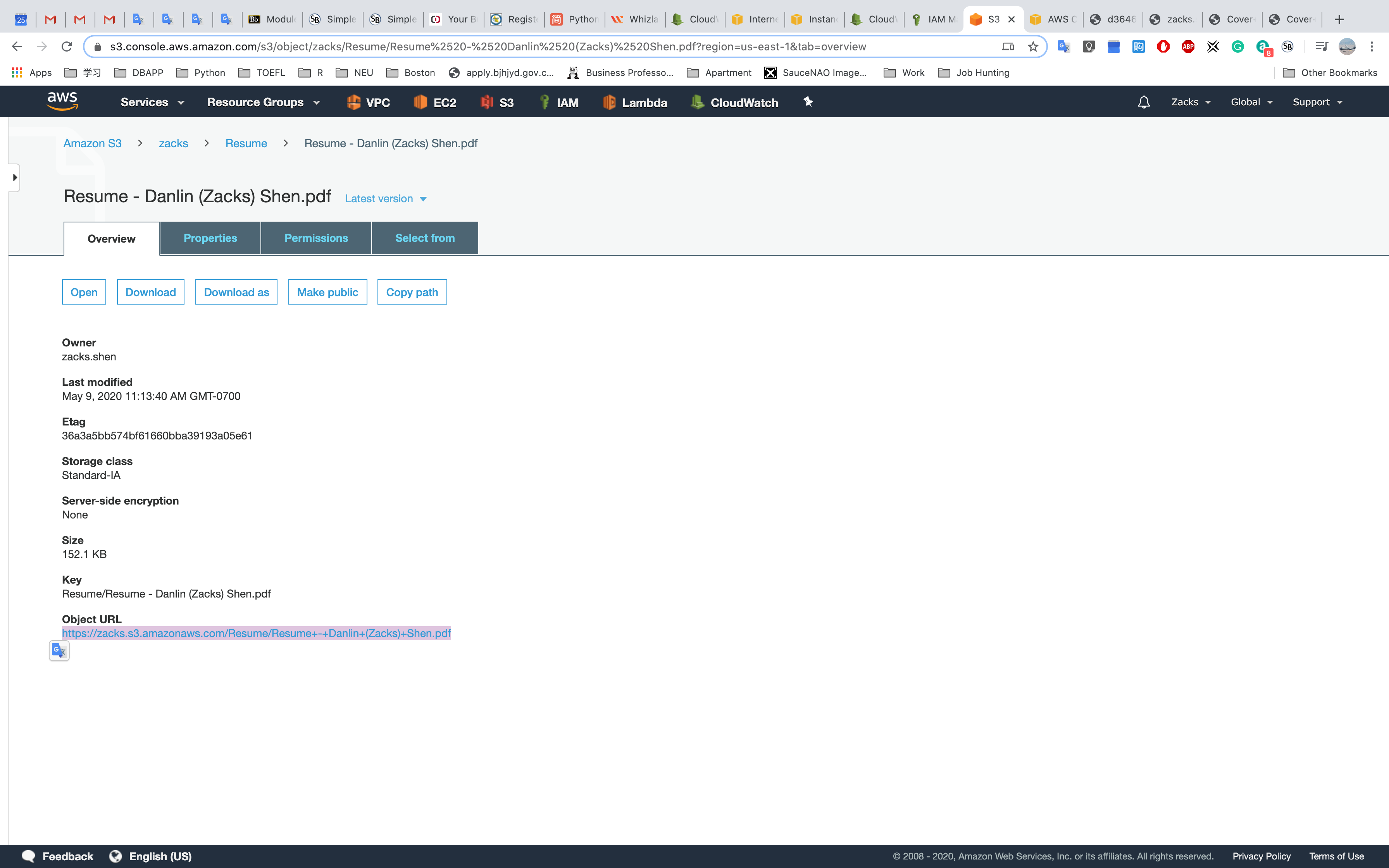

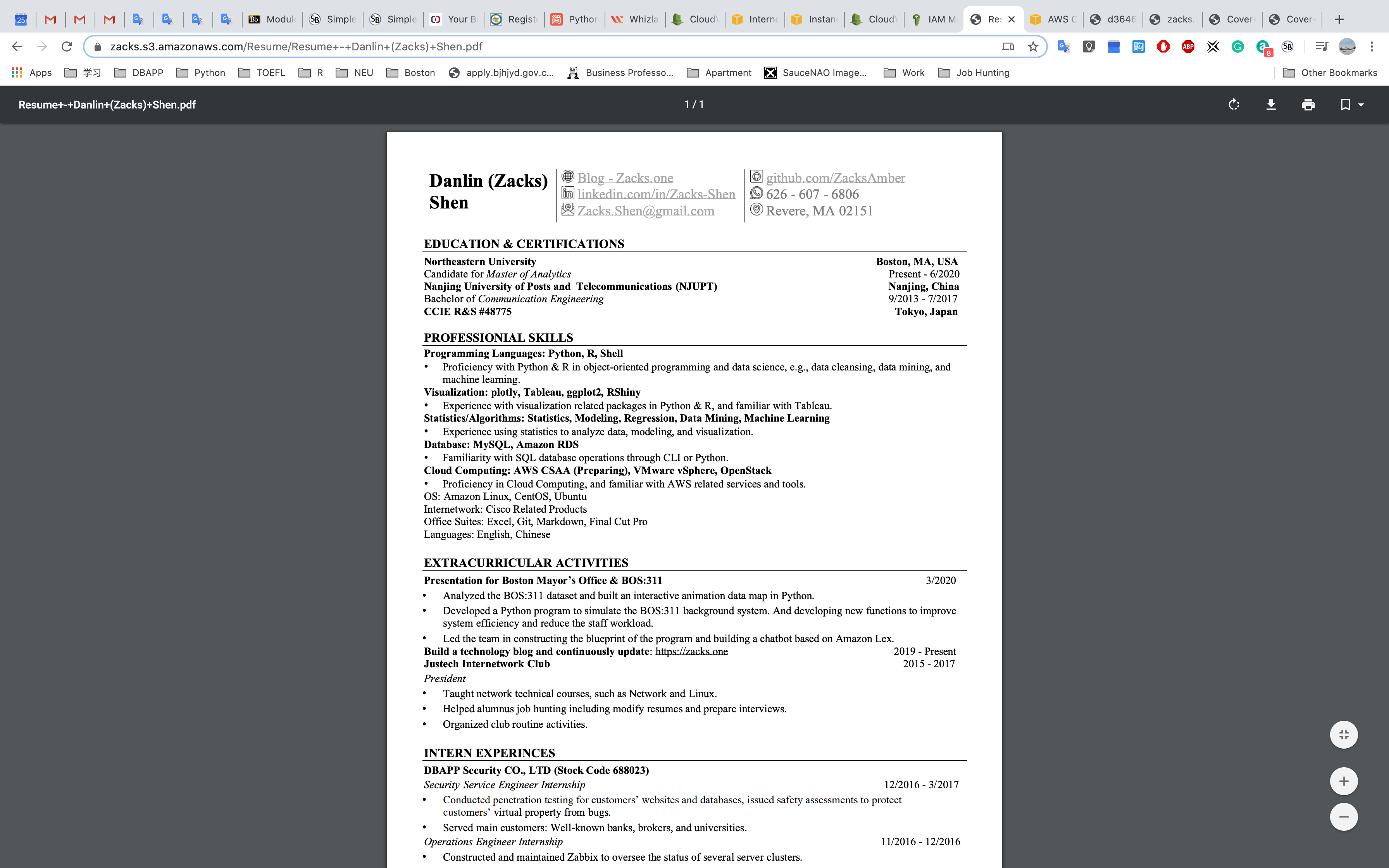

Here is an object in a public S3 bucket with the URL https://zacks.s3.amazonaws.com/Resume/Resume+-+Danlin+(Zacks)+Shen.pdf

Open it.

Copy the CDN domain name: d3646aov2aewzr.cloudfront.net

Replace the origin domain name with the CDN domain name:

https://zacks.s3.amazonaws.com/Resume/Resume+-+Danlin+(Zacks)+Shen.pdf

⬇️⬇️

https://d3646aov2aewzr.cloudfront.net/Resume/Resume+-+Danlin+(Zacks)+Shen.pdf
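The same substitution can be done programmatically with the standard library. This is a small sketch; the helper name `to_cdn_url` is an assumption, and it swaps only the host part while leaving the scheme, path, and query untouched.

```python
from urllib.parse import urlsplit, urlunsplit


def to_cdn_url(origin_url: str, cdn_domain: str) -> str:
    """Replace the host of origin_url with cdn_domain, keeping the rest."""
    parts = urlsplit(origin_url)
    return urlunsplit(parts._replace(netloc=cdn_domain))


origin = "https://zacks.s3.amazonaws.com/Resume/Resume+-+Danlin+(Zacks)+Shen.pdf"
print(to_cdn_url(origin, "d3646aov2aewzr.cloudfront.net"))
```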

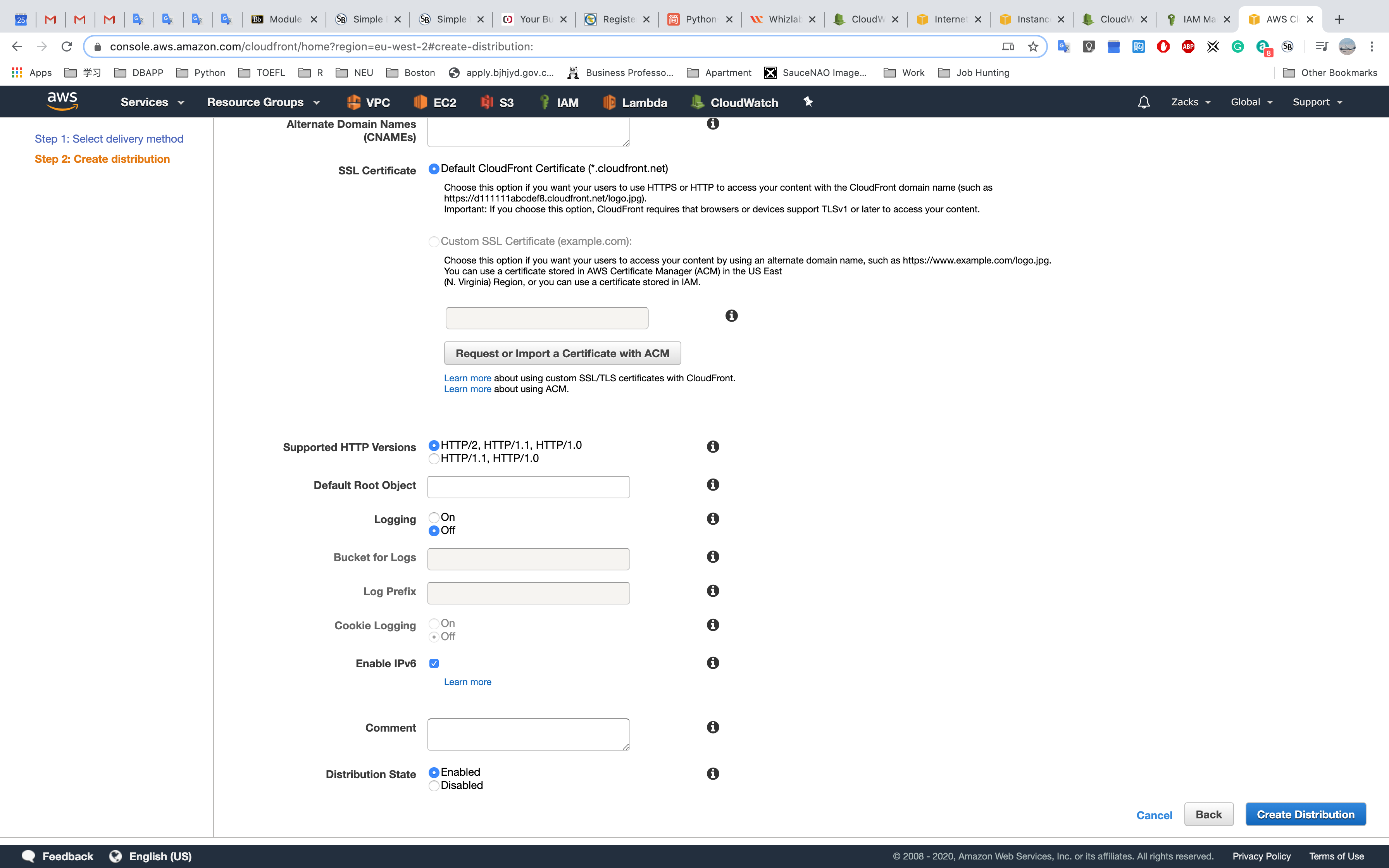

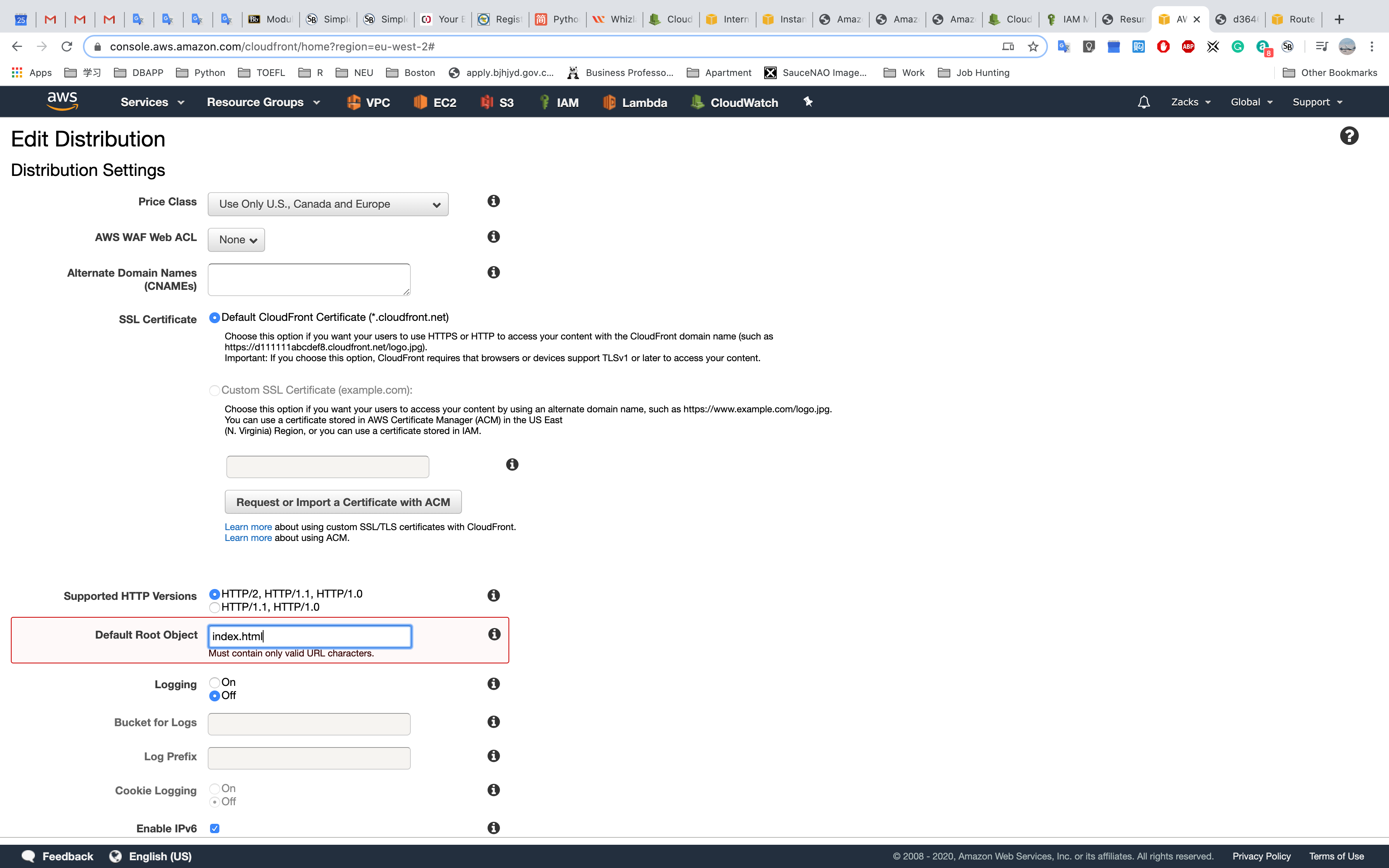

Or you can give it a default root object in CloudFront Edit Distribution

Lab - ELB

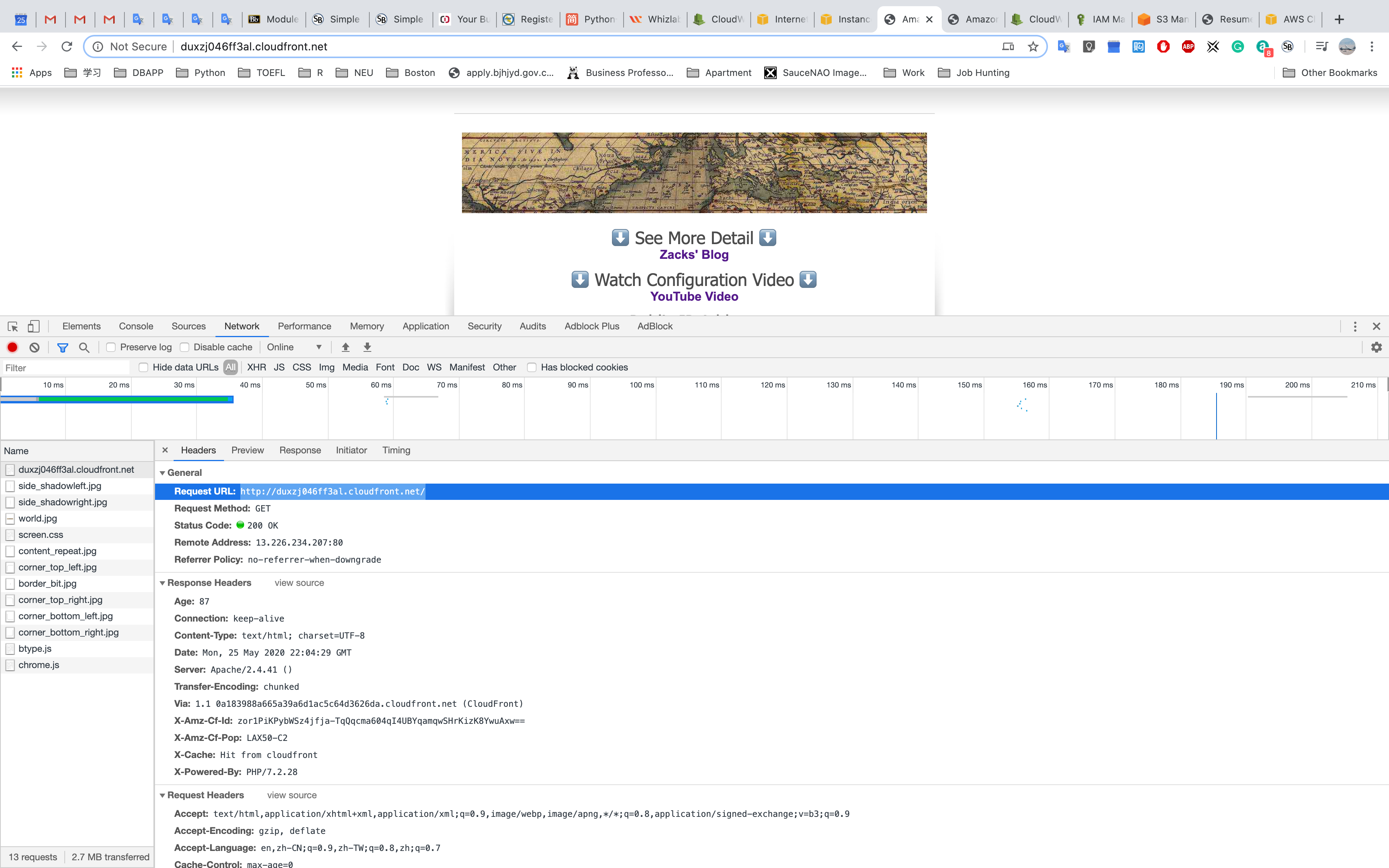

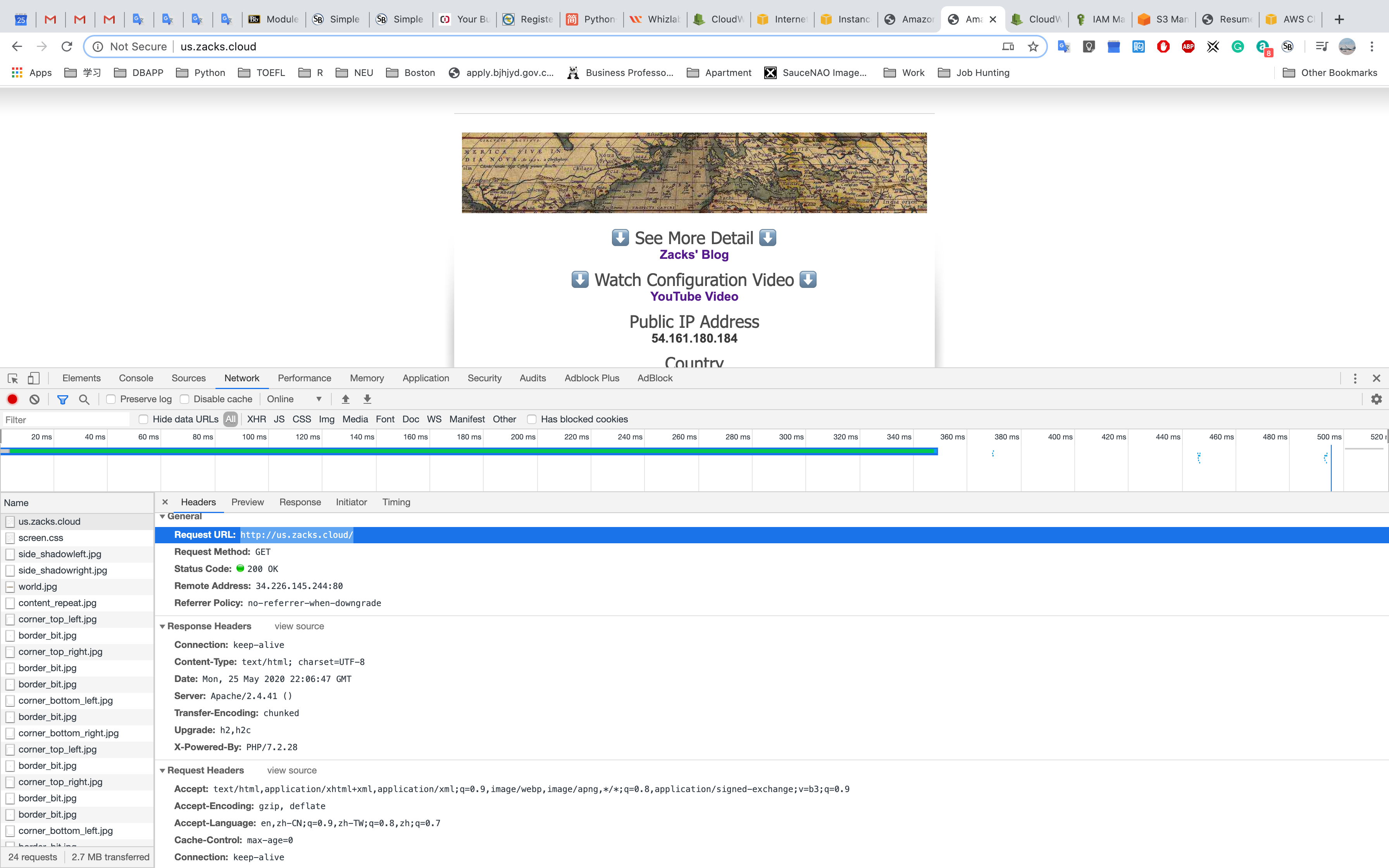

Visit both the CloudFront-cached site and the original site, then compare the requests each one makes.

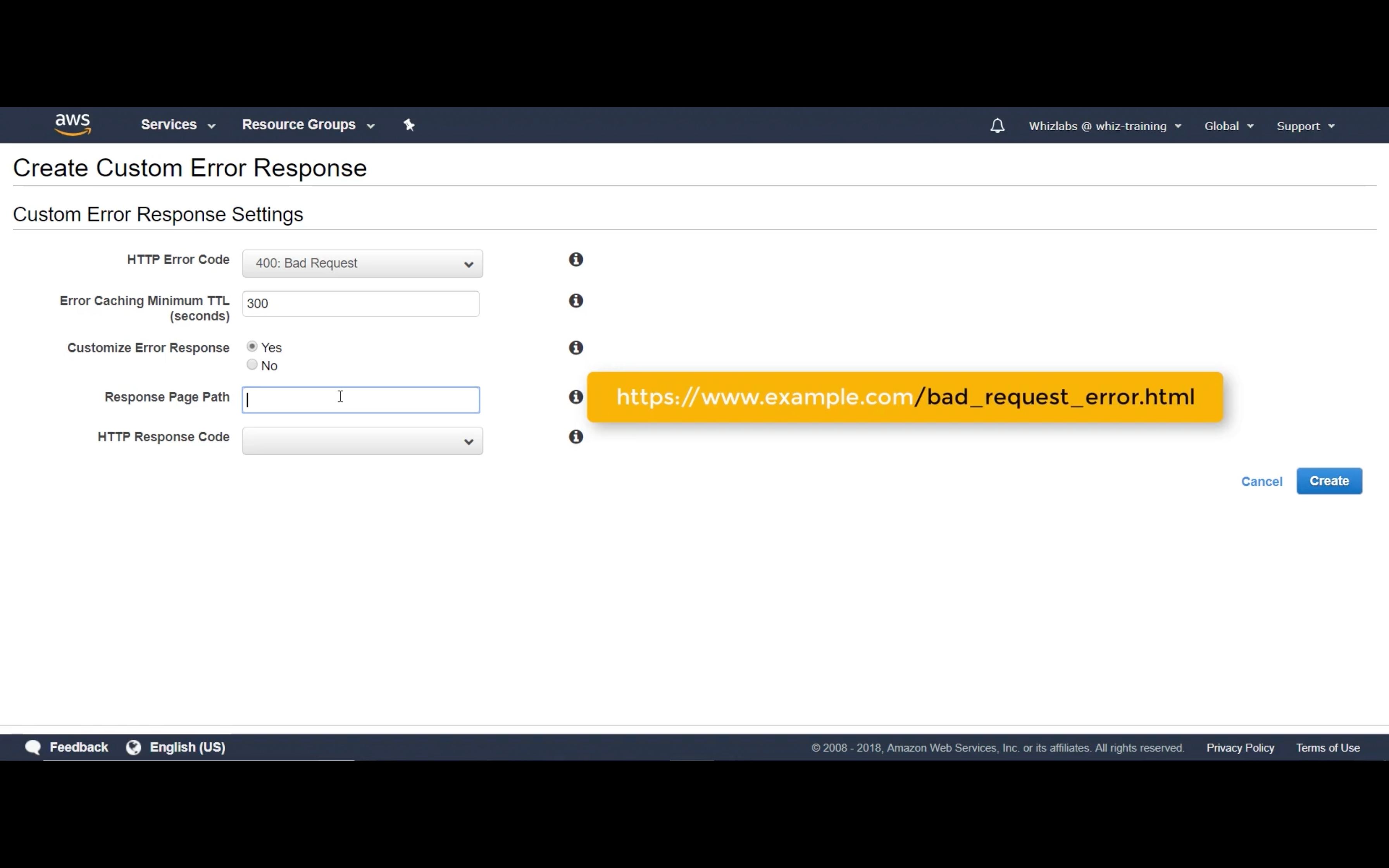

Restrictions

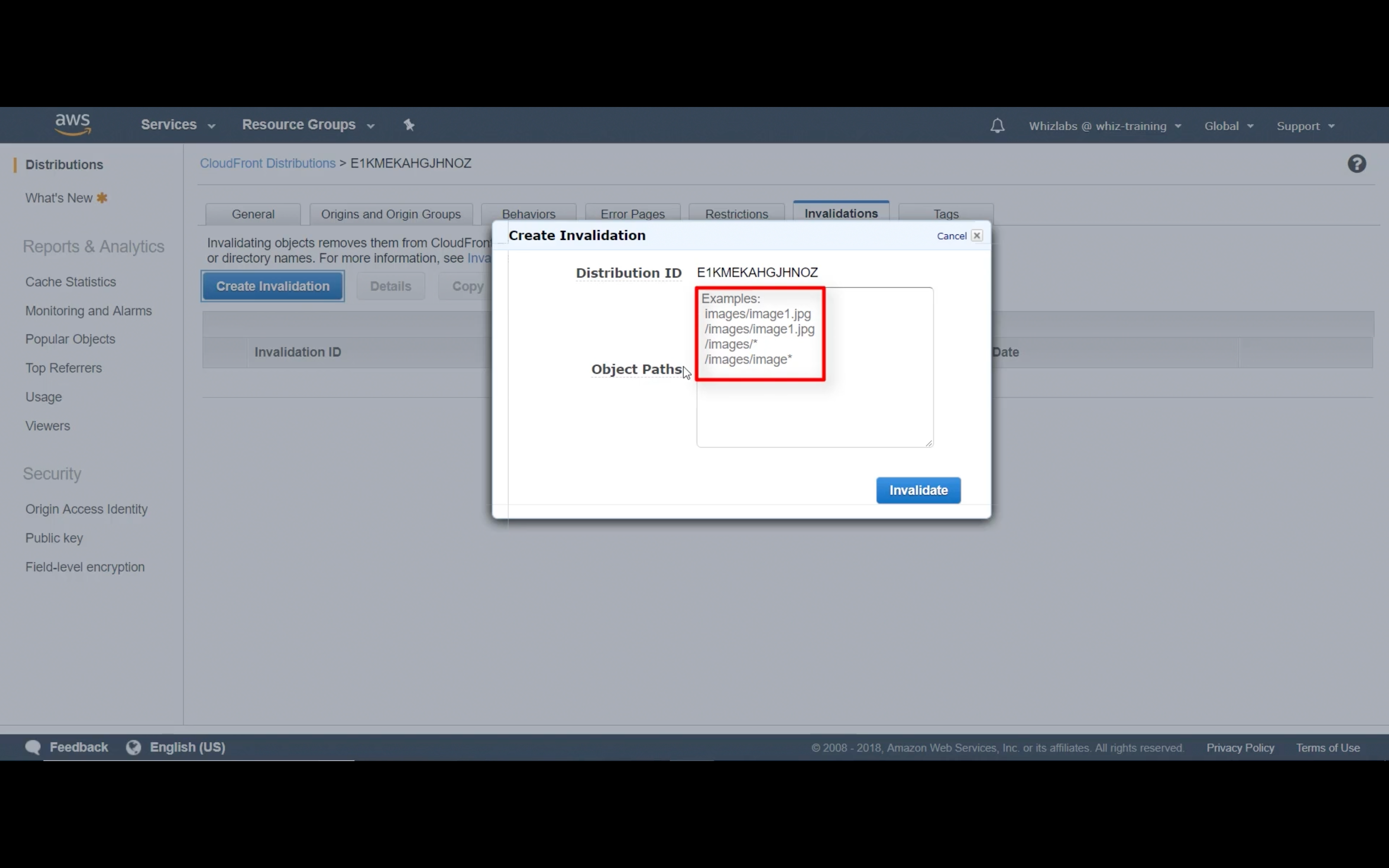

Invalidations

CloudWatch

Route 53

CloudFront Cache Statistics Reports

RequestCount

The total number of requests for all HTTP status codes (for example, 200 or 404) and all methods (for example, GET, HEAD, or POST).

HitCount

The number of viewer requests for which the object is served from a CloudFront edge cache.

MissCount

The number of viewer requests for which the object isn’t currently in an edge cache, so CloudFront must get the object from your origin.

ErrorCount

The number of viewer requests that resulted in an error, so CloudFront didn’t serve the object.
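One common way to read these counters together is as a cache hit ratio. The sketch below assumes the ratio is simply HitCount divided by RequestCount; the function name and the sample numbers are illustrative, not taken from a real report.

```python
def cache_hit_ratio(hit_count: int, request_count: int) -> float:
    """Fraction of viewer requests served from an edge cache.

    Assumes ratio = HitCount / RequestCount; returns 0.0 for no traffic.
    """
    if request_count == 0:
        return 0.0
    return hit_count / request_count


# Illustrative numbers only: 750 hits out of 1,000 requests.
print(f"{cache_hit_ratio(750, 1000):.0%}")
```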

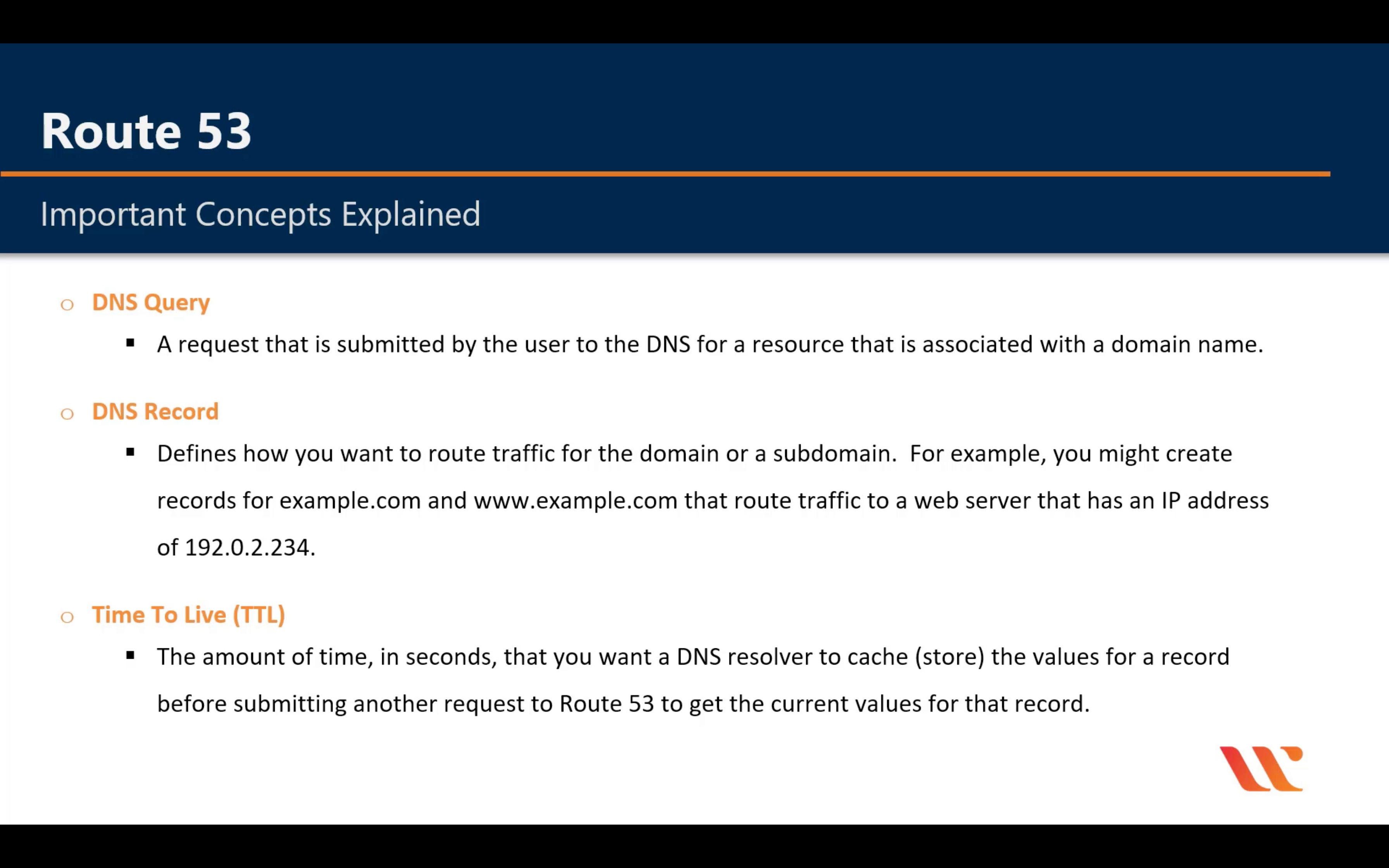

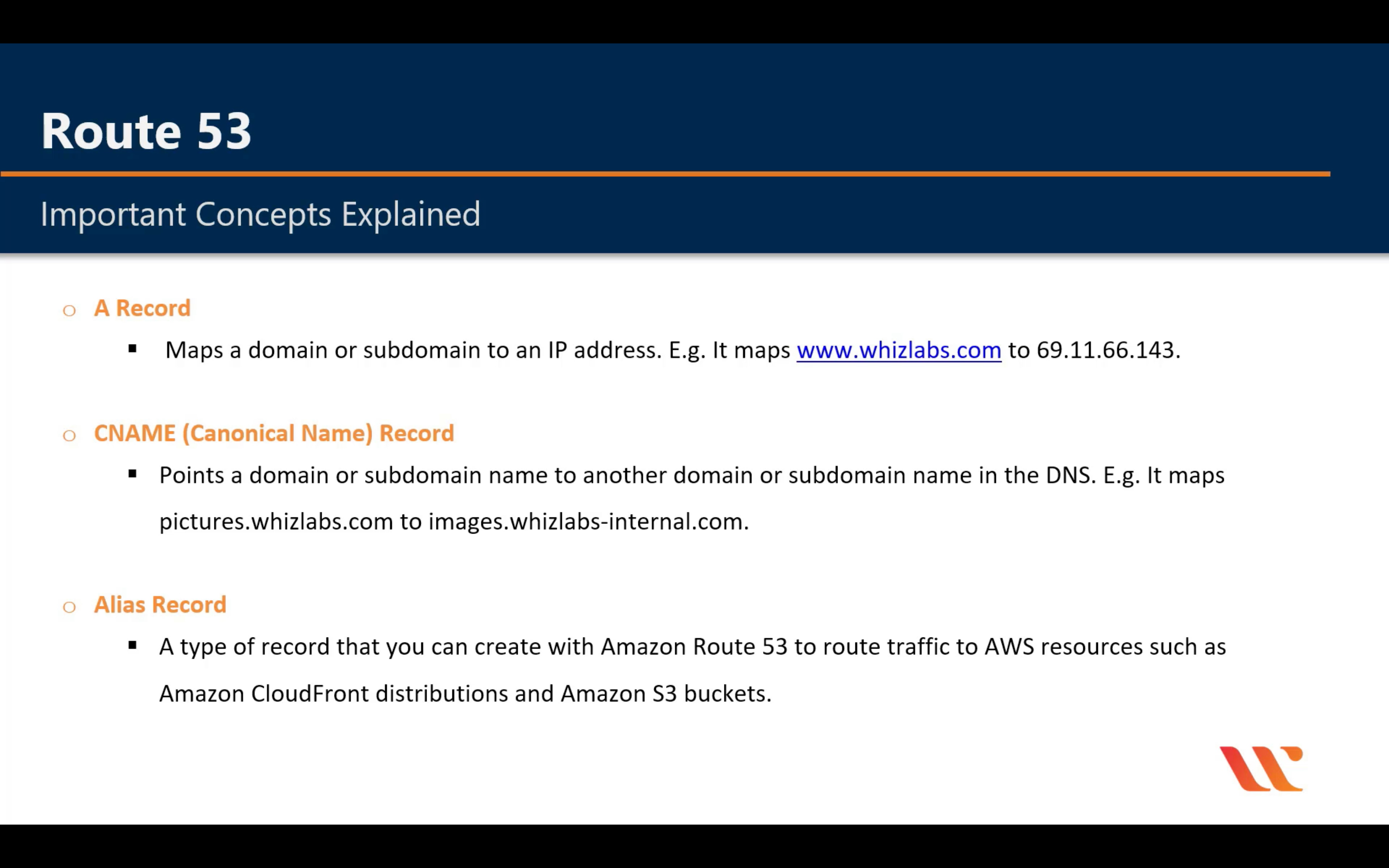

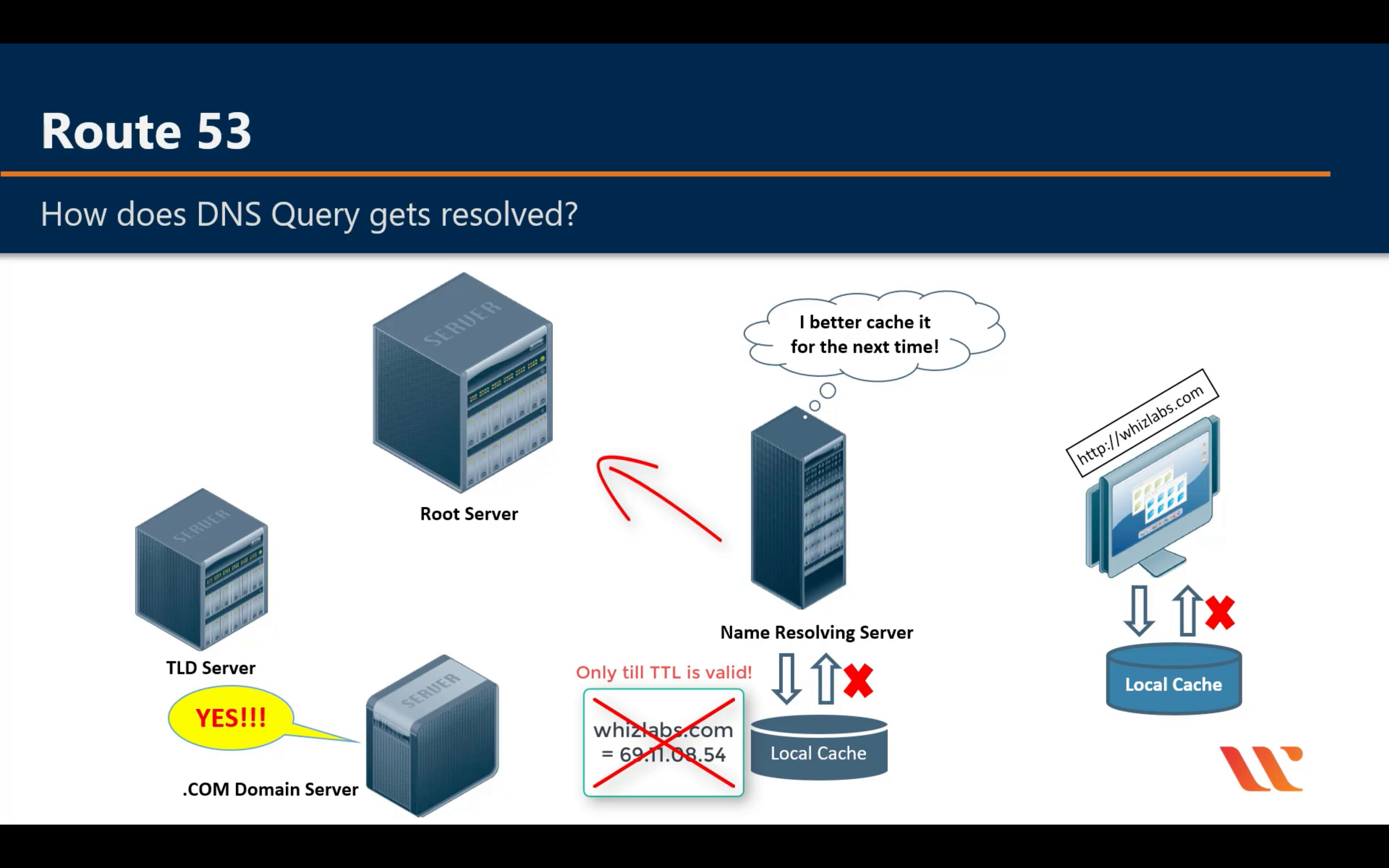

Amazon Route 53

Scalable domain name system (DNS)

www.google.com is also a subdomain

Route Policy

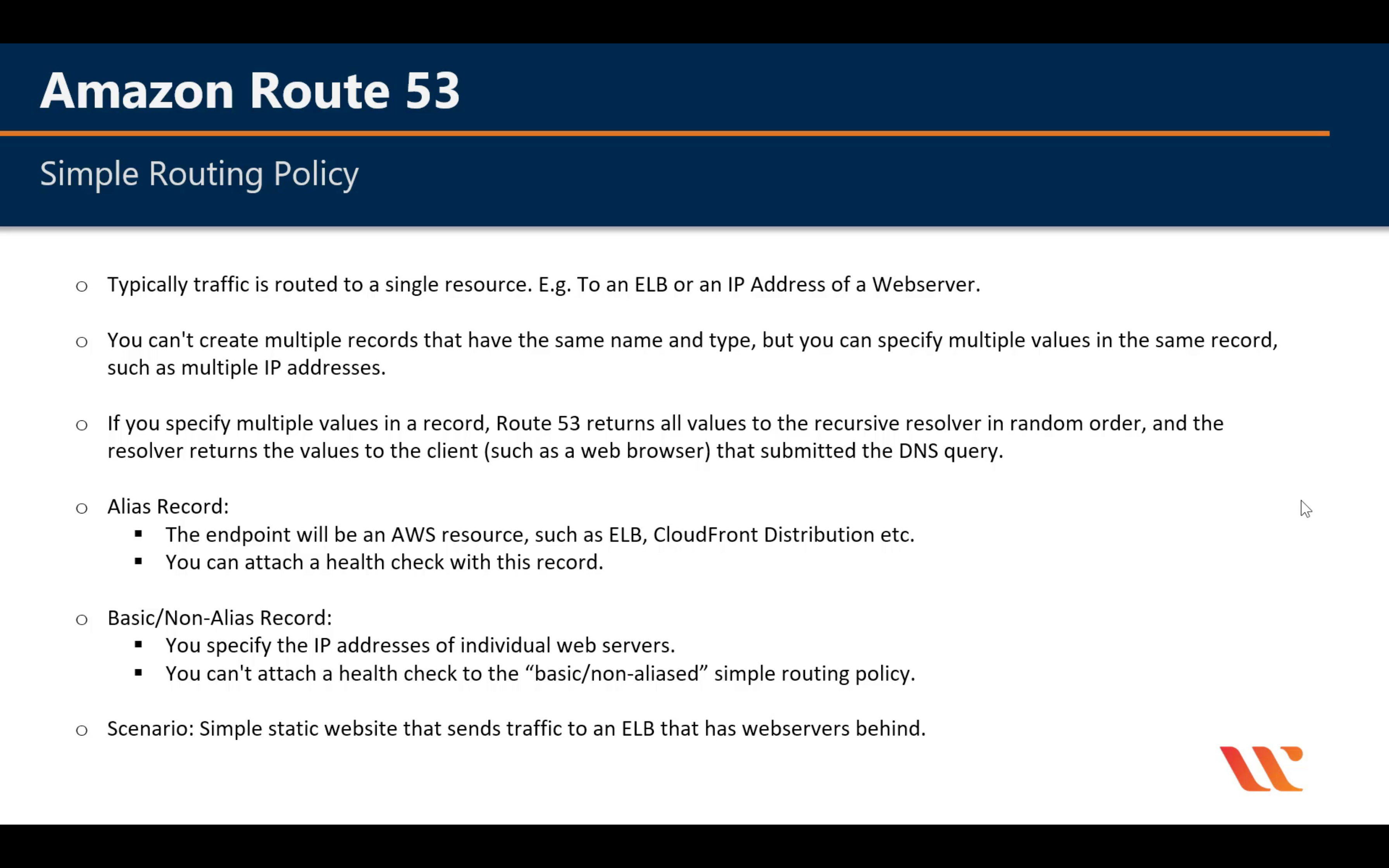

Simple Routing Policy

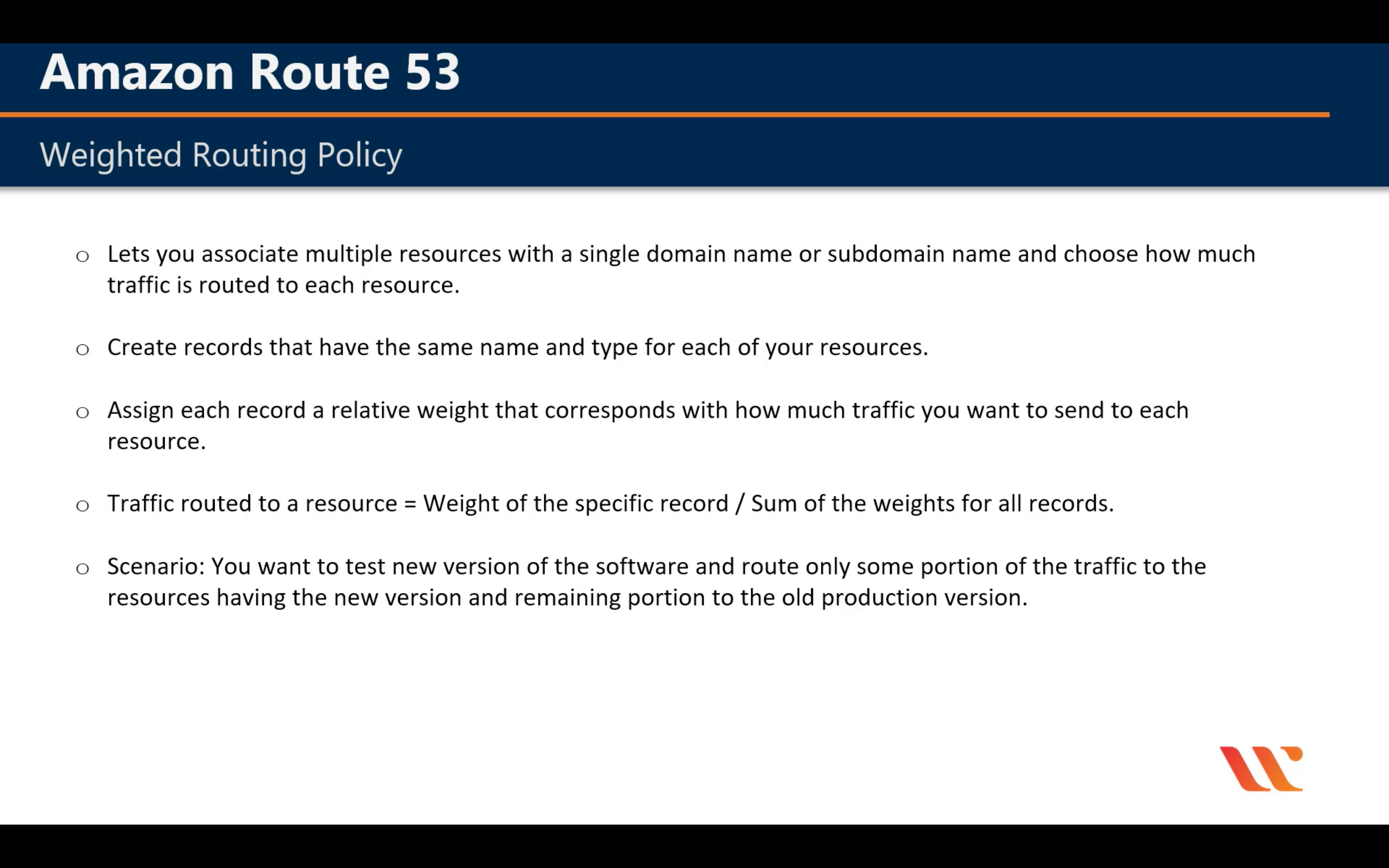

Weighted Routing Policy

Test it only after you have created all the nodes. Otherwise your computer will cache the IP address!

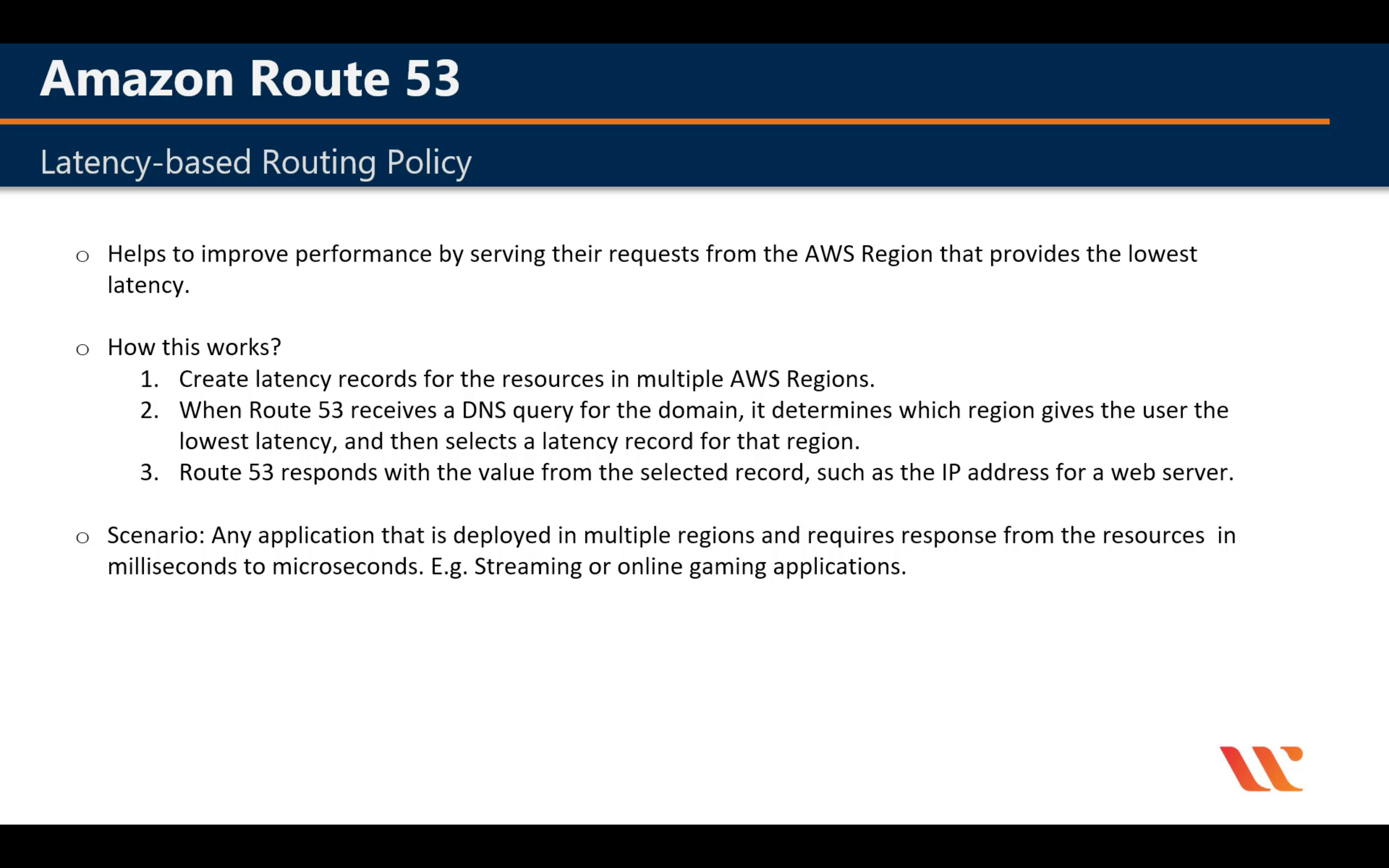

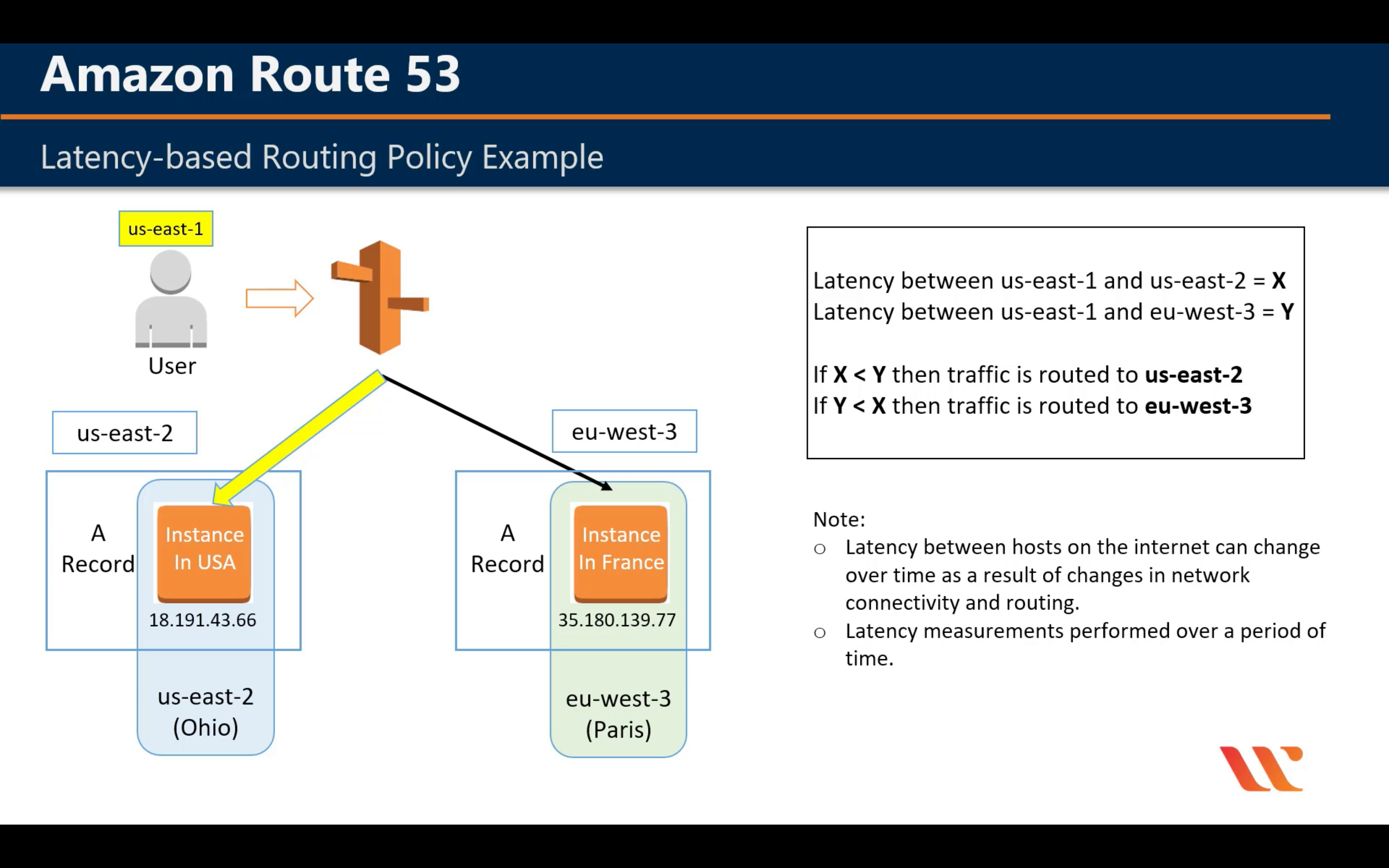

Latency Routing Policy

Geographical Routing Policy

For example, I have an ELB in the EU and an ELB in the US, and I create two A records, each an alias to one ELB, with the locations US and EU. When I test the record from a Canadian IP address, the lookup fails because no record matches that location.

Failover Routing Policy

Create a primary health check, then create a secondary health check (if you have a third record).

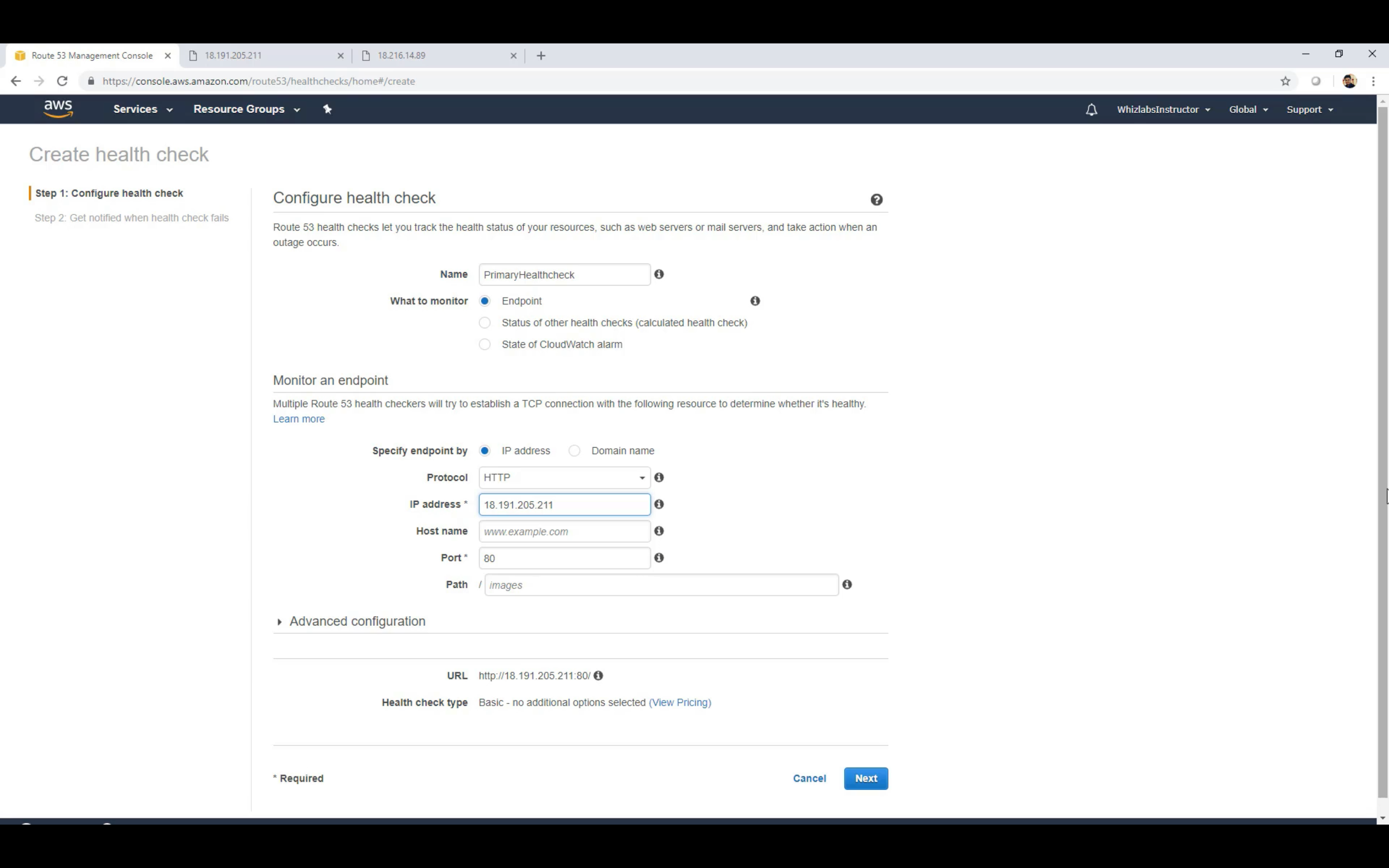

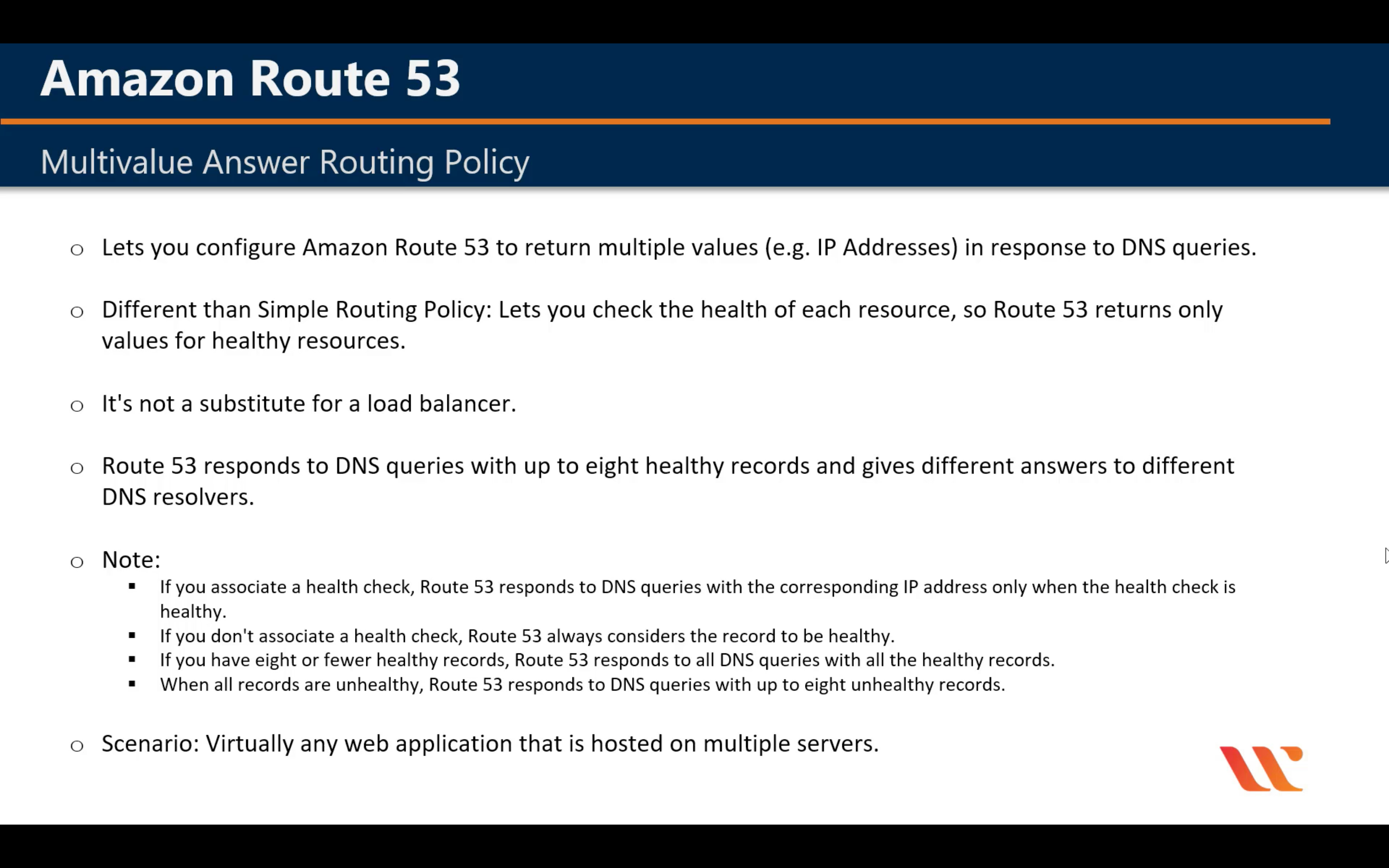

Multivalue Answer Routing Policy

Create a health check for each IP address (not for the ELB).

AWS App Mesh

Monitor and control microservices

AWS Cloud Map

Service discovery for cloud resources

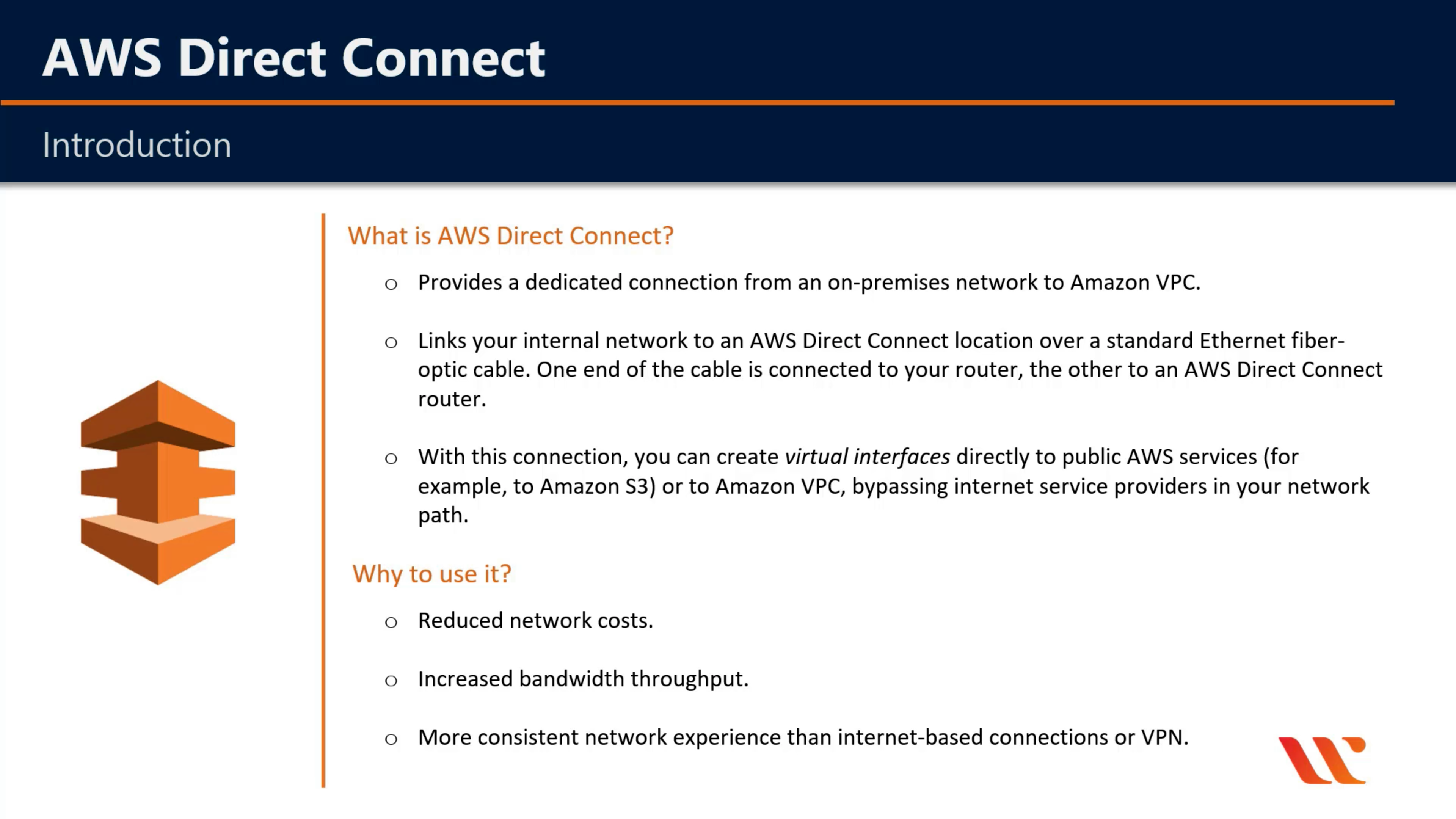

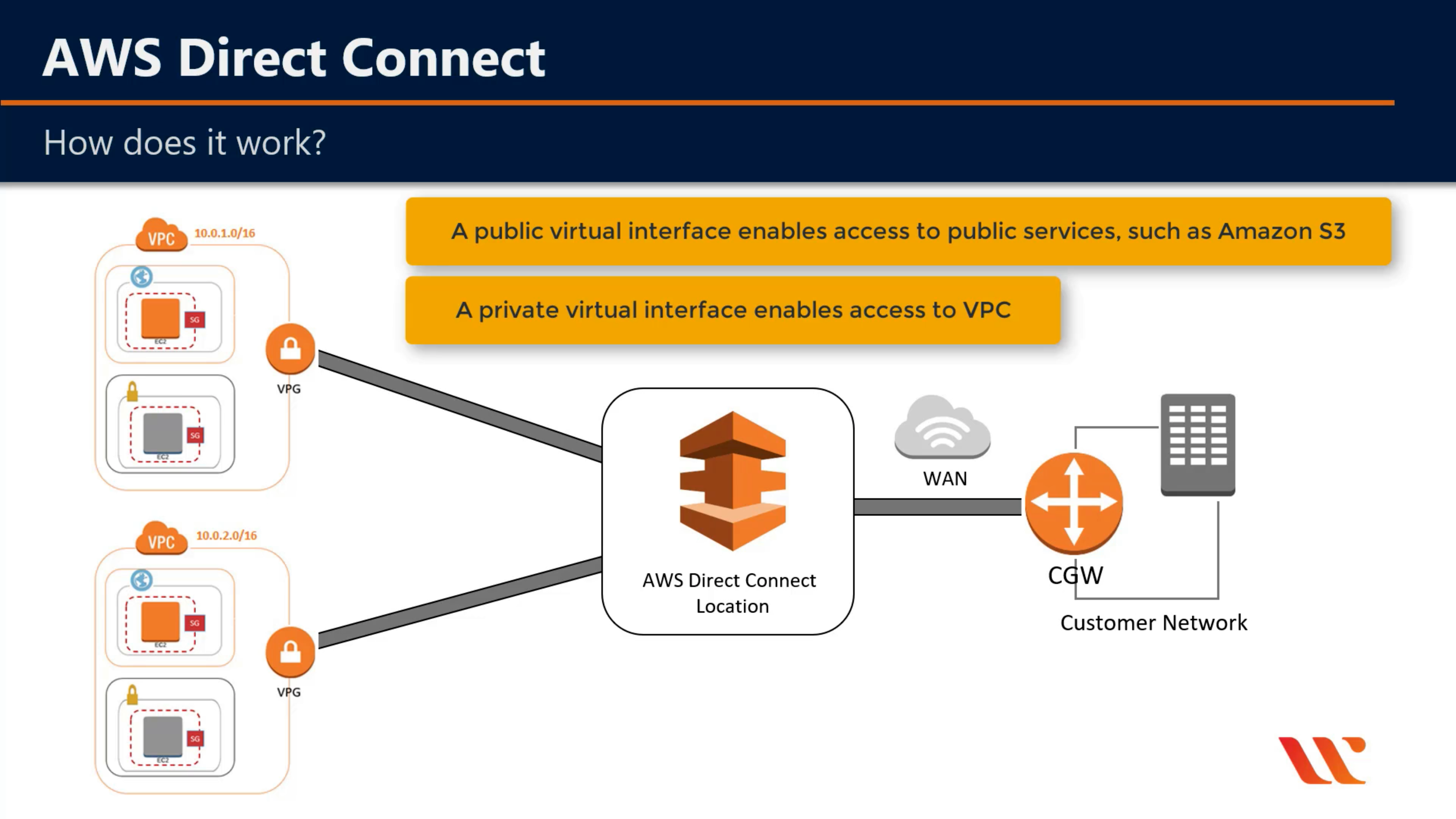

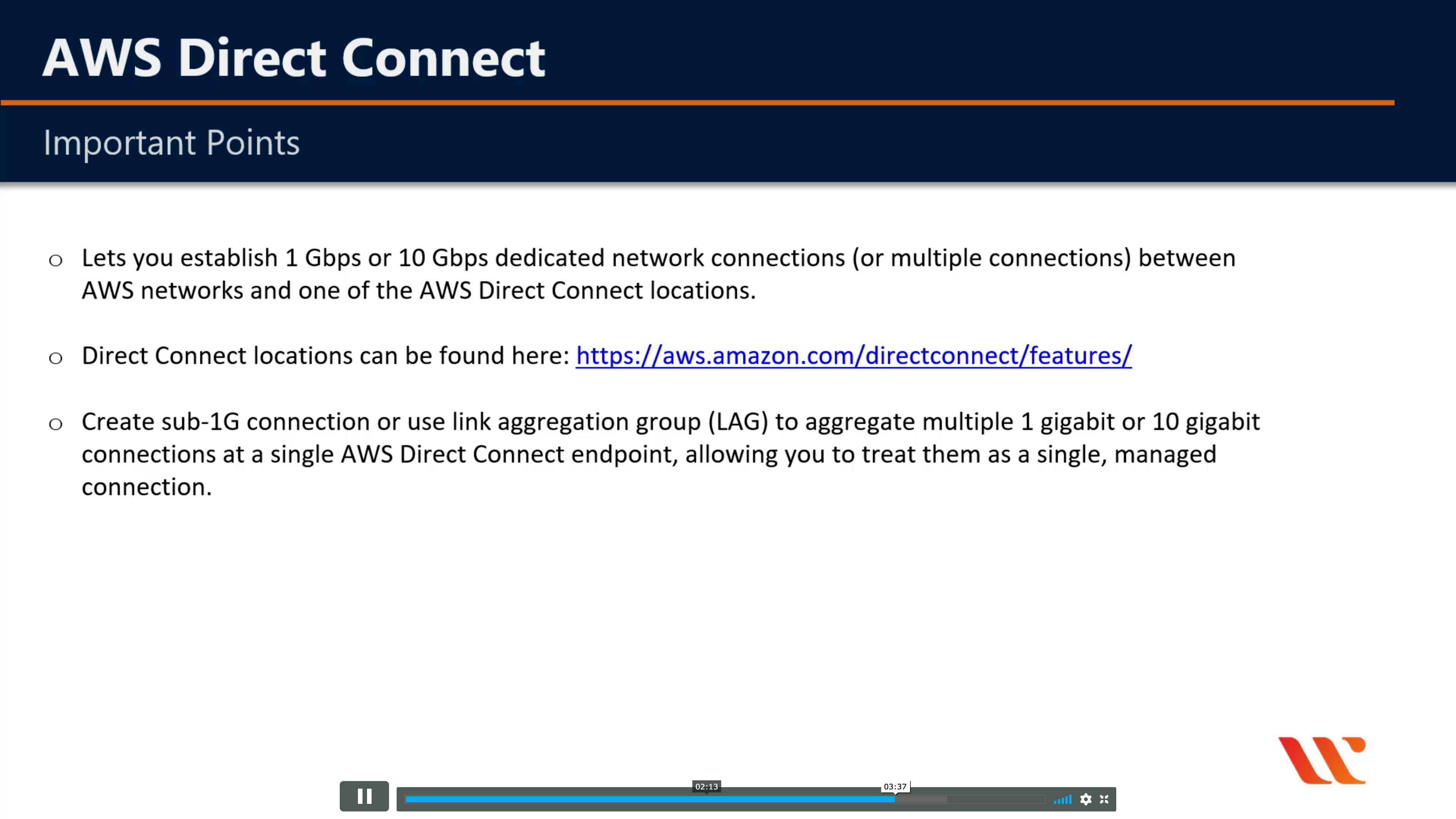

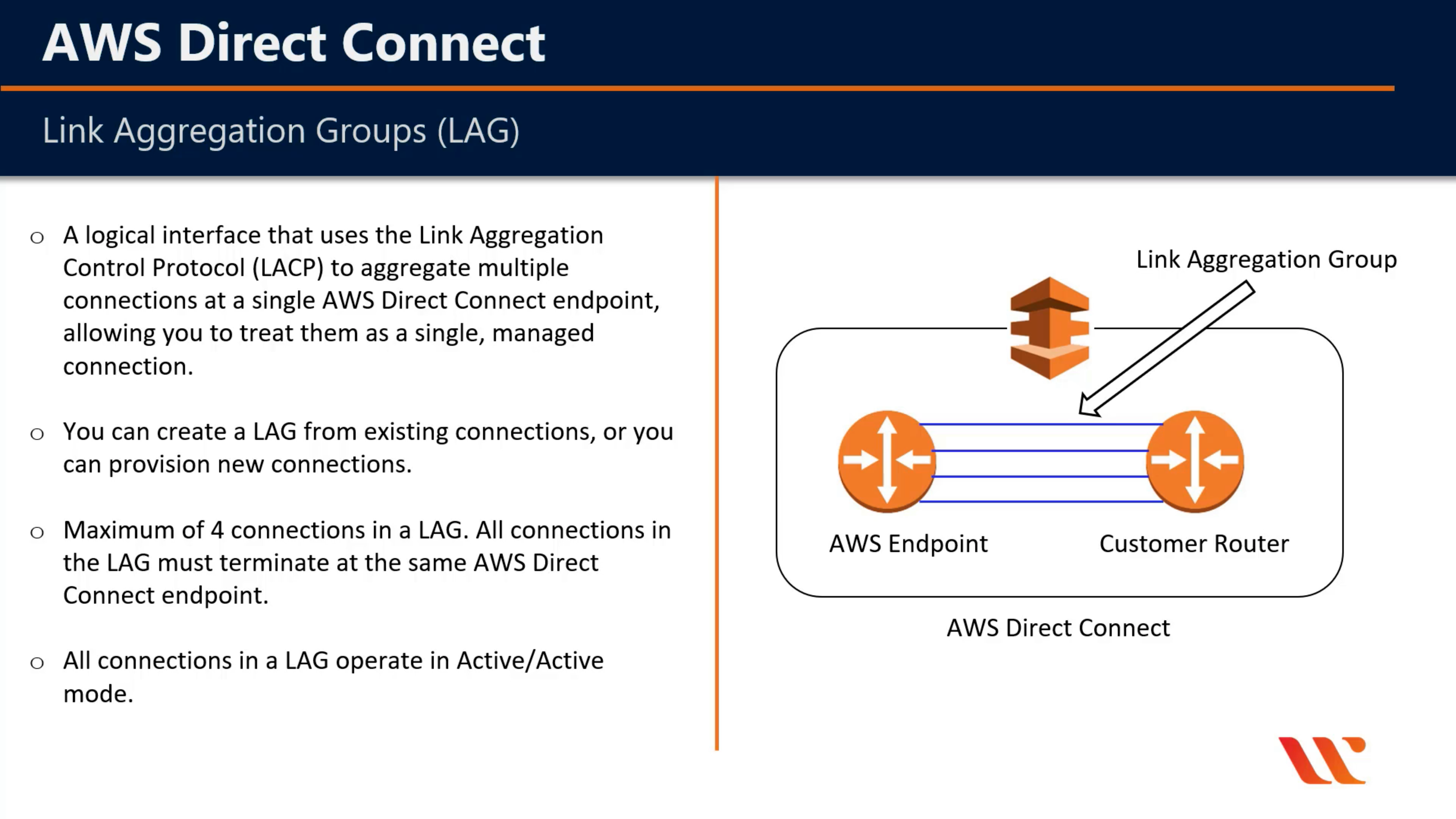

AWS Direct Connect

Dedicated network connection to AWS

- Working with Large Data Sets

- Real-time Data Feeds

- Hybrid Environments

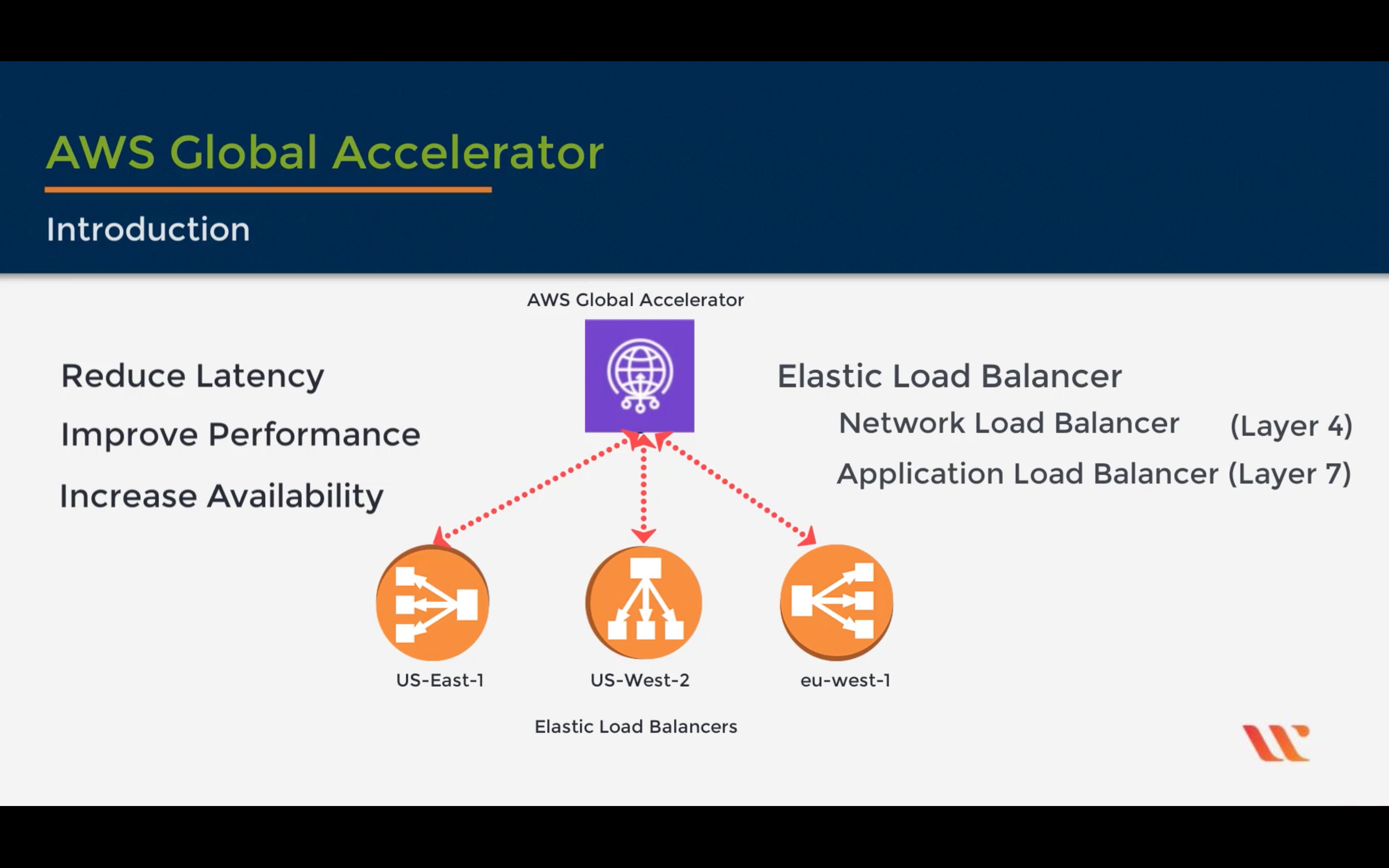

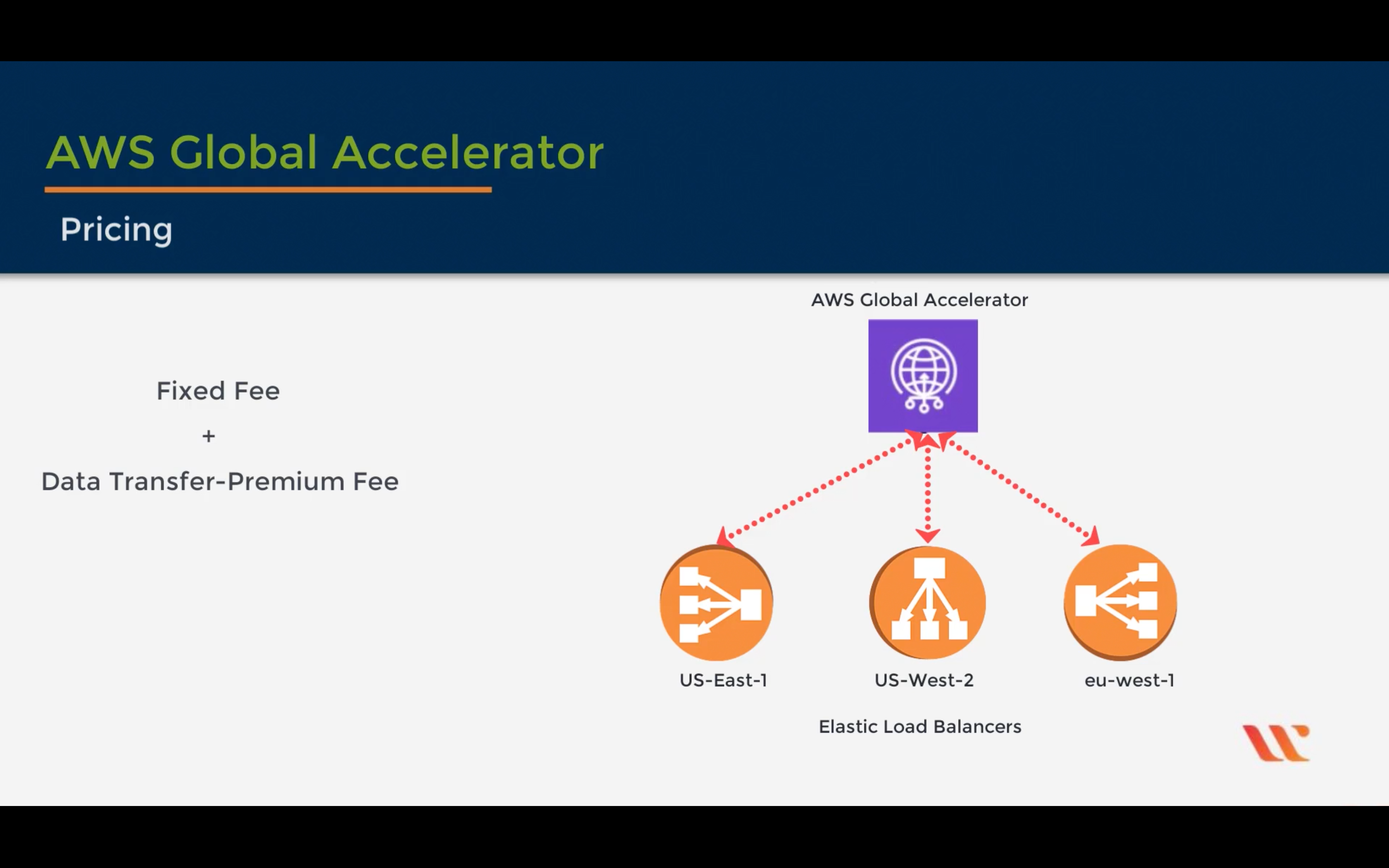

AWS Global Accelerator

Improve application availability and performance

AWS PrivateLink

Securely access services hosted on AWS

AWS Transit Gateway

Easily scale VPC and account connections

AWS VPN

Securely access your network resources

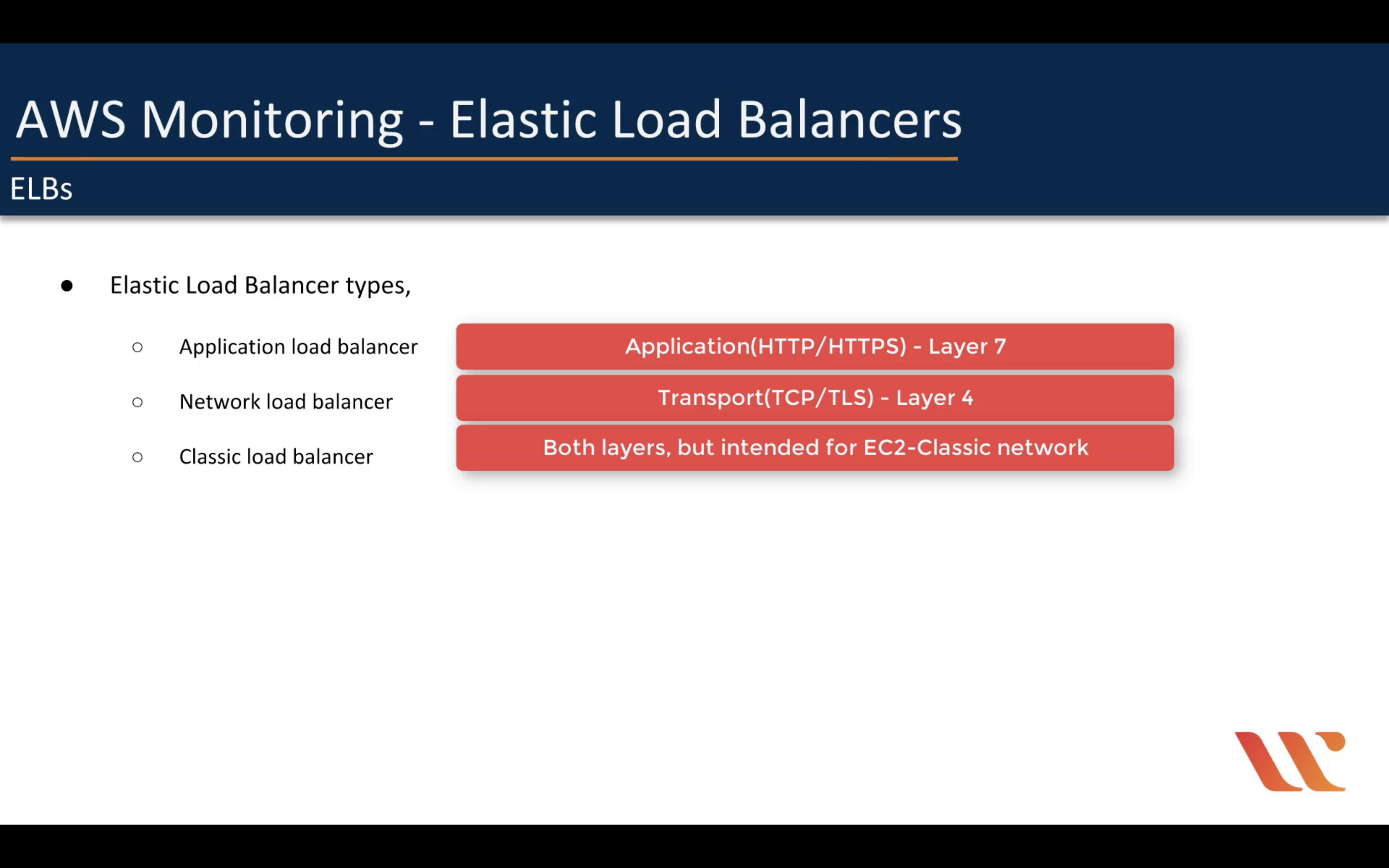

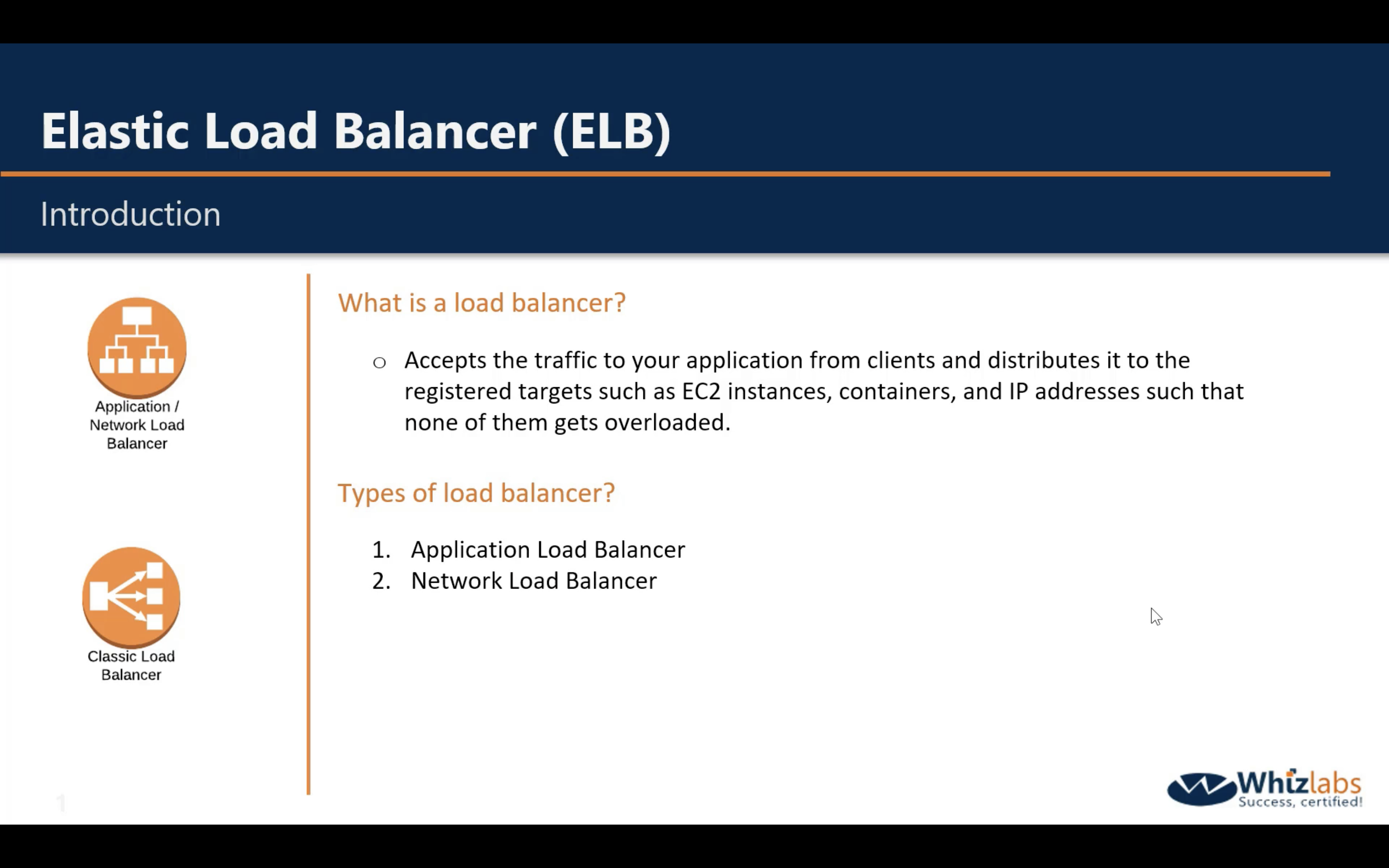

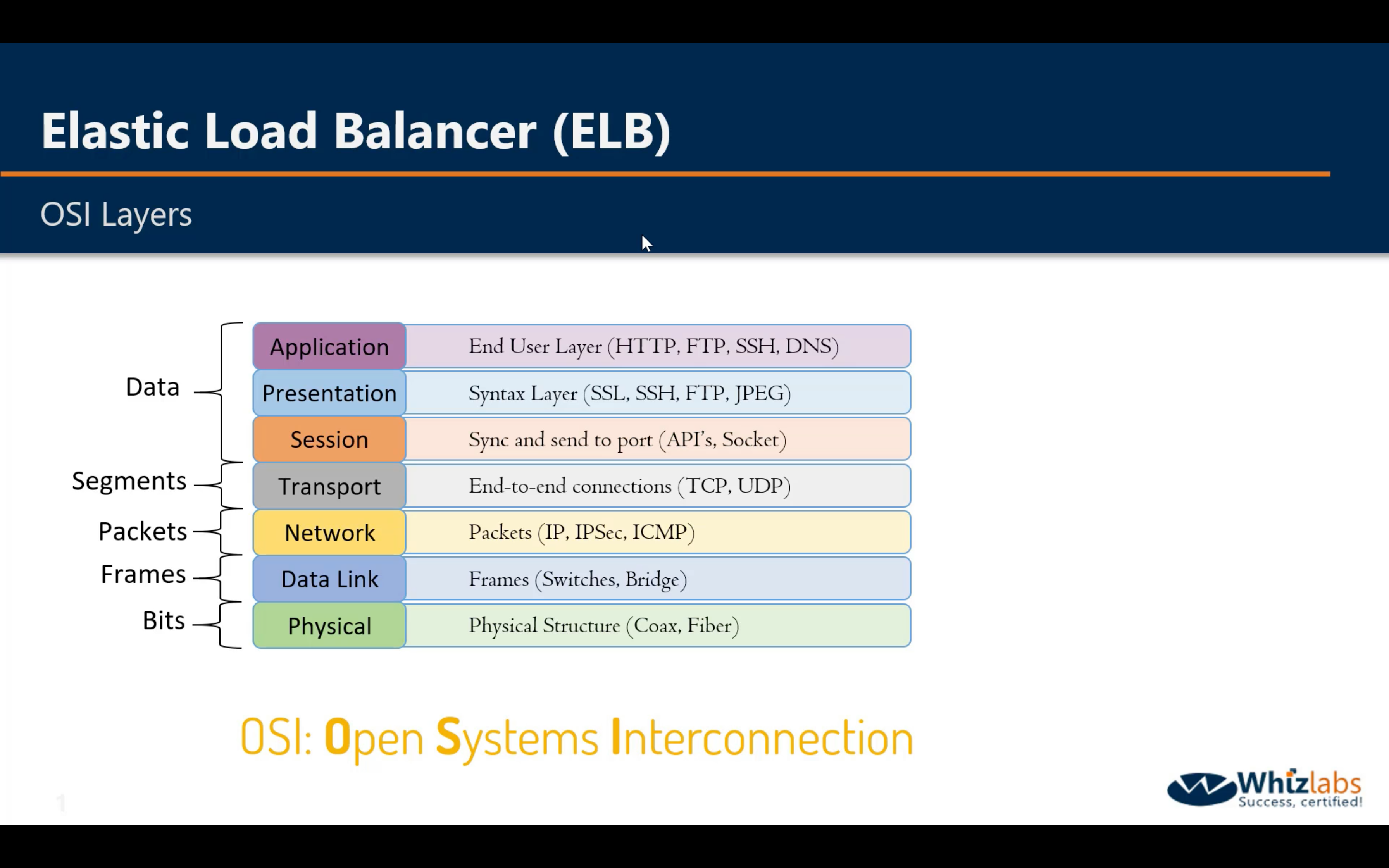

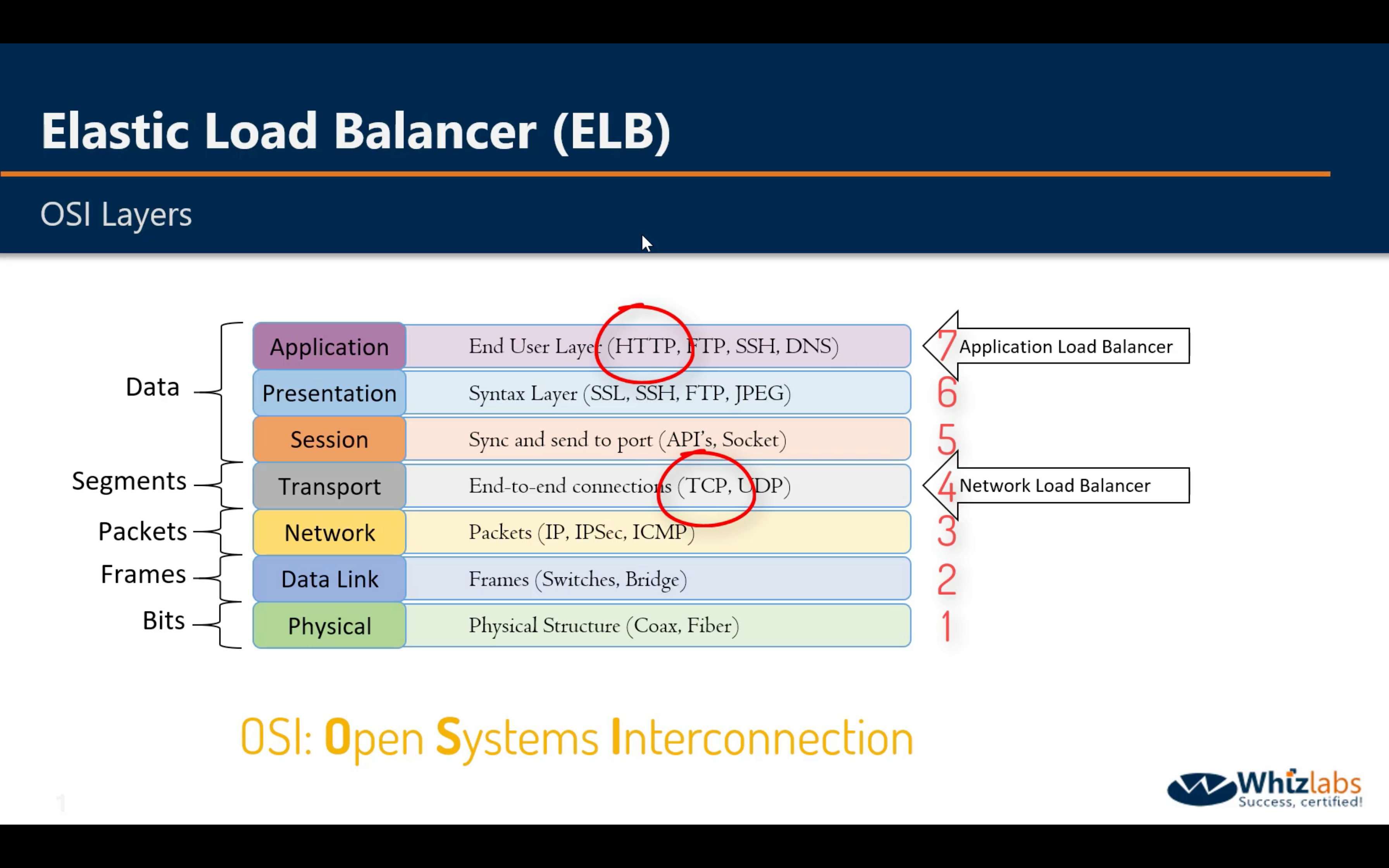

Elastic Load Balancing (ELB)

- Application Load Balancer

- Network Load Balancer

- Classic Load Balancer

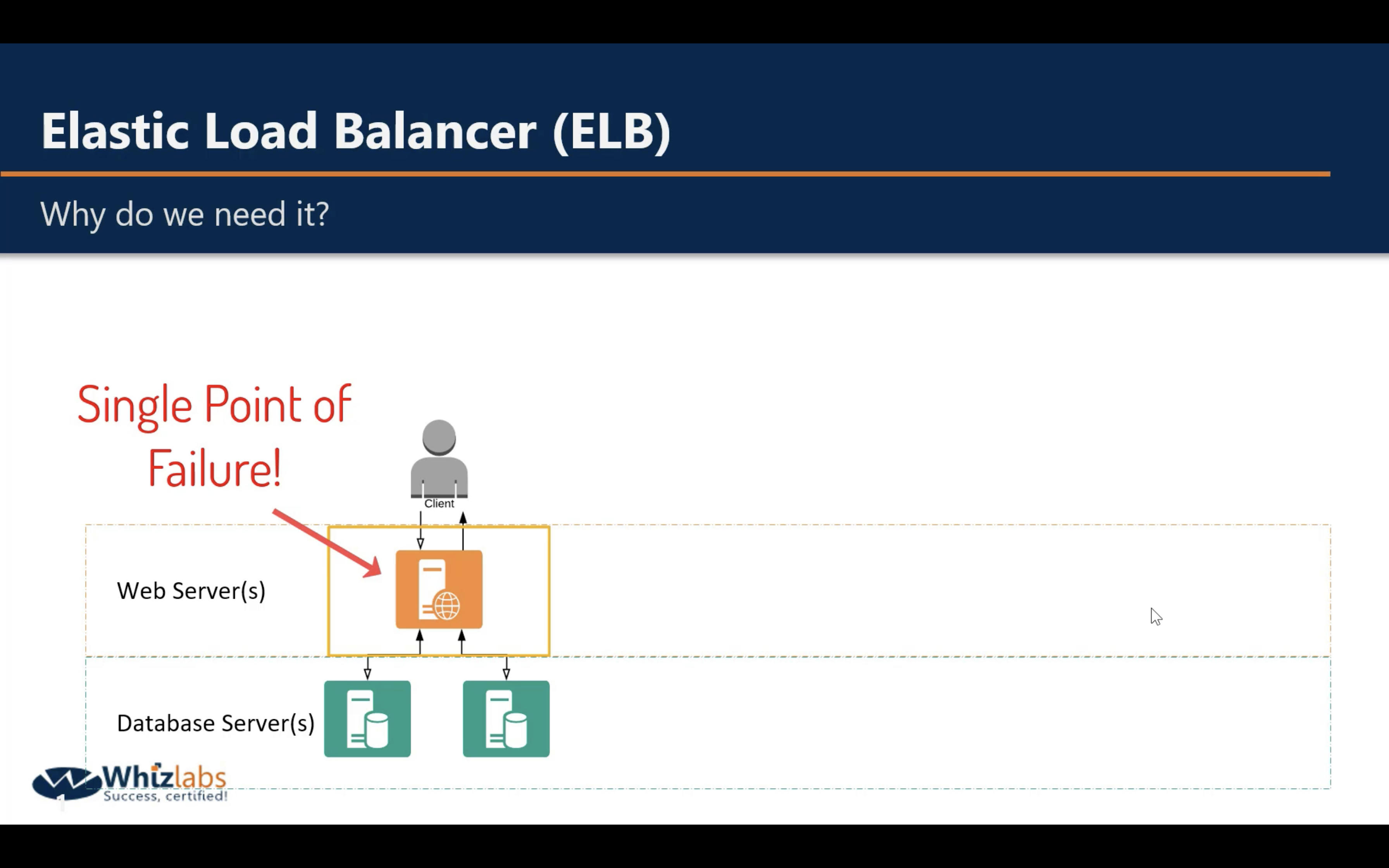

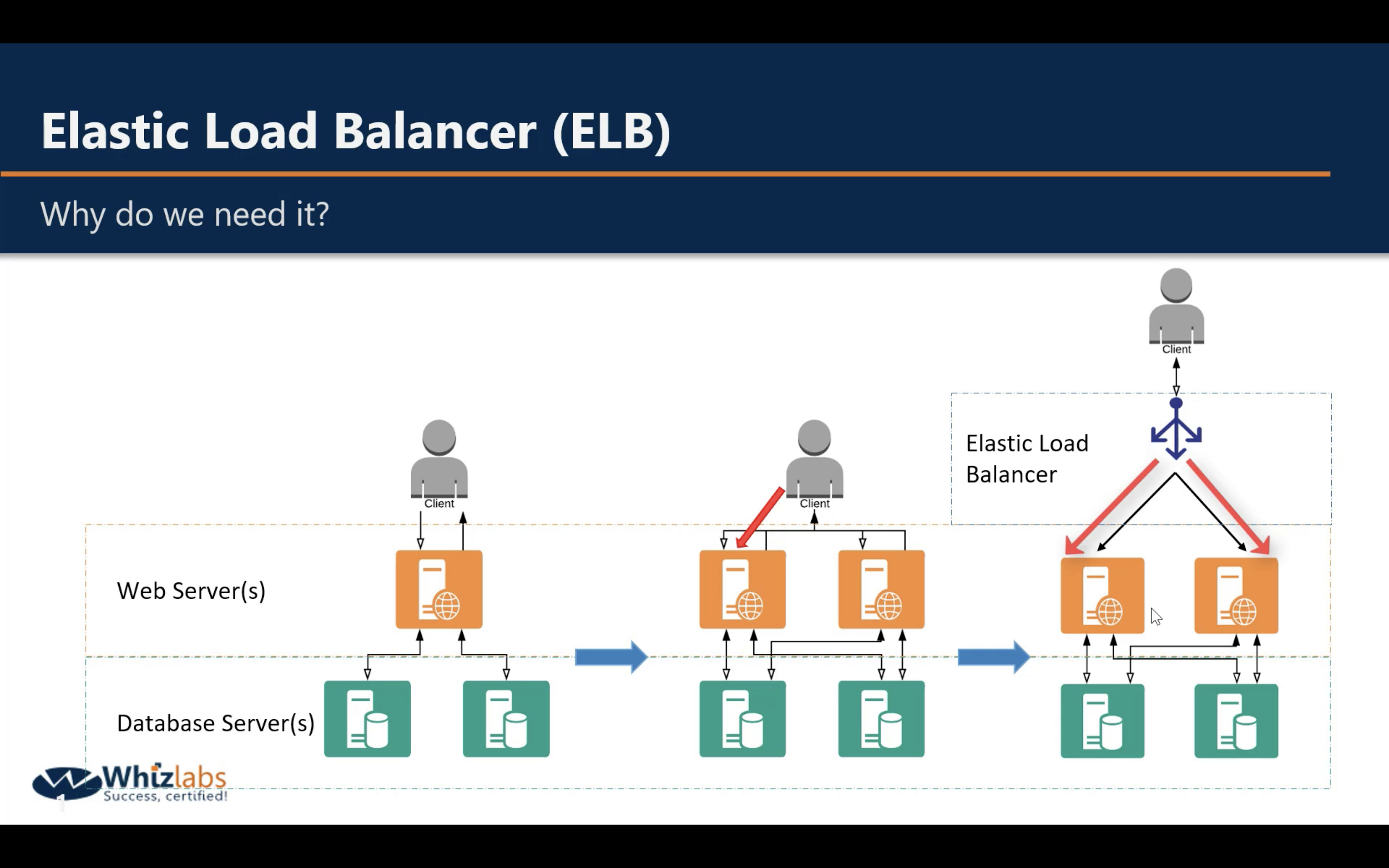

One server: single point of failure.

Two or more servers without a load balancer: one can be overloaded while another sits idle, so resources are wasted.

Load balancer:

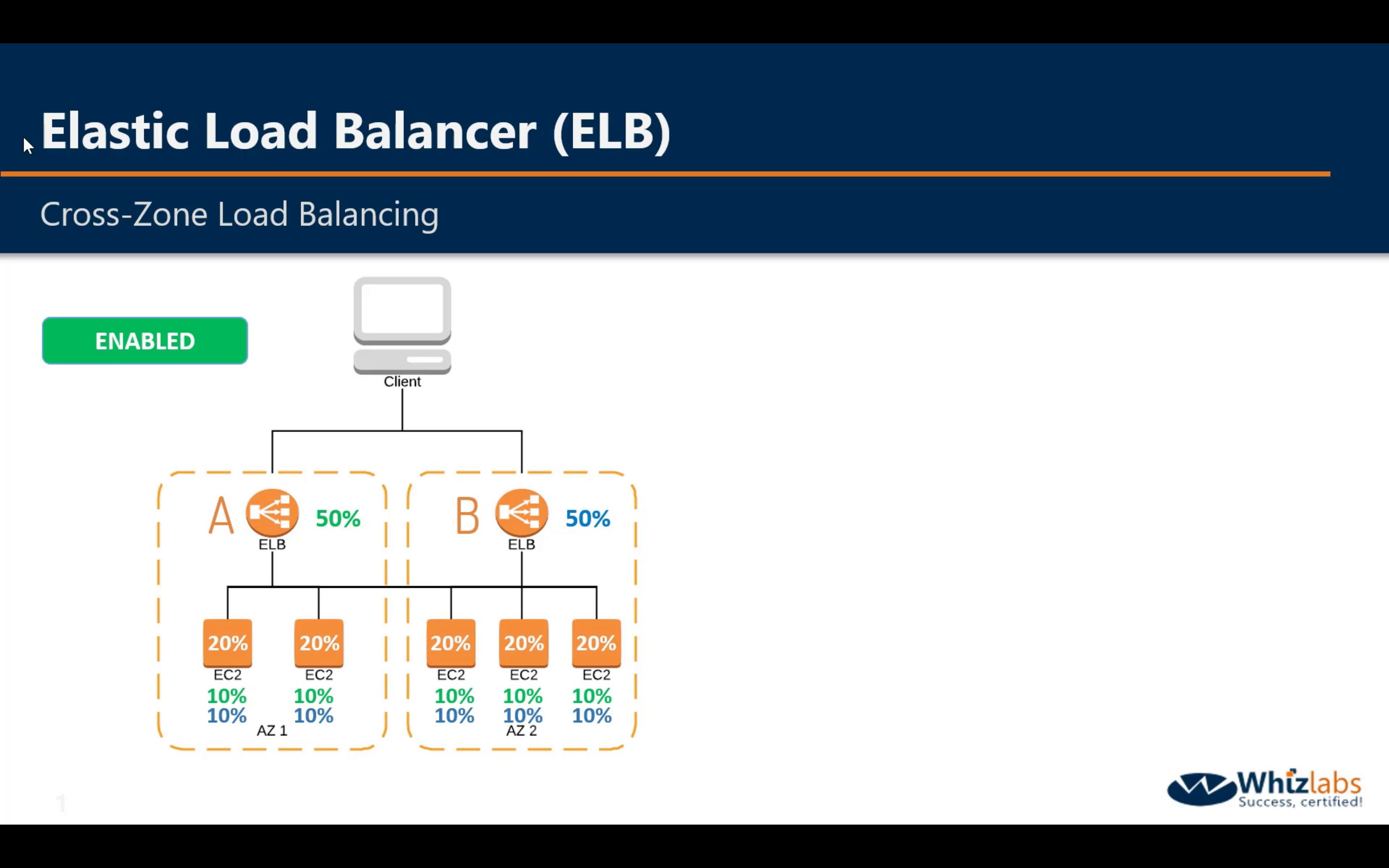

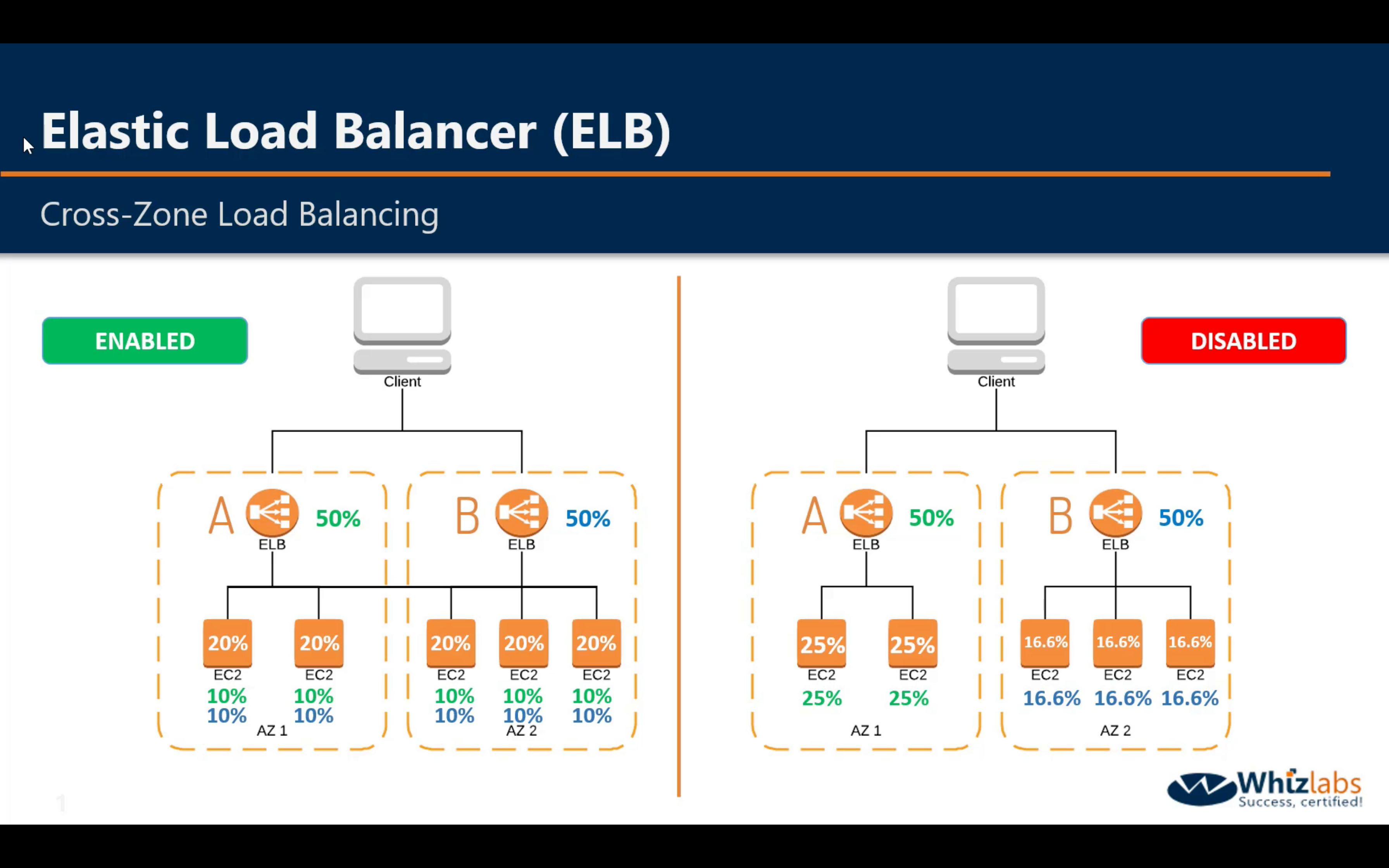

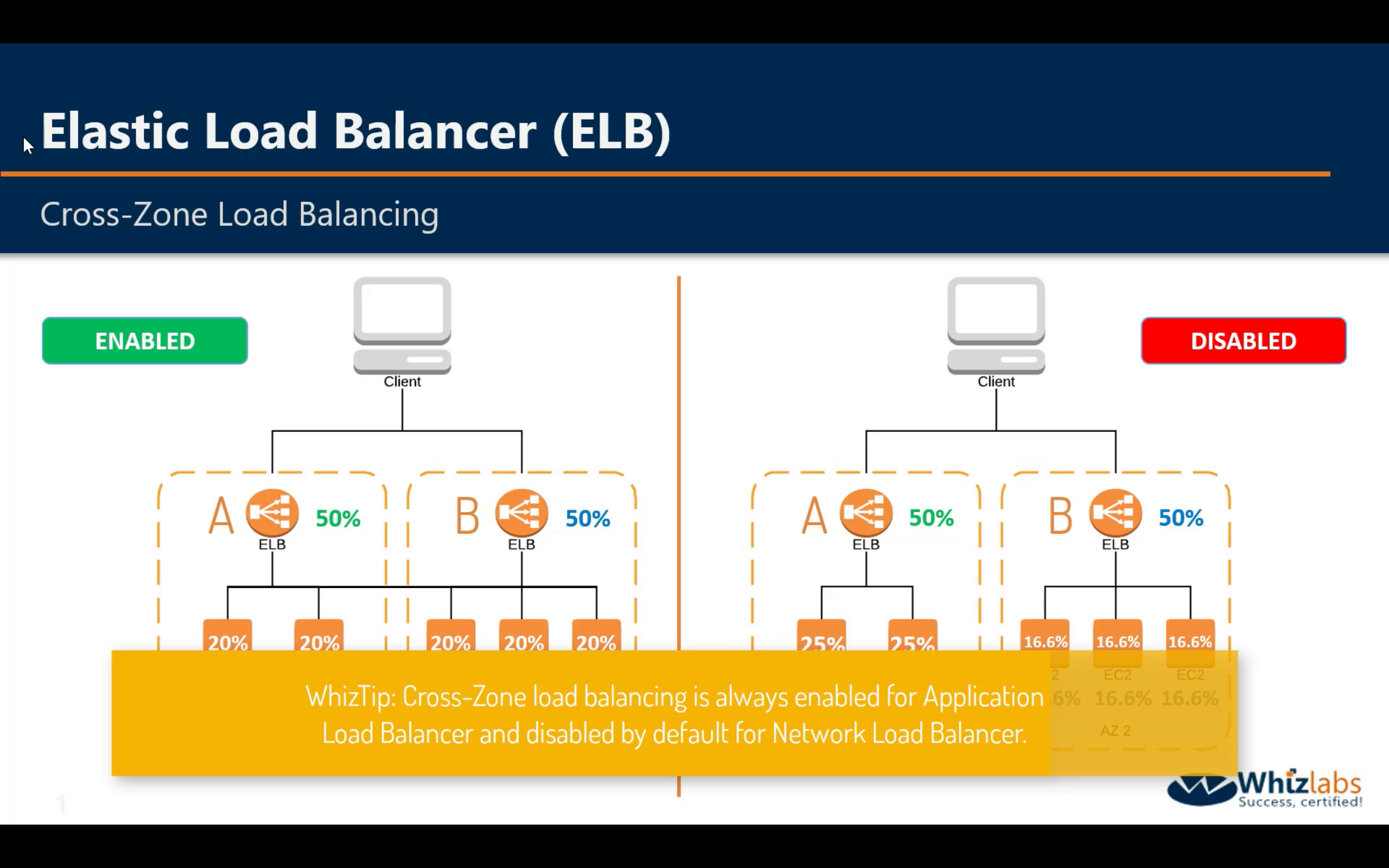

Cross-Zone Load Balancing - Enabled

Cross-Zone Load Balancing - Disabled

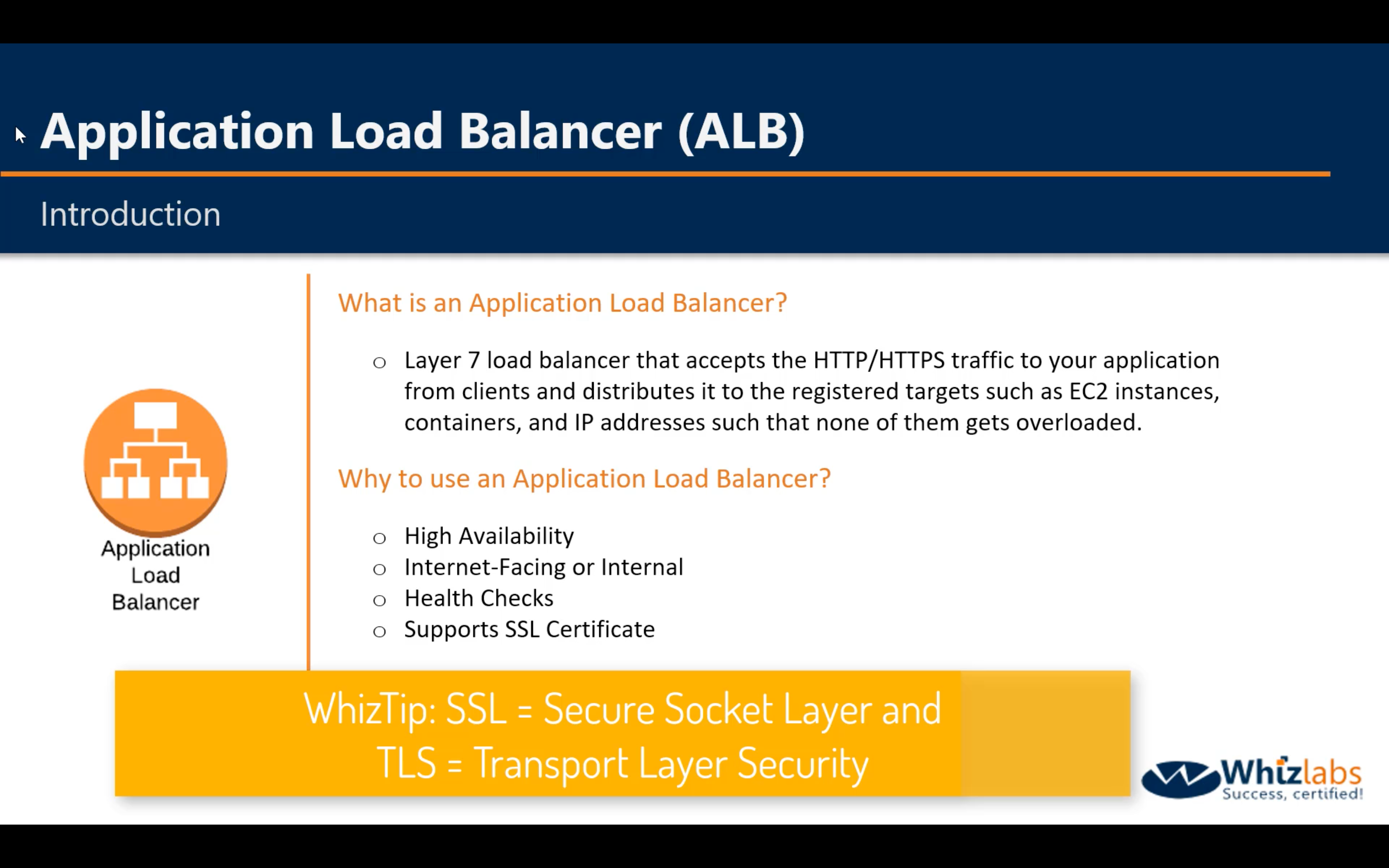

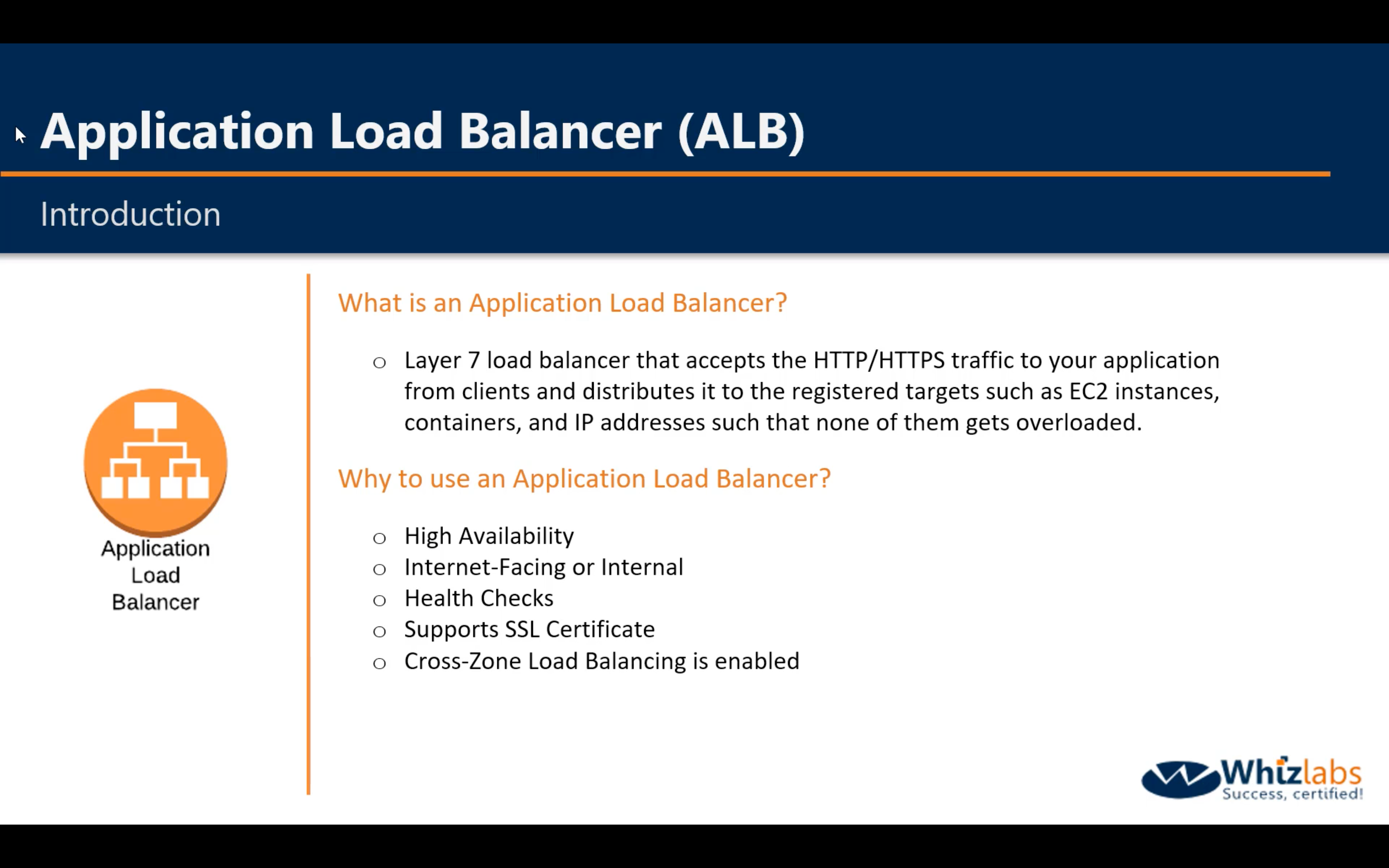

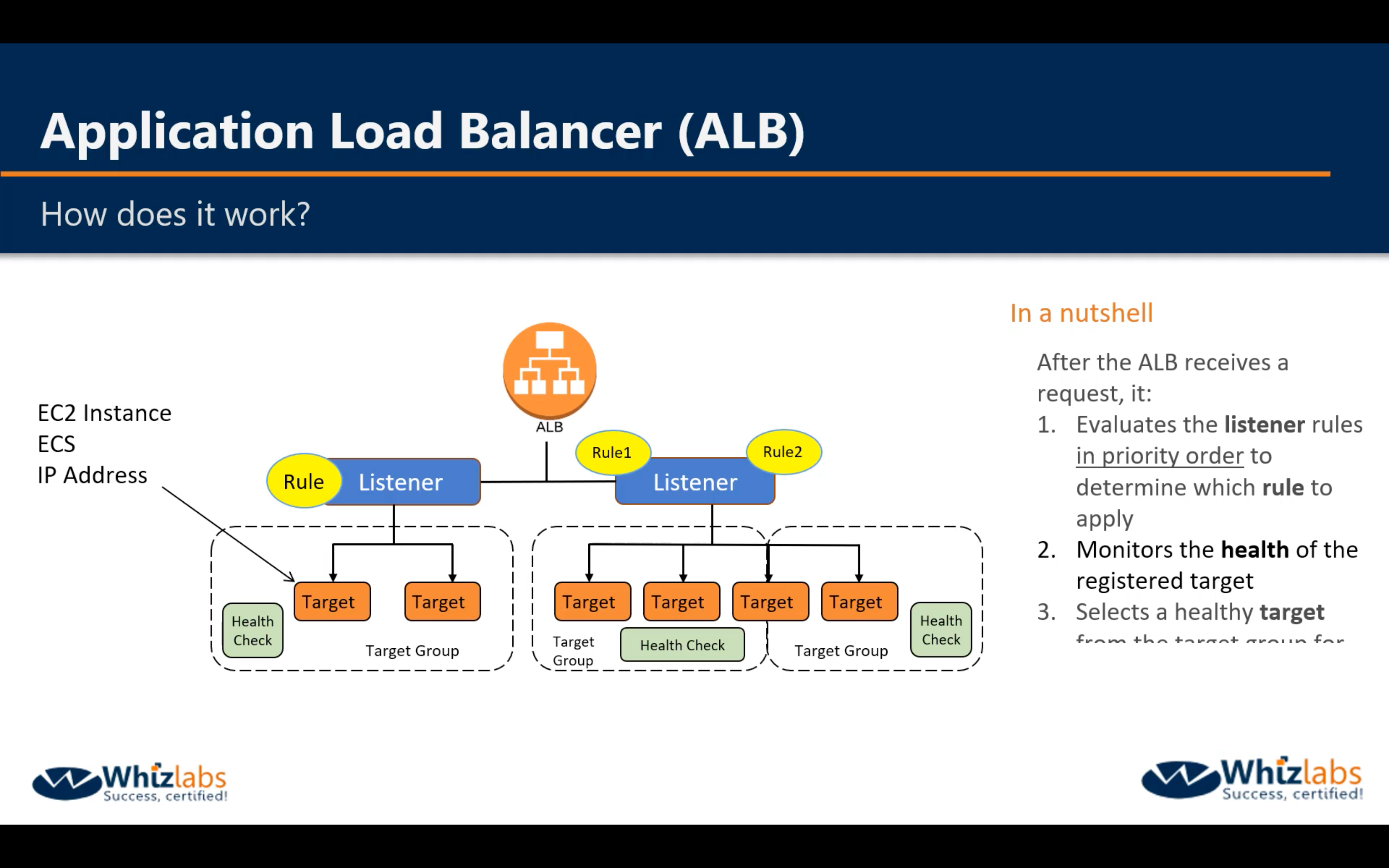

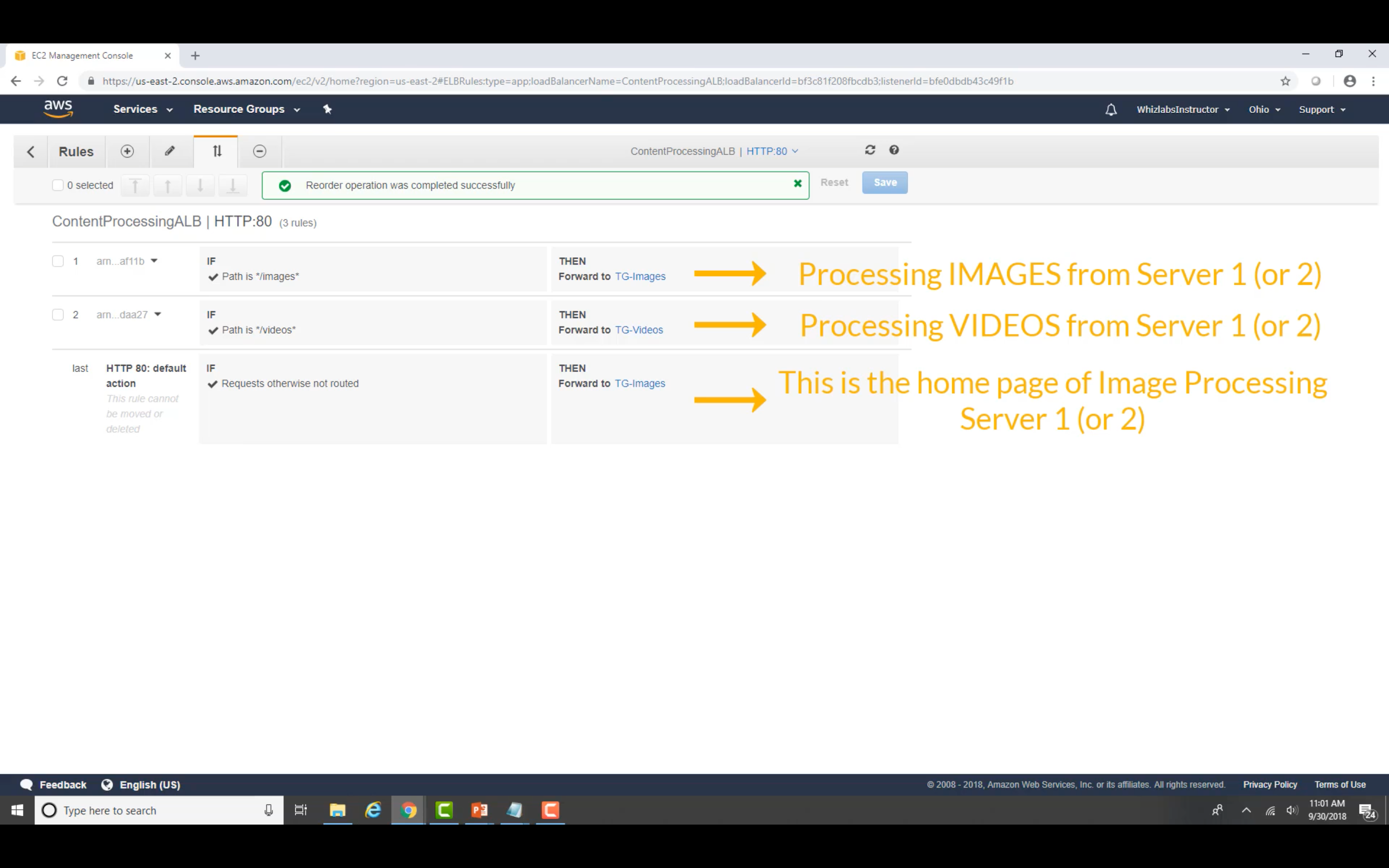

ALB

Components

Listener

Rules

Health Checks

Target and Target Groups

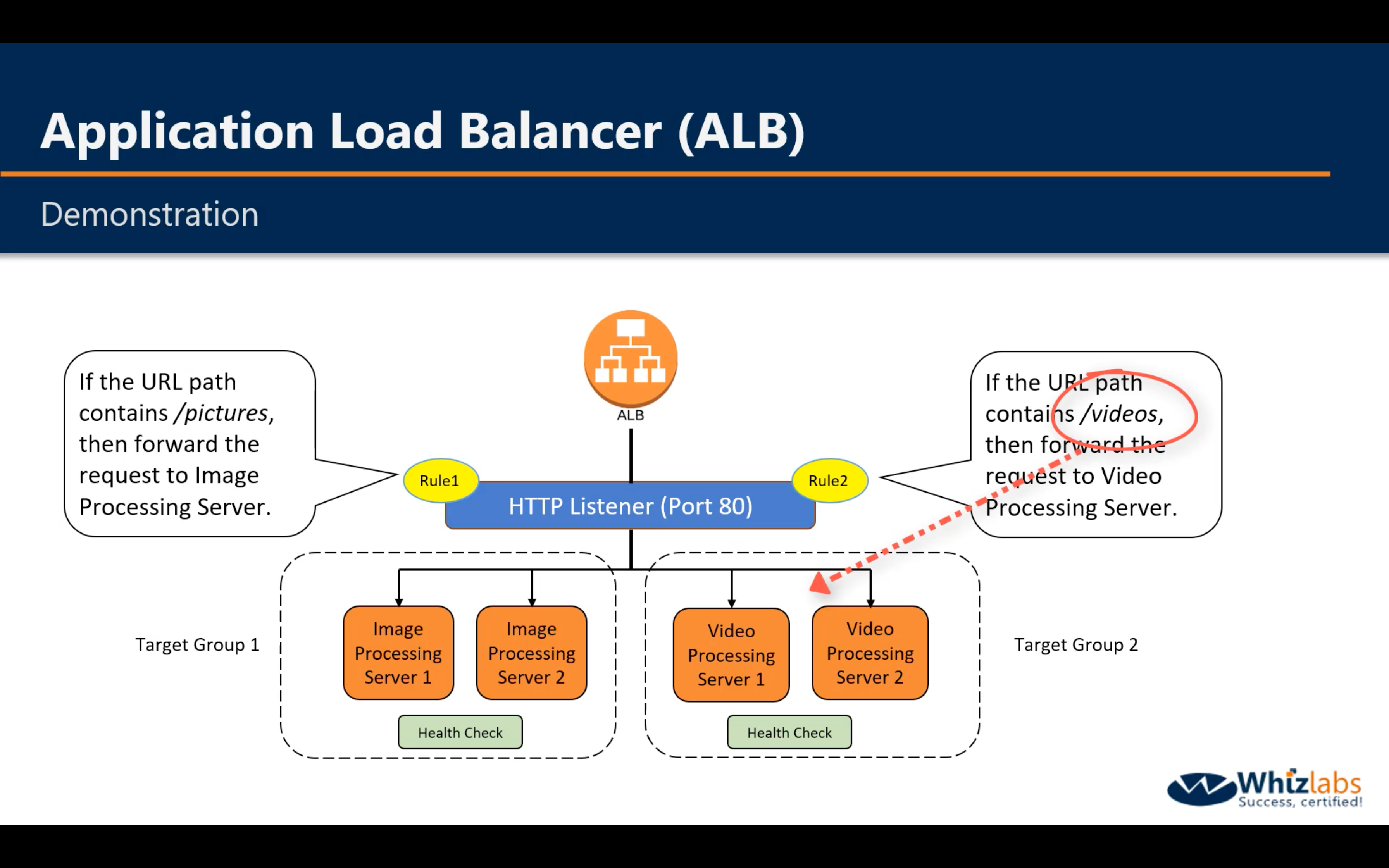

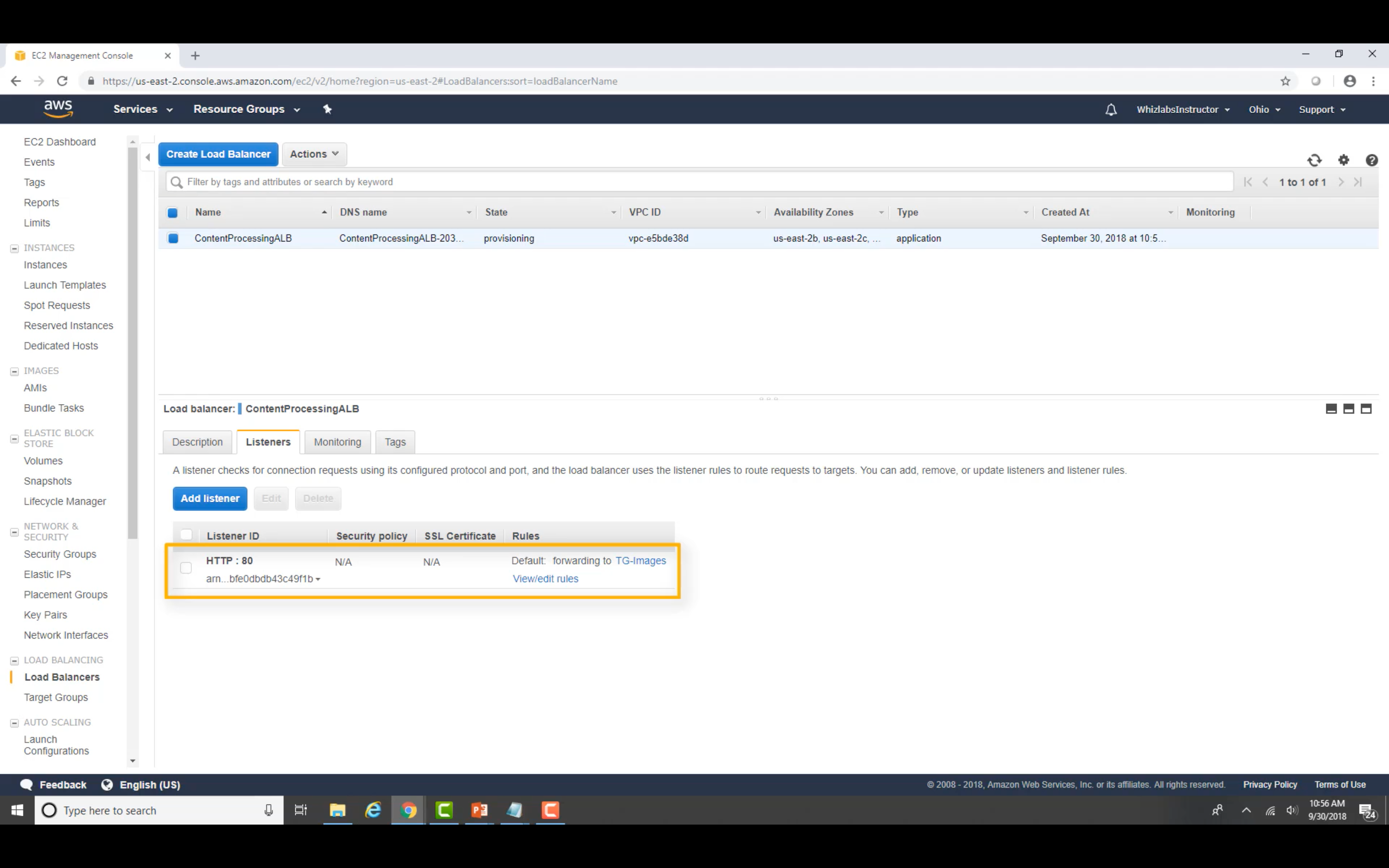

Demo

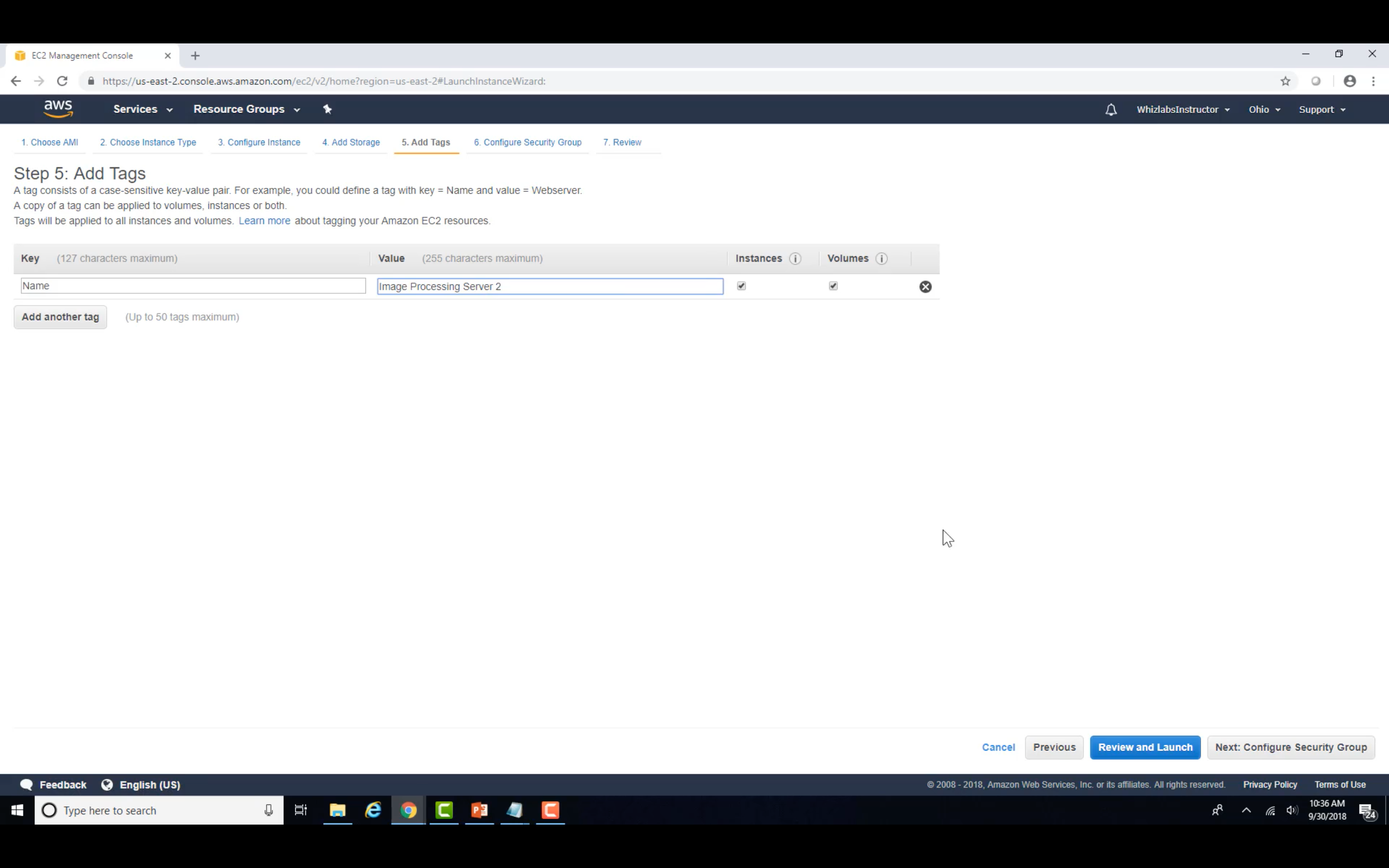

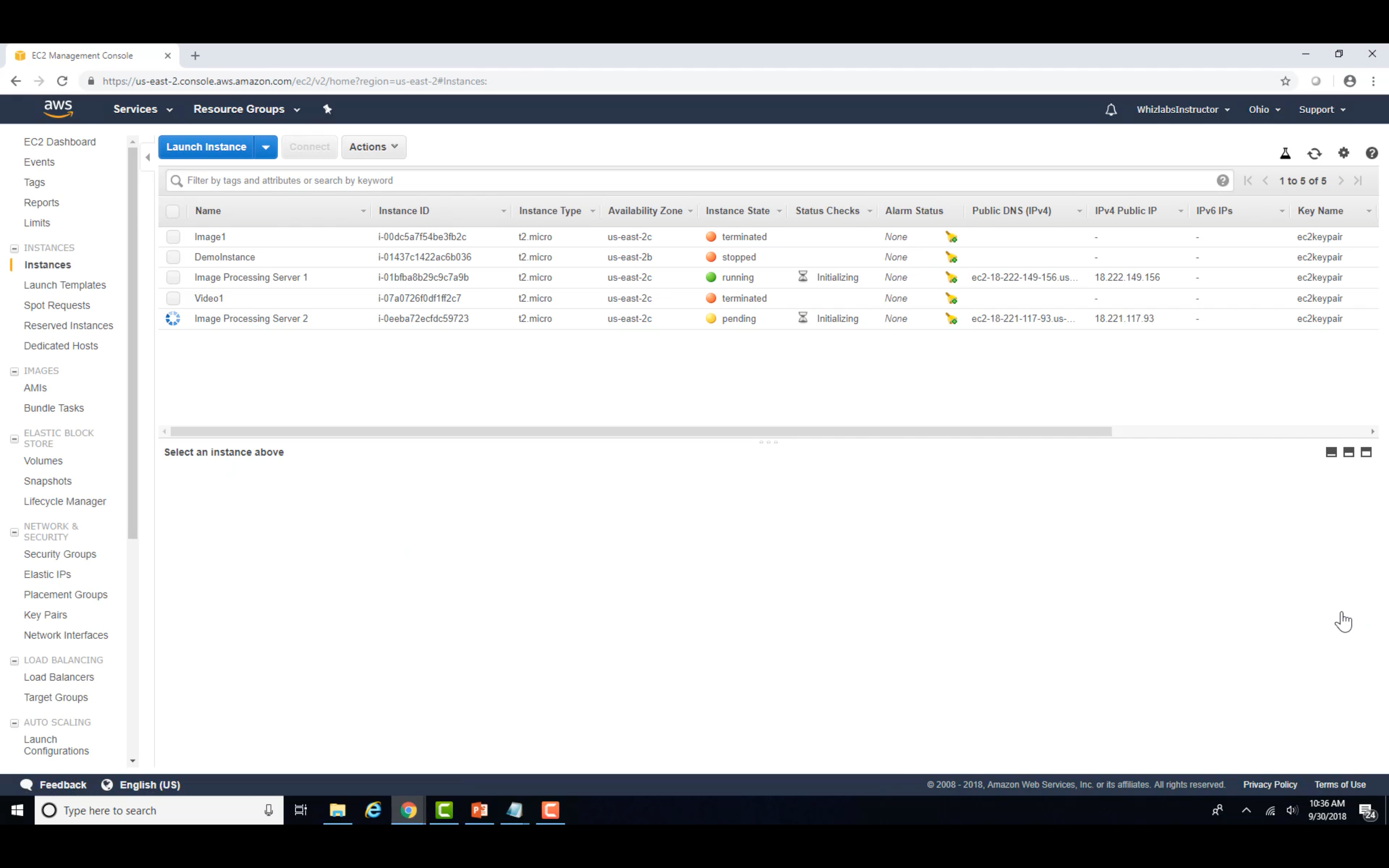

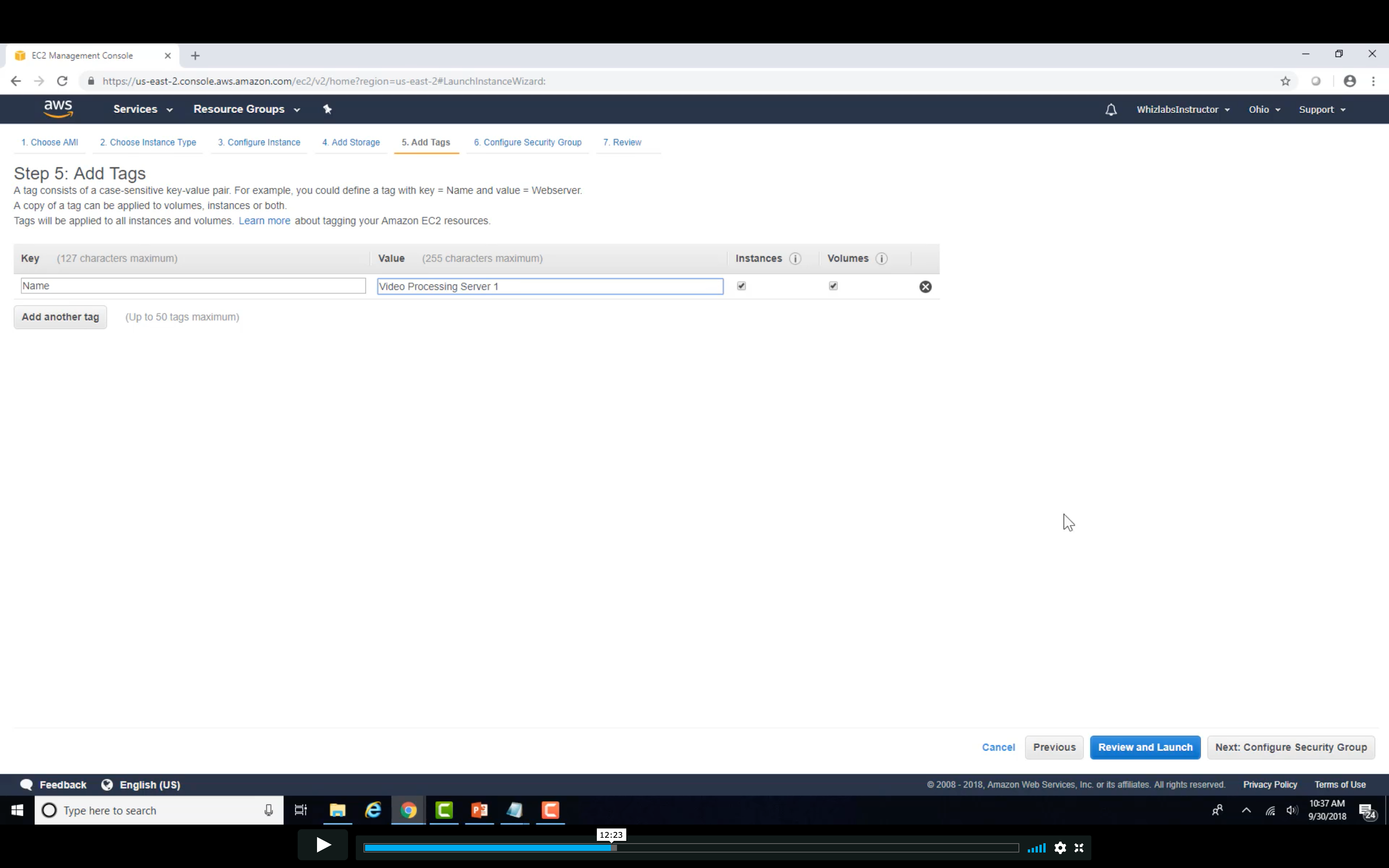

Image Server 1&2

Video Server 1&2

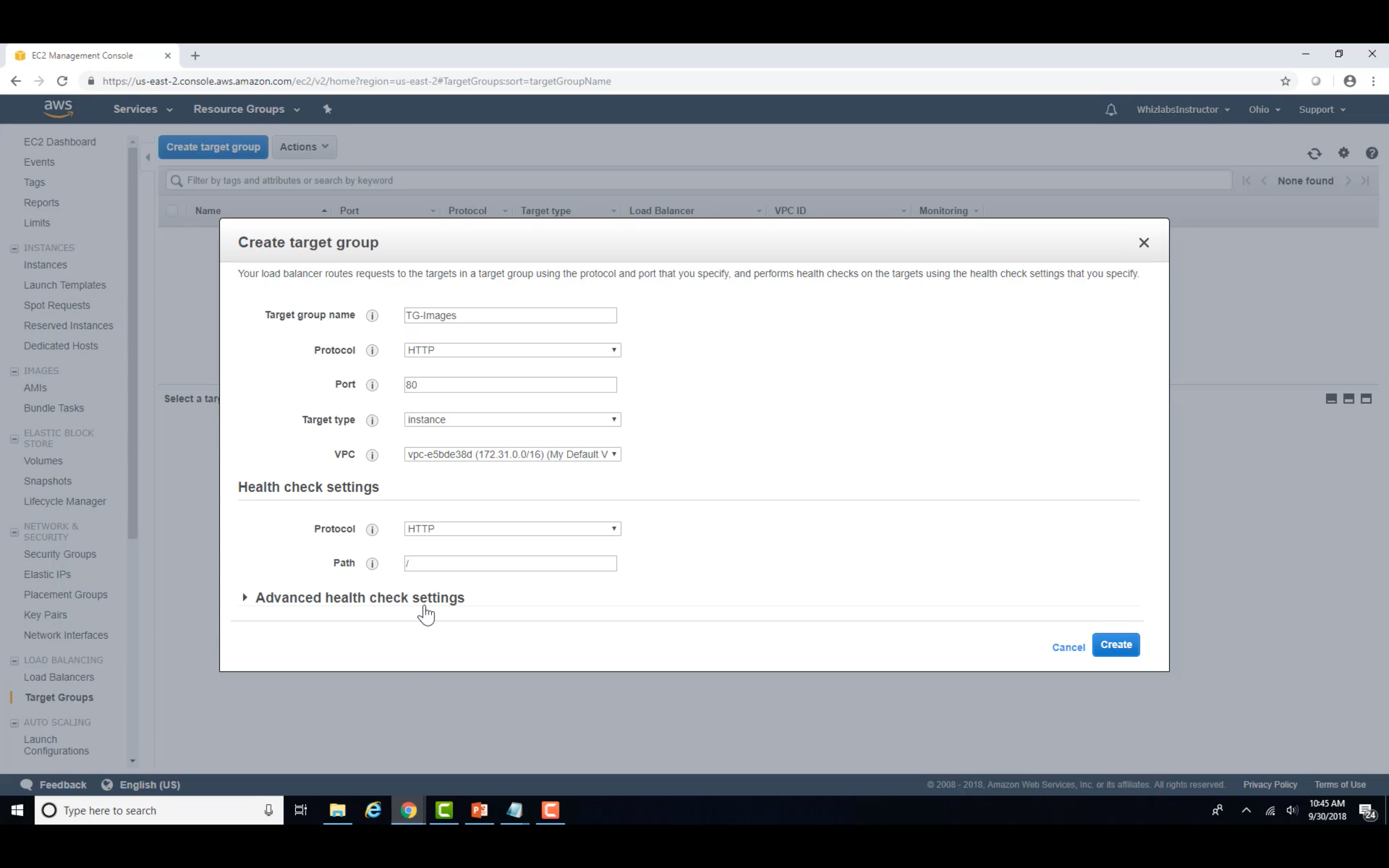

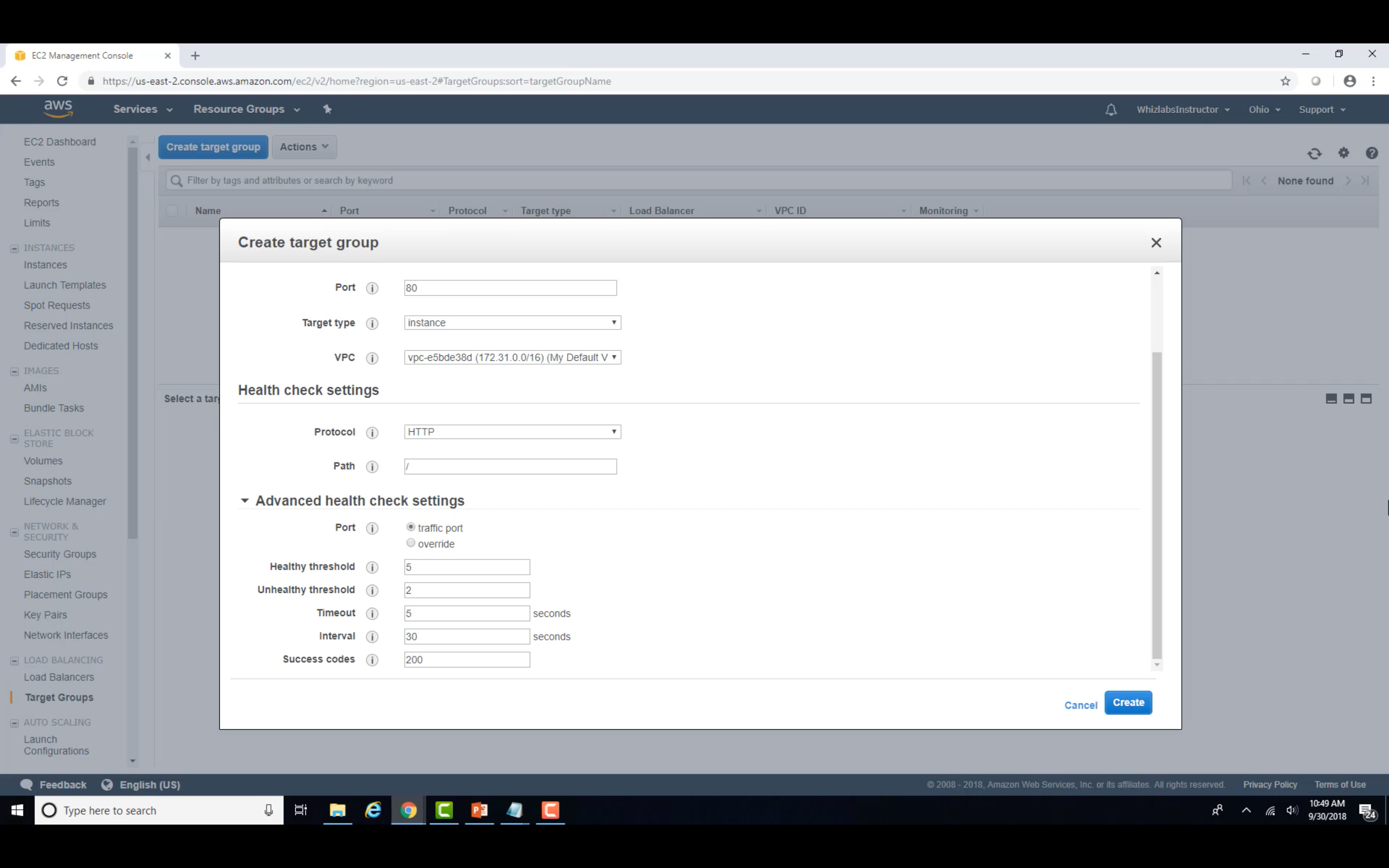

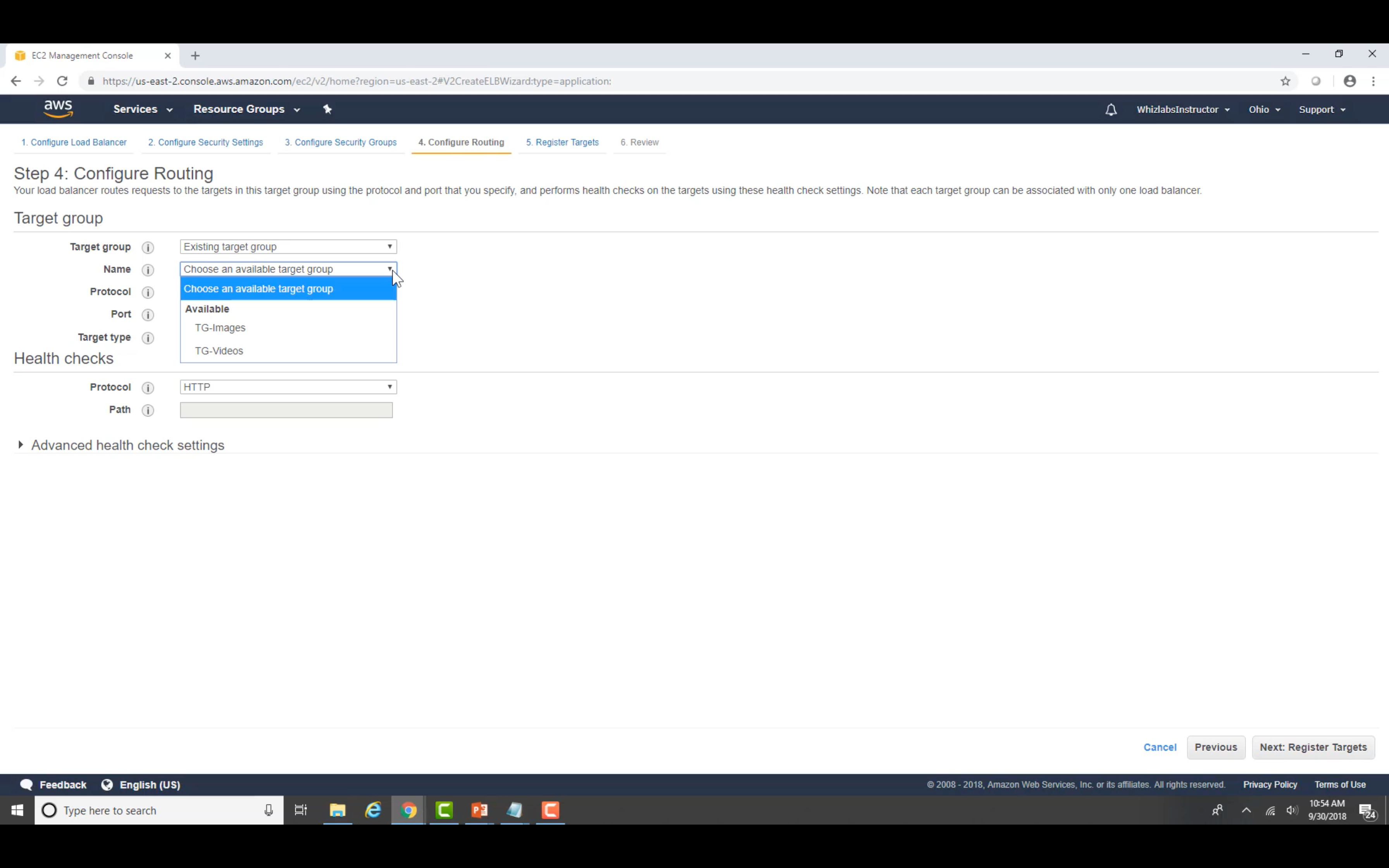

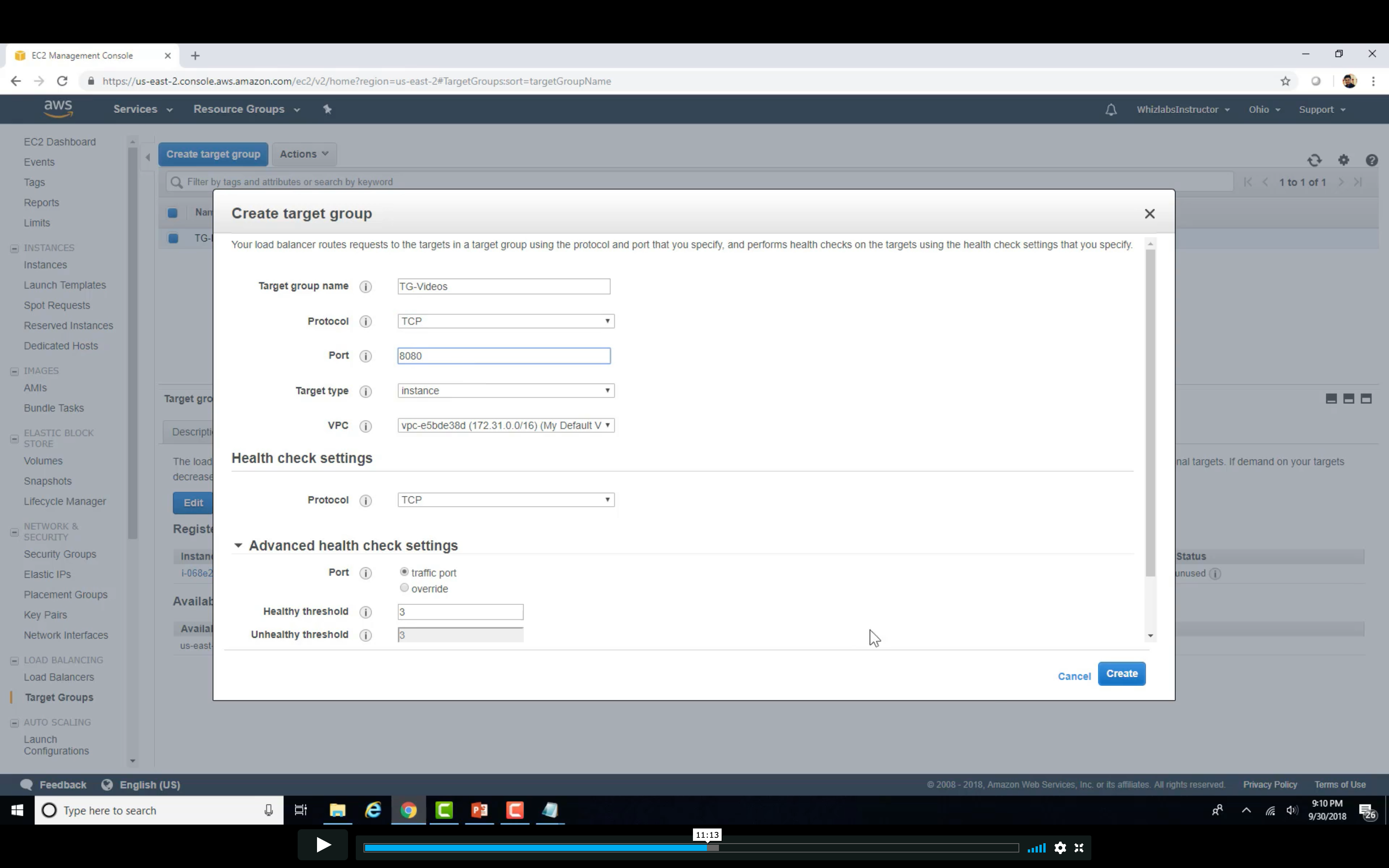

Target Group Setting

Create Image Target Group

Change the path if you need

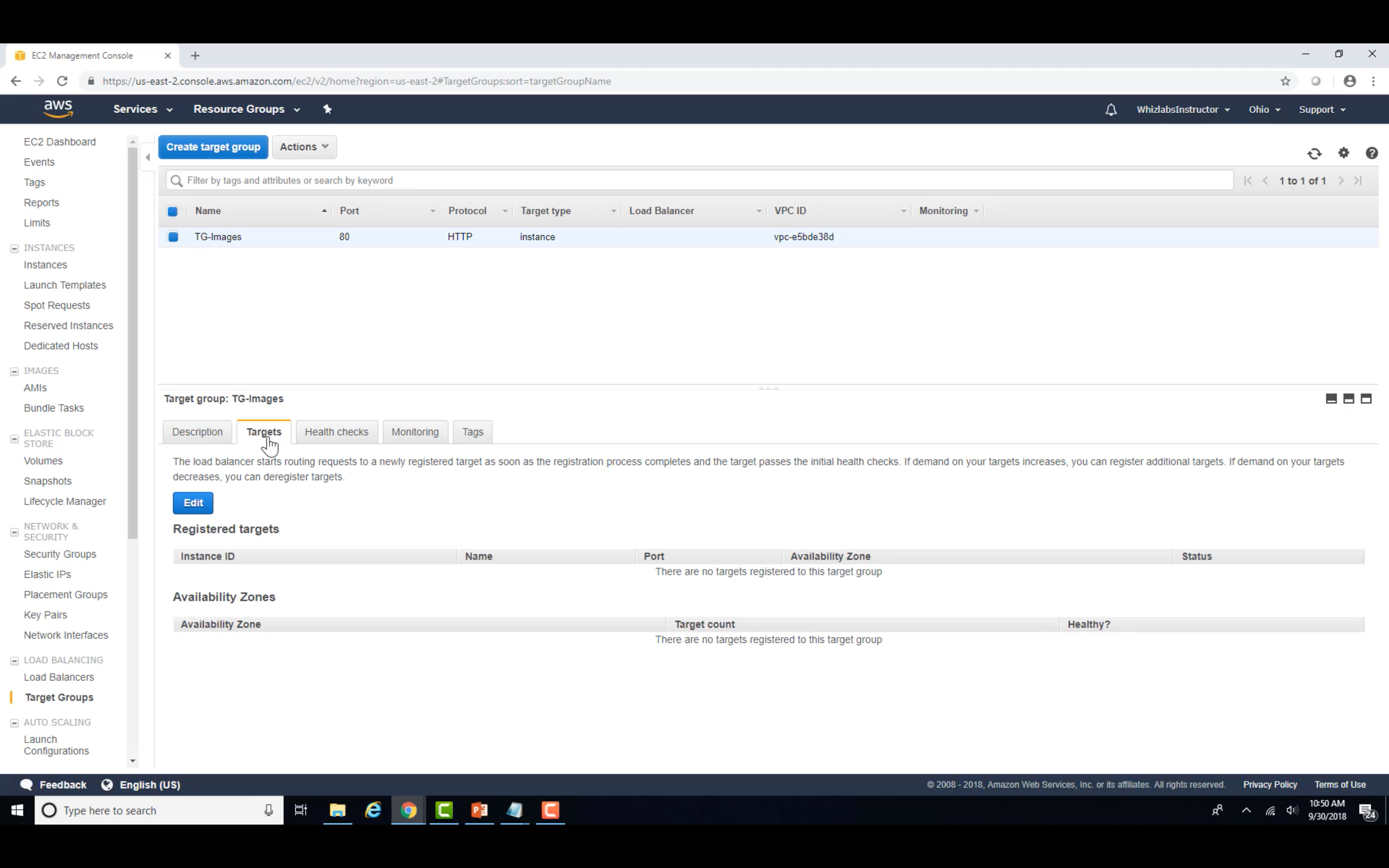

Register the targets (instances or IP range)

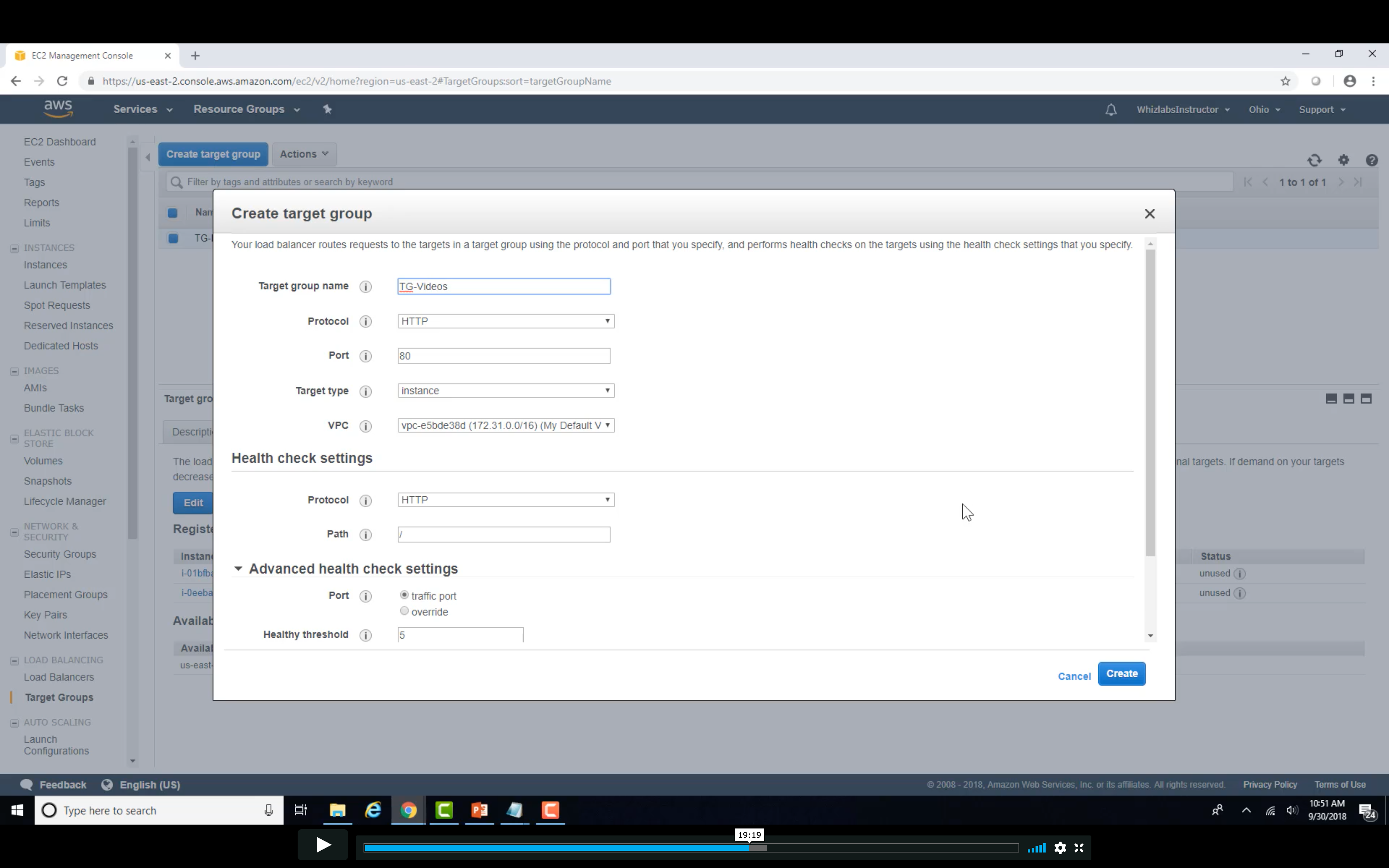

Create Video Target Group

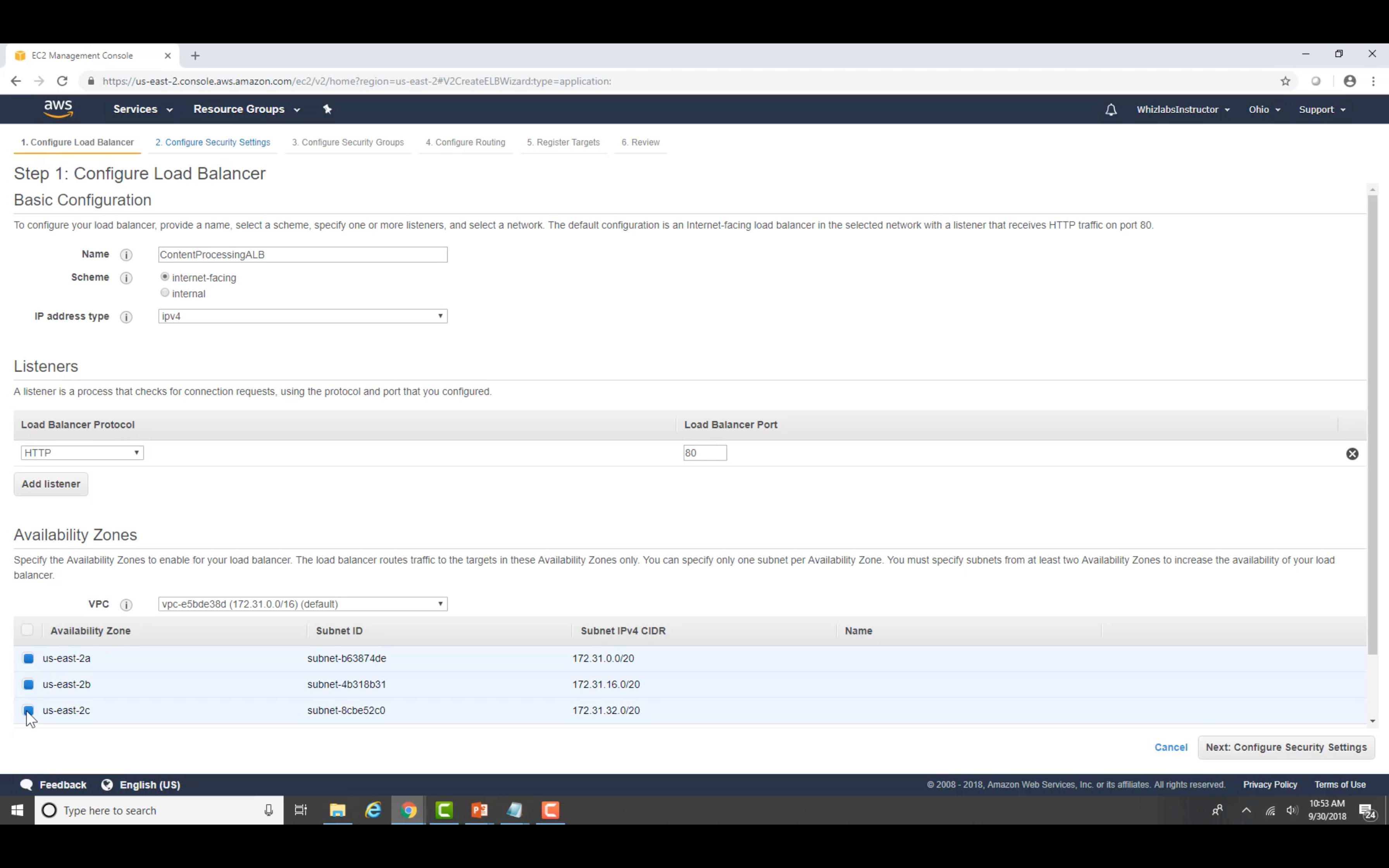

Load Balancer Configuration

Select One is OK

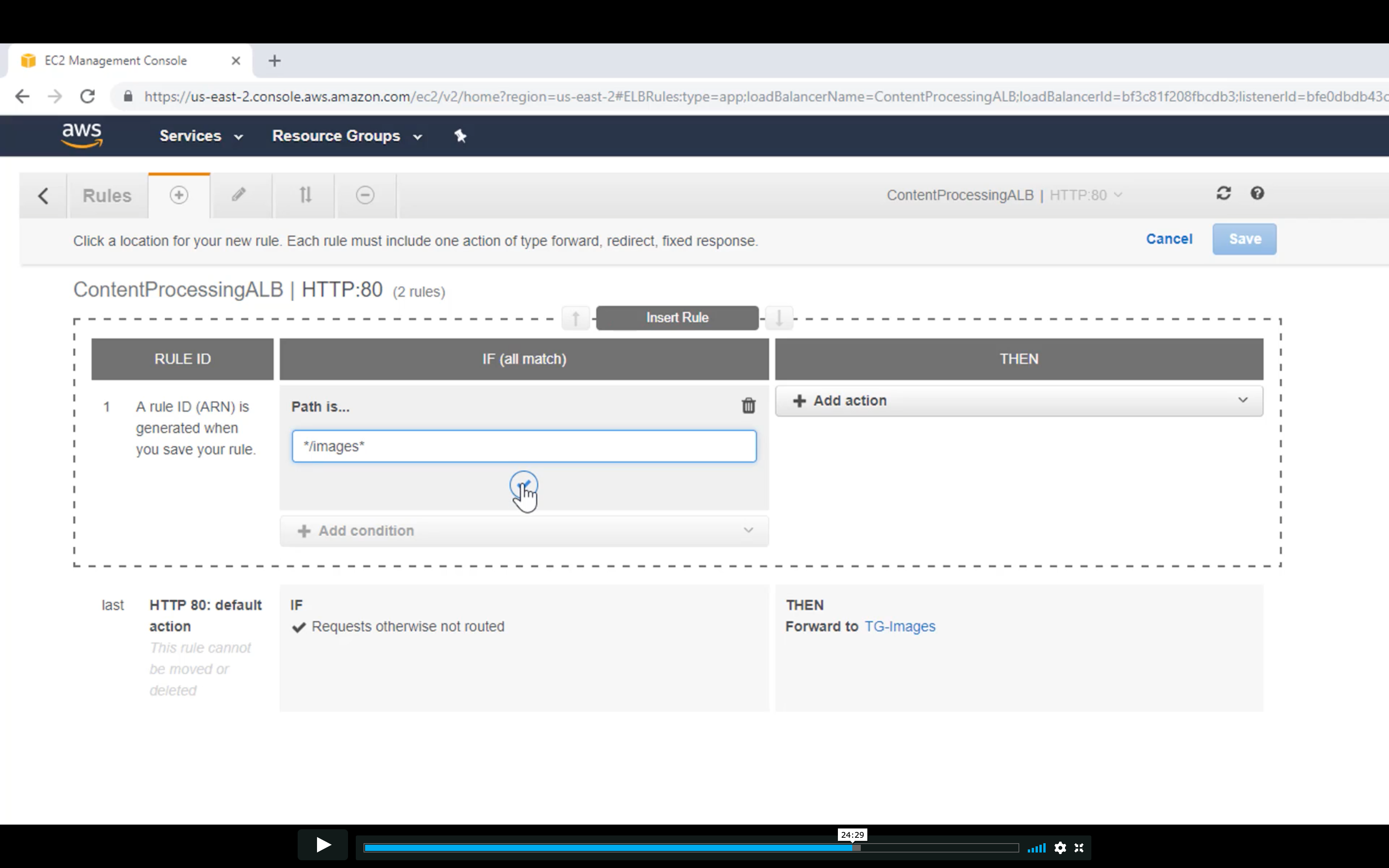

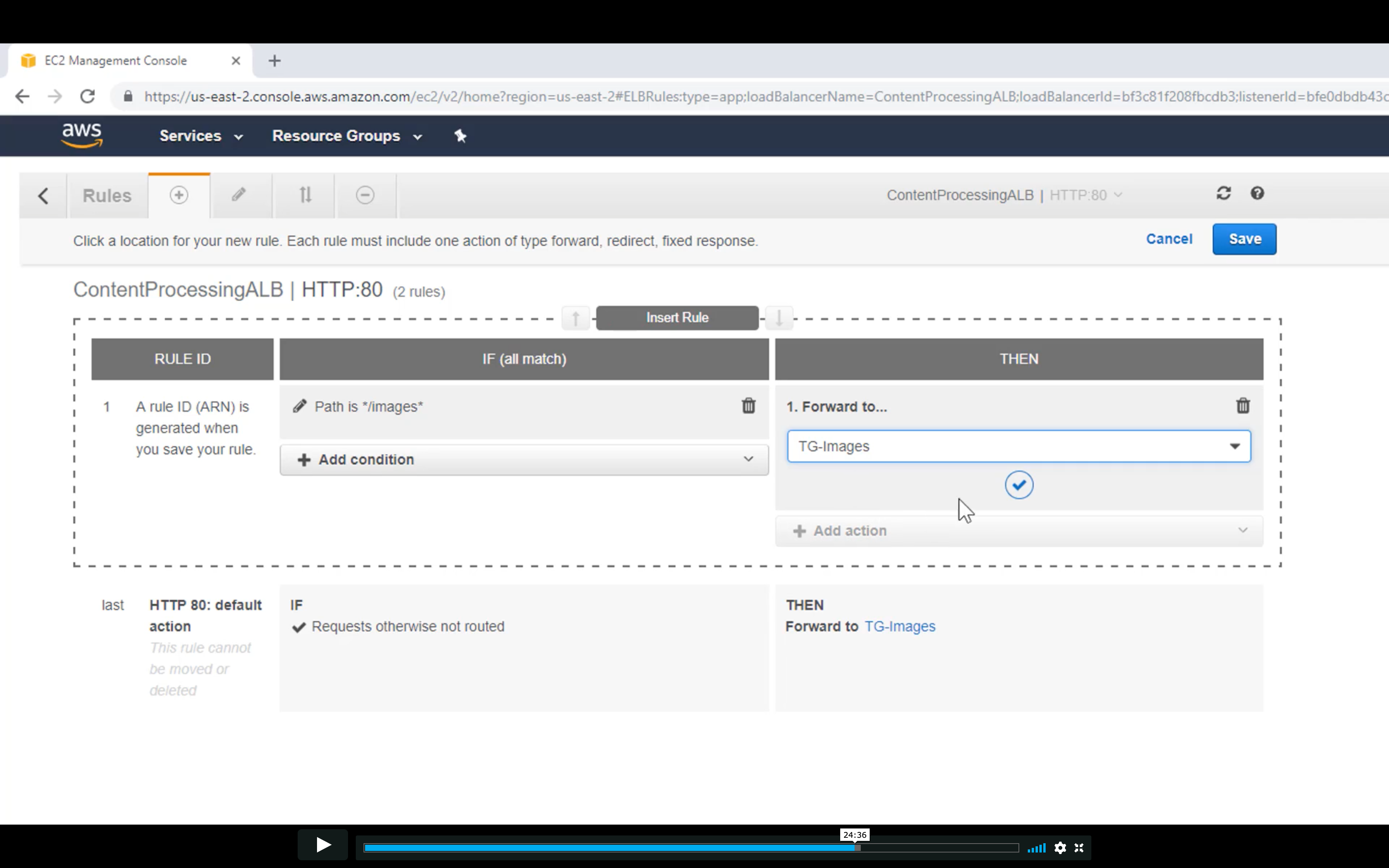

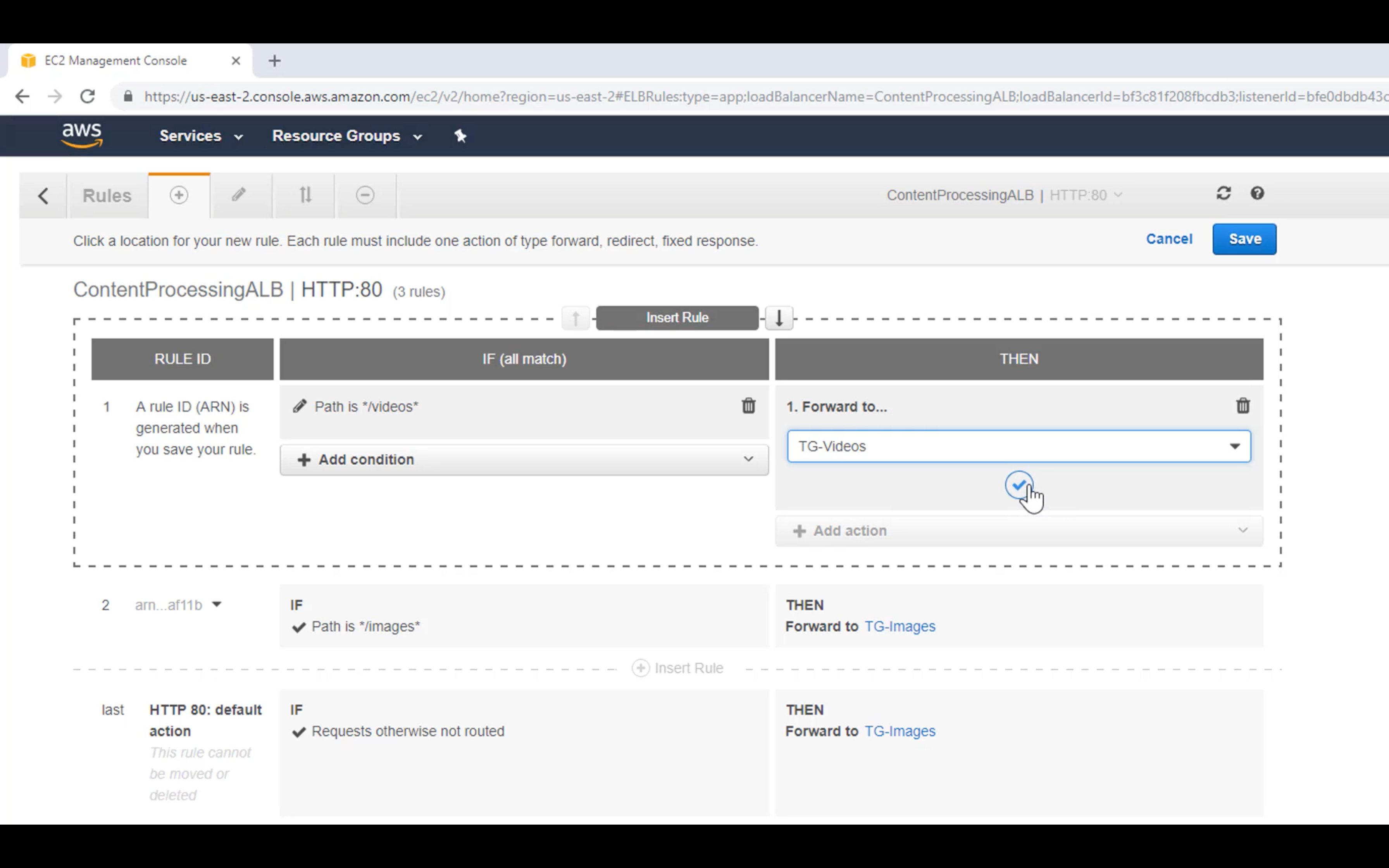

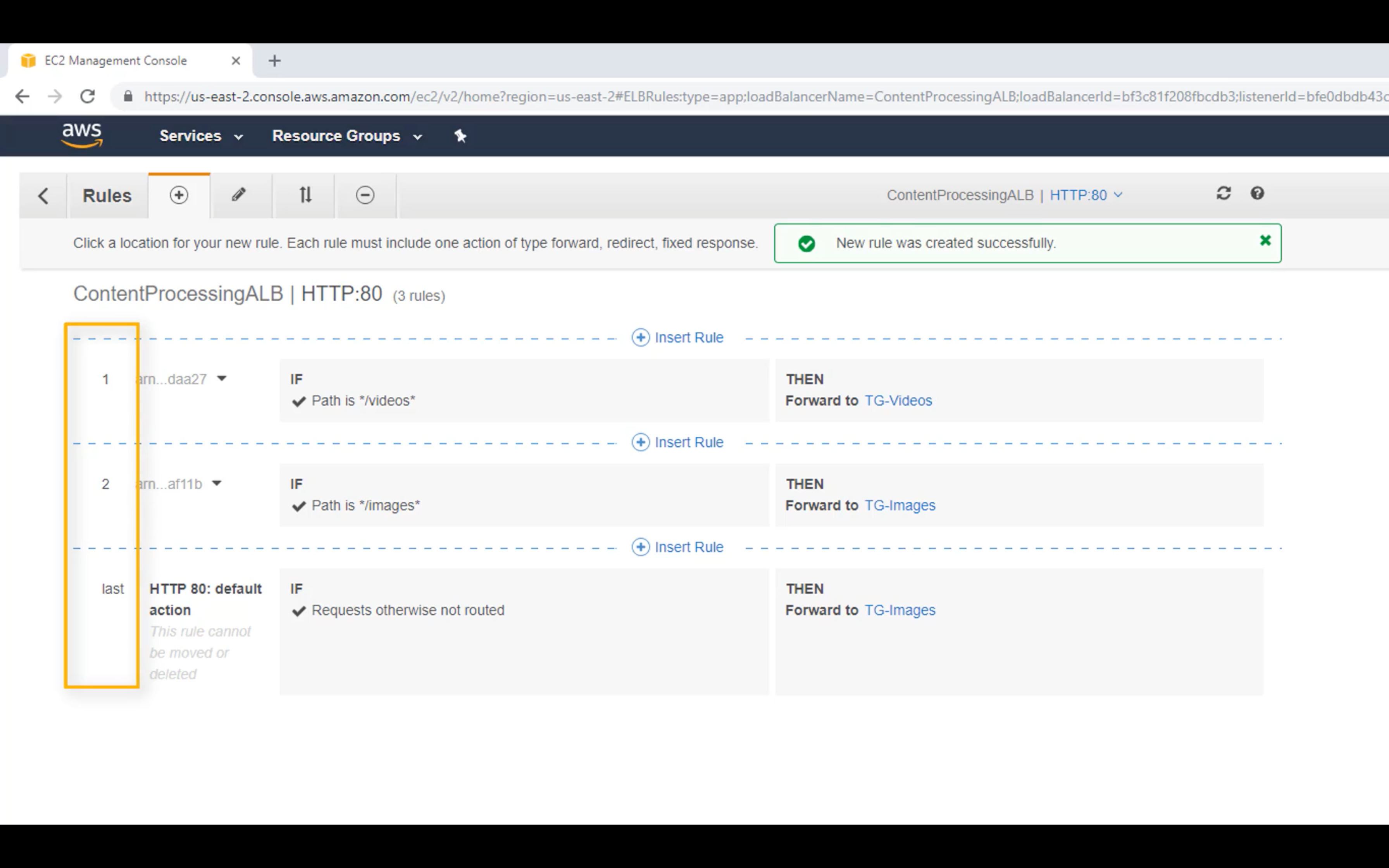

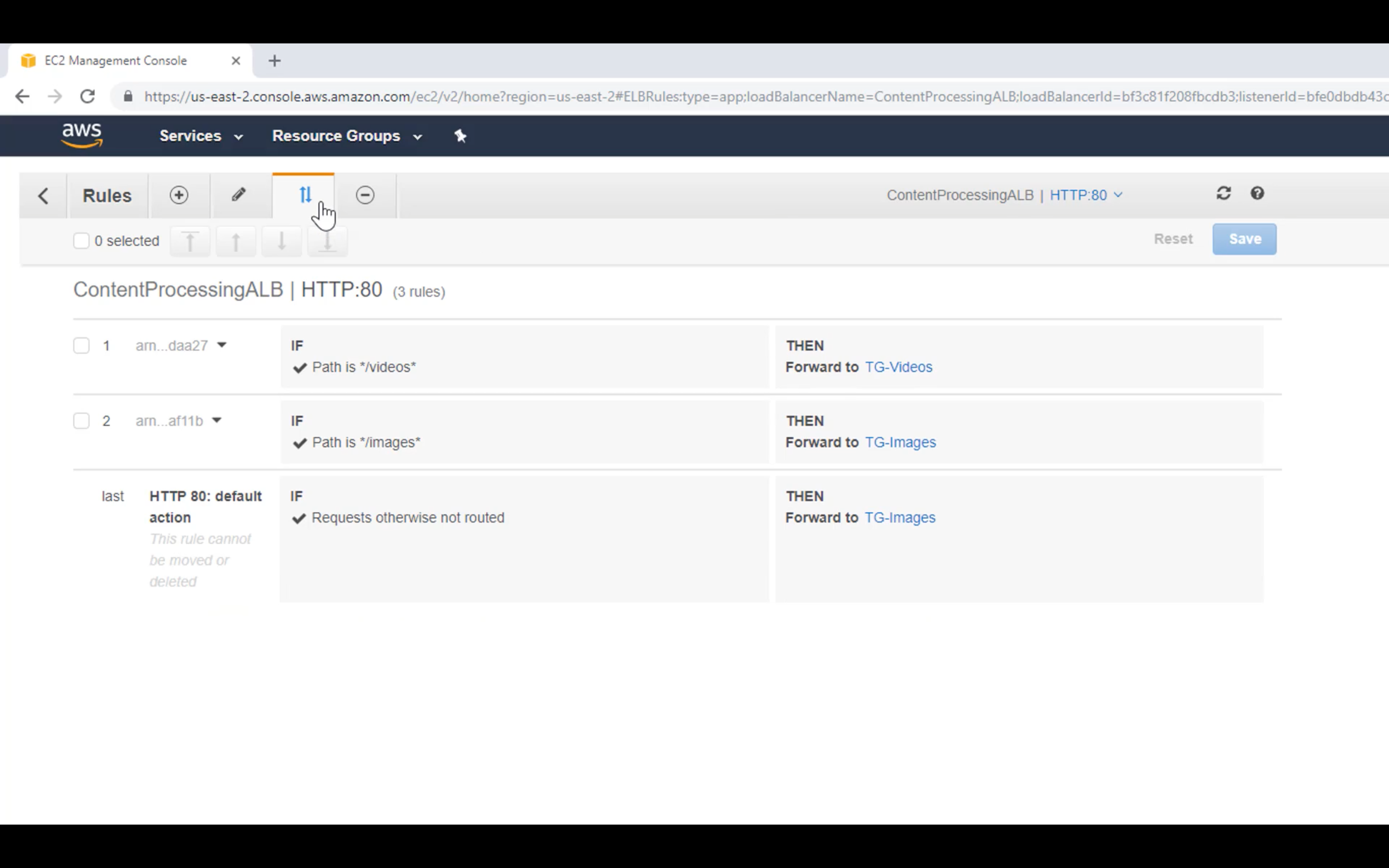

Edit Rules

Forward the request to Image Target Group if the request matched the path */image*

Change the order if you need
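Why the rule order matters can be sketched with a small matcher: rules are checked in priority order and the first matching pattern wins. This is an illustration only. The video rule, the target group names, and the `route` function are hypothetical, and Python's `fnmatchcase` is used as a stand-in for the listener's wildcard matching (it also accepts `[...]` sets, which is an extra not assumed of the load balancer).

```python
from fnmatch import fnmatchcase

# Hypothetical listener rules: (priority, path pattern, target group).
RULES = [
    (1, "*/image*", "image-target-group"),
    (2, "*/video*", "video-target-group"),
]
DEFAULT_TARGET = "default-target-group"


def route(path, rules=RULES, default=DEFAULT_TARGET):
    """Return the target group of the first rule, by priority, whose pattern matches."""
    for _priority, pattern, target in sorted(rules):
        if fnmatchcase(path, pattern):
            return target
    return default  # no rule matched: fall through to the default action


print(route("/image/cat.png"))
print(route("/video/image.png"))  # matches both patterns; priority decides
```

A path such as `/video/image.png` matches both patterns, so swapping the two priorities changes which target group receives it.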

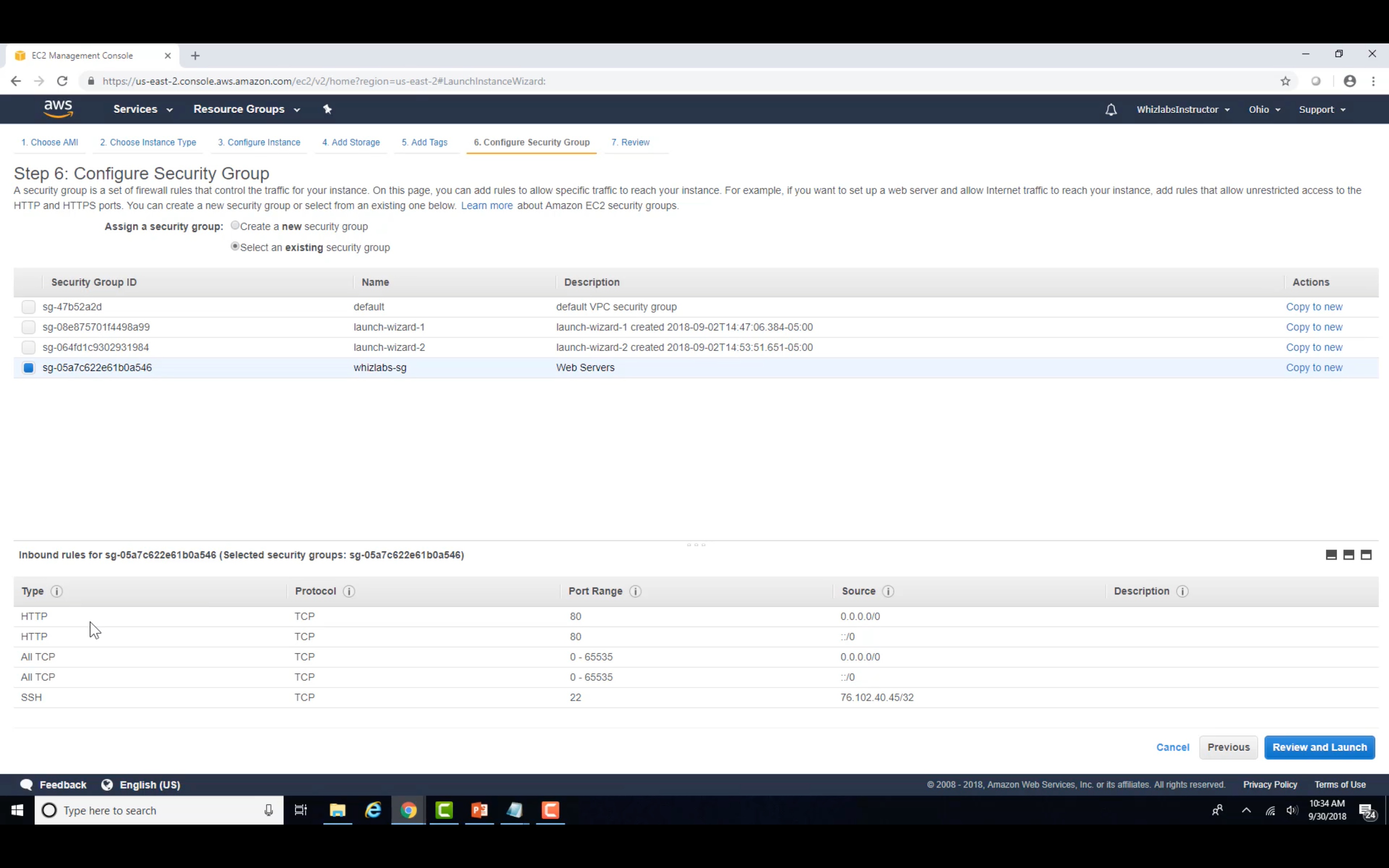

ALB Security Policy

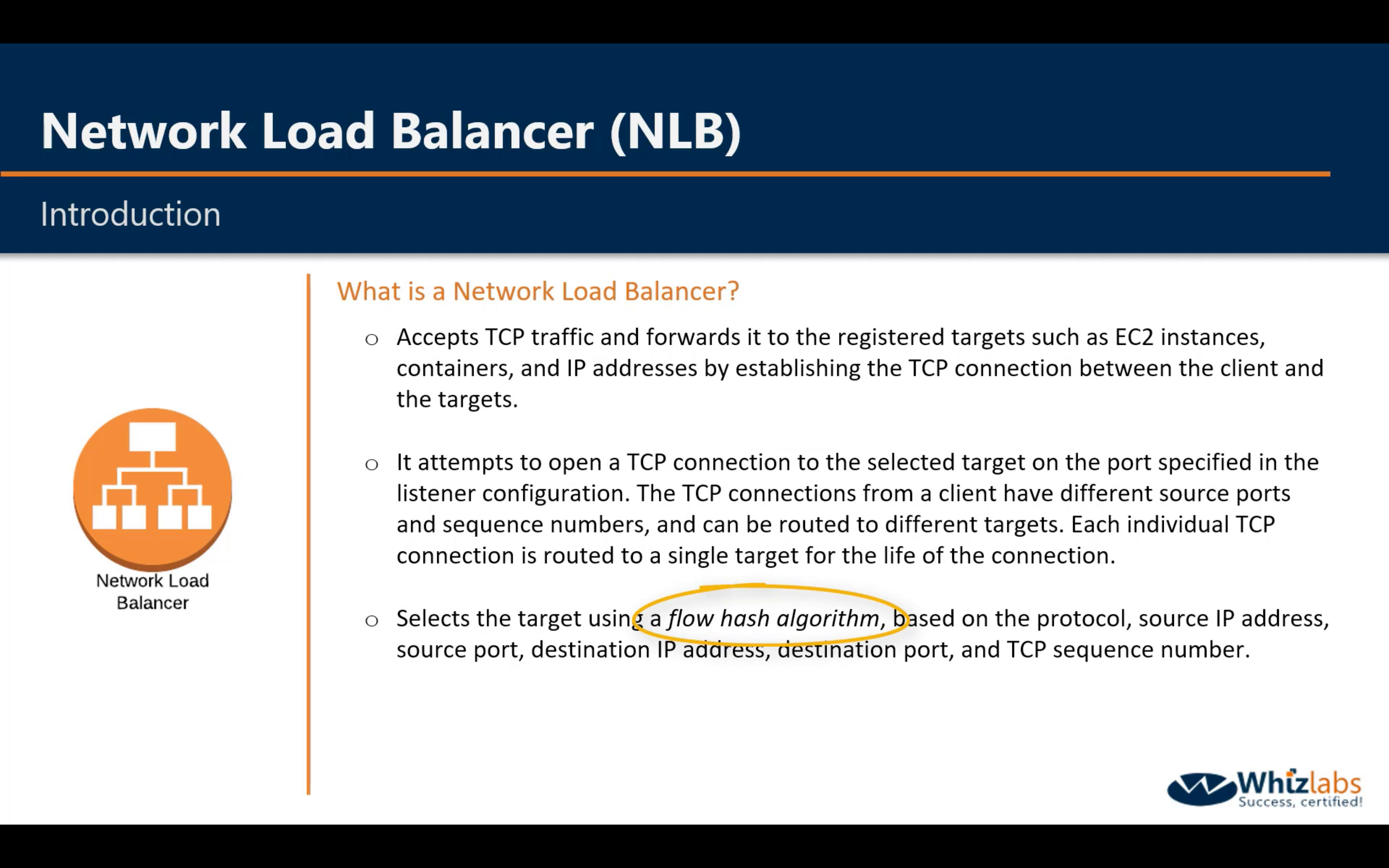

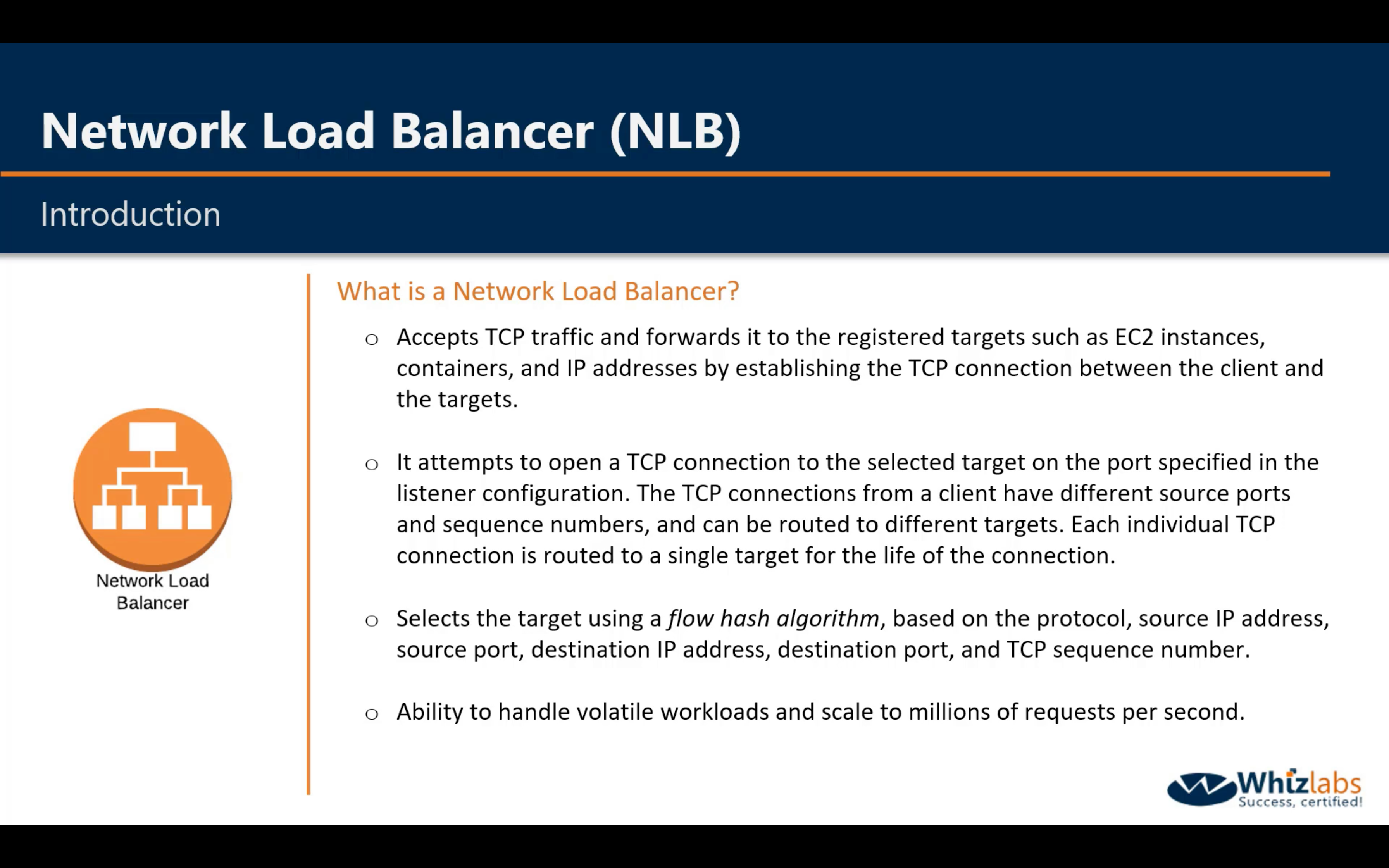

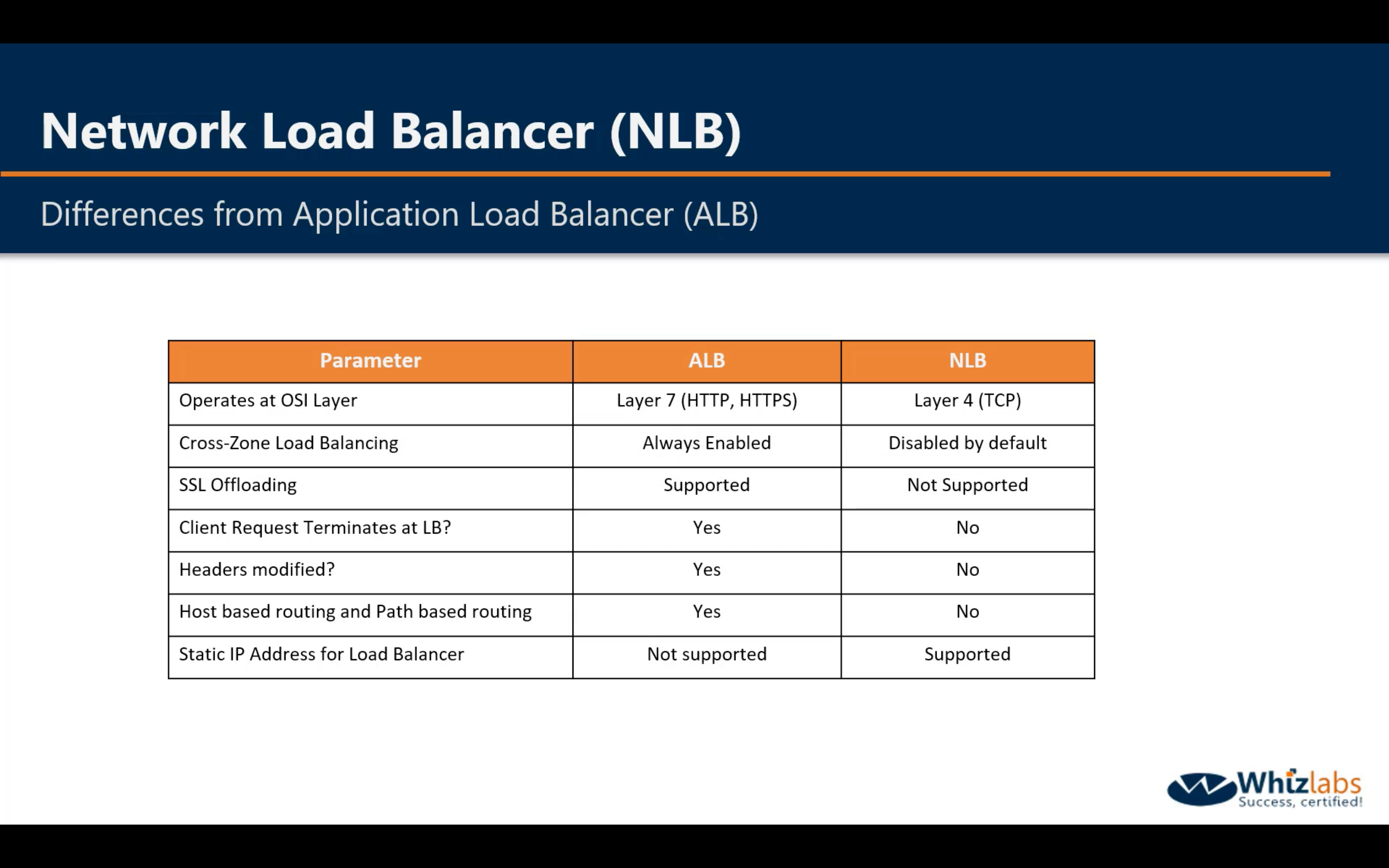

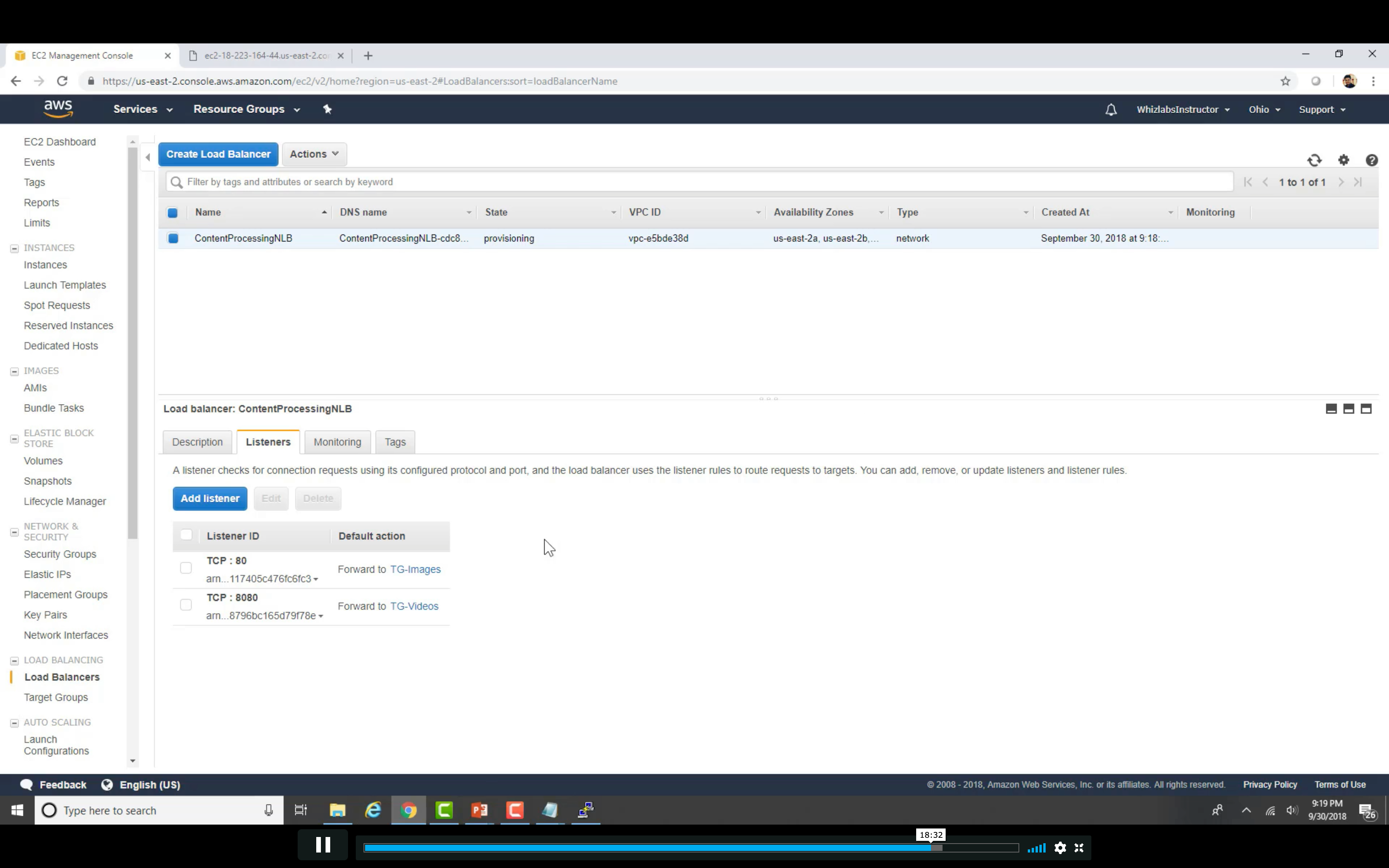

NLB

Difference

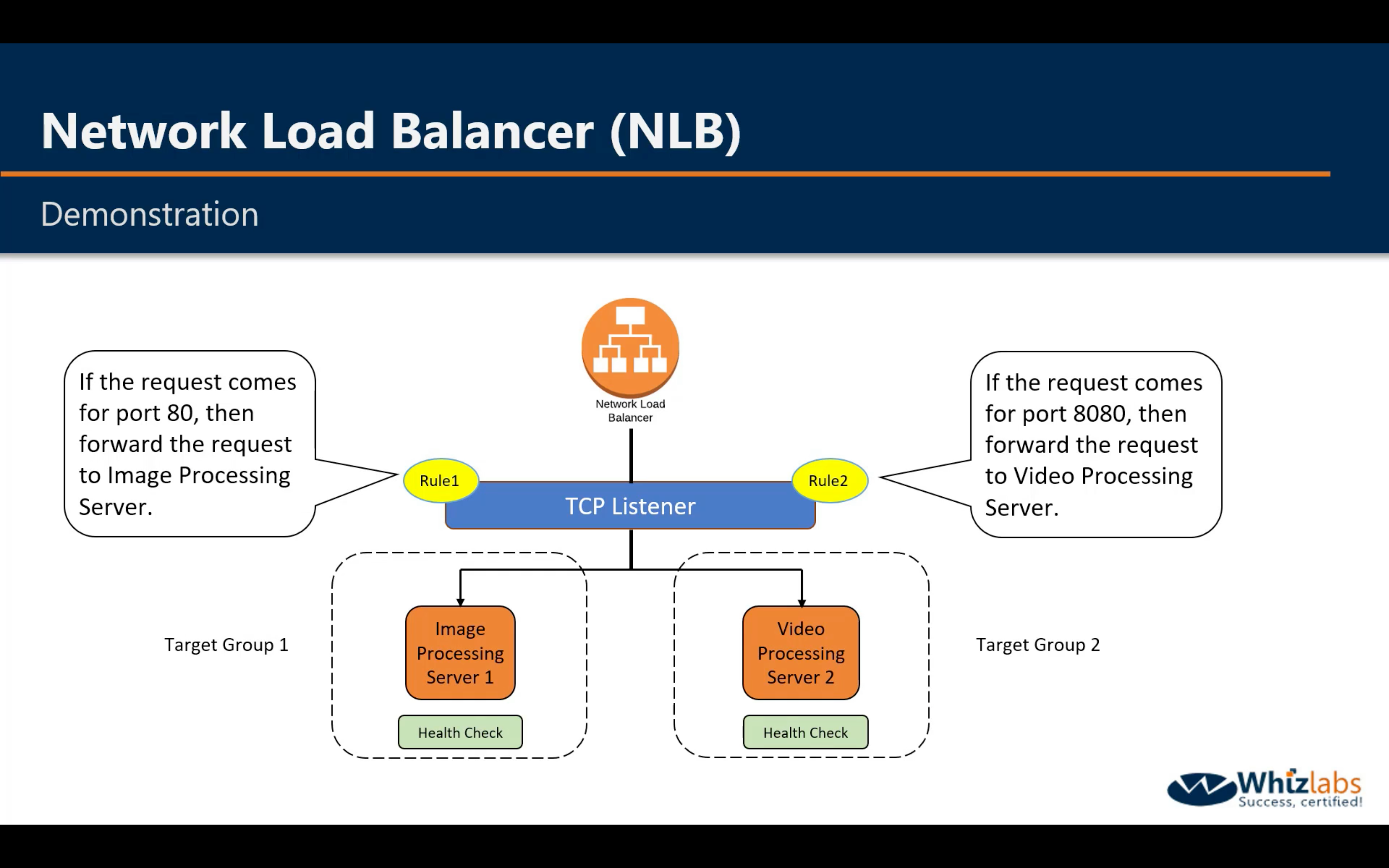

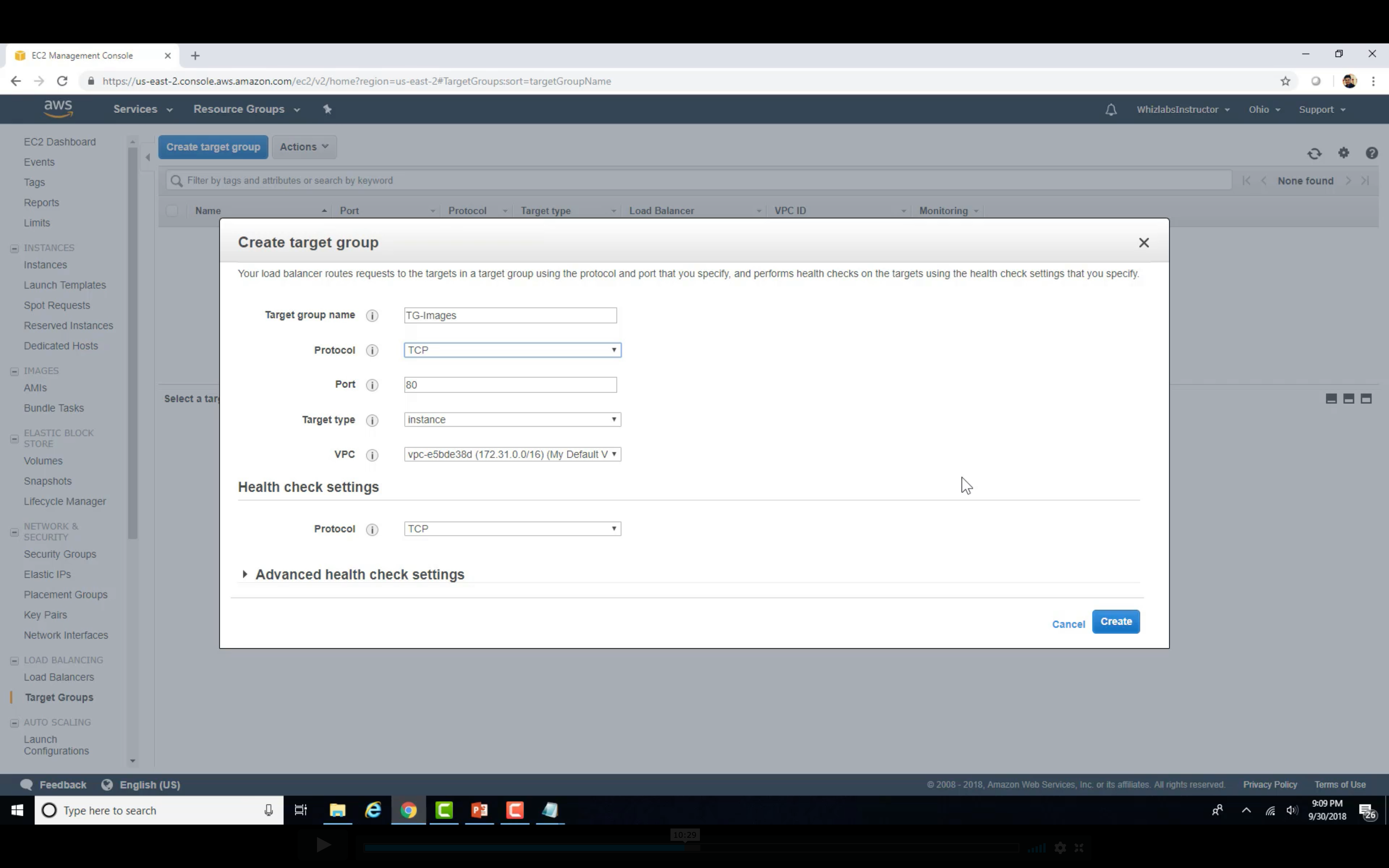

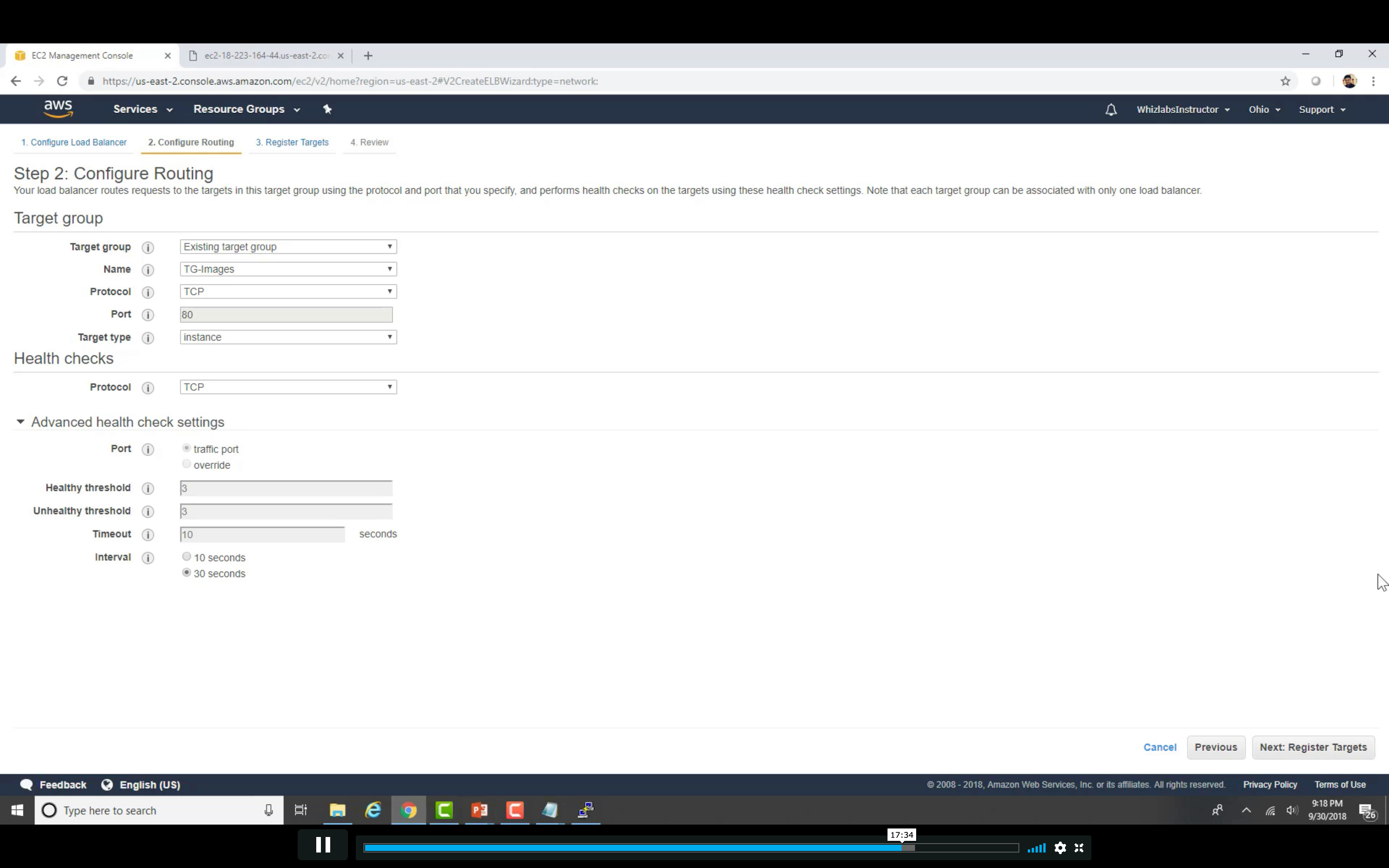

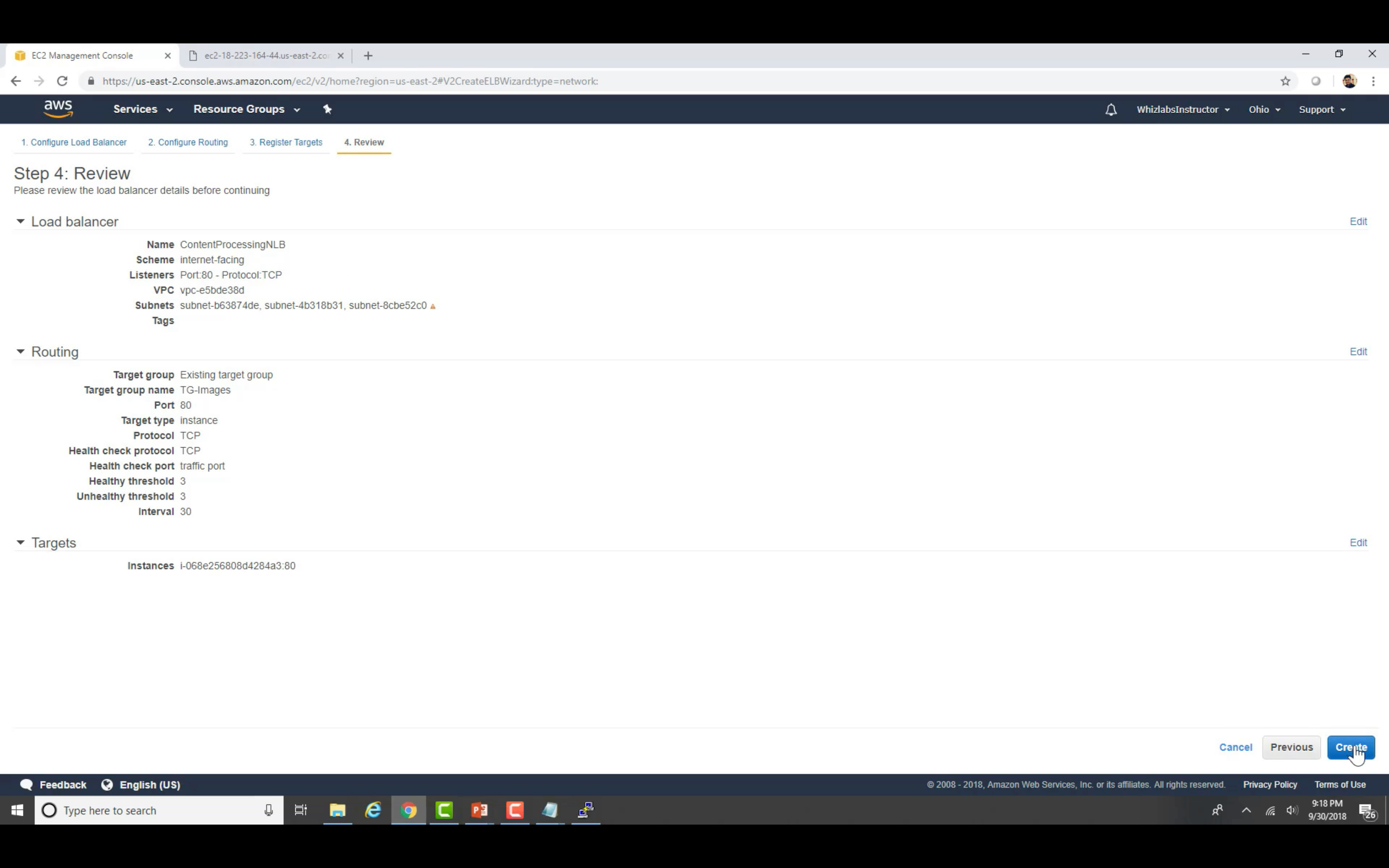

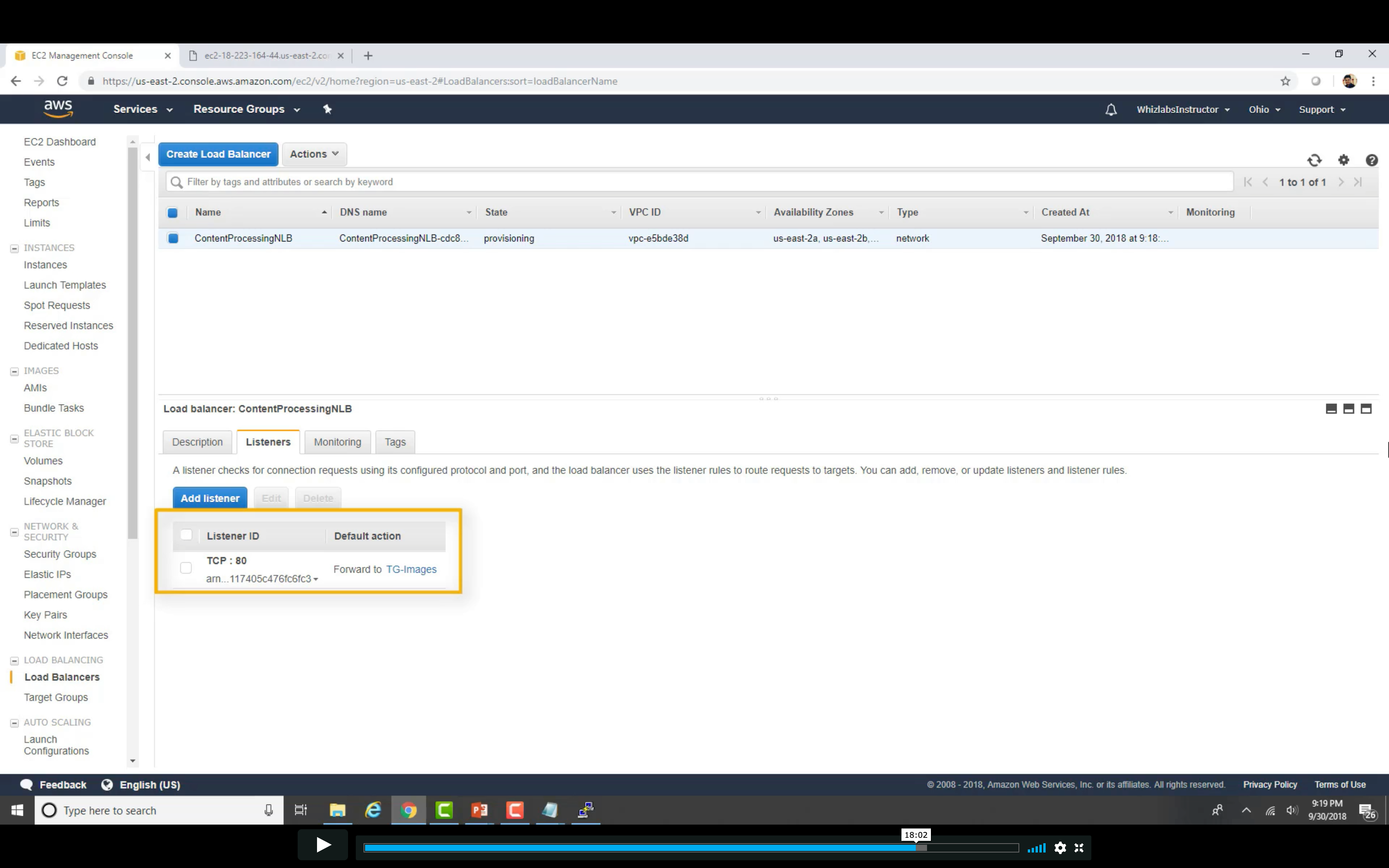

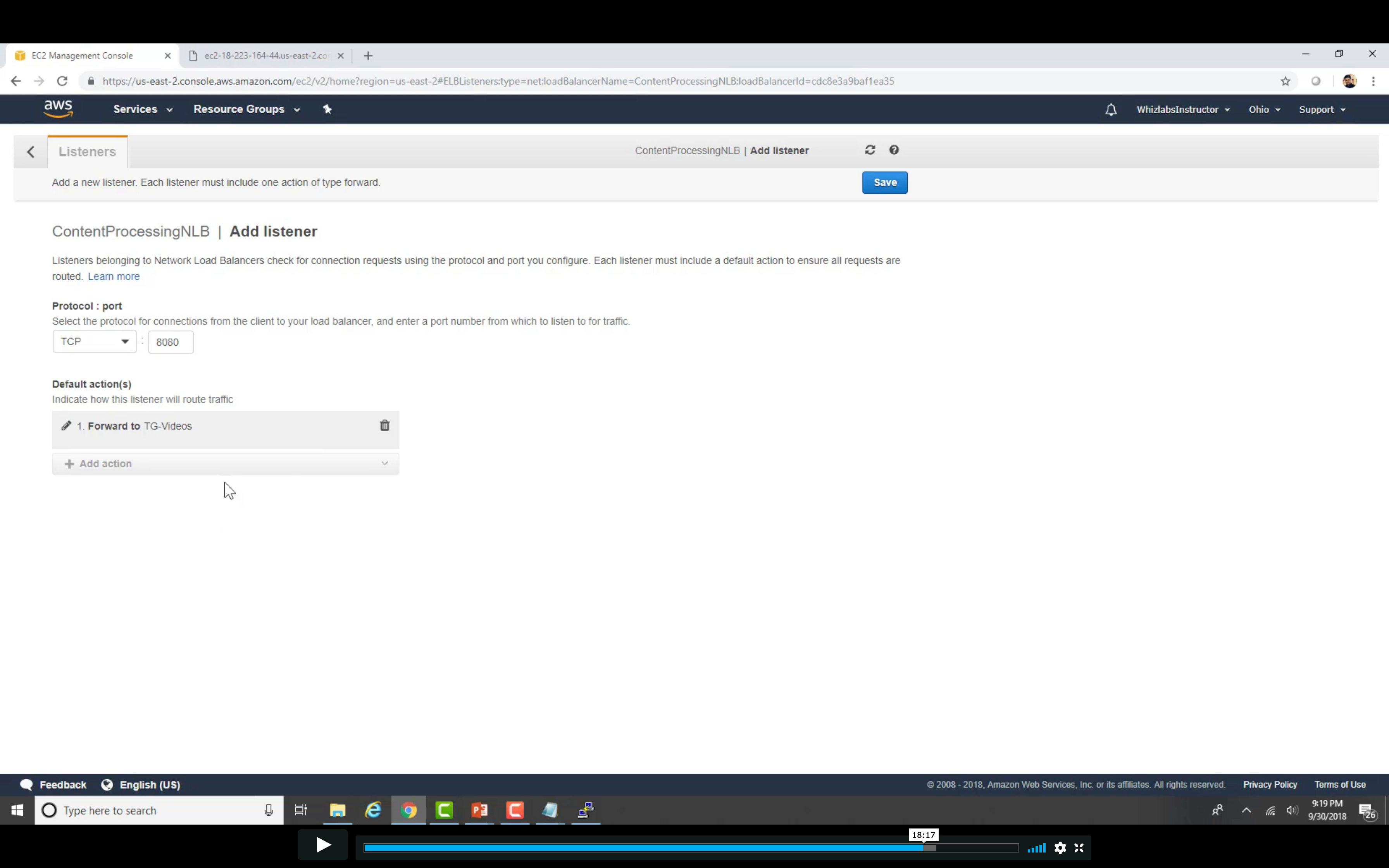

Demo

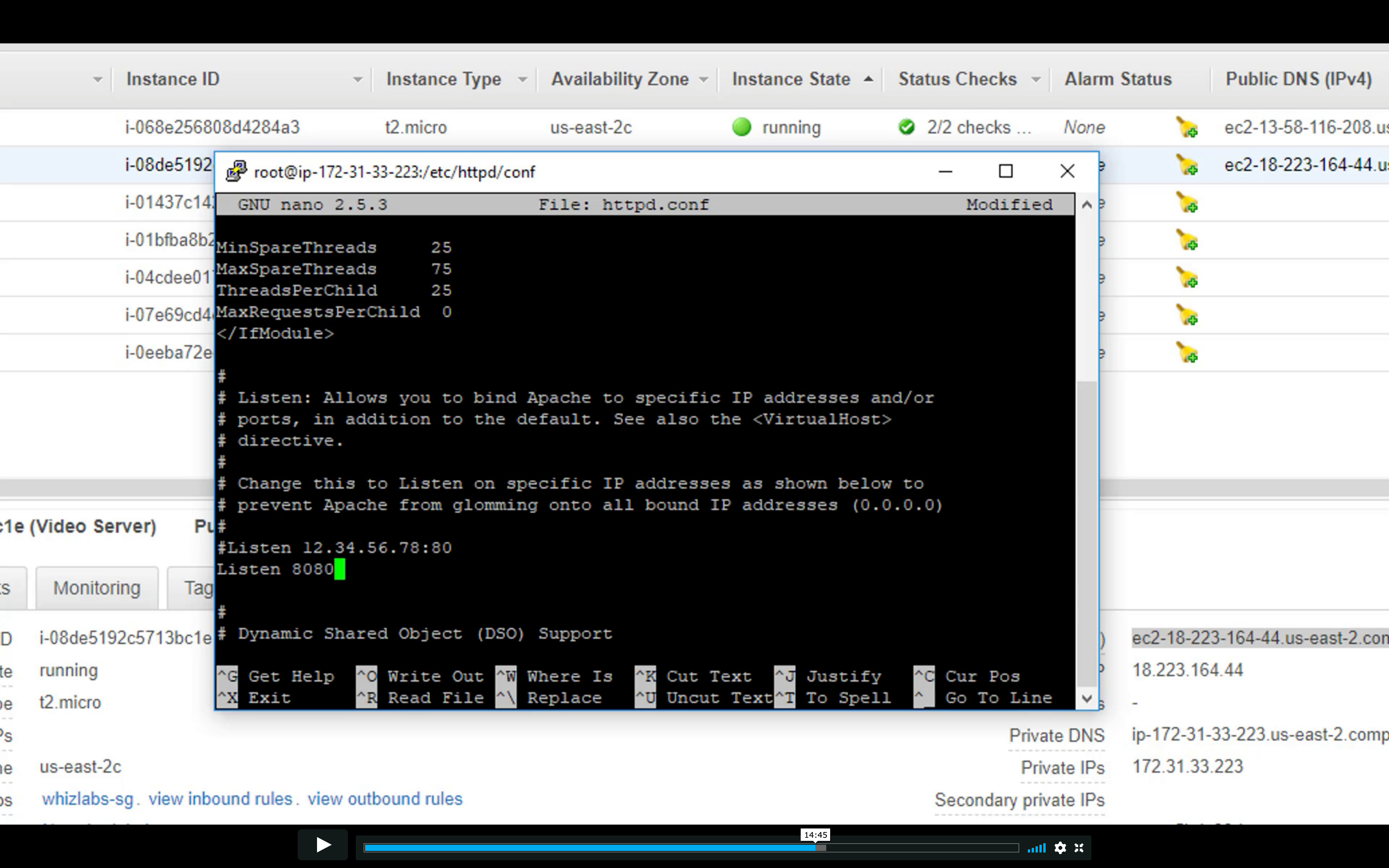

Change the HTTP server configuration on the video server to listen on port 8080

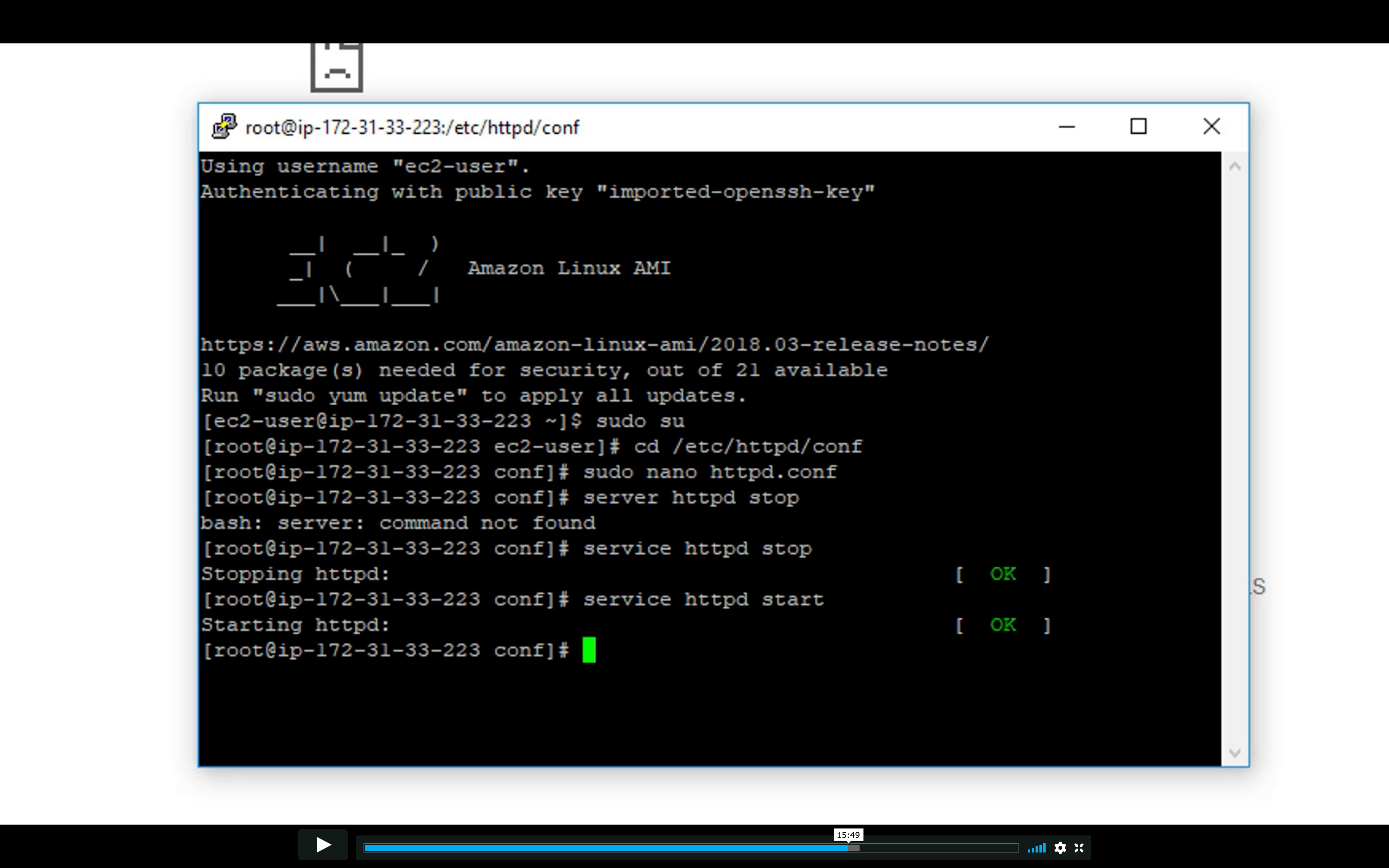

Restart http service

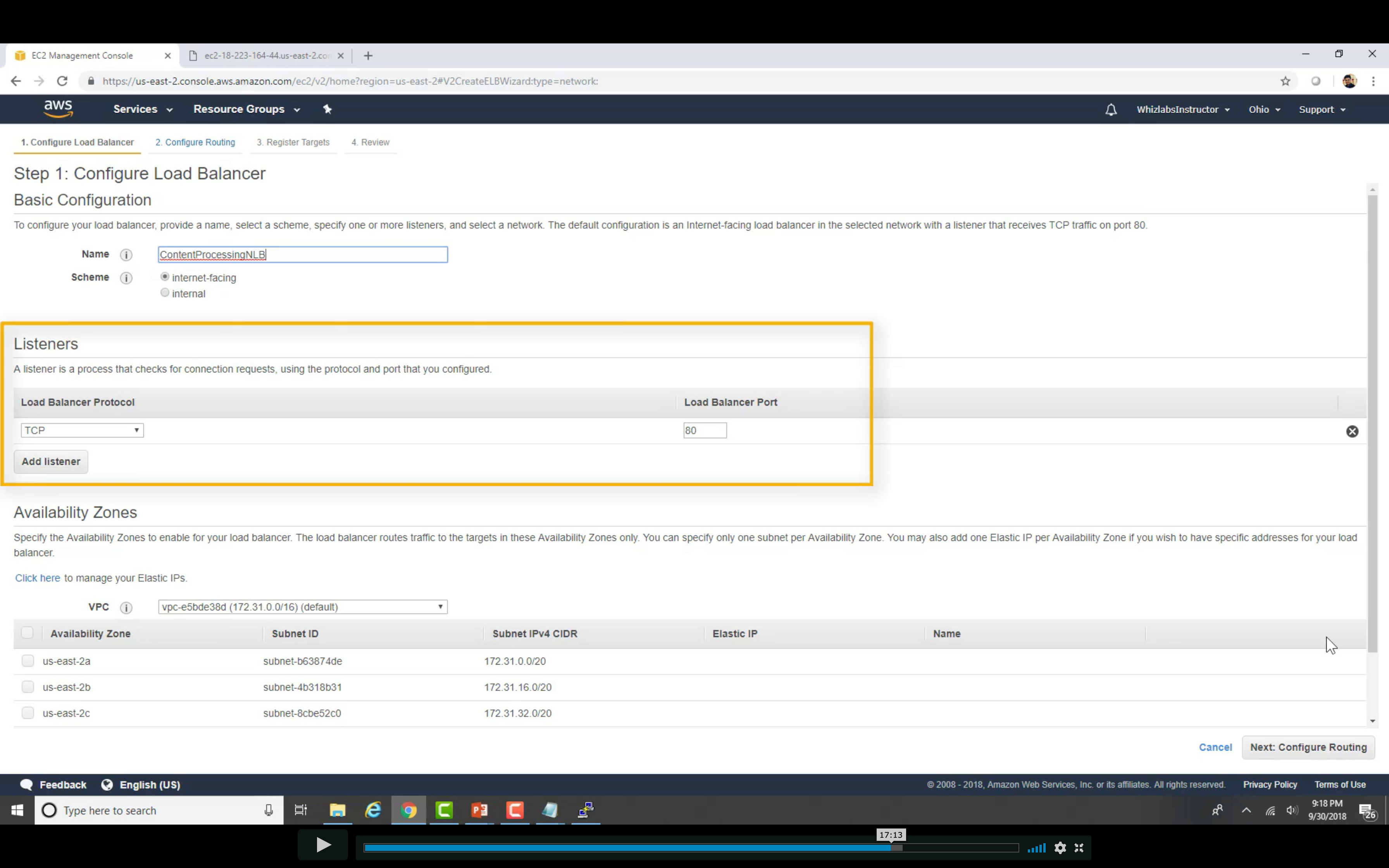

Create Load Balancer

Edit Rules

Distribute incoming traffic across multiple targets

Storage

Amazon S3 (Simple Storage Service)

Scalable storage in the cloud

- Storage management and monitoring

- Storage classes

- Access management and security

- Query in place

- Transferring large amounts of data

Connection

| Connection Type/S3 Type | Public S3 on Internet | Private S3 in VPC |
|---|---|---|
| Internet Access | IGW&NGW | No |
| VPC Access | No | Gateway Endpoint |
| Cross Region | IGW&NGW | Replication |
| Cross VPC | No | S3 Proxy running on EC2 |

- VPC endpoints for Amazon S3 provide secure connections to S3 buckets that do not require a gateway or NAT instances.

- NAT Gateways and Internet Gateways still route traffic over the Internet to the public endpoint for Amazon S3.

- As per AWS, S3 VPC endpoints do not support cross-region requests.

When you create a VPC endpoint for Amazon S3, any requests to an Amazon S3 endpoint within the Region (for example, s3.us-west-2.amazonaws.com) are routed to a private Amazon S3 endpoint within the Amazon network. You don’t need to modify your applications running on EC2 instances in your VPC: the endpoint name remains the same, but the route to Amazon S3 stays entirely within the Amazon network and does not access the public internet.

- VPC gateway endpoints are not supported outside the VPC. In other words, endpoint connections cannot be extended out of a VPC. Resources on the other side of a VPN connection, VPC peering connection, AWS Direct Connect connection, or ClassicLink connection in your VPC cannot use the endpoint to communicate with resources in the endpoint service.

https://docs.aws.amazon.com/AmazonVPC/latest/UserGuide/vpce-gateway.html#vpc-endpoints-limitations

So, to support such use cases, we can setup an S3 proxy server on AWS EC2 instance as shown below.

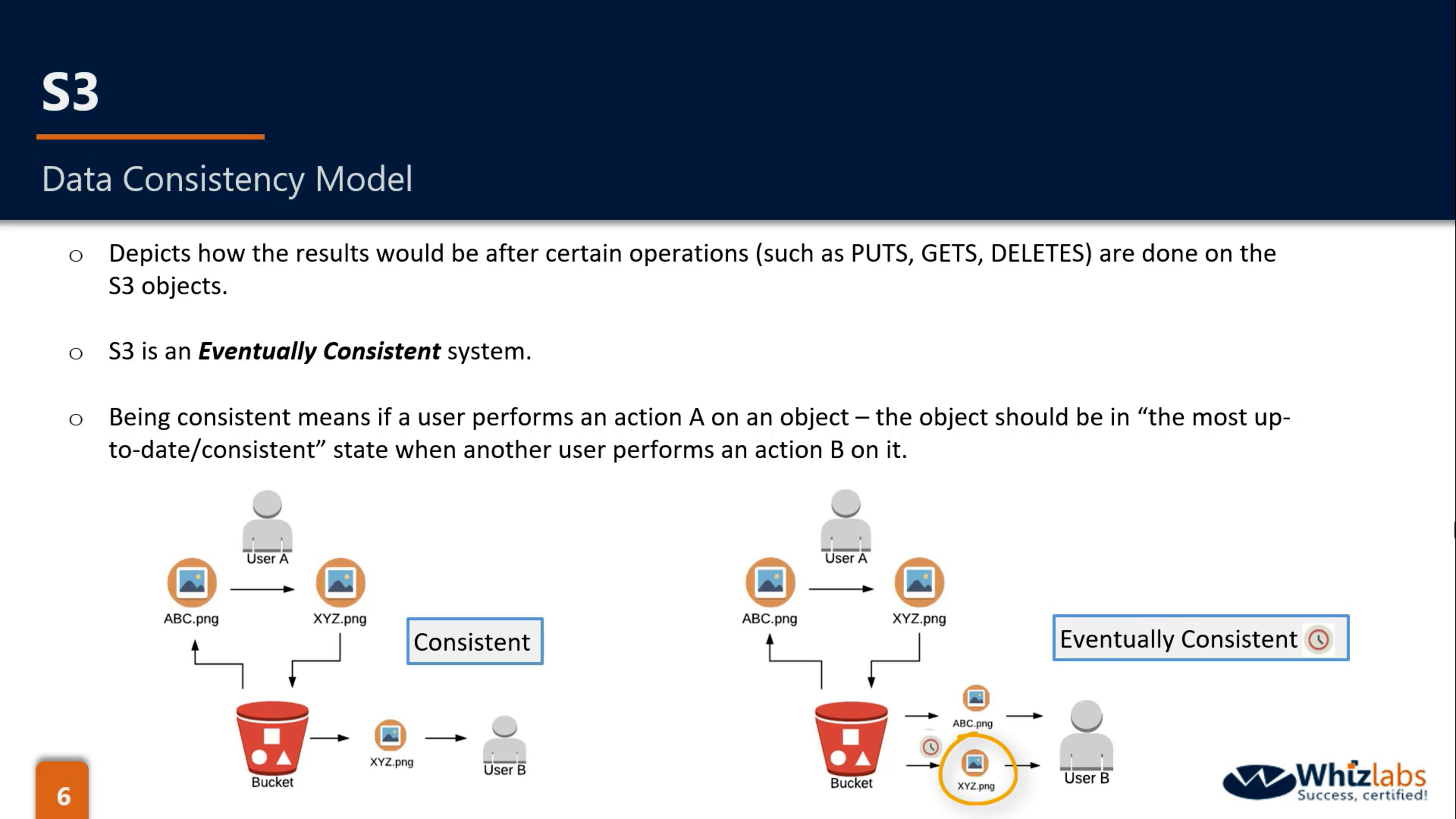

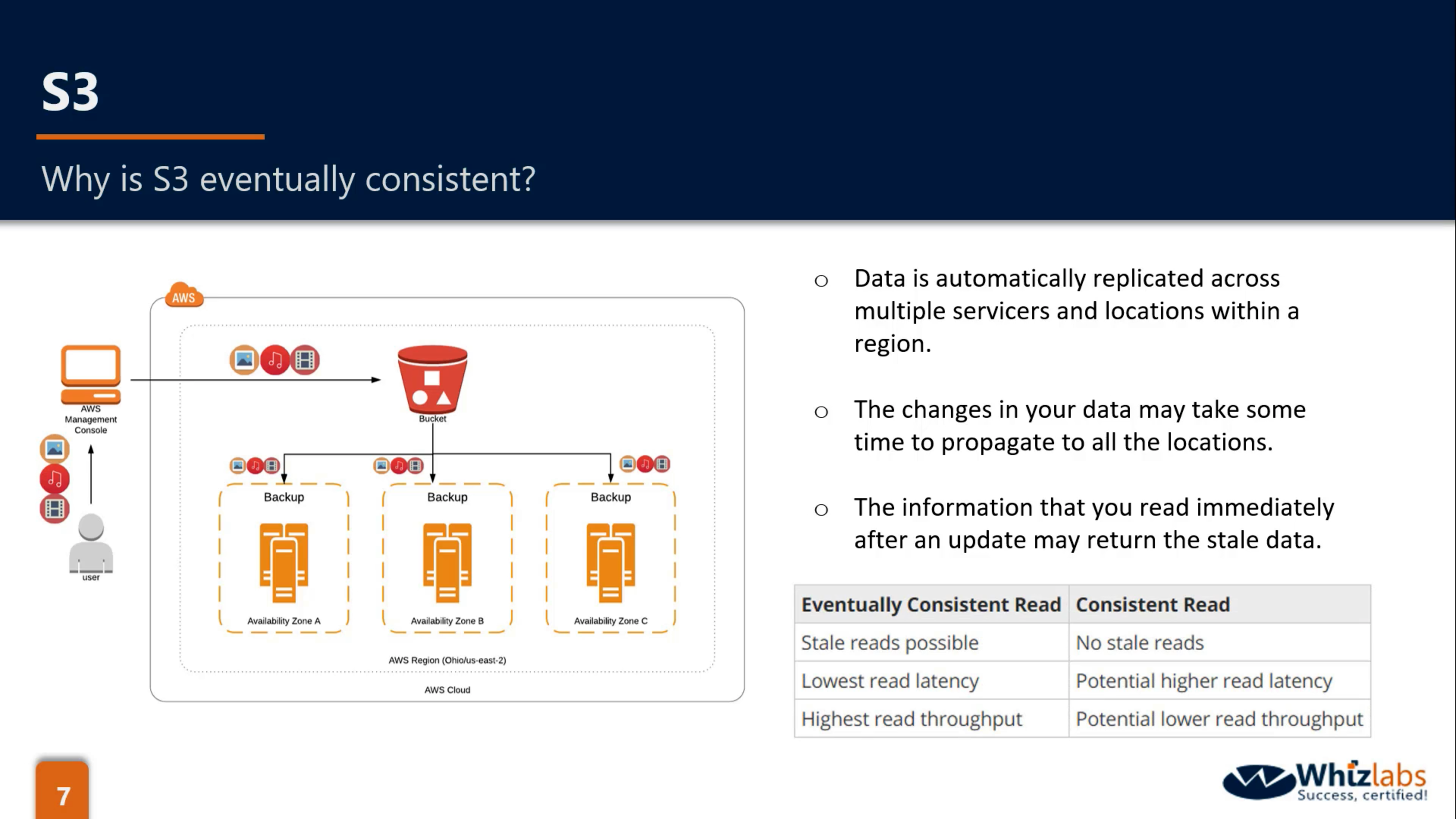

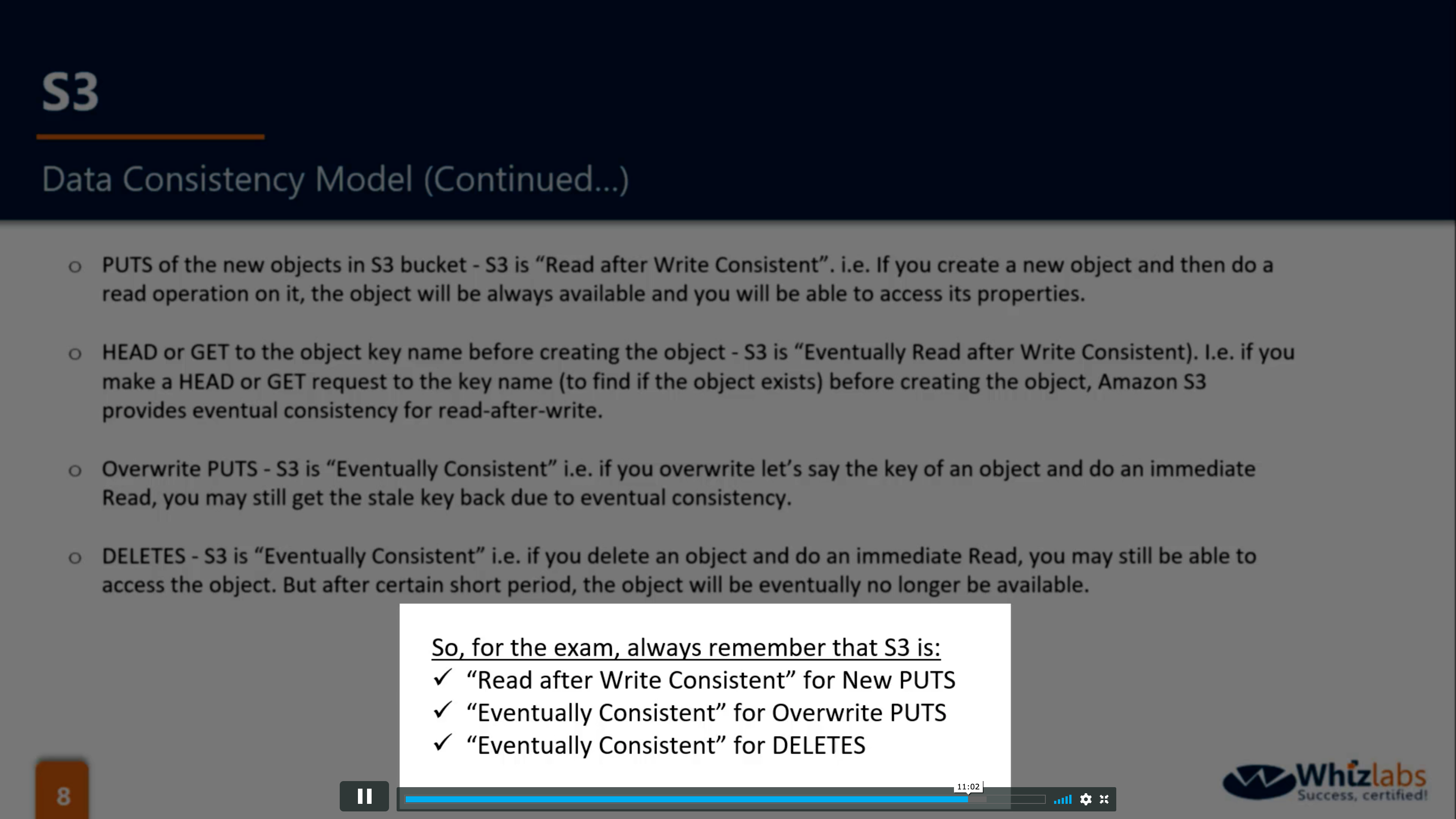

Eventually Consistent

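- New PUTs: read-after-write consistent

- Overwrite PUTs & DELETEs: eventually consistent when these notes were written; since December 2020, S3 provides strong read-after-write consistency for all PUTs and DELETEs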

https://docs.aws.amazon.com/AmazonS3/latest/dev/Introduction.html

API

REST: HTTP & HTTPS

SOAP: support over HTTP is deprecated; still available over HTTPS

Performance

Your application can achieve at least 3,500 PUT/COPY/POST/DELETE or 5,500 GET/HEAD requests per second per prefix in a bucket

There are no limits to the number of prefixes in a bucket. You can increase your read or write performance by parallelizing requests across prefixes. For example, if you create 10 prefixes in an Amazon S3 bucket to parallelize reads, you could scale your read performance to 55,000 read requests per second.
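The arithmetic behind the 55,000 figure is simply the per-prefix rate multiplied by the number of prefixes. A small sketch, using the per-prefix rates quoted above (the function names are illustrative, not part of any AWS SDK):

```python
PUT_RATE_PER_PREFIX = 3_500  # PUT/COPY/POST/DELETE requests per second per prefix
GET_RATE_PER_PREFIX = 5_500  # GET/HEAD requests per second per prefix


def aggregate_rate(prefixes: int, per_prefix_rate: int) -> int:
    """Requests per second achievable when load is spread evenly over the prefixes."""
    return prefixes * per_prefix_rate


def prefixes_needed(target_rate: int, per_prefix_rate: int) -> int:
    """Smallest number of prefixes that reaches the target request rate."""
    return -(-target_rate // per_prefix_rate)  # ceiling division


print(aggregate_rate(10, GET_RATE_PER_PREFIX))       # 55000
print(prefixes_needed(20_000, PUT_RATE_PER_PREFIX))  # 6
```

The estimate assumes requests are distributed evenly; a hot prefix still caps out at its own per-prefix rate.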

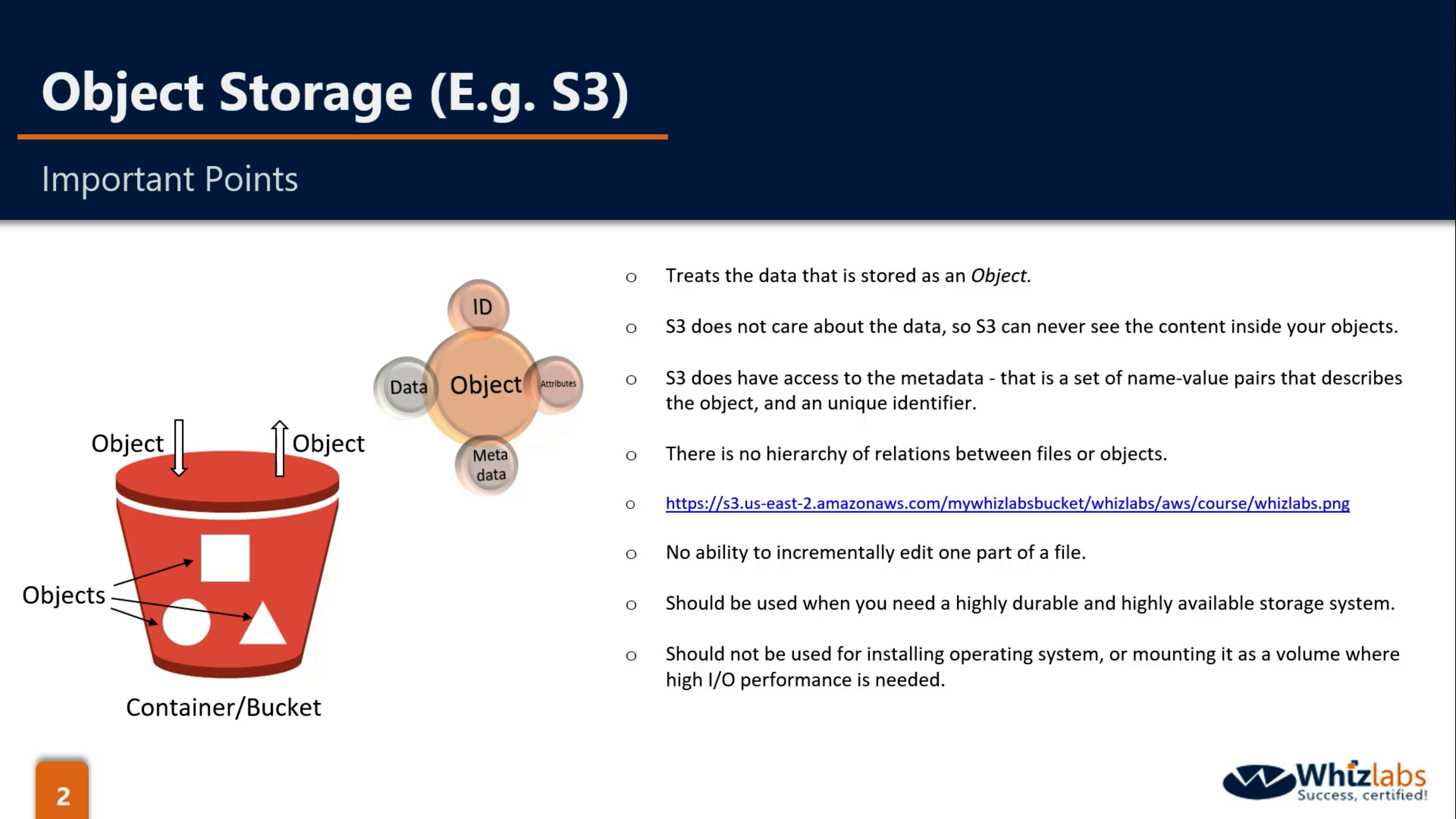

Object Storage vs. Block Storage

Metadata: name-value pairs that describe the object; the object key is the unique identifier for the object

System Defined Metadata:

| Name | Description | Can User Modify the Value? |
|---|---|---|
| Date | Current date and time. | No |
| Content-Length | Object size in bytes. | No |
| Content-Type | Object type. | Yes |
| Last-Modified | Object creation date or the last modified date, whichever is the latest. | No |
| Content-MD5 | The base64-encoded 128-bit MD5 digest of the object. | No |
| x-amz-server-side-encryption | Indicates whether server-side encryption is enabled for the object, and whether that encryption is from the AWS Key Management Service (AWS KMS) or from Amazon S3 managed encryption (SSE-S3). For more information, see Protecting data using server-side encryption. | Yes |
| x-amz-version-id | Object version. When you enable versioning on a bucket, Amazon S3 assigns a version number to objects added to the bucket. For more information, see Using versioning. | No |
| x-amz-delete-marker | In a bucket that has versioning enabled, this Boolean marker indicates whether the object is a delete marker. | No |
| x-amz-storage-class | Storage class used for storing the object. For more information, see Amazon S3 Storage Classes. | Yes |
| x-amz-website-redirect-location | Redirects requests for the associated object to another object in the same bucket or an external URL. For more information, see (Optional) configuring a webpage redirect. | Yes |
| x-amz-server-side-encryption-aws-kms-key-id | If x-amz-server-side-encryption is present and has the value of aws:kms, this indicates the ID of the AWS KMS symmetric customer master key (CMK) that was used for the object. | Yes |
| x-amz-server-side-encryption-customer-algorithm | Indicates whether server-side encryption with customer-provided encryption keys (SSE-C) is enabled. For more information, see Protecting data using server-side encryption with customer-provided encryption keys (SSE-C). | Yes |

User-Defined Object Metadata

When uploading an object, you can also assign metadata to the object. You provide this optional information as a name-value (key-value) pair when you send a PUT or POST request to create the object.

- REST:

- When you upload objects using the REST API, the optional user-defined metadata names must begin with "x-amz-meta-" to distinguish them from other HTTP headers.

- When you retrieve the object using the REST API, this prefix is returned.

- SOAP:

- When you upload objects using the SOAP API, the prefix is not required.

- When you retrieve the object using the SOAP API, the prefix is removed, regardless of which API you used to upload the object.

- Supported keys:

Cache-Control, Content-Disposition, Content-Encoding, Content-Language, Content-Type, Expires, Website-Redirect-Location, x-amz-meta-
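The REST prefix rule above can be sketched as two small helpers: one that adds the "x-amz-meta-" prefix before an upload, and one that picks user-defined metadata back out of response headers. Both function names are hypothetical and no request is sent; the lowercasing reflects the assumption that S3 stores user-defined metadata names in lowercase.

```python
META_PREFIX = "x-amz-meta-"


def to_rest_headers(user_metadata: dict) -> dict:
    """Add the x-amz-meta- prefix that the REST API requires on upload."""
    # Assumption: names are lowercased because S3 stores them in lowercase.
    return {f"{META_PREFIX}{name.lower()}": value for name, value in user_metadata.items()}


def from_rest_headers(headers: dict) -> dict:
    """Select user-defined metadata from response headers and drop the prefix."""
    return {
        name[len(META_PREFIX):]: value
        for name, value in headers.items()
        if name.lower().startswith(META_PREFIX)
    }


upload_headers = to_rest_headers({"Author": "zacks", "project": "notes"})
print(upload_headers)

response_headers = {"Content-Type": "text/plain", "x-amz-meta-author": "zacks"}
print(from_rest_headers(response_headers))
```

System headers such as Content-Type pass through untouched because they do not carry the prefix.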

S3

- S3 is Object storage

- Flat structure which means NO hierarchy

- Highly durable and highly scalable, but not suited to hosting an OS or acting as a mount point (those need high I/O)

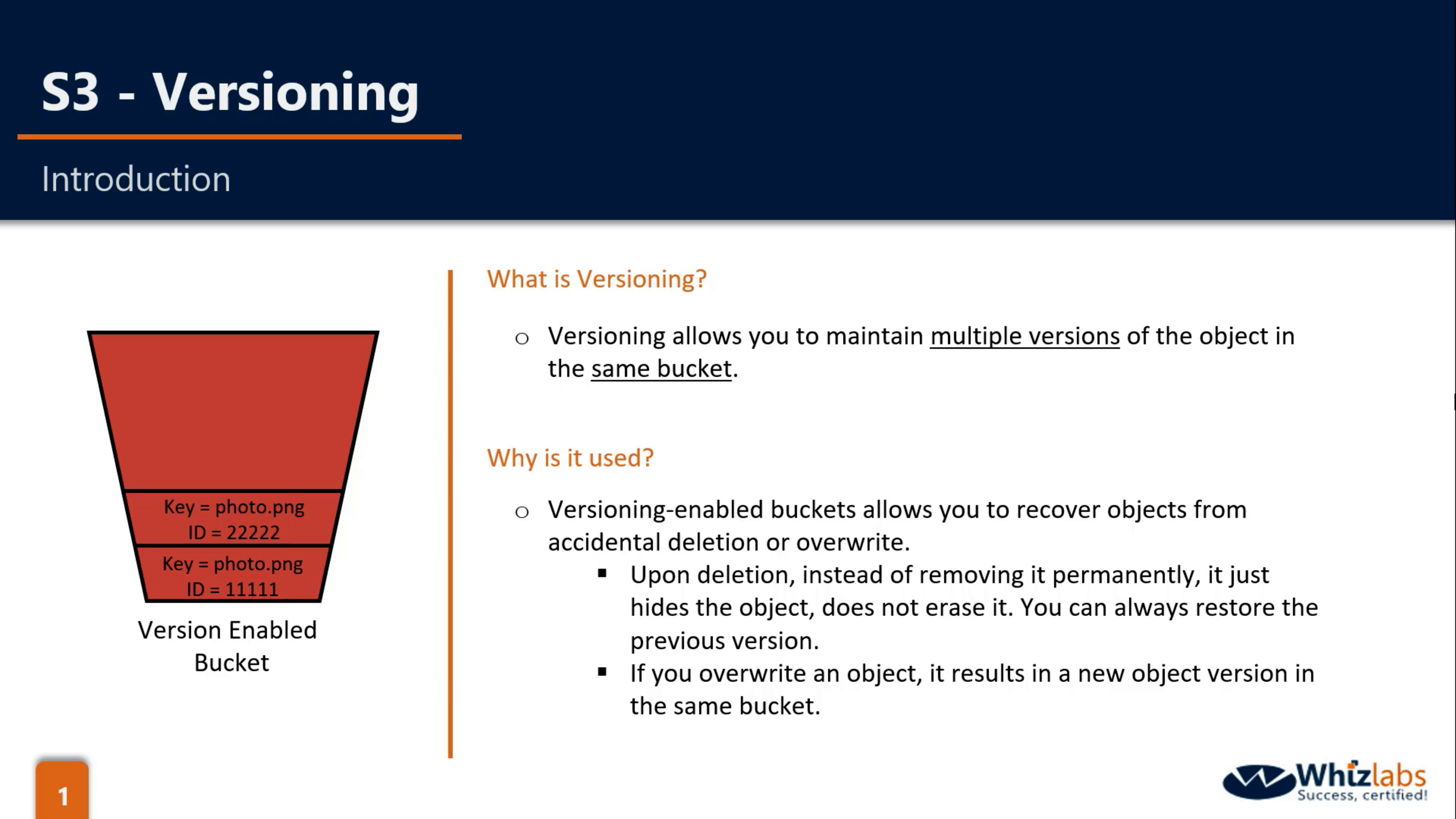

Bucket Properties: Versioning

State of the buckets:

- Un-versioned(default)

- Versioning-Enabled

- Versioning-Suspended

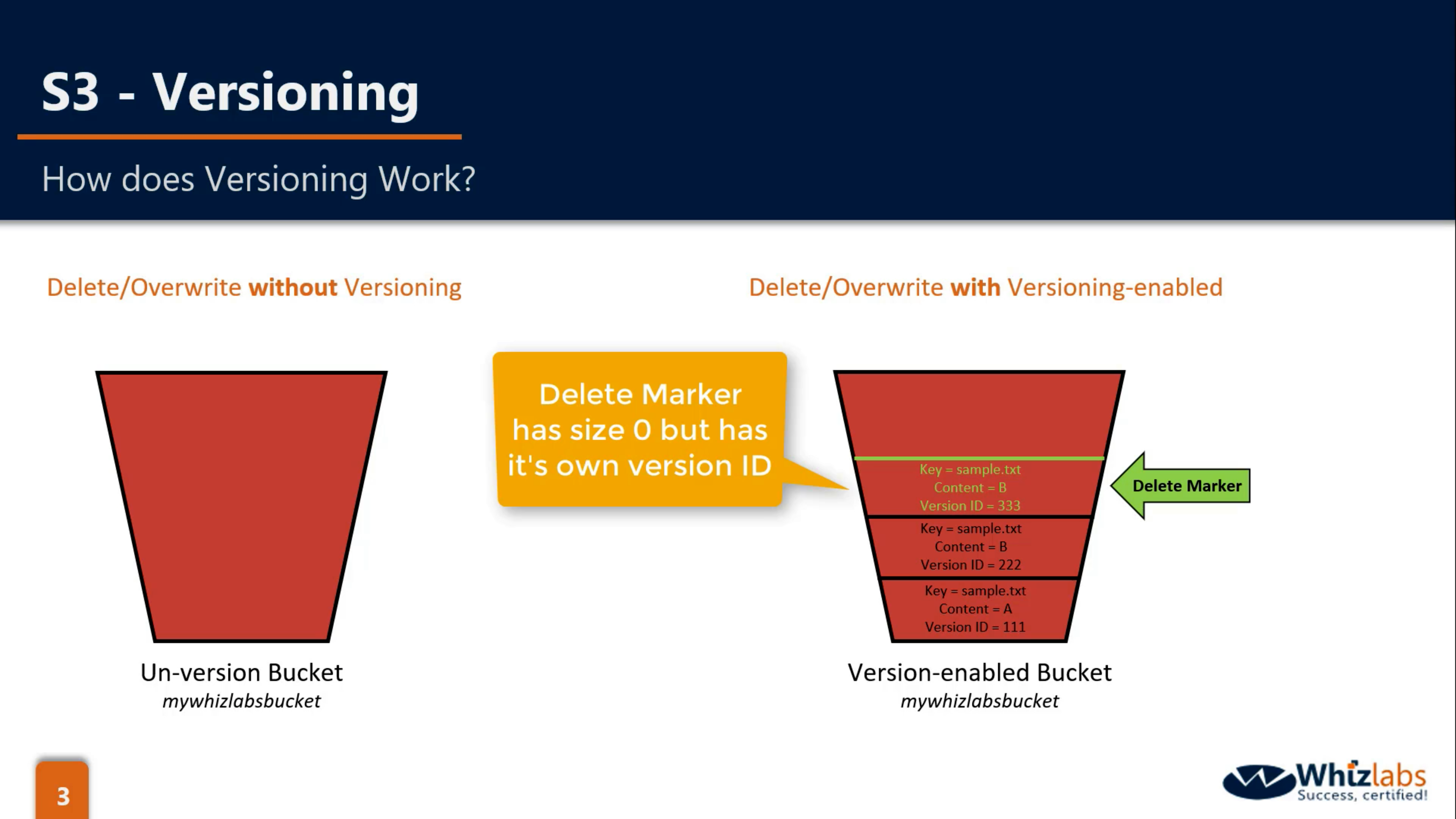

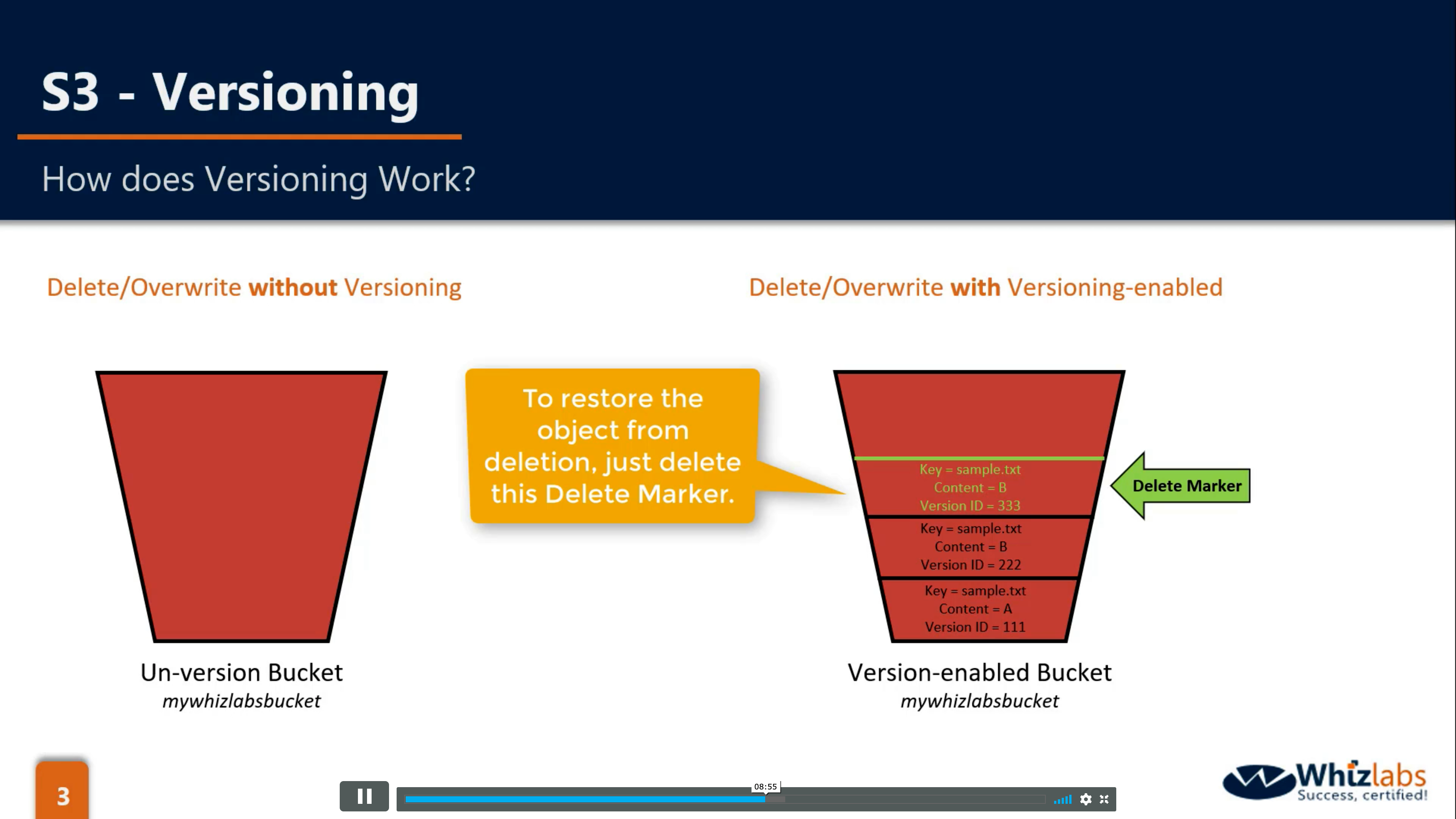

Object:

- An un-versioned object has null as its version ID.

- When you enable versioning on a bucket, existing un-versioned objects keep null as their first version ID.

- A new version is private by default, even if the previous version is public.

- Deleting an object adds a delete marker; the object is not really deleted.

- When you suspend a Versioning-Enabled bucket, newly written objects get a null version ID and the existing versions do not change.
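The version-ID behaviour in the list above can be sketched with a toy in-memory model. This is not the S3 API: the `Bucket` class, its state strings, and the `v1`, `v2` identifiers are hypothetical (real version IDs are opaque strings), and a `None` body stands in for a delete marker.

```python
import itertools


class Bucket:
    """Toy in-memory model of S3 versioning states (not the real API)."""

    def __init__(self):
        self.state = "unversioned"  # or "enabled" / "suspended"
        self.versions = {}          # key -> list of (version_id, body), newest last
        self._ids = itertools.count(1)

    def put(self, key, body):
        return self._write(key, body)

    def delete(self, key):
        if self.state == "unversioned":
            self.versions.pop(key, None)  # really deleted
            return None
        return self._write(key, None)     # None body stands for a delete marker

    def _write(self, key, body):
        stack = self.versions.setdefault(key, [])
        if self.state == "enabled":
            version_id = f"v{next(self._ids)}"
        else:
            # Unversioned and suspended writes use the null version ID and
            # replace any existing null version; other versions are untouched.
            version_id = "null"
            stack[:] = [v for v in stack if v[0] != "null"]
        stack.append((version_id, body))
        return version_id

    def get(self, key):
        stack = self.versions.get(key)
        if not stack or stack[-1][1] is None:
            return None  # missing, or hidden behind a delete marker
        return stack[-1][1]


bucket = Bucket()
bucket.put("a.txt", "first")     # version ID "null"
bucket.state = "enabled"
bucket.put("a.txt", "second")    # gets a new version ID
bucket.delete("a.txt")           # adds a delete marker, keeps older versions
print(bucket.get("a.txt"))       # None: the latest version is a delete marker
print([vid for vid, _ in bucket.versions["a.txt"]])
```

Switching `state` to `"suspended"` and writing again replaces only the null version, which is why older versioned copies survive a suspension.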

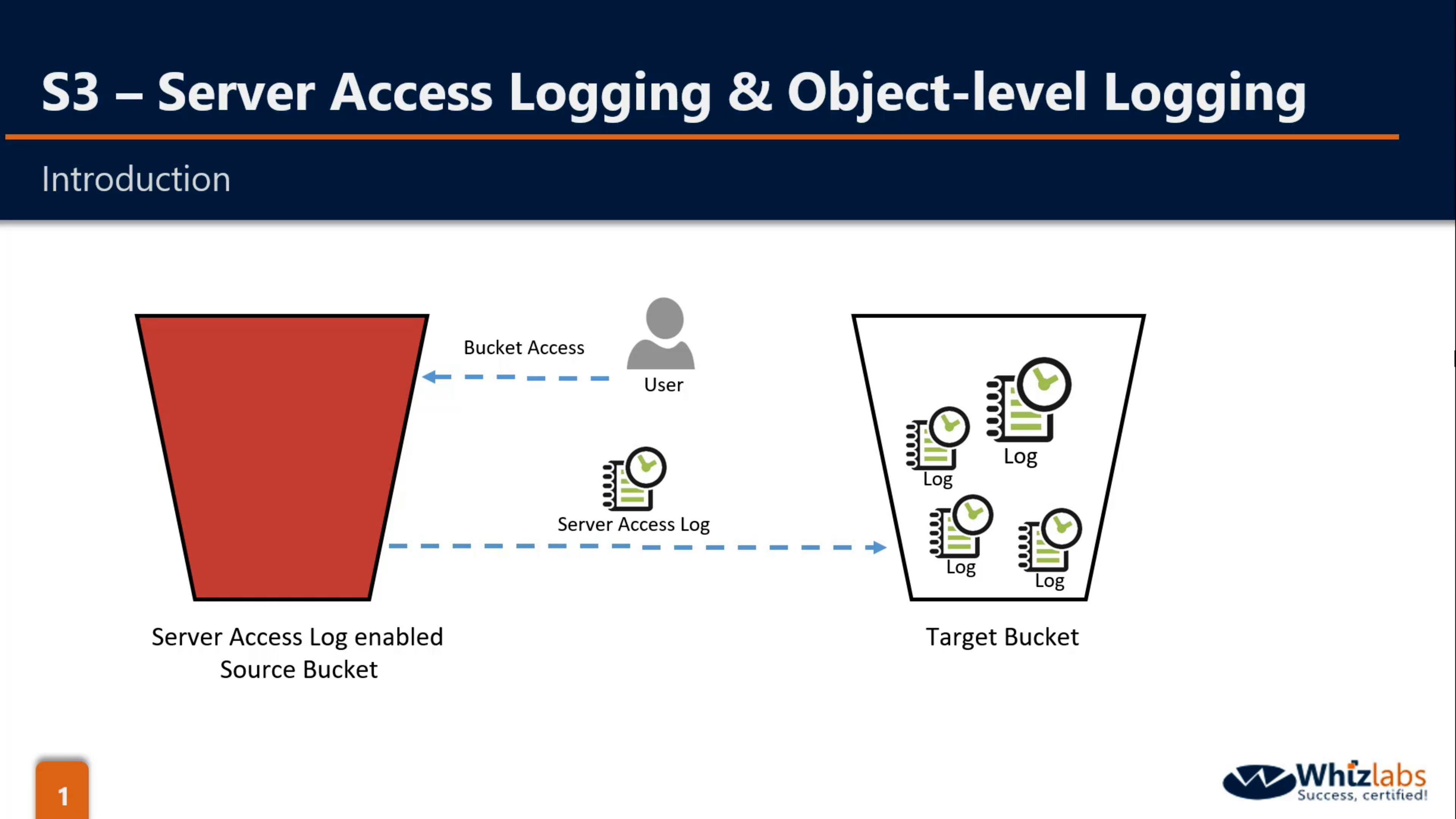

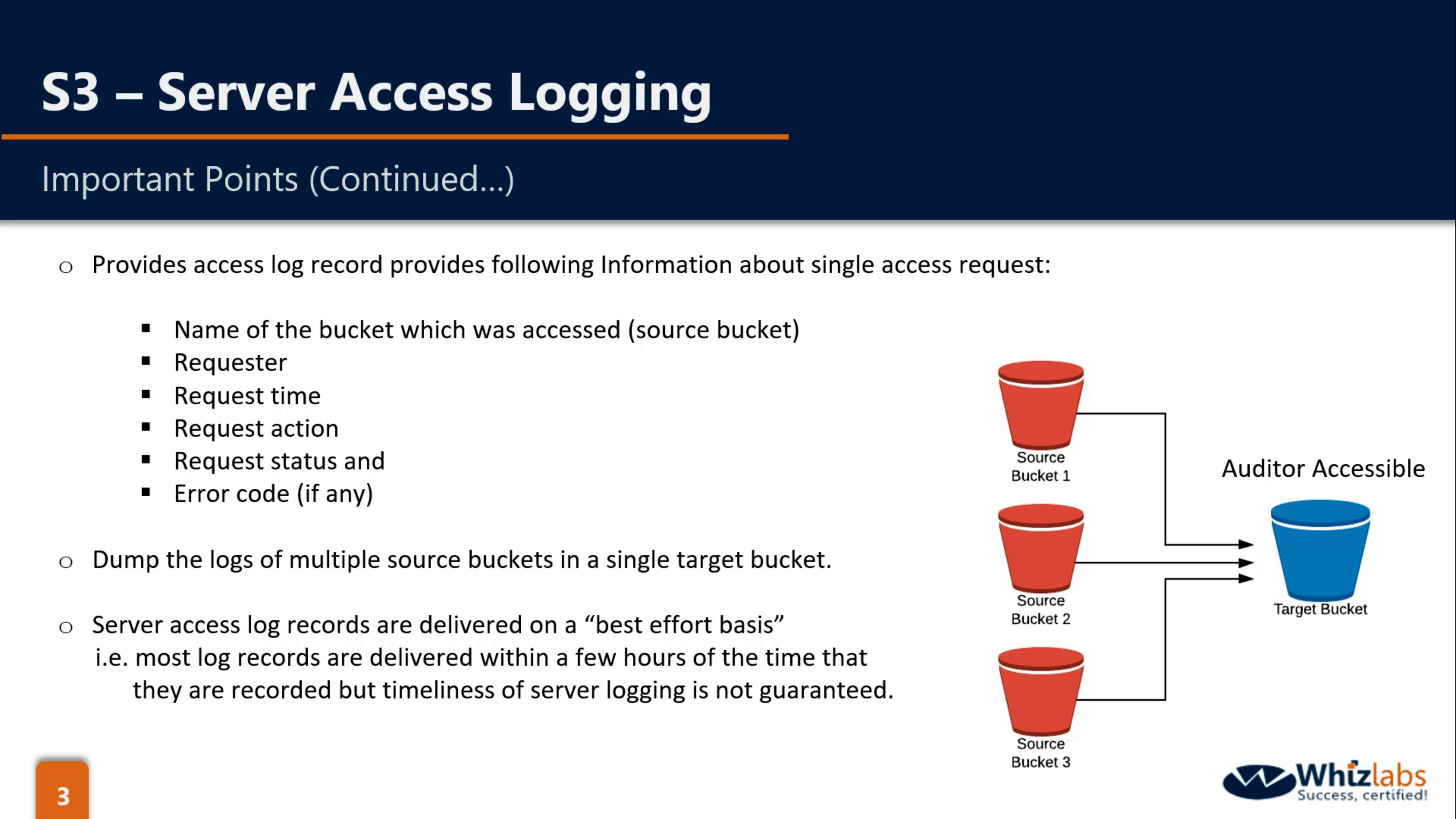

Bucket Properties: Server Access Logging & Object-Level Logging (default disabled)

Server Access Logging (default disabled):

- Store in the target bucket.

- source bucket and target bucket could be same.

- source bucket and target bucket should be in the same region.

- make prefix to identify logs.

- need Log Delivery group write permission on target bucket.

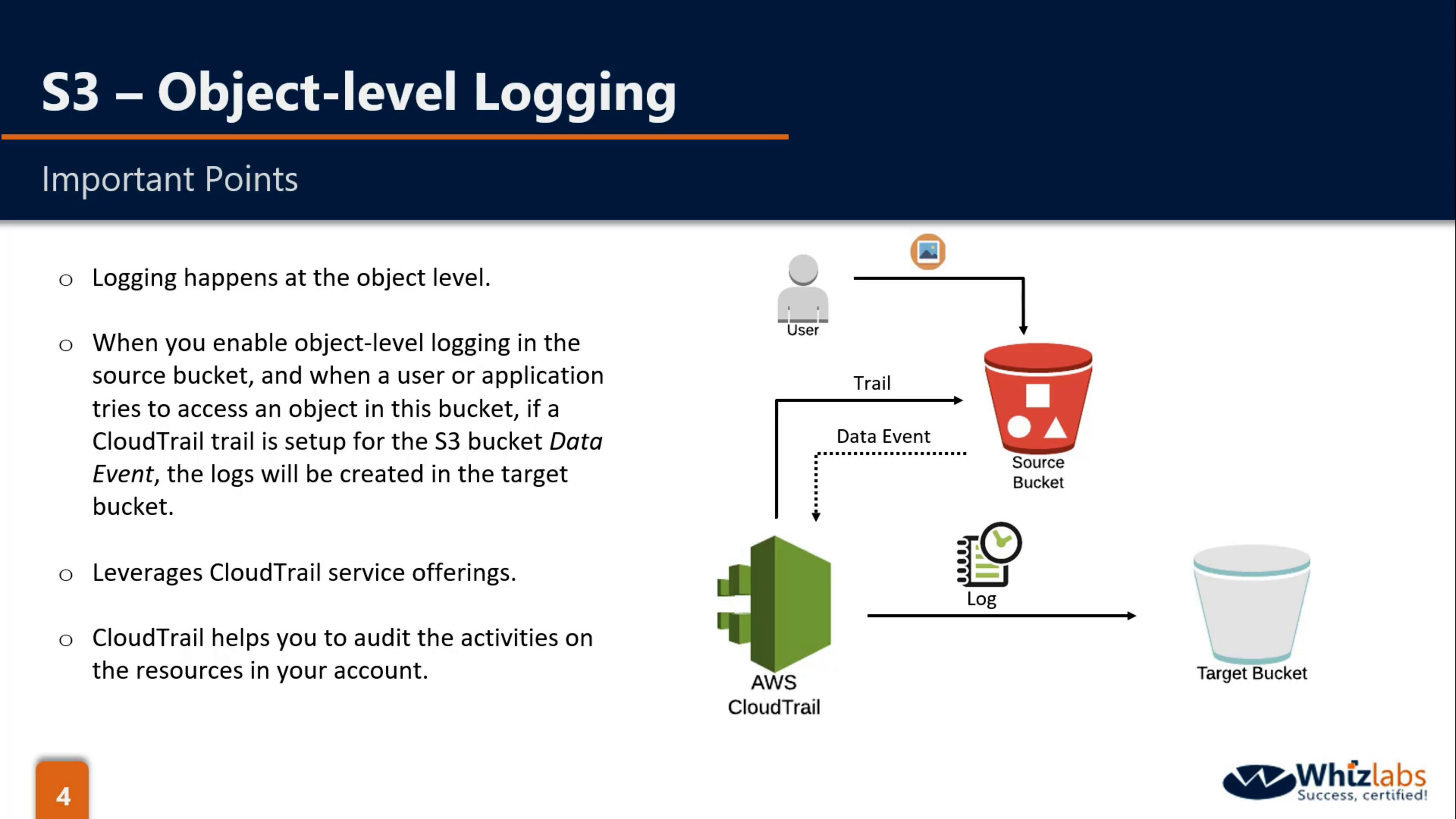

Object-level logging (default disabled):

- Store in the target bucket when CloudTrail is enabled.

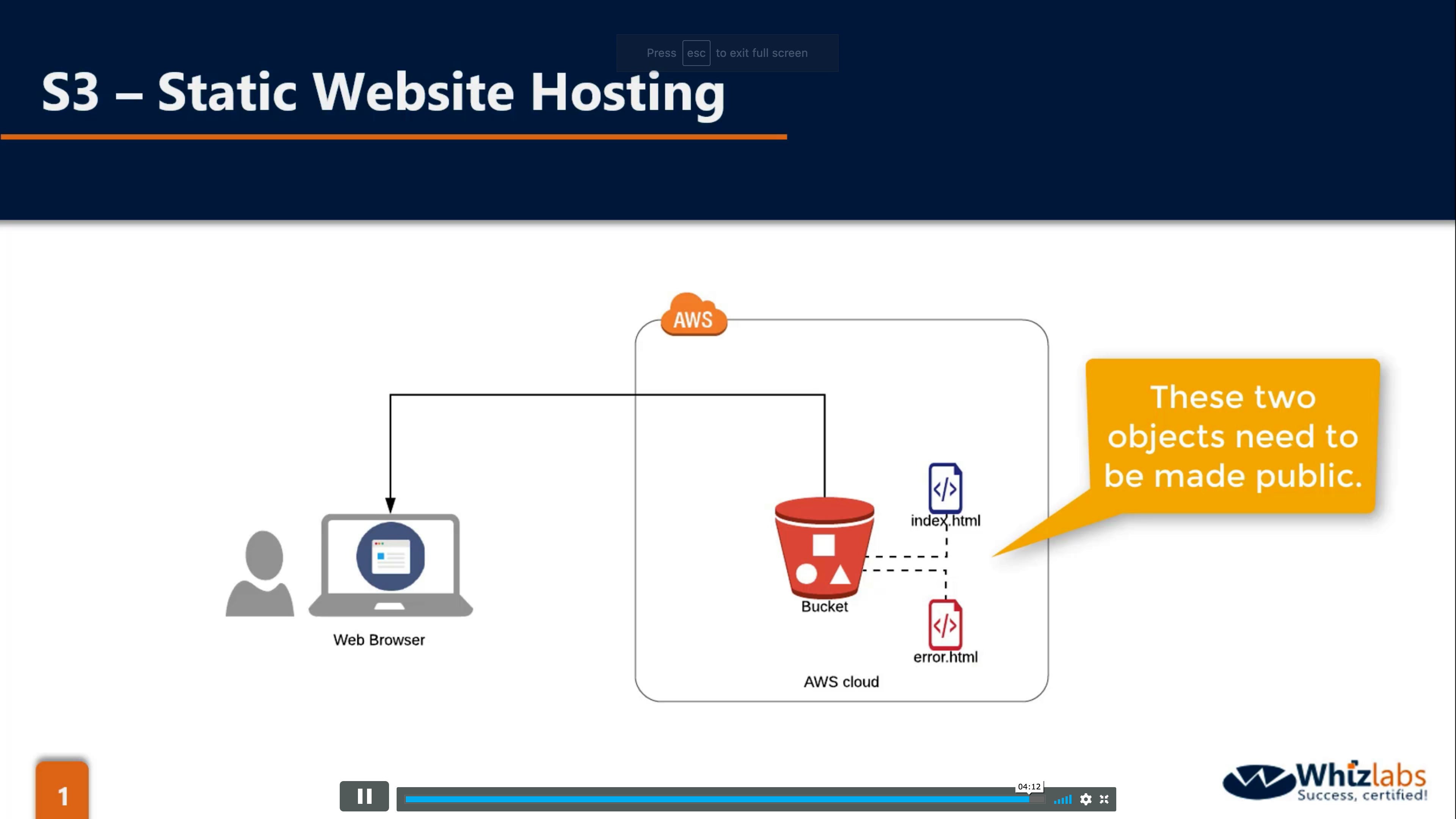

Bucket Properties: Static Website (default disabled)

Bucket & Object Properties: Encryption (default disabled)

Distinguish the concepts:

- Encryption on the object level.

- The Customer Master Key (CMK) is used to encrypt the encryption key, which means only the encryption key itself is used for encrypting and decrypting data.

- A CMK is not mandatory when the encryption key is created and managed by the client.

- Who creates and manages the encryption key and the master key?

- Who encrypts and decrypts the data?

SSE-S3: S3(2 keys), S3

SSE-KMS: KMS(Encryption Key), Client(CMK), S3

SSE-C(May not have any CMK): Client(Encryption key), S3

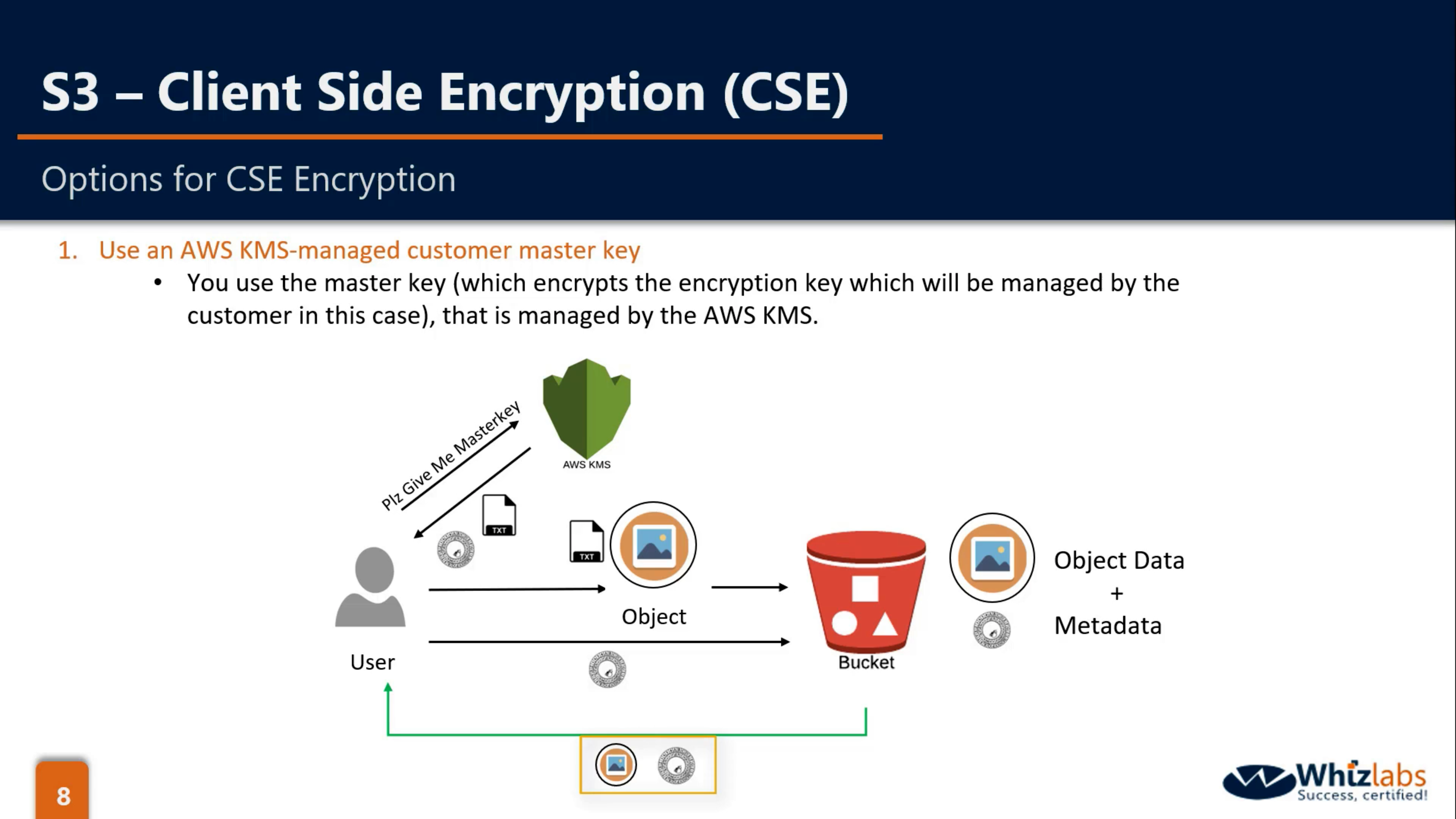

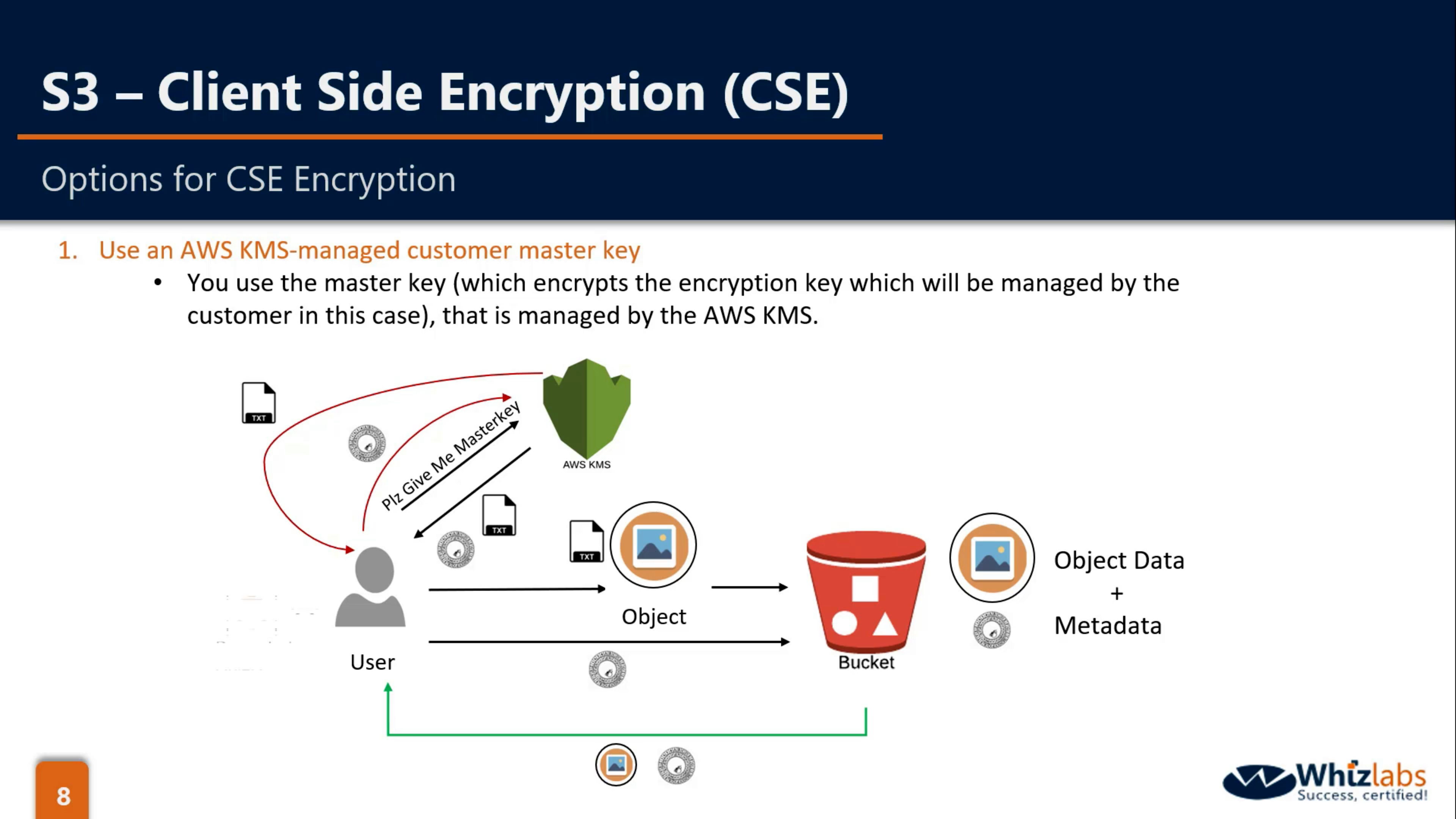

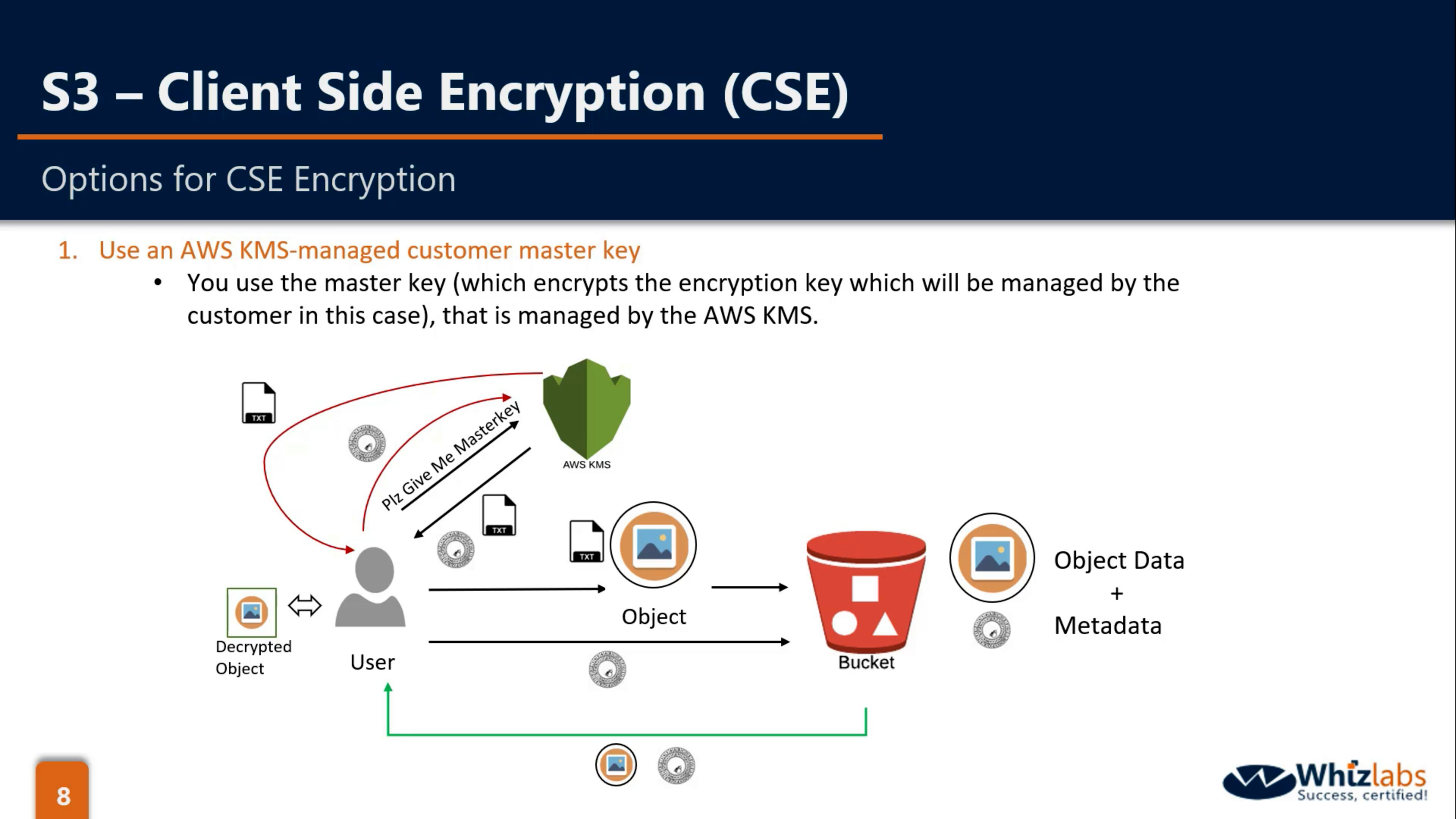

CSE-KMS: KMS(CMK), Client(Use Encryption key from KMS to encrypt and decrypt data)

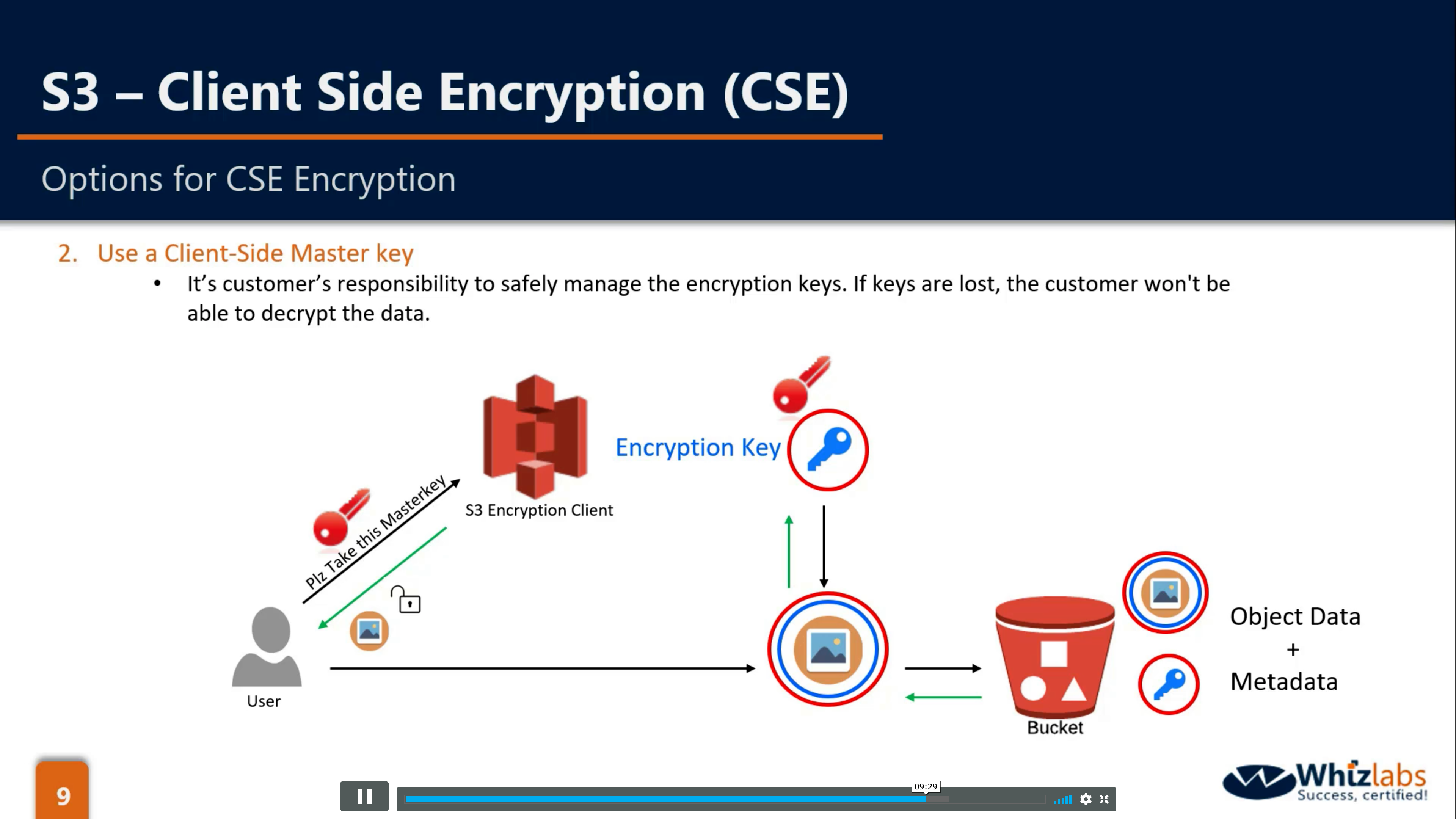

CSE-C: Client(CMK), S3 Encryption Client(Use CMK from customer to encrypt encryption key, then use encryption key to encrypt or decrypt data)

SSE-S3

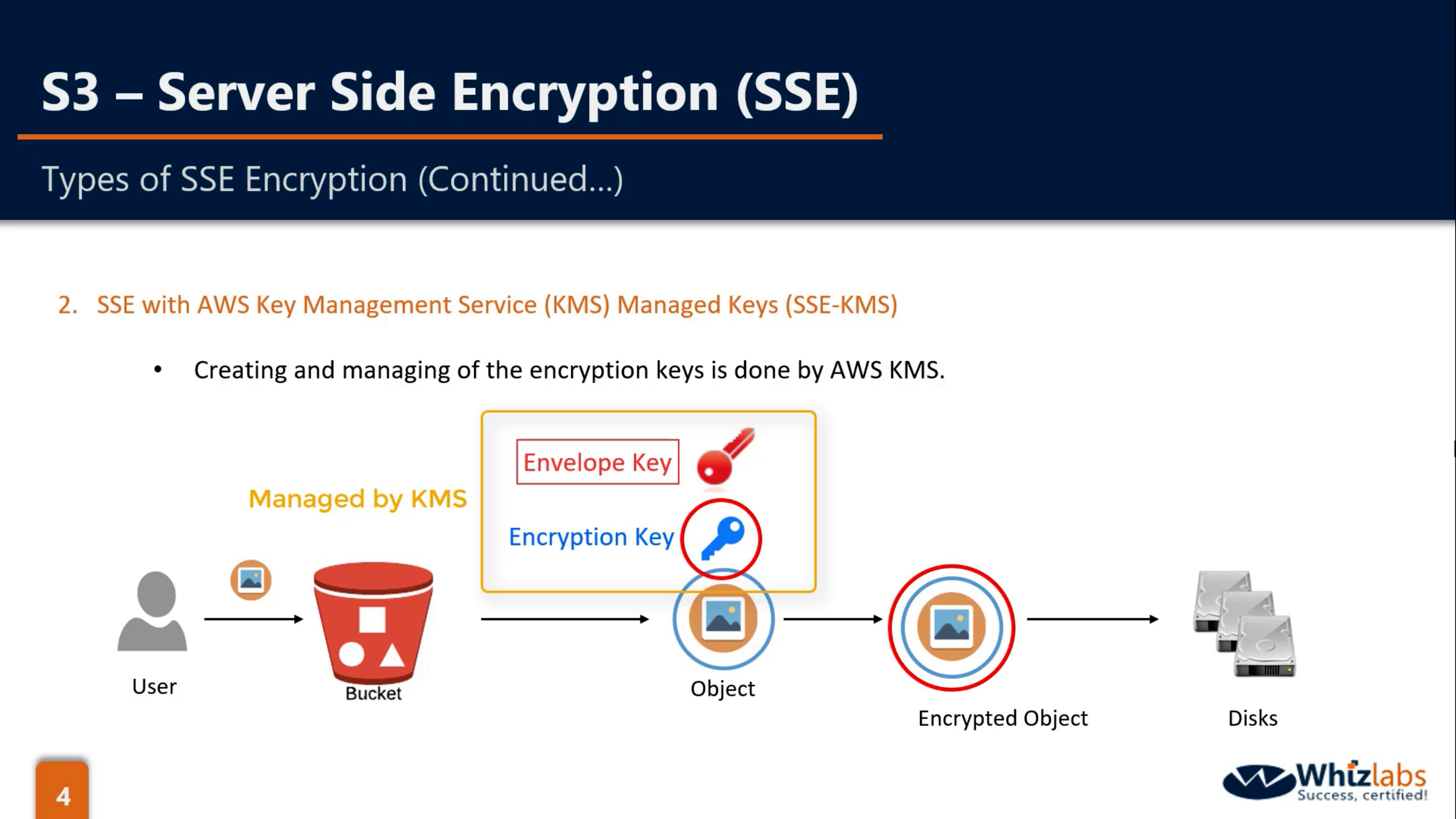

SSE-KMS

SSE-KMS vs. SSE-S3

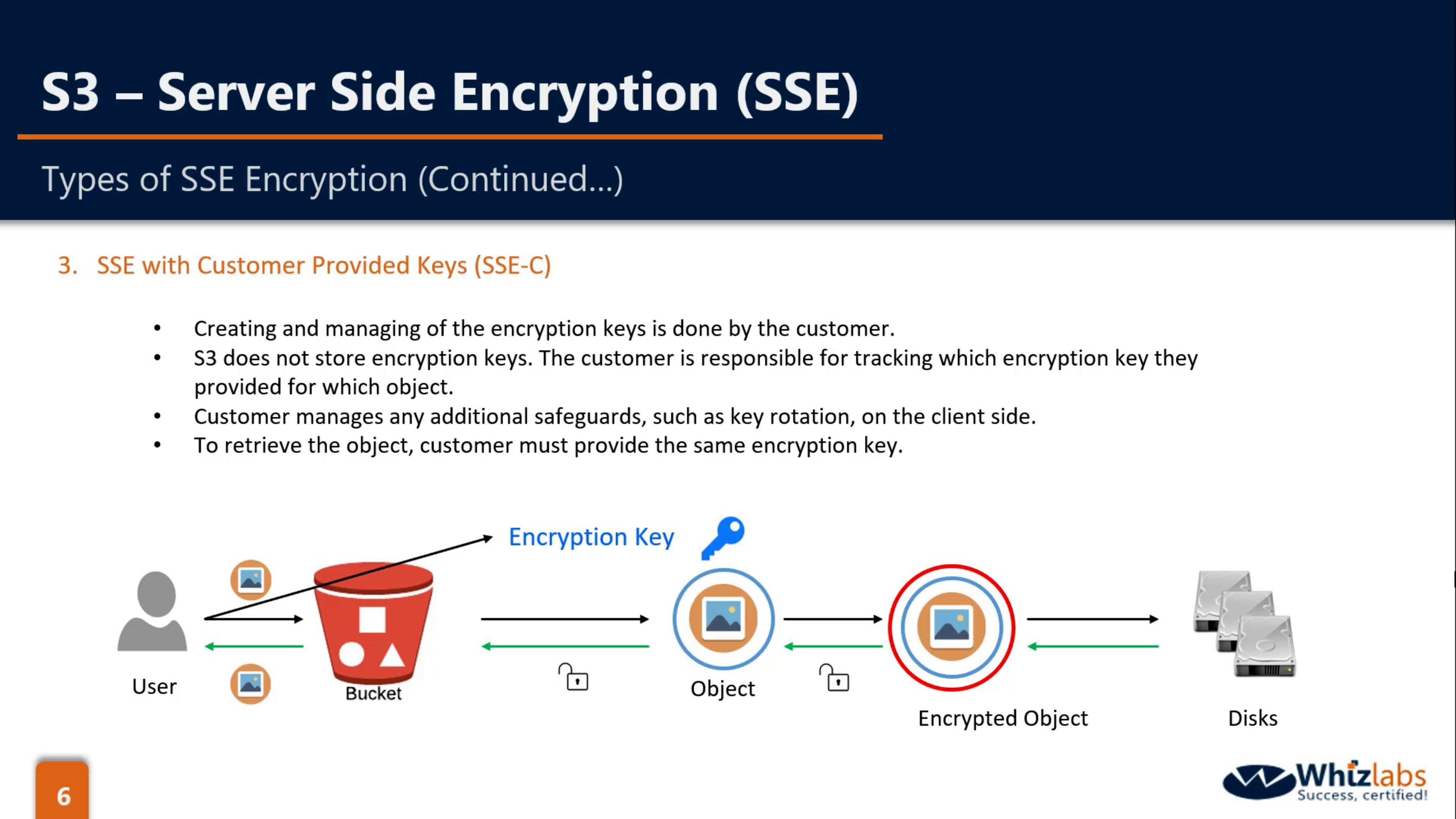

SSE-C

CSE-KMS

CSE-C

Summary

Bucket & Object Properties: Tag

- AWS Console Organization

- Cost Allocation

- Automation

- Access Control

An object can have up to 10 tags; each tag key maps to a single value.

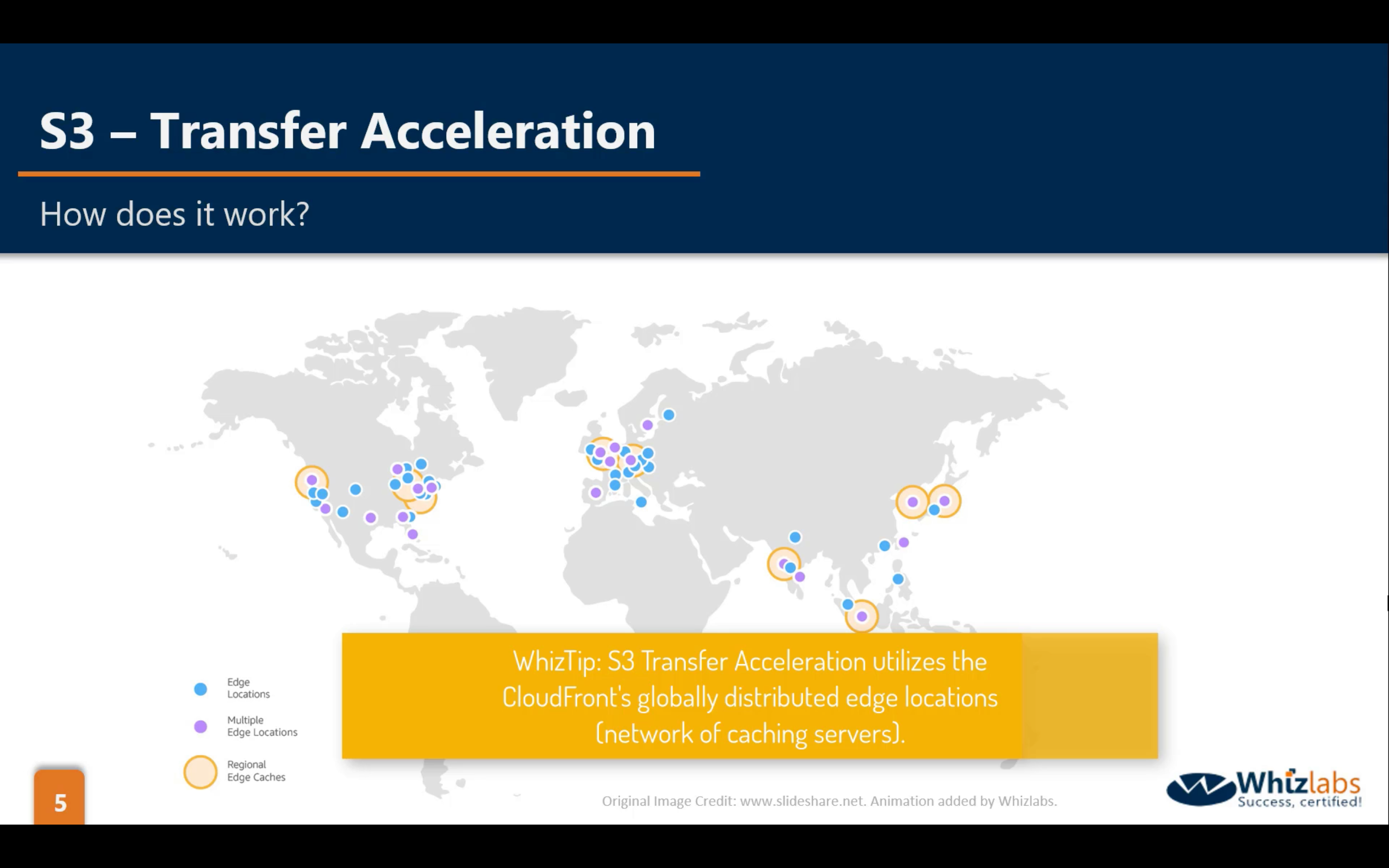

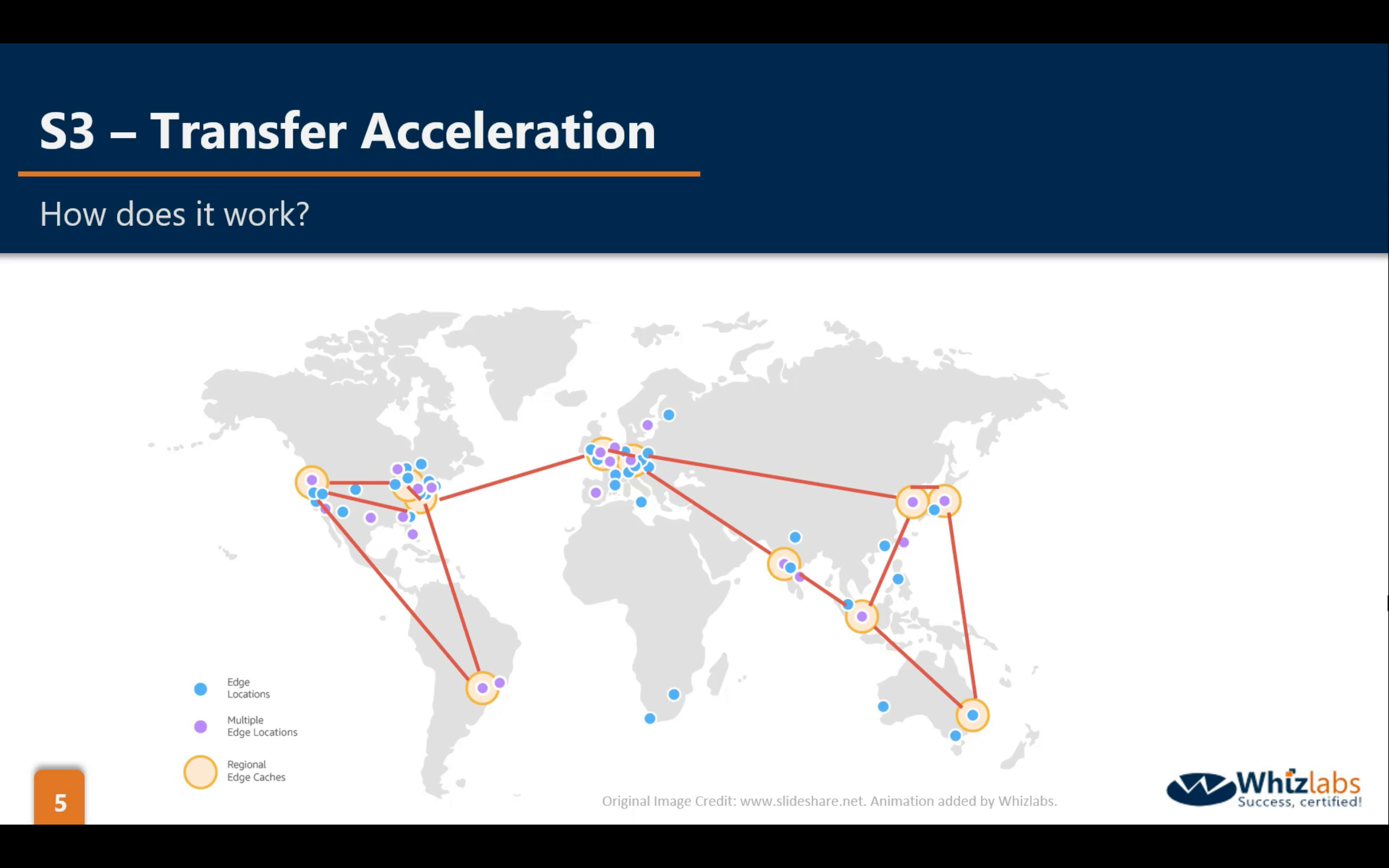

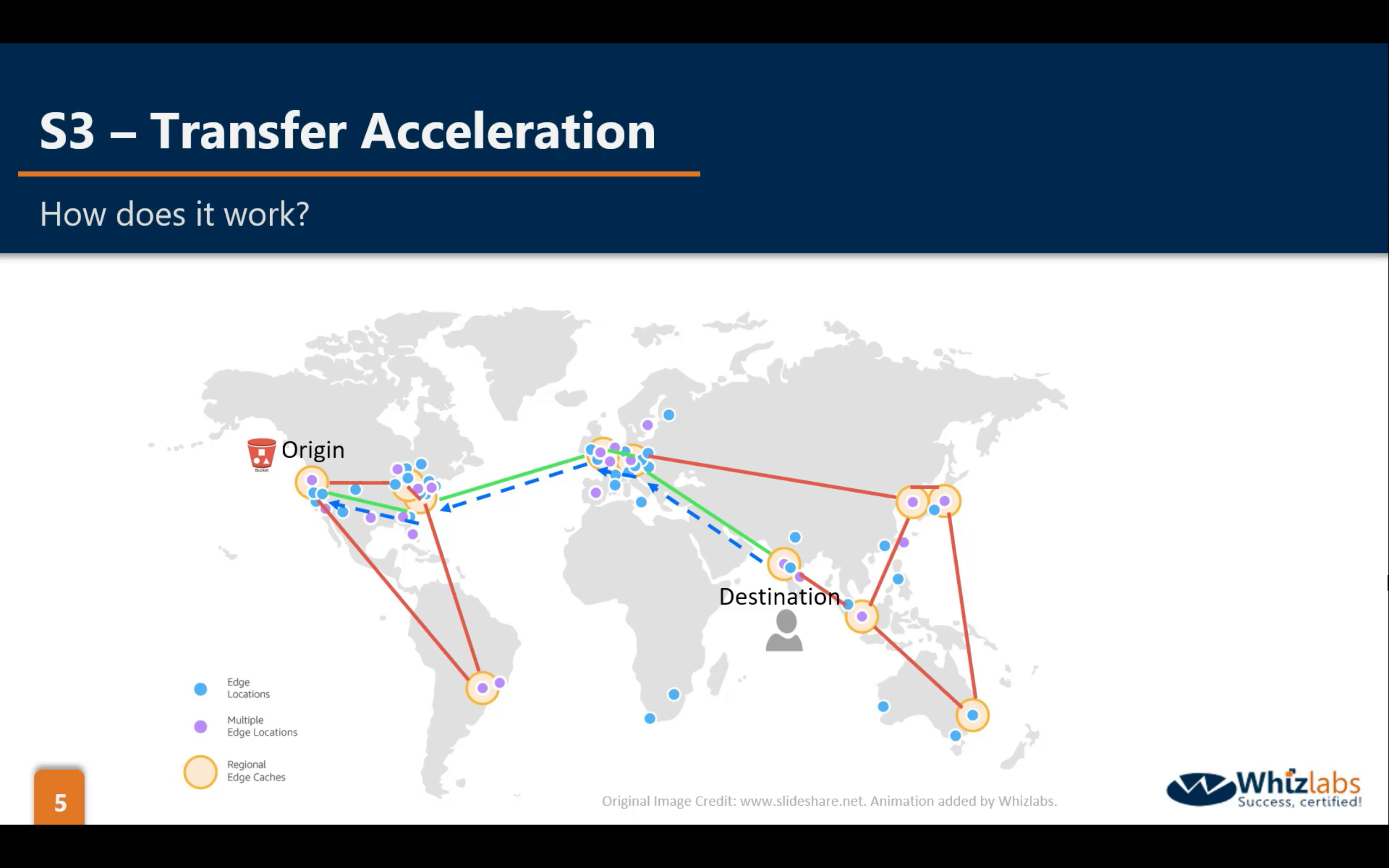

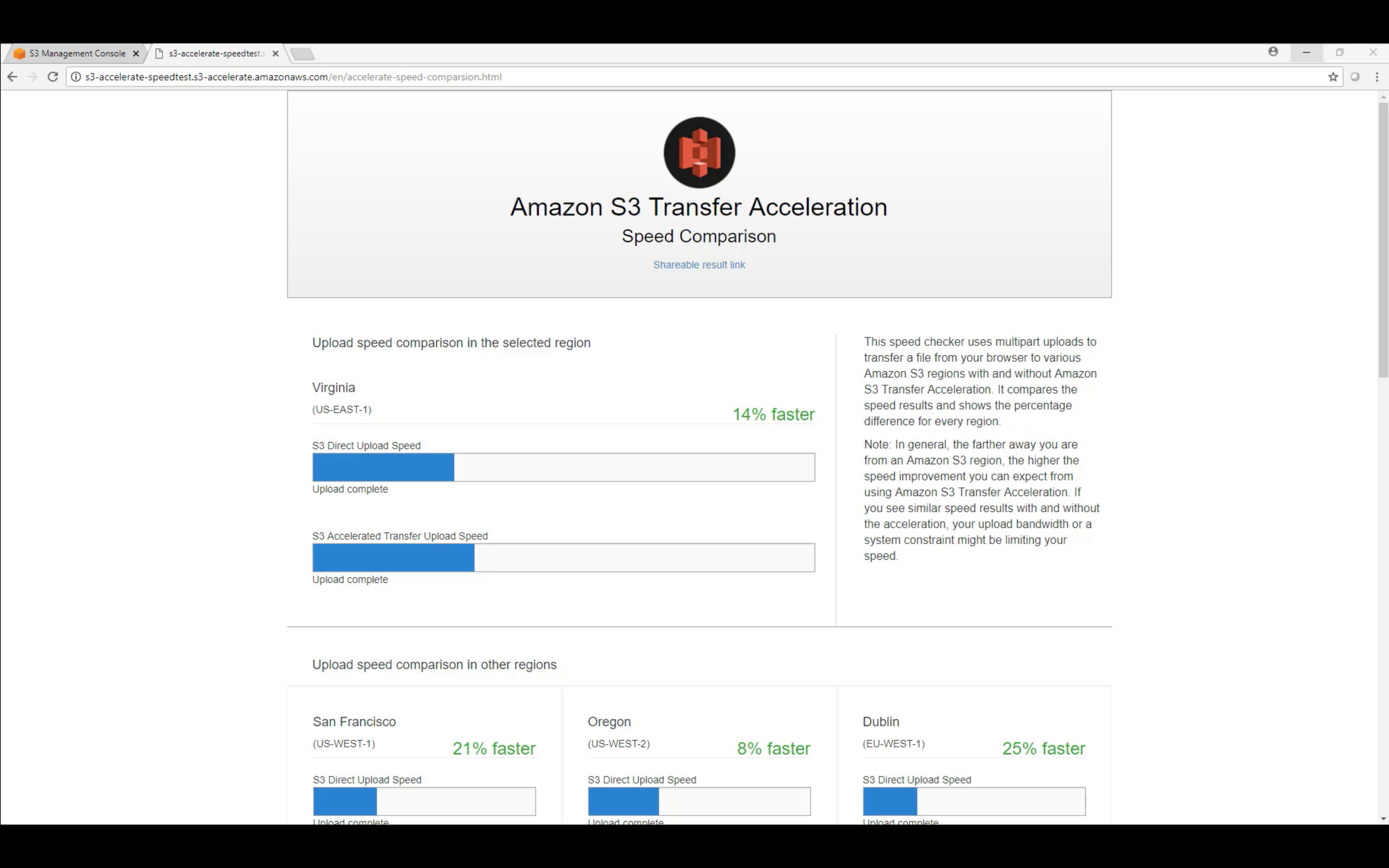

Bucket Properties: Transfer Acceleration (default disabled)

Transfer Acceleration routes transfers through CloudFront edge locations.

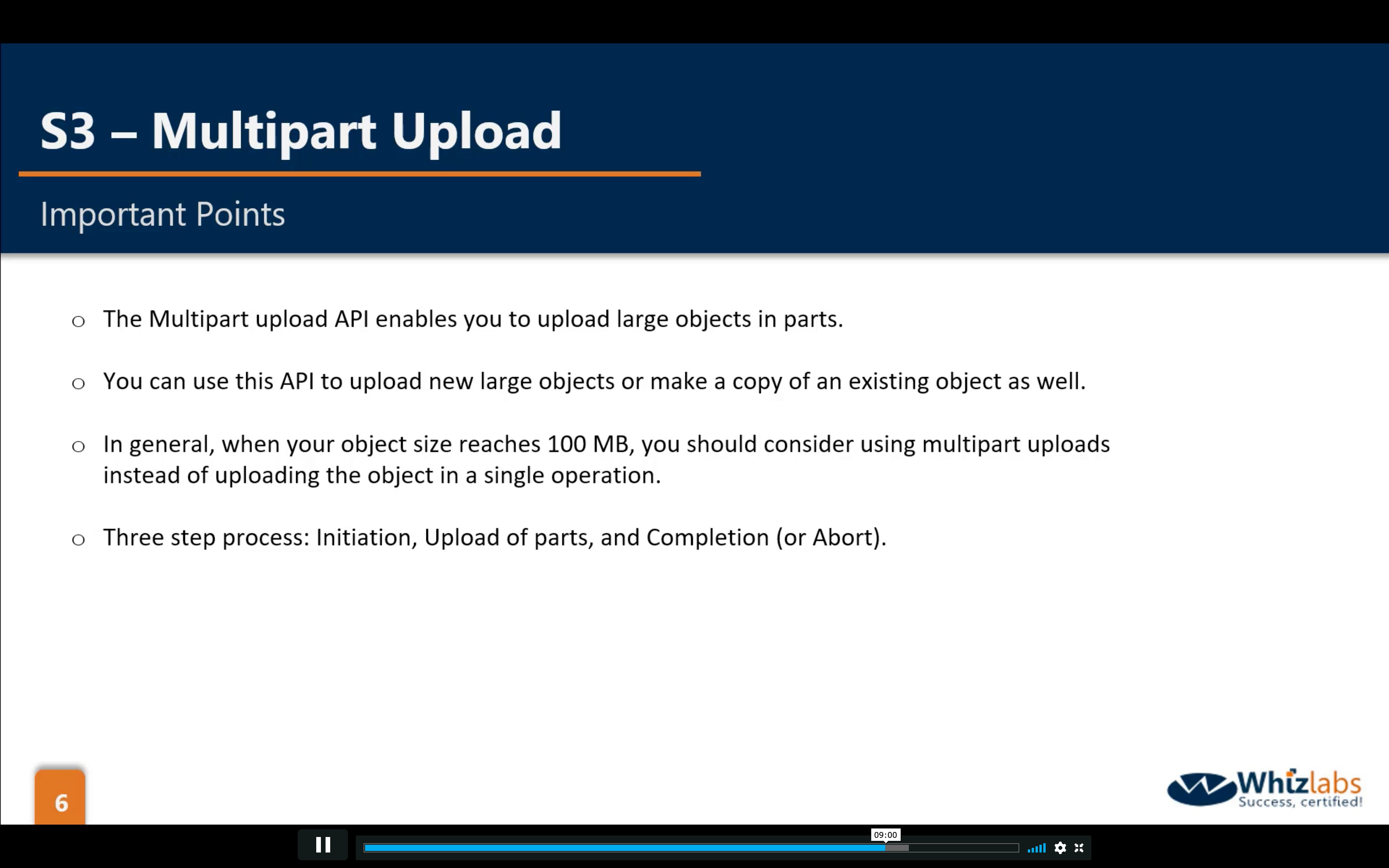

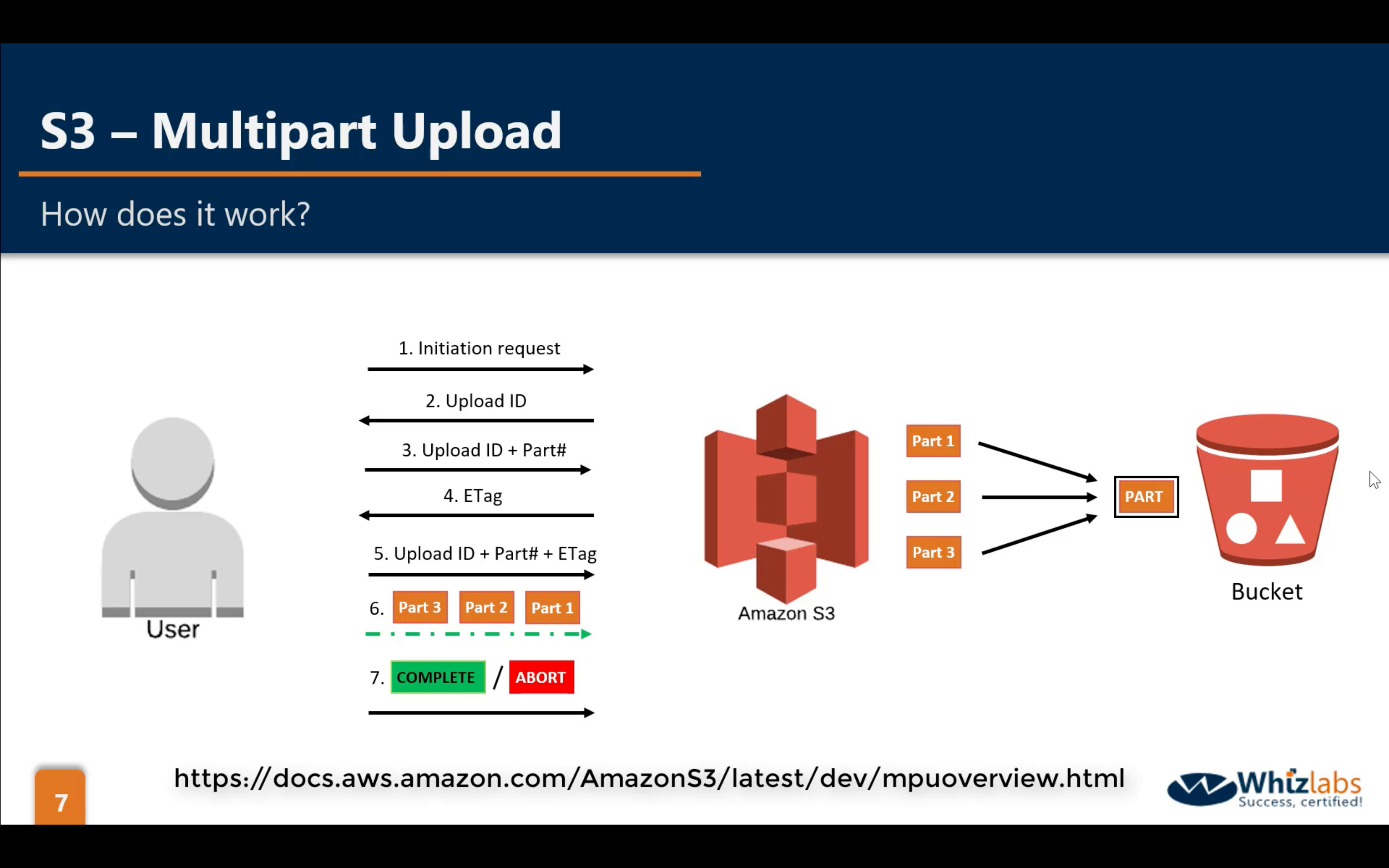

Multipart Upload (default disabled)

Single Largest PUT size: 5 GB

Summary

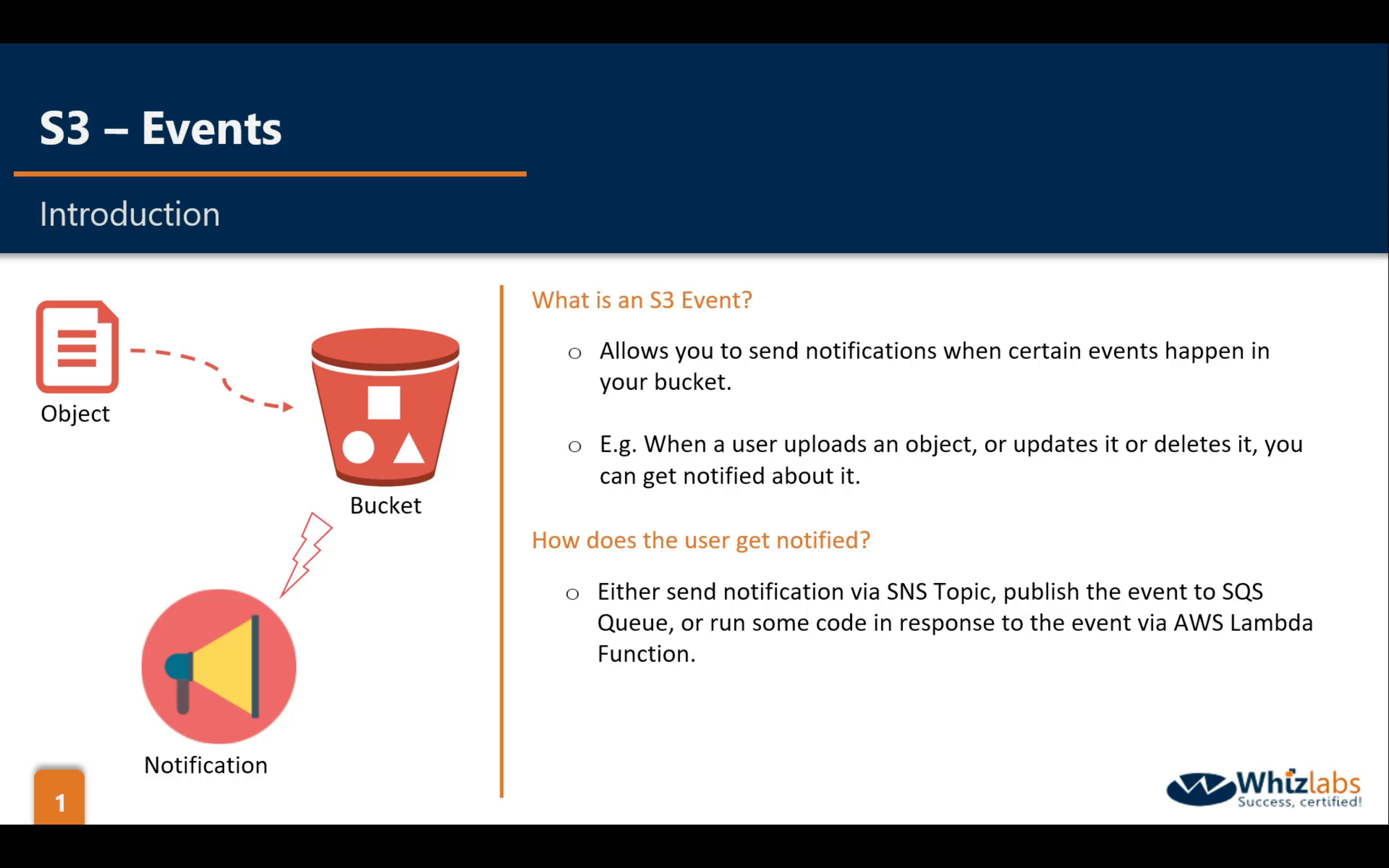

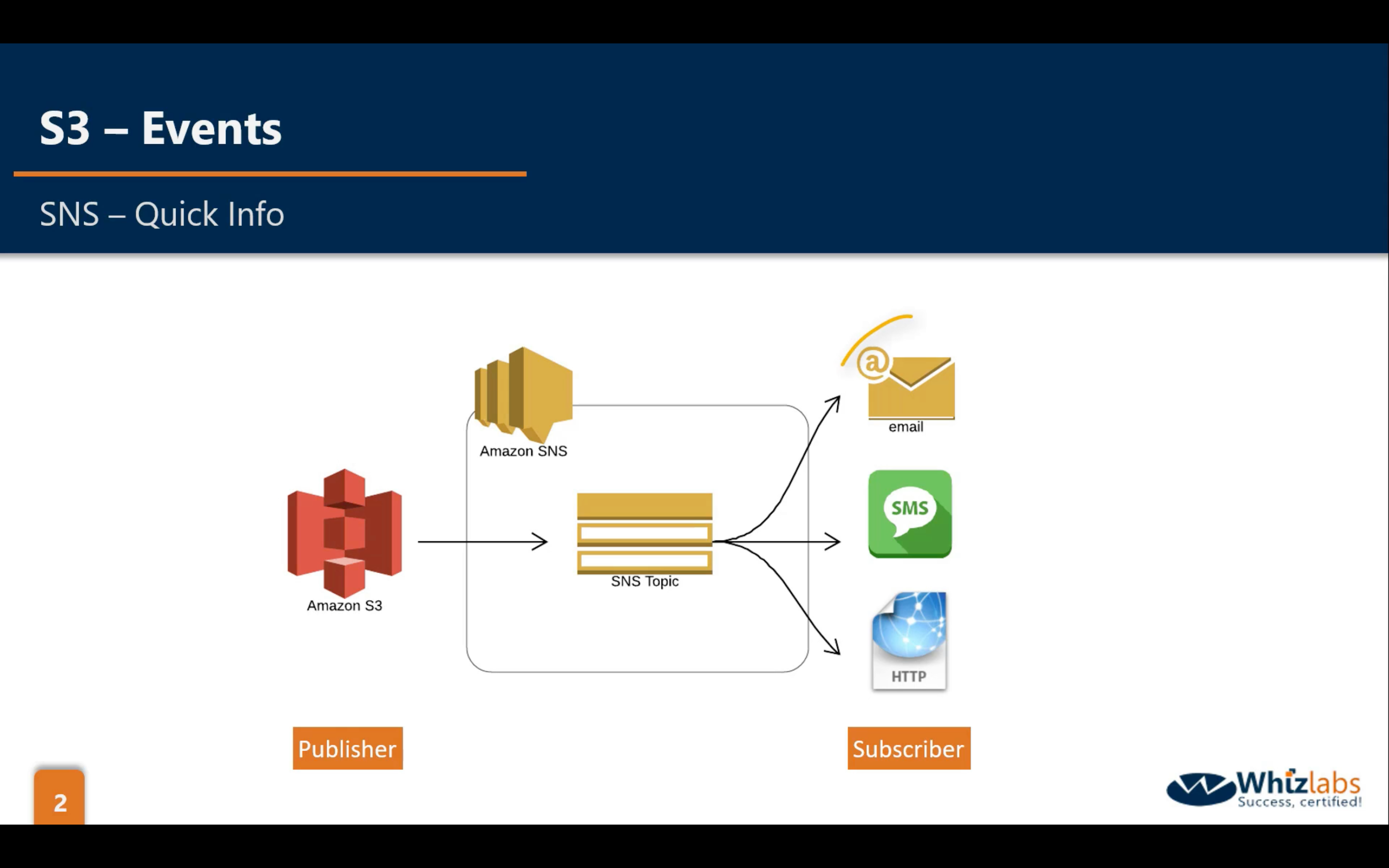

Bucket Properties: Events

Work with one of following services:

- SNS

- SQS

- Lambda

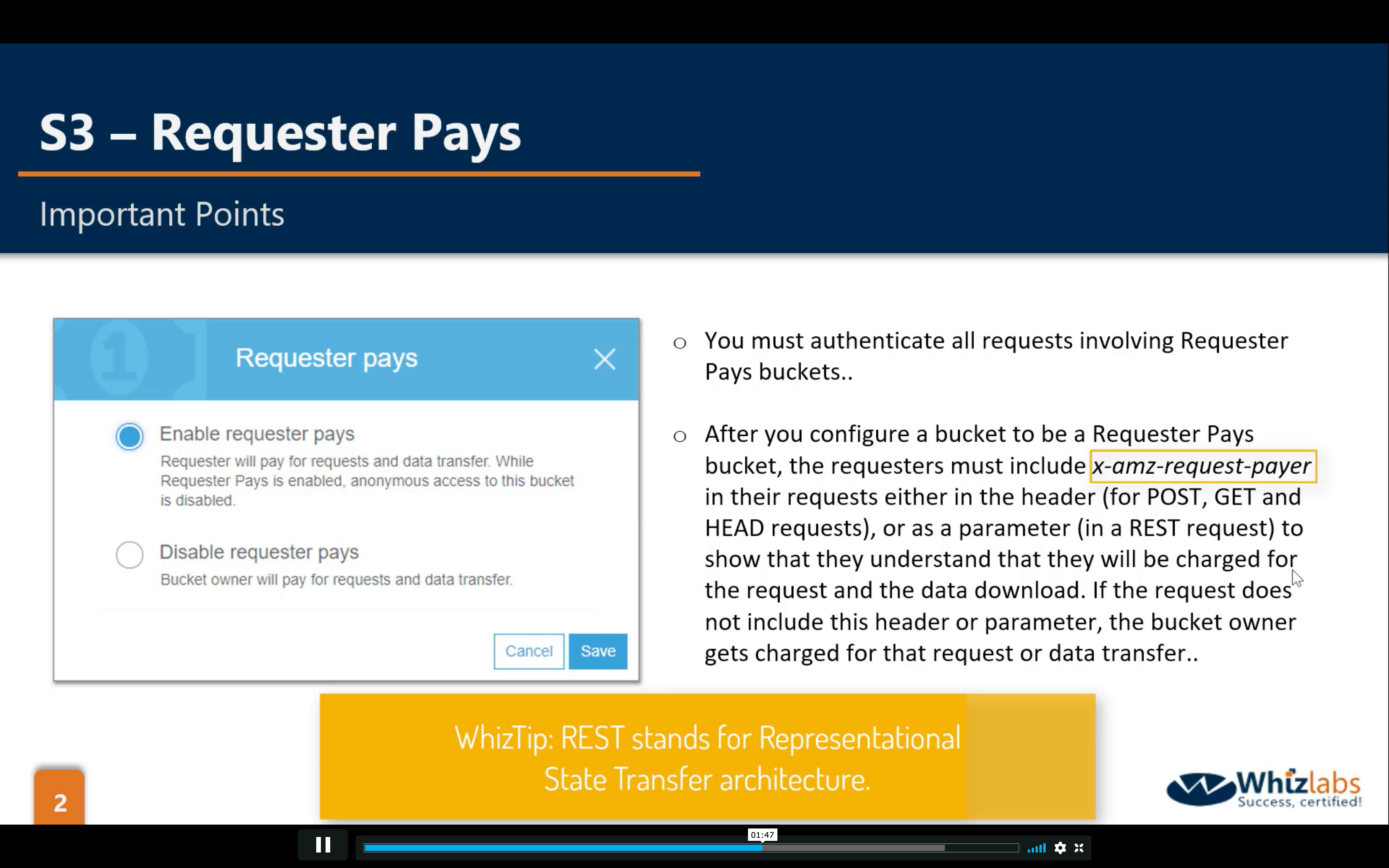

Bucket Properties: Requester Pays (default disabled)

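By default, the bucket owner is charged for the storage and data transfer of an S3 bucket. With Requester Pays enabled, the requester pays for requests and data transfer, while the owner still pays for storage.

- The requester MUST include x-amz-request-payer in the request, either in the header or as a request parameter, to trigger Requester Pays. Without it, S3 rejects the request and the bucket owner is charged for that request.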

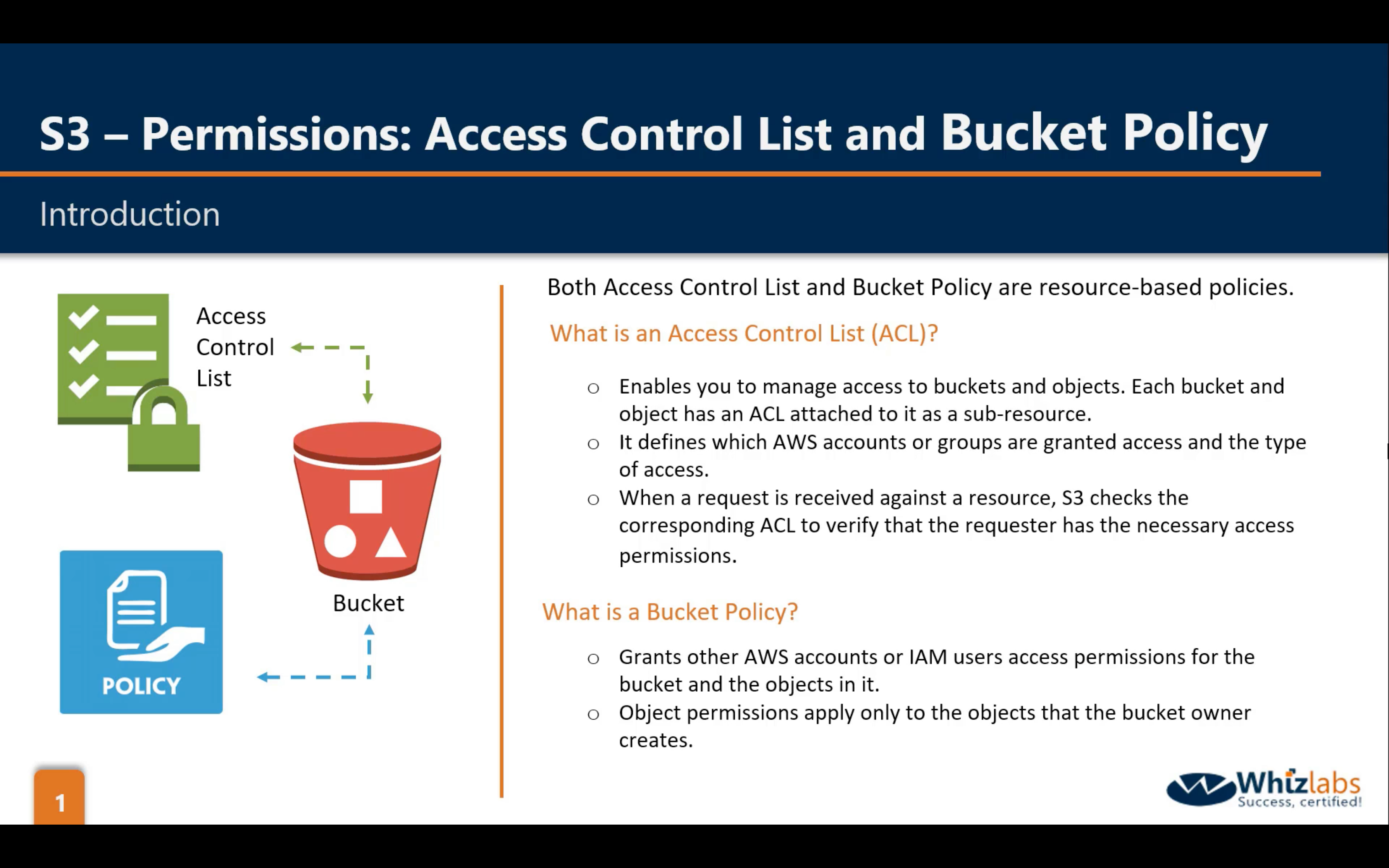

Bucket Permissions: ACL & Bucket Policy, Object Permission: ACL

There is one Bucket Policy working on Bucket level.

- If the AWS account that owns the object also owns the bucket, then it can write a bucket policy to manage the object permissions.

- If the AWS account that owns the object wants to grant permission to a user in its account, it can use a user policy.

- If an AWS account that owns a bucket wants to grant permission to users in its account, it can use either a bucket policy or a user policy. But in the following scenarios, you will need to use a bucket policy.

| Bucket Owner | Object Owner | User in its Account | Policy or ACL |
|---|---|---|---|
| 1 | 1 | 2 | Bucket Policy |
| 0 | 1 | 1 | User Policy |
| 0 | 1 | 0 | Object ACL |
| 1 | 1 | 1 | Bucket or User Policy |

There are two kinds of ACL:

- Bucket ACL: The ONLY recommended use case for the bucket ACL is to grant write permission to the Amazon S3 Log Delivery group to write access log objects to your bucket (see Amazon S3 server access logging).

- Object ACL

- An object ACL is the only way to manage access to objects not owned by the bucket owner

- Permissions vary by object and you need to manage permissions at the object level

- Object ACLs control only object-level permissions

Guidelines for using the available access policy options

Note: A bucket owner CANNOT grant permissions on objects it does not own. For example, a bucket policy granting object permissions applies only to objects owned by the bucket owner. However, the bucket owner, who pays the bills, can write a bucket policy to DENY access to any objects in the bucket, regardless of who owns it. The bucket owner can also delete any objects in the bucket.
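As an illustration of the DENY case in the note above, the sketch below builds a bucket policy document that denies reads on every object under a prefix, whoever owns the object. The bucket name, prefix, statement ID, and the `deny_prefix_policy` helper are all hypothetical; the code only produces JSON and does not call AWS.

```python
import json


def deny_prefix_policy(bucket_name: str, prefix: str) -> str:
    """Return a bucket policy (JSON text) denying s3:GetObject under a prefix."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyReadsUnderPrefix",  # hypothetical statement ID
                "Effect": "Deny",
                "Principal": "*",               # applies to every caller
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/{prefix}*",
            }
        ],
    }
    return json.dumps(policy, indent=2)


print(deny_prefix_policy("example-bucket", "private/"))
```

Because an explicit deny applies regardless of object ownership, this is the kind of statement a bucket owner can use even for objects uploaded by other accounts.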

IAM defines which roles or users can access specific services.

ACLs & Bucket Policies define which resources can be accessed by specific users.

When to use IAM policies vs. S3 policies

Use IAM policies if:

- You need to control access to AWS services other than S3. IAM policies will be easier to manage since you can centrally manage all of your permissions in IAM, instead of spreading them between IAM and S3.

- You have numerous S3 buckets each with different permissions requirements. IAM policies will be easier to manage since you don’t have to define a large number of S3 bucket policies and can instead rely on fewer, more detailed IAM policies.

- You prefer to keep access control policies in the IAM environment.

Use S3 bucket policies if:

- You want a simple way to grant cross-account access to your S3 environment, without using IAM roles.

- Your IAM policies bump up against the size limit (up to 2 KB for users, 5 KB for groups, and 10 KB for roles). S3 supports bucket policies of up to 20 KB.

- You prefer to keep access control policies in the S3 environment.

What about S3 ACLs?

As a general rule, AWS recommends using S3 bucket policies or IAM policies for access control. S3 ACLs are a legacy access control mechanism that predates IAM. However, if you already use S3 ACLs and you find them sufficient, there is no need to change.

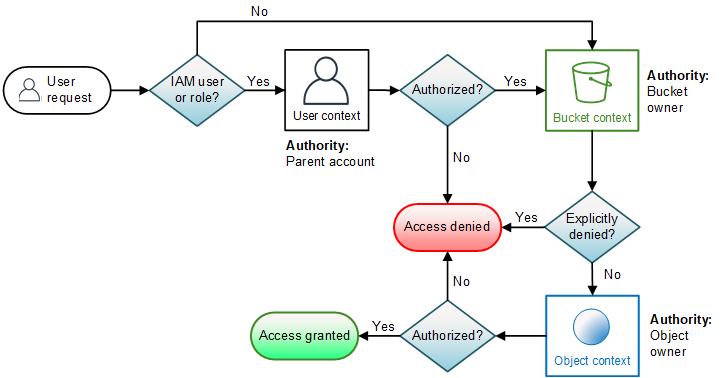

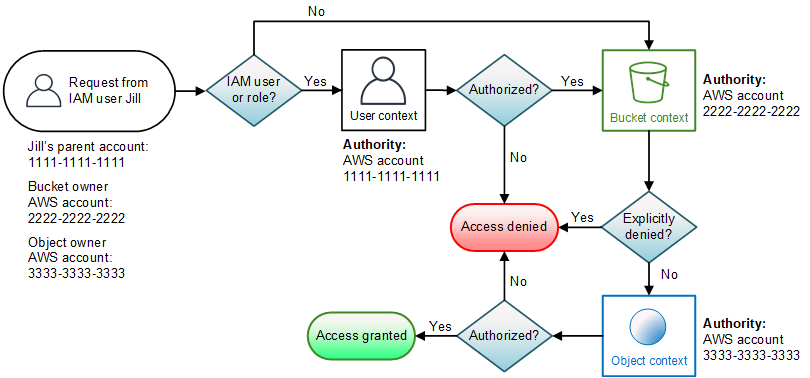

So there are two ways to access the object:

If IAM enabled:

IAM -> Bucket context -> Object context

If IAM disabled:

Bucket context -> Object context

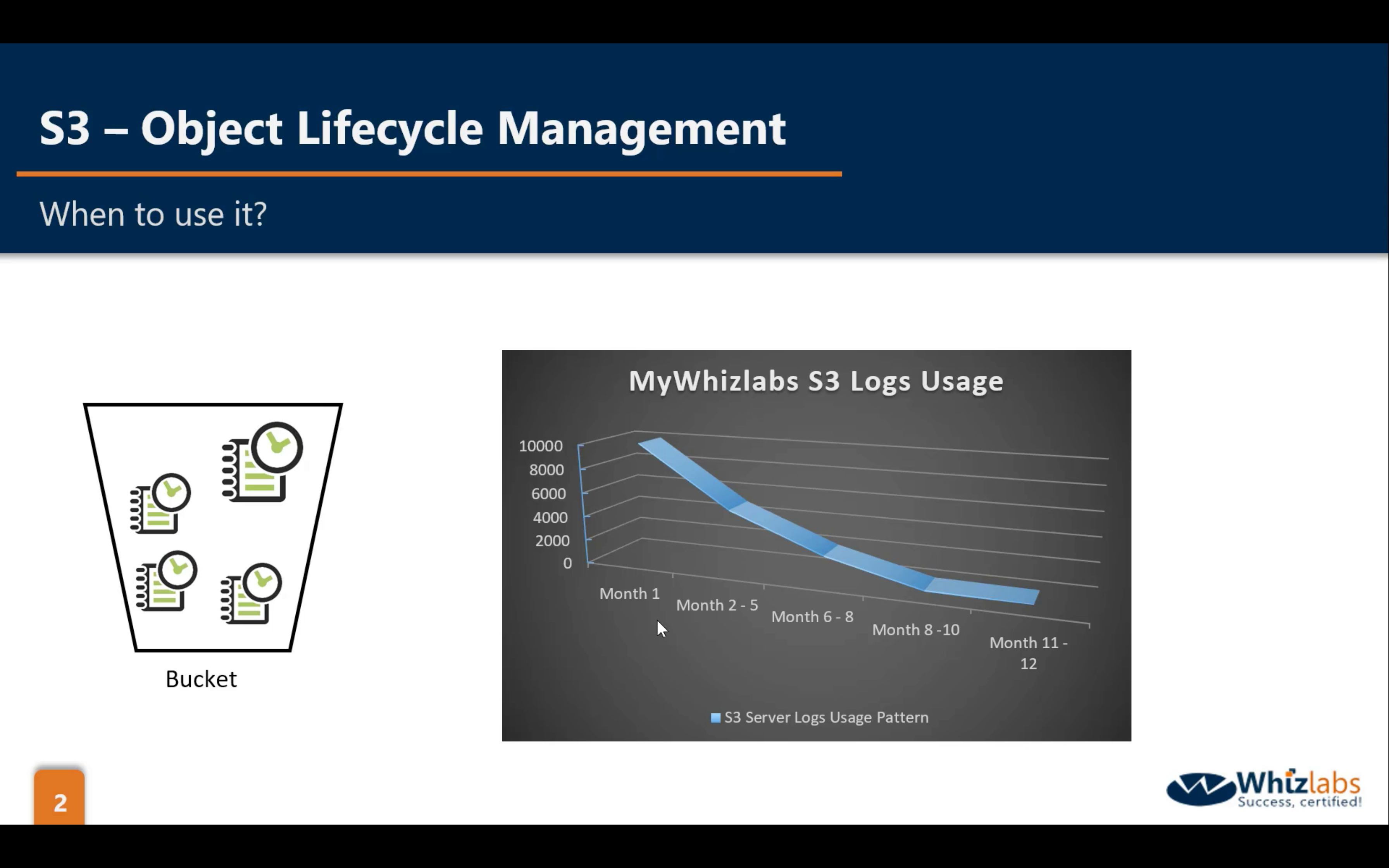

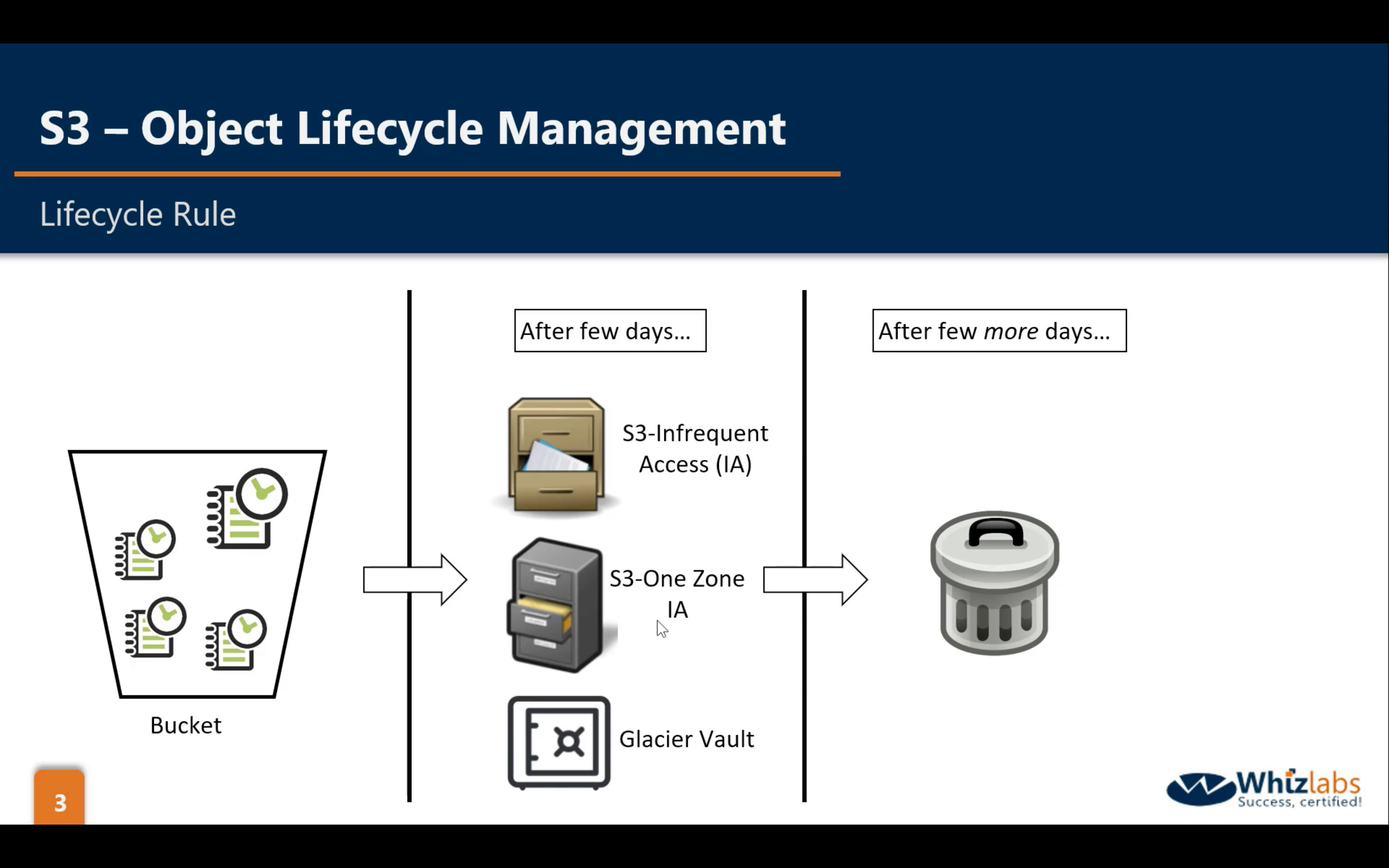

Bucket Management: OLM (Object Lifecycle Management)

- Change Object storage class (at least 30 days)

- Remove the objects that have delete marker (at least 1 day)

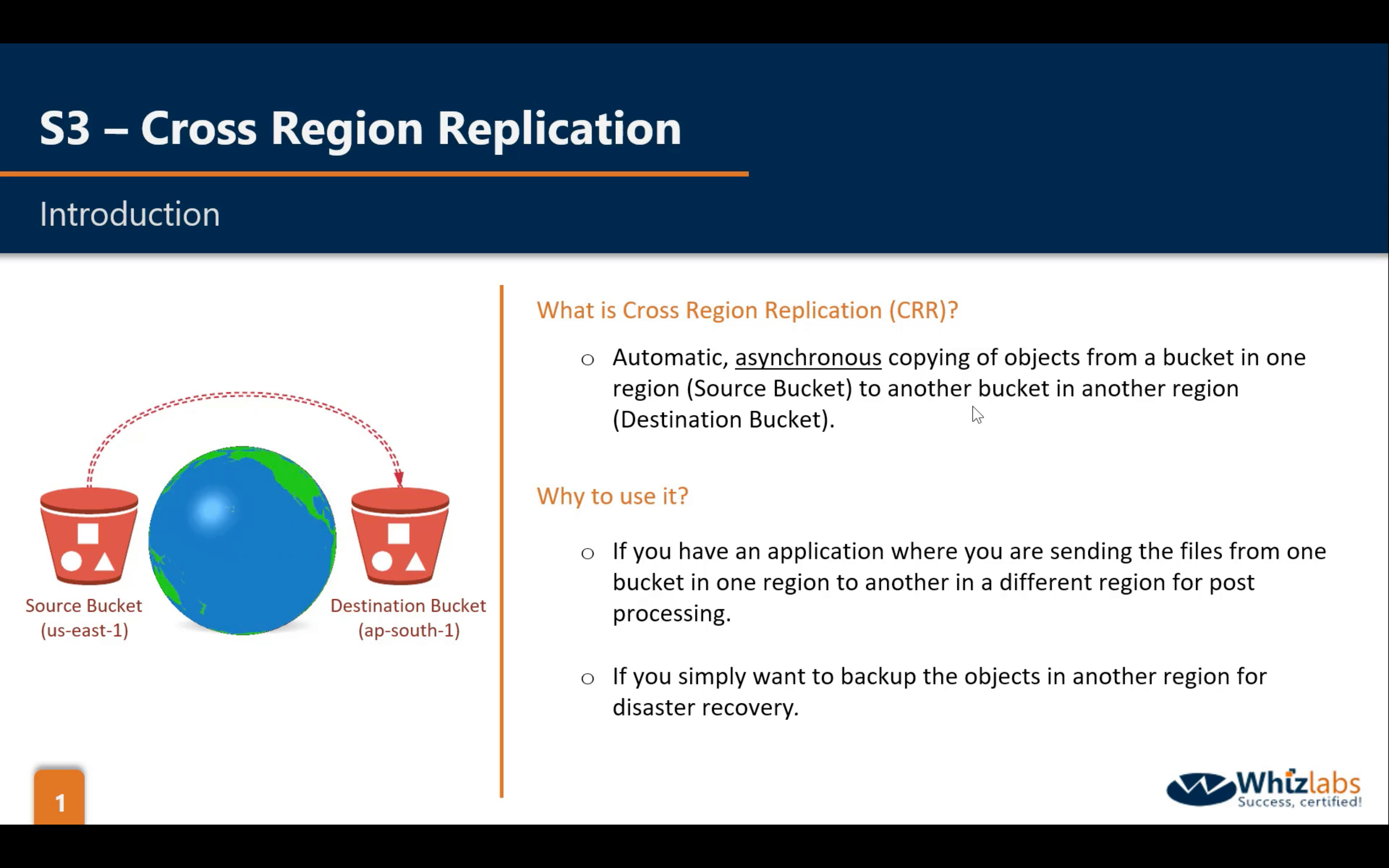

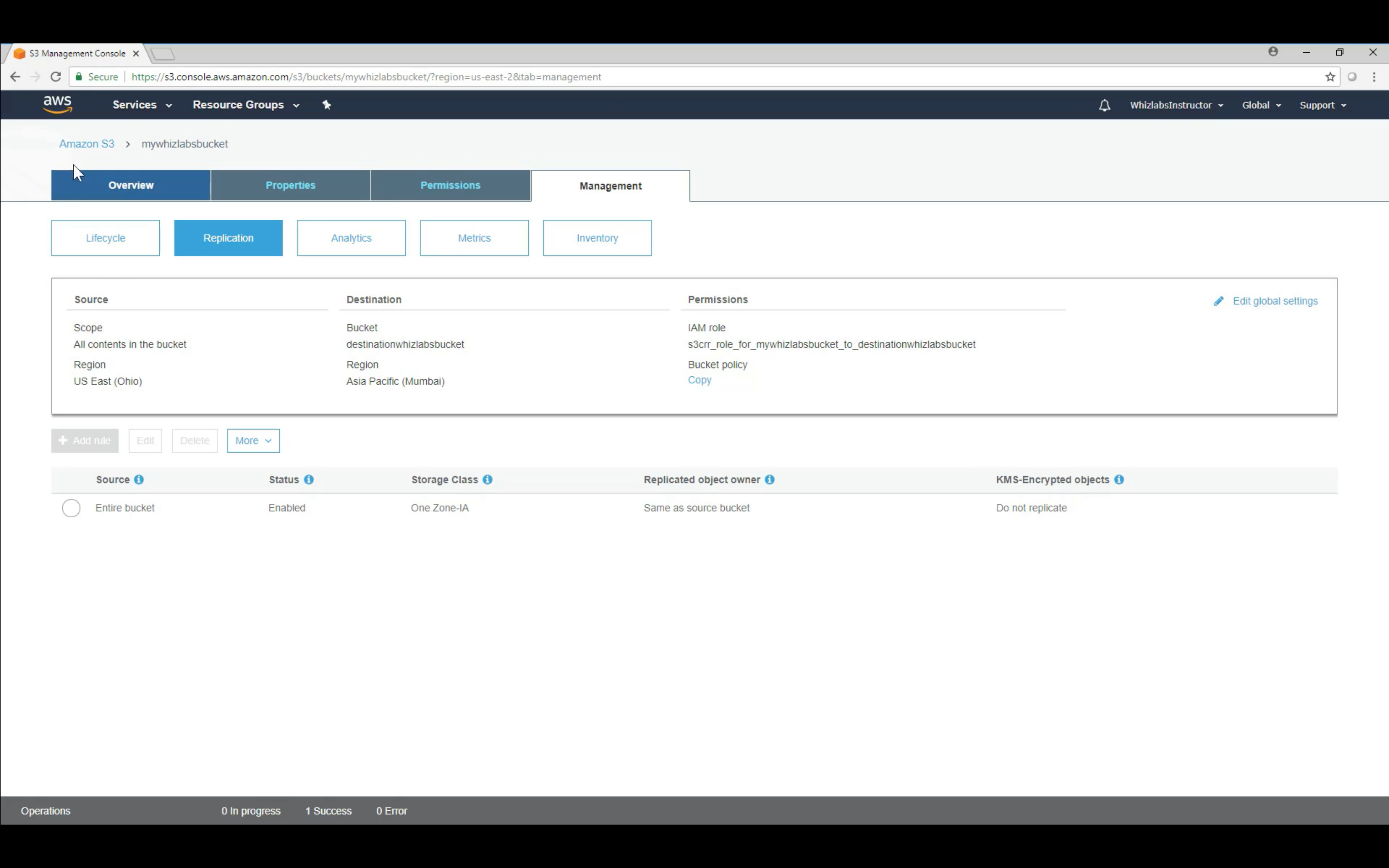

Bucket Permissions: CRR (Cross Region Replication)

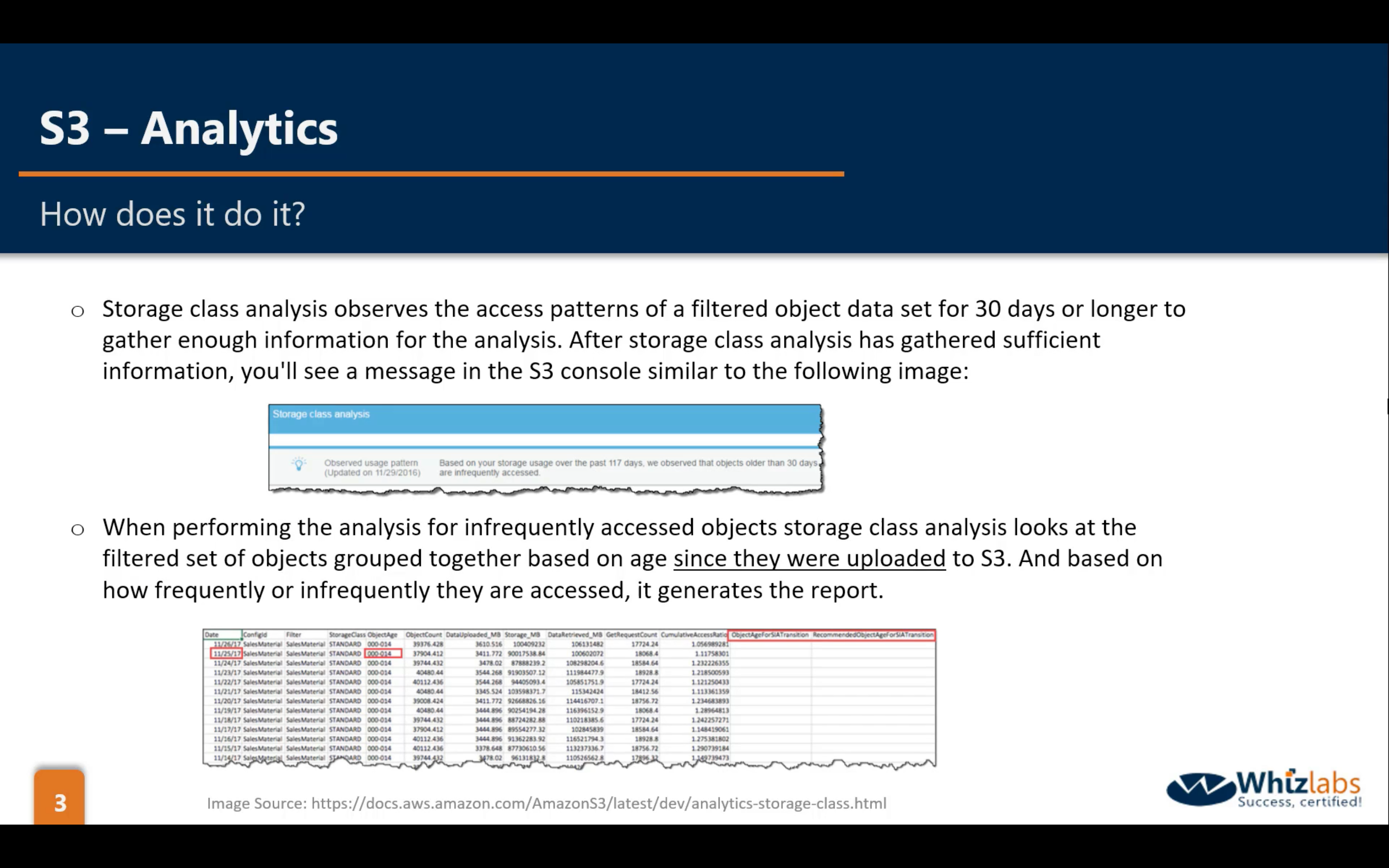

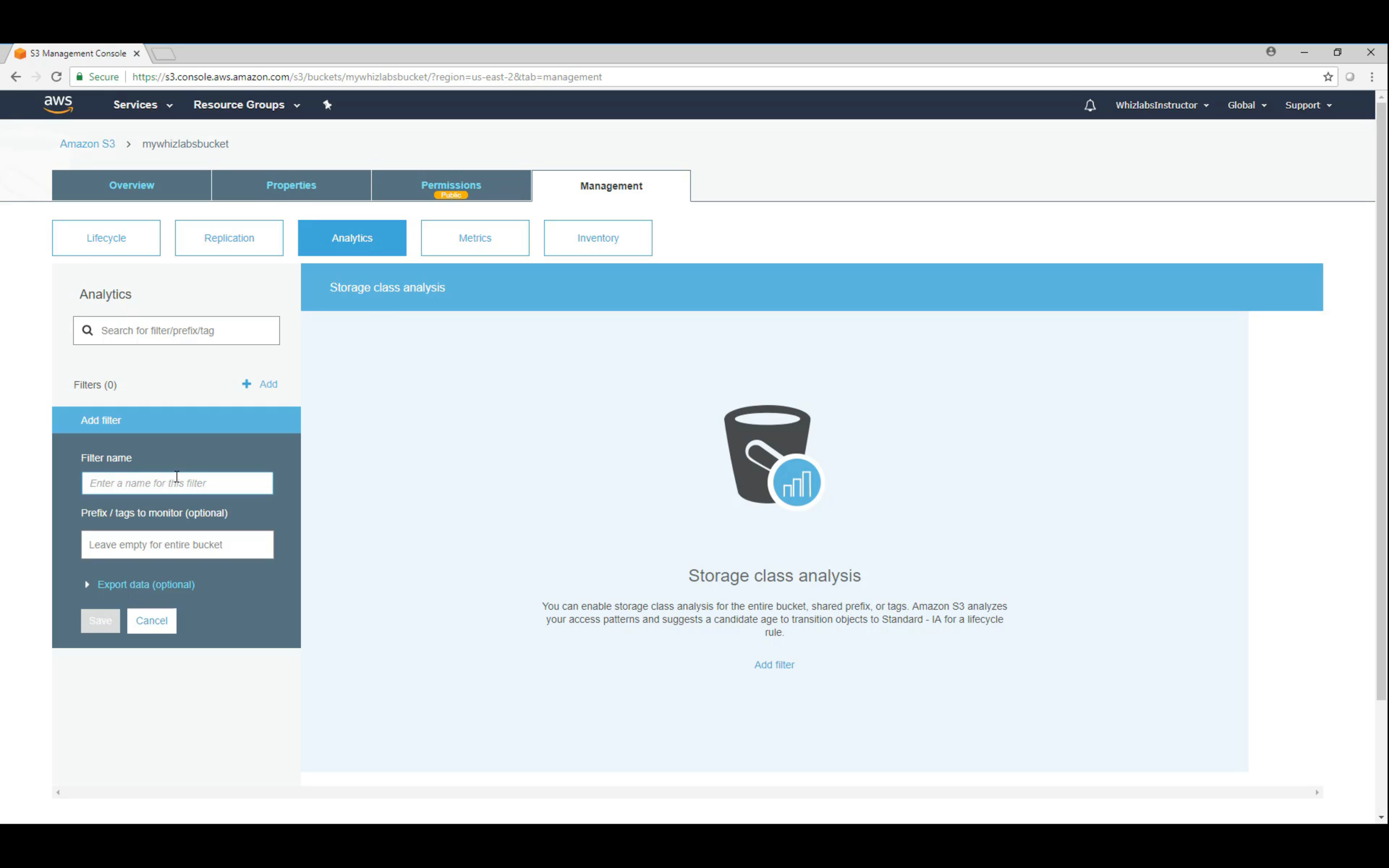

Bucket Management: Analytics, Metrics and Inventory

Analytics: statistical data to improve policies

Metrics: CloudWatch Metrics for S3 help you improve the performance

Inventory: helps you report on the replication and encryption

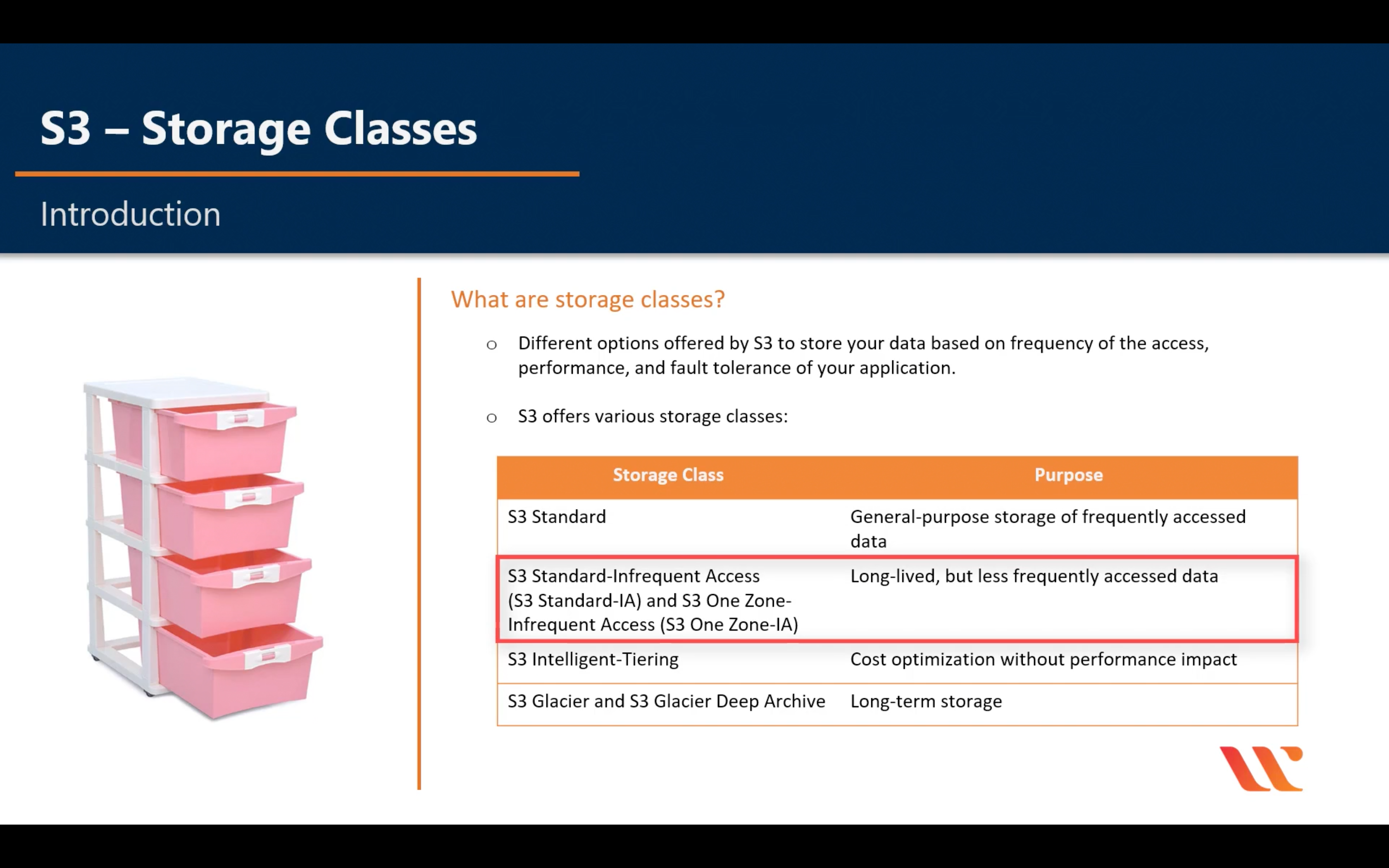

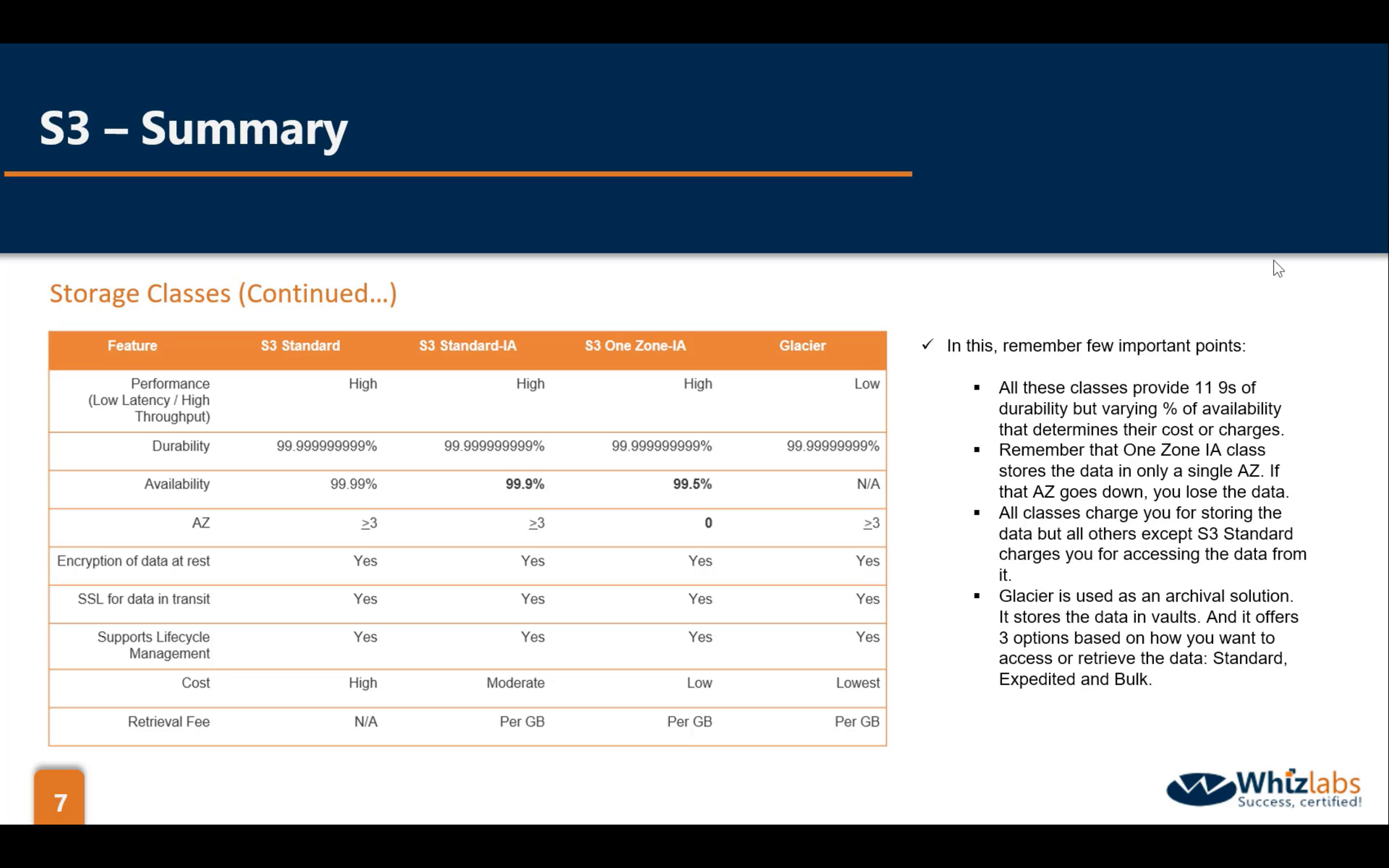

Object Properties: Storage Classes

Single file storage range: 0B ~ 5TB

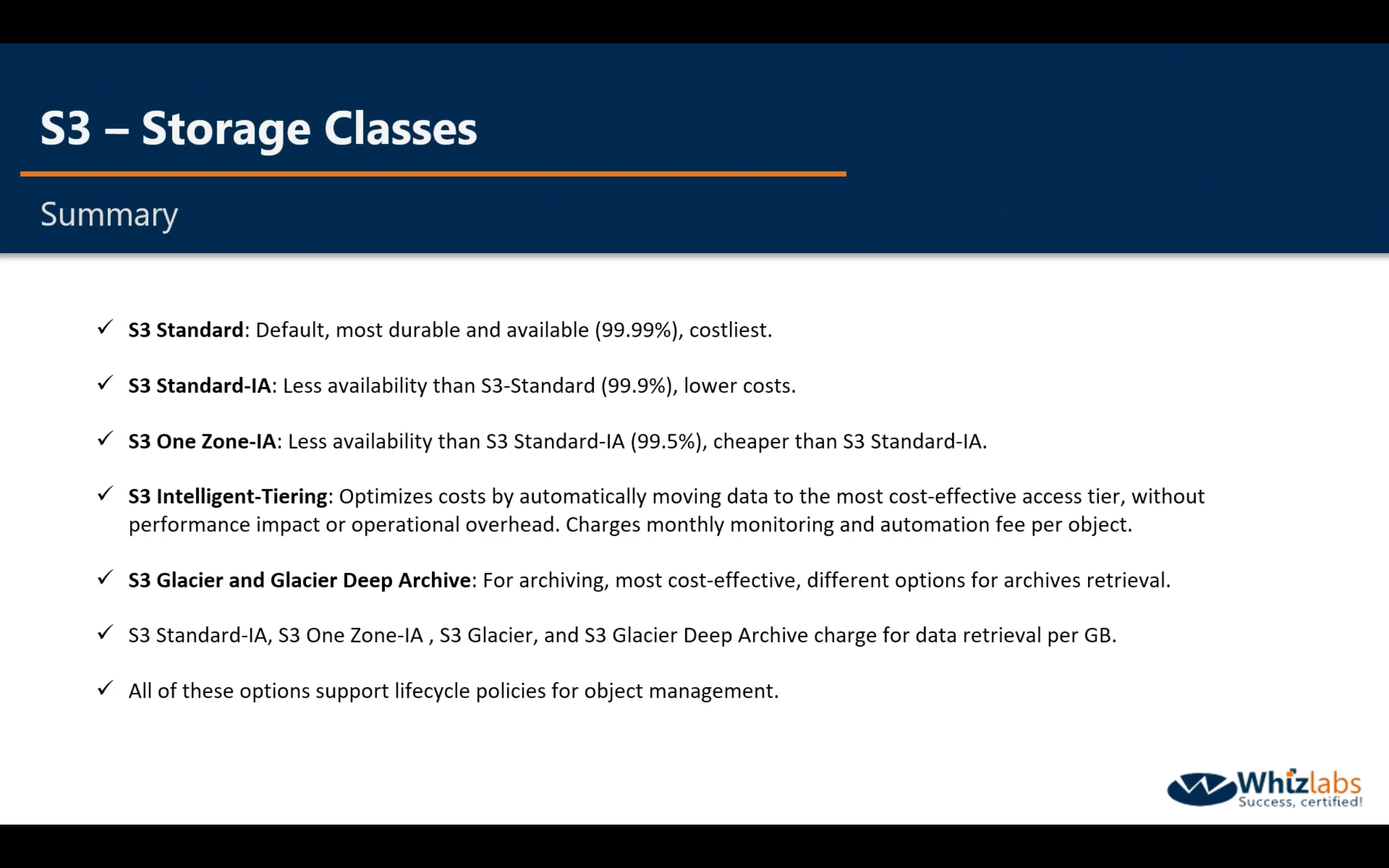

Standard

Standard-IA

One Zone-IA

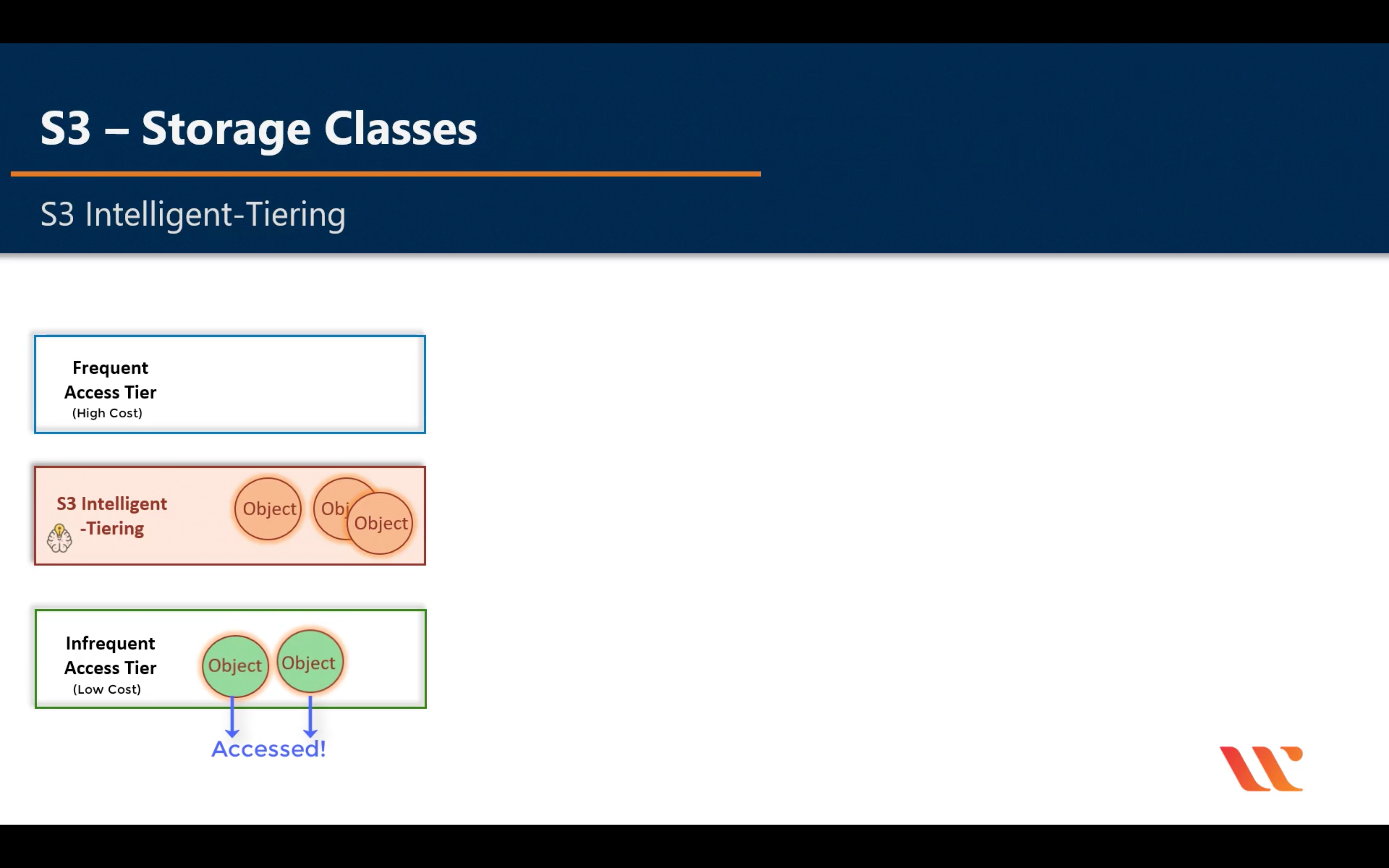

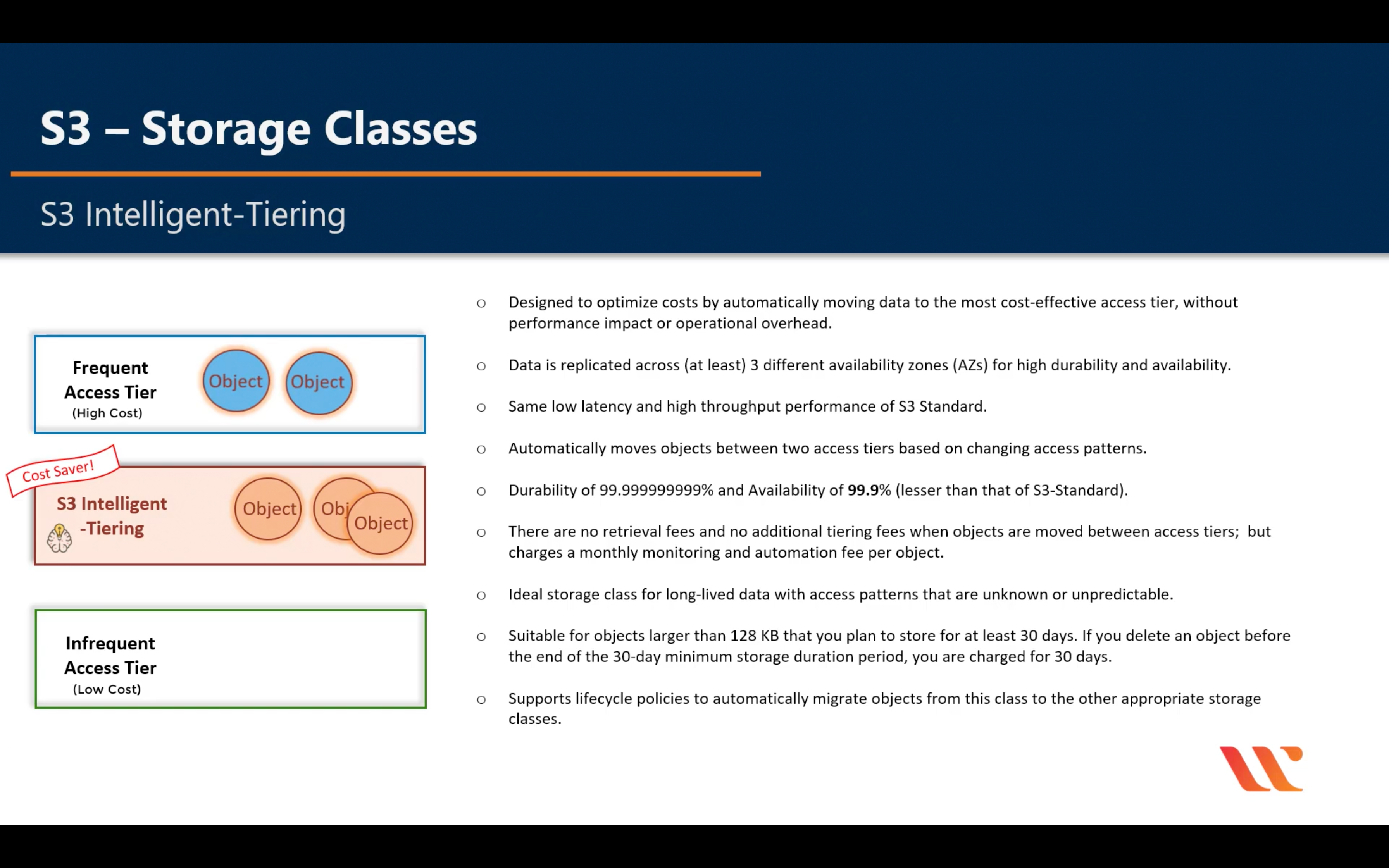

Intelligent-Tiering

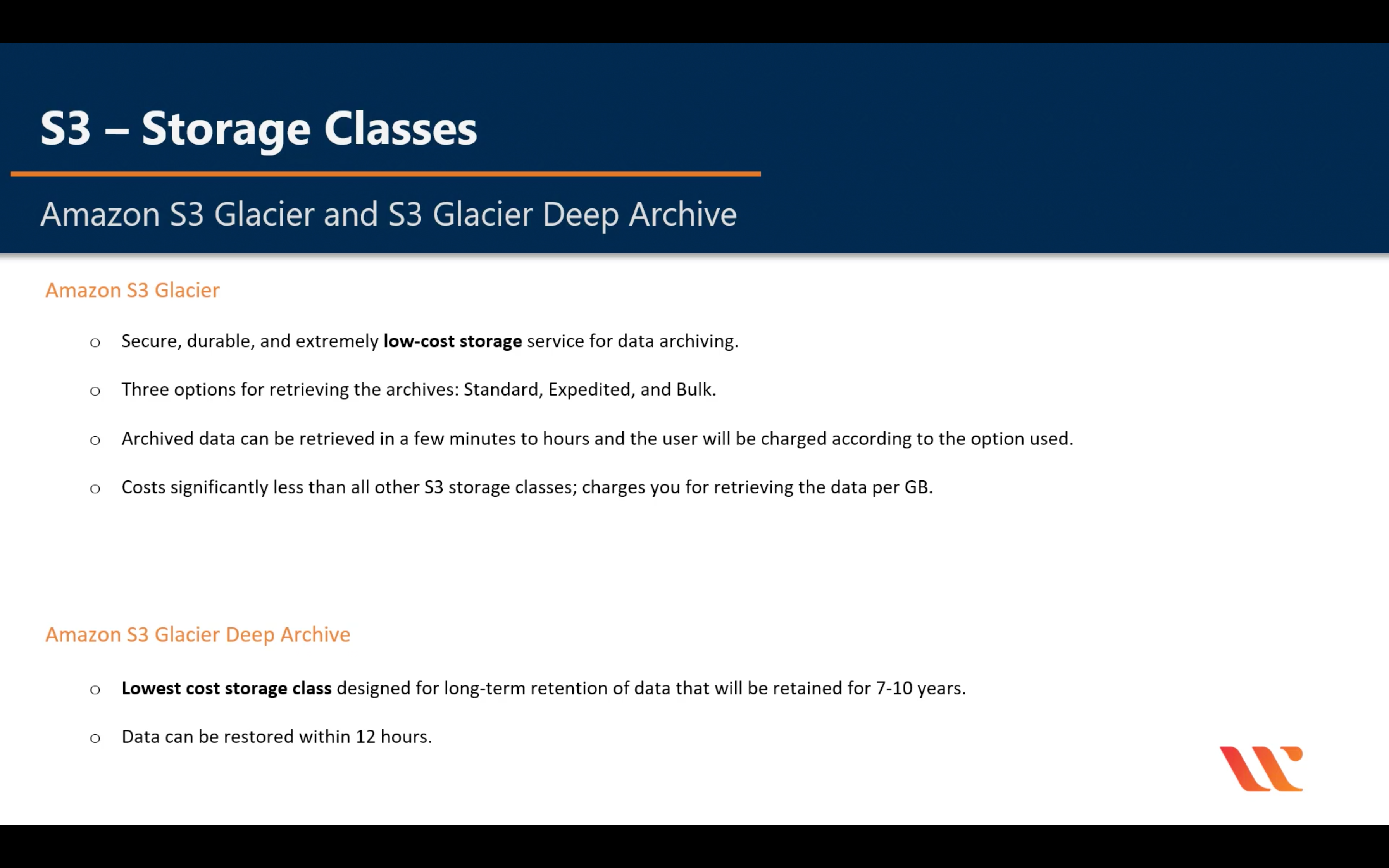

Glacier & Glacier Deep Archive

Comparison

| | S3 Standard | S3 Intelligent-Tiering* | S3 Standard-IA | S3 One Zone-IA† | S3 Glacier | S3 Glacier Deep Archive |
|---|---|---|---|---|---|---|
| Designed for durability | 99.999999999% (11 9’s) | 99.999999999% (11 9’s) | 99.999999999% (11 9’s) | 99.999999999% (11 9’s) | 99.999999999% (11 9’s) | 99.999999999% (11 9’s) |
| Designed for availability | 99.99% | 99.9% | 99.9% | 99.5% | 99.99% | 99.99% |
| Availability SLA | 99.9% | 99% | 99% | 99% | 99.9% | 99.9% |
| Availability Zones | ≥3 | ≥3 | ≥3 | 1 | ≥3 | ≥3 |
| Minimum capacity charge per object | N/A | N/A | 128KB | 128KB | 40KB | 40KB |
| Minimum storage duration charge | N/A | 30 days | 30 days | 30 days | 90 days | 180 days |
| Retrieval fee | N/A | N/A | per GB retrieved | per GB retrieved | per GB retrieved | per GB retrieved |
| First byte latency | milliseconds | milliseconds | milliseconds | milliseconds | select minutes or hours | select hours |
| Storage type | Object | Object | Object | Object | Object | Object |
| Lifecycle transitions | Yes | Yes | Yes | Yes | Yes | Yes |
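The "Minimum storage duration charge" row means that an object deleted or transitioned early is still billed for the minimum number of days. A small sketch of that rule, using the values from the comparison table (the dictionary keys and the `billable_days` function are illustrative names, not an AWS API):

```python
# Minimum storage duration in days, taken from the comparison table.
MIN_STORAGE_DAYS = {
    "STANDARD": 0,  # N/A in the table
    "INTELLIGENT_TIERING": 30,
    "STANDARD_IA": 30,
    "ONEZONE_IA": 30,
    "GLACIER": 90,
    "DEEP_ARCHIVE": 180,
}


def billable_days(storage_class: str, days_stored: int) -> int:
    """Days charged for an object that was kept for days_stored days."""
    return max(days_stored, MIN_STORAGE_DAYS[storage_class])


print(billable_days("GLACIER", 10))         # 90
print(billable_days("STANDARD", 10))        # 10
print(billable_days("DEEP_ARCHIVE", 200))   # 200
```

This is why short-lived objects are usually cheaper in S3 Standard even though its per-GB price is higher.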

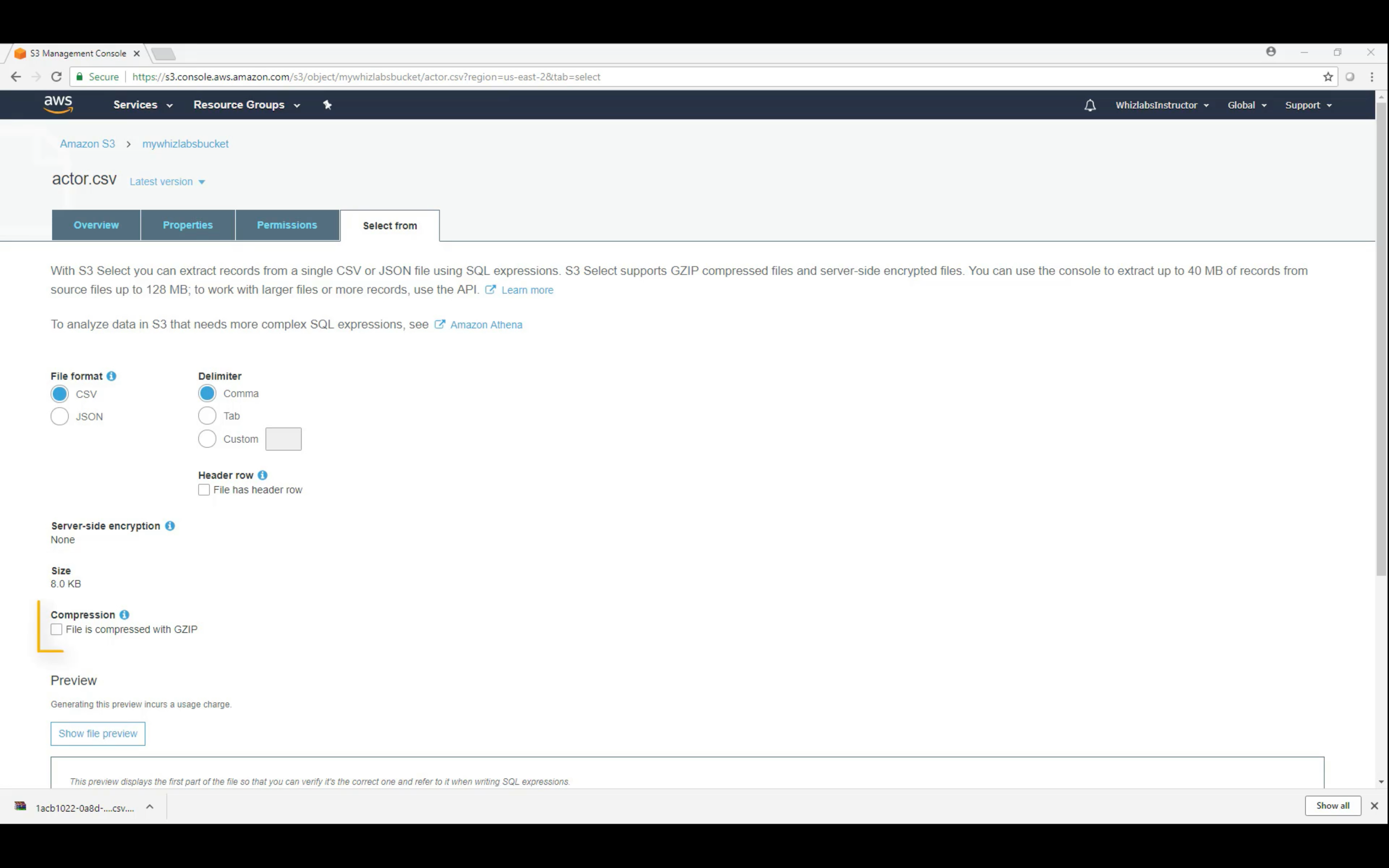

S3 Select: query an object in place with SQL (SELECT ... FROM), for example a CSV file

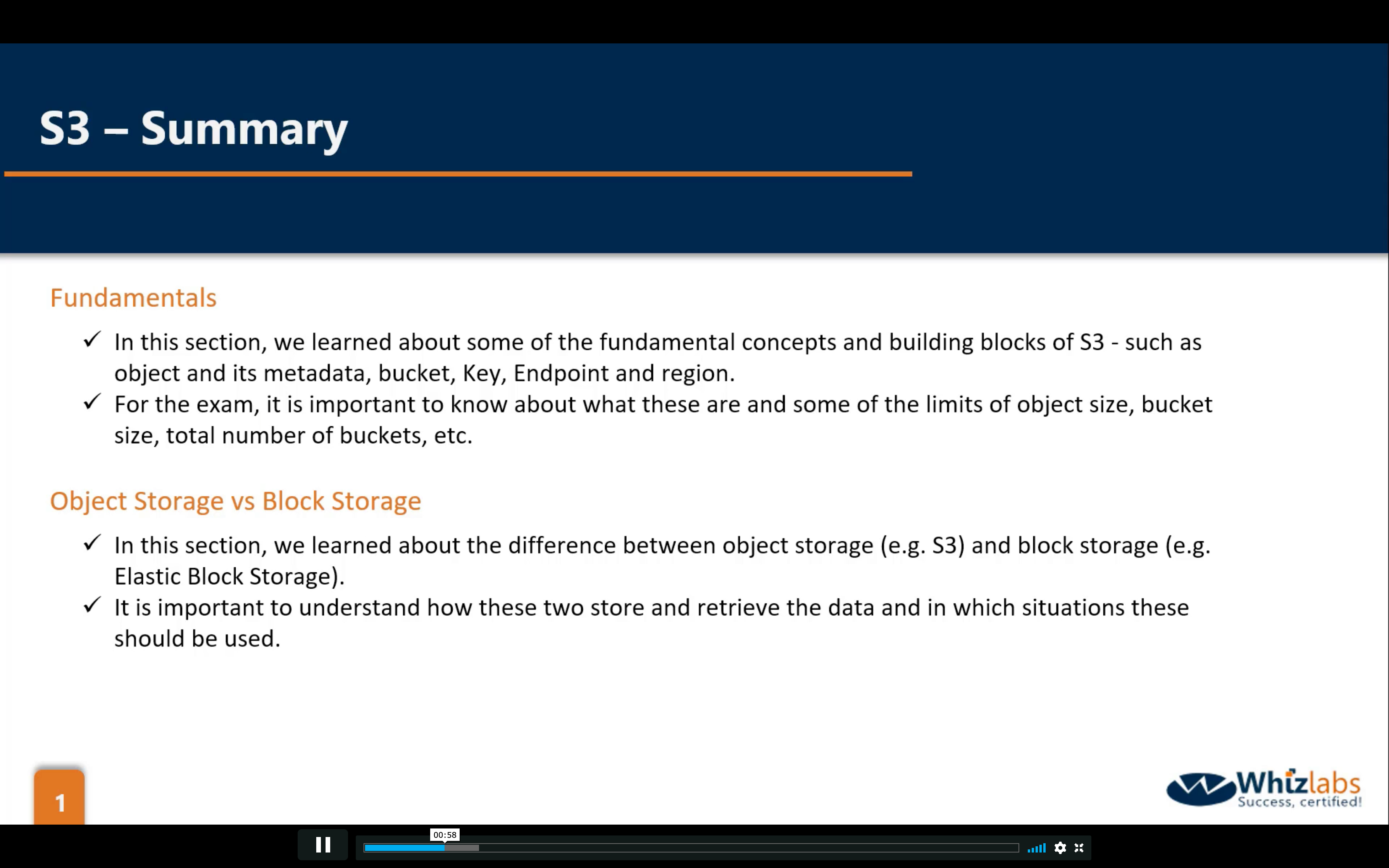

S3 Summary

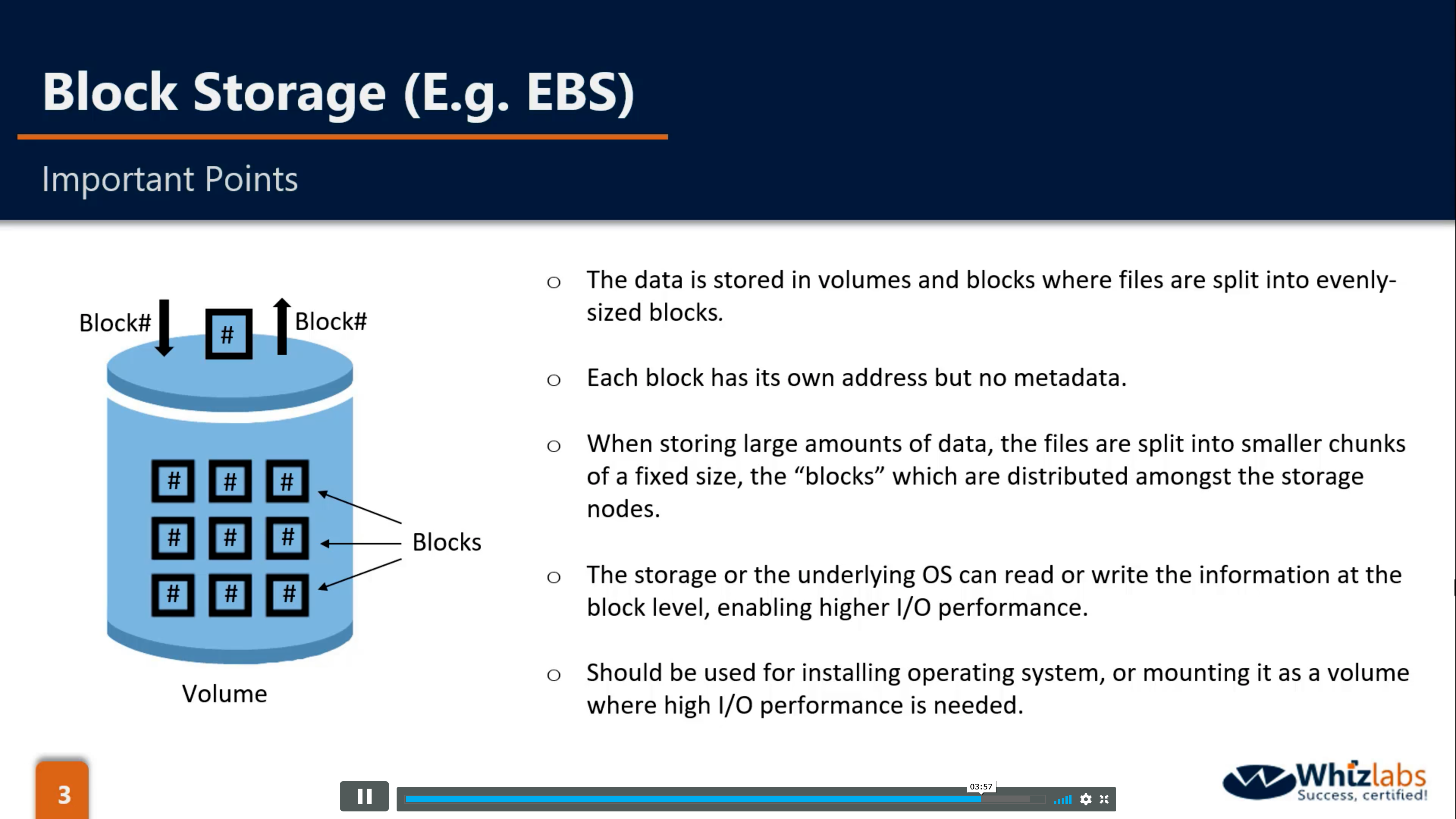

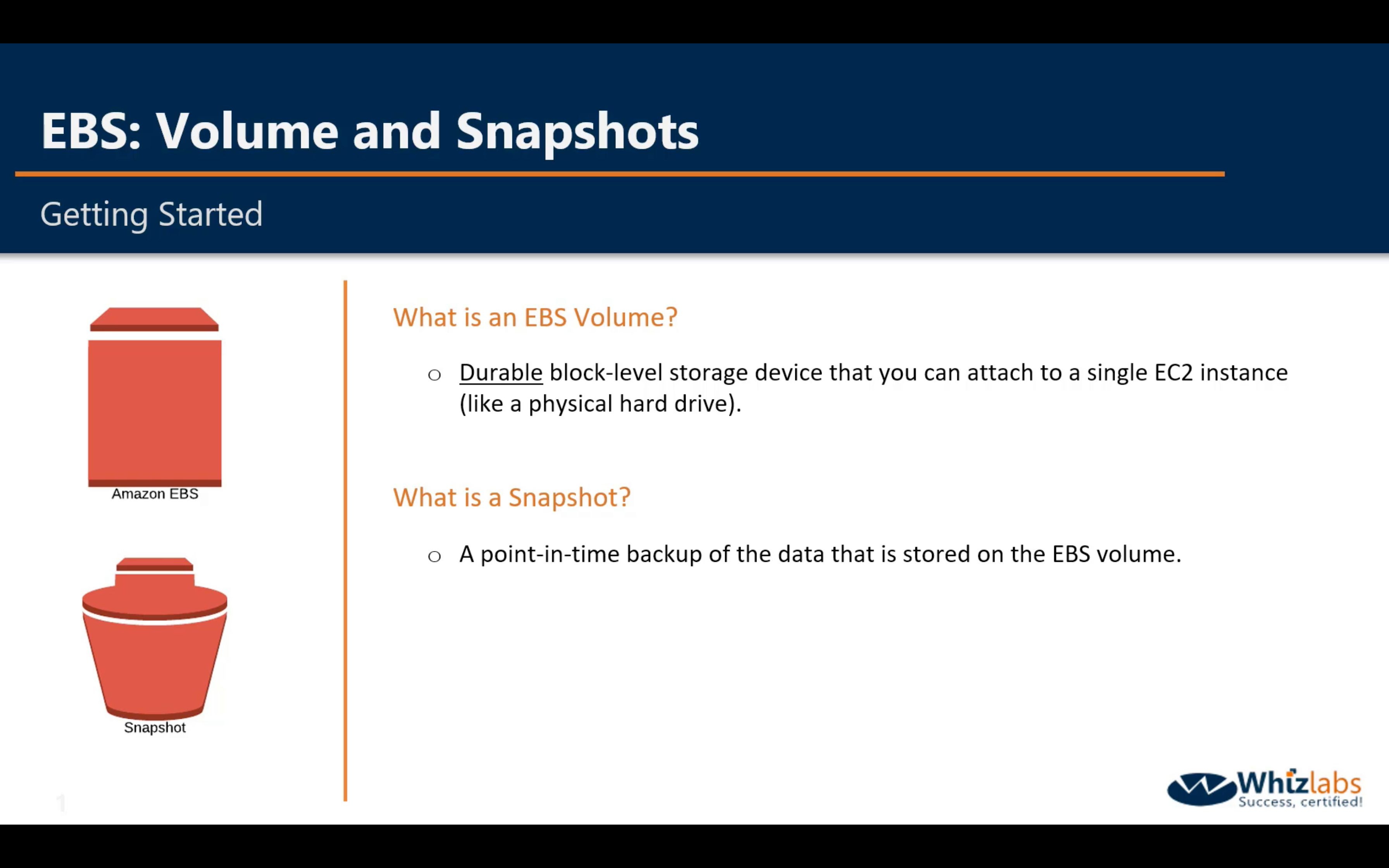

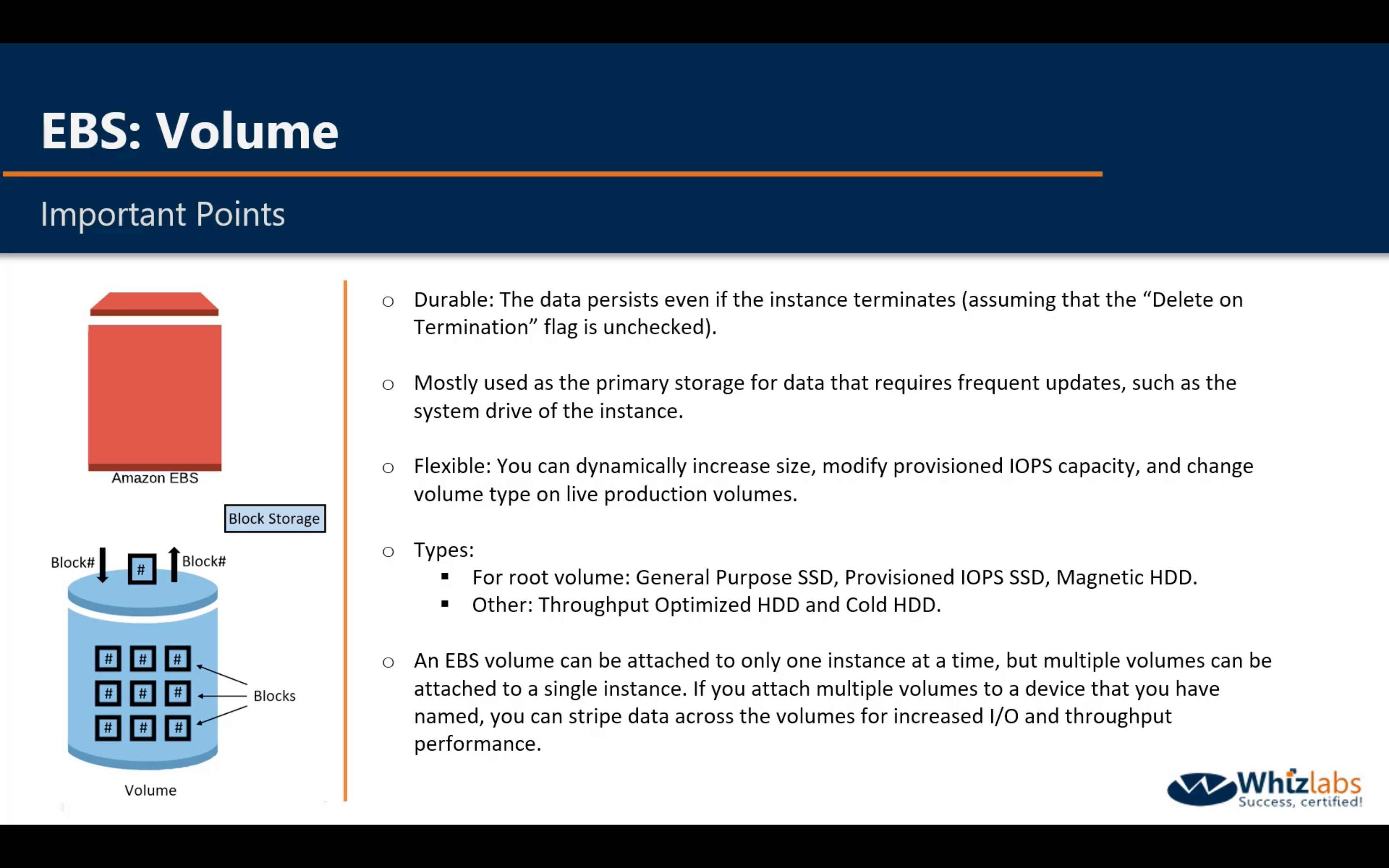

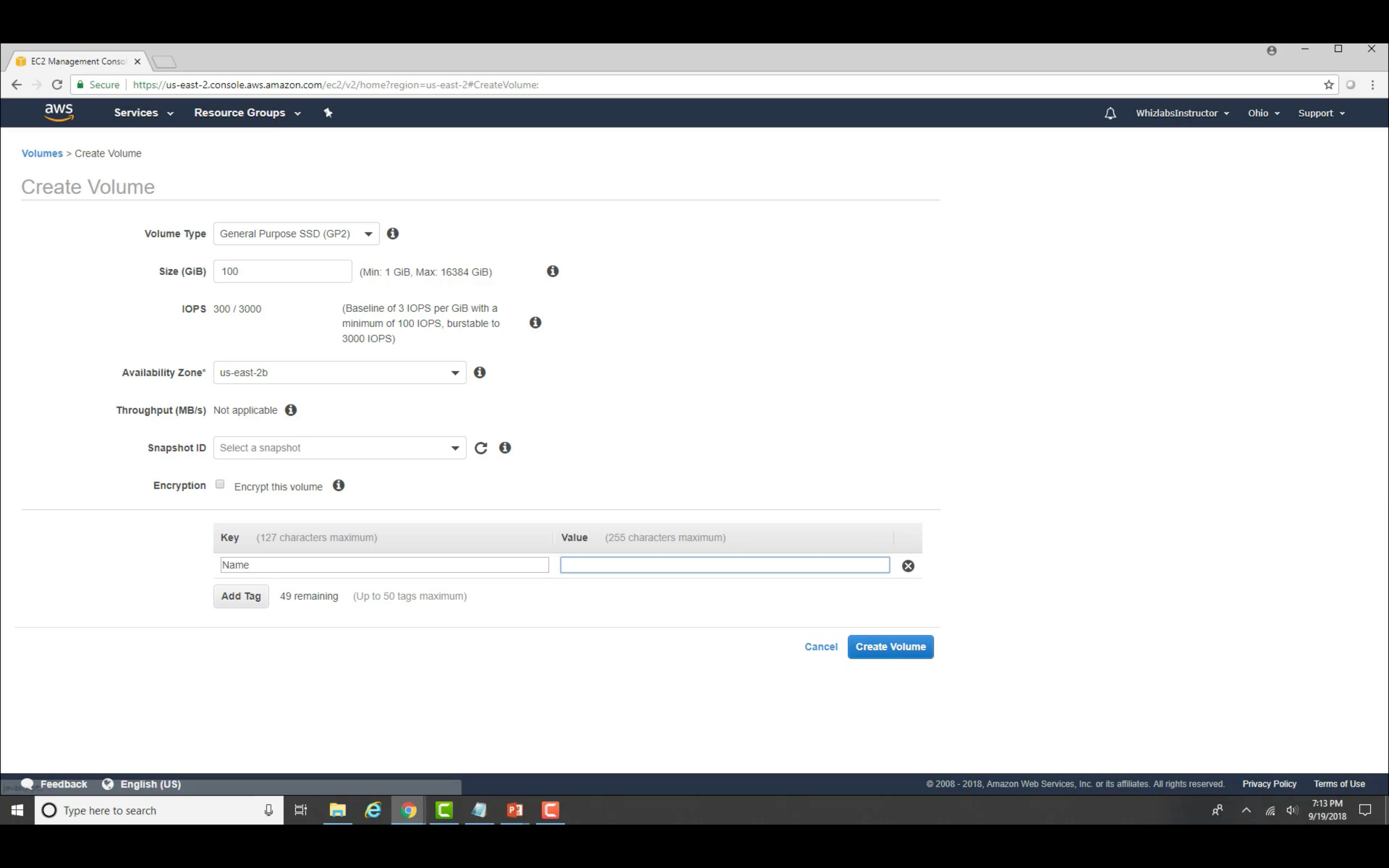

Amazon EBS (Elastic Block Store - Block Level Storage)

Easy to use, high performance block storage at any scale

- Performance for any workload

- Easy to Use

- Highly available and durable

- Virtually unlimited scale

- Secure

- Cost-effective

| Volume Type | EBS Provisioned IOPS SSD (io1) | EBS General Purpose SSD (gp2)* | Throughput Optimized HDD (st1) | Cold HDD (sc1) |
|---|---|---|---|---|
| Drive Type | Solid State Drive (SSD) | Solid State Drive (SSD) | Hard Disk Drive (HDD) | Hard Disk Drive (HDD) |
| Short Description | Highest performance SSD volume designed for latency-sensitive transactional workloads | General Purpose SSD volume that balances price performance for a wide variety of transactional workloads | Low cost HDD volume designed for frequently accessed, throughput intensive workloads | Lowest cost HDD volume designed for less frequently accessed workloads |
| Use Cases | I/O-intensive NoSQL and relational databases | Boot volumes, low-latency interactive apps, dev & test | Big data, data warehouses, log processing | Colder data requiring fewer scans per day |
| API Name | io1 | gp2 | st1 | sc1 |
| Volume Size | 4 GB - 16 TB | 1 GB - 16 TB | 500 GB - 16 TB | 500 GB - 16 TB |
| Max IOPS**/Volume | 64,000 | 16,000 | 500 | 250 |
| Max Throughput***/Volume | 1,000 MB/s | 250 MB/s | 500 MB/s | 250 MB/s |
| Max IOPS/Instance | 80,000 | 80,000 | 80,000 | 80,000 |
| Max Throughput/Instance | 2,375 MB/s | 2,375 MB/s | 2,375 MB/s | 2,375 MB/s |
| Price | $0.125/GB-month; $0.065/provisioned IOPS | $0.10/GB-month | $0.045/GB-month | $0.025/GB-month |
| Dominant Performance Attribute | IOPS | IOPS | MB/s | MB/s |

EBS Provisioned IOPS SSD provides sustained performance for mission-critical low-latency workloads.

EBS General Purpose SSD can burst up to 3,000 IOPS. Its baseline performance scales with volume size at 3 IOPS per GB, so it reaches 10,000 IOPS at about 3.3 TB and tops out at the 16,000 IOPS per-volume maximum listed in the table.
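The relationship between gp2 volume size and IOPS can be sketched as below. The 3 IOPS per GB rate is inferred from the 10,000 IOPS at roughly 3.3 TB figure, the 16,000 cap comes from the table, and the 100 IOPS floor is an assumption that does not appear in these notes; the function names are illustrative.

```python
GP2_IOPS_PER_GB = 3      # inferred: 10,000 IOPS at about 3.3 TB
GP2_MIN_IOPS = 100       # assumption: floor for very small volumes
GP2_MAX_IOPS = 16_000    # per-volume maximum from the table
GP2_BURST_IOPS = 3_000   # burst ceiling for small volumes


def gp2_baseline_iops(size_gb: int) -> int:
    """Baseline IOPS for a gp2 volume of the given size."""
    return min(GP2_MAX_IOPS, max(GP2_MIN_IOPS, size_gb * GP2_IOPS_PER_GB))


def gp2_peak_iops(size_gb: int) -> int:
    """Best-case IOPS: the burst ceiling or the baseline, whichever is higher."""
    return max(gp2_baseline_iops(size_gb), GP2_BURST_IOPS)


for size in (100, 1_000, 3_334, 6_000):
    print(size, gp2_baseline_iops(size), gp2_peak_iops(size))
```

Volumes of 1,000 GB or more already have a baseline at or above the burst ceiling, so bursting only matters for smaller volumes.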

The 2 HDD options are lower cost, high throughput volumes.

An Amazon EBS–optimized instance uses an optimized configuration stack and provides additional, dedicated capacity for Amazon EBS I/O. This optimization provides the best performance for your EBS volumes by minimizing contention between Amazon EBS I/O and other traffic from your instance.

EBS volume encryption is NOT supported on all EC2 instance types.

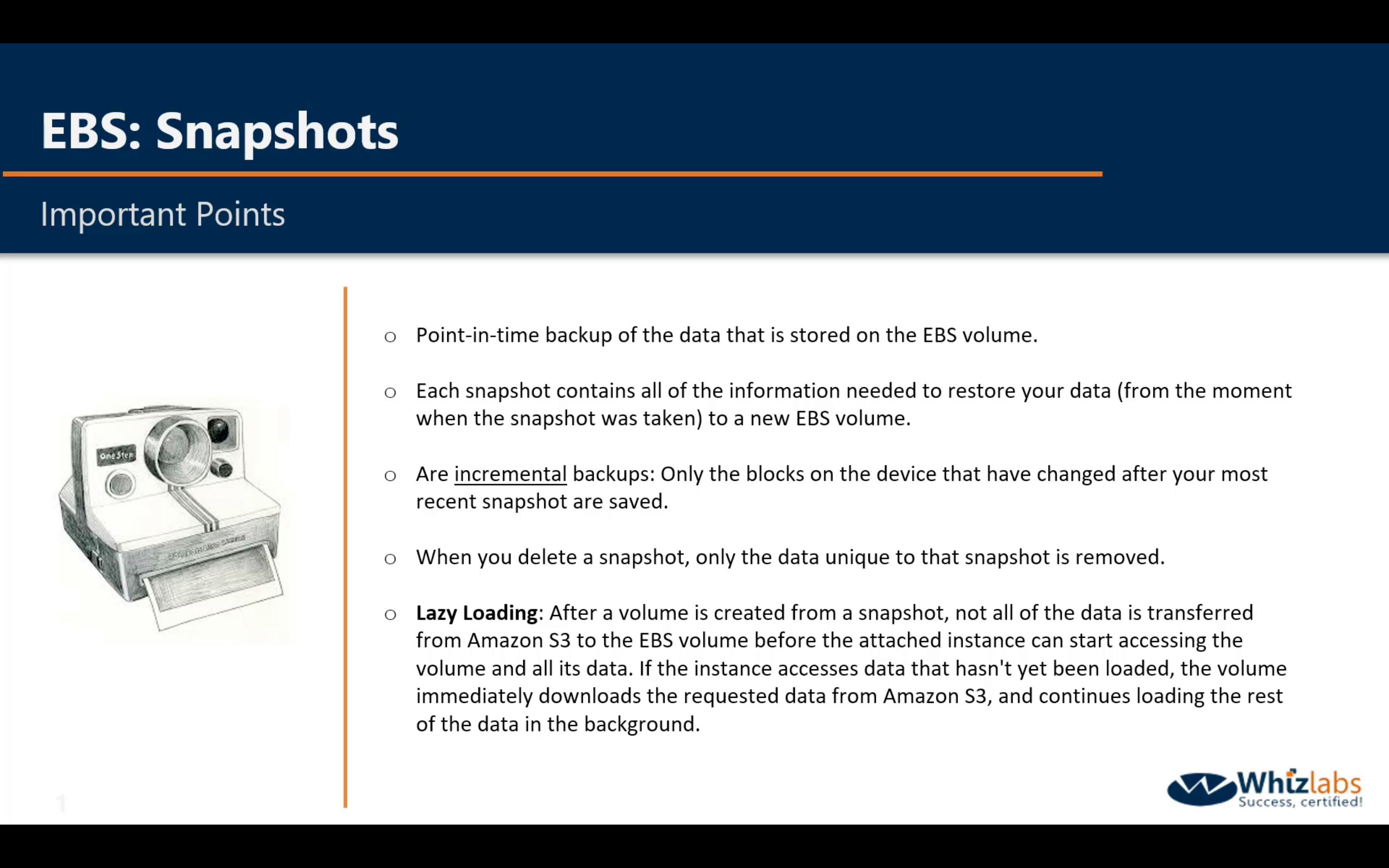

Automating the Amazon EBS Snapshot Lifecycle

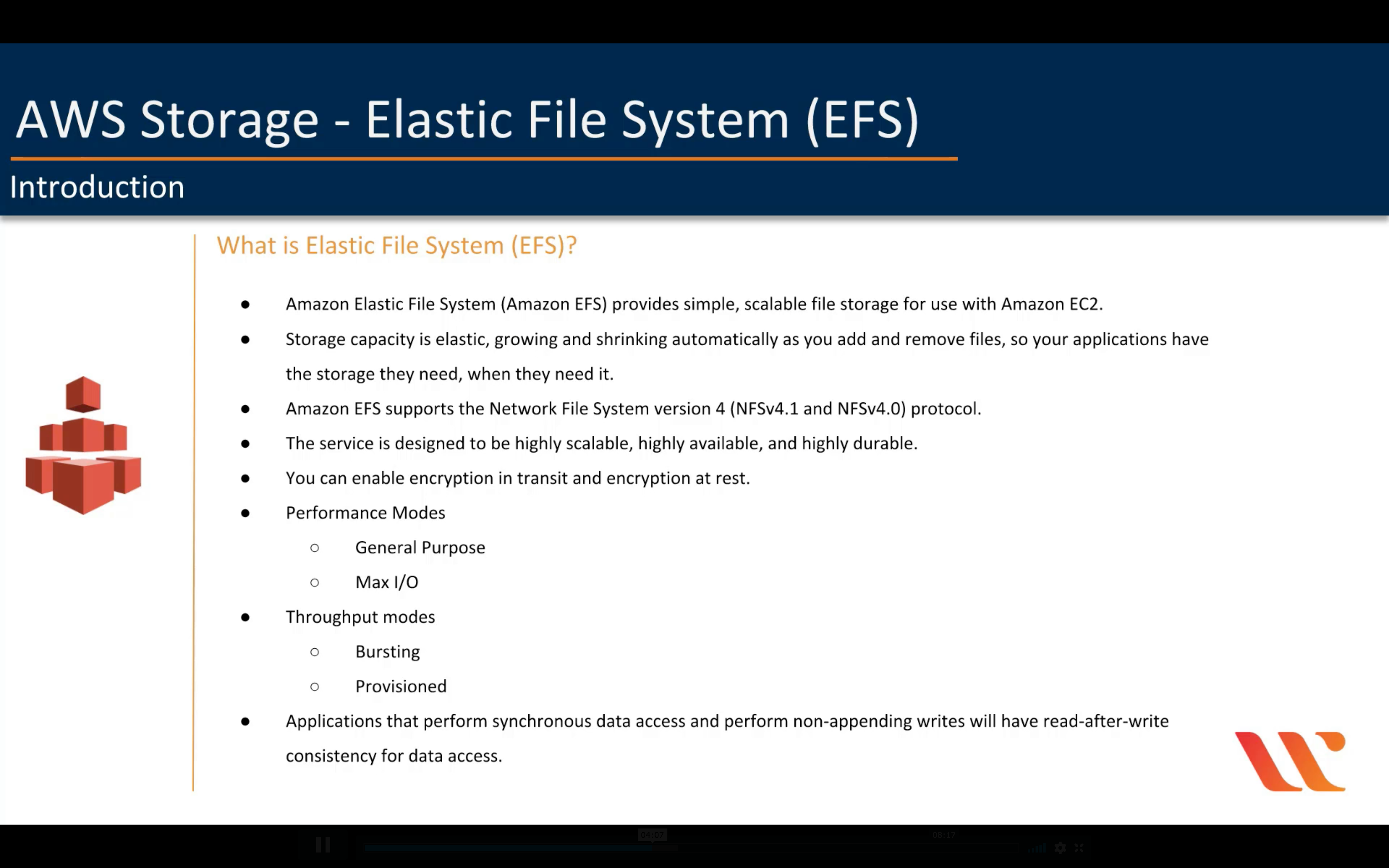

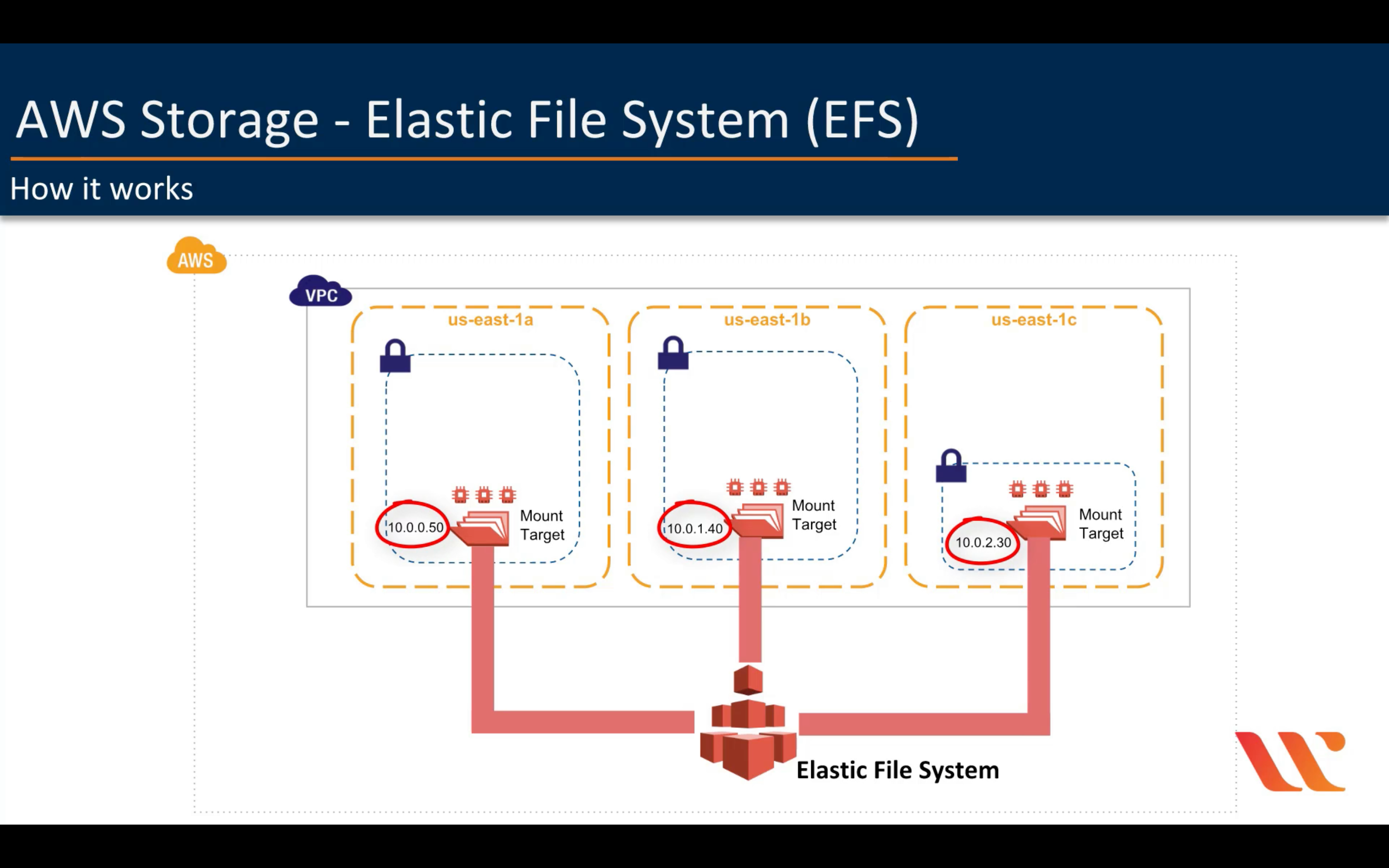

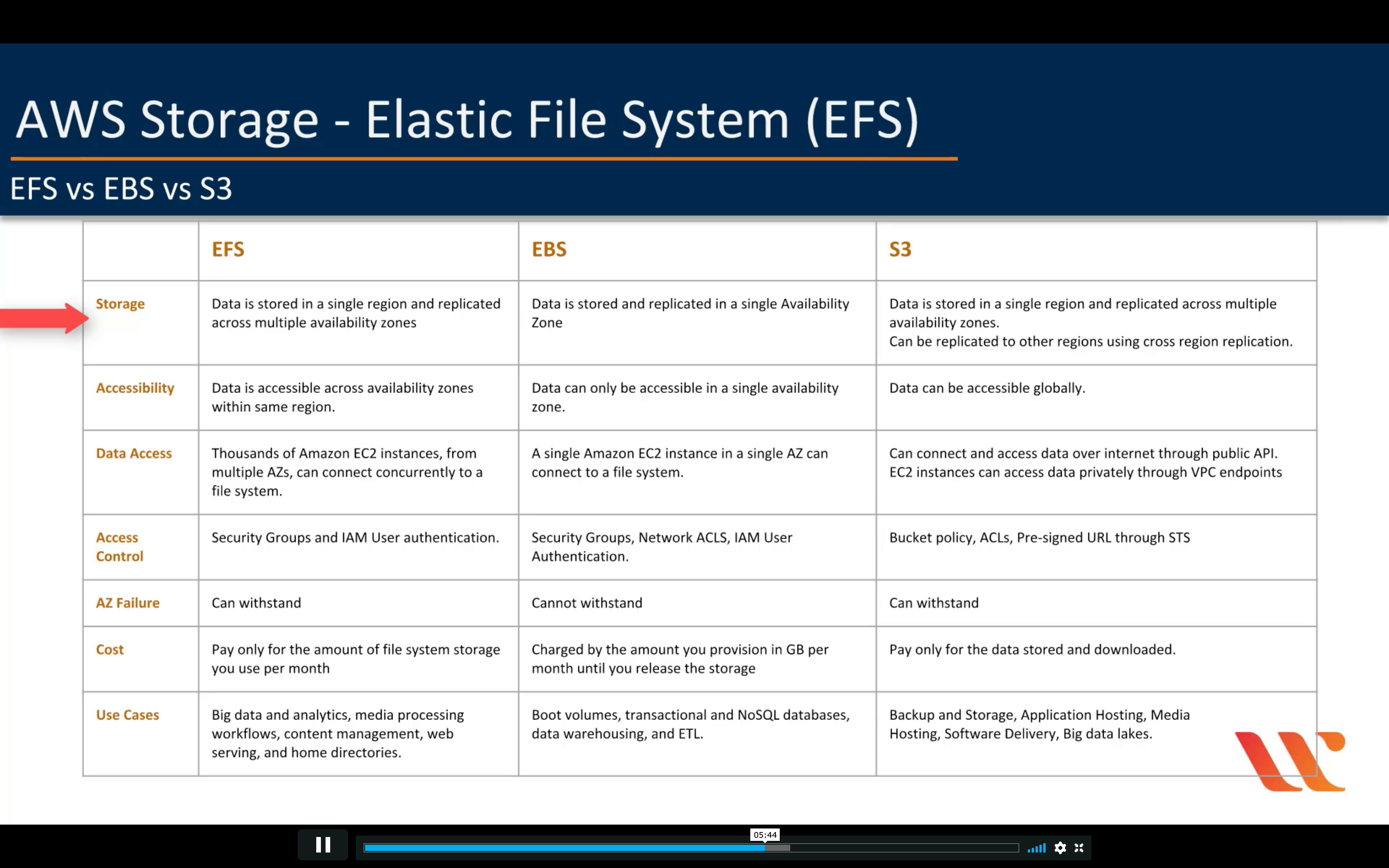

Amazon EFS (Elastic File System - File Level Storage)

Scalable, elastic, cloud-native NFS file system for $0.08/GB-Month*

- Fully managed

- Highly available and durable

- Storage classes and lifecycle management

- Security and compliance

- Scalable performance

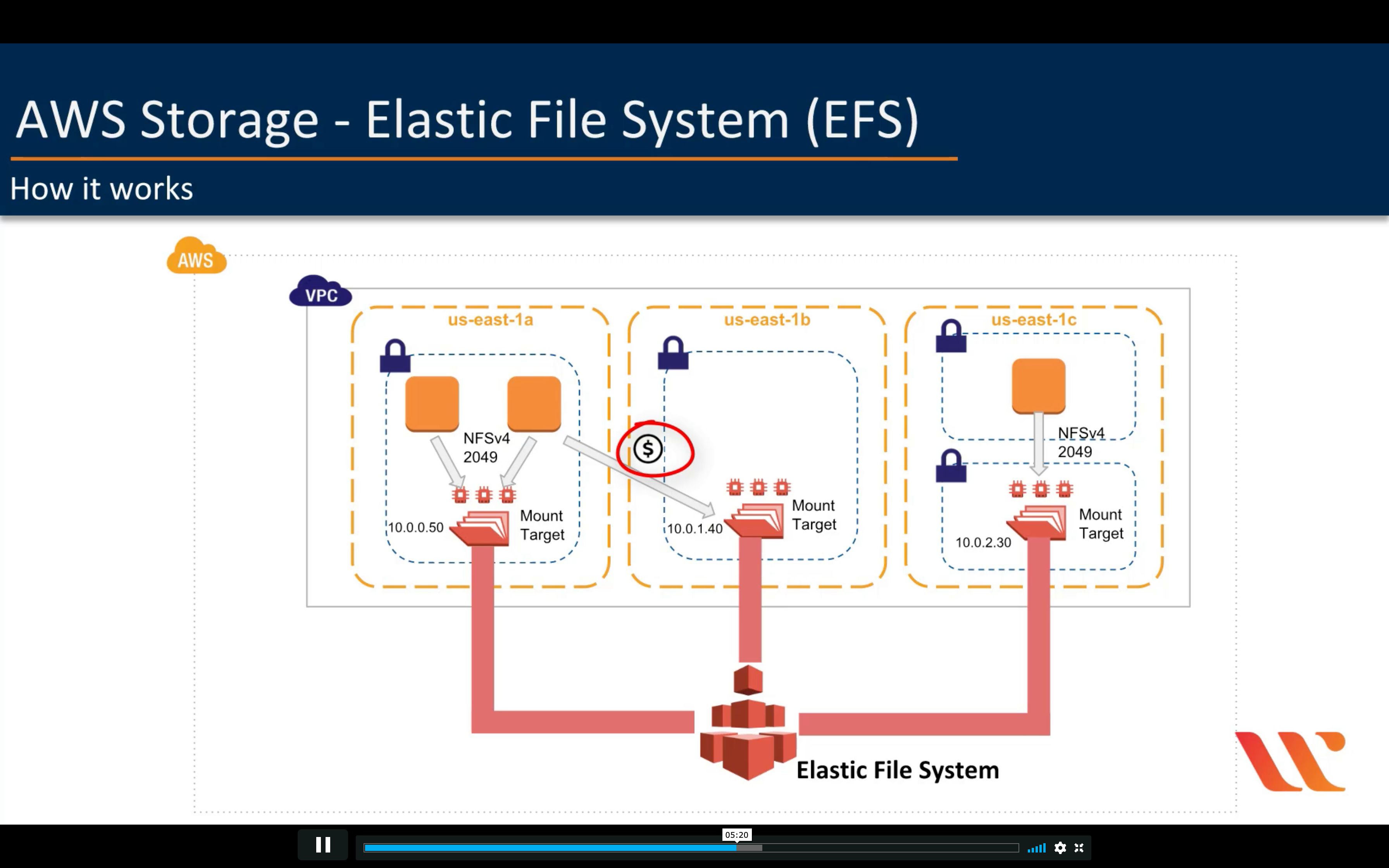

- Shared file system with NFS v4.0 and v4.1 support

- Performance modes

- Throughput modes

- Elastic and scalable

- Encryption

- Data transfer and backup

- AWS DataSync

- AWS Backup

EFS vs EBS vs S3

Encryption:

- Encryption at rest: EFS supports encrypting data at rest, but it can ONLY be enabled when the file system is created.

- Encryption in transit: https://docs.aws.amazon.com/efs/latest/ug/encryption-in-transit.html. To enable encryption of data in transit, you connect to Amazon EFS using TLS. We recommend using the mount helper because it’s the simplest option.

Performance Modes

To support a wide variety of cloud storage workloads, Amazon EFS offers two performance modes. You select a file system’s performance mode when you create it.

The two performance modes have no additional costs, so your Amazon EFS file system is billed and metered the same, regardless of your performance mode. For information about file system limits, see Limits for Amazon EFS File Systems.

Note: An Amazon EFS file system’s performance mode can’t be changed after the file system has been created.

General Purpose Performance Mode