AWS RDS

Introduction

Set up, operate, and scale a relational database in the cloud with just a few clicks.

Amazon Relational Database Service (Amazon RDS) makes it easy to set up, operate, and scale a relational database in the cloud. It provides cost-efficient and resizable capacity while automating time-consuming administration tasks such as hardware provisioning, database setup, patching and backups. It frees you to focus on your applications so you can give them the fast performance, high availability, security and compatibility they need.

Amazon RDS is available on several database instance types - optimized for memory, performance or I/O - and provides you with six familiar database engines to choose from, including Amazon Aurora, PostgreSQL, MySQL, MariaDB, Oracle Database, and SQL Server. You can use the AWS Database Migration Service to easily migrate or replicate your existing databases to Amazon RDS.

Performance

DB instance size

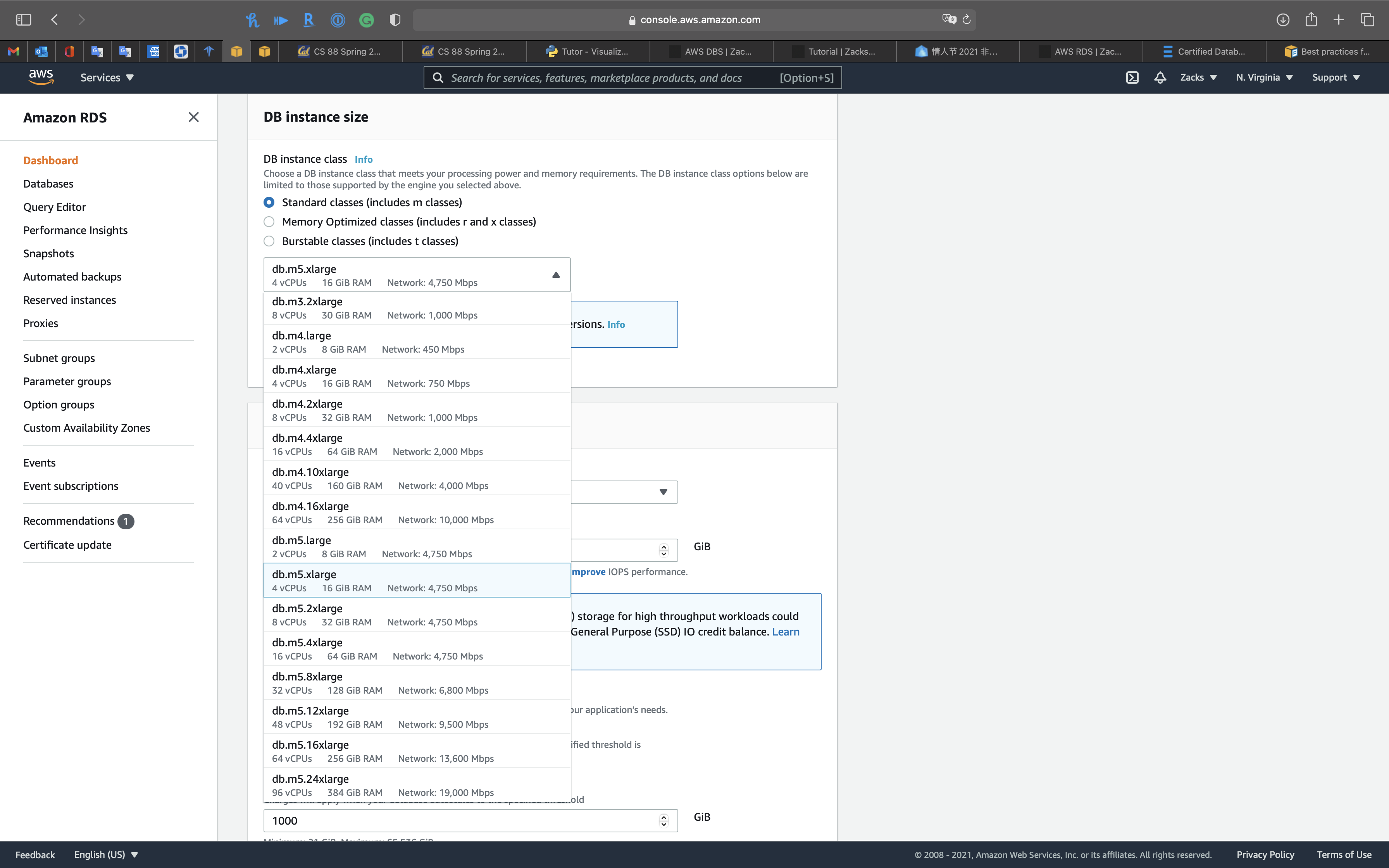

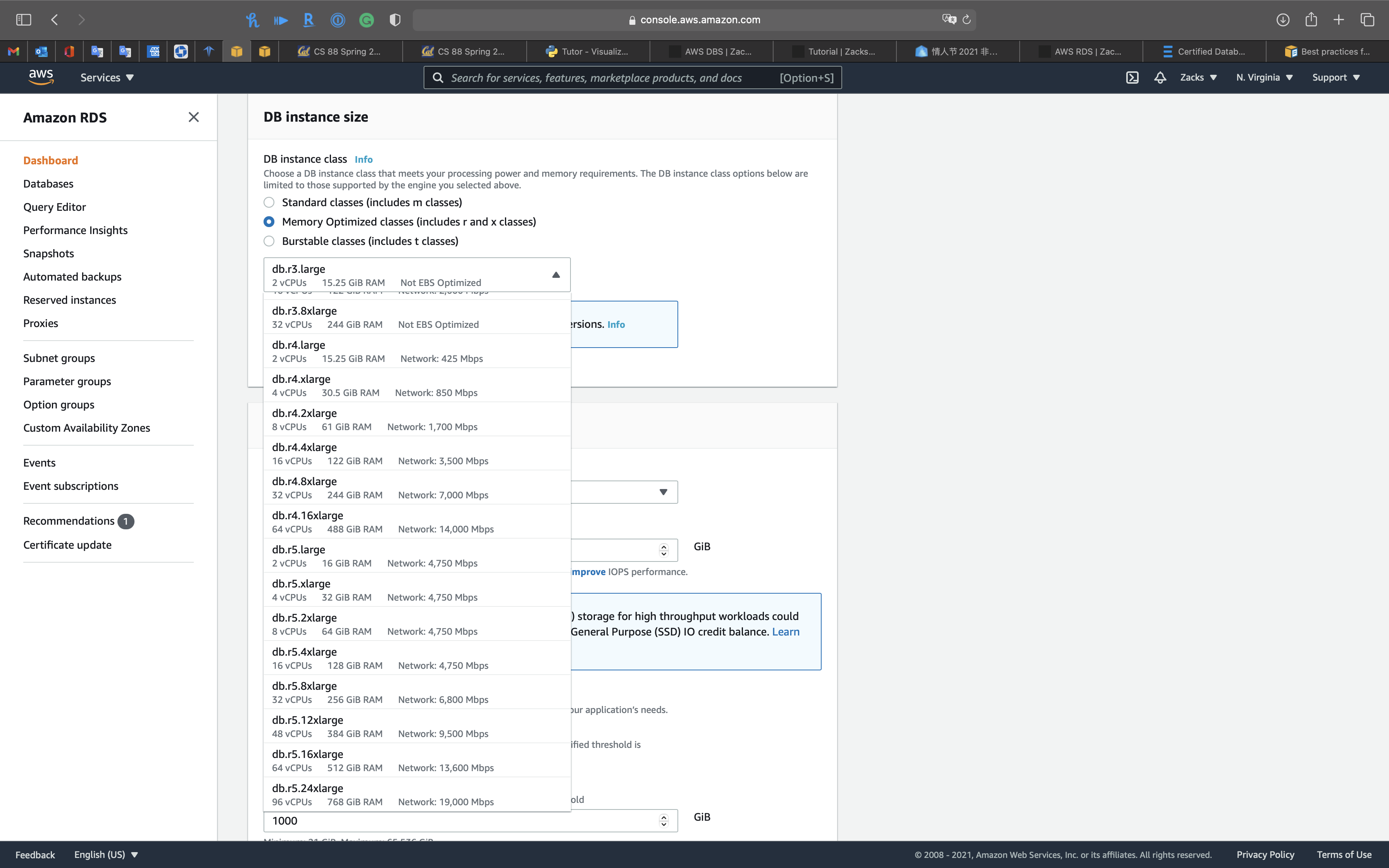

Both Standard classes (including m classes) and Memory Optimized classes (including r and x classes) provide up to 19,000 Mbps of bandwidth.

- If your database workload requires more I/O than you have provisioned, recovery after a failover or database failure will be slow. To increase the I/O capacity of a DB instance, do any or all of the following:

- Migrate to a different DB instance class with high I/O capacity.

- Convert from magnetic storage to either General Purpose or Provisioned IOPS storage, depending on how much of an increase you need. For information on available storage types, see Amazon RDS storage types.

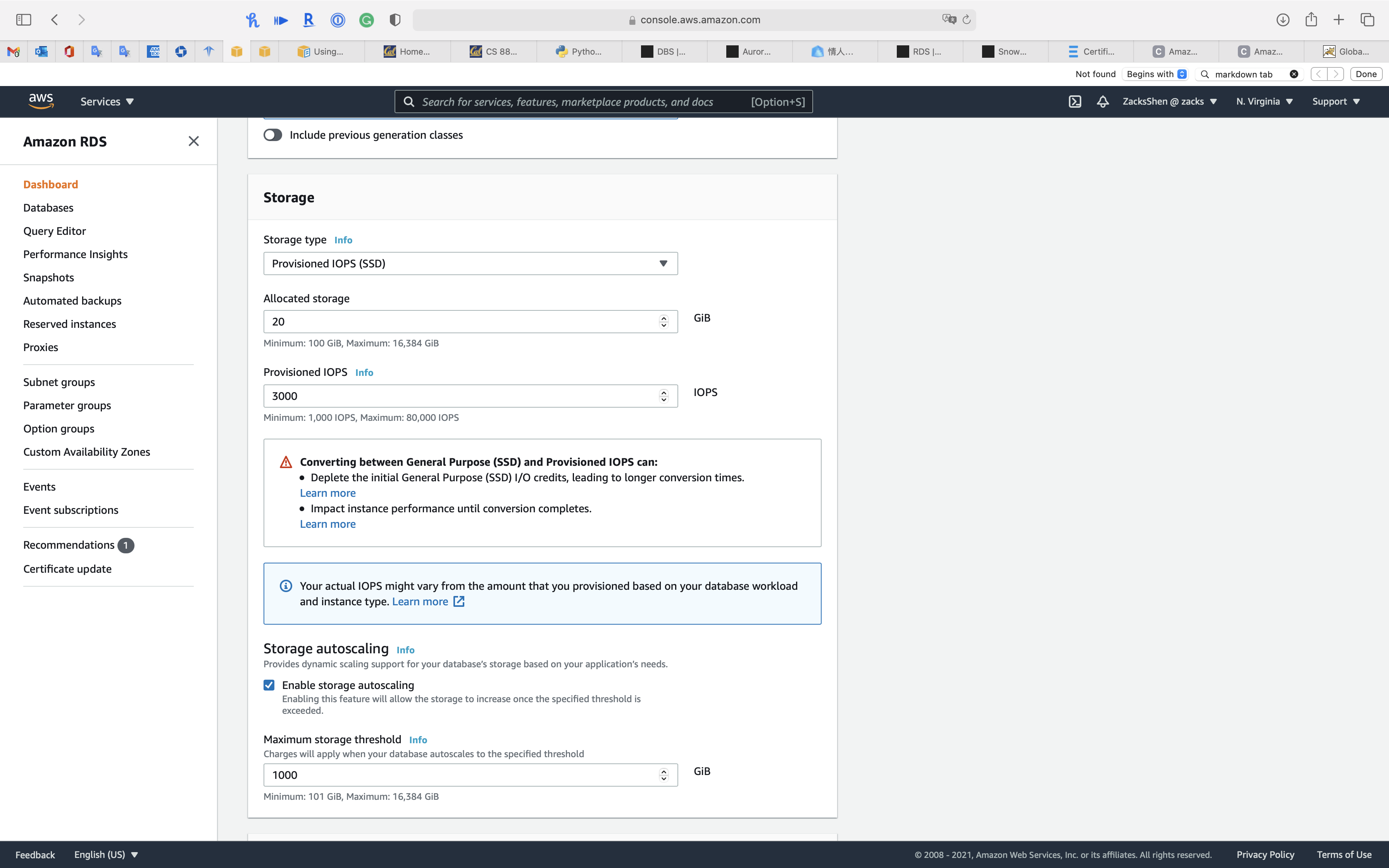

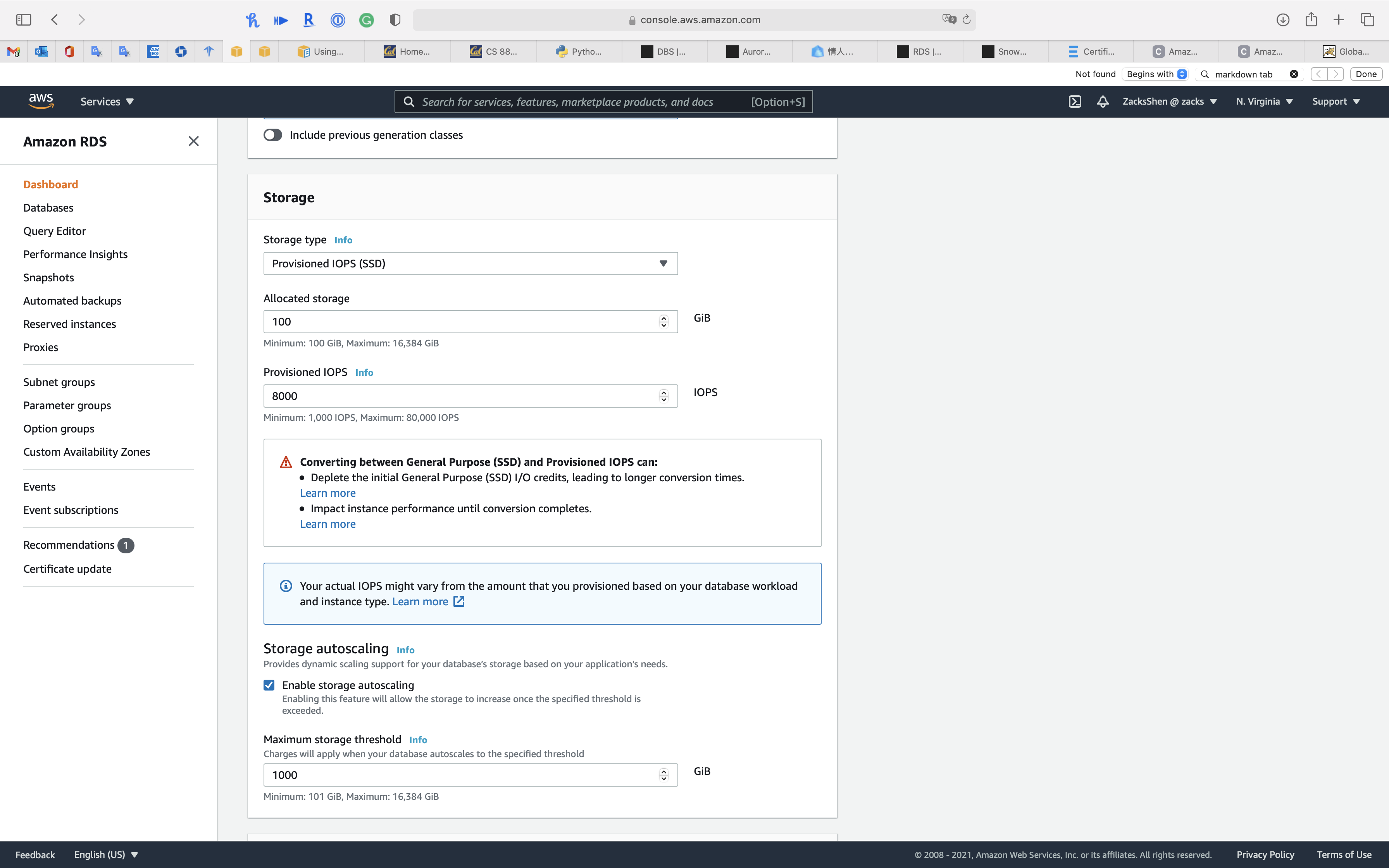

If you convert to Provisioned IOPS storage, make sure you also use a DB instance class that is optimized for Provisioned IOPS. For information on Provisioned IOPS, see Provisioned IOPS SSD storage.

- If you are already using Provisioned IOPS storage, provision additional throughput capacity.

Storage

Amazon RDS storage types

Amazon RDS provides three storage types: General Purpose SSD (also known as gp2), Provisioned IOPS SSD (also known as io1), and magnetic (also known as standard). They differ in performance characteristics and price, which means that you can tailor your storage performance and cost to the needs of your database workload. You can create MySQL, MariaDB, Oracle, and PostgreSQL RDS DB instances with up to 64 tebibytes (TiB) of storage. You can create SQL Server RDS DB instances with up to 16 TiB of storage. For this amount of storage, use the Provisioned IOPS SSD and General Purpose SSD storage types.

The following list briefly describes the three storage types:

- General Purpose SSD – General Purpose SSD volumes offer cost-effective storage that is ideal for a broad range of workloads. These volumes deliver single-digit millisecond latencies and the ability to burst to 3,000 IOPS for extended periods of time. Baseline performance for these volumes is determined by the volume’s size.

For more information about General Purpose SSD storage, including the storage size ranges, see General Purpose SSD storage.

- Provisioned IOPS – Provisioned IOPS storage is designed to meet the needs of I/O-intensive workloads, particularly database workloads, that require low I/O latency and consistent I/O throughput.

For more information about provisioned IOPS storage, including the storage size ranges, see Provisioned IOPS SSD storage.

- Magnetic – Amazon RDS also supports magnetic storage for backward compatibility. We recommend that you use General Purpose SSD or Provisioned IOPS for any new storage needs. The maximum amount of storage allowed for DB instances on magnetic storage is less than that of the other storage types. For more information, see Magnetic storage.

Several factors can affect the performance of Amazon EBS volumes, such as instance configuration, I/O characteristics, and workload demand. For more information about getting the most out of your Provisioned IOPS volumes, see Amazon EBS volume performance.

If your DB instance runs out of storage space, it might no longer be available. To recover from this scenario, add more storage space to your instance using the ModifyDBInstance action. To prevent storage space issues from happening in the future, enable storage autoscaling.
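A minimal sketch of that recovery step, assuming the boto3 SDK for Python, whose modify_db_instance call corresponds to the ModifyDBInstance action. The helper name, the instance identifier, and the sizes are hypothetical. The function only builds the request parameters, so it runs without AWS credentials.

```python
def storage_increase_request(instance_id, new_size_gib, autoscale_limit_gib=None):
    """Build keyword arguments for boto3's rds.modify_db_instance call."""
    if new_size_gib <= 0:
        raise ValueError("new_size_gib must be positive")
    request = {
        "DBInstanceIdentifier": instance_id,
        "AllocatedStorage": new_size_gib,  # new total size in GiB
        "ApplyImmediately": True,          # do not wait for the maintenance window
    }
    if autoscale_limit_gib is not None:
        if autoscale_limit_gib <= new_size_gib:
            raise ValueError("autoscaling limit must exceed the allocated storage")
        # Setting MaxAllocatedStorage turns on storage autoscaling up to this ceiling.
        request["MaxAllocatedStorage"] = autoscale_limit_gib
    return request


if __name__ == "__main__":
    # "mydb" and both sizes are placeholder values.
    print(storage_increase_request("mydb", 200, autoscale_limit_gib=1000))
```

With credentials configured, the resulting dictionary could be passed as boto3.client("rds").modify_db_instance(**request).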

If you change Storage type

You can customize IOPS

Manageability

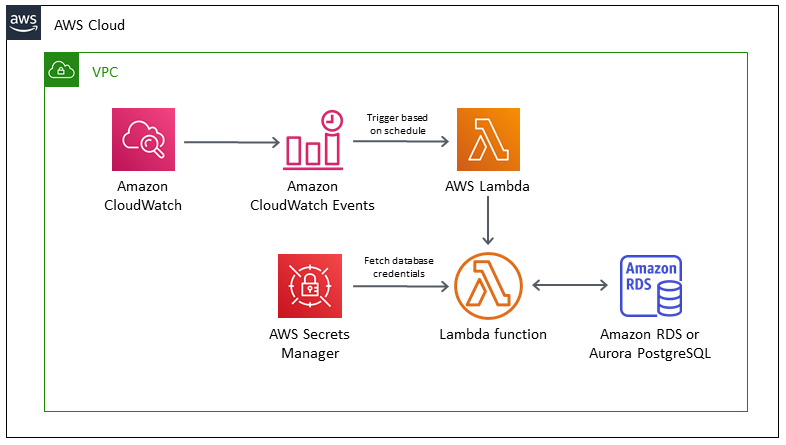

Cron job

Schedule jobs for Amazon RDS and Aurora PostgreSQL using Lambda and Secrets Manager

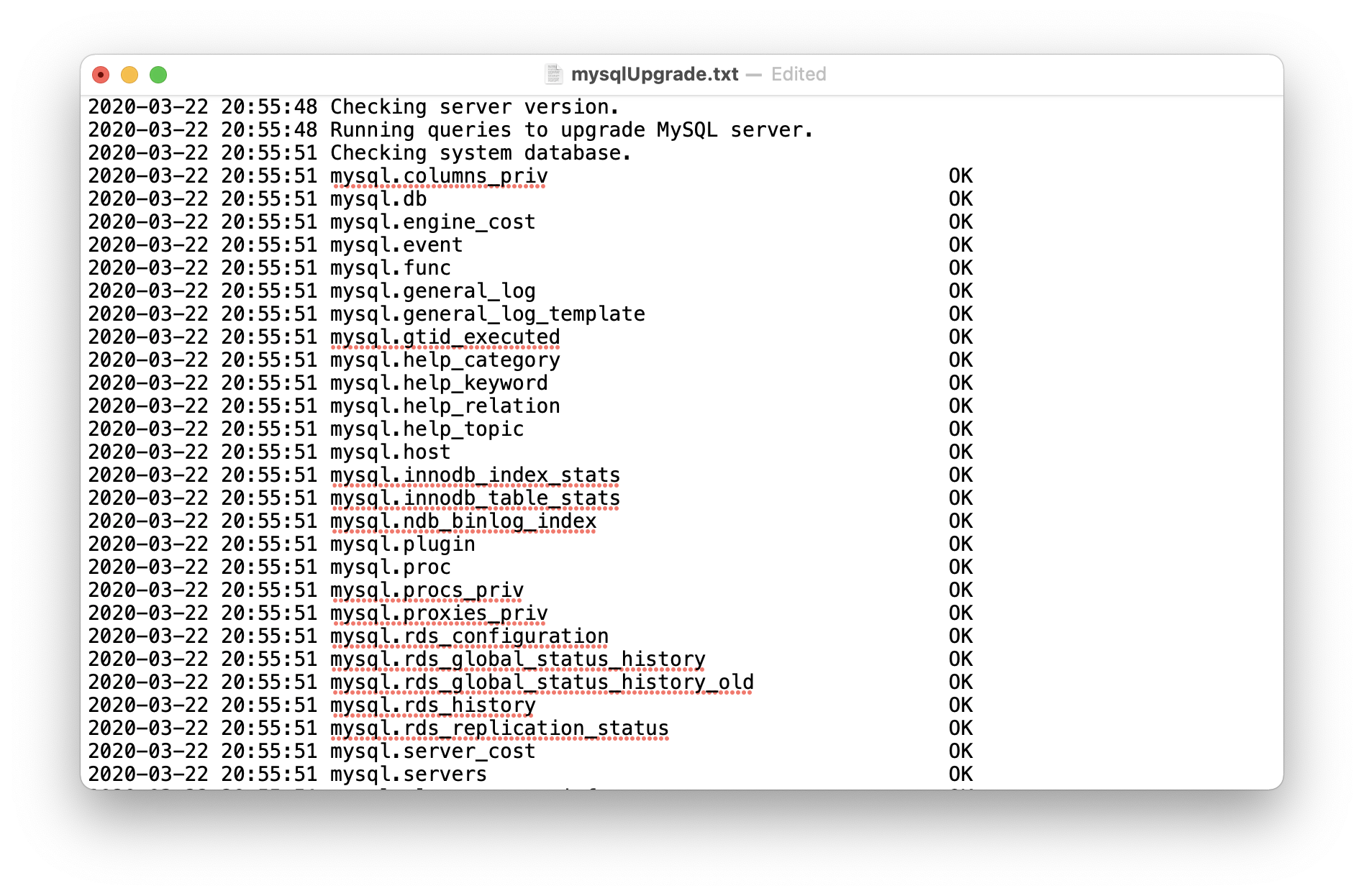

Upgrading a DB instance engine version

Upgrading the MySQL DB engine

Amazon RDS provides newer versions of each supported database engine so you can keep your DB instance up-to-date. Newer versions can include bug fixes, security enhancements, and other improvements for the database engine. When Amazon RDS supports a new version of a database engine, you can choose how and when to upgrade your DB instances.

There are two kinds of upgrades: major version upgrades and minor version upgrades. In general, a major engine version upgrade can introduce changes that are not compatible with existing applications. In contrast, a minor version upgrade includes only changes that are backward-compatible with existing applications.

The version numbering sequence is specific to each database engine. For example, Amazon RDS MySQL 5.7 and 8.0 are major engine versions and upgrading from any 5.7 version to any 8.0 version is a major version upgrade. Amazon RDS MySQL version 5.7.22 and 5.7.23 are minor versions and upgrading from 5.7.22 to 5.7.23 is a minor version upgrade.
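The MySQL example above can be sketched as a small classifier. This is an illustration only: it assumes three-part version strings where the first two parts (such as 5.7 or 8.0) identify the major engine version, which matches the MySQL example but not necessarily other engines. The function name is hypothetical.

```python
def upgrade_kind(current, target):
    """Classify a MySQL-style upgrade as 'major', 'minor', or 'none'."""
    cur = tuple(int(part) for part in current.split("."))
    tgt = tuple(int(part) for part in target.split("."))
    if len(cur) != 3 or len(tgt) != 3:
        raise ValueError("expected versions of the form X.Y.Z")
    if tgt < cur:
        raise ValueError("target version is older than the current version")
    if tgt == cur:
        return "none"
    # A change in the first two parts (5.7 -> 8.0) is a major version upgrade.
    return "major" if tgt[:2] != cur[:2] else "minor"


if __name__ == "__main__":
    print(upgrade_kind("5.7.22", "5.7.23"))
    print(upgrade_kind("5.7.22", "8.0.11"))
```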

If your MySQL DB instance is using read replicas, you must upgrade all of the read replicas before upgrading the source instance. If your DB instance is in a Multi-AZ deployment, both the primary and standby replicas are upgraded. Your DB instance will not be available until the upgrade is complete.

Maintaining a DB instance

Maintenance for Multi-AZ deployments

Running a DB instance as a Multi-AZ deployment can further reduce the impact of a maintenance event, because Amazon RDS applies operating system updates by following these steps:

- Perform maintenance on the standby.

- Promote the standby to primary.

- Perform maintenance on the old primary, which becomes the new standby.

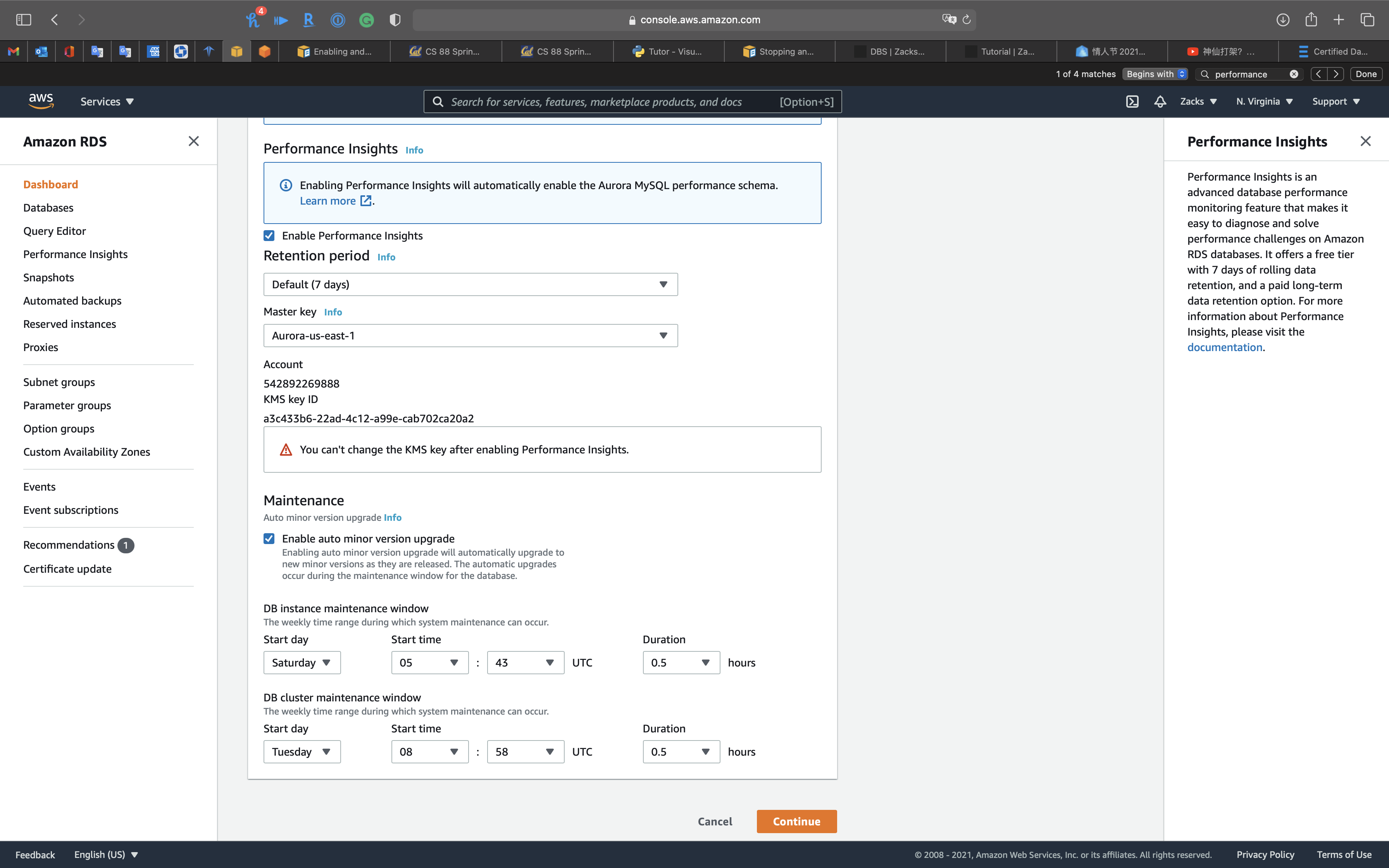

The Amazon RDS maintenance window

Every DB instance has a weekly maintenance window during which any system changes are applied. You can think of the maintenance window as an opportunity to control when modifications and software patching occur, in the event either is requested or required. If a maintenance event is scheduled for a given week, it is initiated during the 30-minute maintenance window you identify. Most maintenance events also complete during the 30-minute maintenance window, although larger maintenance events may take more than 30 minutes to complete.

The 30-minute maintenance window is selected at random from an 8-hour block of time per region. If you don’t specify a preferred maintenance window when you create the DB instance, then Amazon RDS assigns a 30-minute maintenance window on a randomly selected day of the week.

RDS will consume some of the resources on your DB instance while maintenance is being applied. You might observe a minimal effect on performance. For a DB instance, on rare occasions, a Multi-AZ failover might be required for a maintenance update to complete.

Following, you can find the time blocks for each region from which default maintenance windows are assigned.

| Region Name | Region | Time Block |
|---|---|---|
| US East (Ohio) | us-east-2 | 03:00–11:00 UTC |
| US East (N. Virginia) | us-east-1 | 03:00–11:00 UTC |
| US West (N. California) | us-west-1 | 06:00–14:00 UTC |
| US West (Oregon) | us-west-2 | 06:00–14:00 UTC |
| Africa (Cape Town) | af-south-1 | 03:00–11:00 UTC |
| Asia Pacific (Hong Kong) | ap-east-1 | 06:00–14:00 UTC |
| Asia Pacific (Mumbai) | ap-south-1 | 06:00–14:00 UTC |
| Asia Pacific (Osaka-Local) | ap-northeast-3 | 22:00–23:59 UTC |
| Asia Pacific (Seoul) | ap-northeast-2 | 13:00–21:00 UTC |
| Asia Pacific (Singapore) | ap-southeast-1 | 14:00–22:00 UTC |
| Asia Pacific (Sydney) | ap-southeast-2 | 12:00–20:00 UTC |
| Asia Pacific (Tokyo) | ap-northeast-1 | 13:00–21:00 UTC |
| Canada (Central) | ca-central-1 | 03:00–11:00 UTC |
| China (Beijing) | cn-north-1 | 06:00–14:00 UTC |
| China (Ningxia) | cn-northwest-1 | 06:00–14:00 UTC |
| Europe (Frankfurt) | eu-central-1 | 21:00–05:00 UTC |
| Europe (Ireland) | eu-west-1 | 22:00–06:00 UTC |
| Europe (London) | eu-west-2 | 22:00–06:00 UTC |
| Europe (Paris) | eu-west-3 | 23:59–07:29 UTC |
| Europe (Milan) | eu-south-1 | 02:00–10:00 UTC |
| Europe (Stockholm) | eu-north-1 | 23:00–07:00 UTC |
| Middle East (Bahrain) | me-south-1 | 06:00–14:00 UTC |
| South America (São Paulo) | sa-east-1 | 00:00–08:00 UTC |
| AWS GovCloud (US-East) | us-gov-east-1 | 17:00–01:00 UTC |
| AWS GovCloud (US-West) | us-gov-west-1 | 06:00–14:00 UTC |
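Several of the time blocks above wrap past midnight UTC (for example 22:00–06:00), which is easy to get wrong when checking whether a given time falls inside a block. The sketch below shows one way to handle the wrap. It copies only three rows from the table, and the names TIME_BLOCKS and in_time_block are hypothetical.

```python
from datetime import time

# A few rows copied from the table above: region -> (block start, block end), in UTC.
TIME_BLOCKS = {
    "us-east-1": (time(3, 0), time(11, 0)),
    "eu-west-1": (time(22, 0), time(6, 0)),
    "ap-northeast-1": (time(13, 0), time(21, 0)),
}


def in_time_block(region, moment):
    """Return True if `moment` (a UTC time of day) lies inside the region's block."""
    start, end = TIME_BLOCKS[region]
    if start <= end:
        return start <= moment < end
    # The block wraps past midnight, for example 22:00-06:00.
    return moment >= start or moment < end


if __name__ == "__main__":
    print(in_time_block("eu-west-1", time(23, 30)))
    print(in_time_block("us-east-1", time(12, 0)))
```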

Adjusting the preferred DB instance maintenance window

The maintenance window should fall at the time of lowest usage and thus might need modification from time to time. Your DB instance will only be unavailable during this time if the system changes, such as a change in DB instance class, are being applied and require an outage, and only for the minimum amount of time required to make the necessary changes.

Working with storage

Increase the DB instance storage capacity using the AWS Management Console, Amazon RDS API, or the AWS CLI.

In most cases, scaling storage doesn’t require any outage and doesn’t degrade performance of the server. After you modify the storage size for a DB instance, the status of the DB instance is storage-optimization. The DB instance is fully operational after a storage modification.

Managing capacity automatically with Amazon RDS storage autoscaling

If your workload is unpredictable, you can enable storage autoscaling for an Amazon RDS DB instance. To do so, you can use the Amazon RDS console, the Amazon RDS API, or the AWS CLI.

For example, you might use this feature for a new mobile gaming application that users are adopting rapidly. In this case, a rapidly increasing workload might exceed the available database storage. To avoid having to manually scale up database storage, you can use Amazon RDS storage autoscaling.

With storage autoscaling enabled, when Amazon RDS detects that you are running out of free database space it automatically scales up your storage. Amazon RDS starts a storage modification for an autoscaling-enabled DB instance when these factors apply:

- Free available space is less than 10 percent of the allocated storage.

- The low-storage condition lasts at least five minutes.

- At least six hours have passed since the last storage modification.

The additional storage is in increments of whichever of the following is greater:

- 5 GiB

- 10 percent of currently allocated storage

- Storage growth prediction for 7 hours based on the FreeStorageSpace metrics change in the past hour. For more information on metrics, see Monitoring with Amazon CloudWatch.
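The trigger conditions and the increment rule above can be worked through in a few lines. This is a sketch under one loud assumption: the 7-hour growth prediction is modelled as a simple linear extrapolation of the FreeStorageSpace drop over the past hour, which may differ from how Amazon RDS actually computes it. Both function names are hypothetical.

```python
def should_autoscale(free_gib, allocated_gib, low_storage_minutes, hours_since_last_change):
    """True when all three trigger conditions listed above hold."""
    return (
        free_gib * 10 < allocated_gib      # free space below 10 percent of allocated
        and low_storage_minutes >= 5       # low-storage condition lasted five minutes
        and hours_since_last_change >= 6   # six hours since the last modification
    )


def autoscale_increment_gib(allocated_gib, free_space_drop_last_hour_gib):
    """Storage added in one step: the greatest of the three candidates."""
    # Assumption: linear extrapolation of the last hour's drop over 7 hours.
    predicted_growth = 7 * max(free_space_drop_last_hour_gib, 0)
    return max(5, allocated_gib / 10, predicted_growth)


if __name__ == "__main__":
    # 100 GiB allocated, 9 GiB free, shrinking by 3 GiB per hour.
    if should_autoscale(9, 100, 5, 6):
        print(autoscale_increment_gib(100, 3))
```

For a 100 GiB instance losing 3 GiB of free space per hour, the candidates are 5 GiB, 10 GiB, and 21 GiB, so the prediction term decides the increment.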

Security

Connection

Create custom rules in the security group for your DB instances that allow connections from the security group you created for your Amazon EC2 instances. This would allow instances associated with the security group to access the DB instances. Including bastion hosts in your VPC environment enables you to securely connect to your database instances running in private subnets.

The DB security groups allow inbound connections from the EC2 security groups.
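As an illustration of such a rule, the sketch below builds the parameters for boto3's ec2.authorize_security_group_ingress call, referencing the EC2 security group as the traffic source instead of an IP range. The helper name and the security group IDs are hypothetical, and the default port 3306 assumes a MySQL-compatible engine.

```python
def db_ingress_from_app_sg(db_sg_id, app_sg_id, port=3306):
    """Build keyword arguments for boto3's ec2.authorize_security_group_ingress."""
    return {
        "GroupId": db_sg_id,  # security group attached to the DB instance
        "IpPermissions": [
            {
                "IpProtocol": "tcp",
                "FromPort": port,
                "ToPort": port,
                # Allow traffic from members of the EC2 security group
                # rather than from a CIDR range.
                "UserIdGroupPairs": [{"GroupId": app_sg_id}],
            }
        ],
    }


if __name__ == "__main__":
    # Both group IDs are placeholders.
    print(db_ingress_from_app_sg("sg-0db0000000000000", "sg-0app000000000000"))
```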

Database authentication with Amazon RDS

Data protection in Amazon RDS

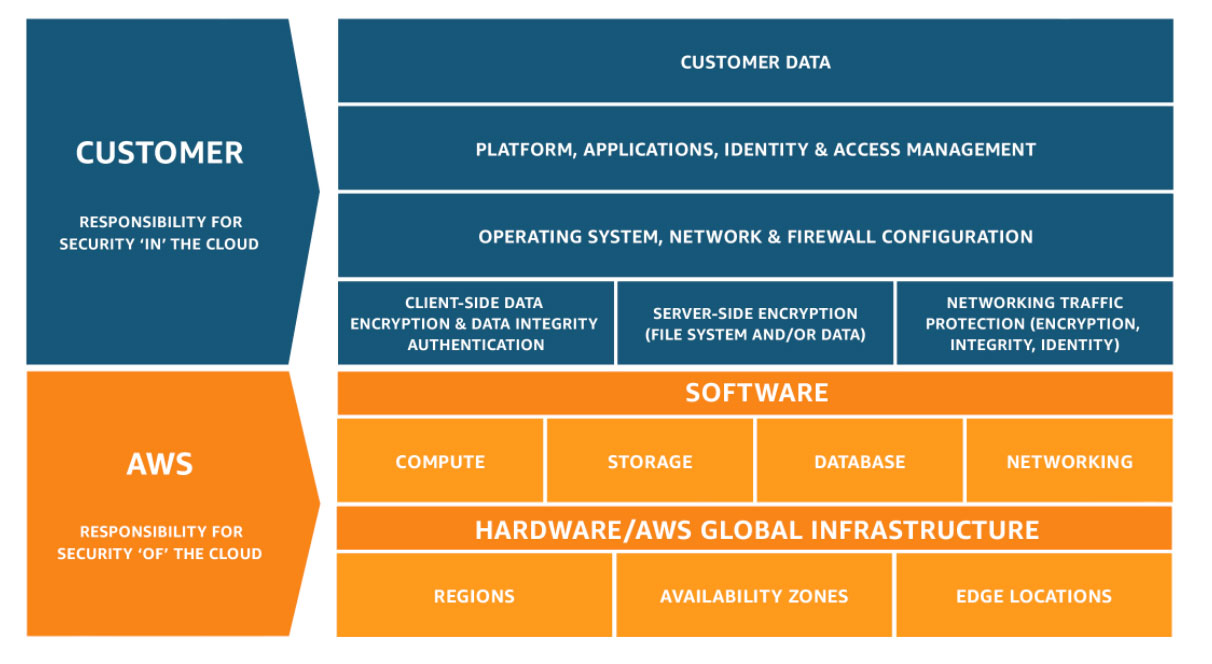

Shared Responsibility Model

The AWS shared responsibility model applies to data protection in Amazon Relational Database Service. As described in this model, AWS is responsible for protecting the global infrastructure that runs all of the AWS Cloud. You are responsible for maintaining control over your content that is hosted on this infrastructure. This content includes the security configuration and management tasks for the AWS services that you use. For more information about data privacy, see the Data Privacy FAQ. For information about data protection in Europe, see the AWS Shared Responsibility Model and GDPR blog post on the AWS Security Blog.

For data protection purposes, we recommend that you protect AWS account credentials and set up individual user accounts with AWS Identity and Access Management (IAM). That way each user is given only the permissions necessary to fulfill their job duties. We also recommend that you secure your data in the following ways:

- Use multi-factor authentication (MFA) with each account.

- Use SSL/TLS to communicate with AWS resources. We recommend TLS 1.2 or later.

- Set up API and user activity logging with AWS CloudTrail.

- Use AWS encryption solutions, along with all default security controls within AWS services.

- Use advanced managed security services such as Amazon Macie, which assists in discovering and securing personal data that is stored in Amazon S3.

- If you require FIPS 140-2 validated cryptographic modules when accessing AWS through a command line interface or an API, use a FIPS endpoint. For more information about the available FIPS endpoints, see Federal Information Processing Standard (FIPS) 140-2.

We strongly recommend that you never put sensitive identifying information, such as your customers’ account numbers, into free-form fields such as a Name field. This includes when you work with Amazon RDS or other AWS services using the console, API, AWS CLI, or AWS SDKs. Any data that you enter into Amazon RDS or other services might get picked up for inclusion in diagnostic logs. When you provide a URL to an external server, don’t include credentials information in the URL to validate your request to that server.

Identity and access management in Amazon RDS

AWS Identity and Access Management (IAM) is an AWS service that helps an administrator securely control access to AWS resources. IAM administrators control who can be authenticated (signed in) and authorized (have permissions) to use Amazon RDS resources. IAM is an AWS service that you can use with no additional charge.

Amazon RDS identity-based policy examples

Policy best practices

Identity-based policies are very powerful. They determine whether someone can create, access, or delete Amazon RDS resources in your account. These actions can incur costs for your AWS account. When you create or edit identity-based policies, follow these guidelines and recommendations:

- Get Started Using AWS Managed Policies – To start using Amazon RDS quickly, use AWS managed policies to give your employees the permissions they need. These policies are already available in your account and are maintained and updated by AWS. For more information, see Get started using permissions with AWS managed policies in the IAM User Guide.

- Grant Least Privilege – When you create custom policies, grant only the permissions required to perform a task. Start with a minimum set of permissions and grant additional permissions as necessary. Doing so is more secure than starting with permissions that are too lenient and then trying to tighten them later. For more information, see Grant least privilege in the IAM User Guide.

- Enable MFA for Sensitive Operations – For extra security, require IAM users to use multi-factor authentication (MFA) to access sensitive resources or API operations. For more information, see Using multi-factor authentication (MFA) in AWS in the IAM User Guide.

- Use Policy Conditions for Extra Security – To the extent that it’s practical, define the conditions under which your identity-based policies allow access to a resource. For example, you can write conditions to specify a range of allowable IP addresses that a request must come from. You can also write conditions to allow requests only within a specified date or time range, or to require the use of SSL or MFA. For more information, see IAM JSON policy elements: Condition in the IAM User Guide.
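To make the least-privilege and policy-condition guidelines concrete, here is a sketch that assembles an identity-based policy document in Python. The chosen actions, the function name, and the example address range are assumptions for illustration; the condition keys aws:SourceIp and aws:MultiFactorAuthPresent are standard IAM global condition keys.

```python
import json


def read_only_rds_policy(allowed_cidr):
    """Return an identity-based policy document as a JSON string."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Least privilege: only two read-only actions are granted.
                "Action": ["rds:DescribeDBInstances", "rds:DescribeDBSnapshots"],
                "Resource": "*",
                "Condition": {
                    # Requests must come from the allowed address range ...
                    "IpAddress": {"aws:SourceIp": allowed_cidr},
                    # ... and must be MFA-authenticated.
                    "Bool": {"aws:MultiFactorAuthPresent": "true"},
                },
            }
        ],
    }
    return json.dumps(policy, indent=2)


if __name__ == "__main__":
    # 203.0.113.0/24 is a documentation-only address range.
    print(read_only_rds_policy("203.0.113.0/24"))
```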

Secrets Management

AWS Secrets Manager helps you protect secrets needed to access your applications, services, and IT resources. The service enables you to easily rotate, manage, and retrieve database credentials, API keys, and other secrets throughout their lifecycle. Users and applications retrieve secrets with a call to Secrets Manager APIs, eliminating the need to hardcode sensitive information in plain text. Secrets Manager offers secret rotation with built-in integration for Amazon RDS, Amazon Redshift, and Amazon DocumentDB. Also, the service is extensible to other types of secrets, including API keys and OAuth tokens. In addition, Secrets Manager enables you to control access to secrets using fine-grained permissions and audit secret rotation centrally for resources in the AWS Cloud, third-party services, and on-premises.

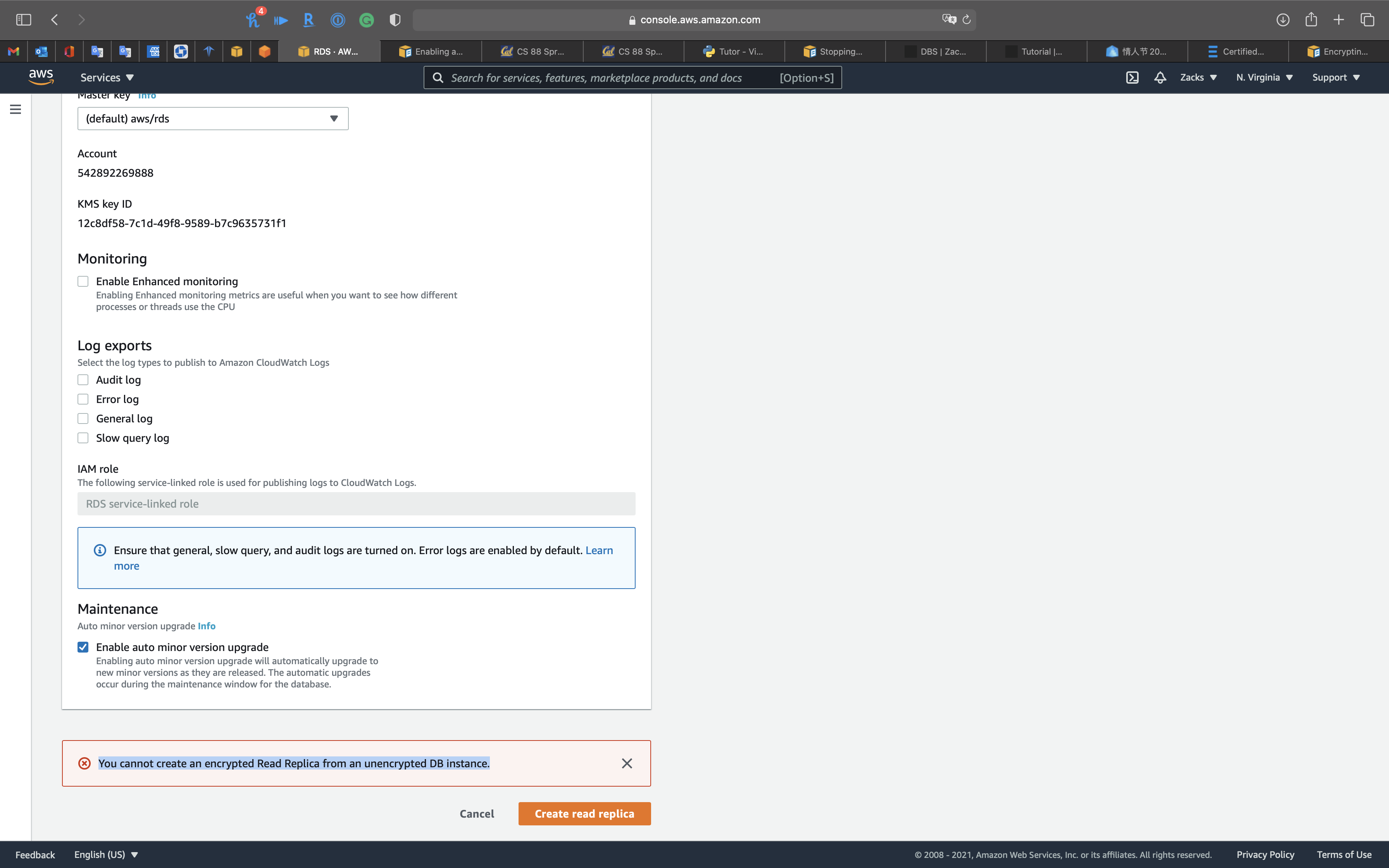
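Retrieving database credentials at run time, instead of hardcoding them, might look like the sketch below. It assumes the boto3 Secrets Manager client, whose get_secret_value call returns the secret in a SecretString field, and it assumes the secret is stored as JSON with username, password, host, and port keys. The function name and secret name are hypothetical.

```python
import json


def get_db_credentials(secrets_client, secret_id):
    """Fetch and decode a database secret.

    `secrets_client` is expected to behave like boto3.client("secretsmanager").
    """
    response = secrets_client.get_secret_value(SecretId=secret_id)
    secret = json.loads(response["SecretString"])
    # Assumption: the secret stores these four keys as JSON.
    return {key: secret[key] for key in ("username", "password", "host", "port")}
```

With credentials configured, a call could look like get_db_credentials(boto3.client("secretsmanager"), "prod/mysql"), where the secret name is a placeholder. Passing the client in as an argument keeps the function easy to test with a stand-in object.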

Encryption

Encryption at rest when creating an RDS DB instance

To enable encryption at rest for an existing unencrypted DB instance, you can create a snapshot of your DB instance, and then create an encrypted copy of that snapshot. You can then restore a DB instance from the encrypted snapshot so you have an encrypted copy of your original DB instance.

- Create a snapshot of the database.

- Create an encrypted copy of the snapshot.

- Create a new database from the encrypted snapshot.
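The three steps above can be sketched with boto3's RDS client. The snapshot naming scheme, the function name, and the KMS key alias are assumptions for illustration, and error handling is omitted. The client is passed in as an argument so the flow can be exercised with a stand-in object.

```python
def encrypt_existing_instance(rds, source_id, target_id, kms_key_id):
    """Snapshot, encrypt-copy, and restore.

    `rds` is expected to behave like boto3.client("rds").
    """
    plain_snapshot = f"{source_id}-plain"          # hypothetical naming scheme
    encrypted_snapshot = f"{source_id}-encrypted"
    waiter = rds.get_waiter("db_snapshot_available")

    # 1. Create a snapshot of the unencrypted database.
    rds.create_db_snapshot(
        DBSnapshotIdentifier=plain_snapshot, DBInstanceIdentifier=source_id
    )
    waiter.wait(DBSnapshotIdentifier=plain_snapshot)

    # 2. Create an encrypted copy; supplying a KMS key encrypts the copy.
    rds.copy_db_snapshot(
        SourceDBSnapshotIdentifier=plain_snapshot,
        TargetDBSnapshotIdentifier=encrypted_snapshot,
        KmsKeyId=kms_key_id,
    )
    waiter.wait(DBSnapshotIdentifier=encrypted_snapshot)

    # 3. Restore a new DB instance from the encrypted snapshot.
    rds.restore_db_instance_from_db_snapshot(
        DBInstanceIdentifier=target_id, DBSnapshotIdentifier=encrypted_snapshot
    )
    return encrypted_snapshot
```

The restored instance has a new identifier and endpoint, so applications still need to be pointed at it after the restore completes.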

Encryption at rest for an unencrypted RDS DB instance

You cannot create an encrypted Read Replica from an unencrypted DB instance.

Limitations of Amazon RDS encrypted DB instances

The following limitations exist for Amazon RDS encrypted DB instances:

- You can only enable encryption for an Amazon RDS DB instance when you create it, not after the DB instance is created.

However, because you can encrypt a copy of an unencrypted snapshot, you can effectively add encryption to an unencrypted DB instance. That is, you can create a snapshot of your DB instance, and then create an encrypted copy of that snapshot. You can then restore a DB instance from the encrypted snapshot, and thus you have an encrypted copy of your original DB instance. For more information, see Copying a snapshot.

Availability and Durability

Automated Backup

Manual Snapshot

Creating a DB snapshot

Exporting DB snapshot data to Amazon S3

Creating a DB snapshot

Unlike automated backups, manual snapshots aren’t subject to the backup retention period. Snapshots don’t expire.

For very long-term backups of MariaDB, MySQL, and PostgreSQL data, we recommend exporting snapshot data to Amazon S3. If the major version of your DB engine is no longer supported, you can’t restore to that version from a snapshot. For more information, see Exporting DB snapshot data to Amazon S3.

Share snapshot

An Operations team in a large company wants to centrally manage resource provisioning for its development teams across multiple accounts. When a new AWS account is created, the Developers require full privileges for a database environment that uses the same configuration, data schema, and source data as the company’s production Amazon RDS for MySQL DB instance.

How can the operations team achieve this?

A manual DB snapshot can be shared privately with other AWS accounts. AWS CloudFormation StackSets extends the functionality of stacks by enabling you to create, update, or delete stacks across multiple accounts and Regions with a single operation. Using an administrator account, you define and manage an AWS CloudFormation template, and use the template as the basis for provisioning stacks into selected target accounts across specified Regions.

Take a manual snapshot of the source DB instance and share the snapshot privately with the new account.

Specify the snapshot ARN in an RDS resource in an AWS CloudFormation template and use StackSets to deploy to the new account.

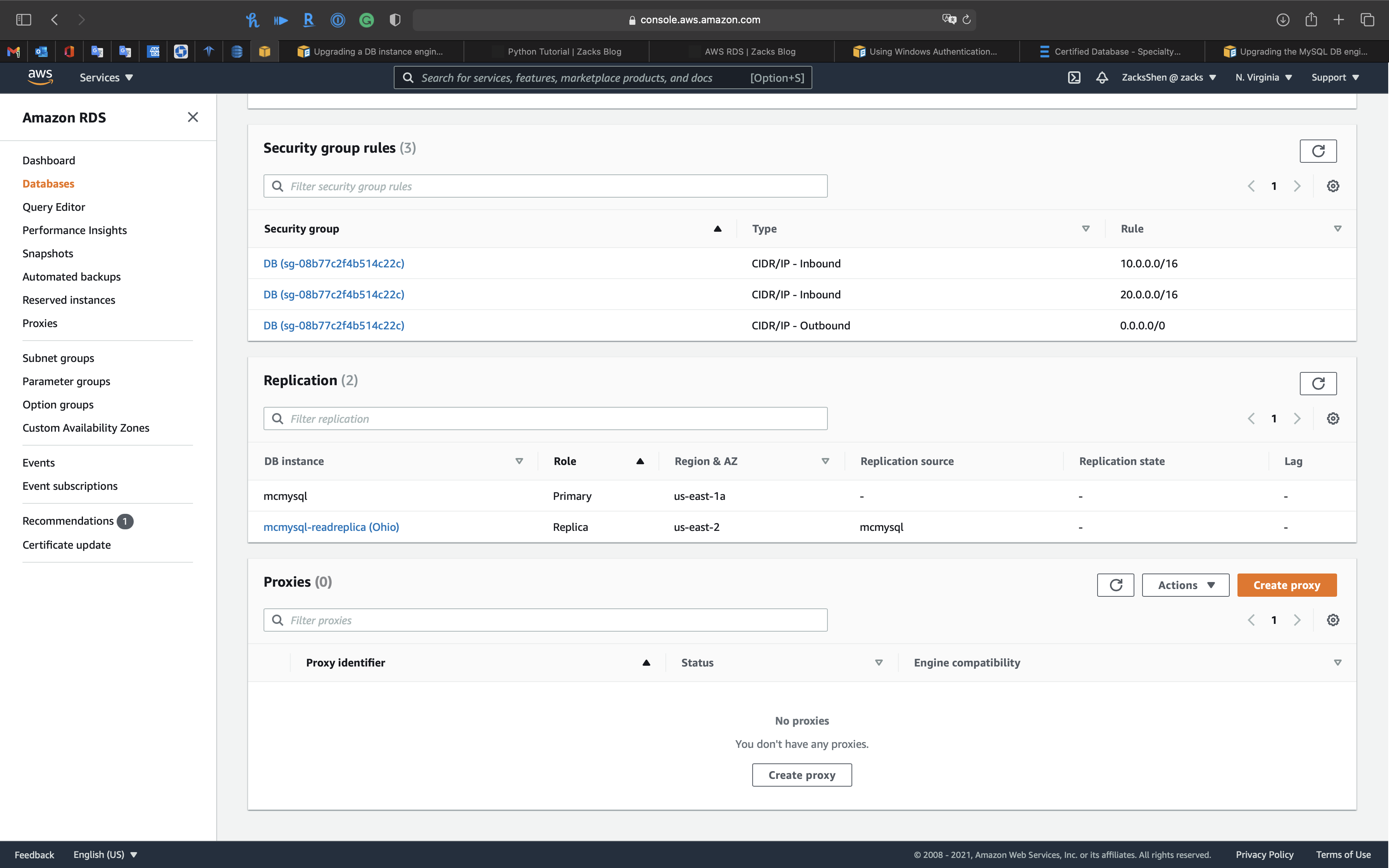
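Sharing a manual snapshot privately with one account can be sketched as follows. The helper builds the parameters for boto3's rds.modify_db_snapshot_attribute call, where the restore attribute lists the accounts allowed to restore the snapshot. The function name, snapshot name, and account ID are hypothetical.

```python
def share_snapshot_request(snapshot_id, account_id):
    """Build keyword arguments for boto3's rds.modify_db_snapshot_attribute."""
    if not (account_id.isdigit() and len(account_id) == 12):
        raise ValueError("AWS account IDs are 12-digit numbers")
    return {
        "DBSnapshotIdentifier": snapshot_id,
        "AttributeName": "restore",   # permission to restore from the snapshot
        "ValuesToAdd": [account_id],  # naming one account keeps the share private
    }


if __name__ == "__main__":
    # Placeholder snapshot name and account ID.
    print(share_snapshot_request("prod-mysql-manual", "111122223333"))
```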

Multi-AZs

Read Replica

Under Connectivity & security tab

Prerequisite

Working with MySQL read replicas

Working with Oracle replicas for Amazon RDS

Working with PostgreSQL read replicas in Amazon RDS

Before an RDS DB instance can serve as a replication source, make sure to enable automatic backups on the source DB instance.

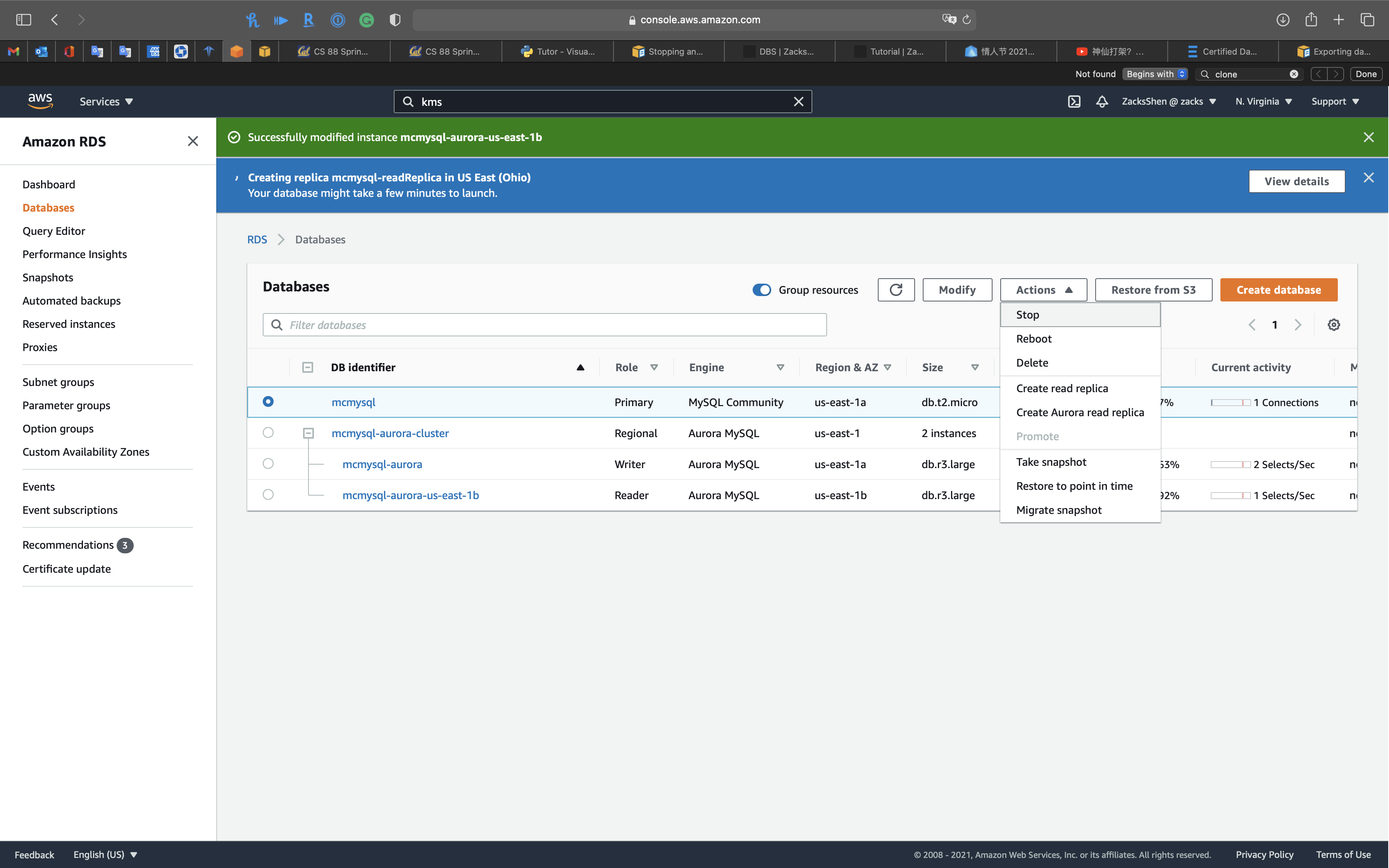

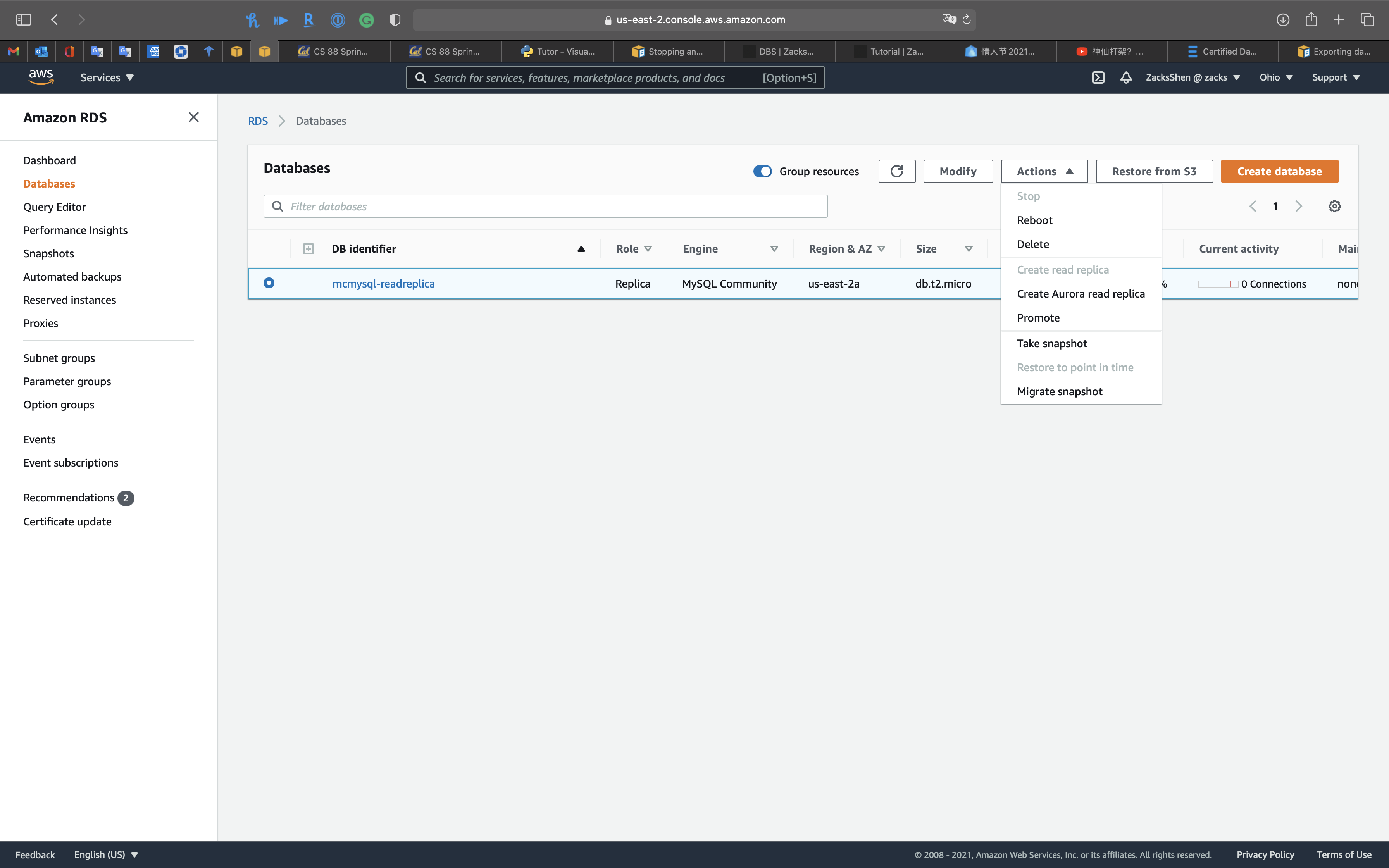

Delete Read Replica

Delete each read replica before stopping its corresponding source instance.

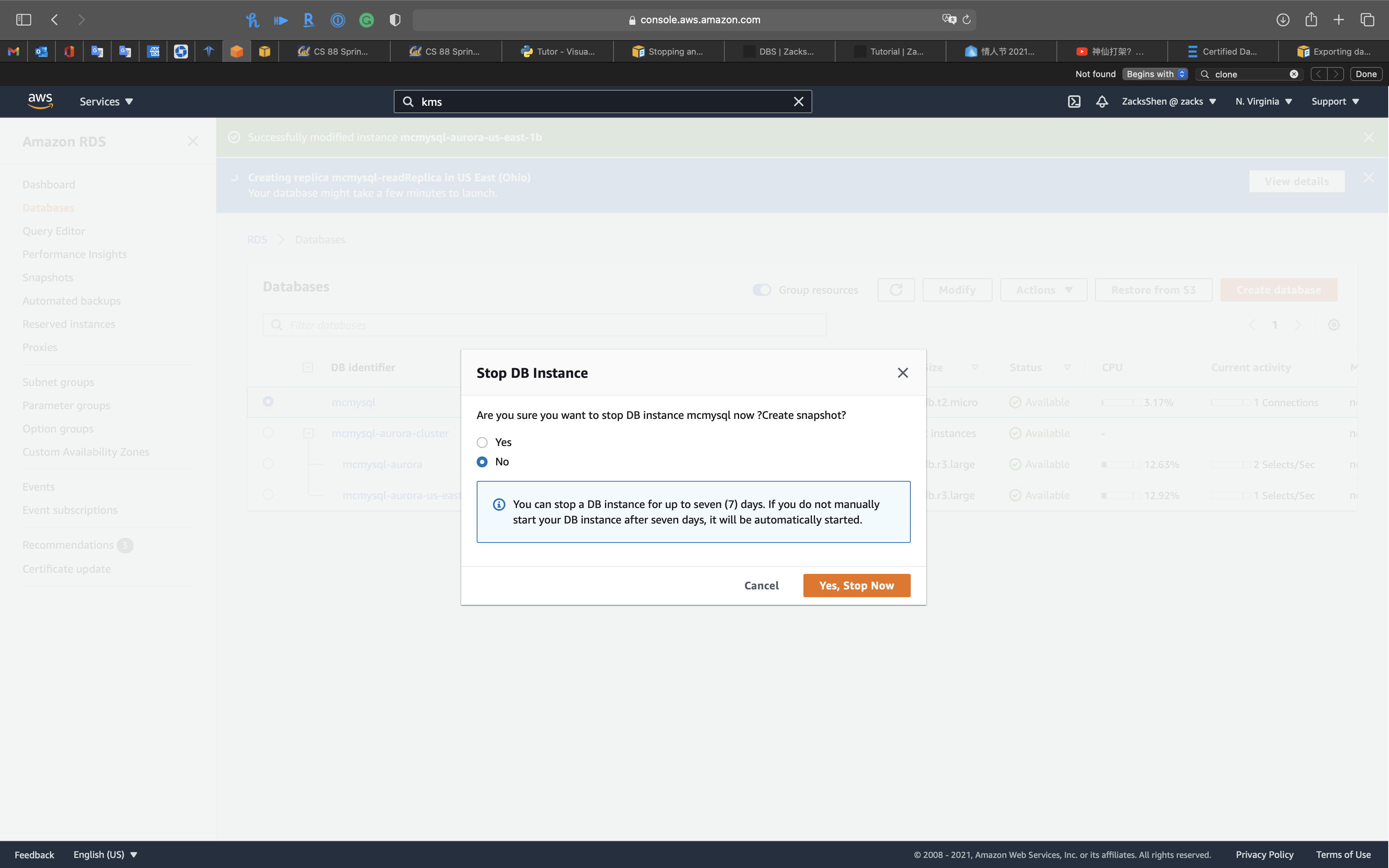

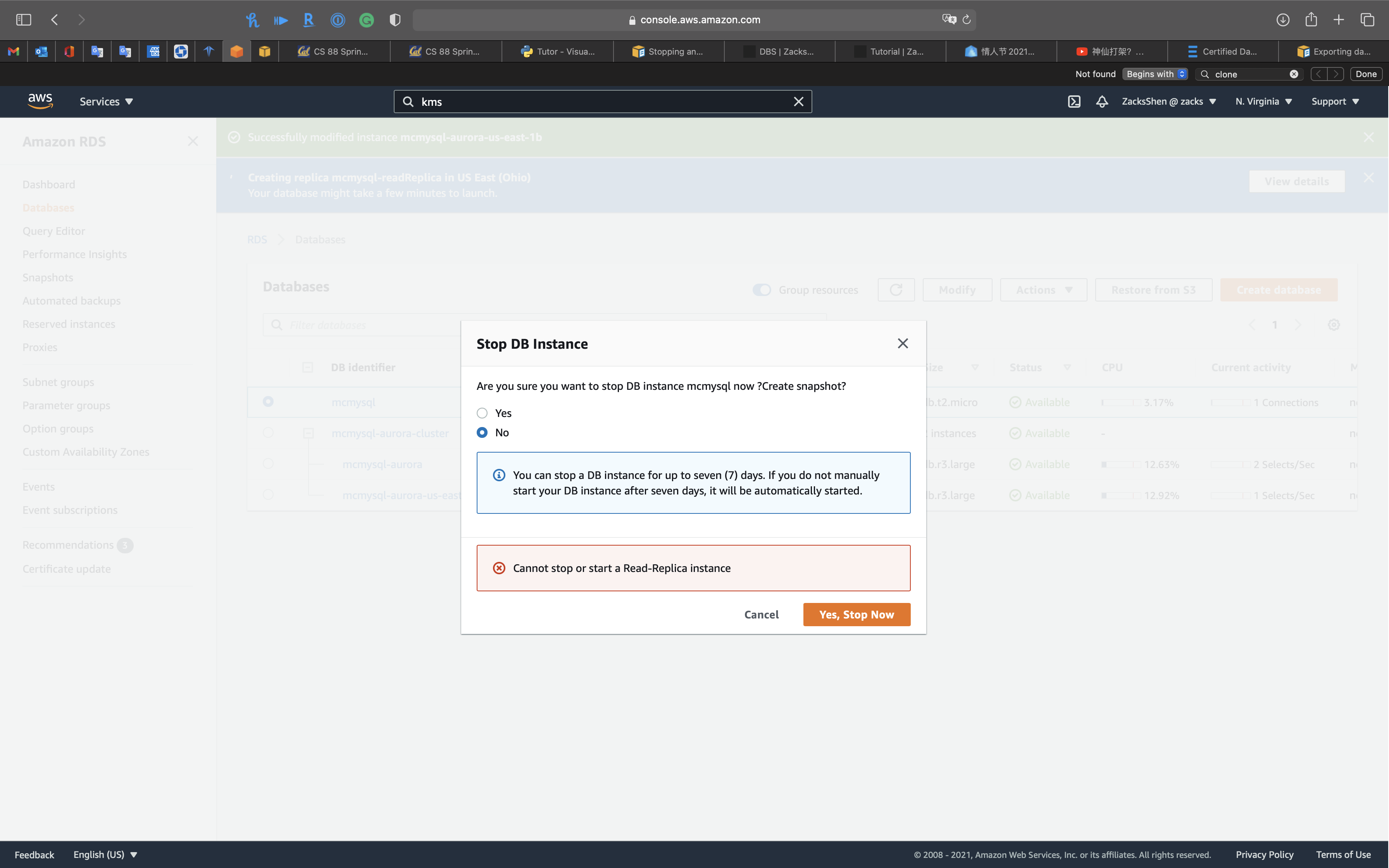

Stopping an Amazon RDS DB instance temporarily

Starting and stopping replication with MySQL read replicas

Stopping an Amazon RDS DB instance - Limitation

The following are some limitations to stopping and starting a DB instance:

- You can’t stop a DB instance that has a read replica, or that is a read replica.

- You can’t stop an Amazon RDS for SQL Server DB instance in a Multi-AZ configuration.

- You can’t modify a stopped DB instance.

- You can’t delete an option group that is associated with a stopped DB instance.

- You can’t delete a DB parameter group that is associated with a stopped DB instance.

Starting and stopping replication with MySQL read replicas

If replication is stopped for more than 30 consecutive days, either manually or due to a replication error, Amazon RDS terminates replication between the source DB instance and all read replicas. It does so to prevent increased storage requirements on the source DB instance and long failover times. The read replica DB instance is still available. However, replication can’t be resumed because the binary logs required by the read replica are deleted from the source DB instance after replication is terminated. You can create a new read replica for the source DB instance to reestablish replication.

If you try to stop an RDS DB instance that has a read replica

And there is NO option for stopping a read replica through AWS console

Disaster Recovery

RTO & RPO

Disaster Recovery (DR) Objectives

Plan for Disaster Recovery (DR)

Implementing a disaster recovery strategy with Amazon RDS

| Feature | RTO | RPO | Cost | Scope |
|---|---|---|---|---|
| Automated backups (once a day) | Good | Better | Low | Single Region |
| Manual snapshots | Better | Good | Medium | Cross-Region |
| Read replicas | Best | Best | High | Cross-Region |

In addition to availability objectives, your resiliency strategy should also include Disaster Recovery (DR) objectives based on strategies to recover your workload in case of a disaster event. Disaster Recovery focuses on one-time recovery objectives in response to natural disasters, large-scale technical failures, or human threats such as attack or error. This is different from availability, which measures mean resiliency over a period of time in response to component failures, load spikes, or software bugs.

Define recovery objectives for downtime and data loss: The workload has a recovery time objective (RTO) and recovery point objective (RPO).

- Recovery Time Objective (RTO) is defined by the organization. RTO is the maximum acceptable delay between the interruption of service and restoration of service. This determines what is considered an acceptable time window when service is unavailable.

- Recovery Point Objective (RPO) is defined by the organization. RPO is the maximum acceptable amount of time since the last data recovery point. This determines what is considered an acceptable loss of data between the last recovery point and the interruption of service.

Recovery Strategies

Use defined recovery strategies to meet the recovery objectives: A disaster recovery (DR) strategy has been defined to meet objectives. Choose a strategy such as: backup and restore, active/passive (pilot light or warm standby), or active/active.

When architecting a multi-region disaster recovery strategy for your workload, you should choose one of the following multi-region strategies. They are listed in increasing order of complexity, and decreasing order of RTO and RPO. DR Region refers to an AWS Region other than the primary one used for your workload (or any AWS Region if your workload is on premises).

- Backup and restore (RPO in hours, RTO in 24 hours or less): Back up your data and applications using point-in-time backups into the DR Region. Restore this data when necessary to recover from a disaster.

- Pilot light (RPO in minutes, RTO in hours): Replicate your data from one region to another and provision a copy of your core workload infrastructure. Resources required to support data replication and backup such as databases and object storage are always on. Other elements such as application servers are loaded with application code and configurations, but are switched off and are only used during testing or when Disaster Recovery failover is invoked.

- Warm standby (RPO in seconds, RTO in minutes): Maintain a scaled-down but fully functional version of your workload always running in the DR Region. Business-critical systems are fully duplicated and are always on, but with a scaled down fleet. When the time comes for recovery, the system is scaled up quickly to handle the production load. The more scaled-up the Warm Standby is, the lower RTO and control plane reliance will be. When scaled up to full scale this is known as a Hot Standby.

- Multi-region (multi-site) active-active (RPO near zero, RTO potentially zero): Your workload is deployed to, and actively serving traffic from, multiple AWS Regions. This strategy requires you to synchronize data across Regions. Possible conflicts caused by writes to the same record in two different regional replicas must be avoided or handled. Data replication is useful for data synchronization and will protect you against some types of disaster, but it will not protect you against data corruption or destruction unless your solution also includes options for point-in-time recovery. Use services like Amazon Route 53 or AWS Global Accelerator to route your user traffic to where your workload is healthy. For more details on AWS services you can use for active-active architectures see the AWS Regions section of Use Fault Isolation to Protect Your Workload.

Recommendation

The difference between Pilot Light and Warm Standby can sometimes be difficult to understand. Both include an environment in your DR Region with copies of your primary region assets. The distinction is that Pilot Light cannot process requests without additional action taken first, while Warm Standby can handle traffic (at reduced capacity levels) immediately. Pilot Light will require you to turn on servers, possibly deploy additional (non-core) infrastructure, and scale up, while Warm Standby only requires you to scale up (everything is already deployed and running). Choose between these based on your RTO and RPO needs.

Tips

Test disaster recovery implementation to validate the implementation: Regularly test failover to DR to ensure that RTO and RPO are met.

A pattern to avoid is developing recovery paths that are rarely executed. For example, you might have a secondary data store that is used for read-only queries. When you write to a data store and the primary fails, you might want to fail over to the secondary data store. If you don’t frequently test this failover, you might find that your assumptions about the capabilities of the secondary data store are incorrect. The capacity of the secondary, which might have been sufficient when you last tested, may no longer be able to tolerate the load under this scenario. Our experience has shown that the only error recovery that works is the path you test frequently. This is why having a small number of recovery paths is best. You can establish recovery patterns and regularly test them. If you have a complex or critical recovery path, you still need to regularly execute that failure in production to convince yourself that the recovery path works. In the example we just discussed, you should fail over to the standby regularly, regardless of need.

Manage configuration drift at the DR site or region: Ensure that your infrastructure, data, and configuration are as needed at the DR site or region. For example, check that AMIs and service quotas are up to date.

AWS Config continuously monitors and records your AWS resource configurations. It can detect drift and trigger AWS Systems Manager Automation to fix it and raise alarms. AWS CloudFormation can additionally detect drift in stacks you have deployed.

Automate recovery: Use AWS or third-party tools to automate system recovery and route traffic to the DR site or region.

Based on configured health checks, AWS services, such as Elastic Load Balancing and AWS Auto Scaling, can distribute load to healthy Availability Zones while services, such as Amazon Route 53 and AWS Global Accelerator, can route load to healthy AWS Regions.

For workloads on existing physical or virtual data centers or private clouds, CloudEndure Disaster Recovery, available through AWS Marketplace, enables organizations to set up an automated disaster recovery strategy to AWS. CloudEndure also supports cross-region / cross-AZ disaster recovery in AWS.

Examples

Backup and restore is the most cost-effective solution to provide a 2-hour RTO and 8-hour RPO. Manual hourly snapshots need to be copied to the second Region to be available for the creation of the new database. Taking the snapshots every hour will keep the incremental snapshot size low, reduce the time to copy the snapshot across Regions, and meet the RPO. Also, taking snapshots frequently does not impact the cost. A pair of AWS Lambda functions can be scheduled to take the snapshot and copy it to the second Region.

Schedule an AWS Lambda function to create an hourly snapshot of the DB instance and another Lambda function to copy the snapshot to the second Region. For disaster recovery, create a new RDS Multi-AZ DB instance from the last snapshot.
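The reasoning behind the hourly schedule can be checked with simple arithmetic. In the worst case, a disaster strikes just before a new snapshot copy lands in the second Region, so the newest usable copy is one interval old plus the time it took to create and copy it. The snapshot, copy, and restore durations below are hypothetical placeholders, and the function names are not from any AWS API.

```python
def worst_case_rpo_minutes(snapshot_interval_min, snapshot_duration_min, copy_duration_min):
    """Age of the newest usable cross-Region copy just before the next one lands."""
    # Data written right after a snapshot starts is unprotected until the
    # next snapshot has been taken and copied to the second Region.
    return snapshot_interval_min + snapshot_duration_min + copy_duration_min


def meets_objectives(rpo_min, rto_min, rpo_target_min, rto_target_min):
    """True when both the achieved RPO and RTO are within their targets."""
    return rpo_min <= rpo_target_min and rto_min <= rto_target_min


if __name__ == "__main__":
    # Hourly snapshots; 10-minute snapshot and 20-minute copy are assumed values.
    rpo = worst_case_rpo_minutes(60, 10, 20)
    # Assumed 60-minute restore, checked against the 8-hour RPO and 2-hour RTO.
    print(rpo, meets_objectives(rpo, 60, 8 * 60, 2 * 60))
```

Under these assumed durations the worst-case data loss is 90 minutes, well inside the 8-hour RPO, while the 2-hour RTO depends on how long the restore itself takes.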

Migration

Monitoring

Event

RDS Event Notification

Amazon RDS uses the Amazon Simple Notification Service (Amazon SNS) to provide notification when an Amazon RDS event occurs. These notifications can be in any notification form supported by Amazon SNS for an AWS Region, such as an email, a text message, or a call to an HTTP endpoint.

Log

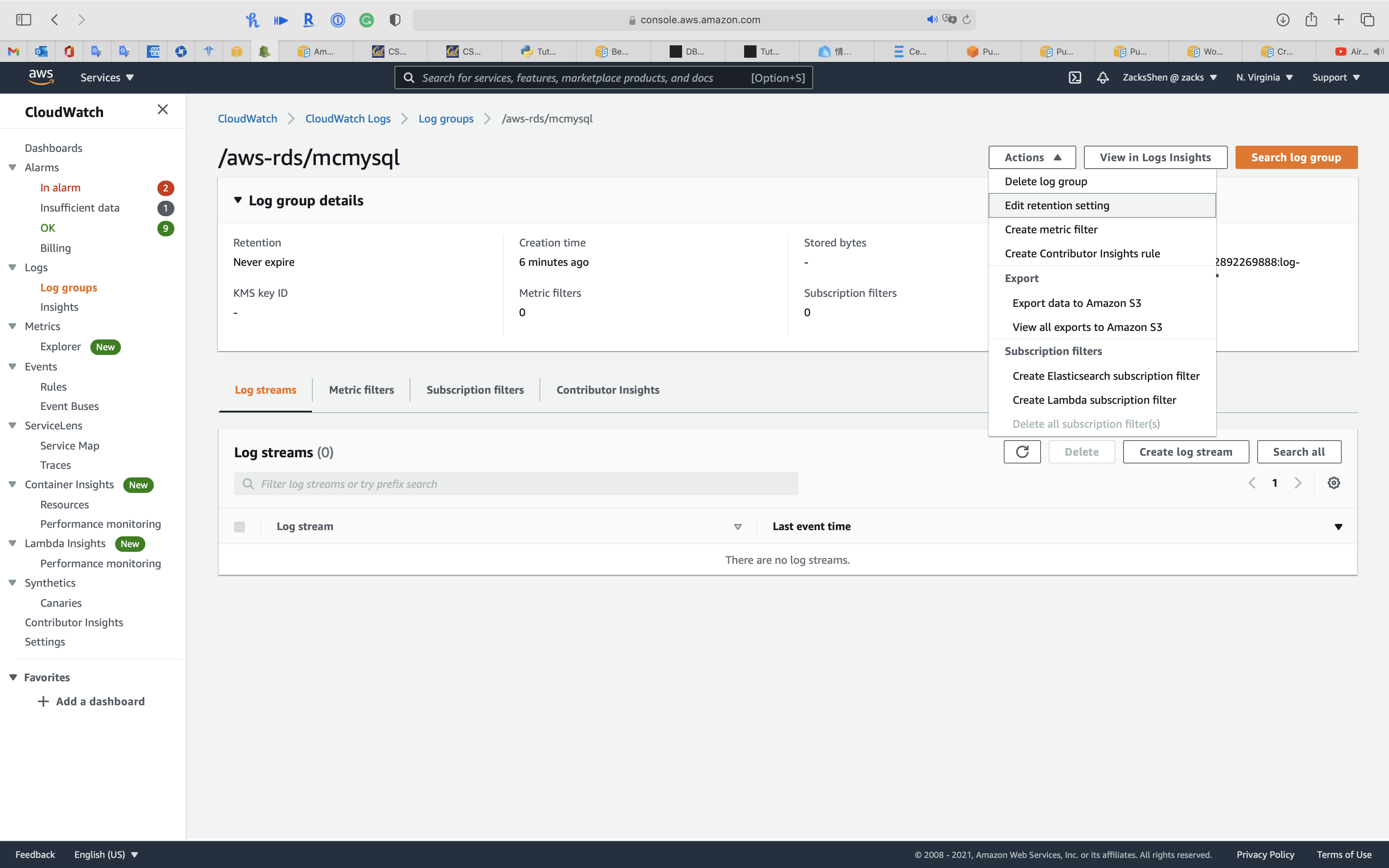

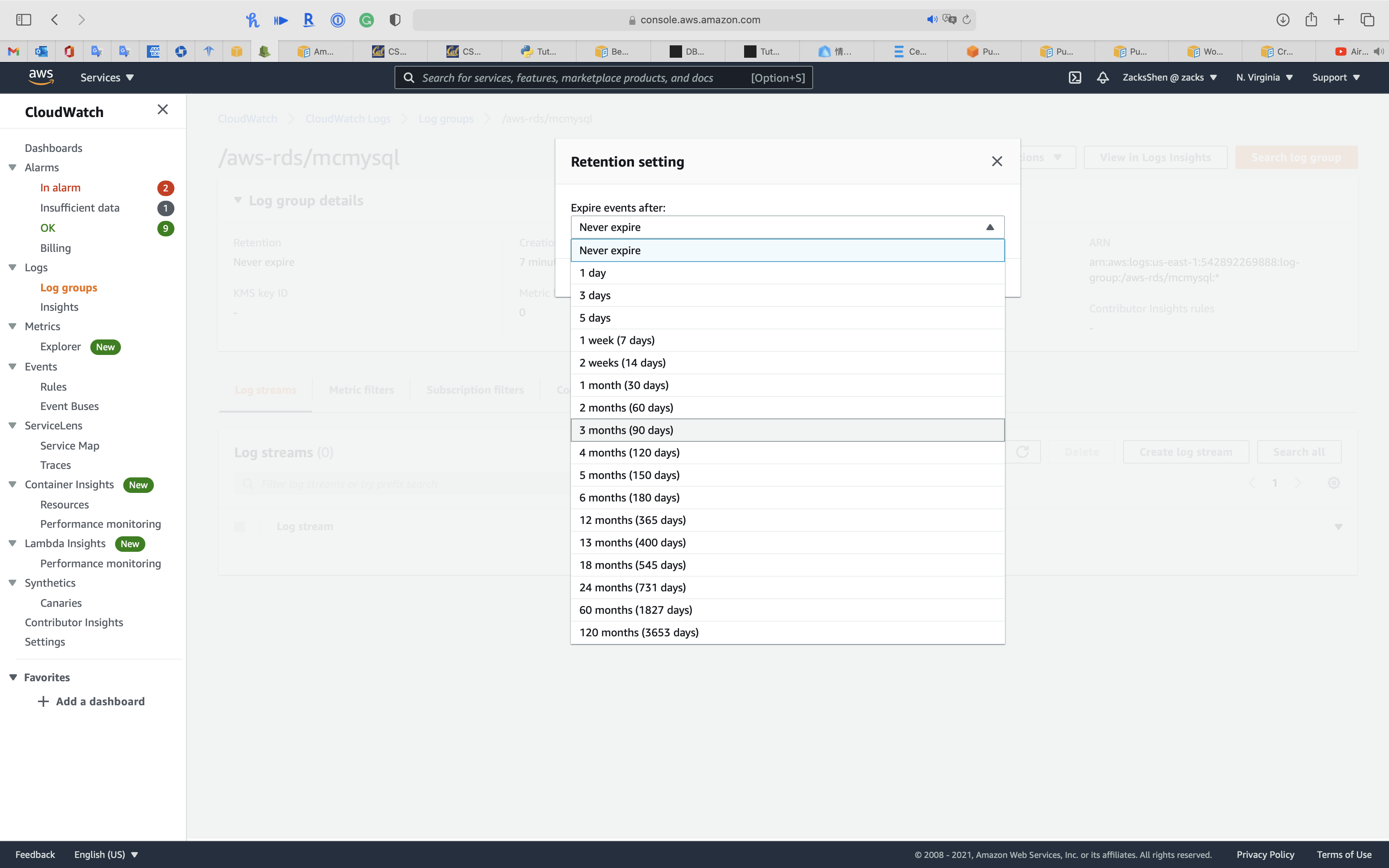

Subscribe Log and Update Retention Period

Subscribe Log

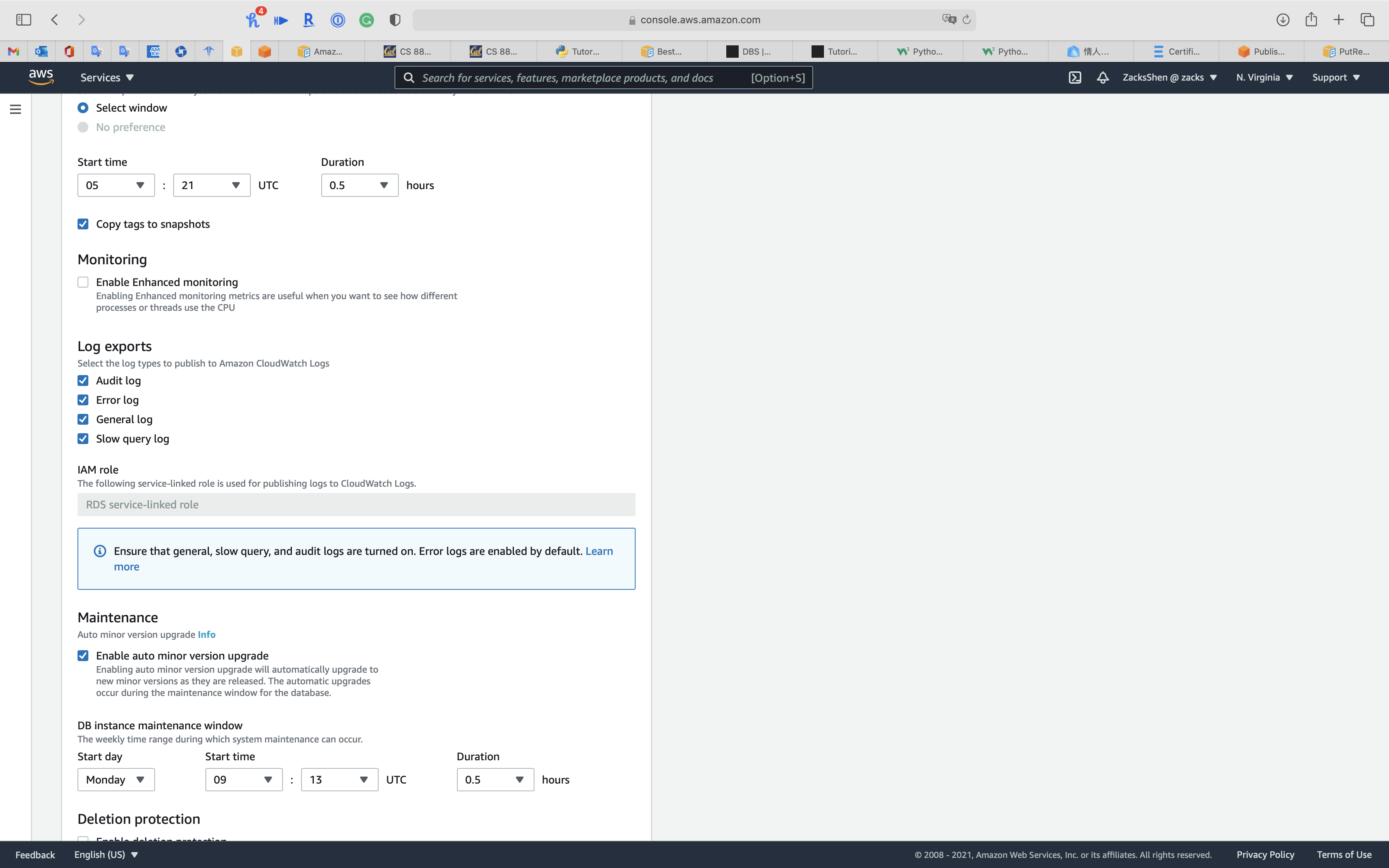

- Modify the RDS databases to publish log to Amazon CloudWatch Logs.

- Change the log retention policy for each log group to expire the events after 90 days.

How do I publish logs for Amazon RDS or Aurora for MySQL instances to CloudWatch?

PutRetentionPolicy

Publishing MySQL logs to CloudWatch Logs

Accessing Amazon RDS database log files

Publishing database engine logs to Amazon CloudWatch Logs

Creating Metrics From Log Events Using Filters

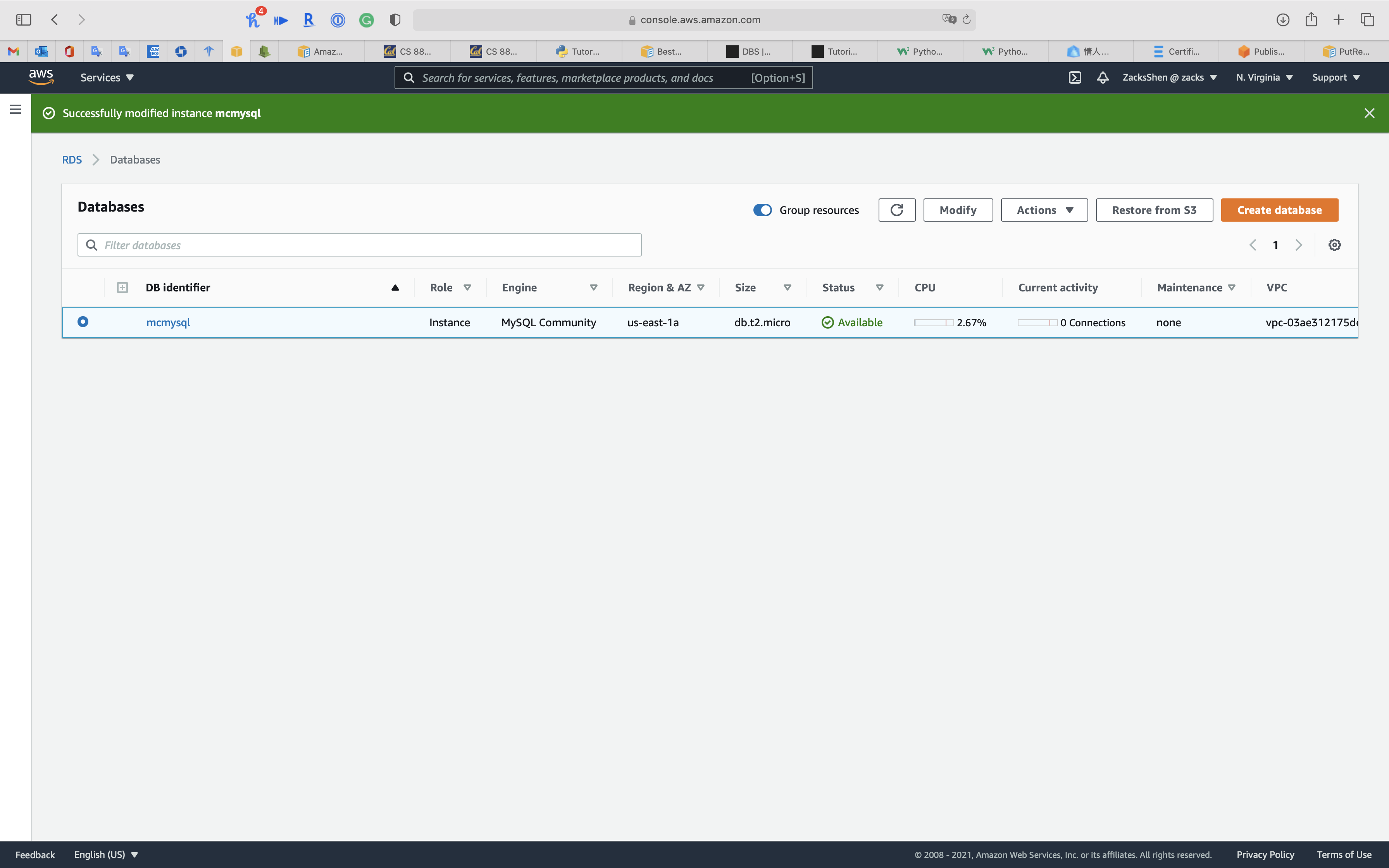

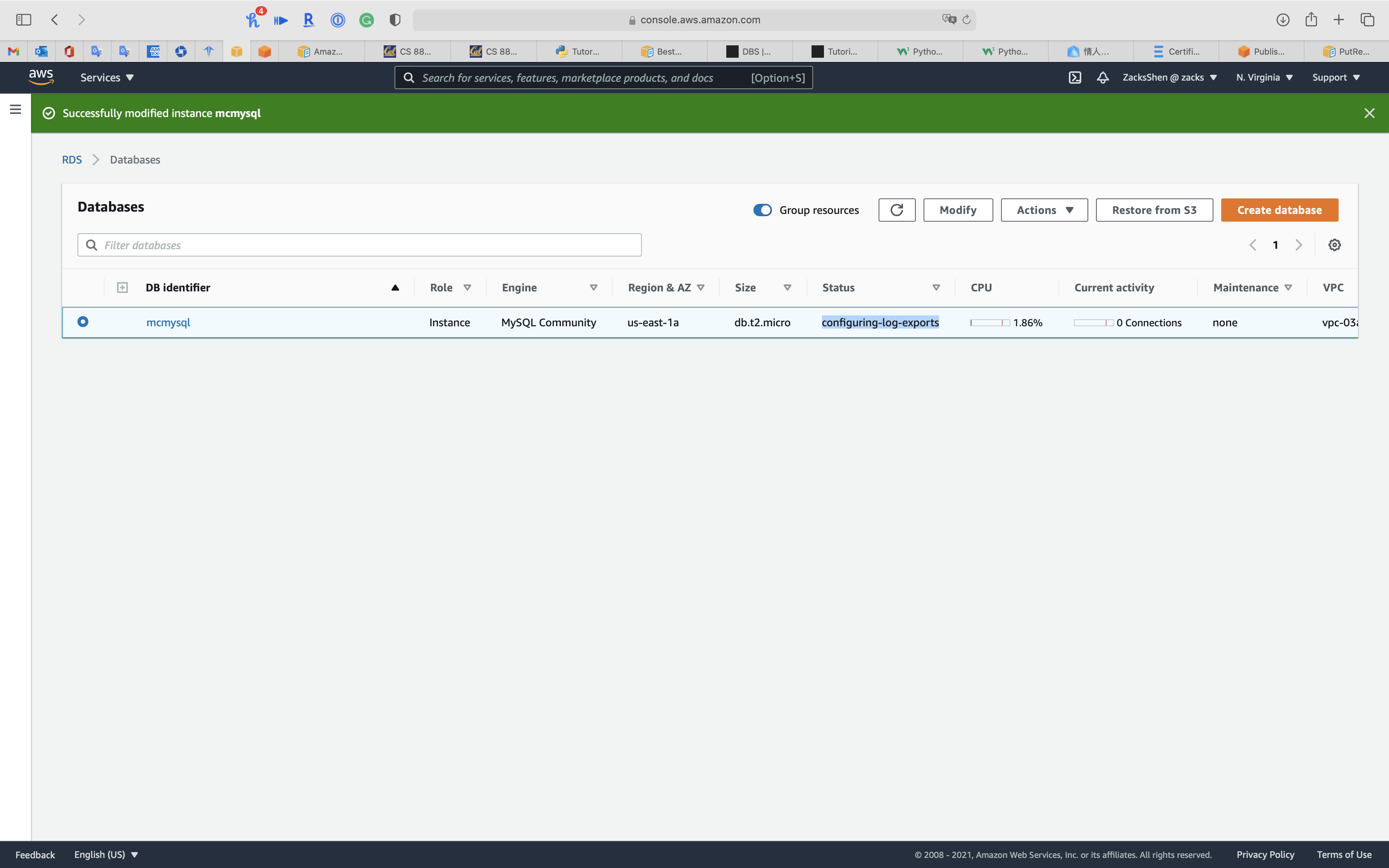

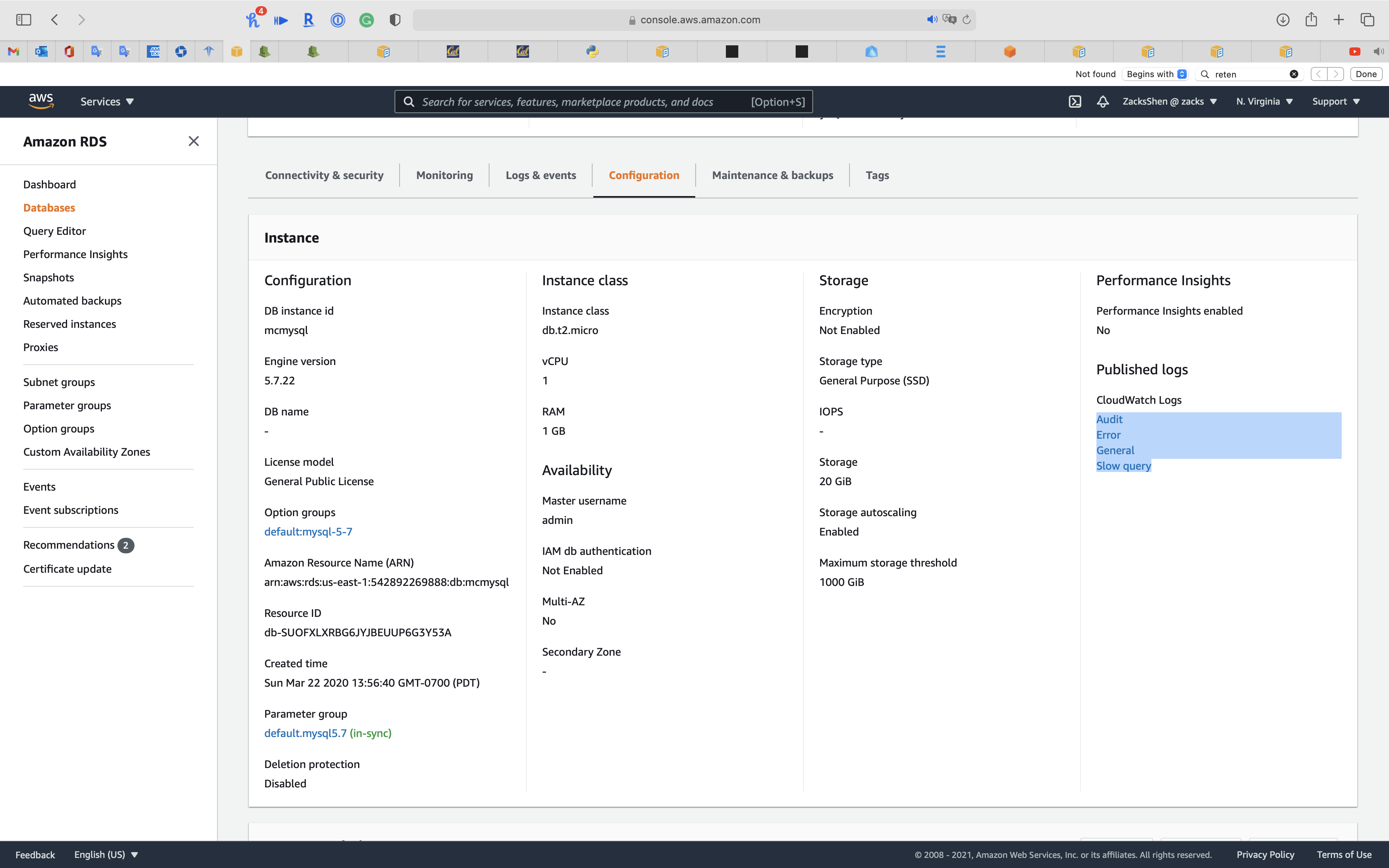

Services -> RDS -> Databases

Select your RDS DB instance and click on Modify

Scroll down and edit Log exports

Select the log types to publish to Amazon CloudWatch Logs

Wait until status switches to Available

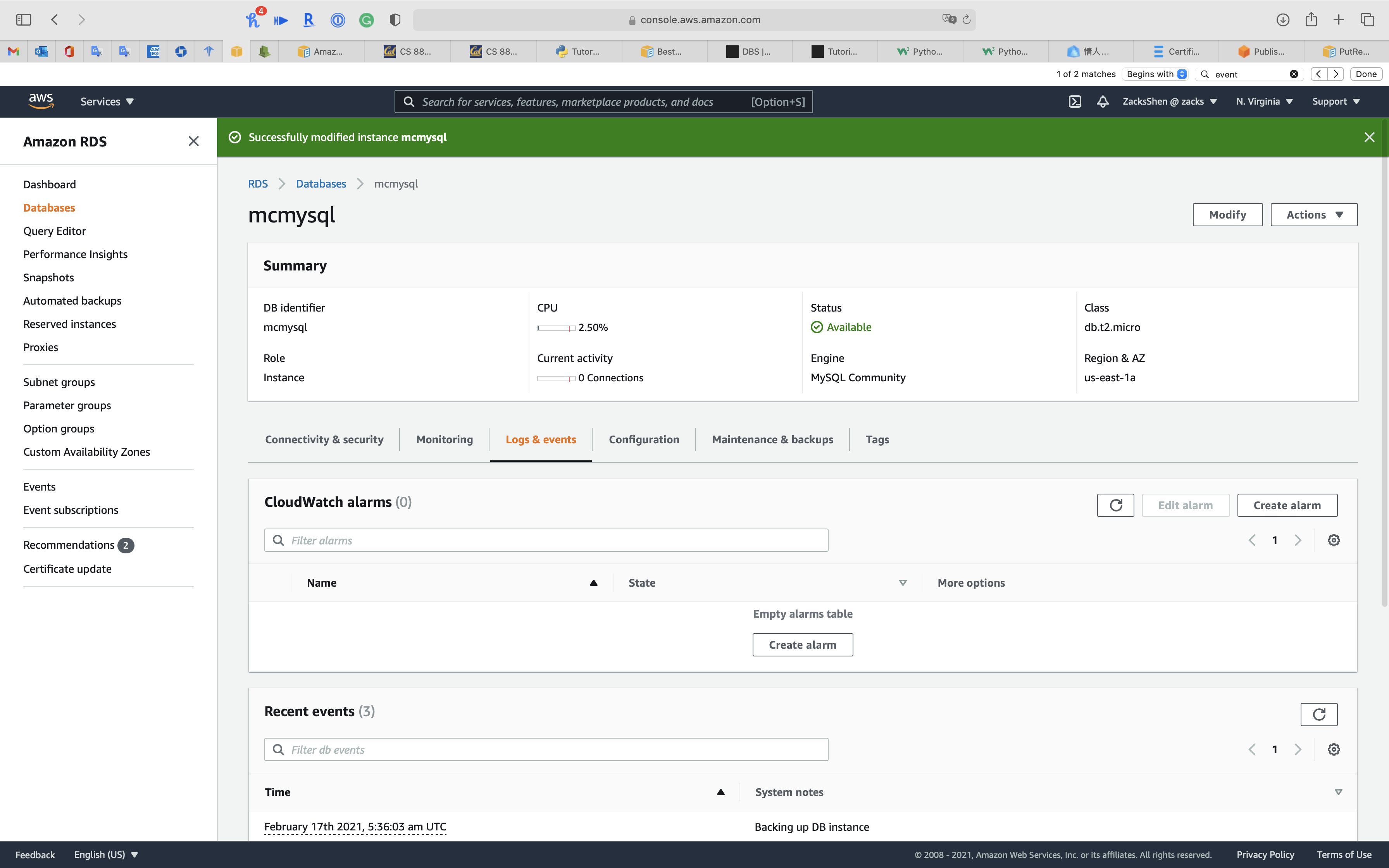

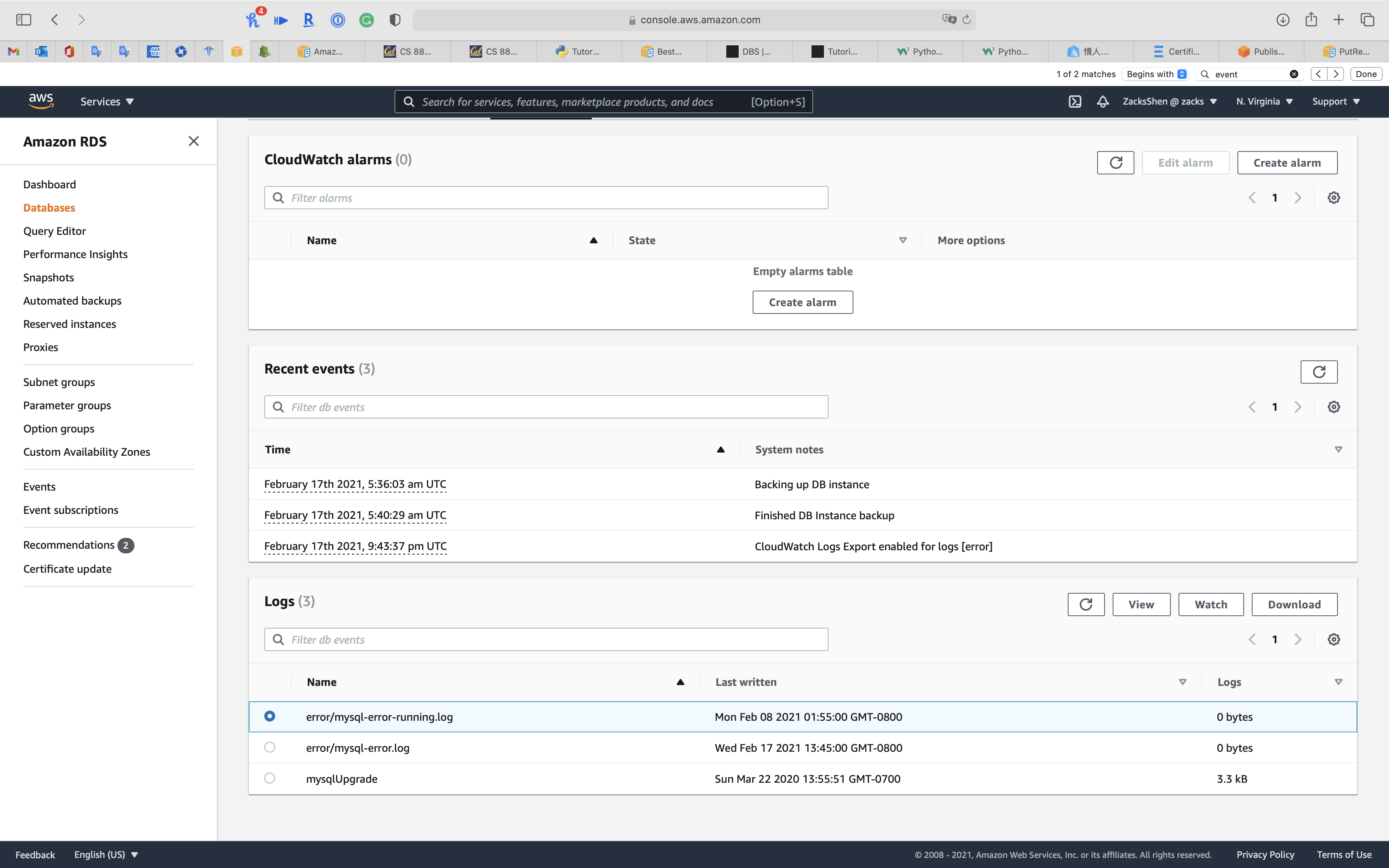

Click on your RDS DB instance and click on Logs & events tab

Scroll down to View, Watch, or Download logs

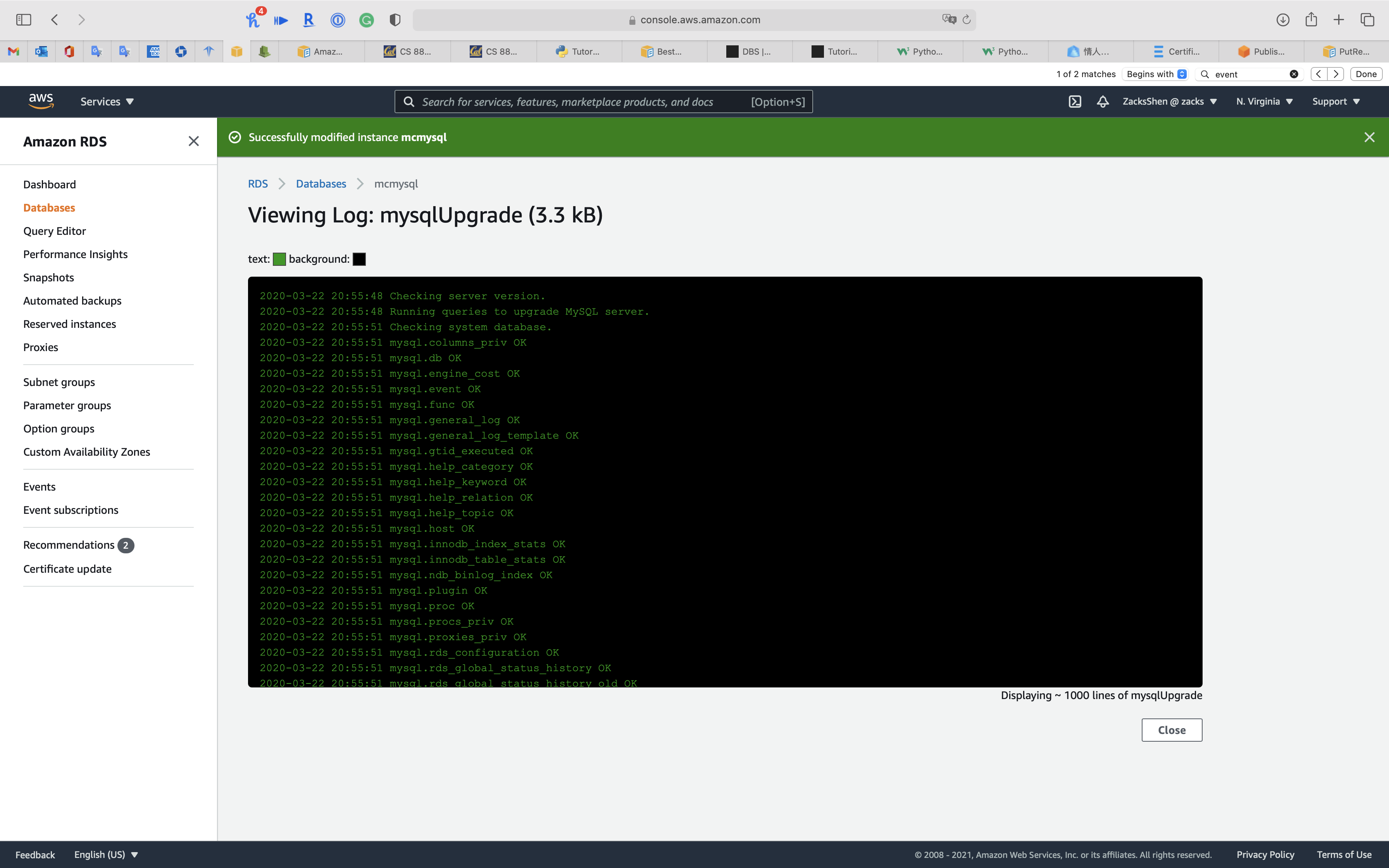

View

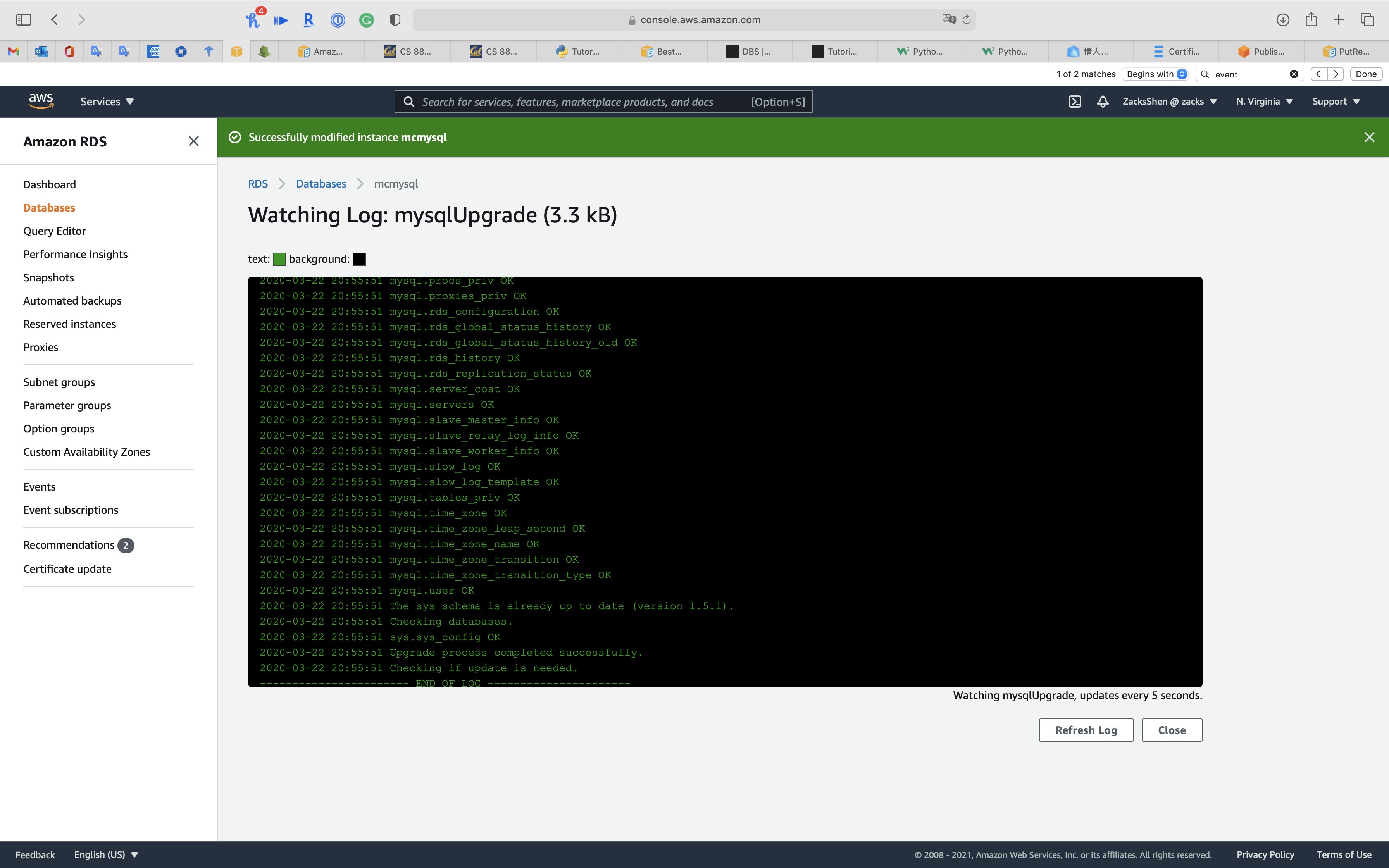

Watch

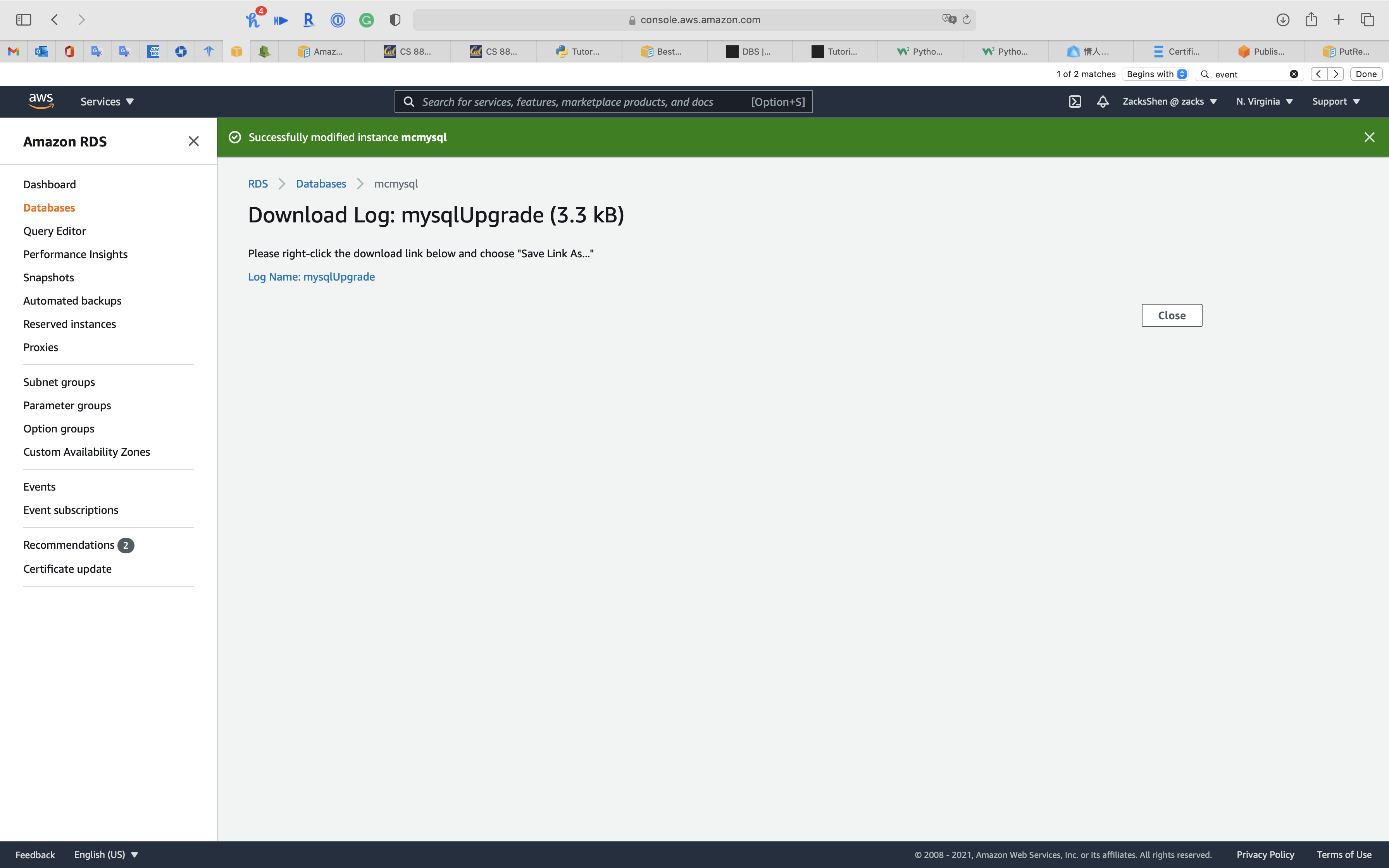

Download

Update Log Retention Period

Click on Configuration tab

You can see the log types you subscribed to

Click one of the log types

Click on Actions -> Edit retention setting

Select the retention period that satisfies your requirement.

Publishing MySQL logs to CloudWatch Logs

You can configure your Amazon RDS MySQL DB instance to publish log data to a log group in Amazon CloudWatch Logs. With CloudWatch Logs, you can perform real-time analysis of the log data, and use CloudWatch to create alarms and view metrics. You can use CloudWatch Logs to store your log records in highly durable storage.

Amazon RDS publishes each MySQL database log as a separate database stream in the log group. For example, if you configure the export function to include the slow query log, slow query data is stored in a slow query log stream in the /aws/rds/instance/my_instance/slowquery log group.
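The naming scheme in the example above can be captured in a small helper. This is only an illustration of the pattern `/aws/rds/instance/<instance>/<log type>`; the function name is made up for this sketch.

```python
def rds_log_group_name(instance_id, log_type):
    """Return the CloudWatch Logs log group used for one exported RDS log type."""
    return f"/aws/rds/instance/{instance_id}/{log_type}"


slow_query_group = rds_log_group_name("my_instance", "slowquery")
print(slow_query_group)
```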

Publishing database logs to Amazon CloudWatch Logs

In addition to viewing and downloading DB instance logs, you can publish logs to Amazon CloudWatch Logs. With CloudWatch Logs, you can perform real-time analysis of the log data, store the data in highly durable storage, and manage the data with the CloudWatch Logs Agent. AWS retains log data published to CloudWatch Logs for an indefinite time period unless you specify a retention period. For more information, see Change log data retention in CloudWatch Logs.

PutRetentionPolicy

Sets the retention of the specified log group. A retention policy allows you to configure the number of days for which to retain log events in the specified log group.
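A minimal sketch of the 90-day requirement mentioned earlier, expressed as arguments for `PutRetentionPolicy`. The log group names are hypothetical. CloudWatch Logs accepts only specific values for `retentionInDays` (90 is one of them), so check the API reference before using other numbers.

```python
def retention_requests(log_groups, days):
    """Build one PutRetentionPolicy request per log group."""
    if days <= 0:
        raise ValueError("retention must be a positive number of days")
    return [
        {"logGroupName": group, "retentionInDays": days}
        for group in log_groups
    ]


# Placeholder log groups for an instance named "my_instance".
requests = retention_requests(
    ["/aws/rds/instance/my_instance/error",
     "/aws/rds/instance/my_instance/slowquery"],
    days=90,
)

# With boto3 installed and credentials configured:
#   import boto3
#   logs = boto3.client("logs")
#   for request in requests:
#       logs.put_retention_policy(**request)
```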

PostgreSQL database log files

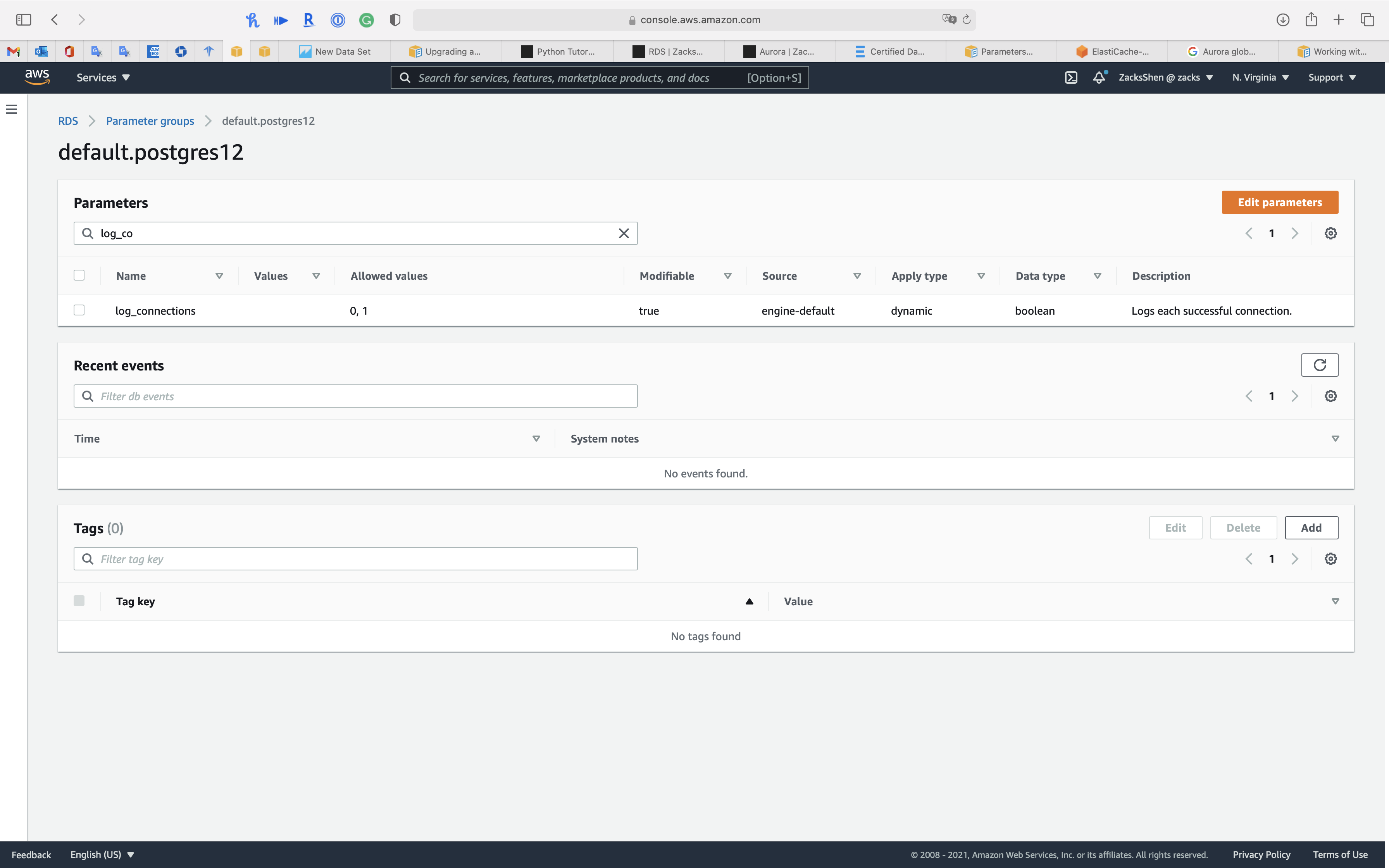

Parameter groups

Each Amazon RDS PostgreSQL instance is associated with a parameter group that contains the engine specific configurations. The engine configurations also include several parameters that control PostgreSQL logging behavior. AWS provides the parameter groups with default configuration settings to use for your instances. However, to change the default settings, you must create a clone of the default parameter group, modify it, and attach it to your instance.

To set logging parameters for a DB instance, set the parameters in a DB parameter group and associate that parameter group with the DB instance. For more information, see Working with DB parameter groups.

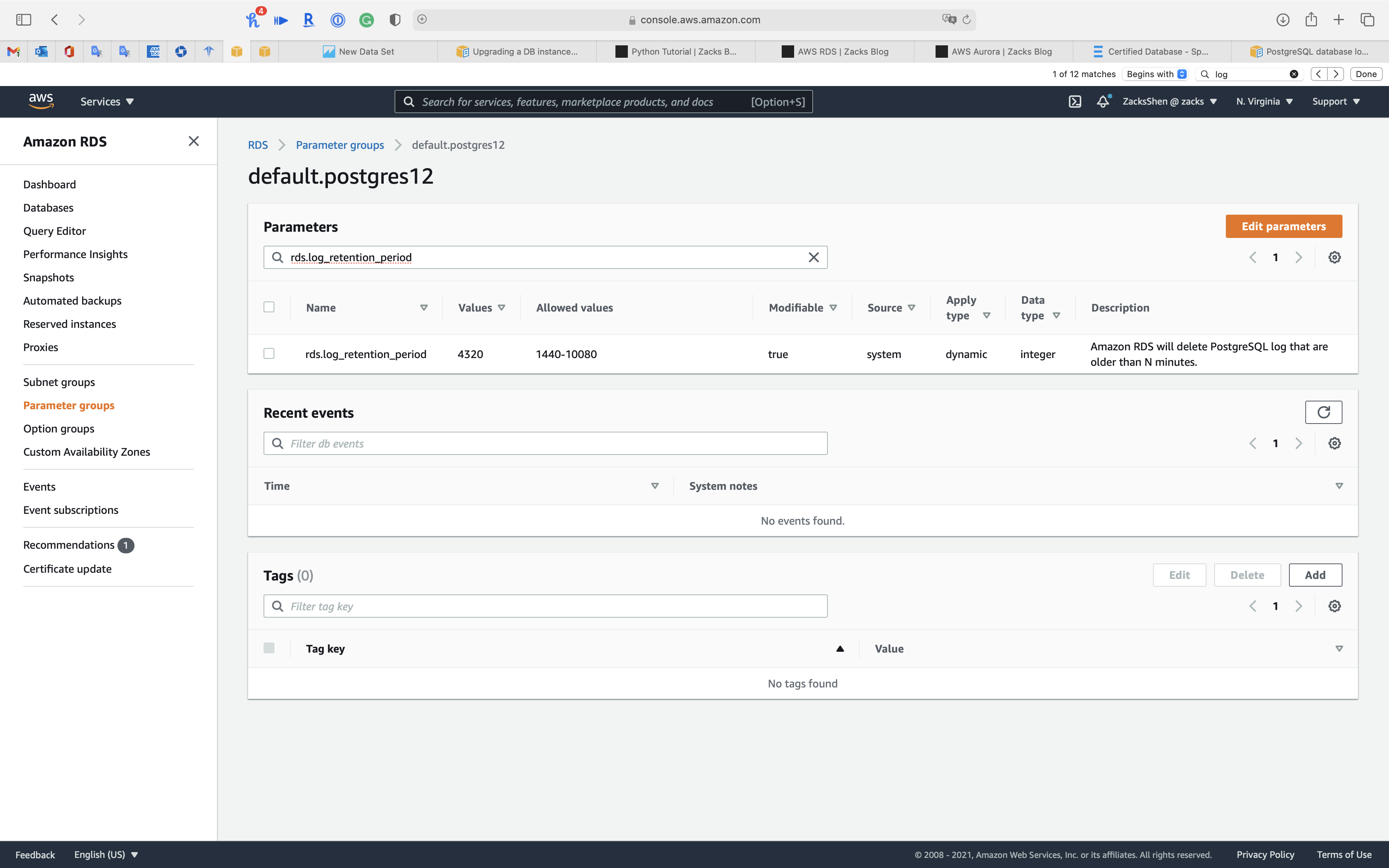

Setting the log retention period

To set the retention period for system logs, use the rds.log_retention_period parameter. You can find rds.log_retention_period in the DB parameter group associated with your DB instance. The unit for this parameter is minutes. For example, a setting of 1,440 retains logs for one day. The default value is 4,320 (three days). The maximum value is 10,080 (seven days). Your instance must have enough allocated storage to contain the retained log files.

To retain older logs, publish them to Amazon CloudWatch Logs. For more information, see Publishing PostgreSQL logs to CloudWatch Logs.
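Because `rds.log_retention_period` is expressed in minutes, a small conversion helper can avoid arithmetic slips. The sketch below uses only the limits stated above (maximum of 10,080 minutes); the function name is invented for illustration.

```python
MINUTES_PER_DAY = 24 * 60
MAX_RETENTION_MINUTES = 10_080  # seven days, the maximum stated above


def retention_minutes(days):
    """Convert a retention period in days to a value for rds.log_retention_period."""
    minutes = days * MINUTES_PER_DAY
    if minutes <= 0 or minutes > MAX_RETENTION_MINUTES:
        raise ValueError(f"{days} day(s) is outside the supported range")
    return minutes


print(retention_minutes(1))  # one day
print(retention_minutes(3))  # the default of three days
```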

Metrics

CloudWatch Metrics on SQL Server

Amazon CloudWatch Application Insights uses machine learning classification algorithms to analyze metrics and identify signs of problems with your applications. Windows Event Viewer and SQL Server Error logs are included in the analysis. To receive notifications, you can create an Amazon EventBridge (formerly CloudWatch Events) rule for the Application Insights Problem Detected event.

- Configure Amazon CloudWatch Application Insights for .NET and SQL Server to monitor and detect signs of potential problems.

- Configure CloudWatch Events to send notifications to an Amazon SNS topic.
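The notification rule could look roughly like the sketch below, which builds arguments for the EventBridge `PutRule` and `PutTargets` operations. The rule name and topic ARN are placeholders, and the `source` value in the event pattern is an assumption to verify against the Application Insights documentation.

```python
import json


def problem_detected_rule(rule_name, sns_topic_arn):
    """Build PutRule and PutTargets arguments for Application Insights problems."""
    pattern = {
        "source": ["aws.applicationinsights"],  # assumed event source
        "detail-type": ["Application Insights Problem Detected"],
    }
    put_rule = {
        "Name": rule_name,
        "EventPattern": json.dumps(pattern),
        "State": "ENABLED",
    }
    put_targets = {
        "Rule": rule_name,
        "Targets": [{"Id": "sns-notify", "Arn": sns_topic_arn}],
    }
    return put_rule, put_targets


# Hypothetical rule name and topic ARN.
put_rule, put_targets = problem_detected_rule(
    "sqlserver-problem-detected",
    "arn:aws:sns:us-east-1:123456789012:dba-alerts",
)

# With boto3 installed and credentials configured:
#   import boto3
#   events = boto3.client("events")
#   events.put_rule(**put_rule)
#   events.put_targets(**put_targets)
```

The SNS topic also needs an access policy that lets EventBridge publish to it, which is not shown here.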

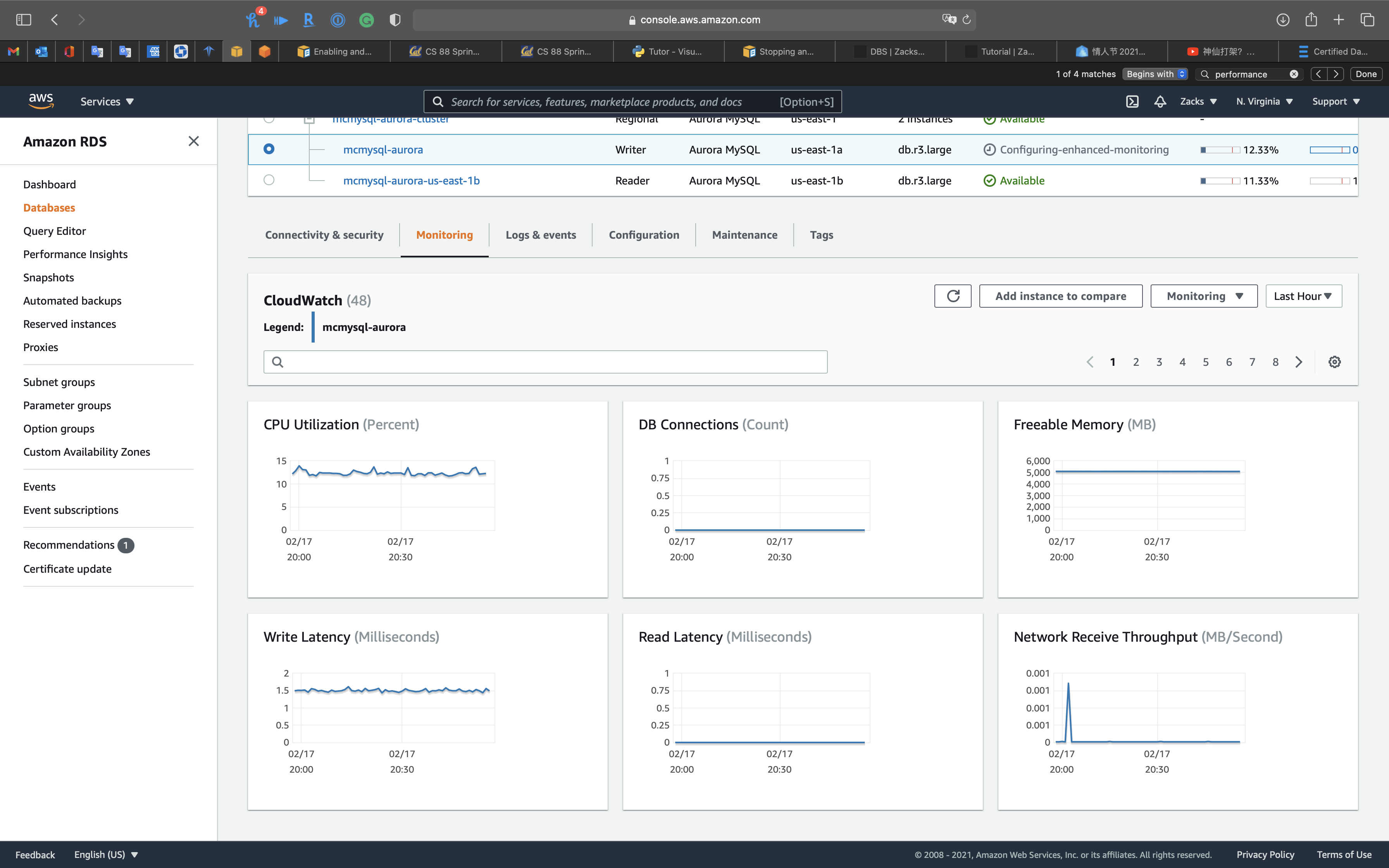

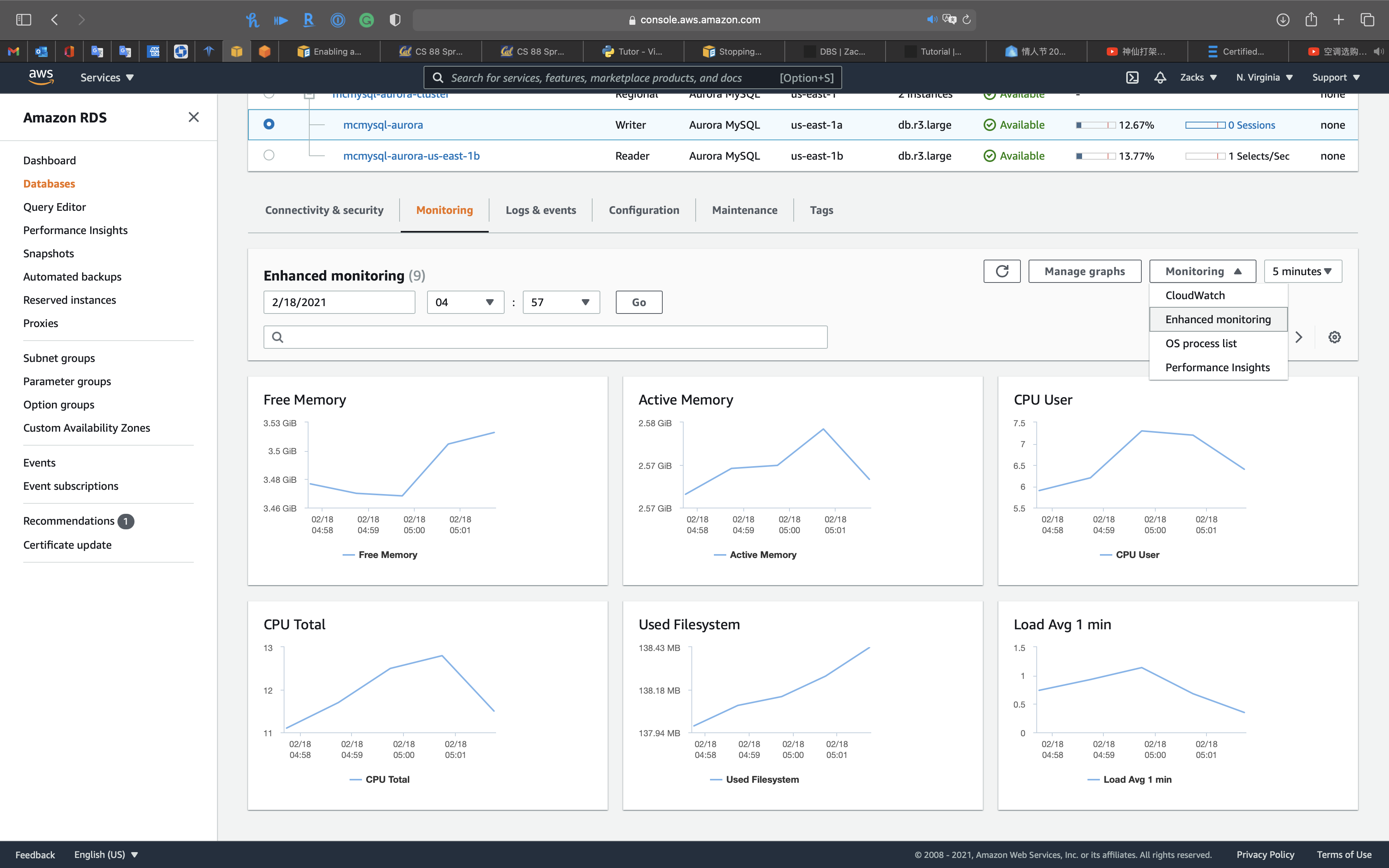

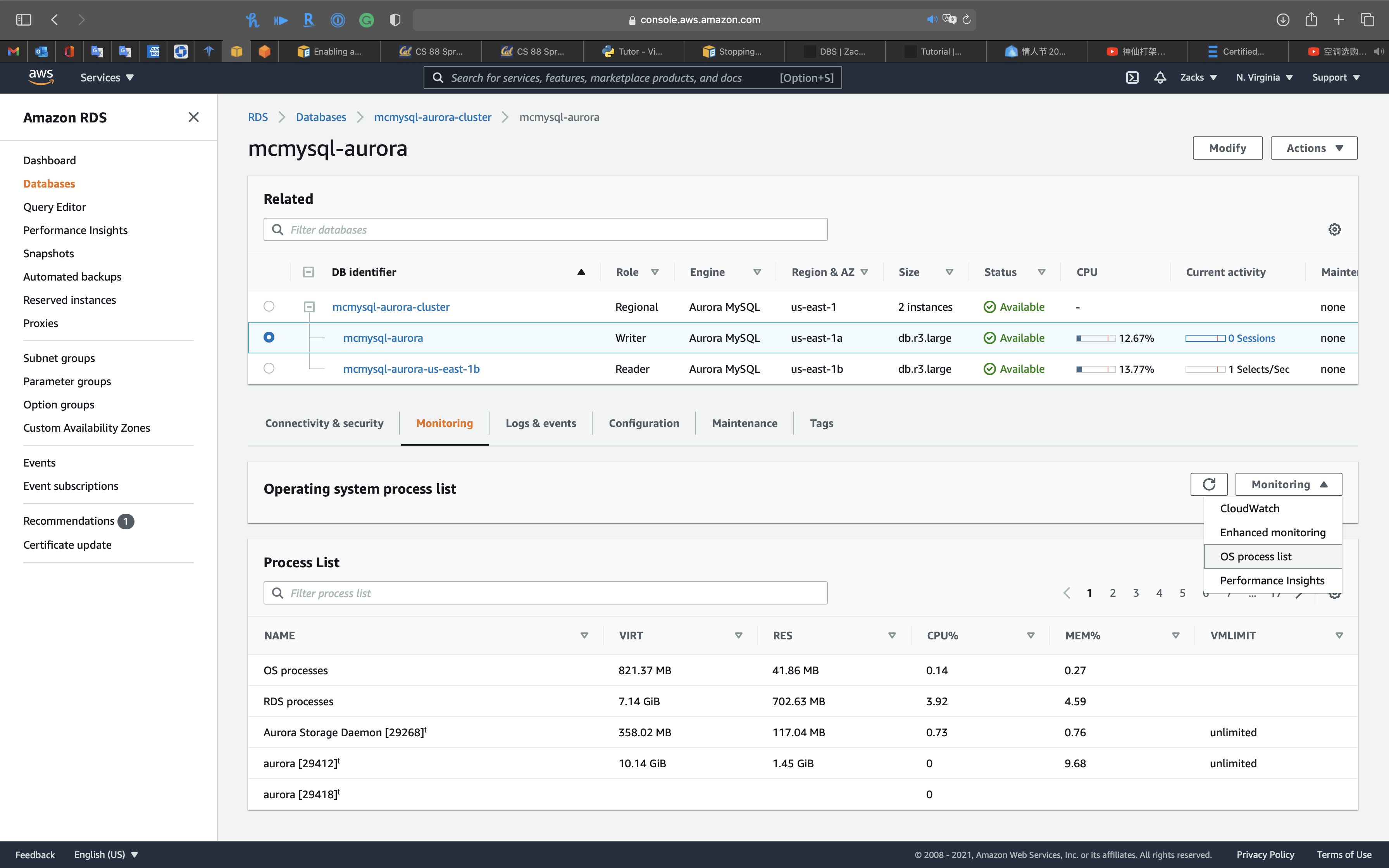

Enhanced monitoring & Performance insights

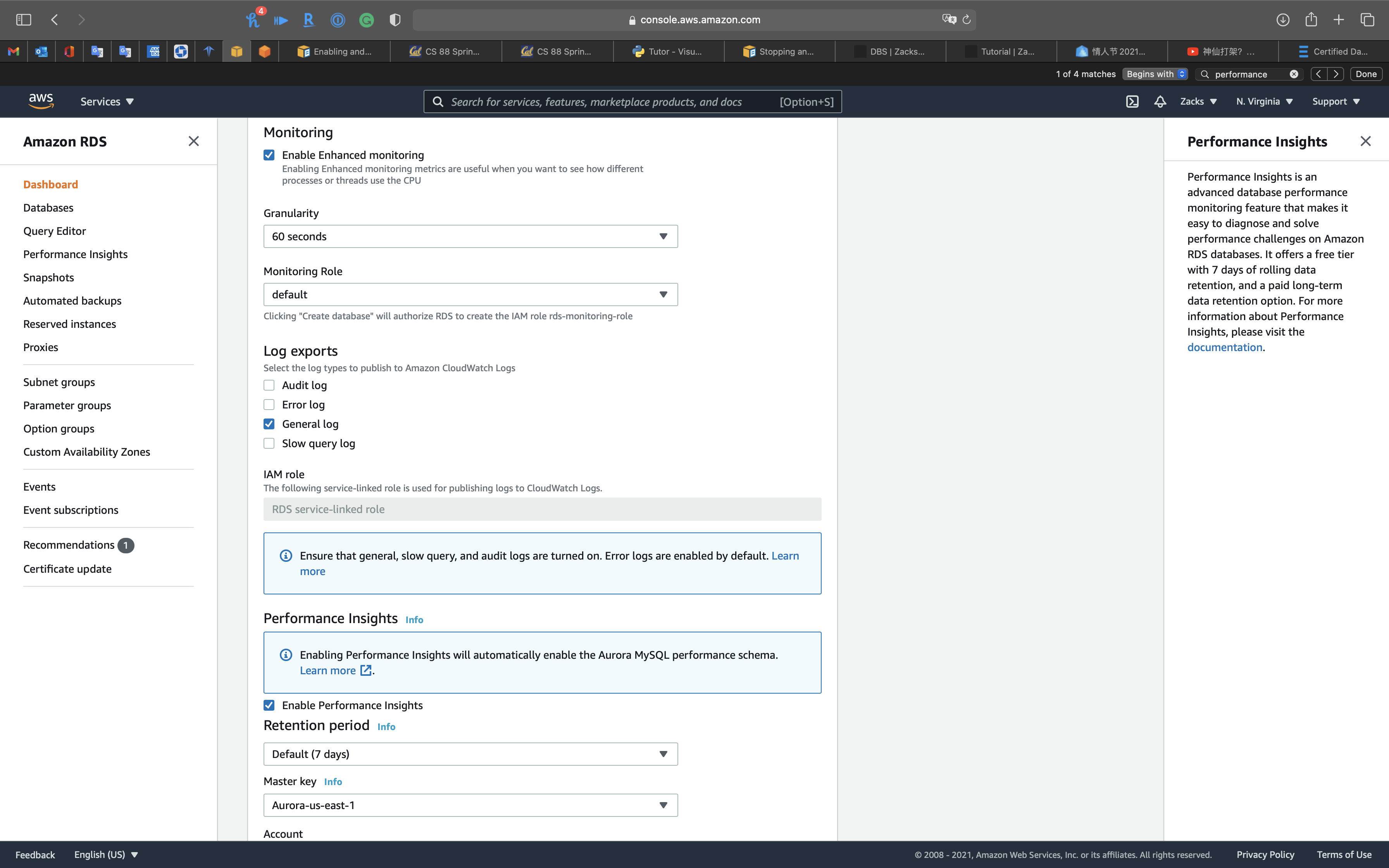

Enable Enhanced monitoring

Enhanced Monitoring metrics are useful when you want to see how different processes or threads use the CPU.

You can select Enable Enhanced monitoring when you create or modify a database.
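Outside the console, Enhanced Monitoring is controlled by the `MonitoringInterval` and `MonitoringRoleArn` settings of a DB instance. The sketch below builds arguments for `ModifyDBInstance`; the instance identifier and role ARN are hypothetical.

```python
# Granularity in seconds accepted for Enhanced Monitoring; 0 turns it off.
VALID_MONITORING_INTERVALS = {0, 1, 5, 10, 15, 30, 60}


def enhanced_monitoring_request(instance_id, interval_seconds, role_arn=None):
    """Build ModifyDBInstance arguments that set the Enhanced Monitoring interval."""
    if interval_seconds not in VALID_MONITORING_INTERVALS:
        raise ValueError(f"invalid monitoring interval: {interval_seconds}")
    request = {
        "DBInstanceIdentifier": instance_id,
        "MonitoringInterval": interval_seconds,
    }
    if interval_seconds != 0:
        if role_arn is None:
            raise ValueError("a monitoring role ARN is required when enabling")
        request["MonitoringRoleArn"] = role_arn
    return request


# Placeholder identifier and role ARN.
request = enhanced_monitoring_request(
    "my-instance", 60, "arn:aws:iam::123456789012:role/rds-monitoring-role"
)

# With boto3 installed and credentials configured:
#   import boto3
#   boto3.client("rds").modify_db_instance(**request)
```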

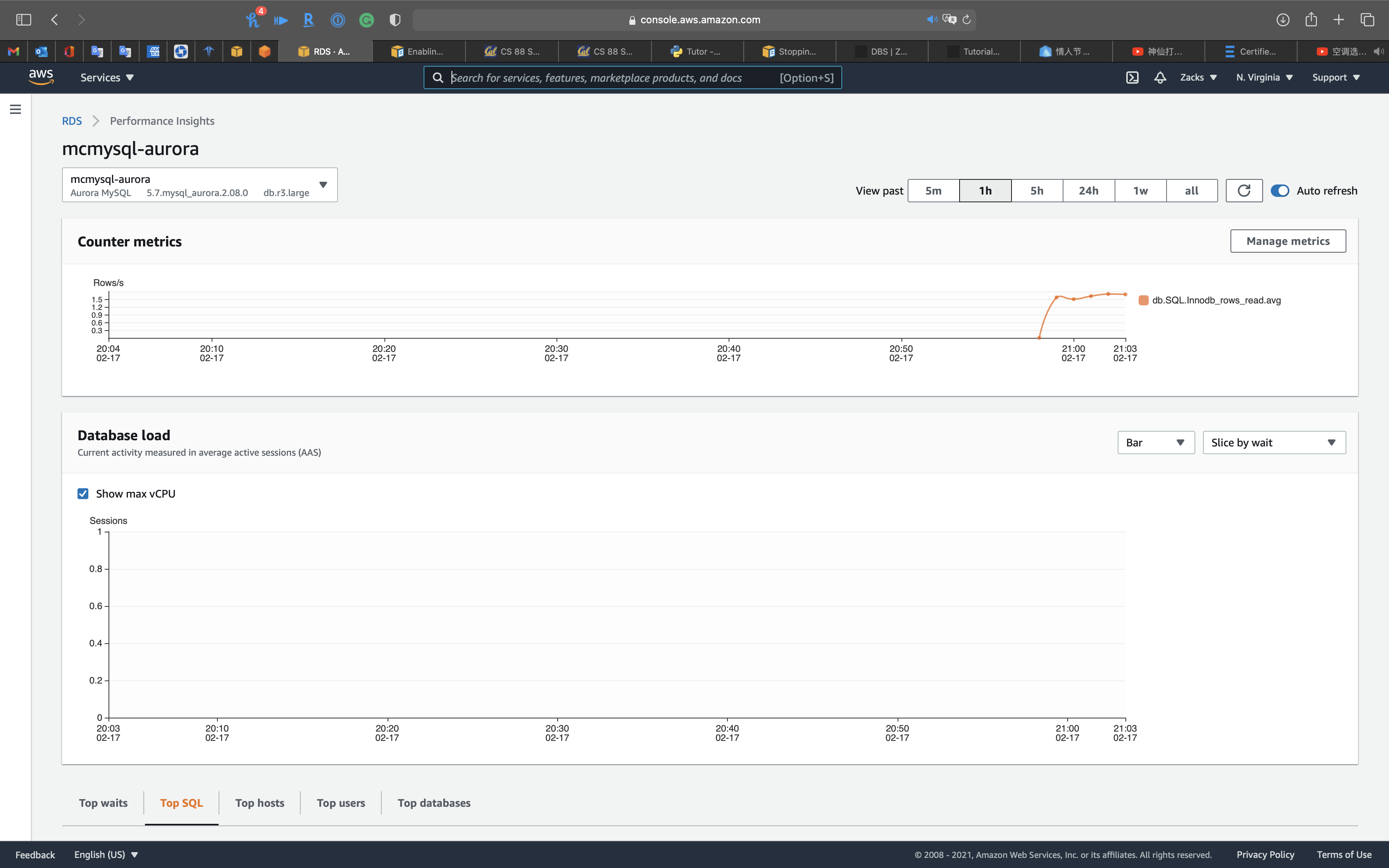

Performance Insights

Performance Insights is an advanced database performance monitoring feature that makes it easy to diagnose and solve performance challenges on Amazon RDS databases. It offers a free tier with 7 days of rolling data retention, and a paid long-term data retention option. For more information about Performance Insights, please visit the

CloudWatch

Enhanced monitoring

OS process list

Performance insights: you can review

- Top waits

- Top SQL

- Top hosts

- Top users

- Top databases
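Performance Insights can also be switched on through `ModifyDBInstance`. The following is a minimal sketch that requests the 7-day free-tier retention described above; the instance identifier is a placeholder.

```python
def performance_insights_request(instance_id, retention_days=7):
    """Build ModifyDBInstance arguments that enable Performance Insights."""
    return {
        "DBInstanceIdentifier": instance_id,
        "EnablePerformanceInsights": True,
        "PerformanceInsightsRetentionPeriod": retention_days,
    }


request = performance_insights_request("my-instance")

# With boto3 installed and credentials configured:
#   import boto3
#   boto3.client("rds").modify_db_instance(**request)
```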

Configuring an Amazon RDS DB instance

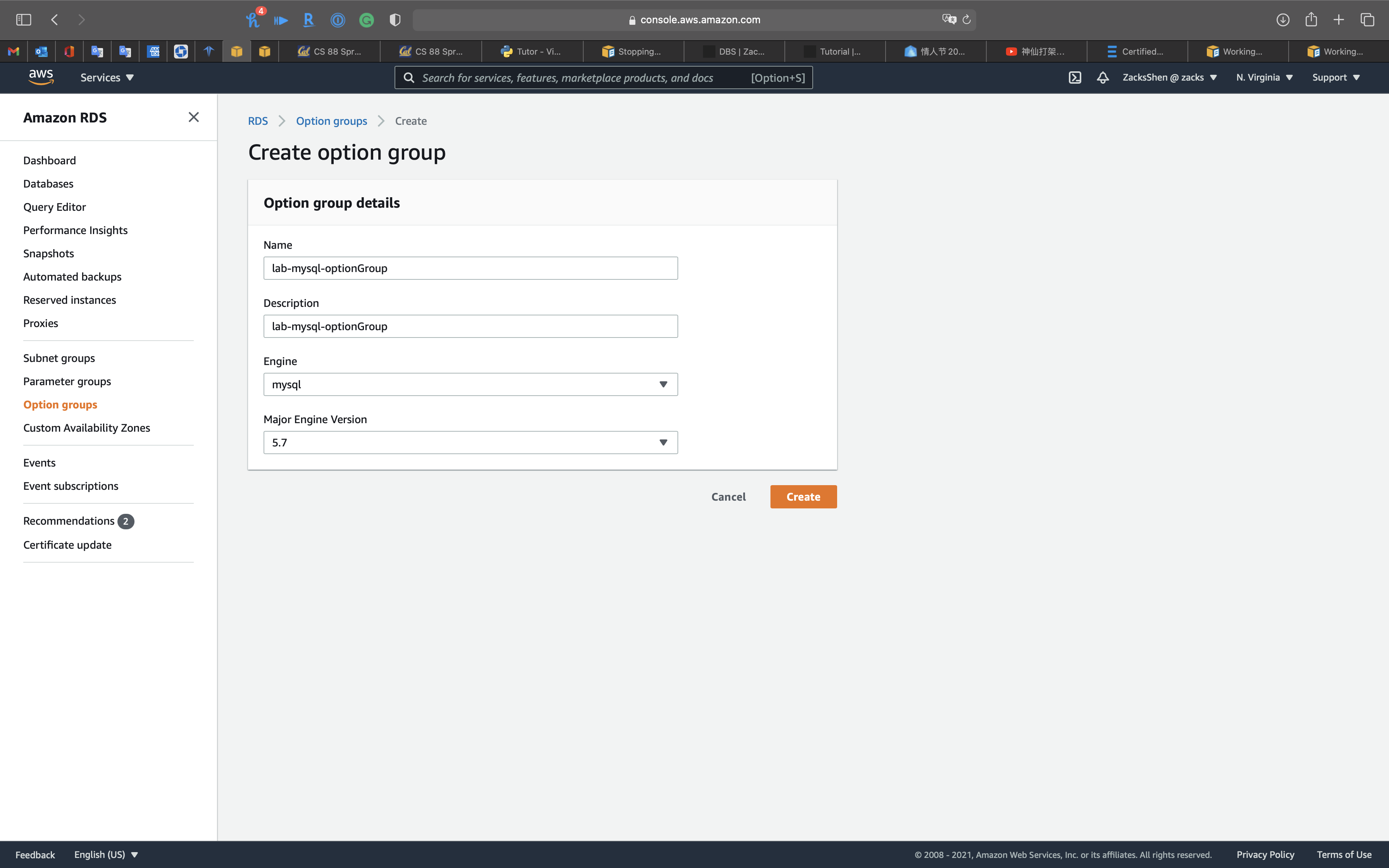

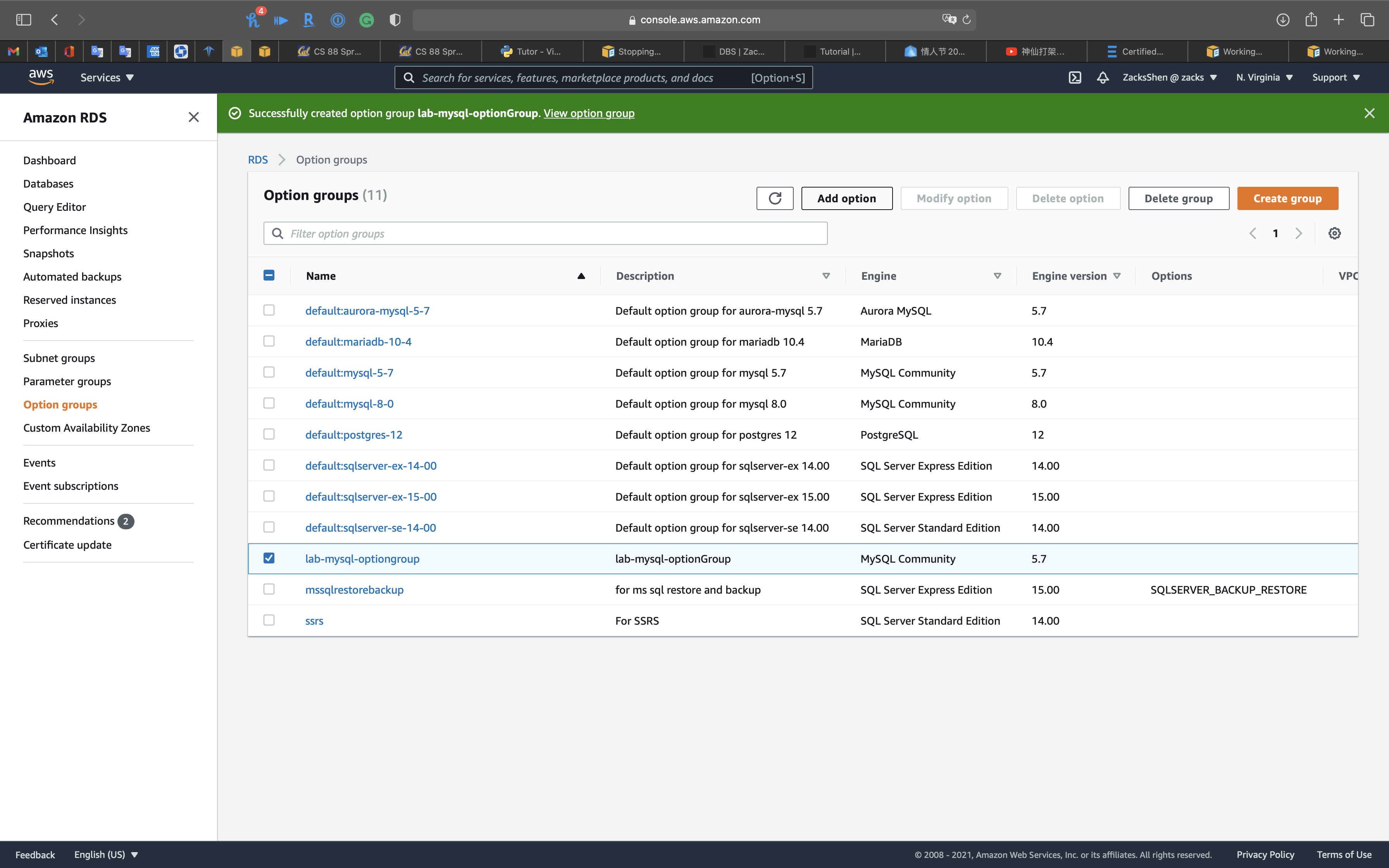

Working with option groups

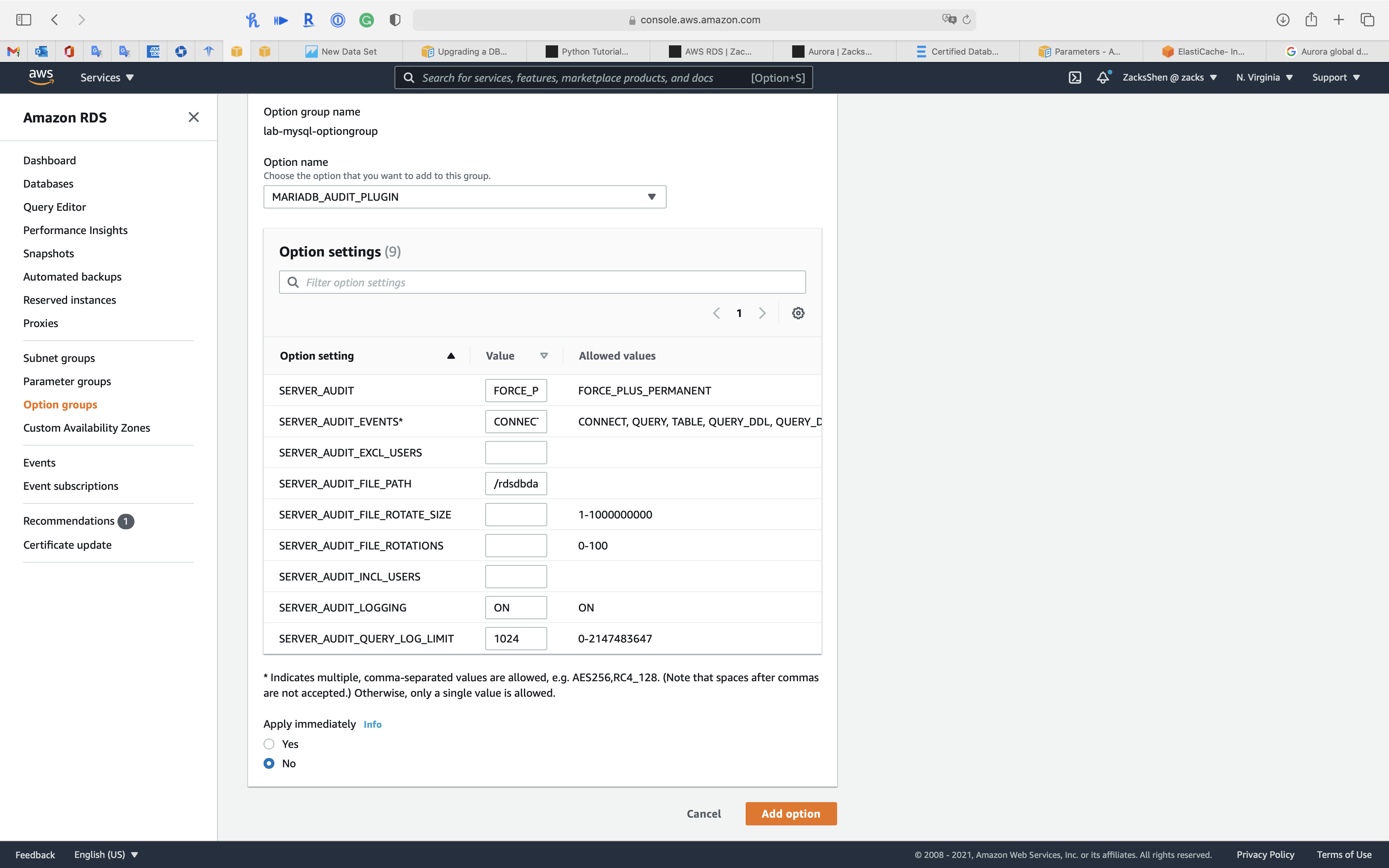

Some DB engines offer additional features that make it easier to manage data and databases, and to provide additional security for your database. Amazon RDS uses option groups to enable and configure these features. An option group can specify features, called options, that are available for a particular Amazon RDS DB instance. Options can have settings that specify how the option works. When you associate a DB instance with an option group, the specified options and option settings are enabled for that DB instance.

Option groups overview

Amazon RDS provides an empty default option group for each new DB instance. You cannot modify this default option group, but any new option group that you create derives its settings from the default option group. To apply an option to a DB instance, you must do the following:

- Create a new option group, or copy or modify an existing option group.

- Add one or more options to the option group.

- Associate the option group with the DB instance.
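The three steps above can be sketched as a sequence of RDS API calls. The group name, instance identifier, and the single option setting shown are illustrative assumptions, and the audit plugin is used only as an example option for MySQL 5.7.

```python
def option_group_plan(group_name, instance_id):
    """Return (operation, arguments) pairs for the three option group steps."""
    return [
        # Step 1: create a new option group for the engine version.
        ("create_option_group", {
            "OptionGroupName": group_name,
            "EngineName": "mysql",
            "MajorEngineVersion": "5.7",
            "OptionGroupDescription": "Audit logging for MySQL 5.7",
        }),
        # Step 2: add an option, with one example setting, to the group.
        ("modify_option_group", {
            "OptionGroupName": group_name,
            "OptionsToInclude": [{
                "OptionName": "MARIADB_AUDIT_PLUGIN",
                "OptionSettings": [
                    {"Name": "SERVER_AUDIT_EVENTS", "Value": "CONNECT,QUERY"},
                ],
            }],
            "ApplyImmediately": True,
        }),
        # Step 3: associate the option group with the DB instance.
        ("modify_db_instance", {
            "DBInstanceIdentifier": instance_id,
            "OptionGroupName": group_name,
            "ApplyImmediately": True,
        }),
    ]


# Placeholder names for illustration.
plan = option_group_plan("my-mysql57-options", "my-instance")

# With boto3 installed and credentials configured:
#   import boto3
#   rds = boto3.client("rds")
#   for operation, arguments in plan:
#       getattr(rds, operation)(**arguments)
```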

Click Add option to add an option to your option group

For example, the MARIADB_AUDIT_PLUGIN option in a MySQL 5.7 option group has 9 option settings

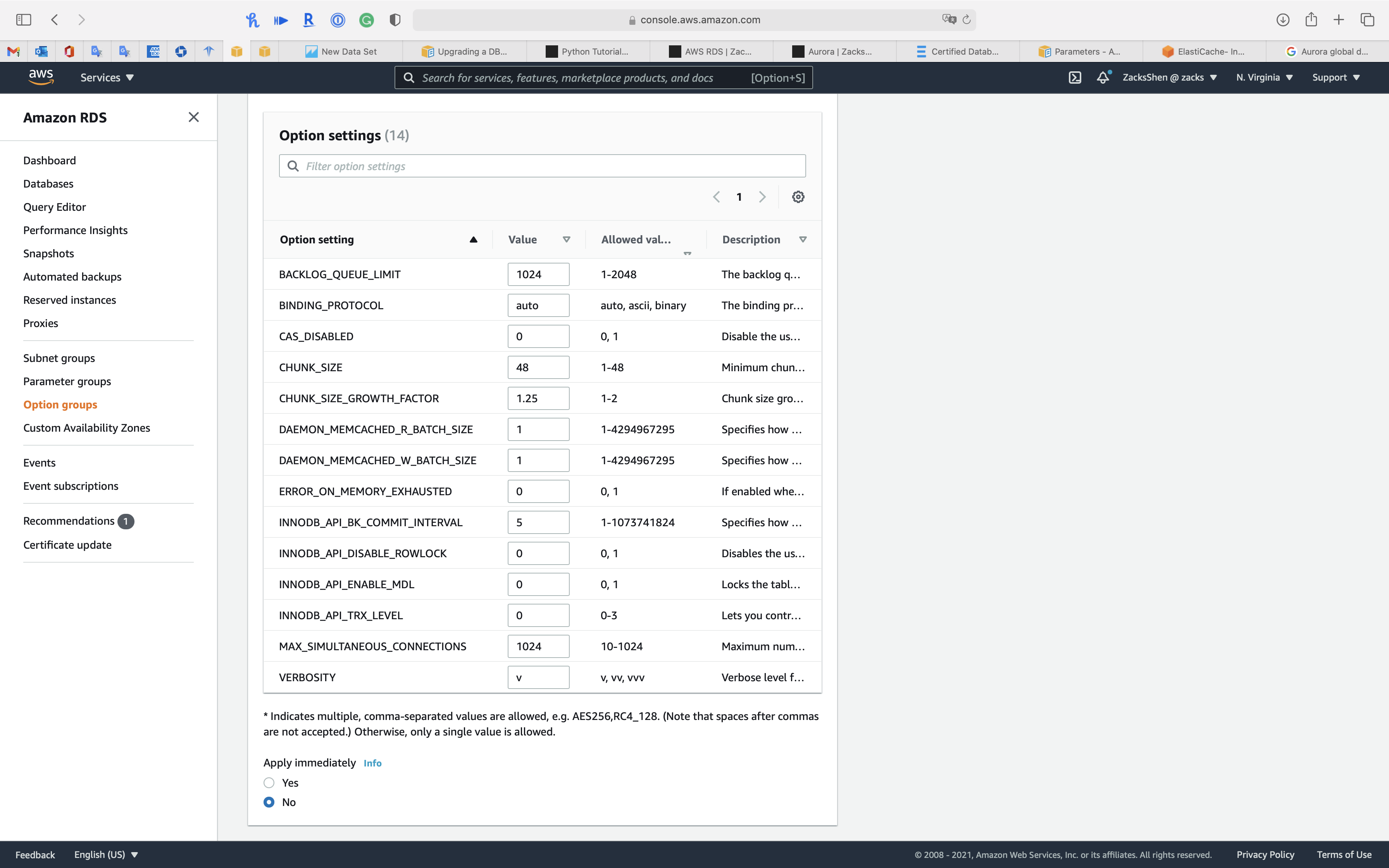

For example, the MEMCACHED option in a MySQL 5.7 option group has 14 option settings

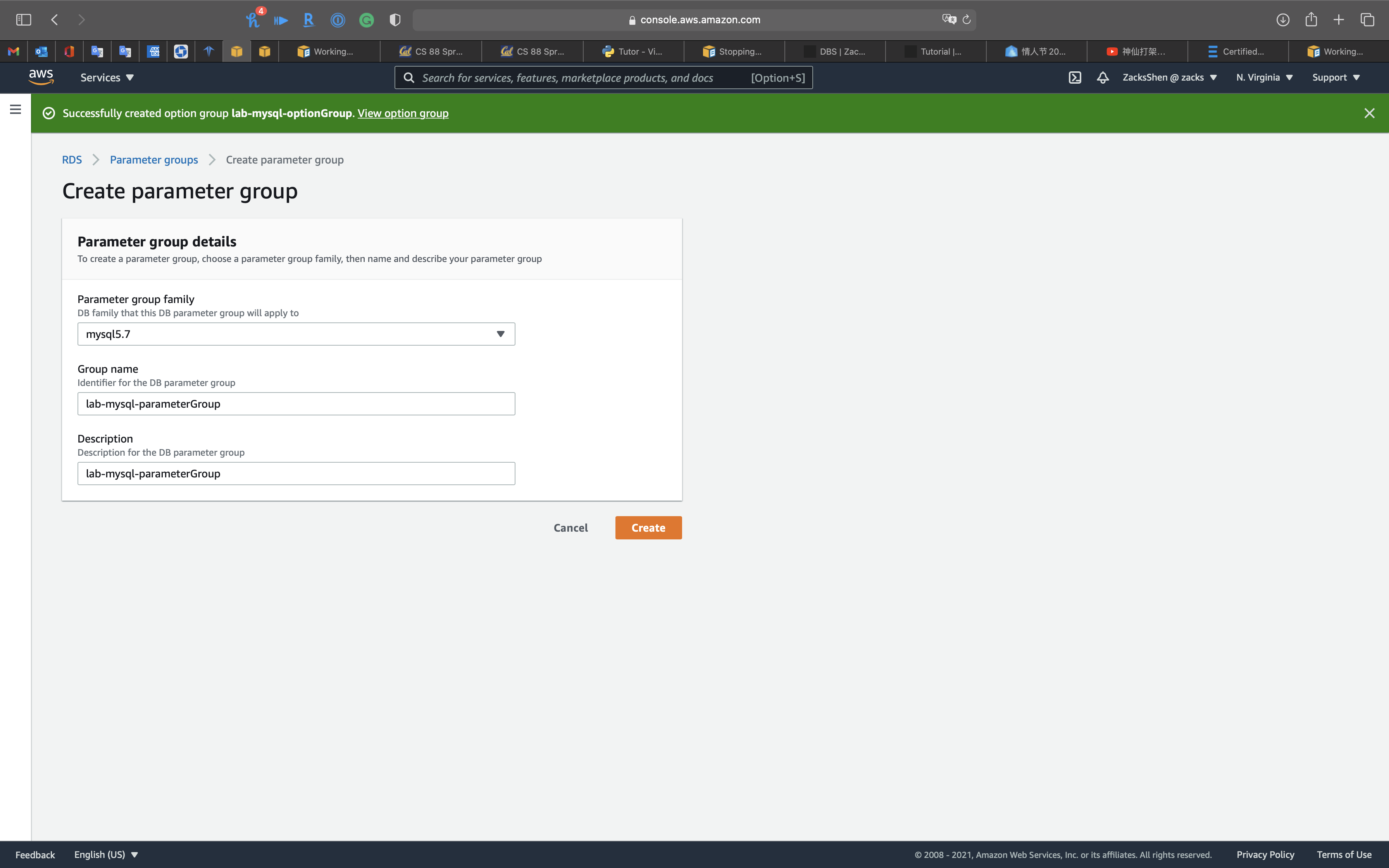

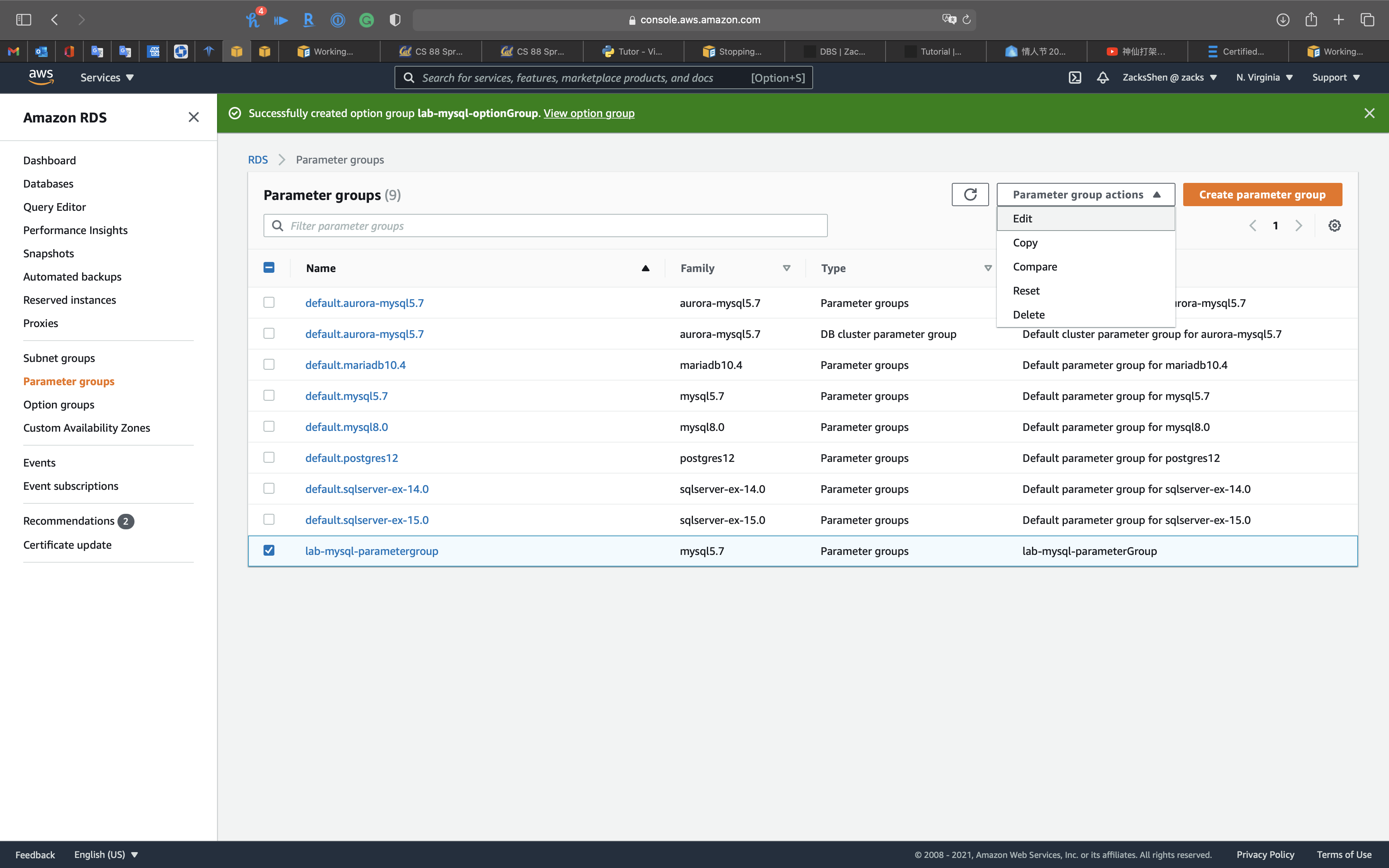

Working with DB parameter groups

You manage your DB engine configuration by associating your DB instances with parameter groups. Amazon RDS defines parameter groups with default settings that apply to newly created DB instances.

A DB parameter group acts as a container for engine configuration values that are applied to one or more DB instances.

If you create a DB instance without specifying a DB parameter group, the DB instance uses a default DB parameter group. Each default DB parameter group contains database engine defaults and Amazon RDS system defaults based on the engine, compute class, and allocated storage of the instance. You can’t modify the parameter settings of a default parameter group. Instead, you create your own parameter group where you choose your own parameter settings. Not all DB engine parameters can be changed in a parameter group that you create.

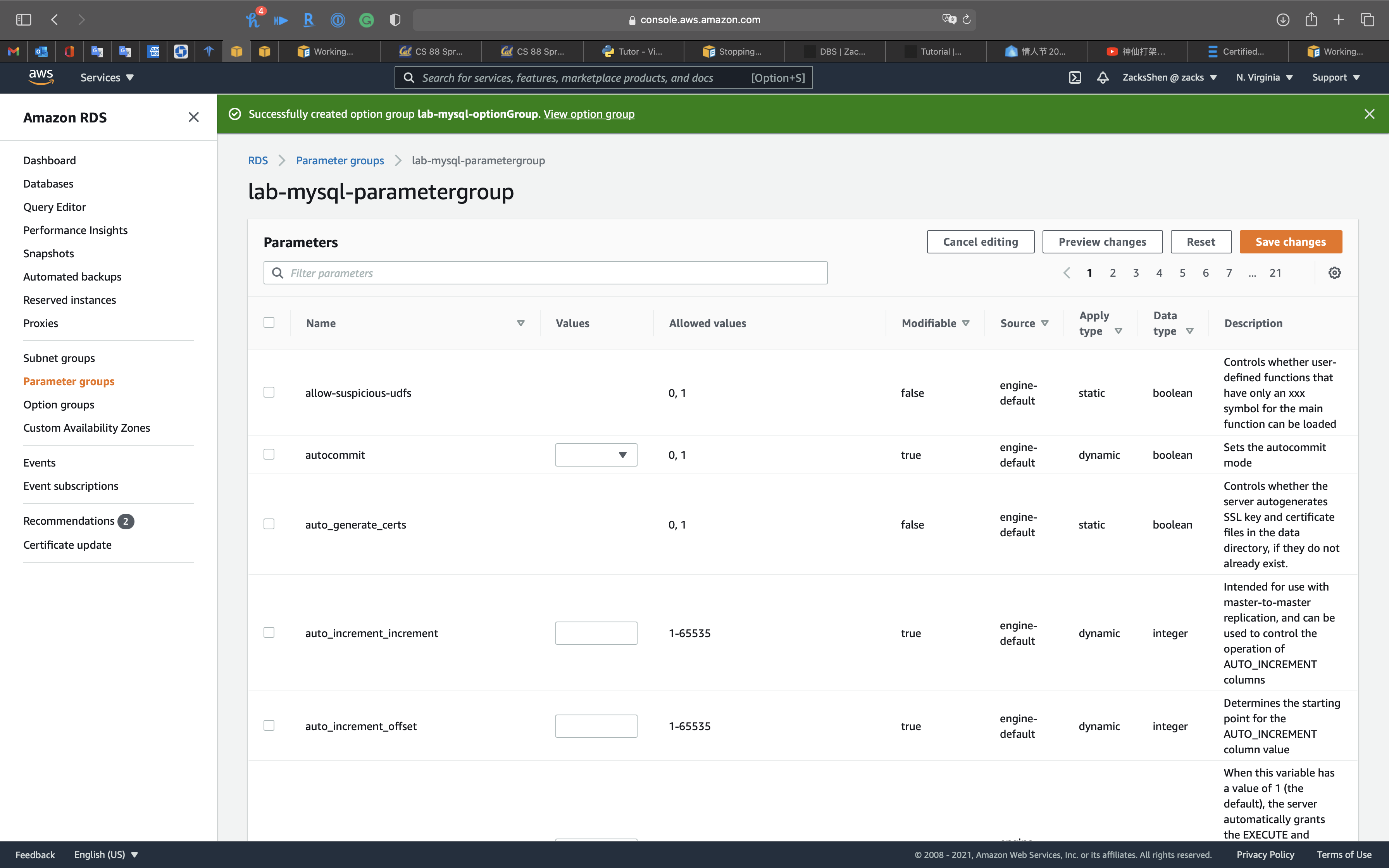

Click on Edit to edit your parameter group

Here are some important points about working with parameters in a DB parameter group:

- When you change a dynamic parameter and save the DB parameter group, the change is applied immediately regardless of the Apply Immediately setting. When you change a static parameter and save the DB parameter group, the parameter change takes effect after you manually reboot the DB instance. You can reboot a DB instance using the RDS console, by calling the reboot-db-instance CLI command, or by calling the RebootDBInstance API operation. The requirement to reboot the associated DB instance after a static parameter change helps mitigate the risk of a parameter misconfiguration affecting an API call, such as calling ModifyDBInstance to change DB instance class or scale storage.

If a DB instance isn’t using the latest changes to its associated DB parameter group, the AWS Management Console shows the DB parameter group with a status of pending-reboot. The pending-reboot parameter group status doesn’t result in an automatic reboot during the next maintenance window. To apply the latest parameter changes to that DB instance, manually reboot the DB instance.

- When you change the DB parameter group associated with a DB instance, you must manually reboot the instance before the DB instance can use the new DB parameter group. For more information about changing the DB parameter group, see Modifying an Amazon RDS DB instance.

- You can specify the value for a DB parameter as an integer or as an integer expression built from formulas, variables, functions, and operators. Functions can include a mathematical log expression. For more information, see DB parameter values.

- Set any parameters that relate to the character set or collation of your database in your parameter group before creating the DB instance and before you create a database in your DB instance. This ensures that the default database and new databases in your DB instance use the character set and collation values that you specify. If you change character set or collation parameters for your DB instance, the parameter changes are not applied to existing databases.

You can change character set or collation values for an existing database using the ALTER DATABASE command, for example:

    ALTER DATABASE database_name CHARACTER SET character_set_name COLLATE collation;

- Improperly setting parameters in a DB parameter group can have unintended adverse effects, including degraded performance and system instability. Always exercise caution when modifying database parameters and back up your data before modifying a DB parameter group. Try out parameter group setting changes on a test DB instance before applying those parameter group changes to a production DB instance.

- To determine the supported parameters for your DB engine, you can view the parameters in the DB parameter group used by the DB instance. For more information, see Viewing parameter values for a DB parameter group.
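The dynamic versus static distinction described above maps to the `ApplyMethod` field of each entry passed to `ModifyDBParameterGroup`. The sketch below is illustrative: the parameter group name is hypothetical, and which parameters are dynamic or static is an assumption here, so check the apply type shown for each parameter of your engine.

```python
def parameter_change(name, value, is_dynamic):
    """Build one Parameters entry for ModifyDBParameterGroup."""
    return {
        "ParameterName": name,
        "ParameterValue": str(value),
        # Dynamic parameters apply at once; static ones wait for a reboot.
        "ApplyMethod": "immediate" if is_dynamic else "pending-reboot",
    }


def needs_reboot(changes):
    """Return True if any change only takes effect after a manual reboot."""
    return any(c["ApplyMethod"] == "pending-reboot" for c in changes)


# Assumed apply types, for illustration only.
changes = [
    parameter_change("slow_query_log", 1, is_dynamic=True),
    parameter_change("performance_schema", 1, is_dynamic=False),
]
request = {"DBParameterGroupName": "my-mysql57-params", "Parameters": changes}

# With boto3 installed and credentials configured:
#   import boto3
#   rds = boto3.client("rds")
#   rds.modify_db_parameter_group(**request)
#   if needs_reboot(changes):
#       rds.reboot_db_instance(DBInstanceIdentifier="my-instance")
```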

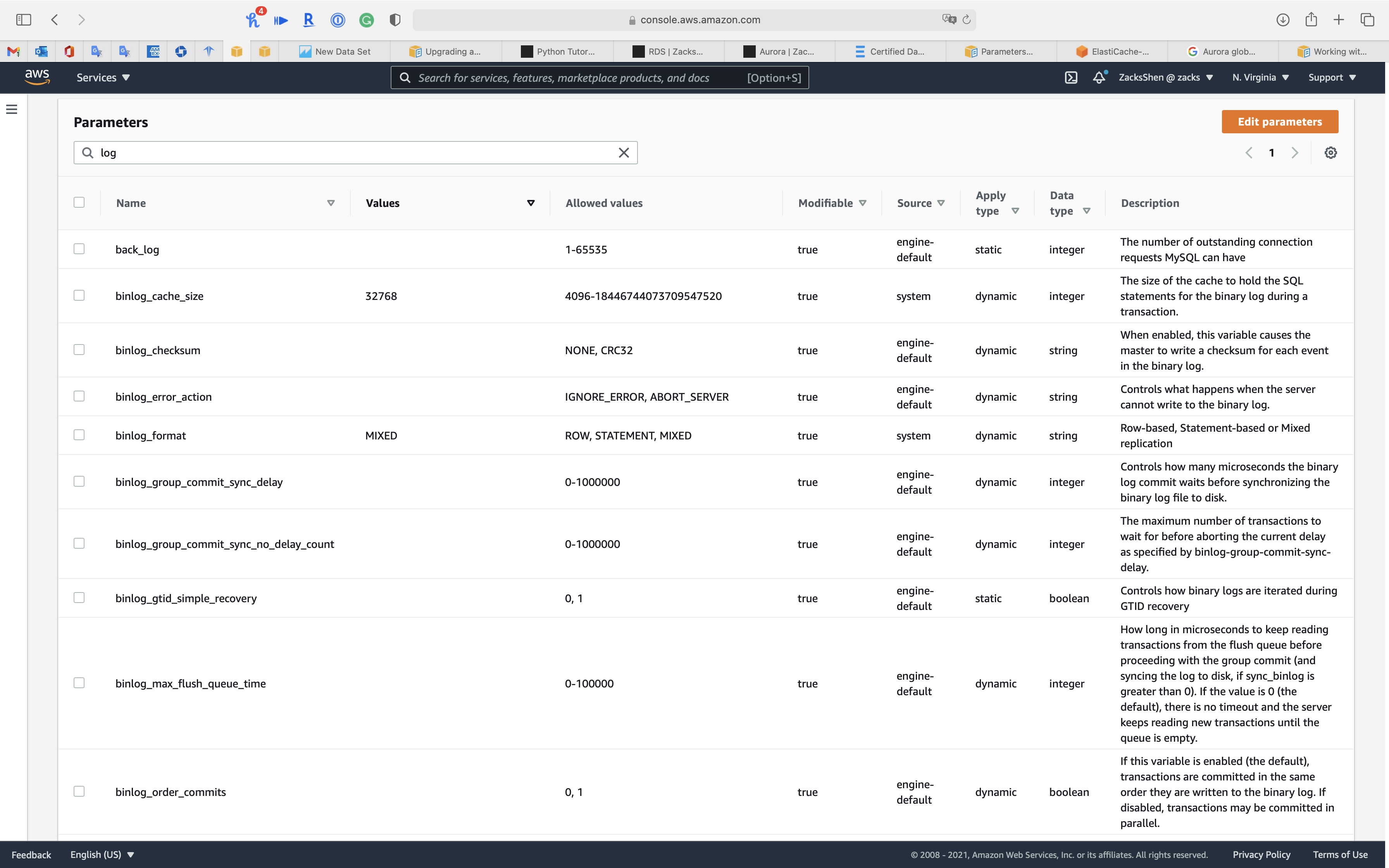

Parameter groups overview

There are a huge number of parameters for MySQL 5.7.

For example, you can filter the parameter list by a keyword such as log.

SQL Server

Active Directory

Using Windows Authentication with an Amazon RDS for SQL Server DB instance

To set up Windows authentication for a SQL Server DB instance, do the following steps, explained in greater detail in Setting up Windows Authentication for SQL Server DB instances:

- Use AWS Managed Microsoft AD, either from the AWS Management Console or AWS Directory Service API, to create an AWS Managed Microsoft AD directory.

- If you use the AWS CLI or Amazon RDS API to create your SQL Server DB instance, create an AWS Identity and Access Management (IAM) role. This role uses the managed IAM policy AmazonRDSDirectoryServiceAccess and allows Amazon RDS to make calls to your directory. If you use the console to create your SQL Server DB instance, AWS creates the IAM role for you.

For the role to allow access, the AWS Security Token Service (AWS STS) endpoint must be activated in the AWS Region for your AWS account. AWS STS endpoints are active by default in all AWS Regions, and you can use them without any further actions. For more information, see Managing AWS STS in an AWS Region in the IAM User Guide.

- Create and configure users and groups in the AWS Managed Microsoft AD directory using the Microsoft Active Directory tools. For more information about creating users and groups in your Active Directory, see Manage users and groups in AWS Managed Microsoft AD in the AWS Directory Service Administration Guide.

- If you plan to locate the directory and the DB instance in different VPCs, enable cross-VPC traffic.

- Use Amazon RDS to create a new SQL Server DB instance either from the console, AWS CLI, or Amazon RDS API. In the create request, you provide the domain identifier (“d-*” identifier) that was generated when you created your directory and the name of the role you created. You can also modify an existing SQL Server DB instance to use Windows Authentication by setting the domain and IAM role parameters for the DB instance.

- Use the Amazon RDS master user credentials to connect to the SQL Server DB instance as you do any other DB instance. Because the DB instance is joined to the AWS Managed Microsoft AD domain, you can provision SQL Server logins and users from the Active Directory users and groups in their domain. (These are known as SQL Server “Windows” logins.) Database permissions are managed through standard SQL Server permissions granted and revoked to these Windows logins.
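For an existing instance, the domain and IAM role settings mentioned above correspond to the `Domain` and `DomainIAMRoleName` arguments of `ModifyDBInstance`. The sketch below only builds those arguments; the instance identifier, directory identifier, and role name are hypothetical.

```python
def windows_auth_request(instance_id, directory_id, iam_role_name):
    """Build ModifyDBInstance arguments that join an instance to a directory."""
    if not directory_id.startswith("d-"):
        raise ValueError("expected a directory identifier of the form d-*")
    return {
        "DBInstanceIdentifier": instance_id,
        "Domain": directory_id,
        "DomainIAMRoleName": iam_role_name,
        "ApplyImmediately": True,
    }


# Placeholder identifiers for illustration.
request = windows_auth_request(
    "my-sqlserver-instance", "d-1234567890", "rds-directory-service-role"
)

# With boto3 installed and credentials configured:
#   import boto3
#   boto3.client("rds").modify_db_instance(**request)
```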