Data8

Data Science

DATA 8

What is Data Science?

The Best Python Libraries for Data Science and Machine Learning

Test statistics explained

What is Data Science?

What is Data Science?

Data Science is about drawing useful conclusions from large and diverse data sets through exploration, prediction, and inference.

- Exploration involves identifying patterns in information.

- Primary tools for exploration are visualizations and descriptive statistics.

- Prediction involves using information we know to make informed guesses about values we wish we knew.

- For prediction are machine learning and optimization

- Inference involves quantifying our degree of certainty: will the patterns that we found in our data also appear in new observations? How accurate are our predictions?

- For inference are statistical tests and models.

Components

- Statistics is a central component of data science because statistics studies how to make robust conclusions based on incomplete information.

- Computing is a central component because programming allows us to apply analysis techniques to the large and diverse data sets that arise in real-world applications: not just numbers, but text, images, videos, and sensor readings.

- Data science is all of these things, but it is more than the sum of its parts because of the applications.

Through understanding a particular domain, data scientists learn to ask appropriate questions about their data and correctly interpret the answers provided by our inferential and computational tools.

Introduction

Data are descriptions of the world around us, collected through observation and stored on computers. Computers enable us to infer properties of the world from these descriptions. Data science is the discipline of drawing conclusions from data using computation. There are three core aspects of effective data analysis: exploration, prediction, and inference. This text develops a consistent approach to all three, introducing statistical ideas and fundamental ideas in computer science concurrently. We focus on a minimal set of core techniques that can be applied to a vast range of real-world applications. A foundation in data science requires not only understanding statistical and computational techniques, but also recognizing how they apply to real scenarios.

For whatever aspect of the world we wish to study—whether it’s the Earth’s weather, the world’s markets, political polls, or the human mind—data we collect typically offer an incomplete description of the subject at hand. A central challenge of data science is to make reliable conclusions using this partial information.

In this endeavor, we will combine two essential tools: computation and randomization. For example, we may want to understand climate change trends using temperature observations. Computers will allow us to use all available information to draw conclusions. Rather than focusing only on the average temperature of a region, we will consider the whole range of temperatures together to construct a more nuanced analysis. Randomness will allow us to consider the many different ways in which incomplete information might be completed. Rather than assuming that temperatures vary in a particular way, we will learn to use randomness as a way to imagine many possible scenarios that are all consistent with the data we observe.

Applying this approach requires learning to program a computer, and so this text interleaves a complete introduction to programming that assumes no prior knowledge. Readers with programming experience will find that we cover several topics in computation that do not appear in a typical introductory computer science curriculum. Data science also requires careful reasoning about numerical quantities, but this text does not assume any background in mathematics or statistics beyond basic algebra. You will find very few equations in this text. Instead, techniques are described to readers in the same language in which they are described to the computers that execute them—a programming language.

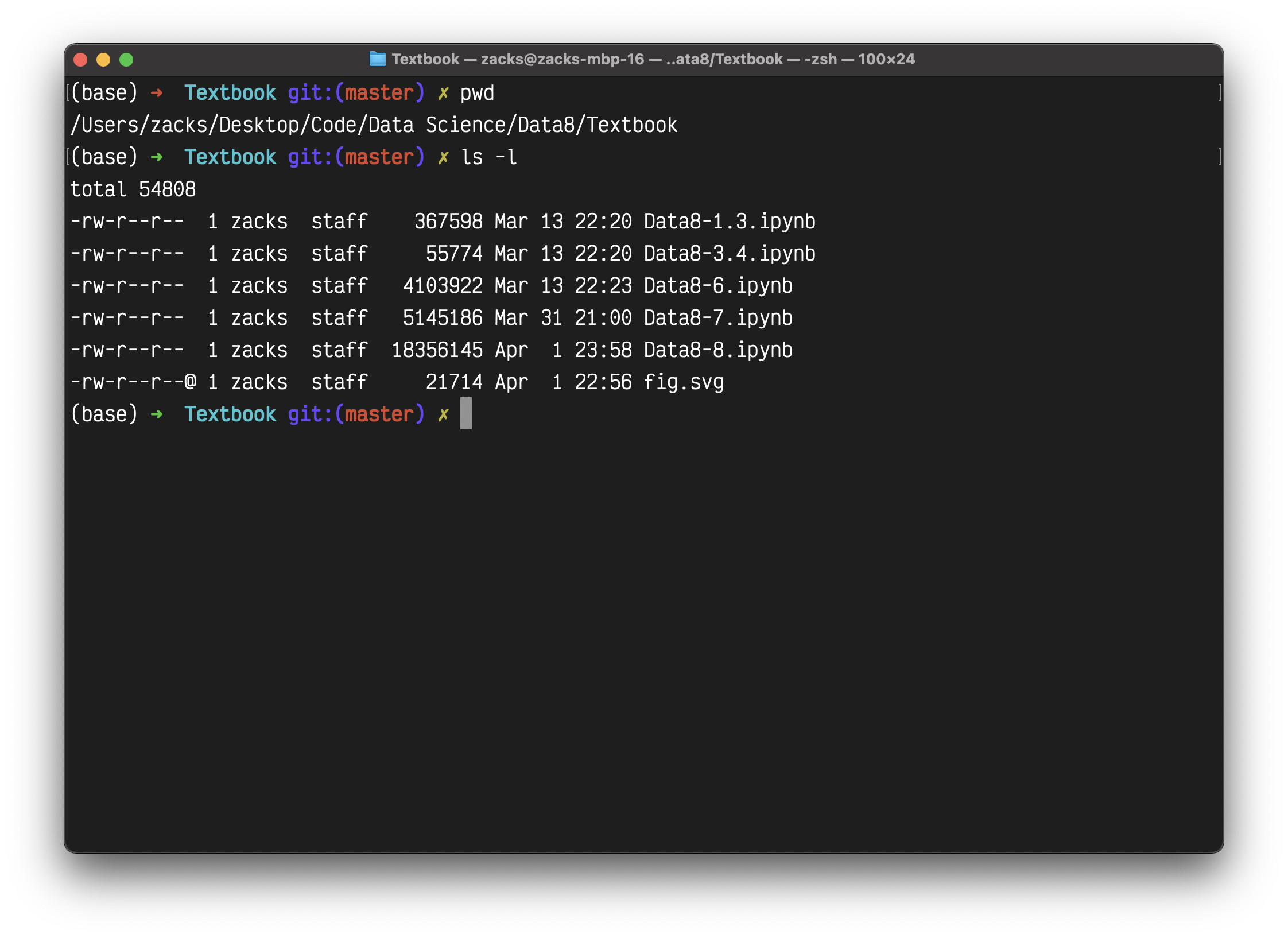

Computational Tools

This text uses the Python 3 programming language, along with a standard set of numerical and data visualization tools that are used widely in commercial applications, scientific experiments, and open-source projects. Python has recruited enthusiasts from many professions that use data to draw conclusions. By learning the Python language, you will join a million-person-strong community of software developers and data scientists.

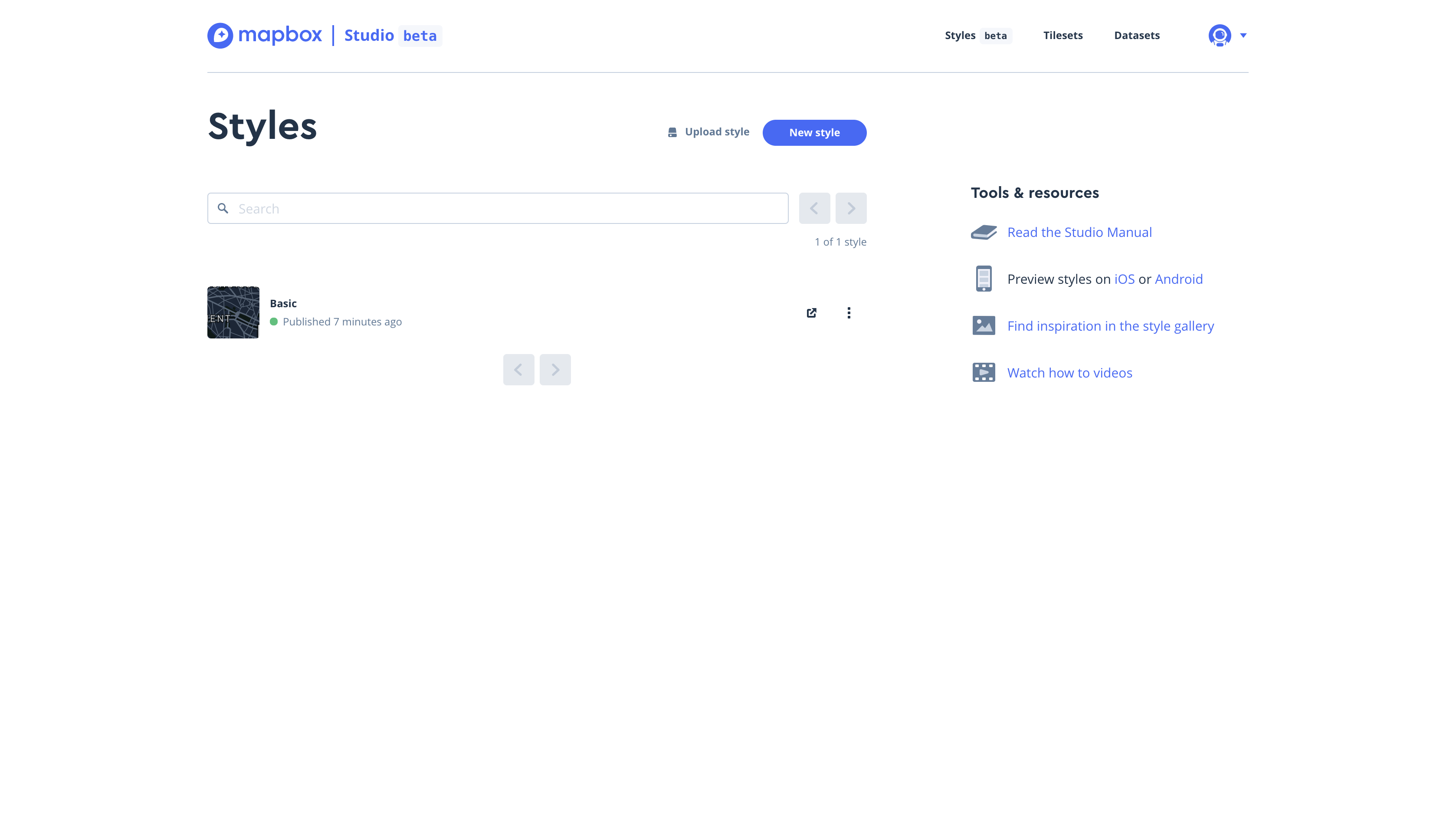

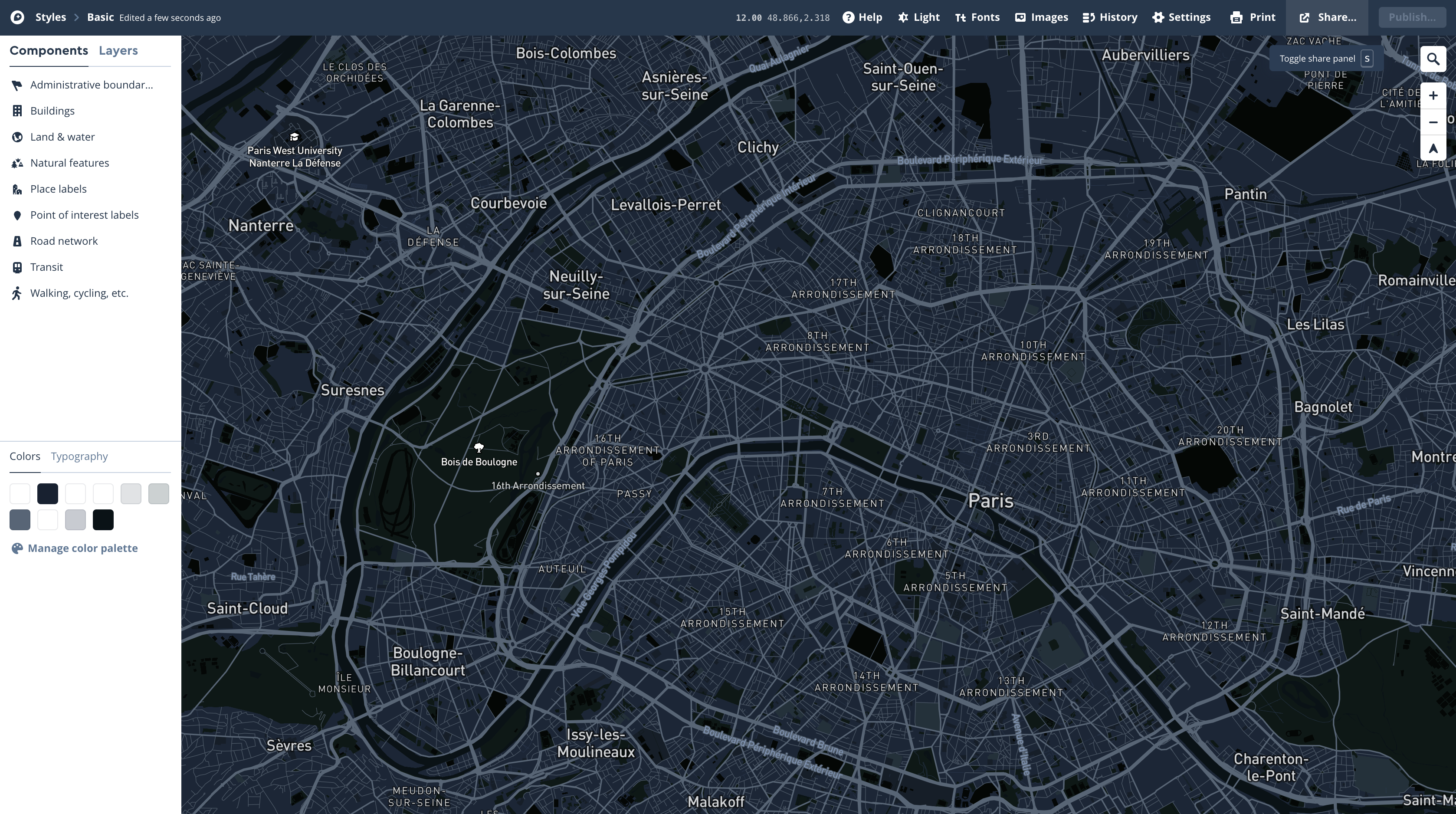

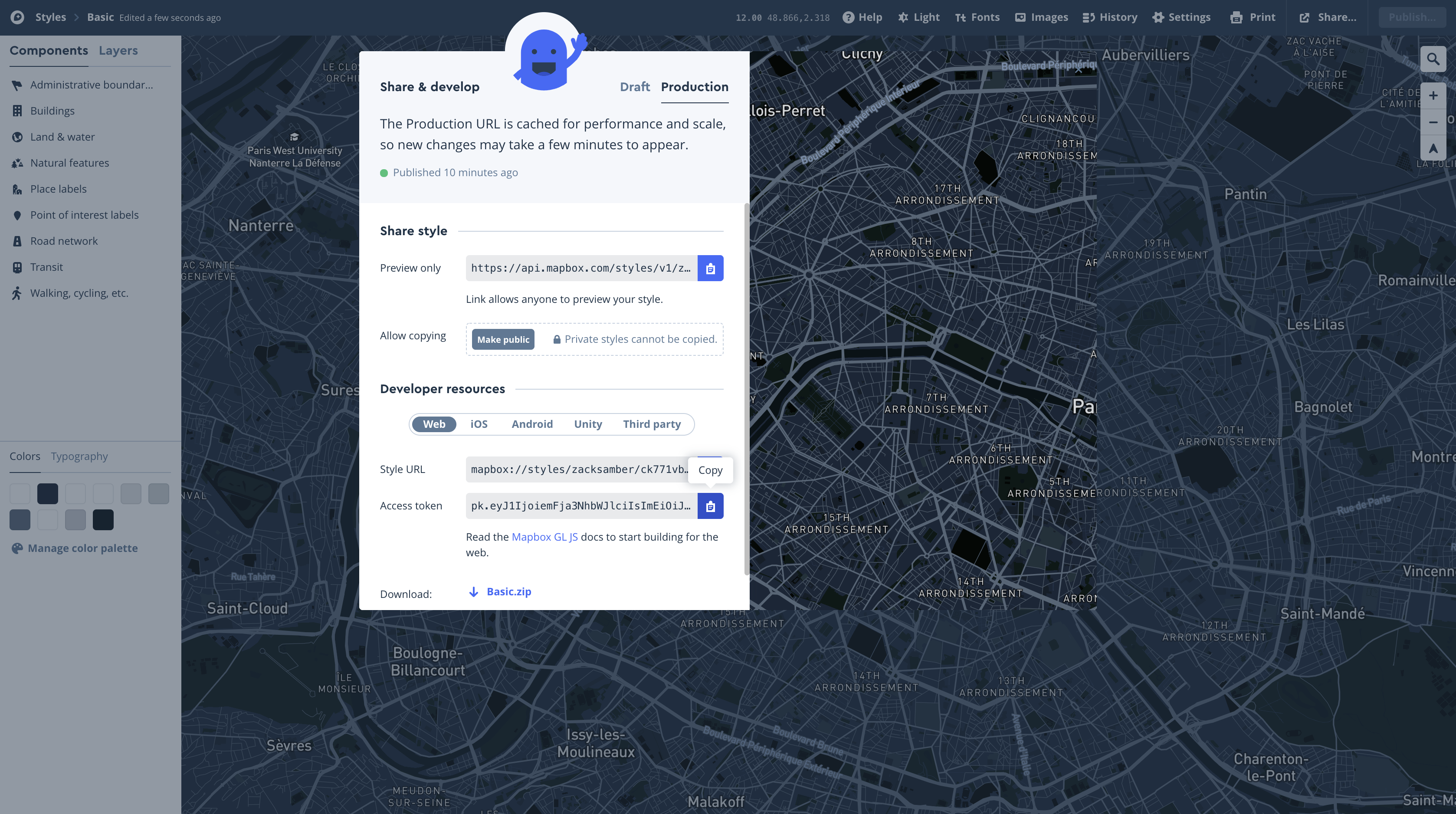

Getting Started. The easiest and recommended way to start writing programs in Python is to log into the companion site for this text, datahub.berkeley.edu. If you have a @berkeley.edu email address, you already have full access to the programming environment hosted on that site. If not, please complete this form to request access.

You are not at all restricted to using this web-based programming environment. A Python program can be executed by any computer, regardless of its manufacturer or operating system, provided that support for the language is installed. If you wish to install the version of Python and its accompanying libraries that will match this text, we recommend the Anaconda distribution that packages together the Python 3 language interpreter, IPython libraries, and the Jupyter notebook environment.

This text includes a complete introduction to all of these computational tools. You will learn to write programs, generate images from data, and work with real-world data sets that are published online.

Statistical Techniques

The discipline of statistics has long addressed the same fundamental challenge as data science: how to draw robust conclusions about the world using incomplete information. One of the most important contributions of statistics is a consistent and precise vocabulary for describing the relationship between observations and conclusions. This text continues in the same tradition, focusing on a set of core inferential problems from statistics: testing hypotheses, estimating confidence, and predicting unknown quantities.

Data science extends the field of statistics by taking full advantage of computing, data visualization, machine learning, optimization, and access to information. The combination of fast computers and the Internet gives anyone the ability to access and analyze vast datasets: millions of news articles, full encyclopedias, databases for any domain, and massive repositories of music, photos, and video.

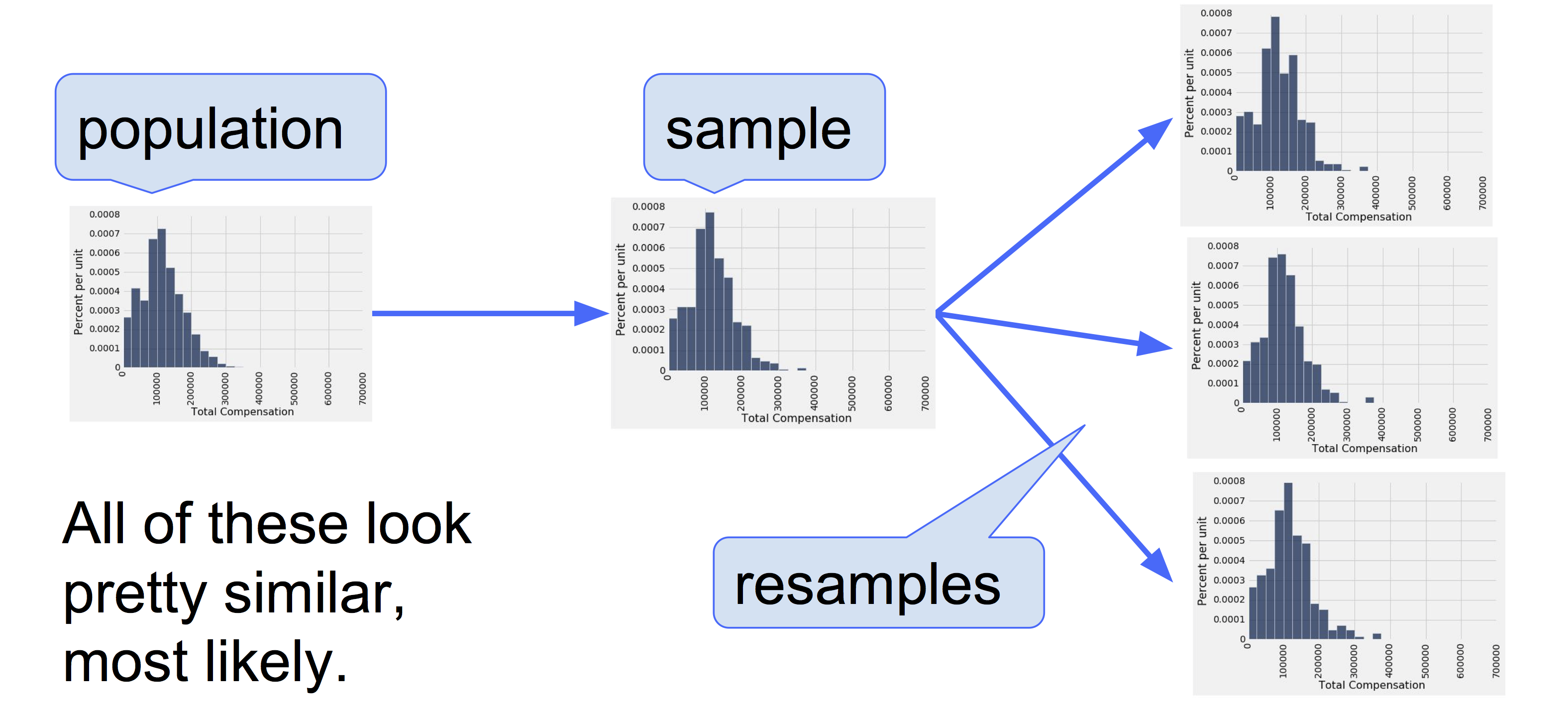

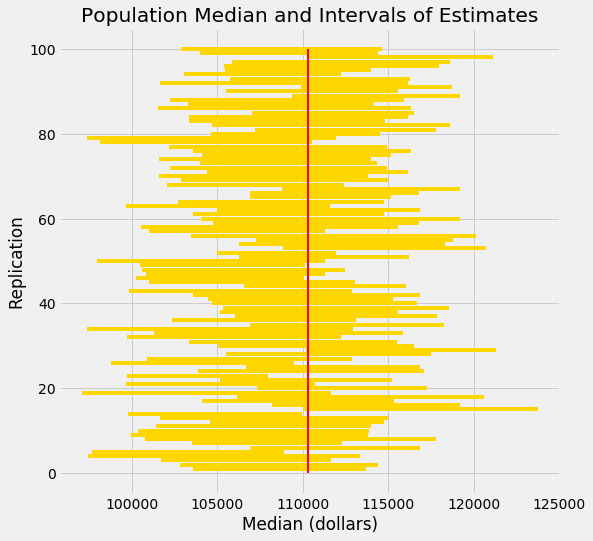

Applications to real data sets motivate the statistical techniques that we describe throughout the text. Real data often do not follow regular patterns or match standard equations. The interesting variation in real data can be lost by focusing too much attention on simplistic summaries such as average values. Computers enable a family of methods based on resampling that apply to a wide range of different inference problems, take into account all available information, and require few assumptions or conditions. Although these techniques have often been reserved for advanced courses in statistics, their flexibility and simplicity are a natural fit for data science applications.

Why Data Science?

Most important decisions are made with only partial information and uncertain outcomes. However, the degree of uncertainty for many decisions can be reduced sharply by access to large data sets and the computational tools required to analyze them effectively. Data-driven decision making has already transformed a tremendous breadth of industries, including finance, advertising, manufacturing, and real estate. At the same time, a wide range of academic disciplines are evolving rapidly to incorporate large-scale data analysis into their theory and practice.

Studying data science enables individuals to bring these techniques to bear on their work, their scientific endeavors, and their personal decisions. Critical thinking has long been a hallmark of a rigorous education, but critiques are often most effective when supported by data. A critical analysis of any aspect of the world, may it be business or social science, involves inductive reasoning; conclusions can rarely been proven outright, but only supported by the available evidence. Data science provides the means to make precise, reliable, and quantitative arguments about any set of observations. With unprecedented access to information and computing, critical thinking about any aspect of the world that can be measured would be incomplete without effective inferential techniques.

The world has too many unanswered questions and difficult challenges to leave this critical reasoning to only a few specialists. All educated members of society can build the capacity to reason about data. The tools, techniques, and data sets are all readily available; this text aims to make them accessible to everyone.

Plotting the classics

In this example, we will explore statistics for two classic novels: The Adventures of Huckleberry Finn by Mark Twain, and Little Women by Louisa May Alcott. The text of any book can be read by a computer at great speed. Books published before 1923 are currently in the public domain, meaning that everyone has the right to copy or use the text in any way. Project Gutenberg is a website that publishes public domain books online. Using Python, we can load the text of these books directly from the web.

This example is meant to illustrate some of the broad themes of this text. Don’t worry if the details of the program don’t yet make sense. Instead, focus on interpreting the images generated below. Later sections of the text will describe most of the features of the Python programming language used below.

First, we read the text of both books into lists of chapters, called huck_finn_chapters and little_women_chapters. In Python, a name cannot contain any spaces, and so we will often use an underscore _ to stand in for a space. The = in the lines below give a name on the left to the result of some computation described on the right. A uniform resource locator or URL is an address on the Internet for some content; in this case, the text of a book. The # symbol starts a comment, which is ignored by the computer but helpful for people reading the code.

1 | import urllib.request |

While a computer cannot understand the text of a book, it can provide us with some insight into the structure of the text. The name huck_finn_chapters is currently bound to a list of all the chapters in the book. We can place them into a table to see how each chapter begins.

1 | import pandas as pd |

| Chapters | |

|---|---|

| 0 | I.YOU don't know about me without you have rea... |

| 1 | II.WE went tiptoeing along a path amongst the ... |

| 2 | III.WELL, I got a good going-over in the morni... |

| 3 | IV.WELL, three or four months run along, and i... |

| 4 | V.I had shut the door to. Then I turned aroun... |

| ... | ... |

| 38 | XXXIX.IN the morning we went up to the village... |

| 39 | XL.WE was feeling pretty good after breakfast,... |

| 40 | XLI.THE doctor was an old man; a very nice, ki... |

| 41 | XLII.THE old man was uptown again before break... |

| 42 | THE LASTTHE first time I catched Tom private I... |

Each chapter begins with a chapter number in Roman numerals, followed by the first sentence of the chapter. Project Gutenberg has printed the first word of each chapter in upper case.

Literary Characters

The Adventures of Huckleberry Finn describes a journey that Huck and Jim take along the Mississippi River. Tom Sawyer joins them towards the end as the action heats up. Having loaded the text, we can quickly visualize how many times these characters have each been mentioned at any point in the book.

1 | import numpy as np |

| Jim | Tom | Huck | Chapter | |

|---|---|---|---|---|

| 0 | 0 | 6 | 3 | 1 |

| 1 | 16 | 30 | 5 | 2 |

| 2 | 16 | 35 | 7 | 3 |

| 3 | 24 | 35 | 8 | 4 |

| 4 | 24 | 35 | 8 | 5 |

| ... | ... | ... | ... | ... |

| 38 | 345 | 177 | 69 | 39 |

| 39 | 358 | 188 | 72 | 40 |

| 40 | 358 | 196 | 72 | 41 |

| 41 | 370 | 226 | 74 | 42 |

| 42 | 376 | 232 | 78 | 43 |

1 | # Plot the cumulative counts: |

In the plot above, the horizontal axis shows chapter numbers and the vertical axis shows how many times each character has been mentioned up to and including that chapter.

You can see that Jim is a central character by the large number of times his name appears. Notice how Tom is hardly mentioned for much of the book until he arrives and joins Huck and Jim, after Chapter 30. His curve and Jim’s rise sharply at that point, as the action involving both of them intensifies. As for Huck, his name hardly appears at all, because he is the narrator.

Little Women is a story of four sisters growing up together during the civil war. In this book, chapter numbers are spelled out and chapter titles are written in all capital letters.

1 | # The chapters of Little Women, in a table |

| Chapters | |

|---|---|

| 0 | ONEPLAYING PILGRIMS"Christmas won't be Christm... |

| 1 | TWOA MERRY CHRISTMASJo was the first to wake i... |

| 2 | THREETHE LAURENCE BOY"Jo! Jo! Where are you?... |

| 3 | FOURBURDENS"Oh, dear, how hard it does seem to... |

| 4 | FIVEBEING NEIGHBORLY"What in the world are you... |

| ... | ... |

| 42 | FORTY-THREESURPRISESJo was alone in the twilig... |

| 43 | FORTY-FOURMY LORD AND LADY"Please, Madam Mothe... |

| 44 | FORTY-FIVEDAISY AND DEMII cannot feel that I h... |

| 45 | FORTY-SIXUNDER THE UMBRELLAWhile Laurie and Am... |

| 46 | FORTY-SEVENHARVEST TIMEFor a year Jo and her P... |

We can track the mentions of main characters to learn about the plot of this book as well. The protagonist Jo interacts with her sisters Meg, Beth, and Amy regularly, up until Chapter 27 when she moves to New York alone.

1 | # Get data little_women_chapters |

| Amy | Beth | Jo | Meg | Laurie | Chapter | |

|---|---|---|---|---|---|---|

| 0 | 23 | 26 | 44 | 26 | 0 | 1 |

| 1 | 36 | 38 | 65 | 46 | 0 | 2 |

| 2 | 38 | 40 | 127 | 82 | 16 | 3 |

| 3 | 52 | 58 | 161 | 99 | 16 | 4 |

| 4 | 58 | 72 | 216 | 112 | 51 | 5 |

| ... | ... | ... | ... | ... | ... | ... |

| 42 | 619 | 459 | 1435 | 673 | 571 | 43 |

| 43 | 632 | 459 | 1444 | 673 | 581 | 44 |

| 44 | 633 | 461 | 1450 | 675 | 581 | 45 |

| 45 | 635 | 462 | 1506 | 679 | 583 | 46 |

| 46 | 645 | 465 | 1543 | 685 | 596 | 47 |

1 | # Plot the cumulative counts. |

Laurie is a young man who marries one of the girls in the end. See if you can use the plots to guess which one.

Inspiration

See if we can use this tech to count the number of positive reviews and negative reviews on a company in a news for judging the influence of the breaking news on its stock price.

Another Kind of Character

In some situations, the relationships between quantities allow us to make predictions. This text will explore how to make accurate predictions based on incomplete information and develop methods for combining multiple sources of uncertain information to make decisions.

As an example of visualizing information derived from multiple sources, let us first use the computer to get some information that would be tedious to acquire by hand. In the context of novels, the word “character” has a second meaning: a printed symbol such as a letter or number or punctuation symbol. Here, we ask the computer to count the number of characters and the number of periods in each chapter of both Huckleberry Finn and Little Women.

1 | # In each chapter, count the number of all characters; |

Here are the data for Huckleberry Finn. Each row of the table corresponds to one chapter of the novel and displays the number of characters as well as the number of periods in the chapter. Not surprisingly, chapters with fewer characters also tend to have fewer periods, in general: the shorter the chapter, the fewer sentences there tend to be, and vice versa. The relation is not entirely predictable, however, as sentences are of varying lengths and can involve other punctuation such as question marks.

1 | chars_periods_huck_finn |

| Huck Finn Chapter Length | Number of Periods | |

|---|---|---|

| 0 | 6970 | 66 |

| 1 | 11874 | 117 |

| 2 | 8460 | 72 |

| 3 | 6755 | 84 |

| 4 | 8095 | 91 |

| ... | ... | ... |

| 38 | 10763 | 96 |

| 39 | 11386 | 60 |

| 40 | 13278 | 77 |

| 41 | 15565 | 92 |

| 42 | 21461 | 228 |

Here are the corresponding data for Little Women.

1 | chars_periods_little_women |

| Little Women Chapter Length | Number of Periods | |

|---|---|---|

| 0 | 21496 | 189 |

| 1 | 21941 | 188 |

| 2 | 20335 | 231 |

| 3 | 25213 | 195 |

| 4 | 23115 | 255 |

| ... | ... | ... |

| 42 | 32811 | 305 |

| 43 | 10166 | 95 |

| 44 | 12390 | 96 |

| 45 | 27078 | 234 |

| 46 | 40592 | 392 |

You can see that the chapters of Little Women are in general longer than those of Huckleberry Finn. Let us see if these two simple variables – the length and number of periods in each chapter – can tell us anything more about the two books. One way to do this is to plot both sets of data on the same axes.

In the plot below, there is a dot for each chapter in each book. Blue dots correspond to Huckleberry Finn and gold dots to Little Women. The horizontal axis represents the number of periods and the vertical axis represents the number of characters.

1 | import plotly.graph_objects as go |

The plot shows us that many but not all of the chapters of Little Women are longer than those of Huckleberry Finn, as we had observed by just looking at the numbers. But it also shows us something more. Notice how the blue points are roughly clustered around a straight line, as are the yellow points. Moreover, it looks as though both colors of points might be clustered around the same straight line.

Now look at all the chapters that contain about 100 periods. The plot shows that those chapters contain about 10,000 characters to about 15,000 characters, roughly. That’s about 100 to 150 characters per period.

Indeed, it appears from looking at the plot that on average both books tend to have somewhere between 100 and 150 characters between periods, as a very rough estimate. Perhaps these two great 19th century novels were signaling something so very familiar to us now: the 140-character limit of Twitter.

Causality and Experiments

Causality and Experiments

“These problems are, and will probably ever remain, among the inscrutable secrets of nature. They belong to a class of questions radically inaccessible to the human intelligence.” —The Times of London, September 1849, on how cholera is contracted and spread

Does the death penalty have a deterrent effect? Is chocolate good for you? What causes breast cancer?

All of these questions attempt to assign a cause to an effect. A careful examination of data can help shed light on questions like these. In this section you will learn some of the fundamental concepts involved in establishing causality.

Observation is a key to good science. An observational study is one in which scientists make conclusions based on data that they have observed but had no hand in generating. In data science, many such studies involve observations on a group of individuals, a factor of interest called a treatment, and an outcome measured on each individual.

It is easiest to think of the individuals as people. In a study of whether chocolate is good for the health, the individuals would indeed be people, the treatment would be eating chocolate, and the outcome might be a measure of heart disease. But individuals in observational studies need not be people. In a study of whether the death penalty has a deterrent effect, the individuals could be the 50 states of the union. A state law allowing the death penalty would be the treatment, and an outcome could be the state’s murder rate.

The fundamental question is whether the treatment has an effect on the outcome. Any relation between the treatment and the outcome is called an association. If the treatment causes the outcome to occur, then the association is causal. Causality is at the heart of all three questions posed at the start of this section. For example, one of the questions was whether chocolate directly causes improvements in health, not just whether there there is a relation between chocolate and health.

The establishment of causality often takes place in two stages. First, an association is observed. Next, a more careful analysis leads to a decision about causality.

Observation and Visualization: John Snow and the Broad Street Pump

One of the most powerful examples of astute observation eventually leading to the establishment of causality dates back more than 150 years. To get your mind into the right timeframe, try to imagine London in the 1850’s. It was the world’s wealthiest city but many of its people were desperately poor. Charles Dickens, then at the height of his fame, was writing about their plight. Disease was rife in the poorer parts of the city, and cholera was among the most feared. It was not yet known that germs cause disease; the leading theory was that “miasmas” were the main culprit. Miasmas manifested themselves as bad smells, and were thought to be invisible poisonous particles arising out of decaying matter. Parts of London did smell very bad, especially in hot weather. To protect themselves against infection, those who could afford to held sweet-smelling things to their noses.

For several years, a doctor by the name of John Snow had been following the devastating waves of cholera that hit England from time to time. The disease arrived suddenly and was almost immediately deadly: people died within a day or two of contracting it, hundreds could die in a week, and the total death toll in a single wave could reach tens of thousands. Snow was skeptical of the miasma theory. He had noticed that while entire households were wiped out by cholera, the people in neighboring houses sometimes remained completely unaffected. As they were breathing the same air—and miasmas—as their neighbors, there was no compelling association between bad smells and the incidence of cholera.

Snow had also noticed that the onset of the disease almost always involved vomiting and diarrhea. He therefore believed that the infection was carried by something people ate or drank, not by the air that they breathed. His prime suspect was water contaminated by sewage.

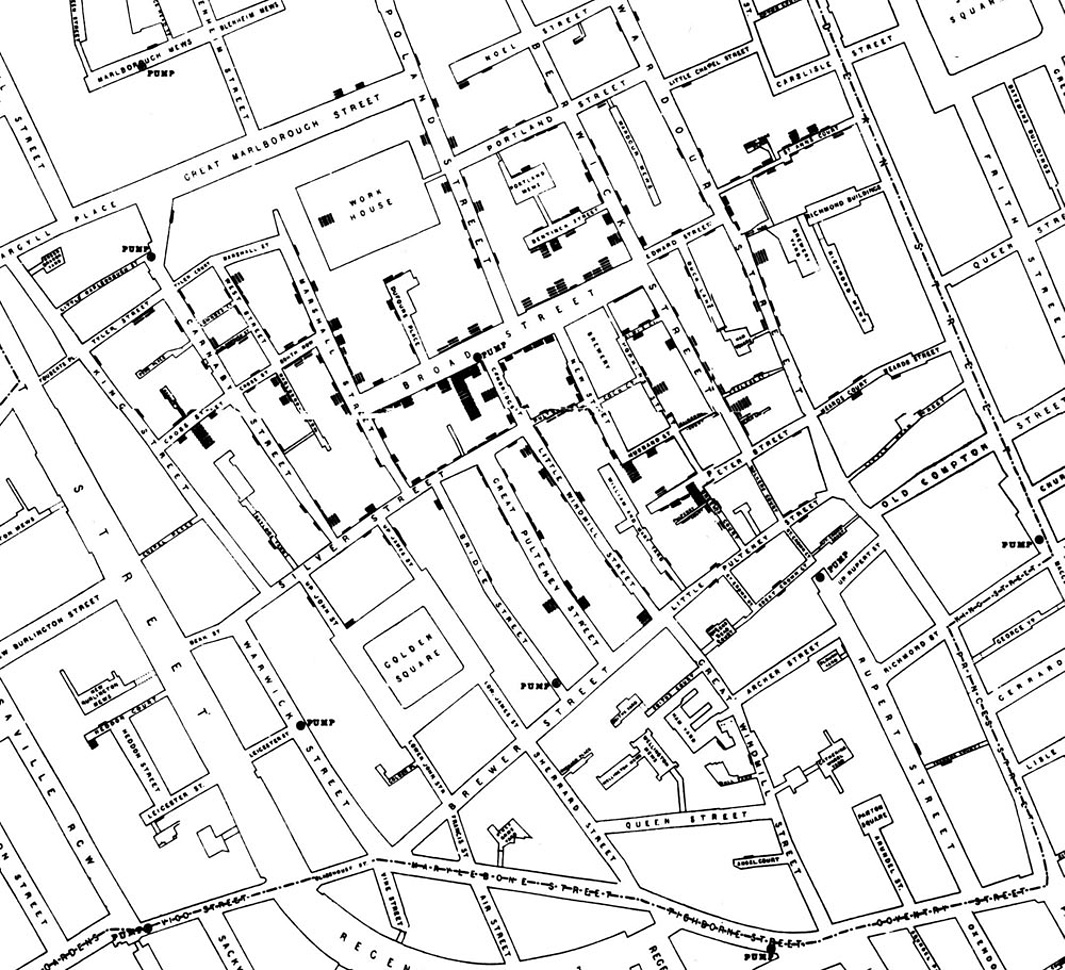

At the end of August 1854, cholera struck in the overcrowded Soho district of London. As the deaths mounted, Snow recorded them diligently, using a method that went on to become standard in the study of how diseases spread: he drew a map. On a street map of the district, he recorded the location of each death.

Here is Snow’s original map. Each black bar represents one death. When there are multiple deaths at the same address, the bars corresponding to those deaths are stacked on top of each other. The black discs mark the locations of water pumps. The map displays a striking revelation—the deaths are roughly clustered around the Broad Street pump.

Snow studied his map carefully and investigated the apparent anomalies. All of them implicated the Broad Street pump. For example:

- There were deaths in houses that were nearer the Rupert Street pump than the Broad Street pump. Though the Rupert Street pump was closer as the crow flies, it was less convenient to get to because of dead ends and the layout of the streets. The residents in those houses used the Broad Street pump instead.

- There were no deaths in two blocks just east of the pump. That was the location of the Lion Brewery, where the workers drank what they brewed. If they wanted water, the brewery had its own well.

- There were scattered deaths in houses several blocks away from the Broad Street pump. Those were children who drank from the Broad Street pump on their way to school. The pump’s water was known to be cool and refreshing.

The final piece of evidence in support of Snow’s theory was provided by two isolated deaths in the leafy and genteel Hampstead area, quite far from Soho. Snow was puzzled by these until he learned that the deceased were Mrs. Susannah Eley, who had once lived in Broad Street, and her niece. Mrs. Eley had water from the Broad Street pump delivered to her in Hampstead every day. She liked its taste.

Later it was discovered that a cesspit that was just a few feet away from the well of the Broad Street pump had been leaking into the well. Thus the pump’s water was contaminated by sewage from the houses of cholera victims.

Snow used his map to convince local authorities to remove the handle of the Broad Street pump. Though the cholera epidemic was already on the wane when he did so, it is possible that the disabling of the pump prevented many deaths from future waves of the disease.

The removal of the Broad Street pump handle has become the stuff of legend. At the Centers for Disease Control (CDC) in Atlanta, when scientists look for simple answers to questions about epidemics, they sometimes ask each other, “Where is the handle to this pump?”

Snow’s map is one of the earliest and most powerful uses of data visualization. Disease maps of various kinds are now a standard tool for tracking epidemics.

Towards Causality

Though the map gave Snow a strong indication that the cleanliness of the water supply was the key to controlling cholera, he was still a long way from a convincing scientific argument that contaminated water was causing the spread of the disease. To make a more compelling case, he had to use the method of comparison.

Scientists use comparison to identify an association between a treatment and an outcome. They compare the outcomes of a group of individuals who got the treatment (the treatment group) to the outcomes of a group who did not (the control group). For example, researchers today might compare the average murder rate in states that have the death penalty with the average murder rate in states that don’t.

If the results are different, that is evidence for an association. To determine causation, however, even more care is needed.

Snow’s “Grand Experiment”

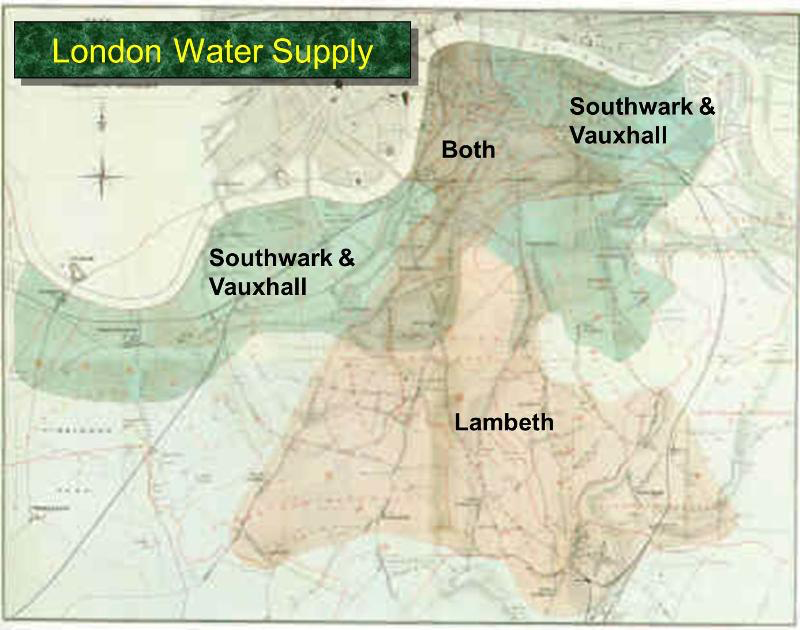

Encouraged by what he had learned in Soho, Snow completed a more thorough analysis. For some time, he had been gathering data on cholera deaths in an area of London that was served by two water companies. The Lambeth water company drew its water upriver from where sewage was discharged into the River Thames. Its water was relatively clean. But the Southwark and Vauxhall (S&V) company drew its water below the sewage discharge, and thus its supply was contaminated.

The map below shows the areas served by the two companies. Snow honed in on the region where the two service areas overlap.

Snow noticed that there was no systematic difference between the people who were supplied by S&V and those supplied by Lambeth. “Each company supplies both rich and poor, both large houses and small; there is no difference either in the condition or occupation of the persons receiving the water of the different Companies … there is no difference whatever in the houses or the people receiving the supply of the two Water Companies, or in any of the physical conditions with which they are surrounded …”

The only difference was in the water supply, “one group being supplied with water containing the sewage of London, and amongst it, whatever might have come from the cholera patients, the other group having water quite free from impurity.”

Confident that he would be able to arrive at a clear conclusion, Snow summarized his data in the table below.

| Supply Area | Number of houses | cholera deaths | deaths per 10,000 houses |

|---|---|---|---|

| S&V | 40,046 | 1,263 | 315 |

| Lambeth | 26,107 | 98 | 37 |

| Rest of London | 256,423 | 1,422 | 59 |

The numbers pointed accusingly at S&V. The death rate from cholera in the S&V houses was almost ten times the rate in the houses supplied by Lambeth.

Establishing Causality

In the language developed earlier in the section, you can think of the people in the S&V houses as the treatment group, and those in the Lambeth houses at the control group. A crucial element in Snow’s analysis was that the people in the two groups were comparable to each other, apart from the treatment.

In order to establish whether it was the water supply that was causing cholera, Snow had to compare two groups that were similar to each other in all but one aspect—their water supply. Only then would he be able to ascribe the differences in their outcomes to the water supply. If the two groups had been different in some other way as well, it would have been difficult to point the finger at the water supply as the source of the disease. For example, if the treatment group consisted of factory workers and the control group did not, then differences between the outcomes in the two groups could have been due to the water supply, or to factory work, or both. The final picture would have been much more fuzzy.

Snow’s brilliance lay in identifying two groups that would make his comparison clear. He had set out to establish a causal relation between contaminated water and cholera infection, and to a great extent he succeeded, even though the miasmatists ignored and even ridiculed him. Of course, Snow did not understand the detailed mechanism by which humans contract cholera. That discovery was made in 1883, when the German scientist Robert Koch isolated the Vibrio cholerae, the bacterium that enters the human small intestine and causes cholera.

In fact the Vibrio cholerae had been identified in 1854 by Filippo Pacini in Italy, just about when Snow was analyzing his data in London. Because of the dominance of the miasmatists in Italy, Pacini’s discovery languished unknown. But by the end of the 1800’s, the miasma brigade was in retreat. Subsequent history has vindicated Pacini and John Snow. Snow’s methods led to the development of the field of epidemiology, which is the study of the spread of diseases.

Confounding

Let us now return to more modern times, armed with an important lesson that we have learned along the way:

In an observational study, if the treatment and control groups differ in ways other than the treatment, it is difficult to make conclusions about causality.

An underlying difference between the two groups (other than the treatment) is called a confounding factor, because it might confound you (that is, mess you up) when you try to reach a conclusion.

Example: Coffee and lung cancer. Studies in the 1960’s showed that coffee drinkers had higher rates of lung cancer than those who did not drink coffee. Because of this, some people identified coffee as a cause of lung cancer. But coffee does not cause lung cancer. The analysis contained a confounding factor—smoking. In those days, coffee drinkers were also likely to have been smokers, and smoking does cause lung cancer. Coffee drinking was associated with lung cancer, but it did not cause the disease.

Confounding factors are common in observational studies. Good studies take great care to reduce confounding and to account for its effects.

Randomization

An excellent way to avoid confounding is to assign individuals to the treatment and control groups at random, and then administer the treatment to those who were assigned to the treatment group. Randomization keeps the two groups similar apart from the treatment.

If you are able to randomize individuals into the treatment and control groups, you are running a randomized controlled experiment, also known as a randomized controlled trial (RCT). Sometimes, people’s responses in an experiment are influenced by their knowing which group they are in. So you might want to run a blind experiment in which individuals do not know whether they are in the treatment group or the control group. To make this work, you will have to give the control group a placebo, which is something that looks exactly like the treatment but in fact has no effect.

Randomized controlled experiments have long been a gold standard in the medical field, for example in establishing whether a new drug works. They are also becoming more commonly used in other fields such as economics.

Example: Welfare subsidies in Mexico. In Mexican villages in the 1990’s, children in poor families were often not enrolled in school. One of the reasons was that the older children could go to work and thus help support the family. Santiago Levy , a minister in Mexican Ministry of Finance, set out to investigate whether welfare programs could be used to increase school enrollment and improve health conditions. He conducted an RCT on a set of villages, selecting some of them at random to receive a new welfare program called PROGRESA. The program gave money to poor families if their children went to school regularly and the family used preventive health care. More money was given if the children were in secondary school than in primary school, to compensate for the children’s lost wages, and more money was given for girls attending school than for boys. The remaining villages did not get this treatment, and formed the control group. Because of the randomization, there were no confounding factors and it was possible to establish that PROGRESA increased school enrollment. For boys, the enrollment increased from 73% in the control group to 77% in the PROGRESA group. For girls, the increase was even greater, from 67% in the control group to almost 75% in the PROGRESA group. Due to the success of this experiment, the Mexican government supported the program under the new name OPORTUNIDADES, as an investment in a healthy and well educated population.

In some situations it might not be possible to carry out a randomized controlled experiment, even when the aim is to investigate causality. For example, suppose you want to study the effects of alcohol consumption during pregnancy, and you randomly assign some pregnant women to your “alcohol” group. You should not expect cooperation from them if you present them with a drink. In such situations you will almost invariably be conducting an observational study, not an experiment. Be alert for confounding factors.

Endnote

In the terminology that we have developed, John Snow conducted an observational study, not a randomized experiment. But he called his study a “grand experiment” because, as he wrote, “No fewer than three hundred thousand people … were divided into two groups without their choice, and in most cases, without their knowledge …”

Studies such as Snow’s are sometimes called “natural experiments.” However, true randomization does not simply mean that the treatment and control groups are selected “without their choice.”

The method of randomization can be as simple as tossing a coin. It may also be quite a bit more complex. But every method of randomization consists of a sequence of carefully defined steps that allow chances to be specified mathematically. This has two important consequences.

- It allows us to account—mathematically—for the possibility that randomization produces treatment and control groups that are quite different from each other.

- It allows us to make precise mathematical statements about differences between the treatment and control groups. This in turn helps us make justifiable conclusions about whether the treatment has any effect.

In this course, you will learn how to conduct and analyze your own randomized experiments. That will involve more detail than has been presented in this chapter. For now, just focus on the main idea: to try to establish causality, run a randomized controlled experiment if possible. If you are conducting an observational study, you might be able to establish association but it will be harder to establish causation. Be extremely careful about confounding factors before making conclusions about causality based on an observational study.

Terminology

- observational study

- treatment

- outcome

- association

- causal association

- causality

- comparison

- treatment group

- control group

- epidemiology

- confounding

- randomization

- randomized controlled experiment

- randomized controlled trial (RCT)

- blind

- placebo

Fun facts

John Snow is sometimes called the father of epidemiology, but he was an anesthesiologist by profession. One of his patients was Queen Victoria, who was an early recipient of anesthetics during childbirth.

Florence Nightingale, the originator of modern nursing practices and famous for her work in the Crimean War, was a die-hard miasmatist. She had no time for theories about contagion and germs, and was not one for mincing her words. “There is no end to the absurdities connected with this doctrine,” she said. “Suffice it to say that in the ordinary sense of the word, there is no proof such as would be admitted in any scientific enquiry that there is any such thing as contagion.”

A later RCT established that the conditions on which PROGRESA insisted—children going to school, preventive health care—were not necessary to achieve increased enrollment. Just the financial boost of the welfare payments was sufficient.

Good reads

The Strange Case of the Broad Street Pump: John Snow and the Mystery of Cholera by Sandra Hempel, published by our own University of California Press, reads like a whodunit. It was one of the main sources for this section’s account of John Snow and his work. A word of warning: some of the contents of the book are stomach-churning.

Poor Economics, the best seller by Abhijit Banerjee and Esther Duflo of MIT, is an accessible and lively account of ways to fight global poverty. It includes numerous examples of RCTs, including the PROGRESA example in this section.

Programming in Python

Programming in Python

Programming can dramatically improve our ability to collect and analyze information about the world, which in turn can lead to discoveries through the kind of careful reasoning demonstrated in the previous section. In data science, the purpose of writing a program is to instruct a computer to carry out the steps of an analysis. Computers cannot study the world on their own. People must describe precisely what steps the computer should take in order to collect and analyze data, and those steps are expressed through programs.

Expressions

Programming languages are much simpler than human languages. Nonetheless, there are some rules of grammar to learn in any language, and that is where we will begin. In this text, we will use the Python programming language. Learning the grammar rules is essential, and the same rules used in the most basic programs are also central to more sophisticated programs.

Programs are made up of expressions, which describe to the computer how to combine pieces of data. For example, a multiplication expression consists of a * symbol between two numerical expressions. Expressions, such as 3 * 4, are evaluated by the computer. The value (the result of evaluation) of the last expression in each cell, 12 in this case, is displayed below the cell.

1 | 3 * 4 |

The grammar rules of a programming language are rigid. In Python, the * symbol cannot appear twice in a row. The computer will not try to interpret an expression that differs from its prescribed expression structures. Instead, it will show a SyntaxError error. The Syntax of a language is its set of grammar rules, and a SyntaxError indicates that an expression structure doesn’t match any of the rules of the language.

1 | 3 * * 4 |

Small changes to an expression can change its meaning entirely. Below, the space between the ‘s has been removed. Because ** appears between two numerical expressions, the expression is a well-formed exponentiation expression (the first number raised to the power of the second: 3 times 3 times 3 times 3). The symbols * and * are called operators, and the values they combine are called operands.

1 | 3 ** 4 |

Common Operators. Data science often involves combining numerical values, and the set of operators in a programming language are designed to so that expressions can be used to express any sort of arithmetic. In Python, the following operators are essential. See more Python Operators

| Expression Type | Operator | Example | Value |

|---|---|---|---|

| Addition | + |

2 + 3 |

5 |

| Subtraction | - |

2 - 3 |

-1 |

| Multiplication | * |

2 * 3 |

6 |

| Division | / |

7 / 3 |

2.66667 |

| Remainder | % |

7 % 3 |

1 |

| Exponentiation | ** |

2 ** 0.5 |

1.41421 |

Python expressions obey the same familiar rules of precedence as in algebra: multiplication and division occur before addition and subtraction. Parentheses can be used to group together smaller expressions within a larger expression.

1 | 1 + 2 * 3 * 4 * 5 / 6 ** 3 + 7 + 8 - 9 + 10 |

1 | 1 + 2 * (3 * 4 * 5 / 6) ** 3 + 7 + 8 - 9 + 10 |

This chapter introduces many types of expressions. Learning to program involves trying out everything you learn in combination, investigating the behavior of the computer. What happens if you divide by zero? What happens if you divide twice in a row? You don’t always need to ask an expert (or the Internet); many of these details can be discovered by trying them out yourself.

Names

Names are given to values in Python using an assignment statement. In an assignment, a name is followed by =, which is followed by any expression. The value of the expression to the right of = is assigned to the name. Once a name has a value assigned to it, the value will be substituted for that name in future expressions.

1 | a = 10 |

A previously assigned name can be used in the expression to the right of =.

1 | quarter = 1/4 |

However, only the current value of an expression is assigned to a name. If that value changes later, names that were defined in terms of that value will not change automatically.

1 | quarter = 4 |

Names must start with a letter, but can contain both letters and numbers. A name cannot contain a space; instead, it is common to use an underscore character _ to replace each space. Names are only as useful as you make them; it’s up to the programmer to choose names that are easy to interpret. Typically, more meaningful names can be invented than a and b. For example, to describe the sales tax on a $5 purchase in Berkeley, CA, the following names clarify the meaning of the various quantities involved.

1 | purchase_price = 5 |

Example: Growth Rates

The relationship between two measurements of the same quantity taken at different times is often expressed as a growth rate. For example, the United States federal government employed 2,766,000 people in 2002 and 2,814,000 people in 2012. To compute a growth rate, we must first decide which value to treat as the initial amount. For values over time, the earlier value is a natural choice. Then, we divide the difference between the changed and initial amount by the initial amount.

1 | initial = 2766000 |

It is also typical to subtract one from the ratio of the two measurements, which yields the same value.

1 | (changed/initial) - 1 |

This value is the growth rate over 10 years. A useful property of growth rates is that they don’t change even if the values are expressed in different units. So, for example, we can express the same relationship between thousands of people in 2002 and 2012.

1 | initial = 2766 |

In 10 years, the number of employees of the US Federal Government has increased by only 1.74%. In that time, the total expenditures of the US Federal Government increased from $2.37 trillion to $3.38 trillion in 2012.

1 | initial = 2.37 |

A 42.6% increase in the federal budget is much larger than the 1.74% increase in federal employees. In fact, the number of federal employees has grown much more slowly than the population of the United States, which increased 9.21% in the same time period from 287.6 million people in 2002 to 314.1 million in 2012.

1 | initial = 287.6 |

A growth rate can be negative, representing a decrease in some value. For example, the number of manufacturing jobs in the US decreased from 15.3 million in 2002 to 11.9 million in 2012, a -22.2% growth rate.

1 | initial = 15.3 |

An annual growth rate is a growth rate of some quantity over a single year. An annual growth rate of 0.035, accumulated each year for 10 years, gives a much larger ten-year growth rate of 0.41 (or 41%).

1 | 1.035 * 1.035 * 1.035 * 1.035 * 1.035 * 1.035 * 1.035 * 1.035 * 1.035 * 1.035 - 1 |

This same computation can be expressed using names and exponents.

1 | annual_growth_rate = 0.035 |

Likewise, a ten-year growth rate can be used to compute an equivalent annual growth rate. Below, t is the number of years that have passed between measurements. The following computes the annual growth rate of federal expenditures over the last 10 years.

1 | initial = 2.37 |

The total growth over 10 years is equivalent to a 3.6% increase each year.

In summary, a growth rate g is used to describe the relative size of an initial amount and a changed amount after some amount of time t. To compute changed , apply the growth rate g repeatedly, t times using exponentiation.

1 | initial * (1 + g) ** t |

To compute g, raise the total growth to the power of 1/t and subtract one.

1 | (changed/initial) ** (1/t) - 1 |

Call Expressions

Call expressions invoke functions, which are named operations. The name of the function appears first, followed by expressions in parentheses.

1 | abs(-12) |

1 | round(5 - 1.3) |

1 | max(2, 2 + 3, 4) |

In this last example, the max function is called on three arguments: 2, 5, and 4. The value of each expression within parentheses is passed to the function, and the function returns the final value of the full call expression. The max function can take any number of arguments and returns the maximum.

A few functions are available by default, such as abs and round, but most functions that are built into the Python language are stored in a collection of functions called a module. An import statement is used to provide access to a module, such as math or operator.

1 | import math |

An equivalent expression could be expressed using the + and ** operators instead.

1 | (4 + 5) ** 0.5 |

Operators and call expressions can be used together in an expression. The percent difference between two values is used to compare values for which neither one is obviously initial or changed. For example, in 2014 Florida farms produced 2.72 billion eggs while Iowa farms produced 16.25 billion eggs (http://quickstats.nass.usda.gov/). The percent difference is 100 times the absolute value of the difference between the values, divided by their average. In this case, the difference is larger than the average, and so the percent difference is greater than 100.

1 | florida = 2.72 |

Learning how different functions behave is an important part of learning a programming language. A Jupyter notebook can assist in remembering the names and effects of different functions. When editing a code cell, press the tab key after typing the beginning of a name to bring up a list of ways to complete that name. For example, press tab after math. to see all of the functions available in the math module. Typing will narrow down the list of options. To learn more about a function, place a ? after its name. For example, typing math.log? will bring up a description of the log function in the math module.

1 | math.log? |

Return the logarithm of x to the given base.

If the base not specified, returns the natural logarithm (base e) of x.

The square brackets in the example call indicate that an argument is optional. That is, log can be called with either one or two arguments.

1 | math.log(16, 2) |

1 | math.log(16)/math.log(2) |

The list of Python’s built-in functions is quite long and includes many functions that are never needed in data science applications. The list of mathematical functions in the math module is similarly long. This text will introduce the most important functions in context, rather than expecting the reader to memorize or understand these lists.

Introduction to Tables

We can now apply Python to analyze data. We will work with data stored in Table structures.

Tables are a fundamental way of representing data sets. A table can be viewed in two ways:

- (NoSQL)a sequence of named columns that each describe a single attribute of all entries in a data set, or

- (SQL)a sequence of rows that each contain all information about a single individual in a data set.

We will study tables in great detail in the next several chapters. For now, we will just introduce a few methods without going into technical details.

The table cones has been imported for us; later we will see how, but here we will just work with it. First, let’s take a look at it.

1 | import pandas as pd |

1 | # Show cones |

| Flavor | Color | Price | |

|---|---|---|---|

| 0 | strawberry | pink | 3.55 |

| 1 | chocolate | light brown | 4.75 |

| 2 | chocolate | dark brown | 5.25 |

| 3 | strawberry | pink | 5.25 |

| 4 | chocolate | dark brown | 5.25 |

| 5 | bubblegem | pink | 4.75 |

The table has six rows. Each row corresponds to one ice cream cone. The ice cream cones are the individuals.

Each cone has three attributes: flavor, color, and price. Each column contains the data on one of these attributes, and so all the entries of any single column are of the same kind. Each column has a label. We will refer to columns by their labels.

A table method is just like a function, but it must operate on a table. So the call looks like

name_of_table.method(arguments)

For example, if you want to see just the first two rows of a table, you can use the table method show.

1 | # Show first two rows |

| Flavor | Color | Price | |

|---|---|---|---|

| 0 | strawberry | pink | 3.55 |

| 1 | chocolate | light brown | 4.75 |

… (4 rows omitted)

You can replace 2 by any number of rows. If you ask for more than six, you will only get six, because cones only has six rows.

Choosing Sets of Columns

The method loc creates a new table consisting of only the specified columns.

1 | # Show Flavor |

| Flavor | |

|---|---|

| 0 | strawberry |

| 1 | chocolate |

| 2 | chocolate |

| 3 | strawberry |

| 4 | chocolate |

| 5 | bubblegem |

This leaves the original table unchanged.

1 | # Show cones |

| Flavor | Color | Price | |

|---|---|---|---|

| 0 | strawberry | pink | 3.55 |

| 1 | chocolate | light brown | 4.75 |

| 2 | chocolate | dark brown | 5.25 |

| 3 | strawberry | pink | 5.25 |

| 4 | chocolate | dark brown | 5.25 |

| 5 | bubblegem | pink | 4.75 |

You can loc more than one column, by separating the column labels by commas.

1 | # Show Flavor, Price |

| Flavor | Price | |

|---|---|---|

| 0 | strawberry | 3.55 |

| 1 | chocolate | 4.75 |

| 2 | chocolate | 5.25 |

| 3 | strawberry | 5.25 |

| 4 | chocolate | 5.25 |

| 5 | bubblegem | 4.75 |

You can also drop columns you don’t want. The table above can be created by dropping the Color column.

1 | # Drop Color |

| Flavor | Price | |

|---|---|---|

| 0 | strawberry | 3.55 |

| 1 | chocolate | 4.75 |

| 2 | chocolate | 5.25 |

| 3 | strawberry | 5.25 |

| 4 | chocolate | 5.25 |

| 5 | bubblegem | 4.75 |

You can name this new table and look at it again by just typing its name.

1 | # Redefine cones |

| Flavor | Price | |

|---|---|---|

| 0 | strawberry | 3.55 |

| 1 | chocolate | 4.75 |

| 2 | chocolate | 5.25 |

| 3 | strawberry | 5.25 |

| 4 | chocolate | 5.25 |

| 5 | bubblegem | 4.75 |

Like loc, the drop method creates a smaller table and leaves the original table unchanged. In order to explore your data, you can create any number of smaller tables by using choosing or dropping columns. It will do no harm to your original data table.

Sorting Rows

The sort method creates a new table by arranging the rows of the original table in ascending order of the values in the specified column. Here the cones table has been sorted in ascending order of the price of the cones.

1 | # Sort Price |

| Flavor | Color | Price | |

|---|---|---|---|

| 0 | strawberry | pink | 3.55 |

| 1 | chocolate | light brown | 4.75 |

| 5 | bubblegem | pink | 4.75 |

| 2 | chocolate | dark brown | 5.25 |

| 3 | strawberry | pink | 5.25 |

| 4 | chocolate | dark brown | 5.25 |

To sort in descending order, you can use an optional argument to sort. As the name implies, optional arguments don’t have to be used, but they can be used if you want to change the default behavior of a method.

By default, sort sorts in increasing order of the values in the specified column. To sort in decreasing order, use the optional argument descending=True.

1 | # Sort Price Descending |

| Flavor | Color | Price | |

|---|---|---|---|

| 2 | chocolate | dark brown | 5.25 |

| 3 | strawberry | pink | 5.25 |

| 4 | chocolate | dark brown | 5.25 |

| 1 | chocolate | light brown | 4.75 |

| 5 | bubblegem | pink | 4.75 |

| 0 | strawberry | pink | 3.55 |

Like loc, the drop method, the sort method leaves the original table unchanged.

Selecting Rows that Satisfy a Condition

The where method creates a new table consisting only of the rows that satisfy a given condition. In this section we will work with a very simple condition, which is that the value in a specified column must be equal to a value that we also specify. Thus the where method has two arguments.

The code in the cell below creates a table consisting only of the rows corresponding to chocolate cones.

1 | # Making boolean series for a cones |

| Flavor | Color | Price | |

|---|---|---|---|

| 0 | NaN | NaN | NaN |

| 1 | chocolate | light brown | 4.75 |

| 2 | chocolate | dark brown | 5.25 |

| 3 | NaN | NaN | NaN |

| 4 | chocolate | dark brown | 5.25 |

| 5 | NaN | NaN | NaN |

OR

1 | # Making boolean series for a cones |

| Flavor | Color | Price | |

|---|---|---|---|

| 1 | chocolate | light brown | 4.75 |

| 2 | chocolate | dark brown | 5.25 |

| 4 | chocolate | dark brown | 5.25 |

The arguments, separated by a comma, are the label of the column and the value we are looking for in that column. The where method can also be used when the condition that the rows must satisfy is more complicated. In those situations the call will be a little more complicated as well.

It is important to provide the value exactly. For example, if we specify Chocolate instead of chocolate, then where correctly finds no rows where the flavor is Chocolate.

1 | # Making boolean series for a cones |

| Flavor | Color | Price |

|---|

1 | # Making boolean series for a cones |

| Flavor | Color | Price | |

|---|---|---|---|

| 0 | NaN | NaN | NaN |

| 1 | NaN | NaN | NaN |

| 2 | NaN | NaN | NaN |

| 3 | NaN | NaN | NaN |

| 4 | NaN | NaN | NaN |

| 5 | NaN | NaN | NaN |

Like all the other table methods in this section, where leaves the original table unchanged.

Example: Salaries in the NBA

“The NBA is the highest paying professional sports league in the world,” reported CNN in March 2016. The table nba contains the salaries of all National Basketball Association players in 2015-2016.

Each row represents one player. The columns are:

| Column Label | Description |

|---|---|

PLAYER |

Player's name |

POSITION |

Player's position on team |

TEAM |

Team name |

SALARY |

Player's salary in 2015-2016, in millions of dollars |

The code for the positions is PG (Point Guard), SG (Shooting Guard), PF (Power Forward), SF (Small Forward), and C (Center). But what follows doesn’t involve details about how basketball is played.

The first row shows that Paul Millsap, Power Forward for the Atlanta Hawks, had a salary of almost $18.7 million in 2015-2016.

1 | import pandas as pd |

| RANK | PLAYER | POSITION | TEAM | SALARY ($M) | |

|---|---|---|---|---|---|

| 0 | 1 | Kobe Bryant | SF | Los Angeles Lakers | 25.000000 |

| 1 | 2 | Joe Johnson | SF | Brooklyn Nets | 24.894863 |

| 2 | 3 | LeBron James | SF | Cleveland Cavaliers | 22.970500 |

| 3 | 4 | Carmelo Anthony | SF | New York Knicks | 22.875000 |

| 4 | 5 | Dwight Howard | C | Houston Rockets | 22.359364 |

| ... | ... | ... | ... | ... | ... |

| 412 | 413 | Elliot Williams | SG | Memphis Grizzlies | 0.055722 |

| 413 | 414 | Phil Pressey | PG | Phoenix Suns | 0.055722 |

| 414 | 415 | Jordan McRae | SG | Phoenix Suns | 0.049709 |

| 415 | 416 | Cory Jefferson | PF | Phoenix Suns | 0.049709 |

| 416 | 417 | Thanasis Antetokounmpo | SF | New York Knicks | 0.030888 |

417 rows × 5 columns

By default, the first 20 lines of a table are displayed. You can use head to display table more or fewer from the first row. To display the entire table, set pd.set_option("display.max_rows", None), then call the table directly.

1 | # Get default max_rows to show |

1 | nba |

Fans of Stephen Curry can find his row by using loc.

1 | # Filter "Stephen Curry" |

| RANK | PLAYER | POSITION | TEAM | SALARY ($M) | |

|---|---|---|---|---|---|

| 56 | 57 | Stephen Curry | PG | Golden State Warriors | 11.370786 |

We can also create a new table called warriors consisting of just the data for the Golden State Warriors.

1 | # Filter "Golden State Warriors" |

| RANK | PLAYER | POSITION | TEAM | SALARY ($M) | |

|---|---|---|---|---|---|

| 27 | 28 | Klay Thompson | SG | Golden State Warriors | 15.501000 |

| 33 | 34 | Draymond Green | PF | Golden State Warriors | 14.260870 |

| 37 | 38 | Andrew Bogut | C | Golden State Warriors | 13.800000 |

| 55 | 56 | Andre Iguodala | SF | Golden State Warriors | 11.710456 |

| 56 | 57 | Stephen Curry | PG | Golden State Warriors | 11.370786 |

| 100 | 101 | Jason Thompson | PF | Golden State Warriors | 7.008475 |

| 127 | 128 | Shaun Livingston | PG | Golden State Warriors | 5.543725 |

| 177 | 178 | Harrison Barnes | SF | Golden State Warriors | 3.873398 |

| 178 | 179 | Marreese Speights | C | Golden State Warriors | 3.815000 |

| 236 | 237 | Leandro Barbosa | SG | Golden State Warriors | 2.500000 |

| 267 | 268 | Festus Ezeli | C | Golden State Warriors | 2.008748 |

| 312 | 313 | Brandon Rush | SF | Golden State Warriors | 1.270964 |

| 335 | 336 | Kevon Looney | SF | Golden State Warriors | 1.131960 |

| 402 | 403 | Anderson Varejao | PF | Golden State Warriors | 0.289755 |

The nba table is sorted in alphabetical order of the team names. To see how the players were paid in 2015-2016, it is useful to sort the data by salary. Remember that by default, the sorting is in increasing order.

1 | # Sort nba based on SALARY, ascending=True |

| RANK | PLAYER | POSITION | TEAM | SALARY ($M) | |

|---|---|---|---|---|---|

| 416 | 417 | Thanasis Antetokounmpo | SF | New York Knicks | 0.030888 |

| 415 | 416 | Cory Jefferson | PF | Phoenix Suns | 0.049709 |

| 414 | 415 | Jordan McRae | SG | Phoenix Suns | 0.049709 |

| 411 | 412 | Orlando Johnson | SG | Phoenix Suns | 0.055722 |

| 413 | 414 | Phil Pressey | PG | Phoenix Suns | 0.055722 |

| ... | ... | ... | ... | ... | ... |

| 4 | 5 | Dwight Howard | C | Houston Rockets | 22.359364 |

| 3 | 4 | Carmelo Anthony | SF | New York Knicks | 22.875000 |

| 2 | 3 | LeBron James | SF | Cleveland Cavaliers | 22.970500 |

| 1 | 2 | Joe Johnson | SF | Brooklyn Nets | 24.894863 |

| 0 | 1 | Kobe Bryant | SF | Los Angeles Lakers | 25.000000 |

417 rows × 5 columns

These figures are somewhat difficult to compare as some of these players changed teams during the season and received salaries from more than one team; only the salary from the last team appears in the table.

The CNN report is about the other end of the salary scale – the players who are among the highest paid in the world. To identify these players we can sort in descending order of salary and look at the top few rows.

1 | # Sort nba based on SALARY, ascending=False |

| RANK | PLAYER | POSITION | TEAM | SALARY ($M) | |

|---|---|---|---|---|---|

| 0 | 1 | Kobe Bryant | SF | Los Angeles Lakers | 25.000000 |

| 1 | 2 | Joe Johnson | SF | Brooklyn Nets | 24.894863 |

| 2 | 3 | LeBron James | SF | Cleveland Cavaliers | 22.970500 |

| 3 | 4 | Carmelo Anthony | SF | New York Knicks | 22.875000 |

| 4 | 5 | Dwight Howard | C | Houston Rockets | 22.359364 |

| ... | ... | ... | ... | ... | ... |

| 412 | 413 | Elliot Williams | SG | Memphis Grizzlies | 0.055722 |

| 413 | 414 | Phil Pressey | PG | Phoenix Suns | 0.055722 |

| 414 | 415 | Jordan McRae | SG | Phoenix Suns | 0.049709 |

| 415 | 416 | Cory Jefferson | PF | Phoenix Suns | 0.049709 |

| 416 | 417 | Thanasis Antetokounmpo | SF | New York Knicks | 0.030888 |

417 rows × 5 columns

Kobe Bryant, since retired, was the highest earning NBA player in 2015-2016.

R.I.P Kobe.

Data Types

Every value has a type, and the built-in type function returns the type of the result of any expression.

One type we have encountered already is a built-in function. Python indicates that the type is a builtin_function_or_method; the distinction between a function and a method is not important at this stage.

1 | type(abs) |

This chapter will explore many useful types of data.

Numbers

Computers are designed to perform numerical calculations, but there are some important details about working with numbers that every programmer working with quantitative data should know. Python (and most other programming languages) distinguishes between two different types of numbers:

- Integers are called

intvalues in the Python language. They can only represent whole numbers (negative, zero, or positive) that don’t have a fractional component. - Real numbers are called

floatvalues (or floating point values) in the Python language. They can represent whole or fractional numbers but have some limitations.

The type of a number is evident from the way it is displayed: int values have no decimal point and float values always have a decimal point.

1 | # Some int values |

1 | 1 + 3 |

1 | -1234567890000000000 |

1 | # Some float values |

1 | 3.0 |

When a float value is combined with an int value using some arithmetic operator, then the result is always a float value. In most cases, two integers combine to form another integer, but any number (int or float) divided by another will be a float value. Very large or very small float values are displayed using scientific notation.

1 | 1.5 + 2 |

1 | 3 / 1 |

1 | -12345678900000000000.0 |

The type function can be used to find the type of any number.

1 | type(3) |

1 | type(3 / 1) |

The type of an expression is the type of its final value. So, the type function will never indicate that the type of an expression is a name, because names are always evaluated to their assigned values.

1 | x = 3 |

1 | type(x + 2.5) |

More About Float Values

Float values are very flexible, but they do have limits.

- A float can represent extremely large and extremely small numbers. There are limits, but you will rarely encounter them.

- A float only represents 15 or 16 significant digits for any number; the remaining precision is lost. This limited precision is enough for the vast majority of applications.

- After combining float values with arithmetic, the last few digits may be incorrect. Small rounding errors are often confusing when first encountered.

The first limit can be observed in two ways. If the result of a computation is a very large number, then it is represented as infinite. If the result is a very small number, then it is represented as zero.

1 | 2e306 * 10 |

1 | 2e306 * 100 |

1 | 2e-322 / 10 |

1 | 2e-322 / 100 |

The second limit can be observed by an expression that involves numbers with more than 15 significant digits. These extra digits are discarded before any arithmetic is carried out.

1 | 0.6666666666666666 - 0.6666666666666666123456789 |

The third limit can be observed when taking the difference between two expressions that should be equivalent. For example, the expression 2 ** 0.5 computes the square root of 2, but squaring this value does not exactly recover 2.

1 | 2 ** 0.5 |

1 | (2 ** 0.5) * (2 ** 0.5) |

1 | (2 ** 0.5) * (2 ** 0.5) - 2 |

The final result above is 0.0000000000000004440892098500626, a number that is very close to zero. The correct answer to this arithmetic expression is 0, but a small error in the final significant digit appears very different in scientific notation. This behavior appears in almost all programming languages because it is the result of the standard way that arithmetic is carried out on computers.

Although float values are not always exact, they are certainly reliable and work the same way across all different kinds of computers and programming languages.

Strings

Much of the world’s data is text, and a piece of text represented in a computer is called a string. A string can represent a word, a sentence, or even the contents of every book in a library. Since text can include numbers (like this: 5) or truth values (True), a string can also describe those things.

The meaning of an expression depends both upon its structure and the types of values that are being combined. So, for instance, adding two strings together produces another string. This expression is still an addition expression, but it is combining a different type of value.

1 | "data" + "science" |

Addition is completely literal; it combines these two strings together without regard for their contents. It doesn’t add a space because these are different words; that’s up to the programmer (you) to specify.

1 | "data" + " " + "science" |

Single and double quotes can both be used to create strings: 'hi' and "hi" are identical expressions. Double quotes are often preferred because they allow you to include apostrophes inside of strings.

1 | "This won't work with a single-quoted string!" |

Why not? Try it out.

The str function returns a string representation of any value. Using this function, strings can be constructed that have embedded values.

1 | "That's " + str(1 + 1) + ' ' + str(True) |

String Methods

From an existing string, related strings can be constructed using string methods, which are functions that operate on strings. These methods are called by placing a dot after the string, then calling the function.

For example, the following method generates an uppercased version of a string.

1 | "loud".upper() |

Perhaps the most important method is replace, which replaces all instances of a substring within the string. The replace method takes two arguments, the text to be replaced and its replacement.

1 | 'hitchhiker'.replace('hi', 'ma') |

String methods can also be invoked using variable names, as long as those names are bound to strings. So, for instance, the following two-step process generates the word “degrade” starting from “train” by first creating “ingrain” and then applying a second replacement.

1 | s = "train" |

Note that the line t = s.replace(‘t’, ‘ing’) doesn’t change the string s, which is still “train”. The method call s.replace(‘t’, ‘ing’) just has a value, which is the string “ingrain”.

1 | s |

This is the first time we’ve seen methods, but methods are not unique to strings. As we will see shortly, other types of objects can have them.

Comparisons

Boolean values most often arise from comparison operators. Python includes a variety of operators that compare values. For example, 3 is larger than 1 + 1.

1 | 3 > 1 + 1 |

The value True indicates that the comparison is valid; Python has confirmed this simple fact about the relationship between 3 and 1+1. The full set of common comparison operators are listed below.

| Comparison | Operator | True example | False Example |

|---|---|---|---|

| Less than | < | 2 < 3 | 2 < 2 |

| Greater than | > | 3>2 | 3>3 |

| Less than or equal | <= | 2 <= 2 | 3 <= 2 |

| Greater or equal | >= | 3 >= 3 | 2 >= 3 |

| Equal | == | 3 == 3 | 3 == 2 |

| Not equal | != | 3 != 2 | 2 != 2 |

An expression can contain multiple comparisons, and they all must hold in order for the whole expression to be True. For example, we can express that 1+1 is between 1 and 3 using the following expression.

1 | 1 < 1 + 1 < 3 |

The average of two numbers is always between the smaller number and the larger number. We express this relationship for the numbers x and y below. You can try different values of x and y to confirm this relationship.

1 | x = 12 |

Strings can also be compared, and their order is alphabetical. A shorter string is less than a longer string that begins with the shorter string.

1 | "Dog" > "Catastrophe" > "Cat" |

Sequences

Sequences

Values can be grouped together into collections, which allows programmers to organize those values and refer to all of them with a single name. By grouping values together, we can write code that performs a computation on many pieces of data at once.

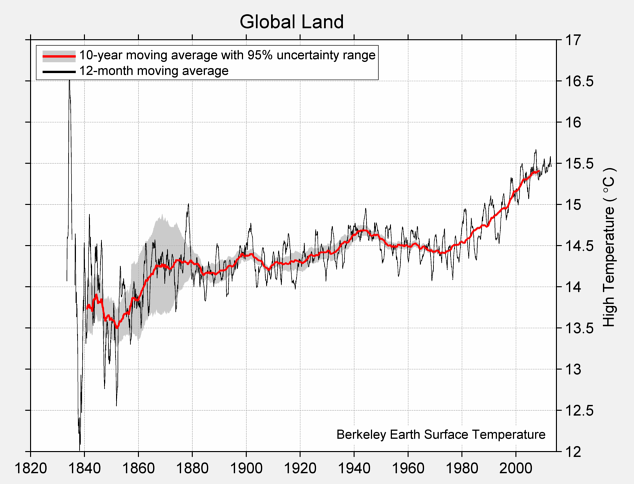

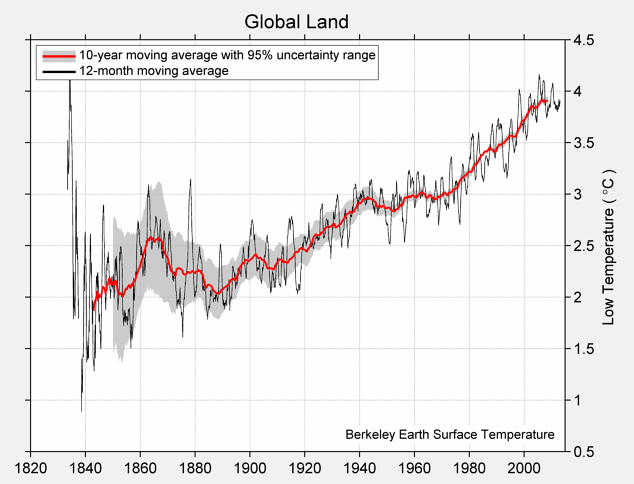

Calling the function np.array on several values places them into an array, which is a kind of sequential collection. Below, we collect four different temperatures into an array called highs. These are the estimated average daily high temperatures over all land on Earth (in degrees Celsius) for the decades surrounding 1850, 1900, 1950, and 2000, respectively, expressed as deviations from the average absolute high temperature between 1951 and 1980, which was 14.48 degrees.

1 | import numpy as np |

Collections allow us to pass multiple values into a function using a single name. For instance, the sum function computes the sum of all values in a collection, and the len function computes its length. (That’s the number of values we put in it.) Using them together, we can compute the average of a collection.

1 | sum(highs)/len(highs) |

The complete chart of daily high and low temperatures appears below.

Mean of Daily High Temperature

Mean of Daily Low Temperature

Arrays

While there are many kinds of collections in Python, we will work primarily with arrays in this class. We’ve already seen that the np.array function can be used to create arrays of numbers.

Arrays can also contain strings or other types of values, but a single array can only contain a single kind of data. (It usually doesn’t make sense to group together unlike data anyway.) For example:

1 | import numpy as np |

Returning to the temperature data, we create arrays of average daily high temperatures for the decades surrounding 1850, 1900, 1950, and 2000.

1 | baseline_high = 14.48 |

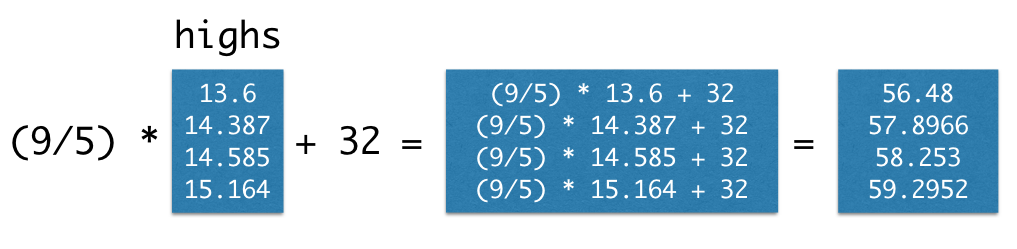

Arrays can be used in arithmetic expressions to compute over their contents. When an array is combined with a single number, that number is combined with each element of the array. Therefore, we can convert all of these temperatures to Fahrenheit by writing the familiar conversion formula.

1 | (9/5) * highs + 32 |

Arrays also have methods, which are functions that operate on the array values. The mean of a collection of numbers is its average value: the sum divided by the length. Each pair of parentheses in the examples below is part of a call expression; it’s calling a function with no arguments to perform a computation on the array called highs.

1 | highs.size |

1 | highs.sum() |

1 | highs.mean() |

Functions on Arrays

The numpy package, abbreviated np in programs, provides Python programmers with convenient and powerful functions for creating and manipulating arrays

1 | import numpy as np |

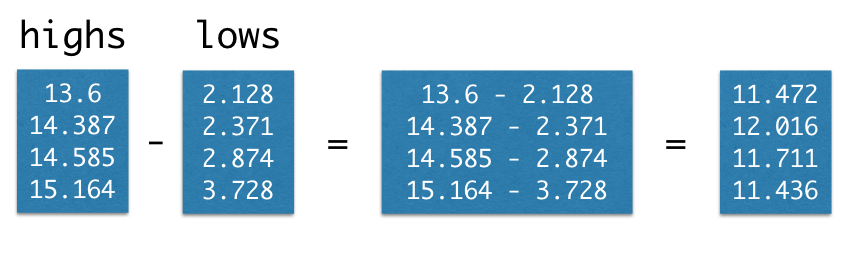

For example, the diff function computes the difference between each adjacent pair of elements in an array. The first element of the diff is the second element minus the first.

1 | np.diff(highs) |

1 | np.diff(np.array([4, 3, 2, 1])) |

The full Numpy reference lists these functions exhaustively, but only a small subset are used commonly for data processing applications. These are grouped into different packages within np. Learning this vocabulary is an important part of learning the Python language, so refer back to this list often as you work through examples and problems.

However, you don’t need to memorize these. Use this as a reference.

Each of these functions takes an array as an argument and returns a single value.

| Function | Description |

|---|---|

np.prod |

Multiply all elements together |

np.sum |

Add all elements together |

np.all |

Test whether all elements are true values (non-zero numbers are true) |

np.any |

Test whether any elements are true values (non-zero numbers are true) |

np.count_nonzero |

Count the number of non-zero elements |

Each of these functions takes an array as an argument and returns an array of values.

| Function | Description |

|---|---|

np.diff |

Difference between adjacent elements |

np.round |

Round each number to the nearest integer (whole number) |

np.cumprod |

A cumulative product: for each element, multiply all elements so far |

np.cumsum |

A cumulative sum: for each element, add all elements so far |

np.exp |

Exponentiate each element |

np.log |

Take the natural logarithm of each element |

np.sqrt |

Take the square root of each element |

np.sort |

Sort the elements |

Each of these functions takes an array of strings and returns an array.

| Function | Description |

|---|---|

np.char.lower |

Lowercase each element |

np.char.upper |

Uppercase each element |

np.char.strip |

Remove spaces at the beginning or end of each element |

np.char.isalpha |

Whether each element is only letters (no numbers or symbols) |

np.char.isnumeric |

Whether each element is only numeric (no letters) |

Each of these functions takes both an array of strings and a search string; each returns an array.

| Function | Description |

|---|---|

np.char.count |

Count the number of times a search string appears among the elements of an array |

np.char.find |

The position within each element that a search string is found first |

np.char.rfind |

The position within each element that a search string is found last |

np.char.startswith |

Whether each element starts with the search string |

Ranges

A range is an array of numbers in increasing or decreasing order, each separated by a regular interval. Ranges are useful in a surprisingly large number of situations, so it’s worthwhile to learn about them.

Ranges are defined using the np.arange function, which takes either one, two, or three arguments: a start, and stop, and a step.

If you pass one argument to np.arange, this becomes the stop value, with start=0, step=1 assumed. Two arguments give the start and stop with step=1 assumed. Three arguments give the start, stop and step explicitly.

A range always includes its start value, but does not include its end value. It counts up by step, and it stops before it gets to the end.

np.arange(stop): An array starting with 0 of increasing consecutive integers, stopping before stop.

1 | import numpy as np |

Notice how the array starts at 0 and goes only up to 4, not to the end value of 5.

np.arange(start=0, stop): An array of consecutive increasing integers from start, stopping before stop.

- The following two examples are the same.

1 | np.arange(3, 9) |

np.arange(start=0, stop, step=1): A range with a difference of step between each pair of consecutive values, starting from start and stopping before end.

- The following two examples are the same.

1 | np.arange(3, 30, 5) |

This array starts at 3, then takes a step of 5 to get to 8, then another step of 5 to get to 13, and so on.

When you specify a step, the start, end, and step can all be either positive or negative and may be whole numbers or fractions.

- The following two examples are the same.

1 | np.arange(1.5, -2, -0.5) |

Example: Leibniz’s formula for $\pi$

The great German mathematician and philosopher Gottfried Wilhelm Leibniz (1646 - 1716) discovered a wonderful formula for $\pi$ as an infinite sum of simple fractions. The formula is

$$\pi = 4 \cdot \left(1 - \frac{1}{3} + \frac{1}{5} - \frac{1}{7} + \frac{1}{9} - \frac{1}{11} + \dots\right)$$

Though some math is needed to establish this, we can use arrays to convince ourselves that the formula works. Let’s calculate the first 5000 terms of Leibniz’s infinite sum and see if it is close to $\pi$.

$$4 \cdot \left(1 - \frac{1}{3} + \frac{1}{5} - \frac{1}{7} + \frac{1}{9} - \frac{1}{11} + \dots - \frac{1}{9999} \right)$$

We will calculate this finite sum by adding all the positive terms first and then subtracting the sum of all the negative terms[1] :

$$4 \cdot \left( \left(1 + \frac{1}{5} + \frac{1}{9} + \dots + \frac{1}{9997} \right) - \left(\frac{1}{3} + \frac{1}{7} + \frac{1}{11} + \dots + \frac{1}{9999} \right) \right)$$