AWS Aurora

Introduction

MySQL and PostgreSQL-compatible relational database built for the cloud. Performance and availability of commercial-grade databases at 1/10th the cost.

Amazon Aurora is a MySQL and PostgreSQL-compatible relational database built for the cloud that combines the performance and availability of traditional enterprise databases with the simplicity and cost-effectiveness of open source databases.

Amazon Aurora is up to five times faster than standard MySQL databases and three times faster than standard PostgreSQL databases. It provides the security, availability, and reliability of commercial databases at 1/10th the cost. Amazon Aurora is fully managed by Amazon Relational Database Service (RDS), which automates time-consuming administration tasks like hardware provisioning, database setup, patching, and backups.

Amazon Aurora features a distributed, fault-tolerant, self-healing storage system that auto-scales up to 128TB per database instance. It delivers high performance and availability with up to 15 low-latency read replicas, point-in-time recovery, continuous backup to Amazon S3, and replication across three Availability Zones (AZs).

Visit the Amazon RDS Management Console to create your first Aurora database instance and start migrating your MySQL and PostgreSQL databases.

- Performance

- CPU

- Memory

- Storage

- IOPS

- Manageability

- Security

- Access Control

- Encryption

- Availability and Durability

- Automated Backup

- Manual Snapshot

- Multi-AZs

- Read Replica

- Disaster Recovery

- Migration

- Monitoring

Amazon Aurora connection management

Types of Aurora endpoints

- Cluster endpoint: A cluster endpoint (or writer endpoint) for an Aurora DB cluster connects to the current primary DB instance for that DB cluster. Each Aurora DB cluster has one cluster endpoint and one primary DB instance. The cluster endpoint is the only endpoint that always provides write capability.

mydbcluster.cluster-123456789012.us-east-1.rds.amazonaws.com:3306

- Reader endpoint: A reader endpoint for an Aurora DB cluster provides load-balancing support for read-only connections to the DB cluster. Each Aurora DB cluster has one reader endpoint. If the cluster contains one or more Aurora Replicas, the reader endpoint load-balances each connection request among the available Aurora Replicas. It doesn’t load-balance individual queries. If you want to load-balance each query to distribute the read workload for a DB cluster, open a new connection to the reader endpoint for each query. If your cluster contains only a primary instance and no Aurora Replicas, the reader endpoint connects to the primary instance. In that case, you can perform write operations through this endpoint.

mydbcluster.cluster-ro-123456789012.us-east-1.rds.amazonaws.com:3306

- Custom endpoint: A custom endpoint for an Aurora cluster represents a set of DB instances that you choose.

myendpoint.cluster-custom-123456789012.us-east-1.rds.amazonaws.com:3306

- Instance endpoint: An instance endpoint connects to a specific DB instance within an Aurora cluster. The instance endpoint provides direct control over connections to the DB cluster, for scenarios where using the cluster endpoint or reader endpoint might not be appropriate. For example, your client application might require more fine-grained load balancing based on workload type. In this case, you can configure multiple clients to connect to different Aurora Replicas in a DB cluster to distribute read workloads.

mydbinstance.123456789012.us-east-1.rds.amazonaws.com:3306
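The four endpoint types can be told apart by the second label of the hostname, as the examples above suggest. The following is a minimal sketch that relies only on the naming patterns shown in those examples; the function name `classify_aurora_endpoint` is hypothetical and not part of any AWS SDK.

```python
def classify_aurora_endpoint(endpoint: str) -> str:
    """Return 'cluster', 'reader', 'custom', or 'instance' for an endpoint string."""
    host = endpoint.split(":", 1)[0]      # drop the optional ":port" suffix
    labels = host.split(".")
    if len(labels) < 2:
        raise ValueError(f"not an Aurora endpoint: {endpoint!r}")
    second = labels[1]                    # the label right after the resource name
    # Check the more specific prefixes first, because both also start with "cluster-".
    if second.startswith("cluster-ro-"):
        return "reader"
    if second.startswith("cluster-custom-"):
        return "custom"
    if second.startswith("cluster-"):
        return "cluster"
    return "instance"
```

A client could use such a check to refuse to send writes to anything other than the cluster endpoint.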

Performance

Managing Amazon Aurora MySQL

Managing performance and scaling for Amazon Aurora MySQL

| Instance class | max_connections default value |
|---|---|
| db.t2.small | 45 |
| db.t2.medium | 90 |
| db.t3.small | 45 |
| db.t3.medium | 90 |
| db.r3.large | 1000 |
| db.r3.xlarge | 2000 |
| db.r3.2xlarge | 3000 |
| db.r3.4xlarge | 4000 |
| db.r3.8xlarge | 5000 |
| db.r4.large | 1000 |
| db.r4.xlarge | 2000 |
| db.r4.2xlarge | 3000 |
| db.r4.4xlarge | 4000 |
| db.r4.8xlarge | 5000 |
| db.r4.16xlarge | 6000 |
| db.r5.large | 1000 |
| db.r5.xlarge | 2000 |
| db.r5.2xlarge | 3000 |
| db.r5.4xlarge | 4000 |
| db.r5.8xlarge | 5000 |
| db.r5.12xlarge | 6000 |
| db.r5.16xlarge | 6000 |
| db.r5.24xlarge | 7000 |

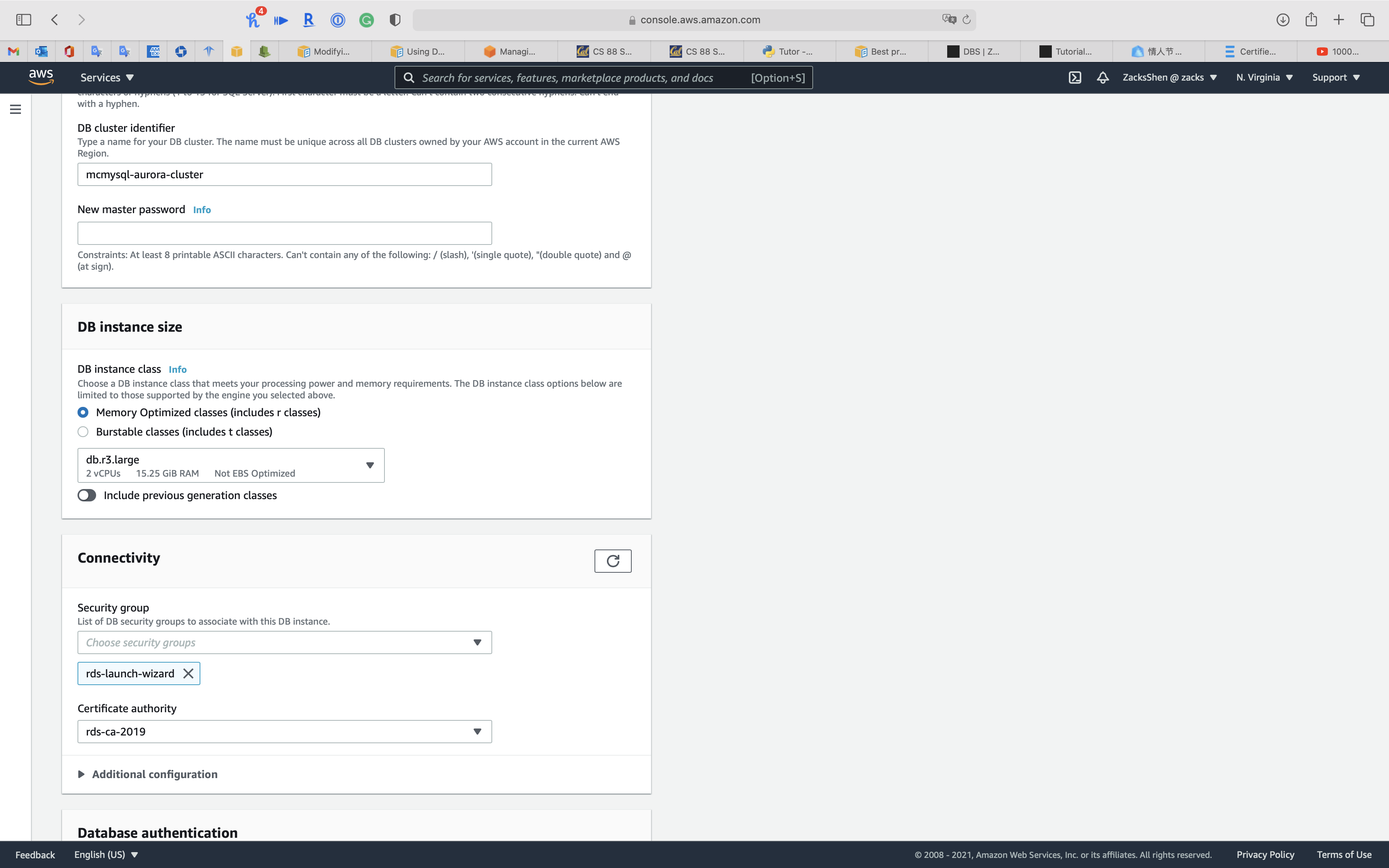

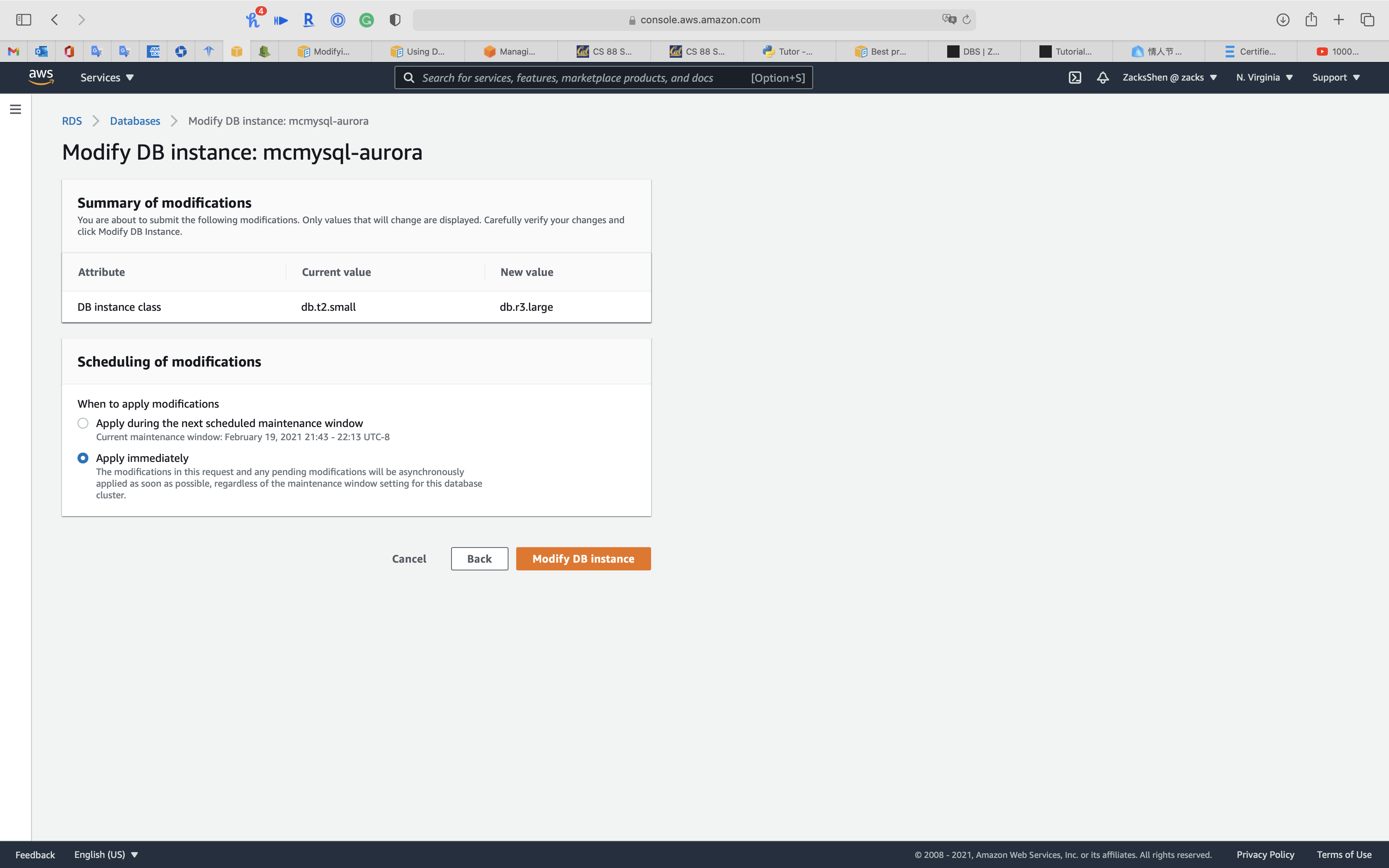

Resize Node

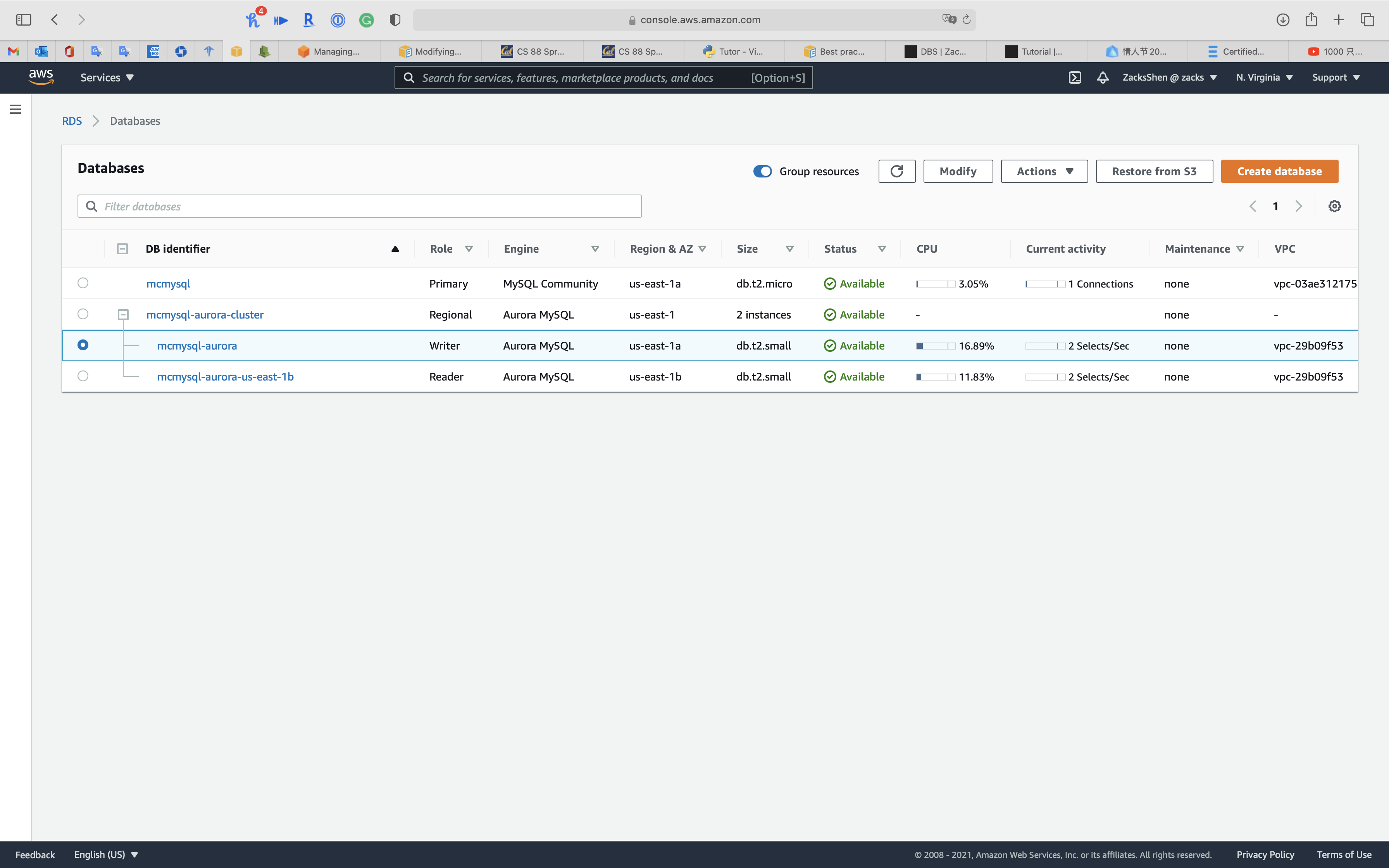

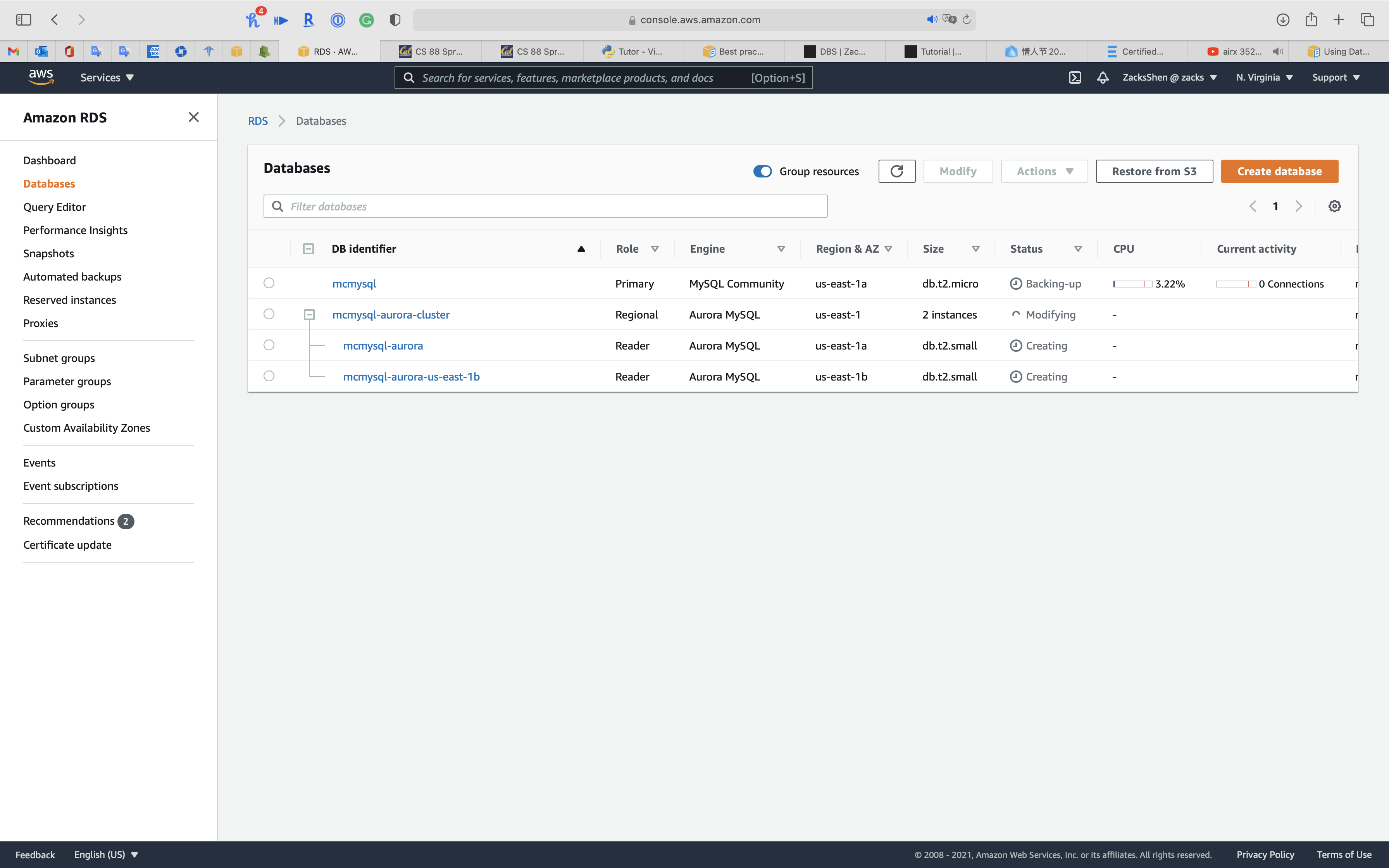

Service -> RDS -> Databases

Select one node of your Aurora cluster, then click on Modify

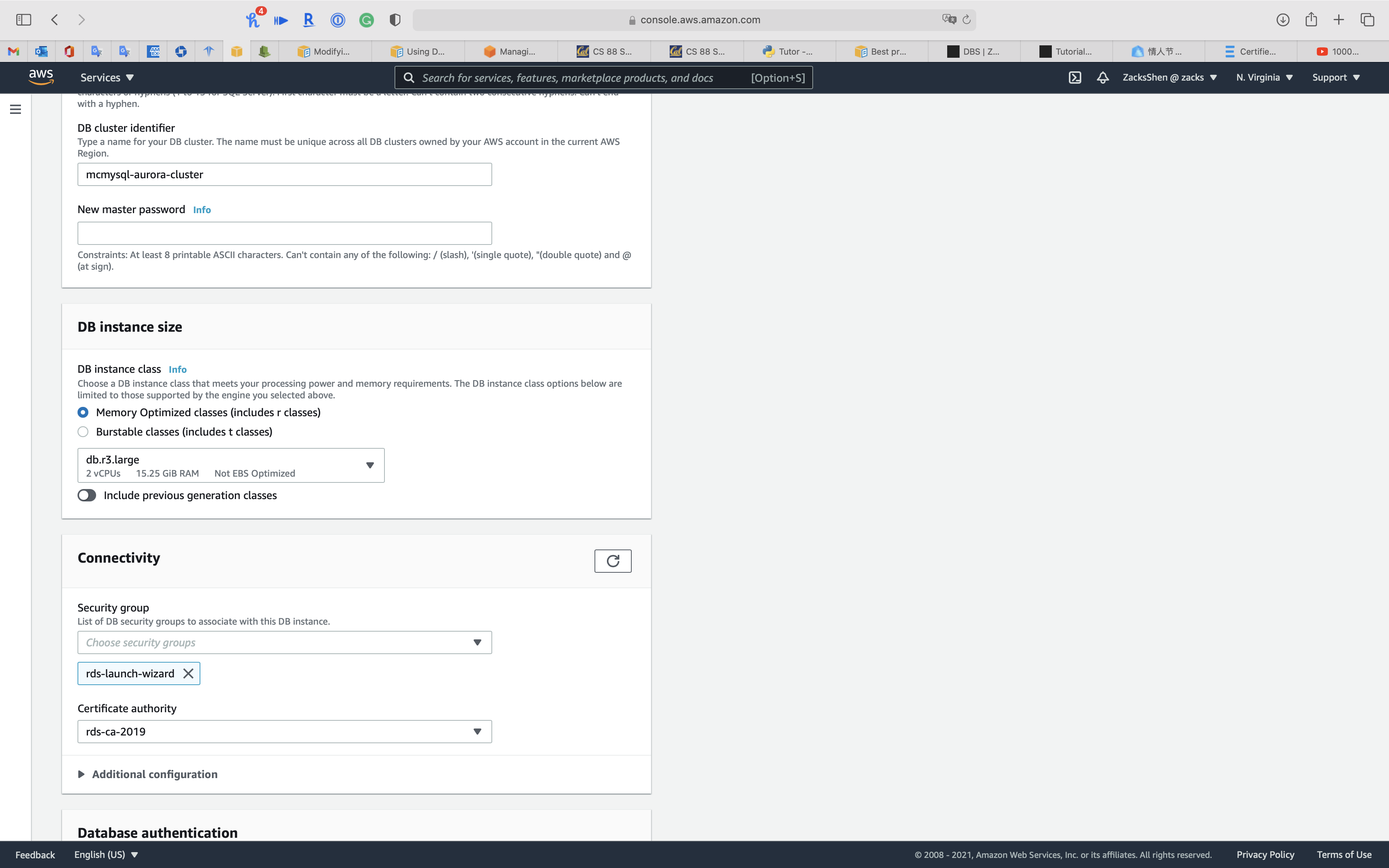

Under DB instance size

Select a Memory Optimized class (the r classes) for demanding workloads. Note that the t series does not support Database Activity Streams through Kinesis.

Select db.r3.large

Click on Continue

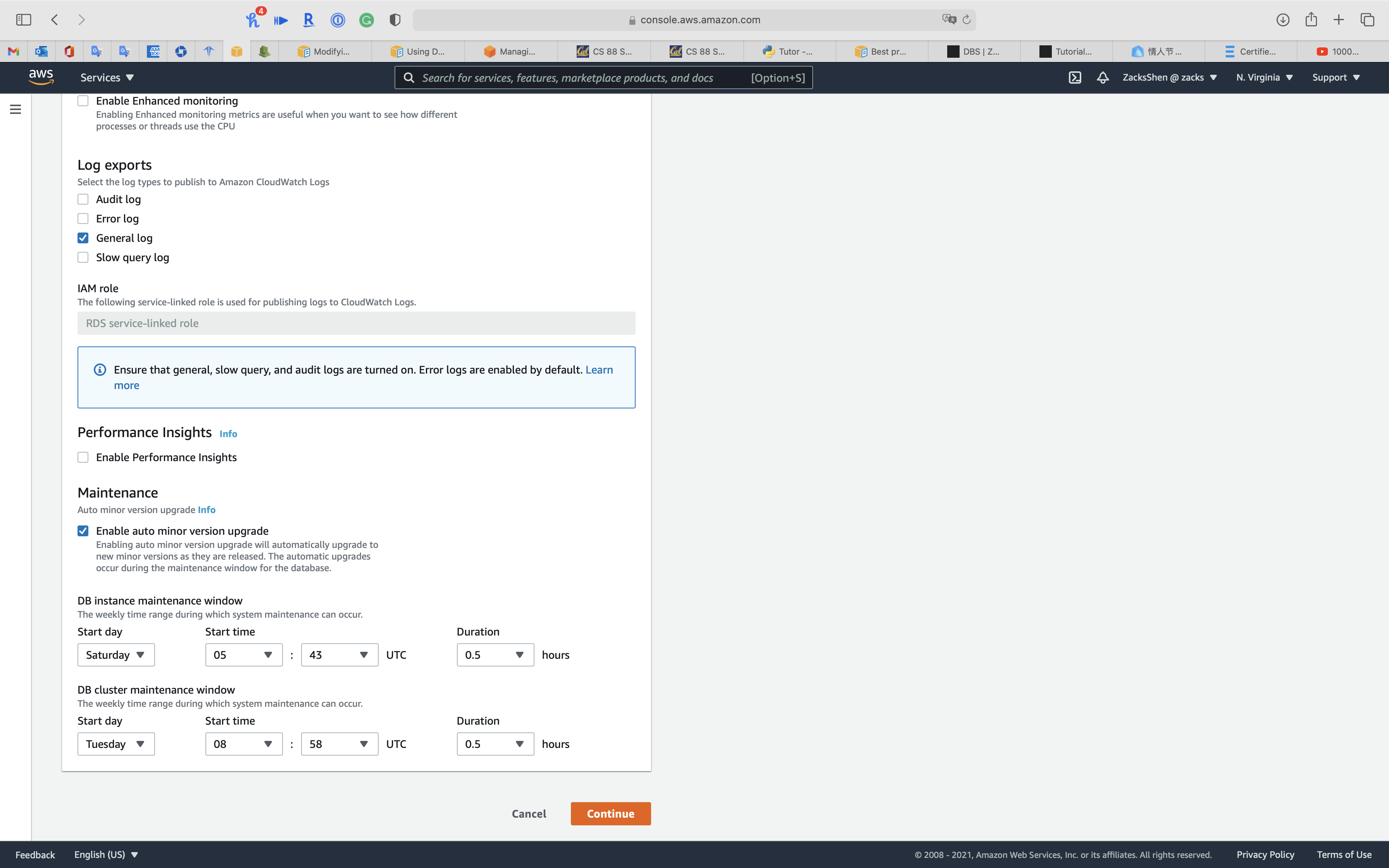

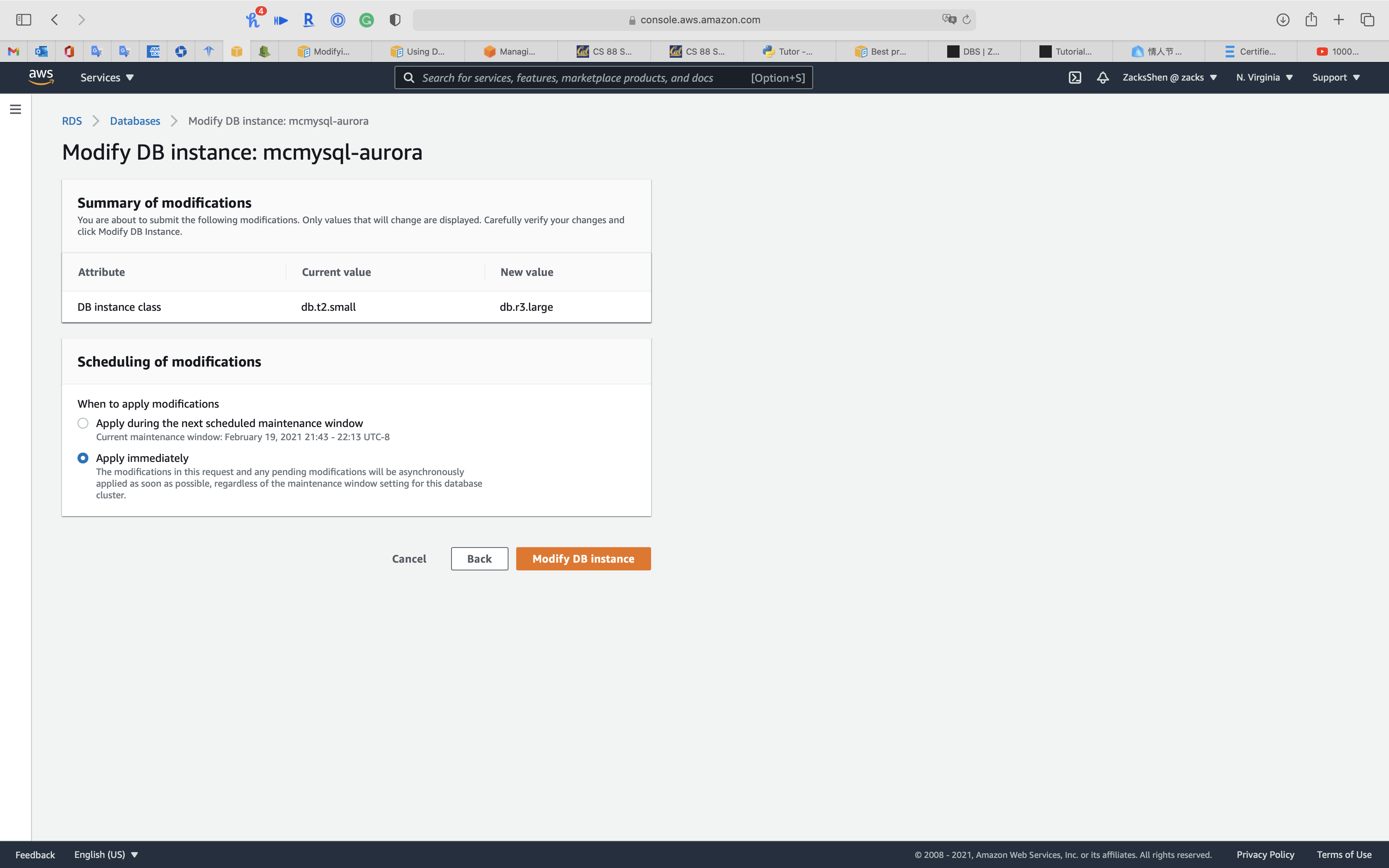

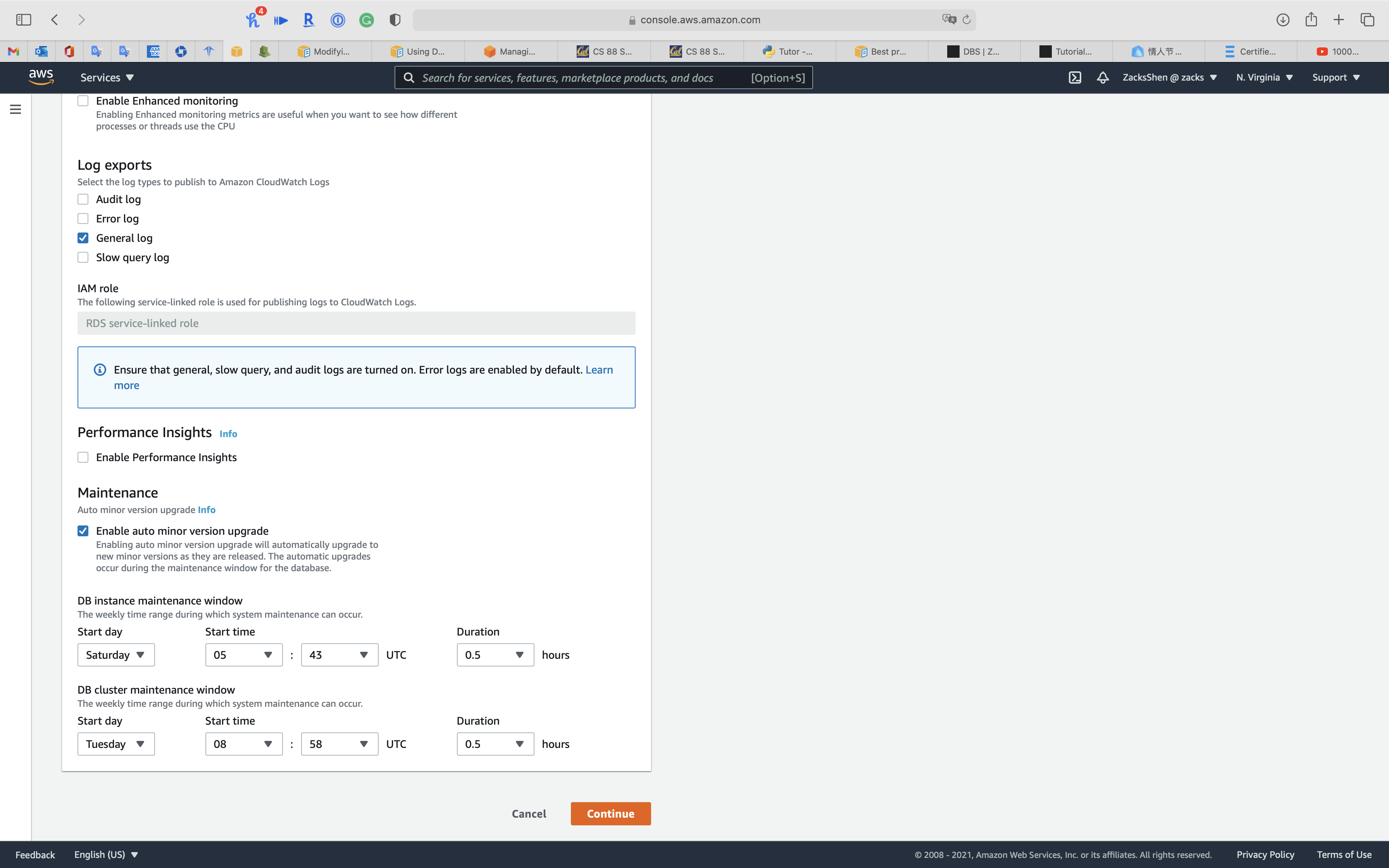

Select Apply immediately

Click on Modify DB instance
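The same resize can be scripted. The sketch below assumes the third-party boto3 SDK is installed and credentials are configured; the helper names and the default Region are hypothetical. The request is built in a separate function so that it can be inspected without calling AWS.

```python
def build_resize_request(instance_id: str, instance_class: str,
                         apply_immediately: bool = True) -> dict:
    """Build the keyword arguments for the RDS ModifyDBInstance call."""
    return {
        "DBInstanceIdentifier": instance_id,
        "DBInstanceClass": instance_class,      # for example "db.r3.large"
        "ApplyImmediately": apply_immediately,  # mirrors "Apply immediately" in the console
    }


def resize_instance(instance_id: str, instance_class: str,
                    region: str = "us-east-1") -> dict:
    """Send the resize request. Requires boto3 and valid AWS credentials."""
    import boto3  # imported lazily so the builder above works without the SDK
    rds = boto3.client("rds", region_name=region)
    return rds.modify_db_instance(**build_resize_request(instance_id, instance_class))
```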

Manageability

Managing Aurora DB Cluster

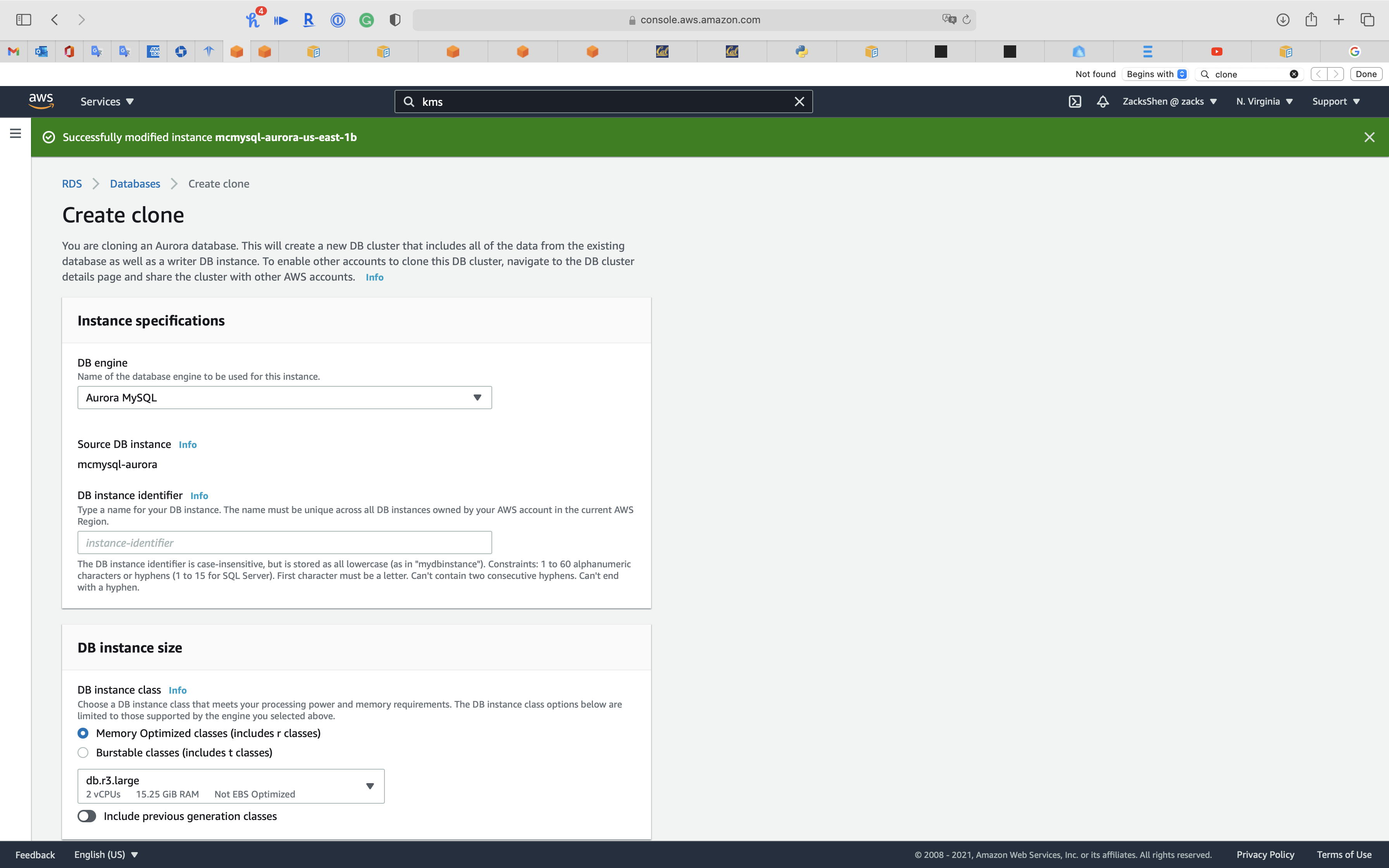

Cloning an Aurora DB cluster volume

Using the Aurora cloning feature, you can quickly and cost-effectively create a new cluster containing a duplicate of an Aurora cluster volume and all its data. We refer to the new cluster and its associated cluster volume as a clone. Creating a clone is faster and more space-efficient than physically copying the data using a different technique such as restoring a snapshot.
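Through the RDS API, a clone is requested as a point-in-time restore with the copy-on-write restore type. The following is a minimal sketch assuming boto3 is available; the helper names and identifiers are hypothetical. The new cluster has no DB instances until you add one in a separate call.

```python
def build_clone_request(source_cluster_id: str, clone_cluster_id: str) -> dict:
    """Build the keyword arguments for RestoreDBClusterToPointInTime as a clone."""
    return {
        "SourceDBClusterIdentifier": source_cluster_id,
        "DBClusterIdentifier": clone_cluster_id,
        "RestoreType": "copy-on-write",   # clone instead of a full physical copy
        "UseLatestRestorableTime": True,
    }


def clone_cluster(source_cluster_id: str, clone_cluster_id: str,
                  region: str = "us-east-1") -> dict:
    """Create the clone. Requires boto3 and valid AWS credentials."""
    import boto3
    rds = boto3.client("rds", region_name=region)
    return rds.restore_db_cluster_to_point_in_time(
        **build_clone_request(source_cluster_id, clone_cluster_id)
    )
```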

Managing Amazon Aurora MySQL

Testing Amazon Aurora using fault injection queries

You can test the fault tolerance of your Amazon Aurora DB cluster by using fault injection queries. Fault injection queries are issued as SQL commands to an Amazon Aurora instance and they enable you to schedule a simulated occurrence of one of the following events:

- A crash of a writer or reader DB instance

- A failure of an Aurora Replica

- A disk failure

- Disk congestion

When a fault injection query specifies a crash, it forces a crash of the Aurora DB instance. The other fault injection queries result in simulations of failure events, but don’t cause the event to occur. When you submit a fault injection query, you also specify how long the failure event simulation lasts.

You can submit a fault injection query to one of your Aurora Replica instances by connecting to the endpoint for the Aurora Replica. For more information, see Amazon Aurora connection management.

Backtracking an Aurora DB cluster

With Amazon Aurora with MySQL compatibility, you can backtrack a DB cluster to a specific time, without restoring data from a backup.

Overview of backtracking

Backtracking “rewinds” the DB cluster to the time you specify. Backtracking is not a replacement for backing up your DB cluster so that you can restore it to a point in time. However, backtracking provides the following advantages over traditional backup and restore:

- You can easily undo mistakes. If you mistakenly perform a destructive action, such as a DELETE without a WHERE clause, you can backtrack the DB cluster to a time before the destructive action with minimal interruption of service.

- You can backtrack a DB cluster quickly. Restoring a DB cluster to a point in time launches a new DB cluster and restores it from backup data or a DB cluster snapshot, which can take hours. Backtracking a DB cluster doesn’t require a new DB cluster and rewinds the DB cluster in minutes.

- You can explore earlier data changes. You can repeatedly backtrack a DB cluster back and forth in time to help determine when a particular data change occurred. For example, you can backtrack a DB cluster three hours and then backtrack forward in time one hour. In this case, the backtrack time is two hours before the original time.
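The back-and-forth arithmetic in the last example can be checked with a few lines of Python. This is only a sketch of the time calculation; the helper names are hypothetical, and the actual rewind would be requested through the RDS API (boto3 exposes it as `backtrack_db_cluster` with a `BacktrackTo` timestamp).

```python
from datetime import datetime, timedelta, timezone
from typing import Sequence


def backtrack_target(original_time: datetime, hour_offsets: Sequence[float]) -> datetime:
    """Apply successive backtracks; negative offsets go back, positive go forward."""
    target = original_time
    for offset in hour_offsets:
        target += timedelta(hours=offset)
    return target


def backtrack_cluster(cluster_id: str, target: datetime, region: str = "us-east-1") -> dict:
    """Rewind the cluster to the target time. Requires boto3 and AWS credentials."""
    import boto3
    rds = boto3.client("rds", region_name=region)
    return rds.backtrack_db_cluster(DBClusterIdentifier=cluster_id, BacktrackTo=target)


# Three hours back, then one hour forward: two hours before the original time.
start = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
net_target = backtrack_target(start, [-3, 1])
```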

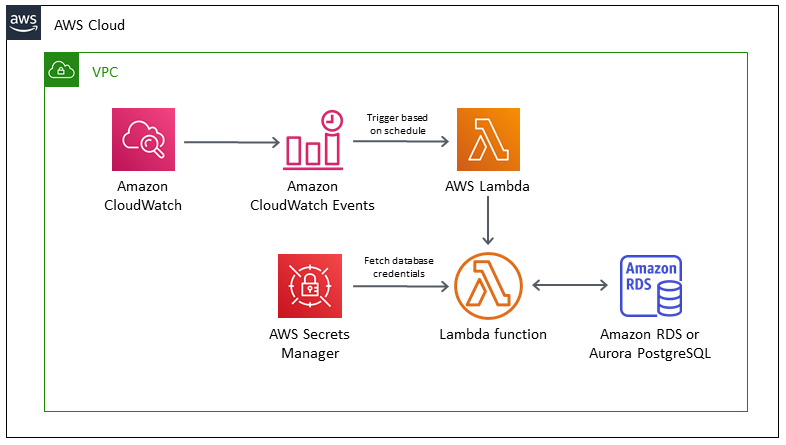

Cron job

Schedule jobs for Amazon RDS and Aurora PostgreSQL using Lambda and Secrets Manager

Security

Access Control

You can authenticate to your DB cluster using AWS IAM database authentication. With this authentication method, you don’t need to use a password when you connect to a DB cluster. Instead, you use an authentication token that expires 15 minutes after creation.

- Enable IAM database authentication on the Aurora cluster.

- Create a database user for each team member without a password.

- Attach an IAM policy to each team member’s IAM user account that grants the connect privilege using their database user account.
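The steps above can be sketched as follows. The policy uses the `rds-db:connect` action and the `rds-db` ARN format; the account ID, cluster resource ID, and user names are placeholders, and the helper names are hypothetical. Token generation assumes boto3 is installed.

```python
def build_connect_policy(region: str, account_id: str,
                         cluster_resource_id: str, db_user: str) -> dict:
    """Build an IAM policy document that lets one database user connect."""
    arn = f"arn:aws:rds-db:{region}:{account_id}:dbuser:{cluster_resource_id}/{db_user}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": ["rds-db:connect"], "Resource": [arn]}
        ],
    }


def generate_auth_token(host: str, port: int, db_user: str, region: str) -> str:
    """Return a short-lived token to use in place of a password. Requires boto3."""
    import boto3
    rds = boto3.client("rds", region_name=region)
    return rds.generate_db_auth_token(
        DBHostname=host, Port=port, DBUsername=db_user, Region=region
    )

# The database user itself is created without a password, for example on
# Aurora MySQL (statement as given in the AWS documentation; verify for your version):
#   CREATE USER 'jane_doe' IDENTIFIED WITH AWSAuthenticationPlugin AS 'RDS';
```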

Encryption

SSL/TLS

Using SSL/TLS to encrypt a connection to a DB cluster

Security with Amazon Aurora MySQL

Amazon Virtual Private Cloud VPCs and Amazon Aurora

Updating applications to connect to Aurora MySQL DB clusters using new SSL/TLS certificates

How do I troubleshoot problems connecting to Amazon Aurora?

How Does SSL Work? | SSL Certificates and TLS

When the require_secure_transport parameter is set to ON for a DB cluster, a database client can connect to it if it can establish an encrypted connection. Otherwise, an error message similar to the following is returned to the client:

MySQL Error 3159 (HY000): Connections using insecure transport are prohibited while --require_secure_transport=ON.

To get a root certificate that works for all AWS Regions, excluding opt-in AWS Regions, download it from https://s3.amazonaws.com/rds-downloads/rds-ca-2019-root.pem

This root certificate is a trusted root entity and should work in most cases but might fail if your application doesn’t accept certificate chains. If your application doesn’t accept certificate chains, download the AWS Region–specific certificate from the list of intermediate certificates found later in this section.

To get a certificate bundle that contains both the intermediate and root certificates, download from https://s3.amazonaws.com/rds-downloads/rds-combined-ca-bundle.pem

If your application is on Microsoft Windows and requires a PKCS7 file, you can download the PKCS7 certificate bundle, which contains both the intermediate and root certificates, from https://s3.amazonaws.com/rds-downloads/rds-combined-ca-bundle.p7b
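On the client side, the downloaded bundle is typically handed to the TLS layer as a trusted CA file. The sketch below uses only Python’s standard `ssl` module; the function name is hypothetical, and how the resulting context (or the bundle path) is passed to a database driver depends on the driver, so check its documentation.

```python
import ssl
from typing import Optional


def make_tls_context(ca_bundle_path: Optional[str] = None) -> ssl.SSLContext:
    """Create a client TLS context that verifies the server certificate.

    ca_bundle_path is the local path of a downloaded bundle such as
    rds-combined-ca-bundle.pem; when omitted, the system trust store is used.
    """
    context = ssl.create_default_context(cafile=ca_bundle_path)
    context.check_hostname = True            # hostname must match the certificate
    context.verify_mode = ssl.CERT_REQUIRED  # reject unverifiable certificates
    return context
```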

How does SSL/TLS work?

These are the essential principles to grasp for understanding how SSL/TLS works:

- Secure communication begins with a TLS handshake, in which the two communicating parties open a secure connection and exchange the public key

- During the TLS handshake, the two parties generate session keys, and the session keys encrypt and decrypt all communications after the TLS handshake

- Different session keys are used to encrypt communications in each new session

- TLS ensures that the party on the server side, or the website the user is interacting with, is actually who they claim to be

- TLS also ensures that data has not been altered, since a message authentication code (MAC) is included with transmissions

Symmetric encryption with session keys

Unlike asymmetric encryption, in symmetric encryption the two parties in a conversation use the same key. After the TLS handshake, both sides use the same session keys for encryption. Once session keys are in use, the public and private keys are not used anymore. Session keys are temporary keys that are not used again once the session is terminated. A new, random set of session keys will be created for the next session.

What is an SSL certificate?

An SSL certificate is a file installed on a website’s origin server. It’s simply a data file containing the public key and the identity of the website owner, along with other information. Without an SSL certificate, a website’s traffic can’t be encrypted with TLS.

Technically, any website owner can create their own SSL certificate, and such certificates are called self-signed certificates. However, browsers do not consider self-signed certificates to be as trustworthy as SSL certificates issued by a certificate authority.

How does a website get an SSL certificate?

Website owners need to obtain an SSL certificate from a certificate authority, and then install it on their web server (often a web host can handle this process). A certificate authority is an outside party who can confirm that the website owner is who they say they are. They keep a copy of the certificates they issue.

Availability and Durability

Automated Backup

Manual Snapshot

Multi-AZs

Read Replica & Failover

Overview

Customizable failover order for Amazon Aurora read replicas

Managing an Amazon Aurora DB cluster

High availability for Amazon Aurora

Adding Aurora replicas to a DB cluster

Customizable failover order for Amazon Aurora read replicas

Amazon Aurora now allows you to assign a promotion priority tier to each instance on your database cluster. The priority tiers give you more control over replica promotion during failovers. When the primary instance fails, Amazon RDS will promote the replica with the highest priority to primary. You can assign lower priority tiers to replicas that you don’t want promoted to the primary instance.

High availability for Aurora DB instances

For a cluster using single-master replication, after you create the primary instance, you can create up to 15 read-only Aurora Replicas. The Aurora Replicas are also known as reader instances.

During day-to-day operations, you can offload some of the work for read-intensive applications by using the reader instances to process SELECT queries. When a problem affects the primary instance, one of these reader instances takes over as the primary instance. This mechanism is known as failover.

High availability across AWS Regions with Aurora global databases

For high availability across multiple AWS Regions, you can set up Aurora global databases. Each Aurora global database spans multiple AWS Regions, enabling low latency global reads and disaster recovery from outages across an AWS Region. Aurora automatically handles replicating all data and updates from the primary AWS Region to each of the secondary Regions.

Fault tolerance for an Aurora DB cluster

An Aurora DB cluster is fault tolerant by design. The cluster volume spans multiple Availability Zones in a single AWS Region, and each Availability Zone contains a copy of the cluster volume data. This functionality means that your DB cluster can tolerate a failure of an Availability Zone without any loss of data and only a brief interruption of service.

If the primary instance in a DB cluster using single-master replication fails, Aurora automatically fails over to a new primary instance in one of two ways:

- By promoting an existing Aurora Replica to the new primary instance

- By creating a new primary instance

If the DB cluster has one or more Aurora Replicas, then an Aurora Replica is promoted to the primary instance during a failure event. A failure event results in a brief interruption, during which read and write operations fail with an exception. However, service is typically restored in less than 120 seconds, and often less than 60 seconds. To increase the availability of your DB cluster, we recommend that you create one or more Aurora Replicas in two or more different Availability Zones.

You can customize the order in which your Aurora Replicas are promoted to the primary instance after a failure by assigning each replica a priority. Priorities range from 0 for the first priority to 15 for the last priority. If the primary instance fails, Amazon RDS promotes the Aurora Replica with the highest priority (the lowest tier number) to the new primary instance. You can modify the priority of an Aurora Replica at any time. Modifying the priority doesn’t trigger a failover.

More than one Aurora Replica can share the same priority, resulting in promotion tiers. If two or more Aurora Replicas share the same priority, then Amazon RDS promotes the replica that is largest in size. If two or more Aurora Replicas share the same priority and size, then Amazon RDS promotes an arbitrary replica in the same promotion tier.
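The selection rule described above (lowest tier number first, then largest size, then an arbitrary pick) can be modeled in a few lines. This is a sketch of the rule only, not how Amazon RDS implements it; the `Replica` type is hypothetical, and `memory_gib` is an assumed stand-in for instance size.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Replica:
    name: str
    tier: int          # promotion tier, 0 (first) to 15 (last)
    memory_gib: float  # assumed proxy for "size"


def pick_promotion_candidate(replicas: Sequence[Replica]) -> Optional[Replica]:
    """Return the replica this rule would promote, or None if there are no replicas."""
    if not replicas:
        return None  # no replicas: the primary instance is recreated instead
    for replica in replicas:
        if not 0 <= replica.tier <= 15:
            raise ValueError(f"tier out of range for {replica.name}")
    # Lowest tier wins; within a tier the largest wins; remaining ties keep list order.
    return min(replicas, key=lambda r: (r.tier, -r.memory_gib))
```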

If the DB cluster doesn’t contain any Aurora Replicas, then the primary instance is recreated during a failure event. A failure event results in an interruption during which read and write operations fail with an exception. Service is restored when the new primary instance is created, which typically takes less than 10 minutes. Promoting an Aurora Replica to the primary instance is much faster than creating a new primary instance.

Suppose that the primary instance in your cluster is unavailable because of an outage that affects an entire AZ. In this case, the way to bring a new primary instance online depends on whether your cluster uses a multi-AZ configuration. If the cluster contains any reader instances in other AZs, Aurora uses the failover mechanism to promote one of those reader instances to be the new primary instance. If your provisioned cluster only contains a single DB instance, or if the primary instance and all reader instances are in the same AZ, you must manually create one or more new DB instances in another AZ. If your cluster uses Aurora Serverless, Aurora automatically creates a new DB instance in another AZ. However, this process involves a host replacement and thus takes longer than a failover.

Adding Aurora replicas to a DB cluster

An Aurora DB cluster with single-master replication has one primary DB instance and up to 15 Aurora Replicas. The primary DB instance supports read and write operations, and performs all data modifications to the cluster volume. Aurora Replicas connect to the same storage volume as the primary DB instance, but support read operations only. You use Aurora Replicas to offload read workloads from the primary DB instance.

- Priority: Choose a failover priority for the instance. If you don’t select a value, the default is tier-1. This priority determines the order in which Aurora Replicas are promoted when recovering from a primary instance failure.

Fast recovery after failover with cluster cache management for Aurora PostgreSQL

For fast recovery of the writer DB instance in your Aurora PostgreSQL clusters if there’s a failover, use cluster cache management for Amazon Aurora PostgreSQL. Cluster cache management ensures that application performance is maintained if there’s a failover.

In a typical failover situation, you might see a temporary but large performance degradation after failover. This degradation occurs because when the failover DB instance starts, the buffer cache is empty. An empty cache is also known as a cold cache. A cold cache degrades performance because the DB instance has to read from the slower disk, instead of taking advantage of values stored in the buffer cache.

With cluster cache management, you set a specific reader DB instance as the failover target. Cluster cache management ensures that the data in the designated reader’s cache is kept synchronized with the data in the writer DB instance’s cache. The designated reader’s cache with prefilled values is known as a warm cache. If a failover occurs, the designated reader uses values in its warm cache immediately when it’s promoted to the new writer DB instance. This approach provides your application much better recovery performance.

Cluster cache management requires that the designated reader instance have the same instance class type and size (db.r5.2xlarge or db.r5.xlarge, for example) as the writer. Keep this in mind when you create your Aurora PostgreSQL DB clusters so that your cluster can recover during a failover. For a listing of instance class types and sizes, see Hardware specifications for DB instance classes for Aurora.

For more information, see Fast recovery after failover with cluster cache management for Aurora PostgreSQL

Key steps:

- Enabling cluster cache management: Set the value of the apg_ccm_enabled cluster parameter to 1.

- For the writer DB instance: choose tier-0 for the Failover priority.

- For one reader DB instance: choose tier-0 for the Failover priority.
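The key steps can be scripted roughly as follows, assuming boto3 is installed and the cluster uses a custom DB cluster parameter group. The helper names and identifiers are hypothetical, and the `immediate` apply method assumes the parameter can be changed without a reboot; use `pending-reboot` if that does not hold for your engine version.

```python
def ccm_parameter_request(parameter_group_name: str) -> dict:
    """Build the ModifyDBClusterParameterGroup arguments that enable the feature."""
    return {
        "DBClusterParameterGroupName": parameter_group_name,
        "Parameters": [
            {
                "ParameterName": "apg_ccm_enabled",
                "ParameterValue": "1",
                "ApplyMethod": "immediate",  # assumption, see the note above
            }
        ],
    }


def tier0_request(instance_id: str) -> dict:
    """Build the ModifyDBInstance arguments that set failover priority tier-0."""
    return {
        "DBInstanceIdentifier": instance_id,
        "PromotionTier": 0,
        "ApplyImmediately": True,
    }


def enable_cluster_cache_management(parameter_group_name: str, writer_id: str,
                                    reader_id: str, region: str = "us-east-1") -> None:
    """Apply all three steps. Requires boto3 and valid AWS credentials."""
    import boto3
    rds = boto3.client("rds", region_name=region)
    rds.modify_db_cluster_parameter_group(**ccm_parameter_request(parameter_group_name))
    for instance_id in (writer_id, reader_id):
        rds.modify_db_instance(**tier0_request(instance_id))
```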

Disaster Recovery

RTO & RPO

Disaster Recovery (DR) Objectives

Plan for Disaster Recovery (DR)

Implementing a disaster recovery strategy with Amazon RDS

| Feature | RTO | RPO | Cost | Scope |
|---|---|---|---|---|
| Automated backups (once a day) | Good | Better | Low | Single Region |
| Manual snapshots | Better | Good | Medium | Cross-Region |
| Read replicas | Best | Best | High | Cross-Region |

In addition to availability objectives, your resiliency strategy should also include Disaster Recovery (DR) objectives based on strategies to recover your workload in case of a disaster event. Disaster Recovery focuses on one-time recovery objectives in response to natural disasters, large-scale technical failures, or human threats such as attack or error. This is different from availability, which measures mean resiliency over a period of time in response to component failures, load spikes, or software bugs.

Define recovery objectives for downtime and data loss: The workload has a recovery time objective (RTO) and recovery point objective (RPO).

- Recovery Time Objective (RTO) is defined by the organization. RTO is the maximum acceptable delay between the interruption of service and restoration of service. This determines what is considered an acceptable time window when service is unavailable.

- Recovery Point Objective (RPO) is defined by the organization. RPO is the maximum acceptable amount of time since the last data recovery point. This determines what is considered an acceptable loss of data between the last recovery point and the interruption of service.
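A worked example may make the two definitions concrete. The sketch below compares the downtime and the data-loss window of a single incident with the objectives; the function name and the timestamps in the usage example are made up for illustration.

```python
from datetime import datetime, timedelta


def evaluate_recovery(last_recovery_point: datetime, outage_start: datetime,
                      service_restored: datetime,
                      rto: timedelta, rpo: timedelta) -> dict:
    """Compare one incident against the recovery objectives."""
    downtime = service_restored - outage_start             # measured against the RTO
    data_loss_window = outage_start - last_recovery_point  # measured against the RPO
    return {
        "downtime": downtime,
        "data_loss_window": data_loss_window,
        "rto_met": downtime <= rto,
        "rpo_met": data_loss_window <= rpo,
    }


# Last recovery point at 11:55, outage at 12:00, service restored at 12:02.
result = evaluate_recovery(
    last_recovery_point=datetime(2024, 1, 1, 11, 55),
    outage_start=datetime(2024, 1, 1, 12, 0),
    service_restored=datetime(2024, 1, 1, 12, 2),
    rto=timedelta(minutes=5),
    rpo=timedelta(minutes=1),
)
```

In this example the two minutes of downtime fit within the five-minute RTO, but the five minutes of unrecoverable changes exceed the one-minute RPO.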

Recovery Strategies

Use defined recovery strategies to meet the recovery objectives: A disaster recovery (DR) strategy has been defined to meet objectives. Choose a strategy such as: backup and restore, active/passive (pilot light or warm standby), or active/active.

When architecting a multi-region disaster recovery strategy for your workload, you should choose one of the following multi-region strategies. They are listed in increasing order of complexity, and decreasing order of RTO and RPO. DR Region refers to an AWS Region other than the primary one used for your workload (or any AWS Region if your workload is on premises).

- Backup and restore (RPO in hours, RTO in 24 hours or less): Back up your data and applications using point-in-time backups into the DR Region. Restore this data when necessary to recover from a disaster.

- Pilot light (RPO in minutes, RTO in hours): Replicate your data from one region to another and provision a copy of your core workload infrastructure. Resources required to support data replication and backup such as databases and object storage are always on. Other elements such as application servers are loaded with application code and configurations, but are switched off and are only used during testing or when Disaster Recovery failover is invoked.

- Warm standby (RPO in seconds, RTO in minutes): Maintain a scaled-down but fully functional version of your workload always running in the DR Region. Business-critical systems are fully duplicated and are always on, but with a scaled down fleet. When the time comes for recovery, the system is scaled up quickly to handle the production load. The more scaled-up the Warm Standby is, the lower RTO and control plane reliance will be. When scaled up to full scale this is known as a Hot Standby.

- Multi-region (multi-site) active-active (RPO near zero, RTO potentially zero): Your workload is deployed to, and actively serving traffic from, multiple AWS Regions. This strategy requires you to synchronize data across Regions. Possible conflicts caused by writes to the same record in two different regional replicas must be avoided or handled. Data replication is useful for data synchronization and will protect you against some types of disaster, but it will not protect you against data corruption or destruction unless your solution also includes options for point-in-time recovery. Use services like Amazon Route 53 or AWS Global Accelerator to route your user traffic to where your workload is healthy. For more details on AWS services you can use for active-active architectures see the AWS Regions section of Use Fault Isolation to Protect Your Workload.

Recommendation

The difference between Pilot Light and Warm Standby can sometimes be difficult to understand. Both include an environment in your DR Region with copies of your primary region assets. The distinction is that Pilot Light cannot process requests without additional action taken first, while Warm Standby can handle traffic (at reduced capacity levels) immediately. Pilot Light will require you to turn on servers, possibly deploy additional (non-core) infrastructure, and scale up, while Warm Standby only requires you to scale up (everything is already deployed and running). Choose between these based on your RTO and RPO needs.

Tips

Test disaster recovery implementation to validate the implementation: Regularly test failover to DR to ensure that RTO and RPO are met.

A pattern to avoid is developing recovery paths that are rarely executed. For example, you might have a secondary data store that is used for read-only queries. When you write to a data store and the primary fails, you might want to fail over to the secondary data store. If you don’t frequently test this failover, you might find that your assumptions about the capabilities of the secondary data store are incorrect. The capacity of the secondary, which might have been sufficient when you last tested, may no longer be able to tolerate the load under this scenario. Our experience has shown that the only error recovery that works is the path you test frequently. This is why having a small number of recovery paths is best. You can establish recovery patterns and regularly test them. If you have a complex or critical recovery path, you still need to regularly execute that failure in production to convince yourself that the recovery path works. In the example we just discussed, you should fail over to the standby regularly, regardless of need.

Manage configuration drift at the DR site or region: Ensure that your infrastructure, data, and configuration are as needed at the DR site or region. For example, check that AMIs and service quotas are up to date.

AWS Config continuously monitors and records your AWS resource configurations. It can detect drift and trigger AWS Systems Manager Automation to fix it and raise alarms. AWS CloudFormation can additionally detect drift in stacks you have deployed.

Automate recovery: Use AWS or third-party tools to automate system recovery and route traffic to the DR site or region.

Based on configured health checks, AWS services, such as Elastic Load Balancing and AWS Auto Scaling, can distribute load to healthy Availability Zones while services, such as Amazon Route 53 and AWS Global Accelerator, can route load to healthy AWS Regions.

For workloads on existing physical or virtual data centers or private clouds, CloudEndure Disaster Recovery, available through AWS Marketplace, enables organizations to set up an automated disaster recovery strategy to AWS. CloudEndure also supports cross-region / cross-AZ disaster recovery in AWS.

Fast failover with Amazon Aurora PostgreSQL

There are several things you can do to make a failover perform faster with Aurora PostgreSQL. This section discusses each of the following ways:

- Aggressively set TCP keepalives to ensure that longer running queries that are waiting for a server response will be stopped before the read timeout expires in the event of a failure.

- Set the Java DNS caching timeouts aggressively to ensure the Aurora read-only endpoint can properly cycle through read-only nodes on subsequent connection attempts.

- Set the timeout variables used in the JDBC connection string as low as possible. Use separate connection objects for short and long running queries.

- Use the provided read and write Aurora endpoints to establish a connection to the cluster.

- Use RDS APIs to test application response on server side failures and use a packet dropping tool to test application response for client-side failures.
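The points above are written with Java and JDBC in mind. As a rough Python equivalent, clients built on libpq (psycopg2, for example) accept TCP keepalive and timeout settings as connection parameters. The sketch below only assembles such a connection string; the function name is hypothetical, the numeric values are illustrative rather than recommendations, and it assumes none of the values contain spaces.

```python
def build_failover_friendly_dsn(host: str, dbname: str, user: str,
                                port: int = 5432) -> str:
    """Assemble a libpq connection string with aggressive keepalives."""
    params = {
        "host": host,               # use the cluster (writer) or reader endpoint
        "port": port,
        "dbname": dbname,
        "user": user,
        "connect_timeout": 3,       # seconds to wait when opening a connection
        "keepalives": 1,            # turn TCP keepalives on
        "keepalives_idle": 1,       # idle seconds before the first probe
        "keepalives_interval": 1,   # seconds between probes
        "keepalives_count": 5,      # failed probes before the connection is dropped
    }
    return " ".join(f"{key}={value}" for key, value in params.items())
```

A driver such as psycopg2 would take the resulting string as the argument to its `connect` function.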

Migration

From Aurora cluster

Create clone

You are cloning an Aurora database. This will create a new DB cluster that includes all of the data from the existing database as well as a writer DB instance. To enable other accounts to clone this DB cluster, navigate to the DB cluster details page and share the cluster with other AWS accounts.

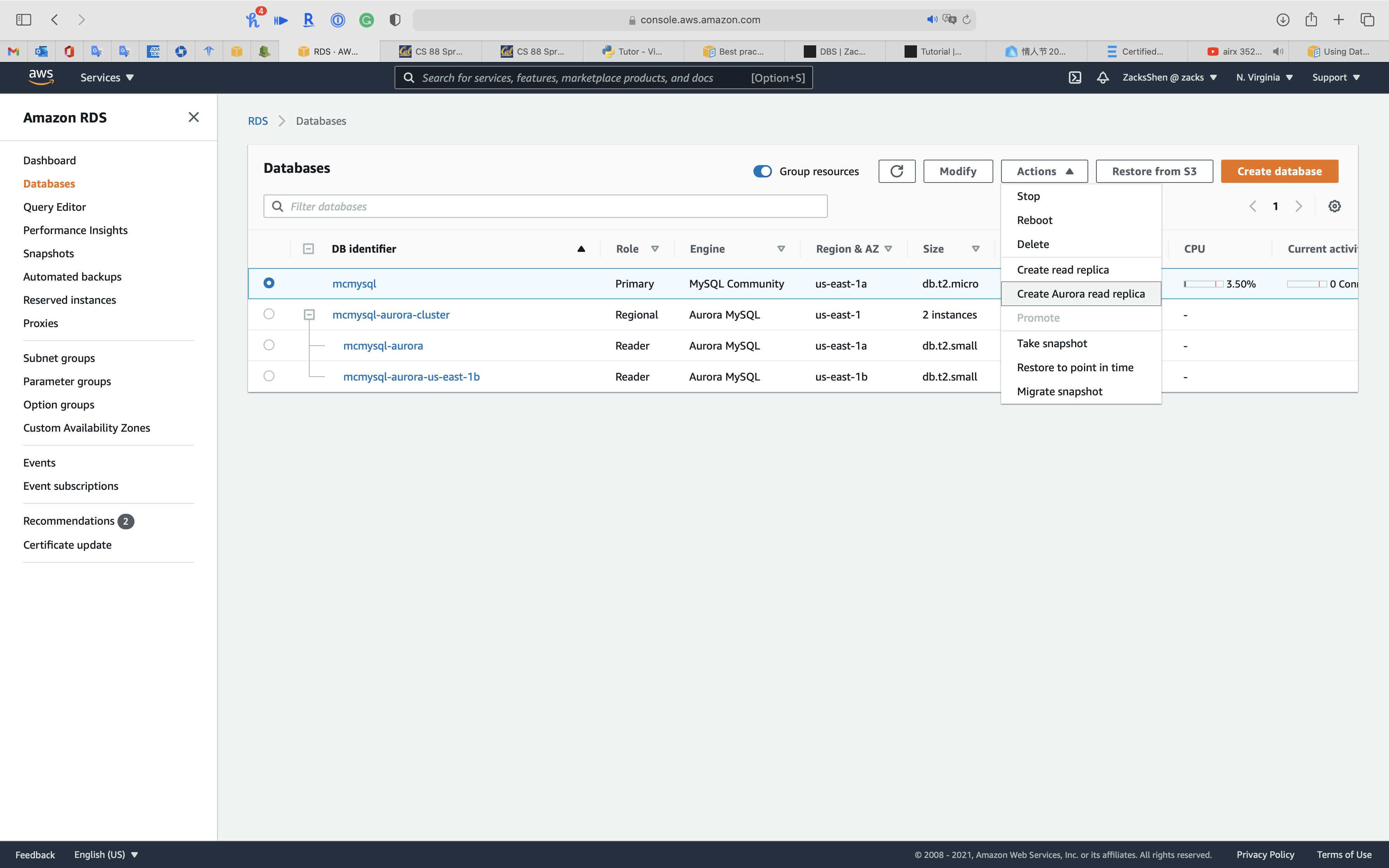

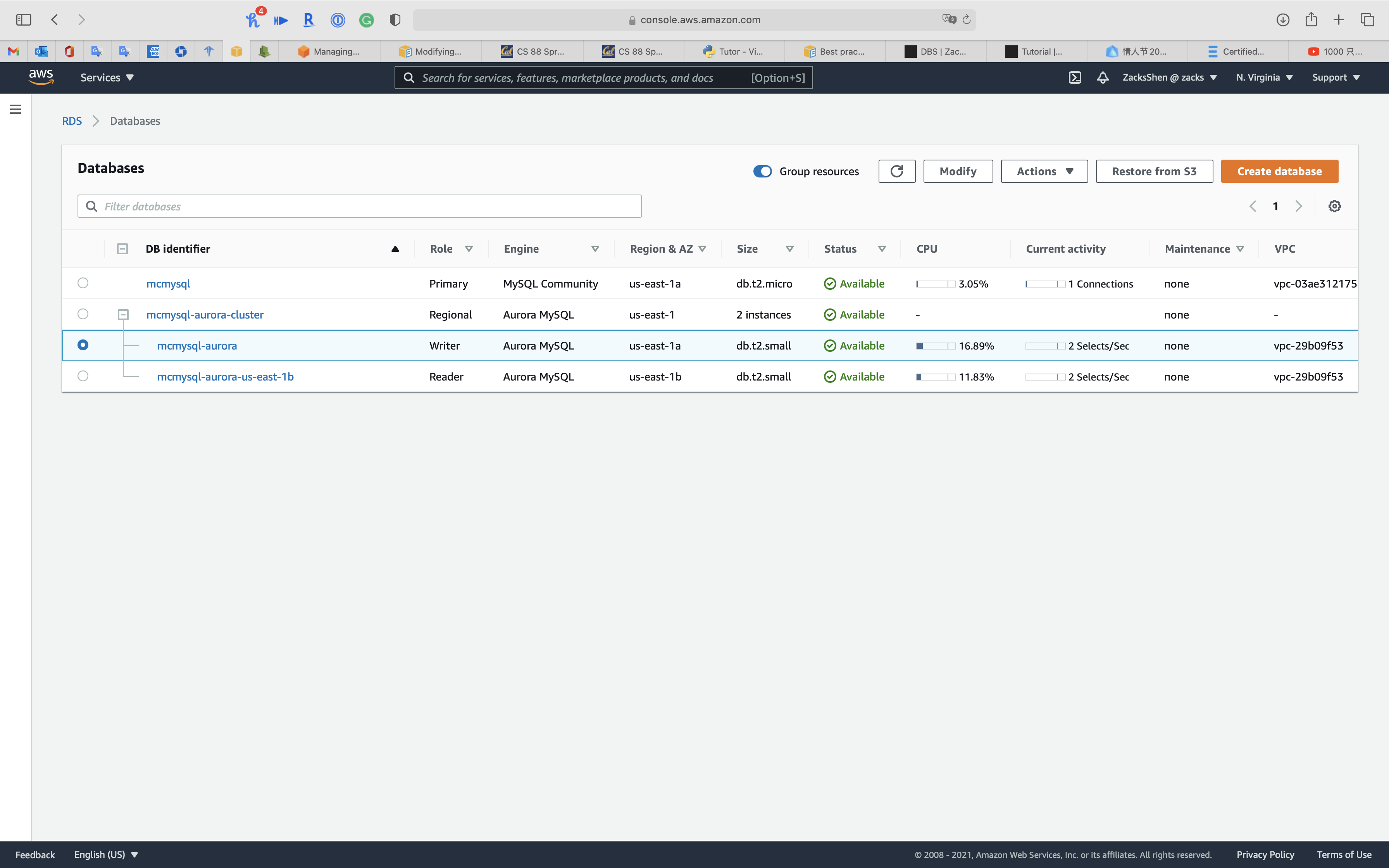

From RDS MySQL

Migrating data to an Amazon Aurora MySQL DB cluster

Migrating data from a MySQL DB instance to an Amazon Aurora MySQL DB cluster by using an Aurora read replica

- You can migrate from an RDS MySQL DB instance by first creating an Aurora MySQL read replica of a MySQL DB instance.

- When the replica lag between the MySQL DB instance and the Aurora MySQL read replica is 0, you can direct your client applications to read from the Aurora read replica and then stop replication to make the Aurora MySQL read replica a standalone Aurora MySQL DB cluster for reading and writing. For details, see Migrating data from a MySQL DB instance to an Amazon Aurora MySQL DB cluster by using an Aurora read replica.

Select the RDS MySQL instance you want to migrate.

Click on Action -> Create Aurora read replica

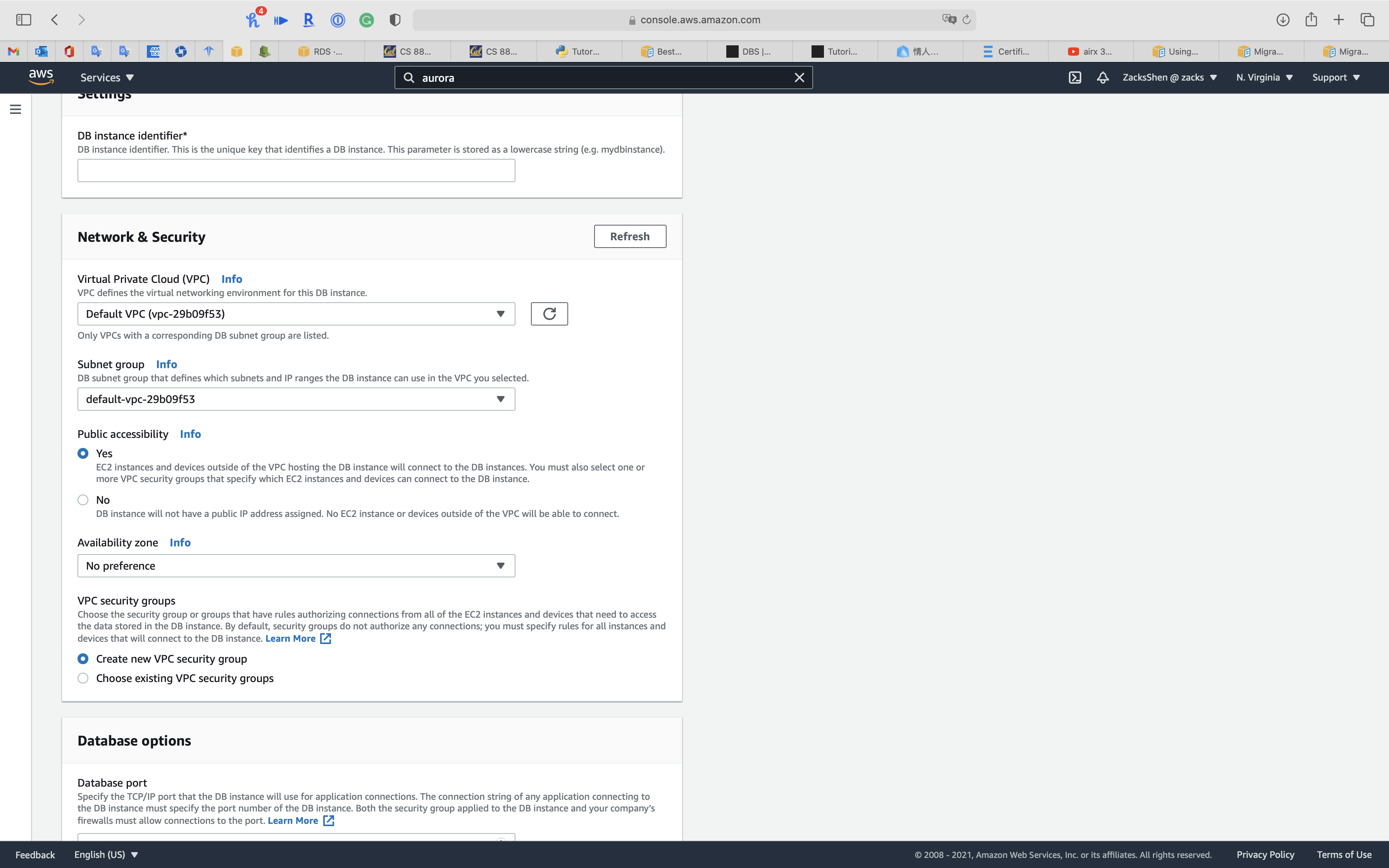

Configure the Aurora read replica settings. See Creating an Aurora read replica

The status of the cluster shows Modifying

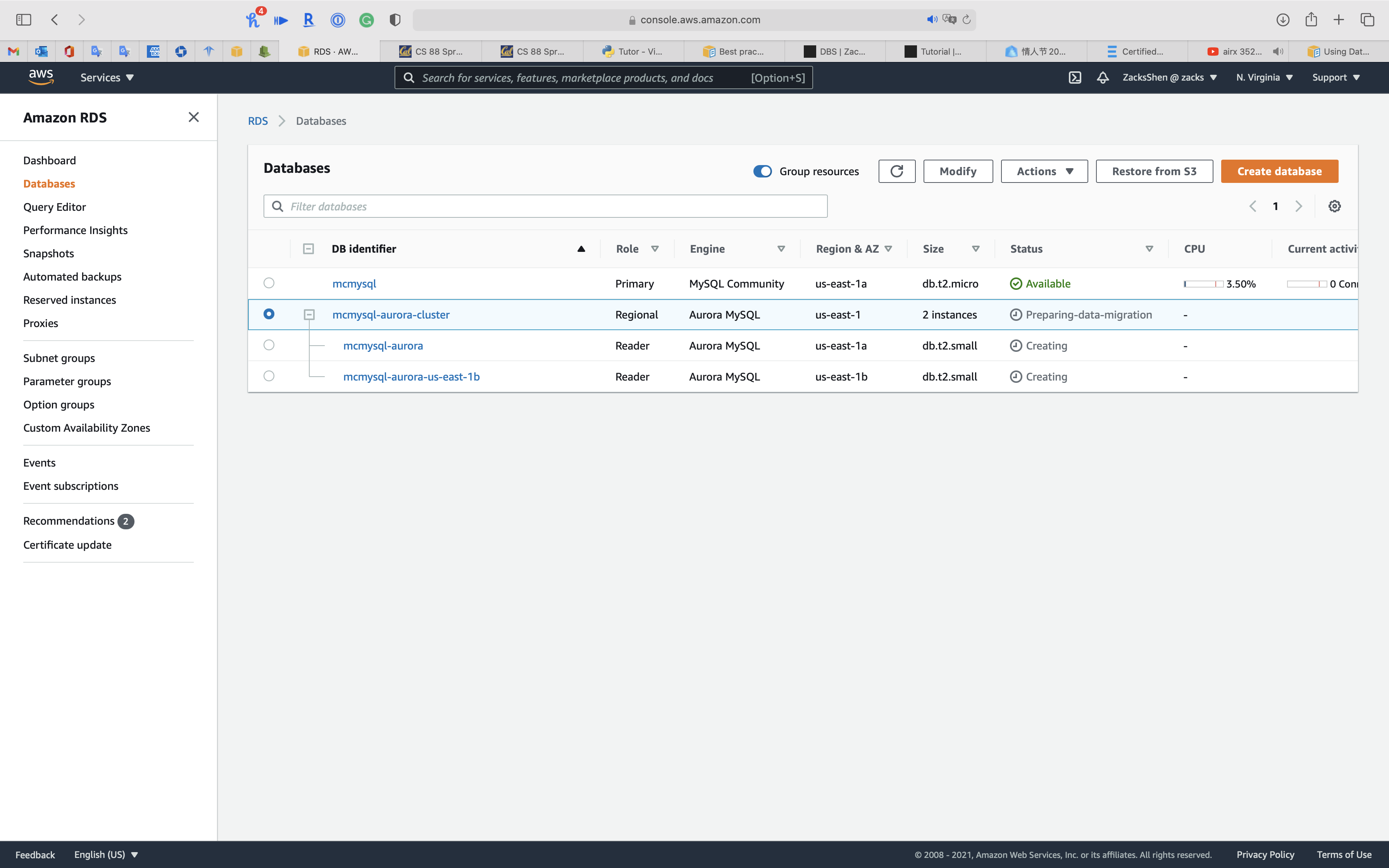

The status of the cluster then shows Preparing-data-migration. Wait several minutes.

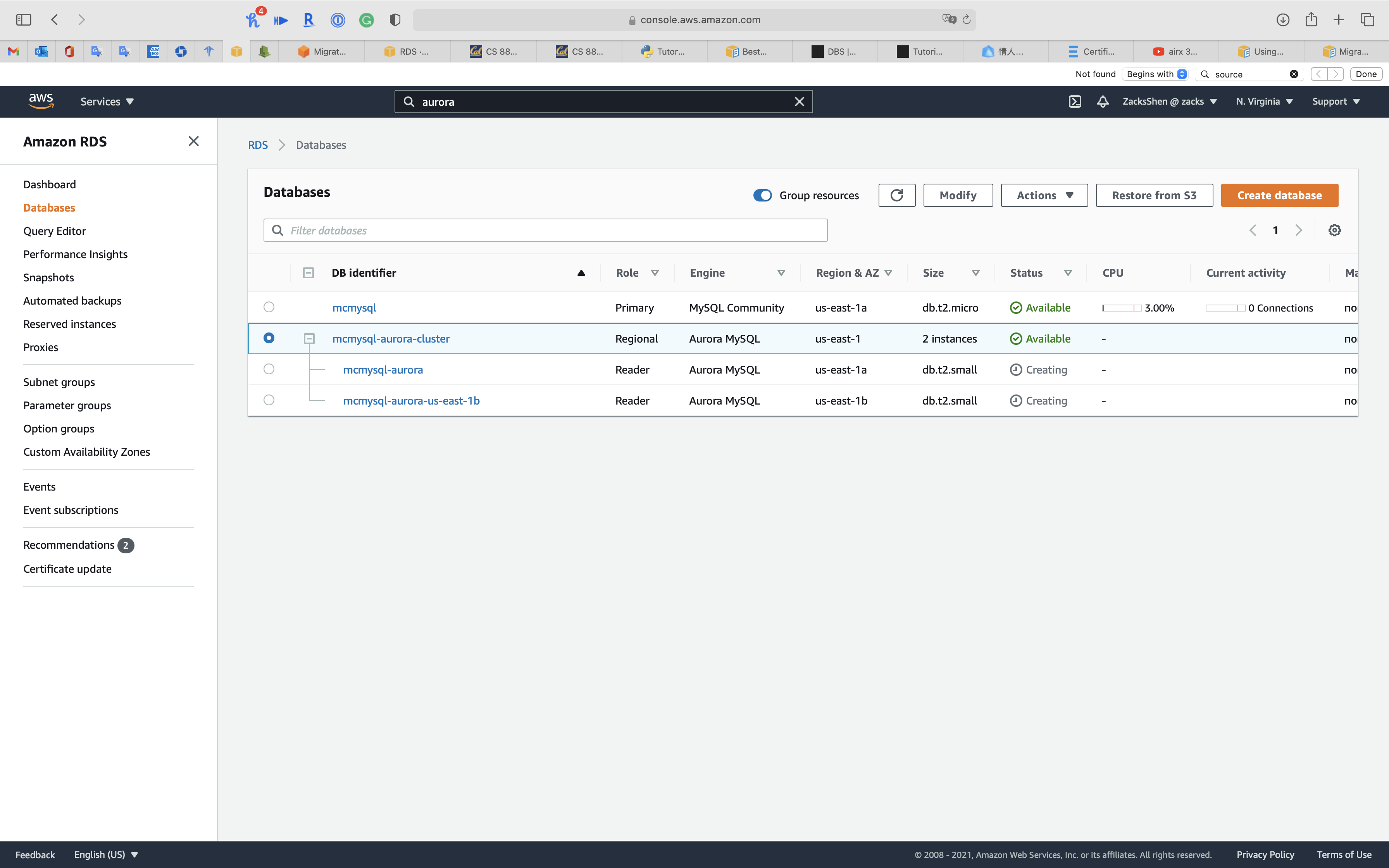

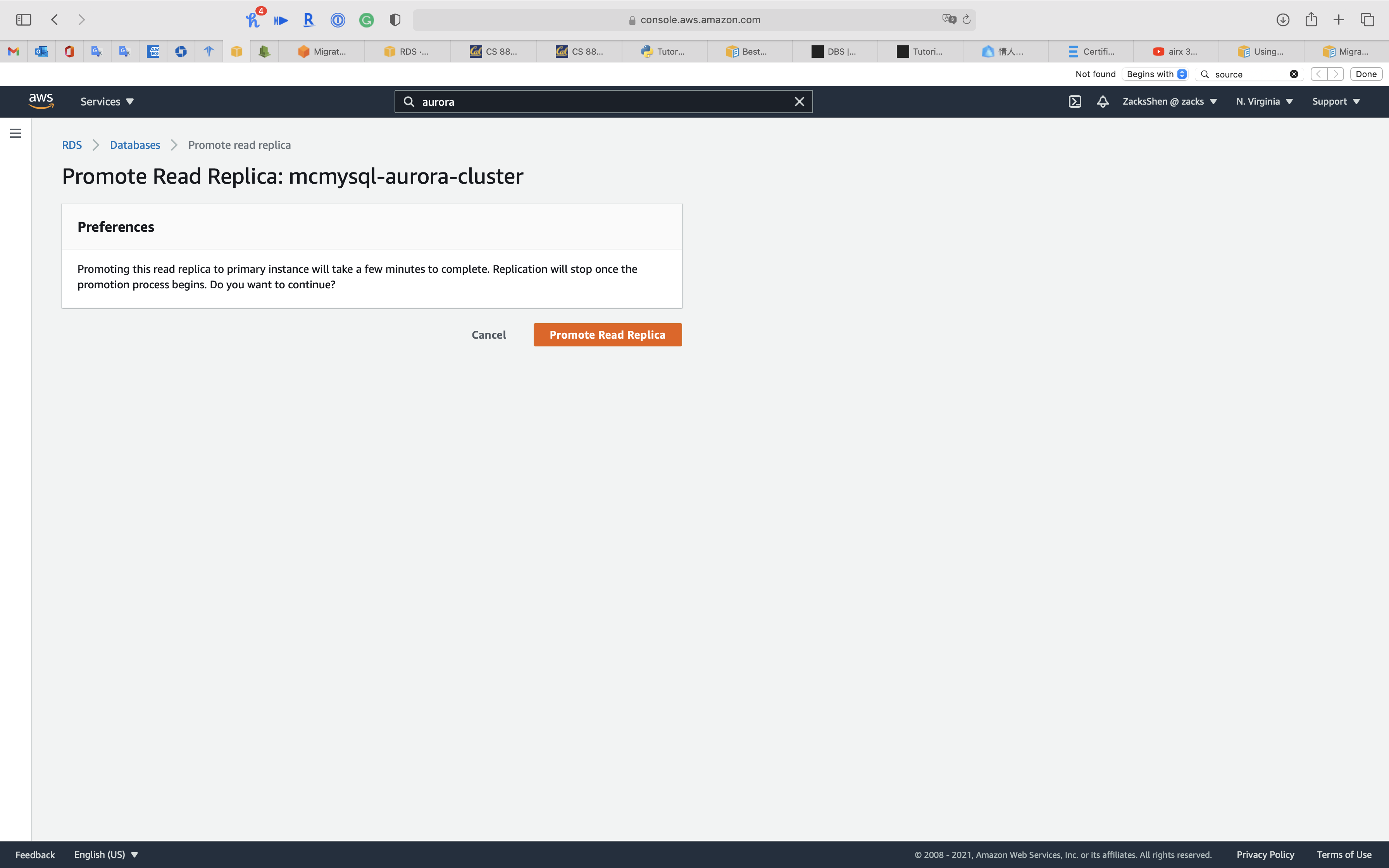

When the status of cluster switches to Available, click on your cluster. See Promoting an Aurora read replica

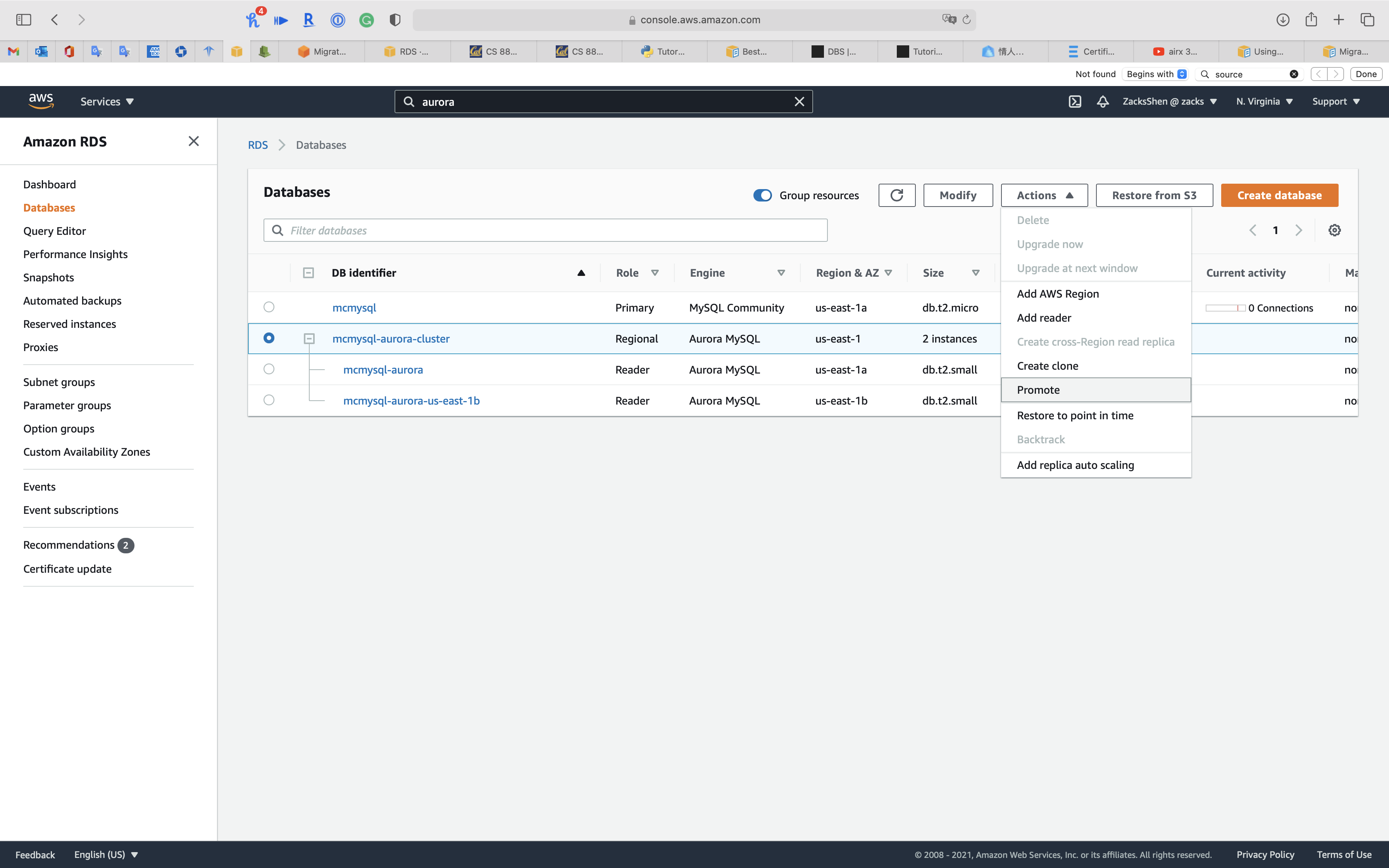

Click on Action -> Promote

Before Promote

Click on Promote Read Replica

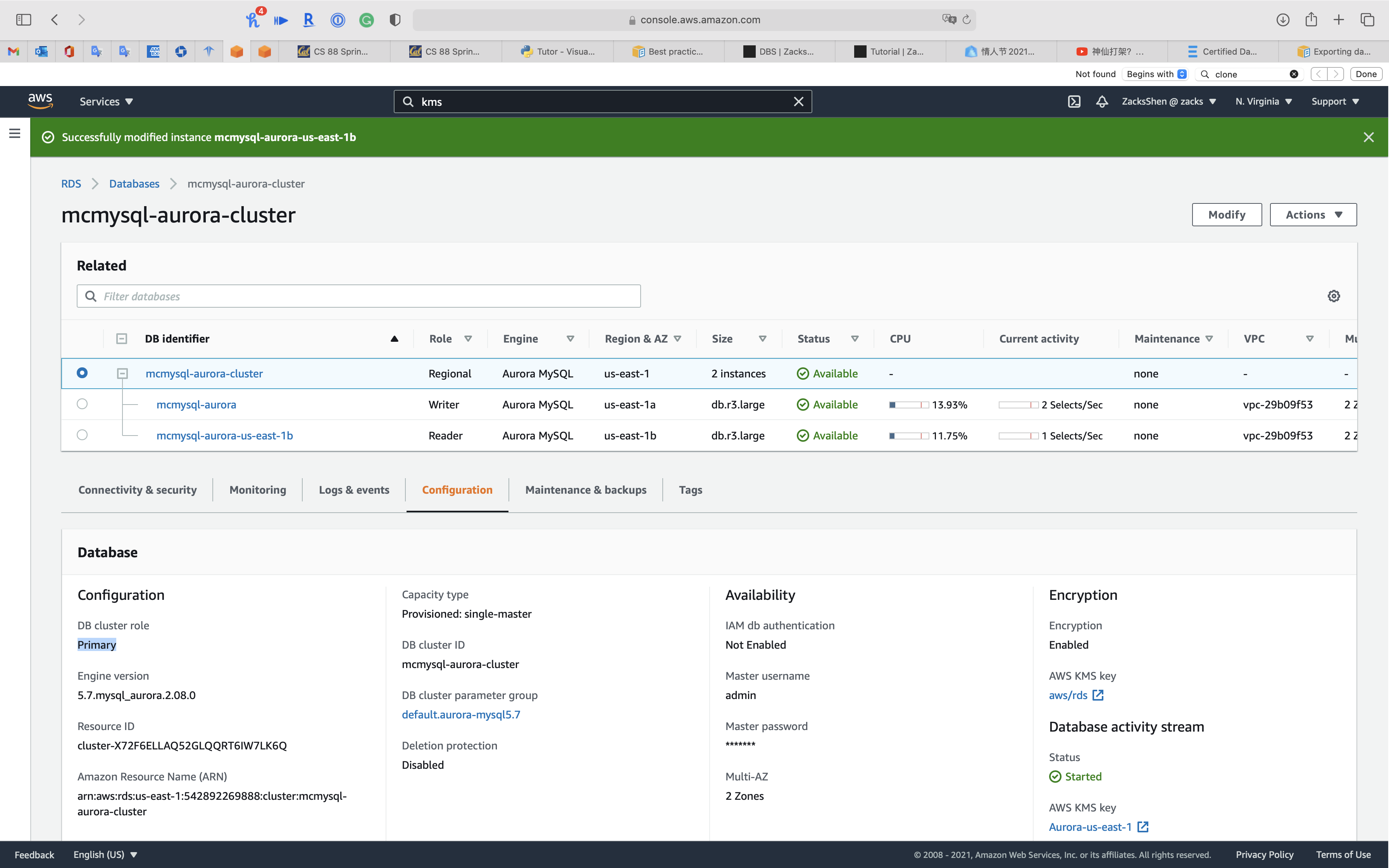

After Promote

You can also subscribe to an event to confirm the promotion status

Service -> RDS -> Events

On the Events page, verify that there is a Promoted Read Replica cluster to a stand-alone database cluster event for the cluster that you promoted.
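The wait-then-promote part of this migration can also be automated. The sketch below polls a caller-supplied function until the replica lag reaches zero and only then promotes the cluster. How the lag is measured is left to the caller (for example from a CloudWatch metric), the helper names are hypothetical, and the promotion call assumes boto3 is installed.

```python
import time
from typing import Callable


def wait_for_zero_lag(get_lag_seconds: Callable[[], float],
                      poll_seconds: float = 30.0, max_polls: int = 120,
                      sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll until the replica lag is 0. Return False if it never gets there."""
    for _ in range(max_polls):
        if get_lag_seconds() == 0:
            return True
        sleep(poll_seconds)
    return False


def promote_replica_cluster(cluster_id: str, region: str = "us-east-1") -> dict:
    """Promote the Aurora read replica cluster. Requires boto3 and AWS credentials."""
    import boto3
    rds = boto3.client("rds", region_name=region)
    return rds.promote_read_replica_db_cluster(DBClusterIdentifier=cluster_id)
```

Stop writes to the source MySQL DB instance before the final lag check, otherwise the lag may never settle at zero.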

From RDS PostgreSQL

Migrating data to Amazon Aurora with PostgreSQL compatibility

To migrate from an Amazon RDS for PostgreSQL DB instance to an Amazon Aurora PostgreSQL DB cluster:

- Create an Aurora Replica of your source PostgreSQL DB instance.

- When the replica lag between the PostgreSQL DB instance and the Aurora PostgreSQL Replica is zero, you can promote the Aurora Replica to be a standalone Aurora PostgreSQL DB cluster.

Monitoring

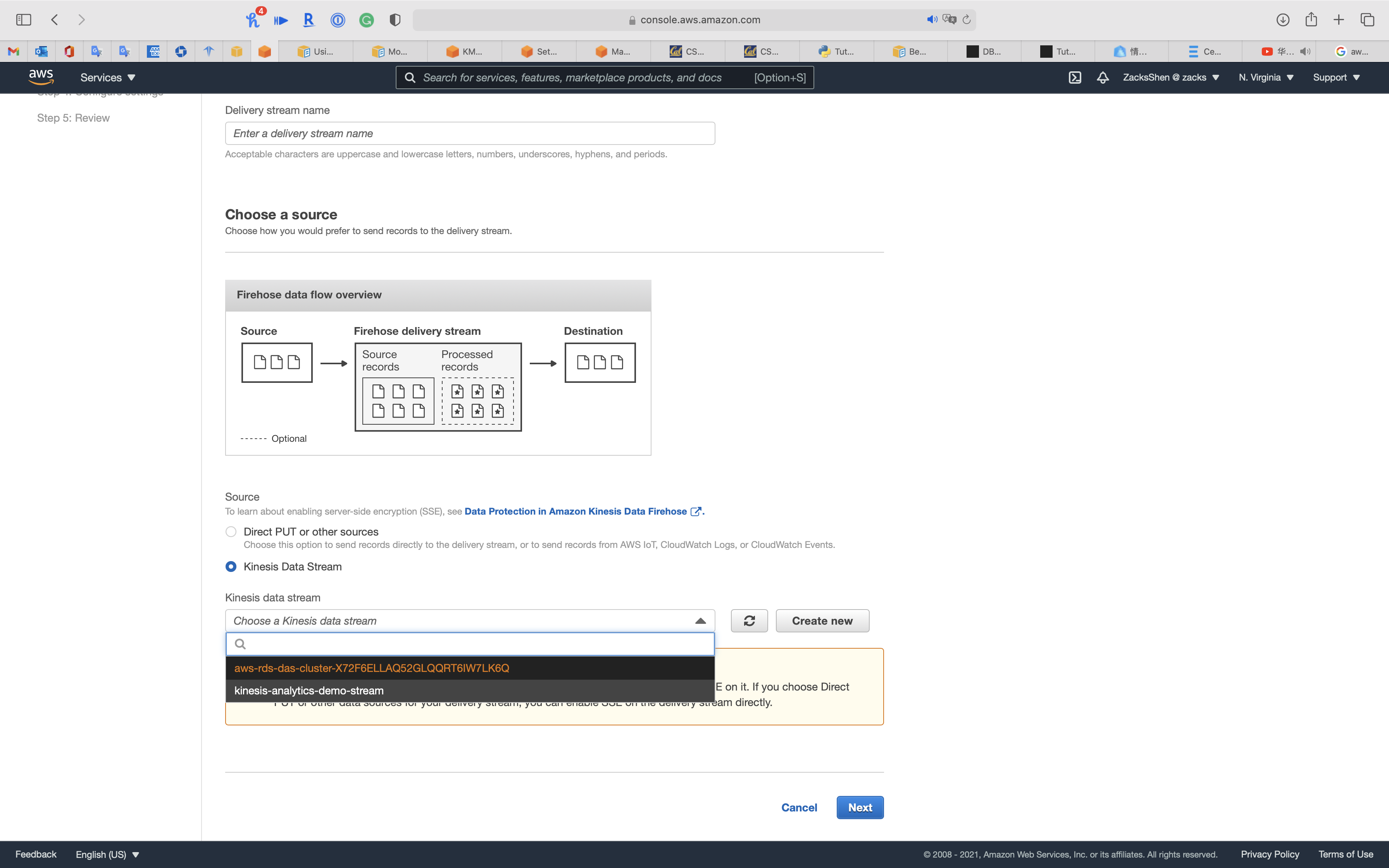

Streaming Analysis

Using Database Activity Streams with Amazon Aurora

Network prerequisites for Aurora MySQL Database Activity Streams

Prerequisites (partial)

- For Aurora PostgreSQL, database activity streams are supported for version 2.3 or higher and versions 3.0 or higher.

- For Aurora MySQL, database activity streams are supported for version 2.08 or higher, which is compatible with MySQL version 5.7.

- You can start a database activity stream on the primary or secondary cluster of an Aurora global database.

- Database activity streams support the DB instance classes listed for Aurora in Supported DB engines for DB instance classes, with some exceptions:

- For Aurora PostgreSQL, you can’t use streams with the db.t3.medium instance class.

- For Aurora MySQL, you can’t use streams with any of the db.t2 or db.t3 instance classes.
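The instance-class exceptions above can be written as a small pre-flight check. This sketch encodes only the two exceptions listed here, not the full support matrix. The function name is hypothetical, and the engine strings `aurora-mysql` and `aurora-postgresql` are the identifiers assumed for the two engines.

```python
def supports_activity_streams(engine: str, instance_class: str) -> bool:
    """Apply the instance-class exceptions listed in the prerequisites."""
    engine = engine.lower()
    instance_class = instance_class.lower()
    if engine == "aurora-postgresql":
        # Only db.t3.medium is excluded for Aurora PostgreSQL.
        return instance_class != "db.t3.medium"
    if engine == "aurora-mysql":
        # All db.t2 and db.t3 classes are excluded for Aurora MySQL.
        return not instance_class.startswith(("db.t2.", "db.t3."))
    raise ValueError(f"unknown engine: {engine}")
```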

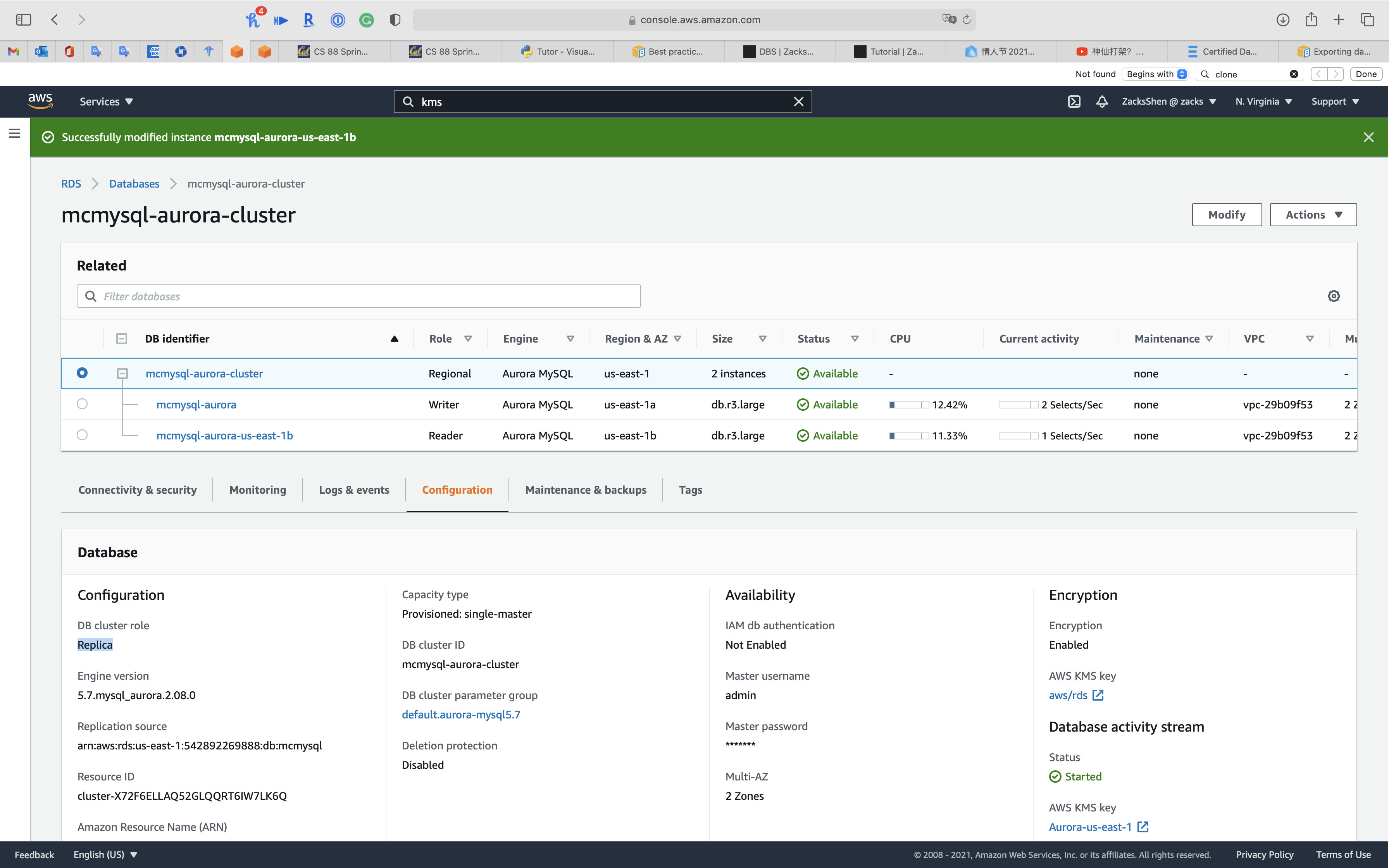

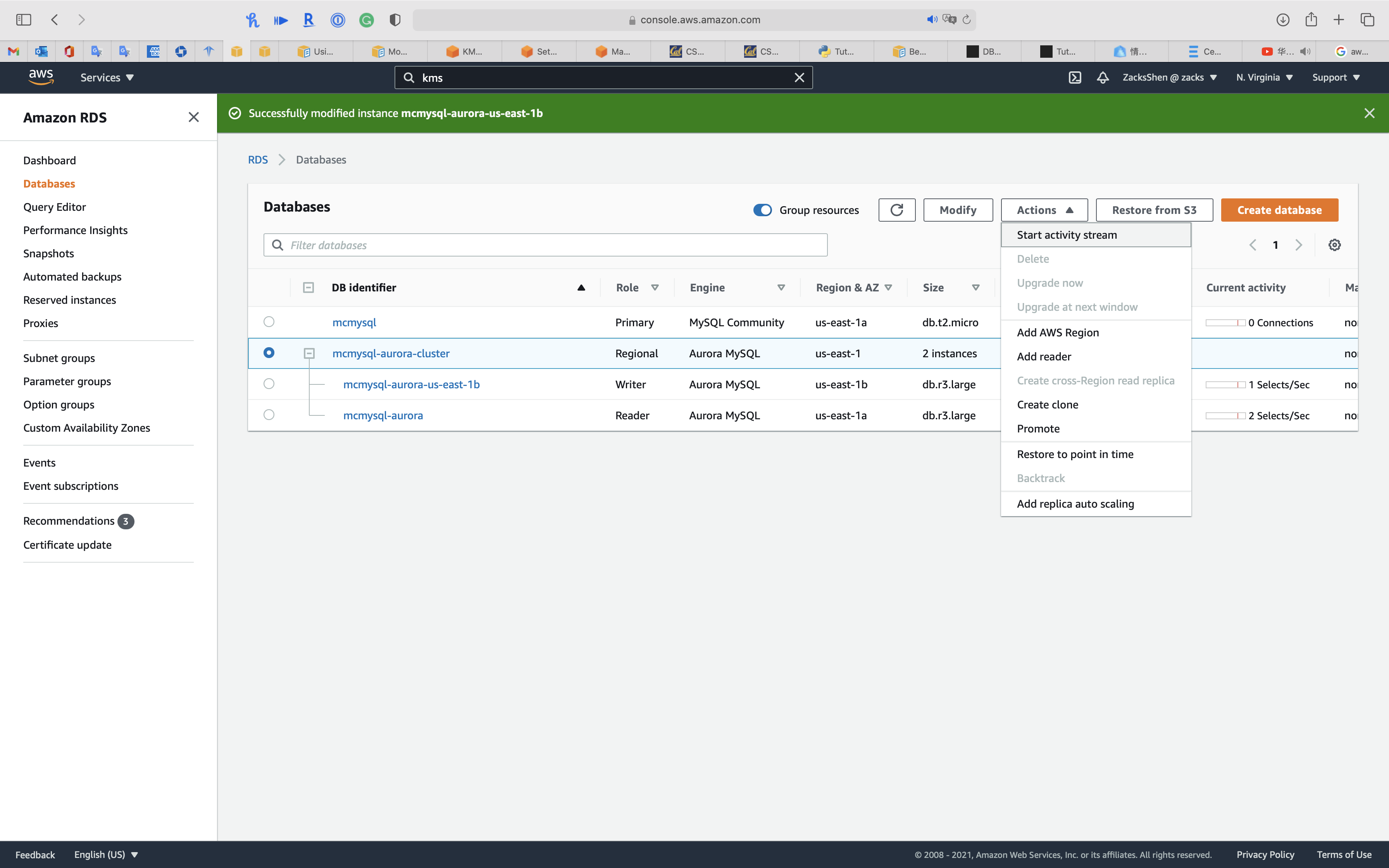

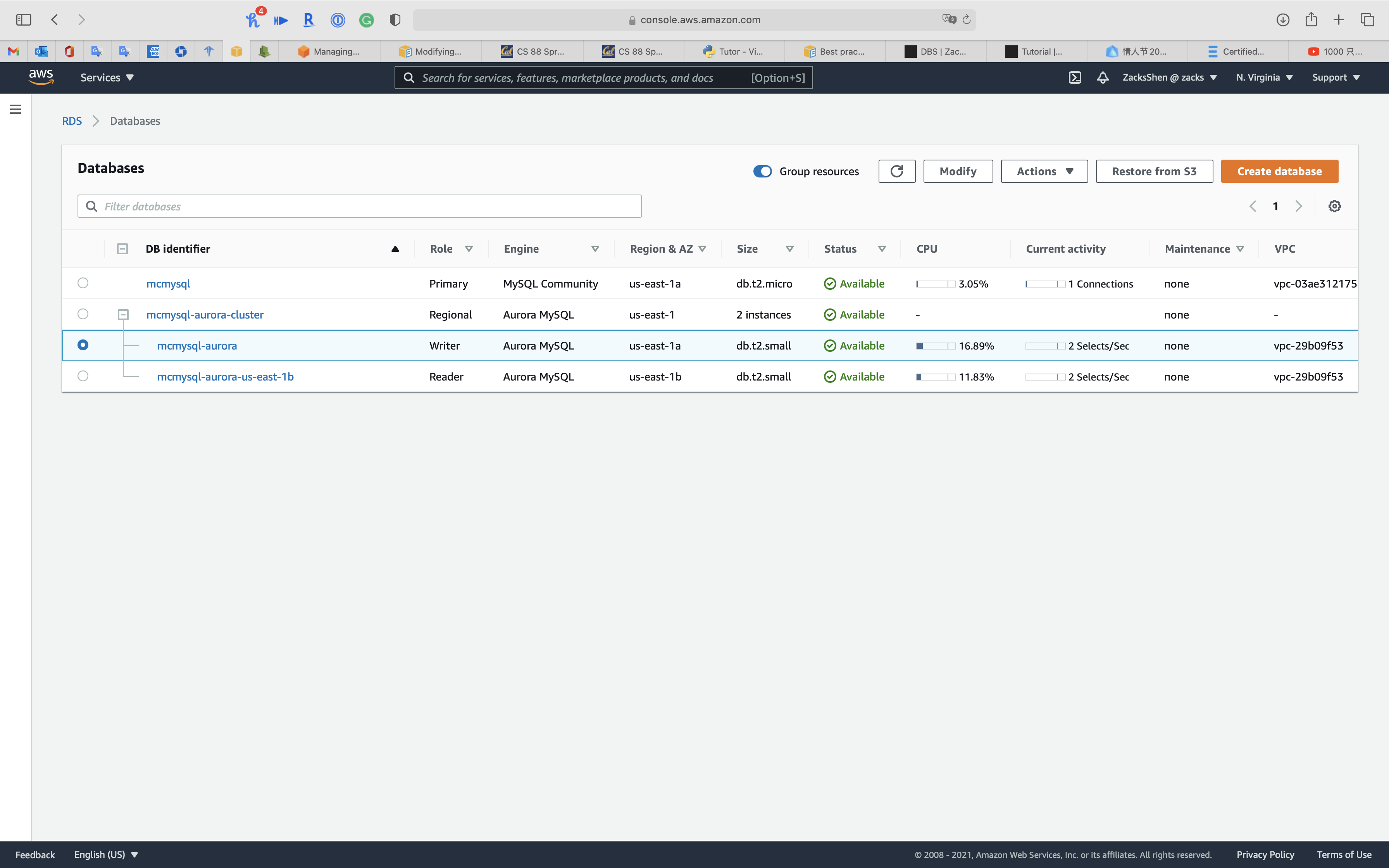

Service -> RDS -> Databases

Select one instance of your Aurora cluster, then click on Modify

Under DB instance size

Select Memory Optimized classes (includes r classes) for demanding workloads. Also, the t series does not support Database Activity Streams through Kinesis

Select db.r3.large

Click on Continue

Select Apply immediately

Click on Modify DB instance

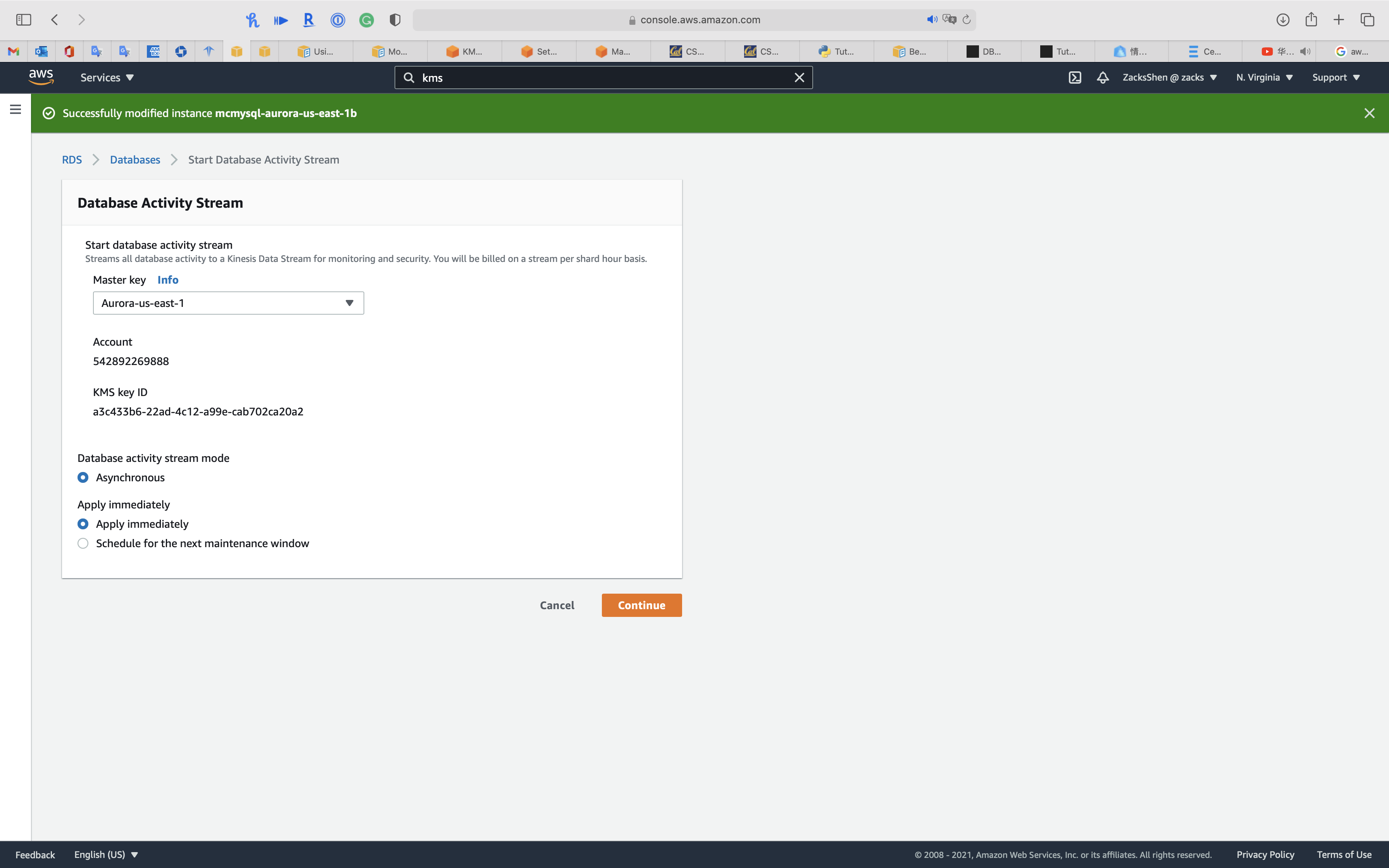

Select your cluster

Click on Action -> Start activity stream

Select one of your KMS customer master keys (CMKs)

Select Apply immediately

Click on Continue
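The console steps above correspond to the boto3 call `start_activity_stream`. The sketch below builds its request separately so it can be reviewed before anything is sent. The helper names are hypothetical, and the choice of `async` as the default mode is an assumption, since the steps above do not say which mode was used.

```python
def activity_stream_request(
    cluster_arn: str, kms_key_id: str, mode: str = "async"
) -> dict:
    """Build the keyword arguments for rds.start_activity_stream."""
    if mode not in ("sync", "async"):
        raise ValueError("mode must be 'sync' or 'async'")
    return {
        "ResourceArn": cluster_arn,
        "Mode": mode,
        "KmsKeyId": kms_key_id,
        "ApplyImmediately": True,  # mirrors "Select Apply immediately"
    }


def start_stream(request: dict) -> str:
    """Start the stream and return the name of the Kinesis stream created."""
    import boto3  # imported lazily; needs AWS credentials when called

    rds = boto3.client("rds")
    response = rds.start_activity_stream(**request)
    return response["KinesisStreamName"]
```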

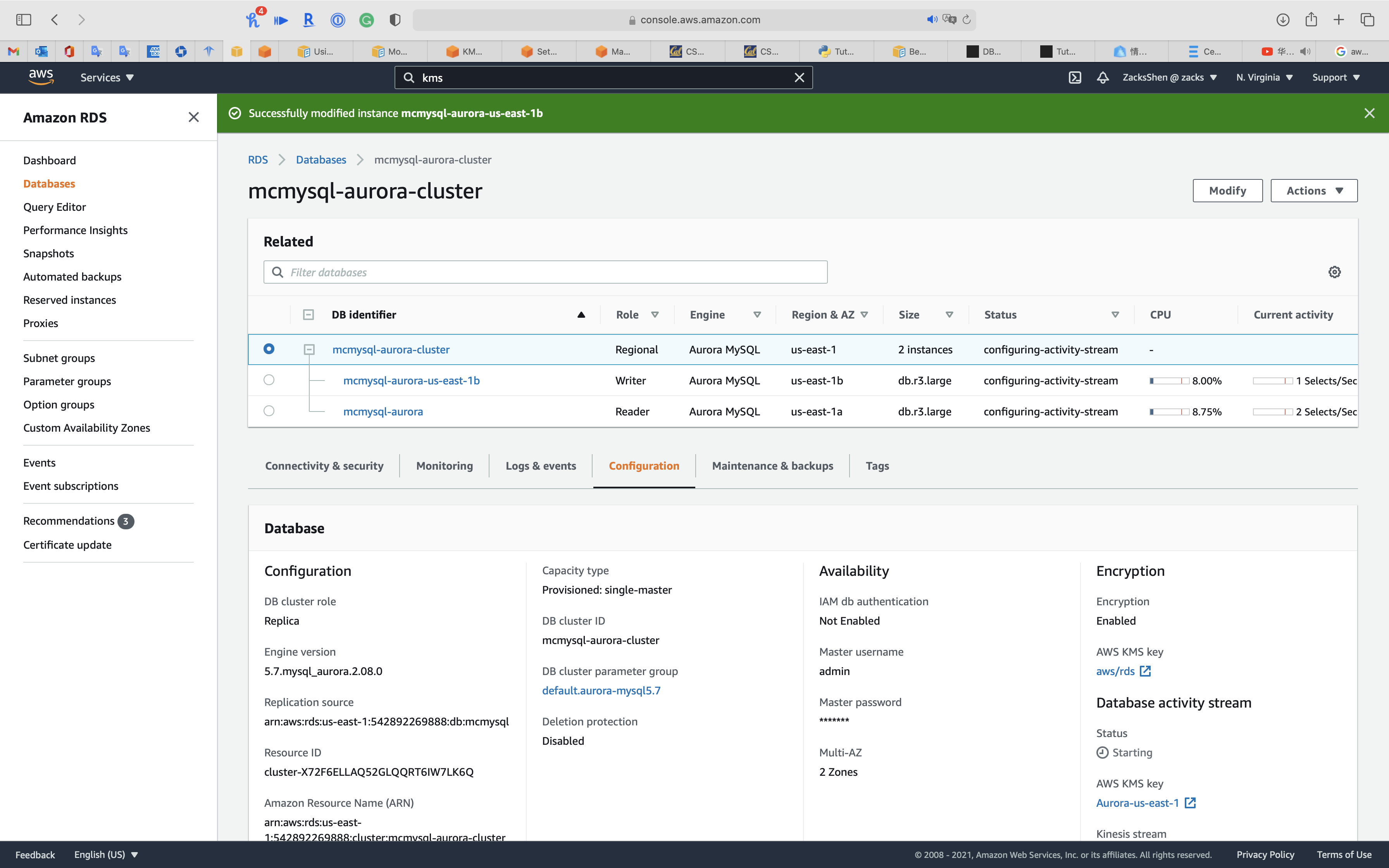

Click on your cluster

Click on Configuration tab

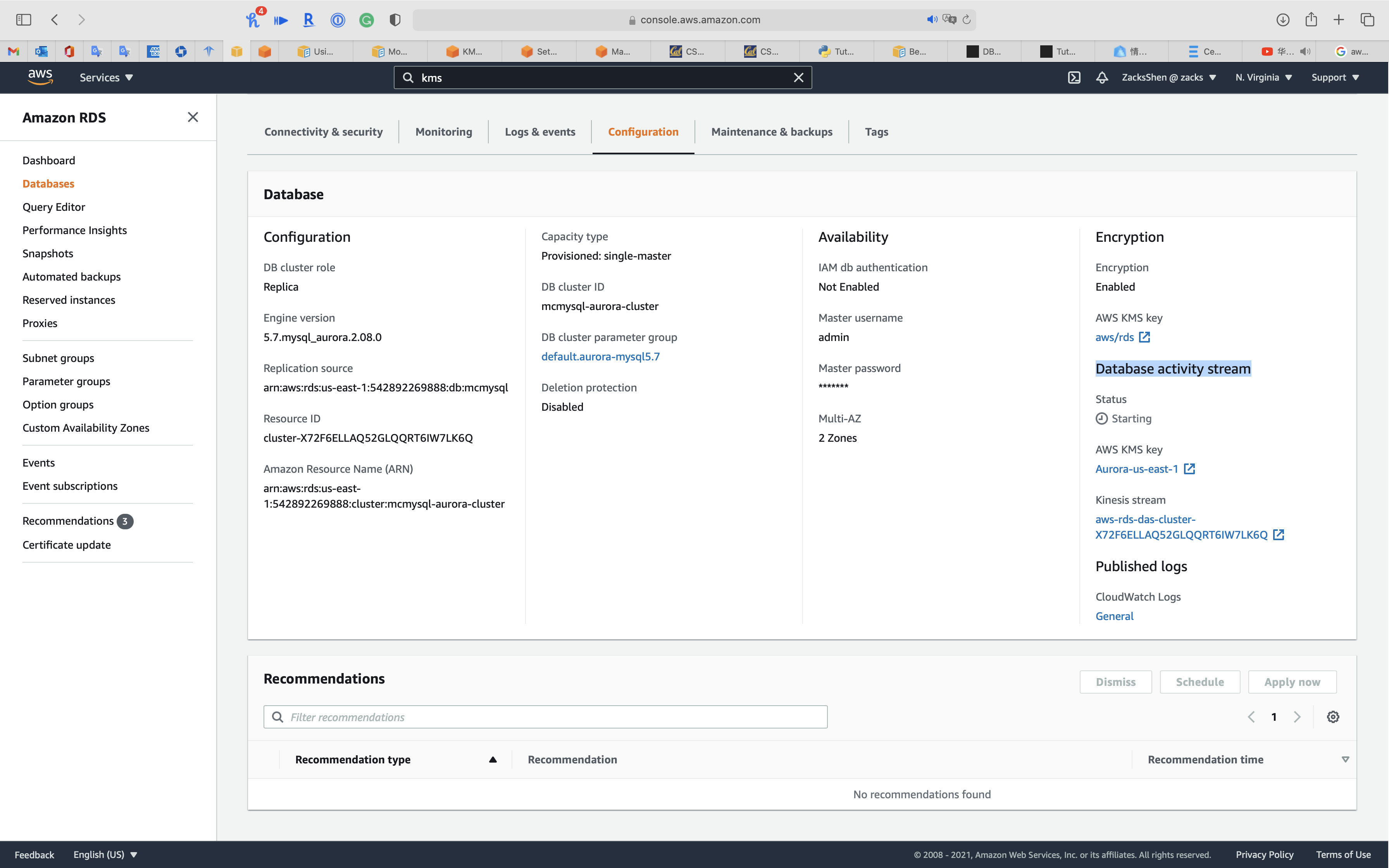

You can see Database activity stream and Kinesis stream

Service -> Kinesis -> Data Firehose

When you create a Kinesis Data Firehose delivery stream, you can select the Kinesis stream of your Aurora cluster as its source

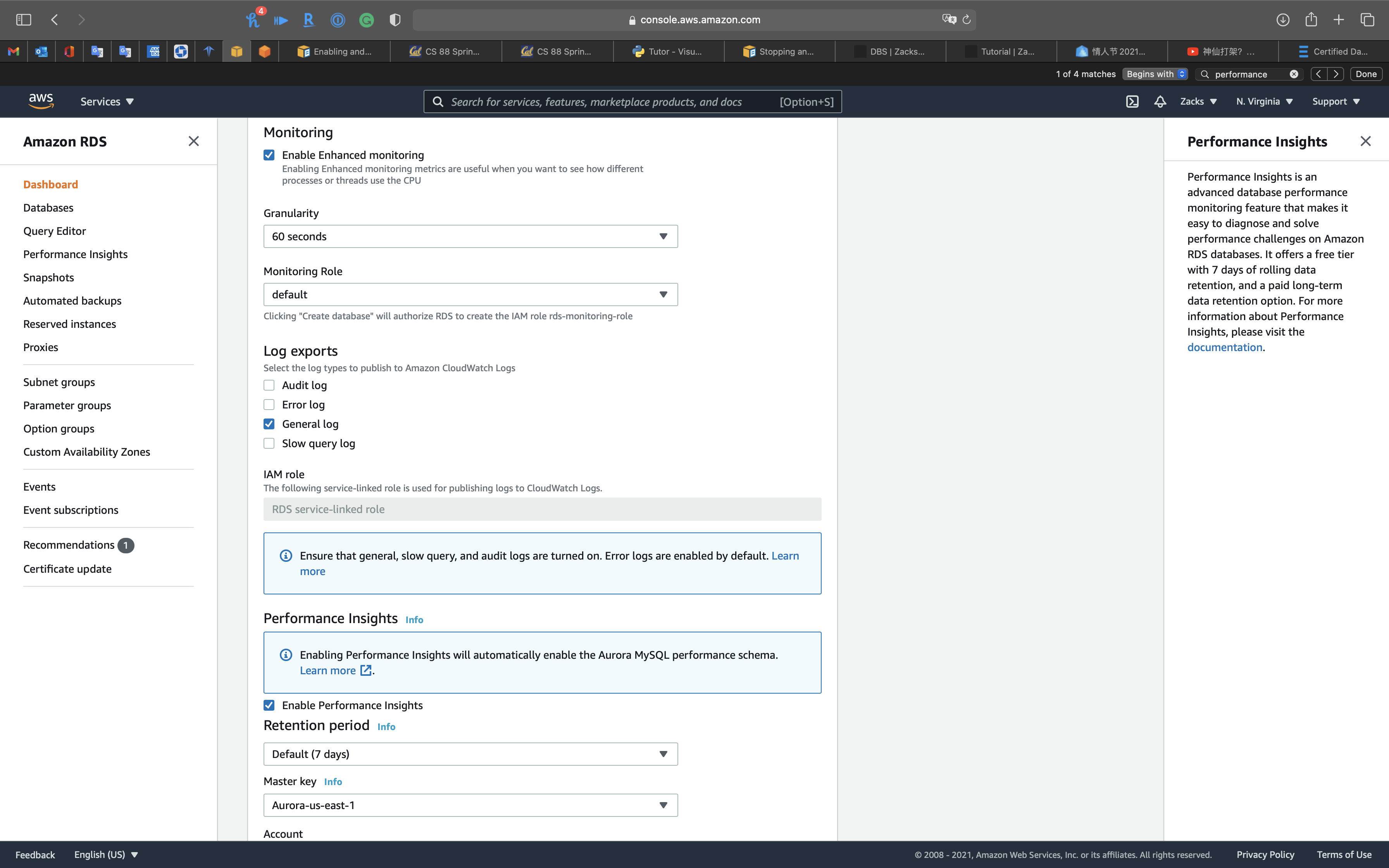

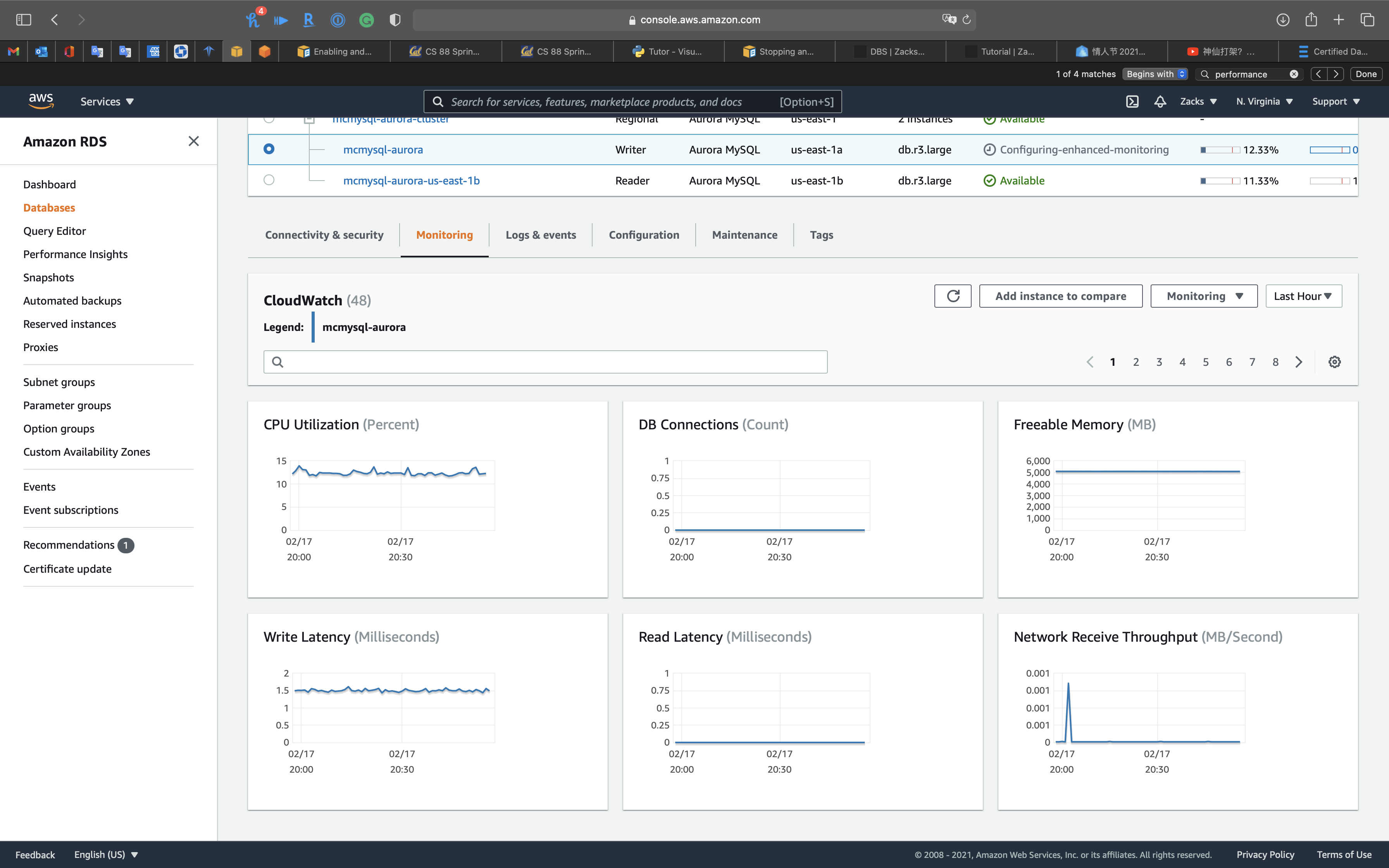

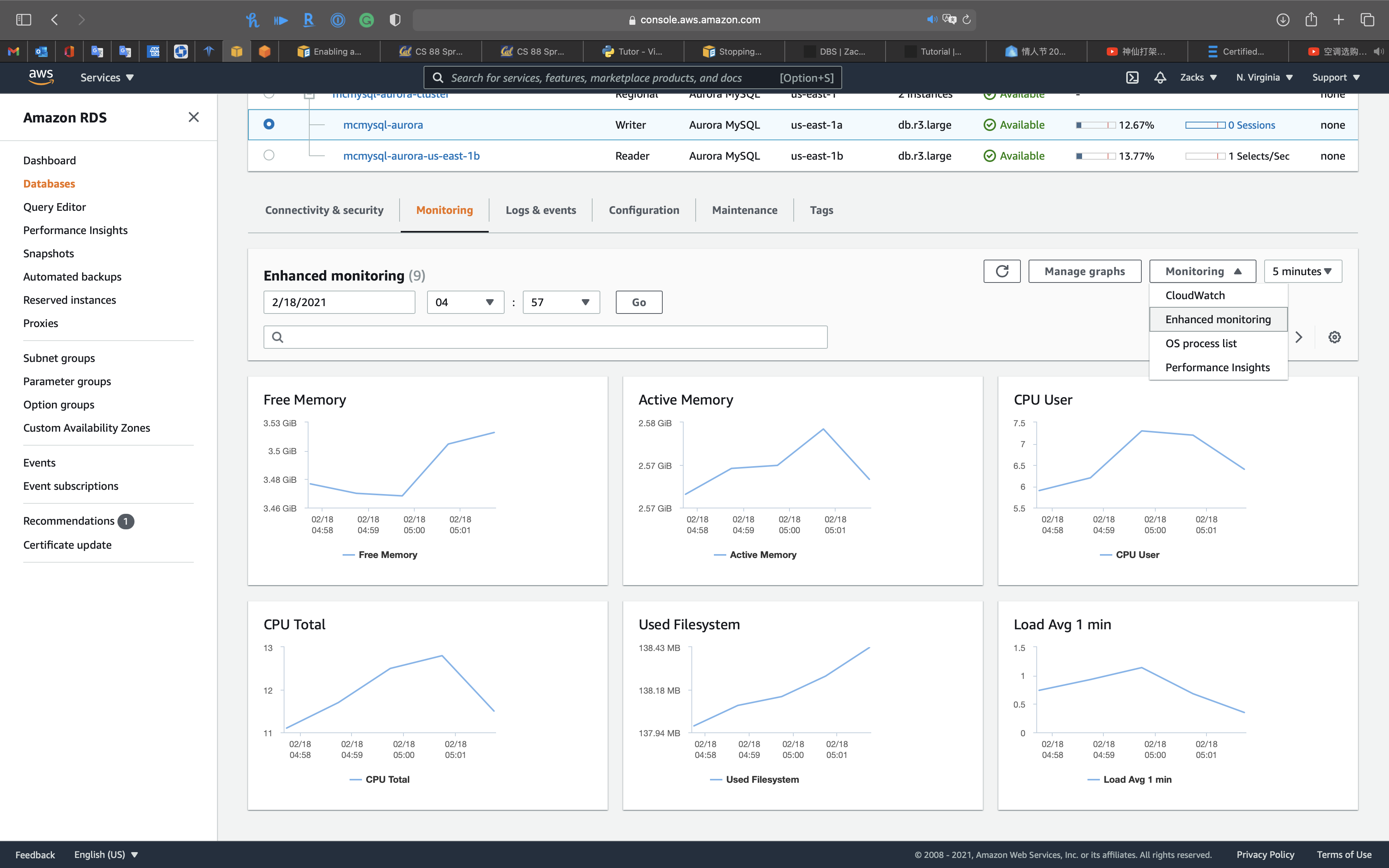

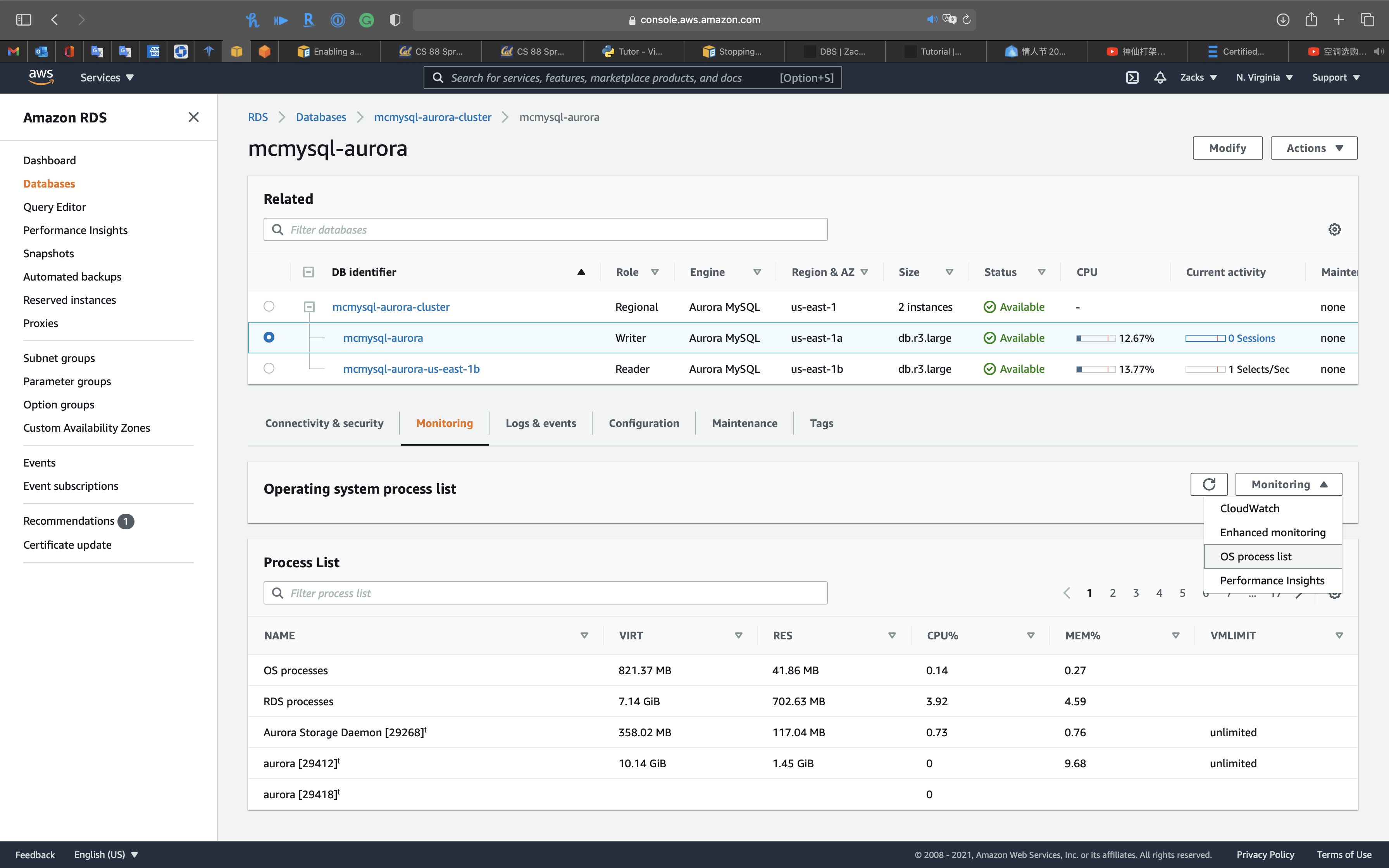

Enhanced monitoring & Performance insights

Enable Enhanced monitoring

Enhanced monitoring metrics are useful when you want to see how different processes or threads use the CPU.

You can select Enable Enhanced monitoring when you create or modify a database.
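Outside the console, Enhanced monitoring is controlled through the `MonitoringInterval` and `MonitoringRoleArn` parameters of the boto3 call `modify_db_instance`. The following is a minimal sketch that only builds and validates the request. The helper name, the 60-second default, and any identifiers passed in are illustrative assumptions.

```python
# Granularities accepted by MonitoringInterval, in seconds (0 disables it).
VALID_INTERVALS = (0, 1, 5, 10, 15, 30, 60)


def enhanced_monitoring_request(
    instance_id: str, role_arn: str, interval_seconds: int = 60
) -> dict:
    """Build keyword arguments for rds.modify_db_instance."""
    if interval_seconds not in VALID_INTERVALS:
        raise ValueError(f"interval must be one of {VALID_INTERVALS}")
    if interval_seconds != 0 and not role_arn:
        raise ValueError("a monitoring role ARN is required when enabling")
    return {
        "DBInstanceIdentifier": instance_id,
        "MonitoringInterval": interval_seconds,
        "MonitoringRoleArn": role_arn,
        "ApplyImmediately": True,
    }
```

The resulting dictionary would be passed as `boto3.client("rds").modify_db_instance(**request)`.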

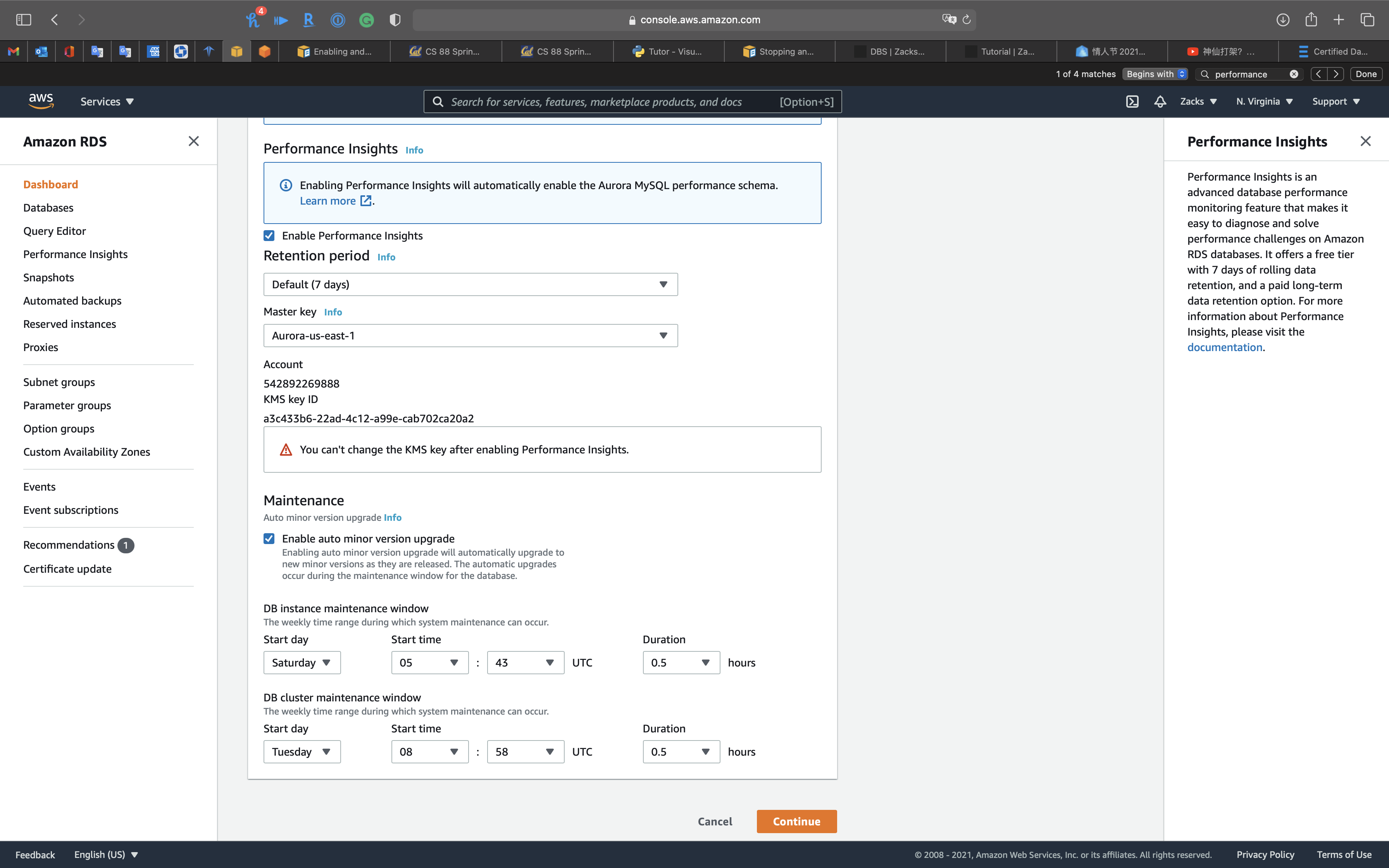

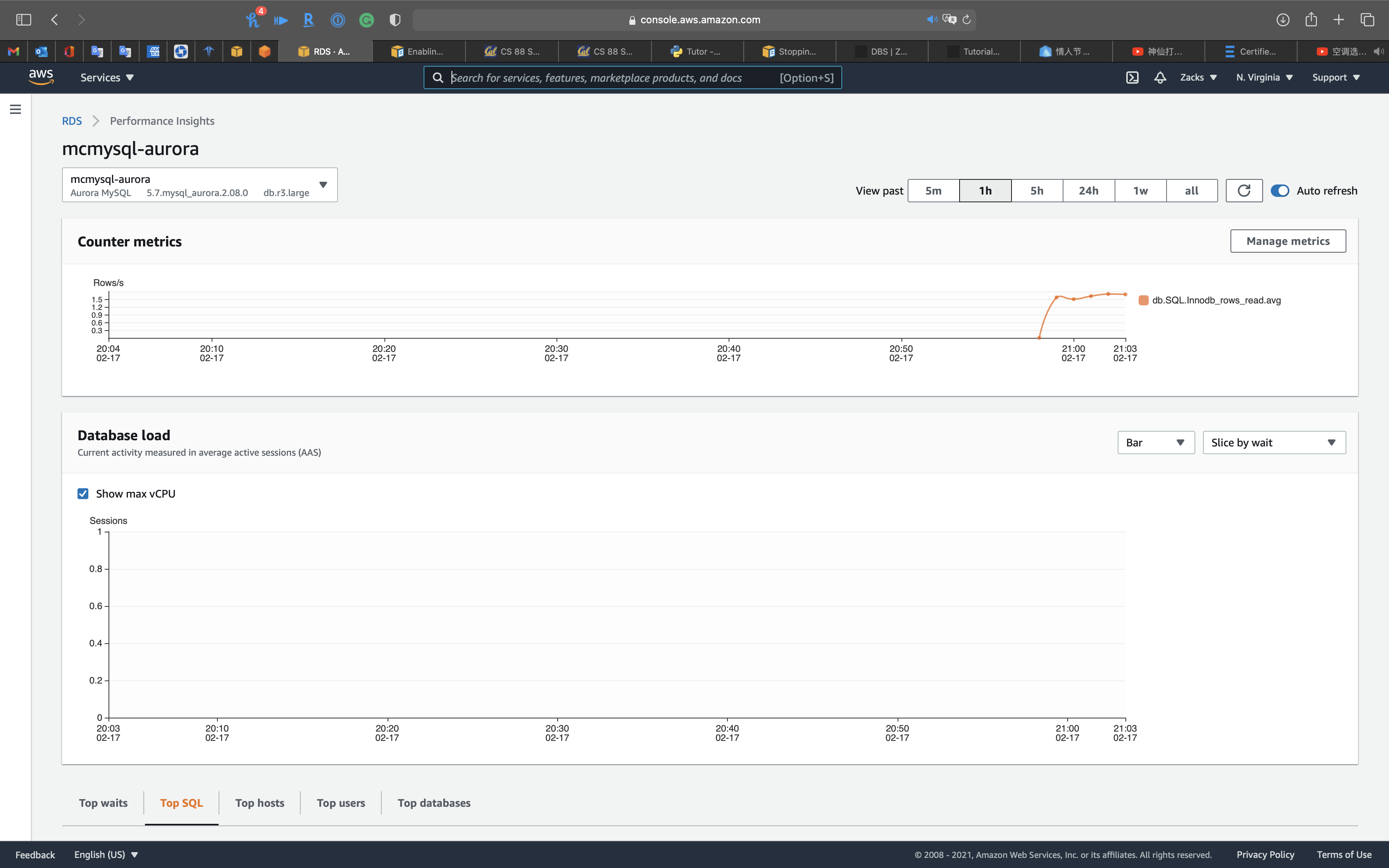

Performance Insights

Performance Insights is an advanced database performance monitoring feature that makes it easy to diagnose and solve performance challenges on Amazon RDS databases. It offers a free tier with 7 days of rolling data retention, and a paid long-term data retention option.

CloudWatch

Enhanced monitoring

OS process list

Performance insights: you can review

- Top waits

- Top SQL

- Top hosts

- Top users

- Top databases

Advanced Auditing with an Amazon Aurora MySQL DB cluster

Using advanced auditing with an Amazon Aurora MySQL DB cluster

You can use the high-performance Advanced Auditing feature in Amazon Aurora MySQL to audit database activity. To do so, you enable the collection of audit logs by setting several DB cluster parameters. When Advanced Auditing is enabled, you can use it to log any combination of supported events. You can view or download the audit logs to review them.

server_audit_logging

Enables or disables Advanced Auditing. This parameter defaults to OFF; set it to ON to enable Advanced Auditing.

server_audit_events

Contains the comma-delimited list of events to log. Events must be specified in all caps, and there should be no white space between the list elements, for example: CONNECT,QUERY_DDL. This parameter defaults to an empty string.

You can log any combination of the following events:

- CONNECT – Logs both successful and failed connections and also disconnections. This event includes user information.

- QUERY – Logs all queries in plain text, including queries that fail due to syntax or permission errors.

- QUERY_DCL – Similar to the QUERY event, but returns only data control language (DCL) queries (GRANT, REVOKE, and so on).

- QUERY_DDL – Similar to the QUERY event, but returns only data definition language (DDL) queries (CREATE, ALTER, and so on).

- QUERY_DML – Similar to the QUERY event, but returns only data manipulation language (DML) queries (INSERT, UPDATE, and so on, and also SELECT).

- TABLE – Logs the tables that were affected by query execution.
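The formatting rules for server_audit_events (all caps, comma-delimited, no white space, supported events only) are easy to get wrong by hand. The following is a small validation sketch, not part of Aurora itself. The function name is hypothetical, and the event set is taken from the list above.

```python
SUPPORTED_EVENTS = {
    "CONNECT", "QUERY", "QUERY_DCL", "QUERY_DDL", "QUERY_DML", "TABLE",
}


def validate_audit_events(value: str) -> list:
    """Check a server_audit_events value and return its events as a list."""
    if value == "":
        return []  # the parameter's default: nothing is logged
    if any(ch.isspace() for ch in value):
        raise ValueError("no white space is allowed between list elements")
    events = value.split(",")
    for event in events:
        # Lower-case names fail here because the set is all caps.
        if event not in SUPPORTED_EVENTS:
            raise ValueError(f"unsupported event: {event!r}")
    return events
```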

Best Practices

This topic includes information on best practices and options for using or migrating data to an Amazon Aurora PostgreSQL DB cluster.

Basic operational guidelines for Amazon Aurora

The following are basic operational guidelines that everyone should follow when working with Amazon Aurora. The Amazon RDS Service Level Agreement requires that you follow these guidelines:

- Monitor your memory, CPU, and storage usage. You can set up Amazon CloudWatch to notify you when usage patterns change or when you approach the capacity of your deployment. This way, you can maintain system performance and availability.

- If your client application is caching the Domain Name Service (DNS) data of your DB instances, set a time-to-live (TTL) value of less than 30 seconds. The underlying IP address of a DB instance can change after a failover. Thus, caching the DNS data for an extended time can lead to connection failures if your application tries to connect to an IP address that no longer is in service. Aurora DB clusters with multiple read replicas can experience connection failures also when connections use the reader endpoint and one of the read replica instances is in maintenance or is deleted.

- Test failover for your DB cluster to understand how long the process takes for your use case. Testing failover can help you ensure that the application that accesses your DB cluster can automatically connect to the new primary DB instance after failover.
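To measure how long a failover takes from the application's point of view, one option is to time how long reconnecting takes after the failover is triggered (for example with the boto3 call `failover_db_cluster`). The helper below is a sketch under assumptions: `time_until_reconnect` is a hypothetical name, and `connect` stands for whatever function your database driver uses to open a connection.

```python
import time
from typing import Callable


def time_until_reconnect(
    connect: Callable[[], object],
    timeout_seconds: float = 120.0,
    retry_delay: float = 1.0,
) -> float:
    """Retry `connect` until it succeeds; return the elapsed seconds."""
    start = time.monotonic()
    while True:
        try:
            connect()
            return time.monotonic() - start
        except Exception:  # driver-specific errors vary, so catch broadly
            if time.monotonic() - start >= timeout_seconds:
                raise TimeoutError("could not reconnect within the timeout")
            time.sleep(retry_delay)
```

Each call to `connect` performs a fresh DNS lookup only if the client does not cache DNS results, which is why the TTL guideline above matters for this measurement.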

Fast failover with Amazon Aurora PostgreSQL

There are several things you can do to make a failover perform faster with Aurora PostgreSQL. This section discusses each of the following ways:

- Aggressively set TCP keepalives to ensure that longer running queries that are waiting for a server response will be stopped before the read timeout expires in the event of a failure.

- Set the Java DNS caching timeouts aggressively to ensure the Aurora read-only endpoint can properly cycle through read-only nodes on subsequent connection attempts.

- Set the timeout variables used in the JDBC connection string as low as possible. Use separate connection objects for short and long running queries.

- Use the provided read and write Aurora endpoints to establish a connection to the cluster.

- Use RDS APIs to test application response on server side failures and use a packet dropping tool to test application response for client-side failures.
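The list above is written with JDBC in mind. For Python clients built on libpq (psycopg2, for example), a comparable effect can be sketched with libpq's own keepalive and timeout connection parameters. The helper name and the specific values are illustrative assumptions to tune for your workload, and the sketch assumes none of the values contain spaces.

```python
def fast_failover_dsn(host: str, dbname: str, user: str, port: int = 5432) -> str:
    """Build a libpq connection string with aggressive keepalive settings."""
    params = {
        "host": host,
        "port": port,
        "dbname": dbname,
        "user": user,
        "connect_timeout": 2,      # seconds to wait when opening a connection
        "keepalives": 1,           # enable TCP keepalives
        "keepalives_idle": 1,      # idle seconds before the first probe
        "keepalives_interval": 1,  # seconds between probes
        "keepalives_count": 5,     # failed probes before the connection drops
    }
    return " ".join(f"{key}={value}" for key, value in params.items())
```

Pass the cluster (writer) endpoint as `host` for read-write connections and the reader endpoint for read-only ones, in line with the guideline to use the provided Aurora endpoints.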

Aurora Serverless

Using Amazon Aurora Serverless v1

Using Amazon Aurora Serverless v2 (preview)

Amazon Aurora FAQs

Serverless is an Aurora capacity option separate from Provisioned, which means you cannot simply enable or disable it on an existing cluster. You must migrate your existing DB.

Q: Can I migrate an existing Aurora DB cluster to Aurora Serverless?

Yes, you can restore a snapshot taken from an existing Aurora provisioned cluster into an Aurora Serverless DB Cluster (and vice versa).
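The snapshot restore described in this answer maps to the boto3 call `restore_db_cluster_from_snapshot` with `EngineMode` set to `serverless`. The sketch below only builds the request. The helper name, the capacity defaults, and the auto-pause setting are assumptions for illustration, and valid capacity values depend on the engine.

```python
def serverless_restore_request(
    new_cluster_id: str,
    snapshot_id: str,
    engine: str,
    min_capacity: int = 2,
    max_capacity: int = 8,
) -> dict:
    """Build keyword arguments for rds.restore_db_cluster_from_snapshot."""
    if min_capacity > max_capacity:
        raise ValueError("min_capacity must not exceed max_capacity")
    return {
        "DBClusterIdentifier": new_cluster_id,
        "SnapshotIdentifier": snapshot_id,
        "Engine": engine,
        "EngineMode": "serverless",
        "ScalingConfiguration": {
            "MinCapacity": min_capacity,
            "MaxCapacity": max_capacity,
            "AutoPause": True,  # assumption: pause when the cluster is idle
        },
    }
```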

Amazon Aurora Serverless v1

Aurora Serverless v1 is designed for the following use cases:

- Infrequently used applications – You have an application that is only used for a few minutes several times per day or week, such as a low-volume blog site. With Aurora Serverless v1, you pay for only the database resources that you consume on a per-second basis.

- New applications – You’re deploying a new application and you’re unsure about the instance size you need. By using Aurora Serverless v1, you can create a database endpoint and have the database autoscale to the capacity requirements of your application.

- Variable workloads – You’re running a lightly used application, with peaks of 30 minutes to several hours a few times each day, or several times per year. Examples are applications for human resources, budgeting, and operational reporting applications. With Aurora Serverless v1, you no longer need to provision for peak or average capacity.

- Unpredictable workloads – You’re running daily workloads that have sudden and unpredictable increases in activity. An example is a traffic site that sees a surge of activity when it starts raining. With Aurora Serverless v1, your database autoscales capacity to meet the needs of the application’s peak load and scales back down when the surge of activity is over.

- Development and test databases – Your developers use databases during work hours but don’t need them on nights or weekends. With Aurora Serverless v1, your database automatically shuts down when it’s not in use.

- Multi-tenant applications – With Aurora Serverless v1, you don’t have to individually manage database capacity for each application in your fleet. Aurora Serverless v1 manages individual database capacity for you.

Troubleshooting for Aurora

No space left on device

You might encounter one of the following error messages.

- From Amazon Aurora MySQL:

ERROR 3 (HY000): Error writing file '/rdsdbdata/tmp/XXXXXXXX' (Errcode: 28 - No space left on device)

- From Amazon Aurora PostgreSQL:

ERROR: could not write block XXXXXXXX of temporary file: No space left on device.

Each DB instance in an Amazon Aurora DB cluster uses local solid state drive (SSD) storage to store temporary tables for a session. This local storage for temporary tables doesn’t automatically grow like the Aurora cluster volume. Instead, the amount of local storage is limited. The limit is based on the DB instance class for DB instances in your DB cluster.

To show the amount of storage available for temporary tables and logs, you can use the CloudWatch metric FreeLocalStorage. This metric is for per-instance temporary volumes, not the cluster volume. For more information on available metrics, see Monitoring Amazon Aurora metrics with Amazon CloudWatch.
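The FreeLocalStorage metric can also be read with the CloudWatch API. The following is a sketch under assumptions: the helper names, the one-hour window, and the threshold are illustrative. The metric is queried per DB instance, matching the note above that it is not a cluster-volume metric.

```python
from datetime import datetime, timedelta, timezone
from typing import Iterable, Mapping


def low_local_storage(
    datapoints: Iterable[Mapping[str, float]], threshold_bytes: float
) -> bool:
    """Return True if any datapoint's minimum fell below the threshold."""
    minimums = [point["Minimum"] for point in datapoints]
    return bool(minimums) and min(minimums) < threshold_bytes


def fetch_free_local_storage(instance_id: str, minutes: int = 60) -> list:
    """Fetch FreeLocalStorage minimums (in bytes) for one DB instance."""
    import boto3  # imported lazily; needs AWS credentials when called

    cloudwatch = boto3.client("cloudwatch")
    end = datetime.now(timezone.utc)
    response = cloudwatch.get_metric_statistics(
        Namespace="AWS/RDS",
        MetricName="FreeLocalStorage",
        Dimensions=[{"Name": "DBInstanceIdentifier", "Value": instance_id}],
        StartTime=end - timedelta(minutes=minutes),
        EndTime=end,
        Period=300,
        Statistics=["Minimum"],
    )
    return response["Datapoints"]
```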

In some cases, you can’t modify your workload to reduce the amount of temporary storage required. If so, modify your DB instances to use a DB instance class that has more local SSD storage. For more information, see DB instance classes.