AWS Neptune

Introduction

Fast, reliable graph database built for the cloud

Amazon Neptune is a fast, reliable, fully managed graph database service that makes it easy to build and run applications that work with highly connected datasets. The core of Amazon Neptune is a purpose-built, high-performance graph database engine optimized for storing billions of relationships and querying the graph with milliseconds latency. Amazon Neptune supports popular graph models Property Graph and W3C’s RDF, and their respective query languages Apache TinkerPop Gremlin and SPARQL, allowing you to easily build queries that efficiently navigate highly connected datasets. Neptune powers graph use cases such as recommendation engines, fraud detection, knowledge graphs, drug discovery, and network security.

Amazon Neptune is highly available, with read replicas, point-in-time recovery, continuous backup to Amazon S3, and replication across Availability Zones. Neptune is secure with support for HTTPS encrypted client connections and encryption at rest. Neptune is fully managed, so you no longer need to worry about database management tasks such as hardware provisioning, software patching, setup, configuration, or backups.

Loading data into Amazon Neptune

There are several different ways to load graph data into Amazon Neptune:

- If you only need to load a relatively small amount of data, you can use queries such as SPARQL

INSERTstatements or GremlinaddVandaddEsteps. - You can take advantage of Neptune’s Bulk Loader to ingest large amounts of data that reside in external files. The bulk loader command is faster and has less overhead than the query-language commands. It is optimized for large datasets, and supports both RDF (Resource Description Framework) data and Gremlin data.

- You can use AWS Database Migration Service (AWS DMS) to import data from other data stores (see Using AWS Database Migration Service to load data into Amazon Neptune from a different data store, and AWS Database Migration Service User Guide).

Neptune’s Bulk Loader

Amazon Neptune provides a Loader command for loading data from external files directly into a Neptune DB instance. You can use this command instead of executing a large number of INSERT statements, addV and addE steps, or other API calls.

The Neptune Loader command is faster, has less overhead, is optimized for large datasets, and supports both Gremlin data and the RDF (Resource Description Framework) data used by SPARQL.

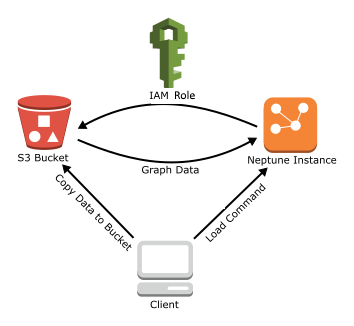

The following diagram shows an overview of the load process:

Here are the steps of the loading process:

- Copy the data files to an Amazon Simple Storage Service (Amazon S3) bucket.

- Create an IAM role with Read and List access to the bucket.

- Create an Amazon S3 VPC endpoint.

- Start the Neptune loader by sending a request via HTTP to the Neptune DB instance.

- The Neptune DB instance assumes the IAM role to load the data from the bucket.

IAM Role and Amazon S3 Access

Prerequisites: IAM Role and Amazon S3 Access

Loading data from an Amazon Simple Storage Service (Amazon S3) bucket requires an AWS Identity and Access Management (IAM) role that has access to the bucket. Amazon Neptune assumes this role to load the data.

Load Data Formats

The Amazon Neptune Load API currently requires specific formats for incoming data. The following formats are available, and are listed with their identifiers for the Neptune loader API in parentheses.

- CSV format (

csv) for property graph / Gremlin - N -Triples (

ntriples) format for RDF / SPARQL - N-Quads (

nquads) format for RDF / SPARQL - RDF/XML (

rdfxml) format for RDF / SPARQL - Turtle (turtle) format for RDF / SPARQL

Important

All files must be encoded in UTF-8 format. If a file is not in UTF format, Neptune tries to load it anyway as UTF-8 data.

Load data using DMS

Using AWS Database Migration Service to load data into Amazon Neptune from a different data store