AWS DeepRacer Lab

Demo: Reinforcement Learning with AWS DeepRacer

Task Details

- Build a new vehicle

- Train a model

- Evaluate the model

- Join the AWS Virtual Circuit

DeepRacer Configuration

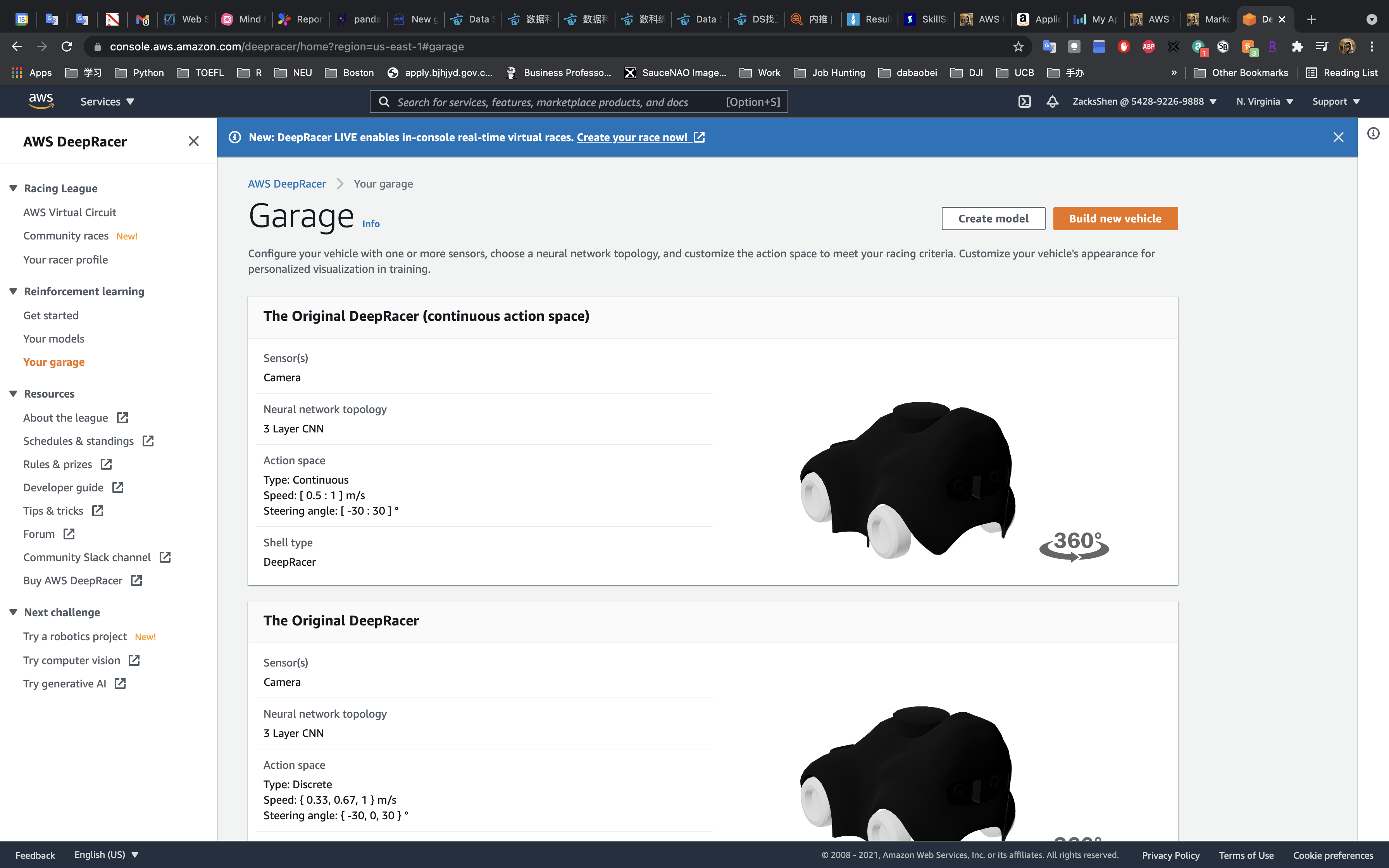

Services -> DeepRacer -> Your garage

Build a new vehicle

Click on Build new vehicle

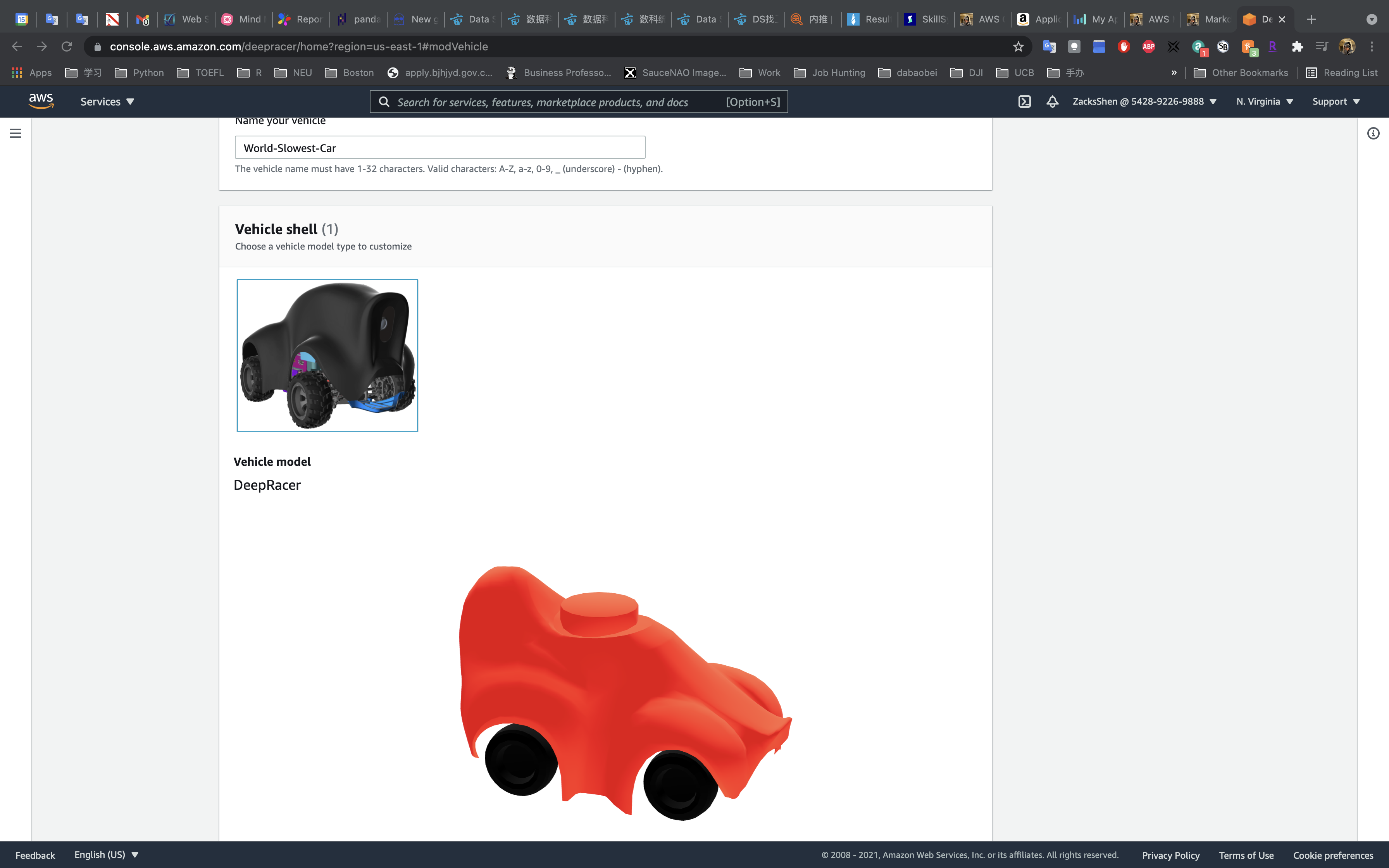

Step 1: Personalization

- Name your vehicle: any name

- Vehicle: choose any one

- Color: choose a color

Click on Next

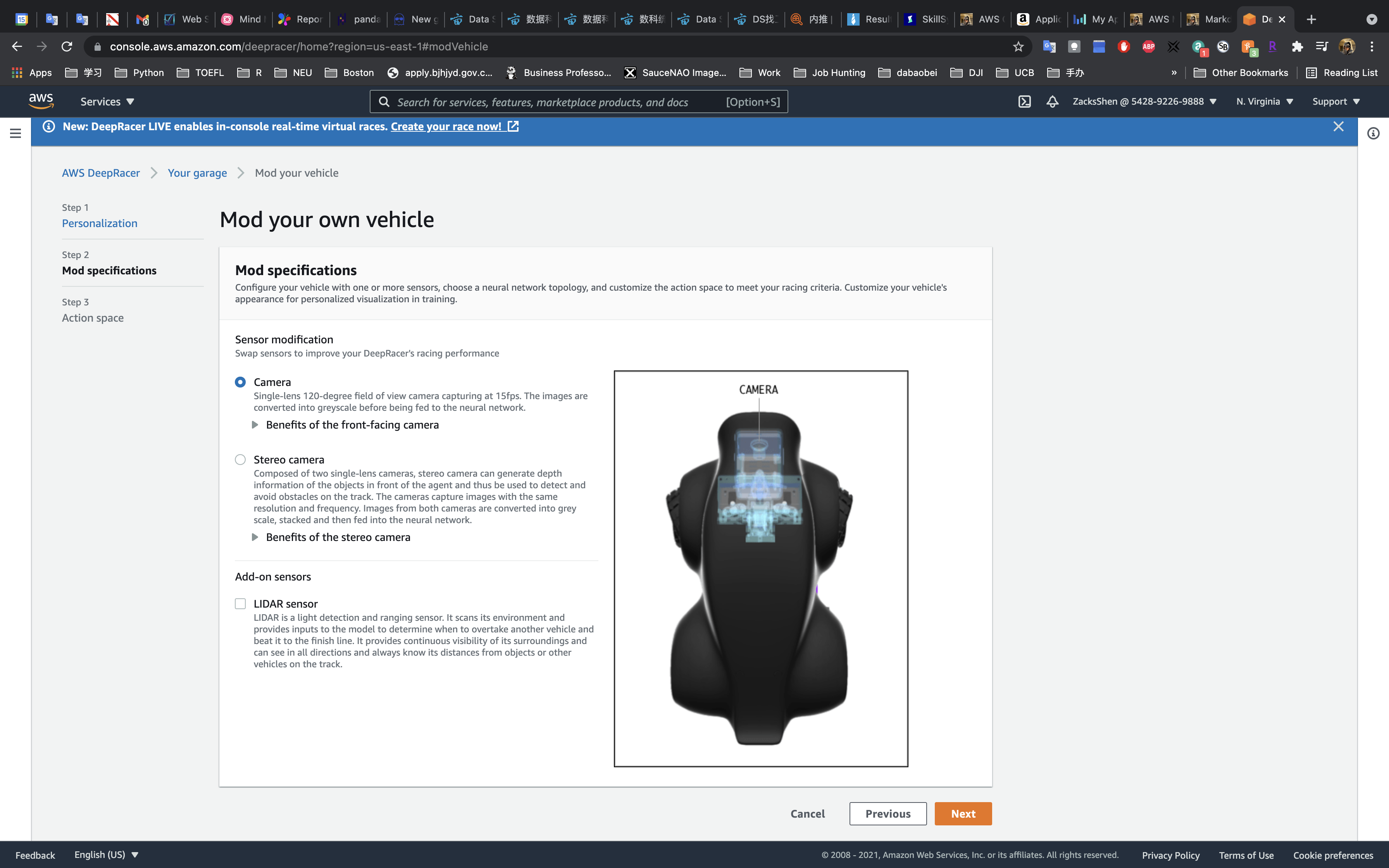

Step 2: Mod specifications

- Sensor modification:

Camera, or Stereo camera for gathering depth information

- Add-on sensor:

LIDAR sensor for gathering distance information

For the first model, choose only Camera to keep it simple.

Click on Next

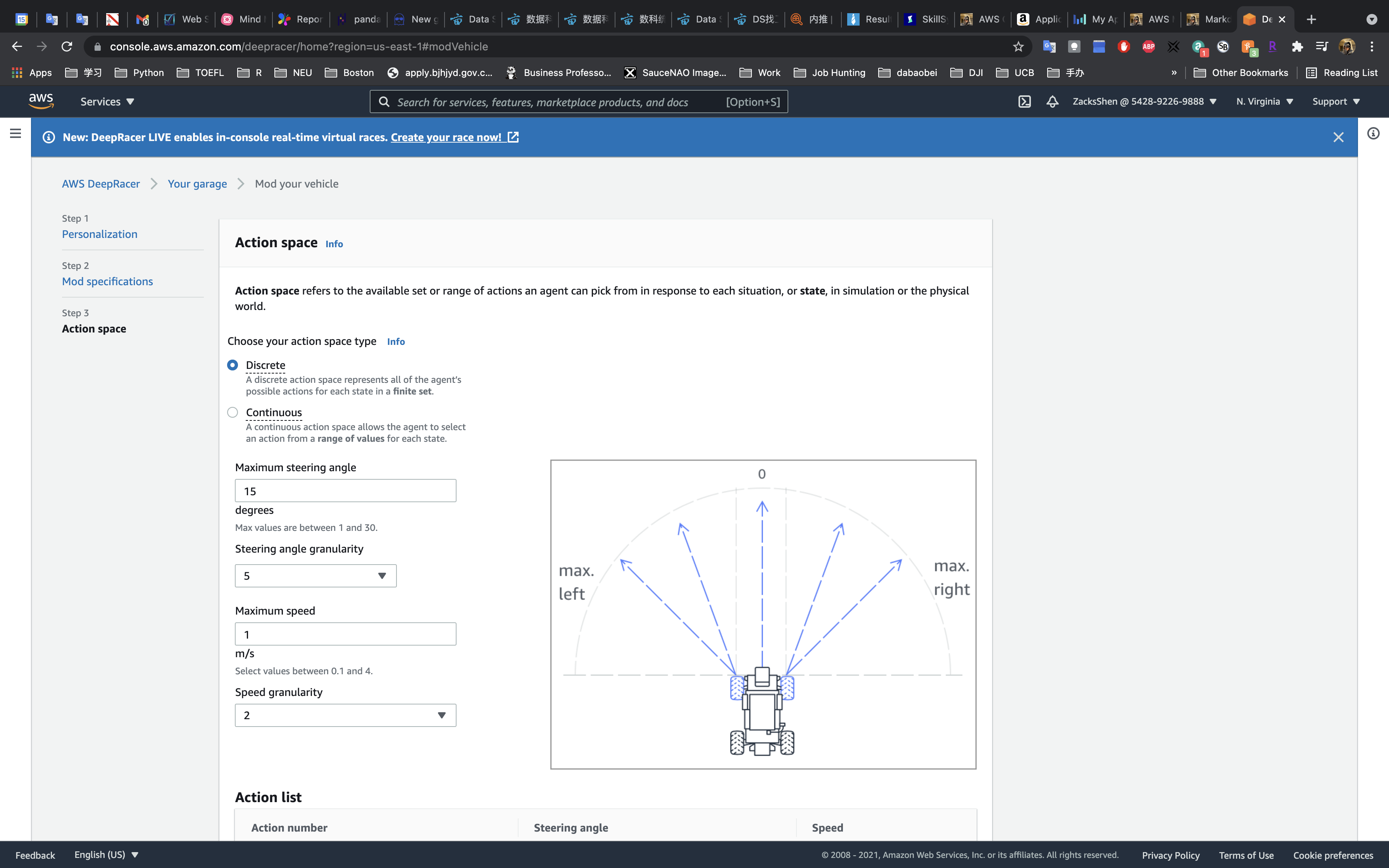

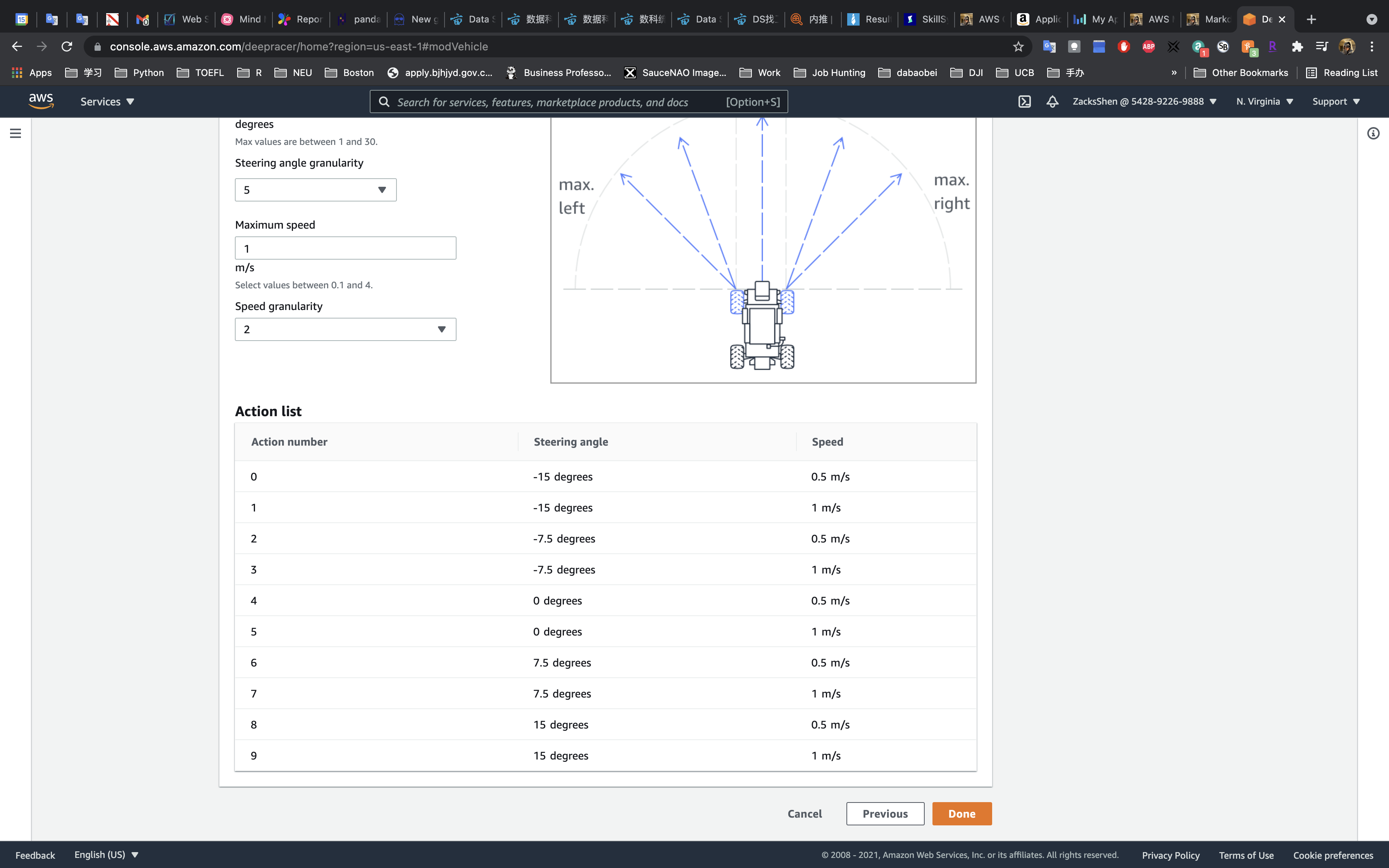

Step 3: Action space

- Choose your action space type

Discrete

- Maximum steering angle

15 degrees

Click on Done
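A discrete action space is a fixed list of (steering angle, speed) pairs that the agent picks from at each step. The sketch below enumerates such a list from the 15-degree maximum steering angle. The steering granularity of 3 and the speeds of 0.5 and 1.0 m/s are assumed values for illustration, and `build_discrete_action_space` is a hypothetical helper, not part of any DeepRacer API.

```python
from itertools import product


def build_discrete_action_space(max_steering_deg, steering_granularity, speeds):
    """Enumerate (steering_angle, speed) pairs for a discrete action space.

    Hypothetical helper: steering angles are spaced evenly from
    -max_steering_deg to +max_steering_deg, both ends included.
    """
    if steering_granularity < 2:
        raise ValueError("steering_granularity must be at least 2")
    step = 2 * max_steering_deg / (steering_granularity - 1)
    angles = [-max_steering_deg + i * step for i in range(steering_granularity)]
    # Every steering angle is combined with every speed.
    return [{"steering_angle": a, "speed": s} for a, s in product(angles, speeds)]


# Assumed values: granularity 3 and two speeds in m/s.
actions = build_discrete_action_space(15, 3, [0.5, 1.0])
for index, action in enumerate(actions):
    print(index, action)
```

With a granularity of 3 the agent can only steer at -15, 0, or +15 degrees; a higher granularity adds intermediate angles at the cost of a larger action space to learn.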

Train your car

Services -> DeepRacer -> Your models

Create model

Click on Create model

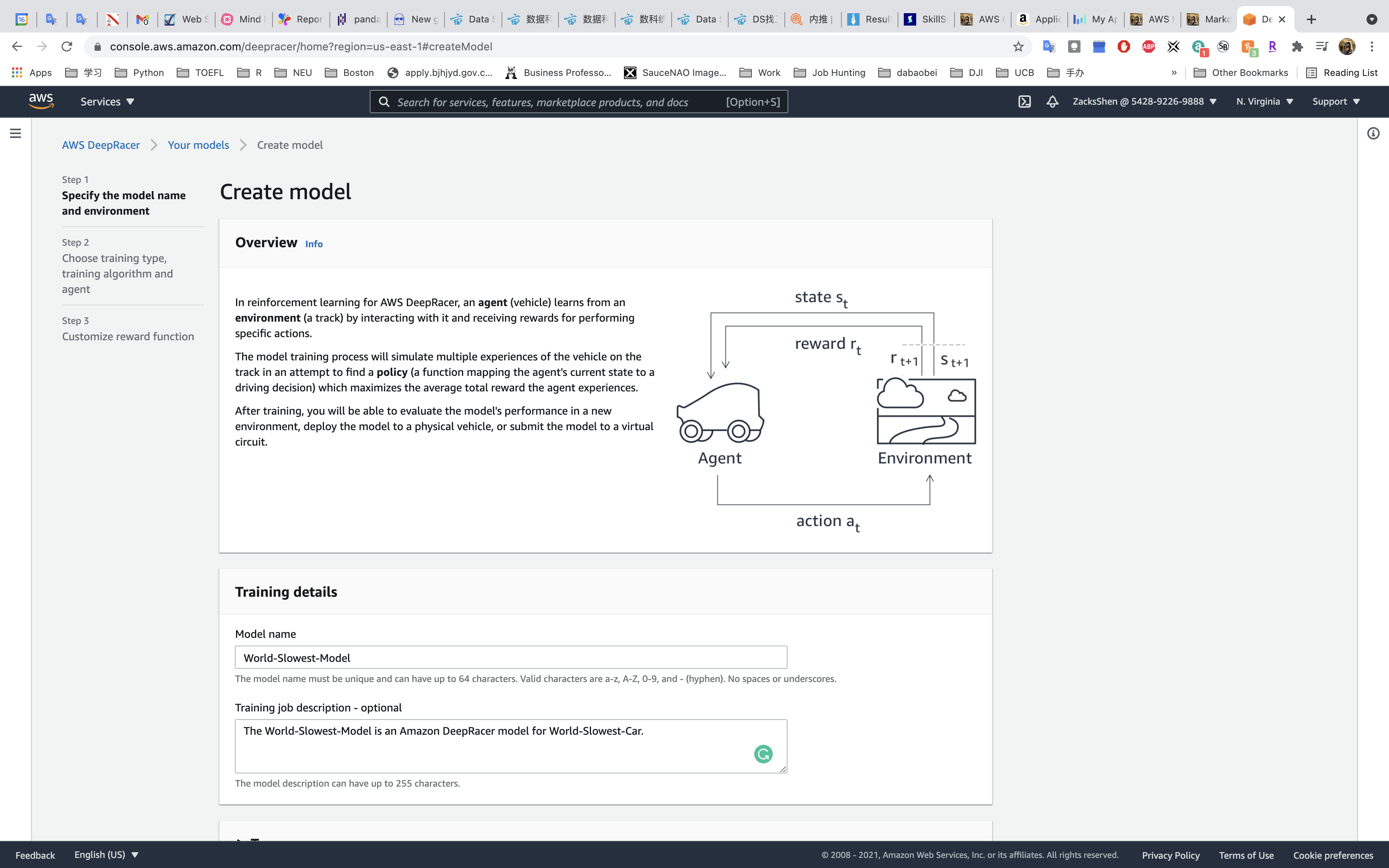

Step 1: Specify the model name and environment

- Model name: type your model name

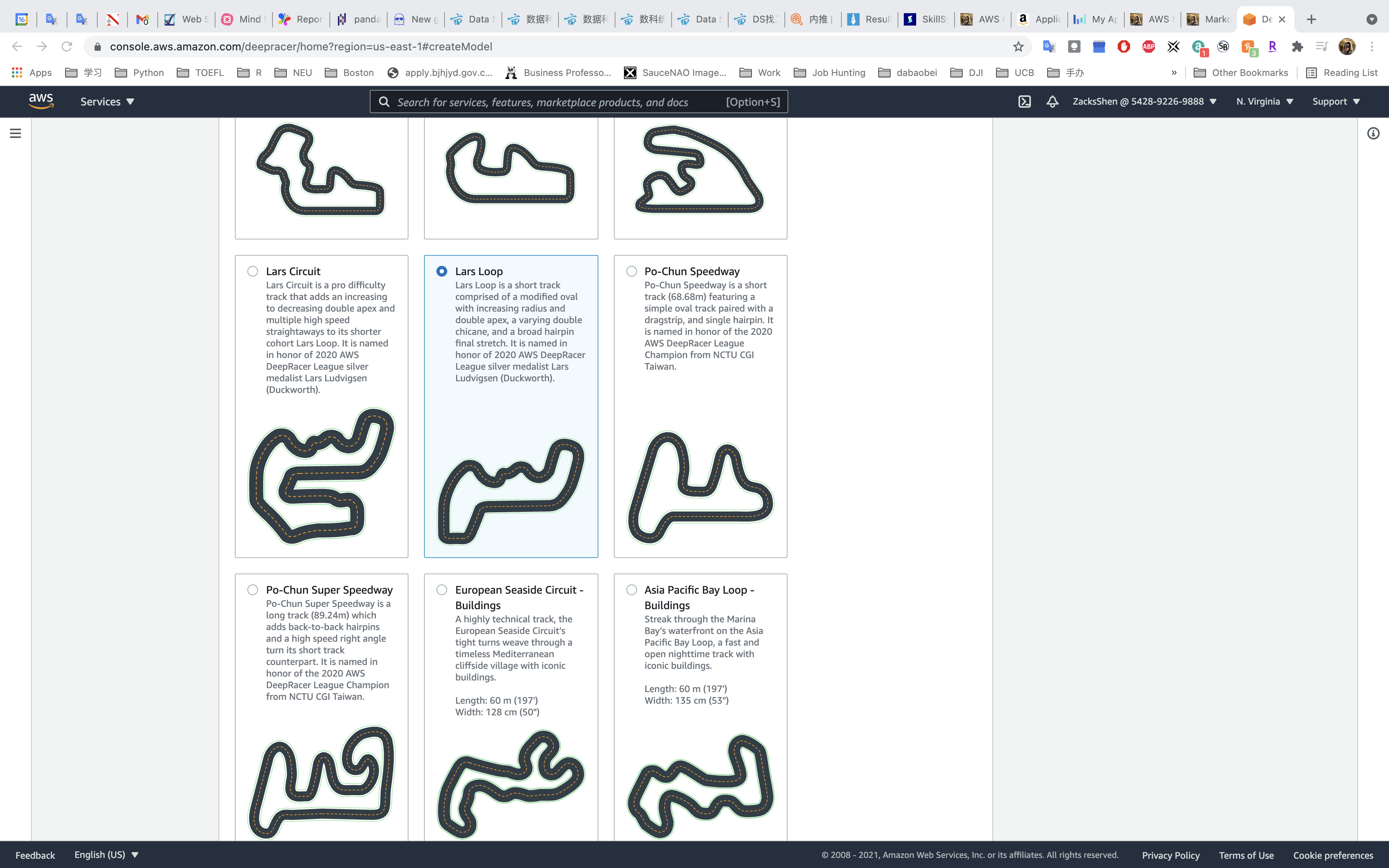

- Environment simulation:

Lars Loop

Click on Next

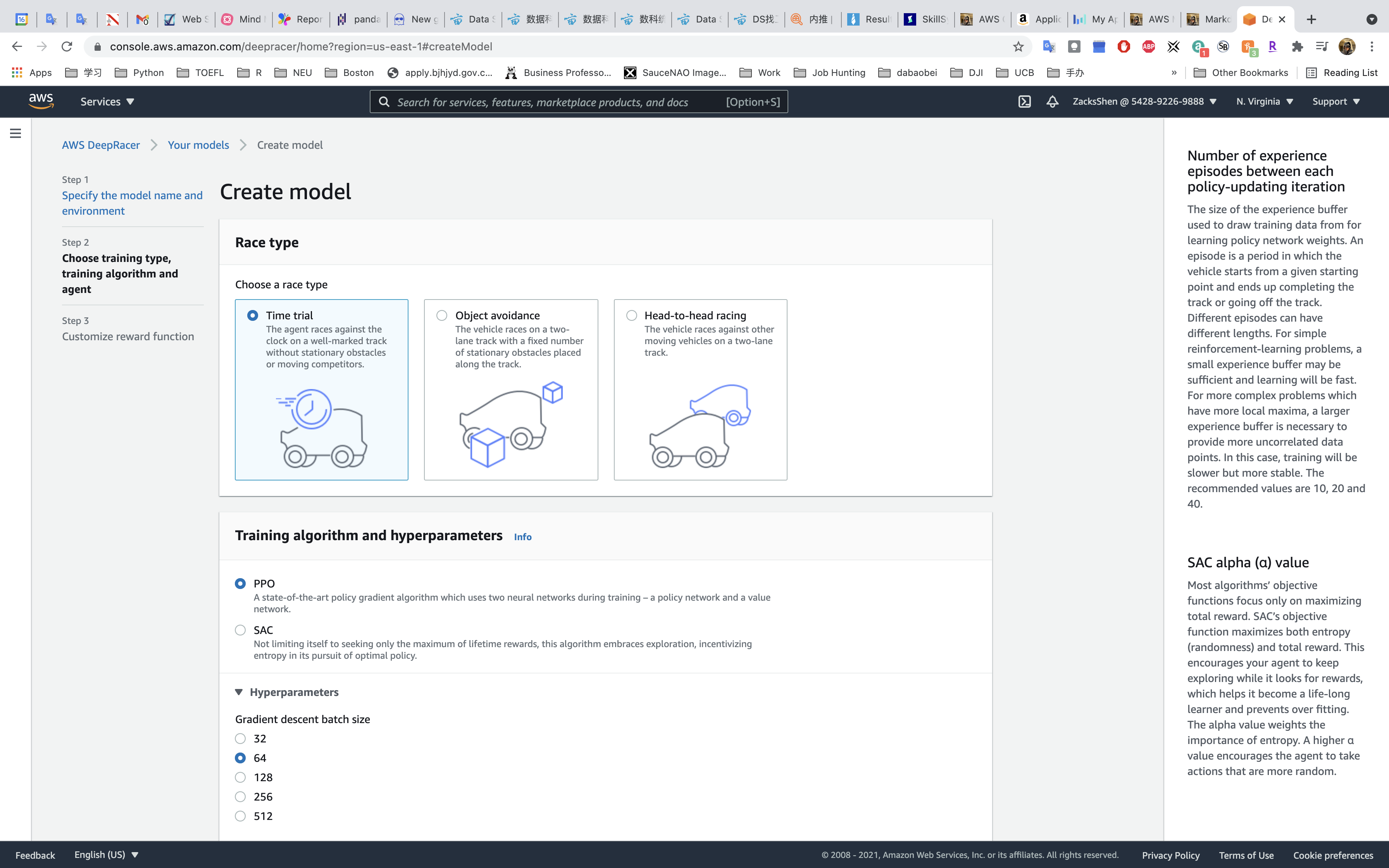

Step 2: Choose training type, training algorithm and agent

- Race type:

Time trial

- Training algorithm and hyperparameters:

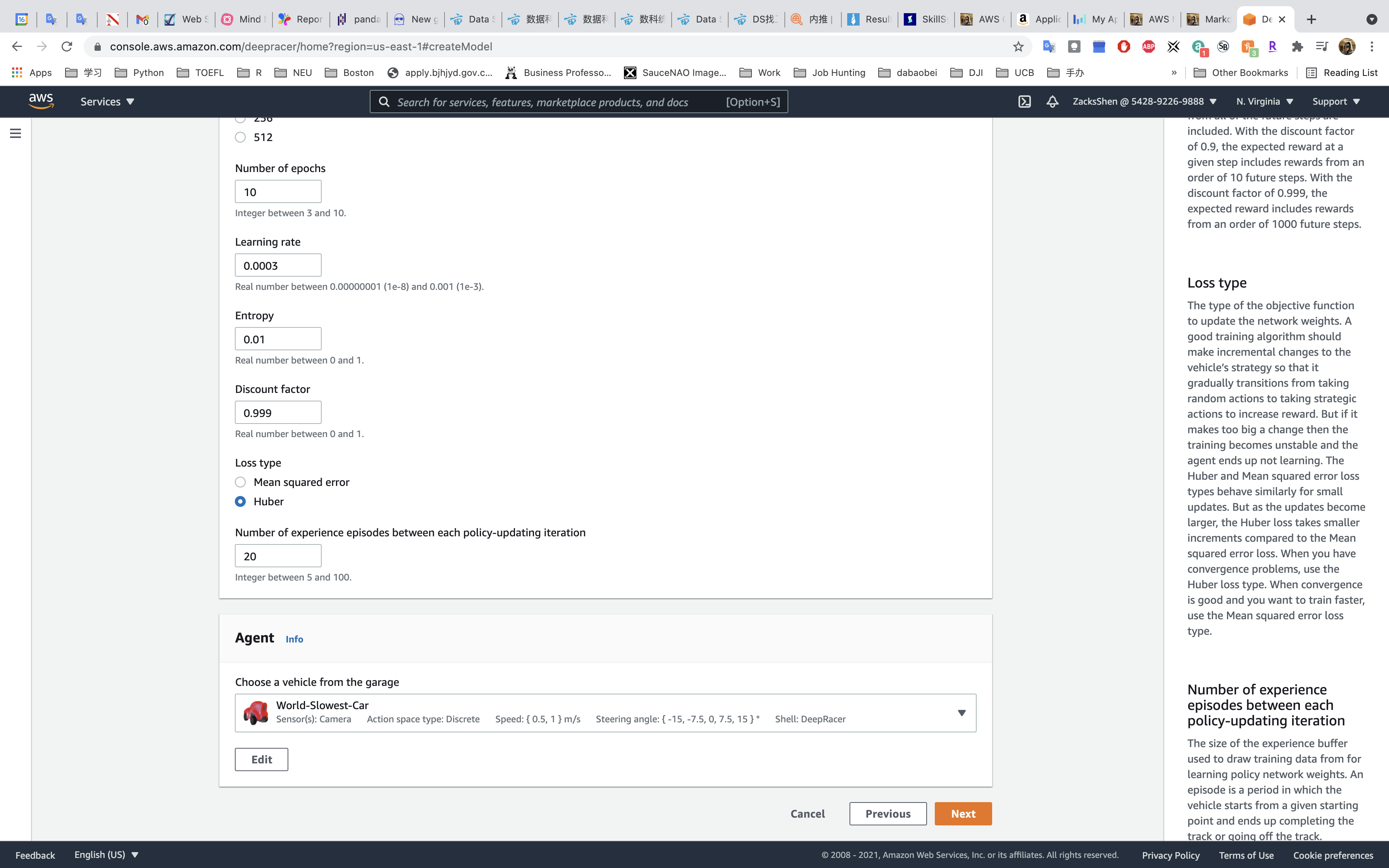

PPO

- Hyperparameters:

- Gradient descent batch size:

64


- Agent: your vehicle

Click on Next
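The gradient descent batch size controls how many experiences are drawn from the experience buffer for each update of the network weights. A minimal sketch of shuffled mini-batch sampling with a batch size of 64, assuming a plain Python list stands in for the experience buffer. `iterate_minibatches` is a hypothetical helper, and the PPO update that DeepRacer actually performs on each batch is not shown.

```python
import random


def iterate_minibatches(experiences, batch_size, seed=None):
    """Yield shuffled mini-batches of batch_size items (the last may be smaller).

    Hypothetical helper: a list stands in for the real experience buffer.
    """
    rng = random.Random(seed)
    indices = list(range(len(experiences)))
    rng.shuffle(indices)  # random order breaks up step-to-step correlation
    for start in range(0, len(indices), batch_size):
        yield [experiences[i] for i in indices[start:start + batch_size]]


# 200 made-up experiences split into batches of 64
buffer = [{"step": i} for i in range(200)]
batches = list(iterate_minibatches(buffer, batch_size=64, seed=0))
print([len(b) for b in batches])  # [64, 64, 64, 8]
```

Sampling at random rather than in order keeps consecutive, highly similar steps of one episode from dominating a single update.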

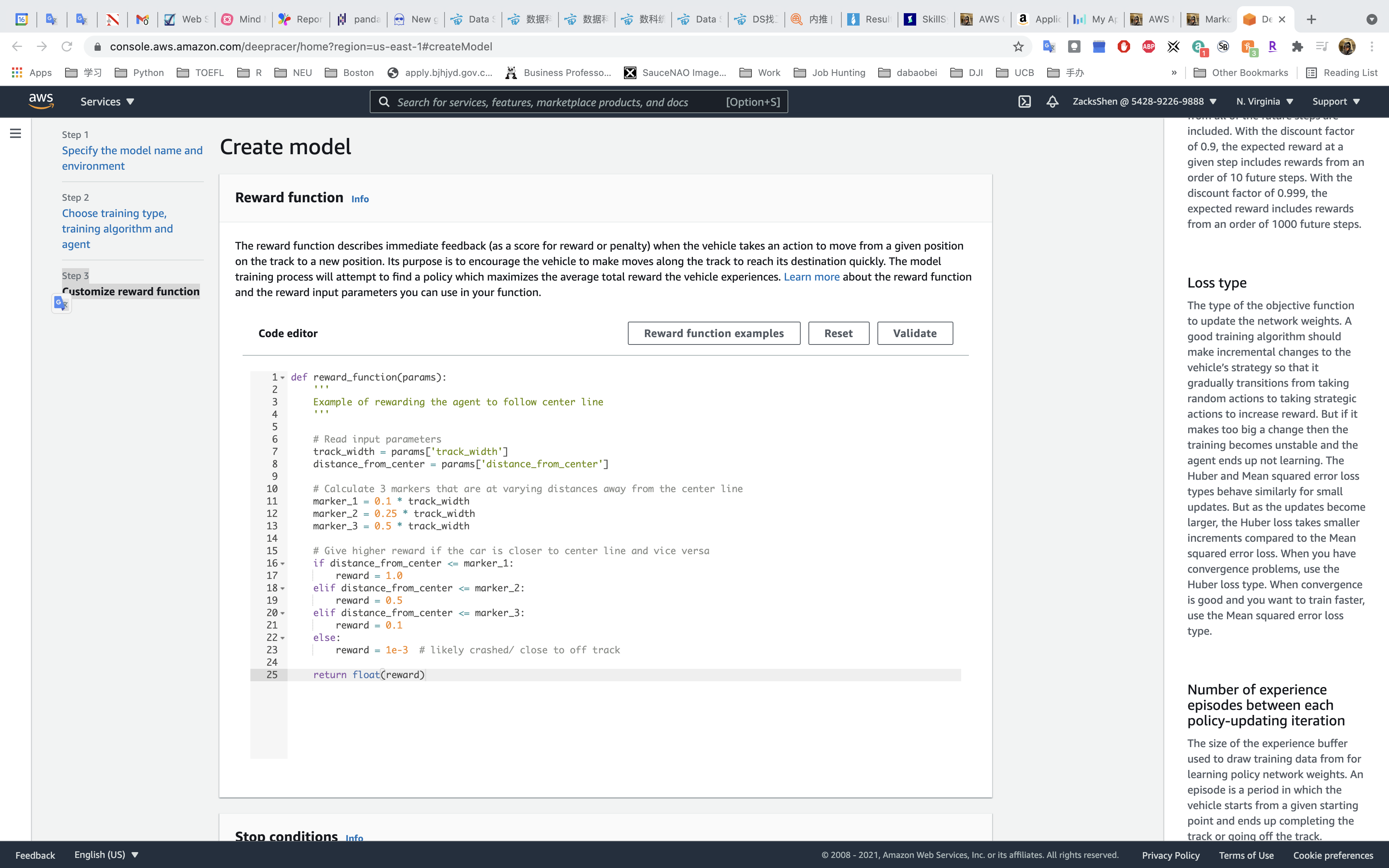

Step 3: Customize reward function

Use the default reward function.

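The sample reward function has the signature `def reward_function(params):`. It receives a dictionary of input parameters, `params`, and returns a numeric reward.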
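For reference, here is a sketch of the default "follow the center line" sample function, written from memory of the DeepRacer console, so it may differ in detail from the current default. It assumes `params` carries `track_width` and `distance_from_center` (both in meters) and gives a higher reward the closer the car stays to the center line.

```python
def reward_function(params):
    """Reward the agent for staying close to the center line.

    Sketch of the console's default sample; verify against the console.
    """
    # Read input parameters
    track_width = params["track_width"]
    distance_from_center = params["distance_from_center"]

    # Three markers at increasing distances from the center line
    marker_1 = 0.1 * track_width
    marker_2 = 0.25 * track_width
    marker_3 = 0.5 * track_width

    # Higher reward the closer the car is to the center line
    if distance_from_center <= marker_1:
        reward = 1.0
    elif distance_from_center <= marker_2:
        reward = 0.5
    elif distance_from_center <= marker_3:
        reward = 0.1
    else:
        reward = 1e-3  # likely off track or about to leave it

    return float(reward)


# Example call with hand-written parameters (not real telemetry)
print(reward_function({"track_width": 1.0, "distance_from_center": 0.2}))  # 0.5
```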

Click on Validate

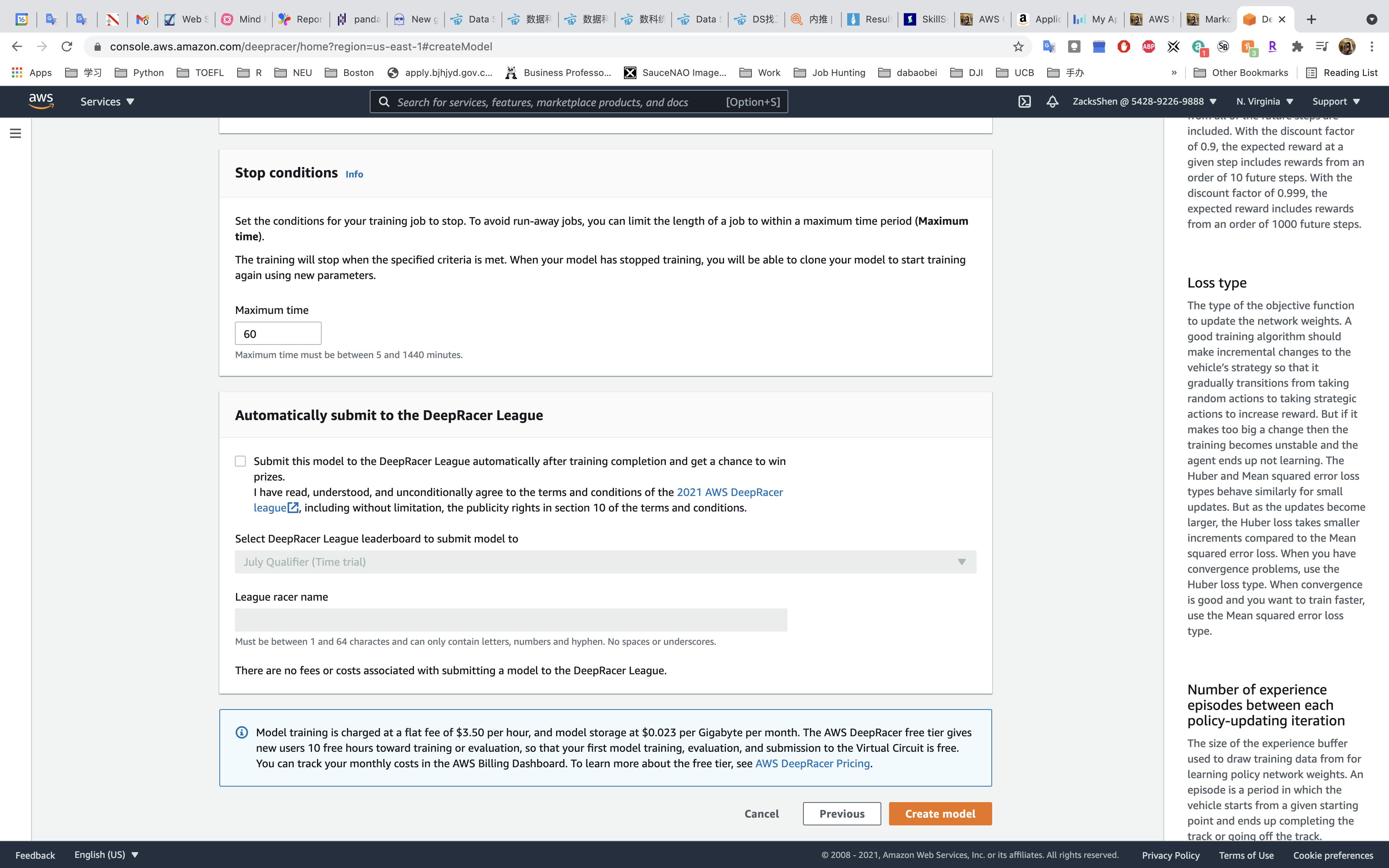

Uncheck the option to automatically submit the model to the AWS DeepRacer League.

- Stop conditions:

Maximum time: 60 minutes

Click on Create model

Initialization takes about 6 minutes.

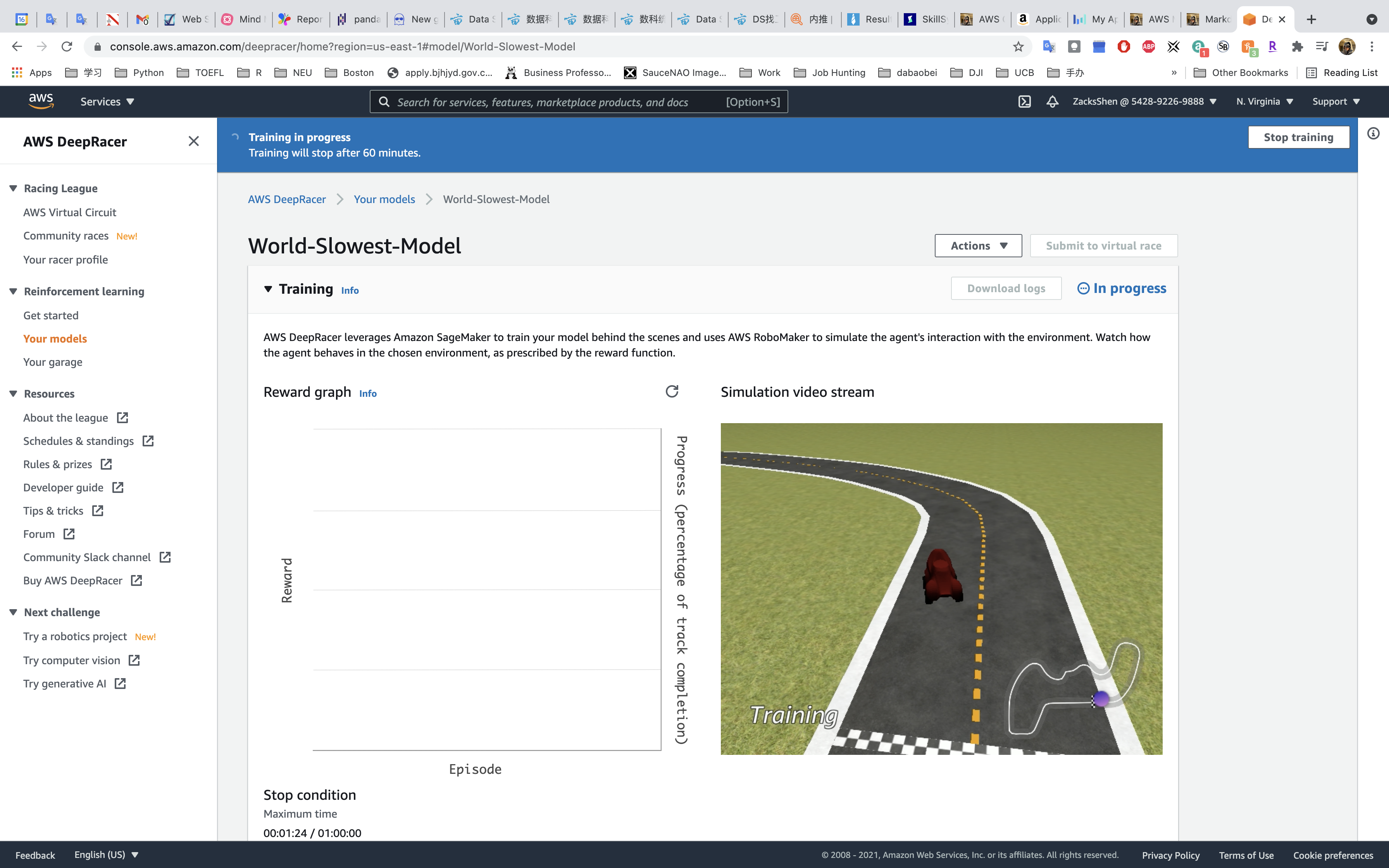

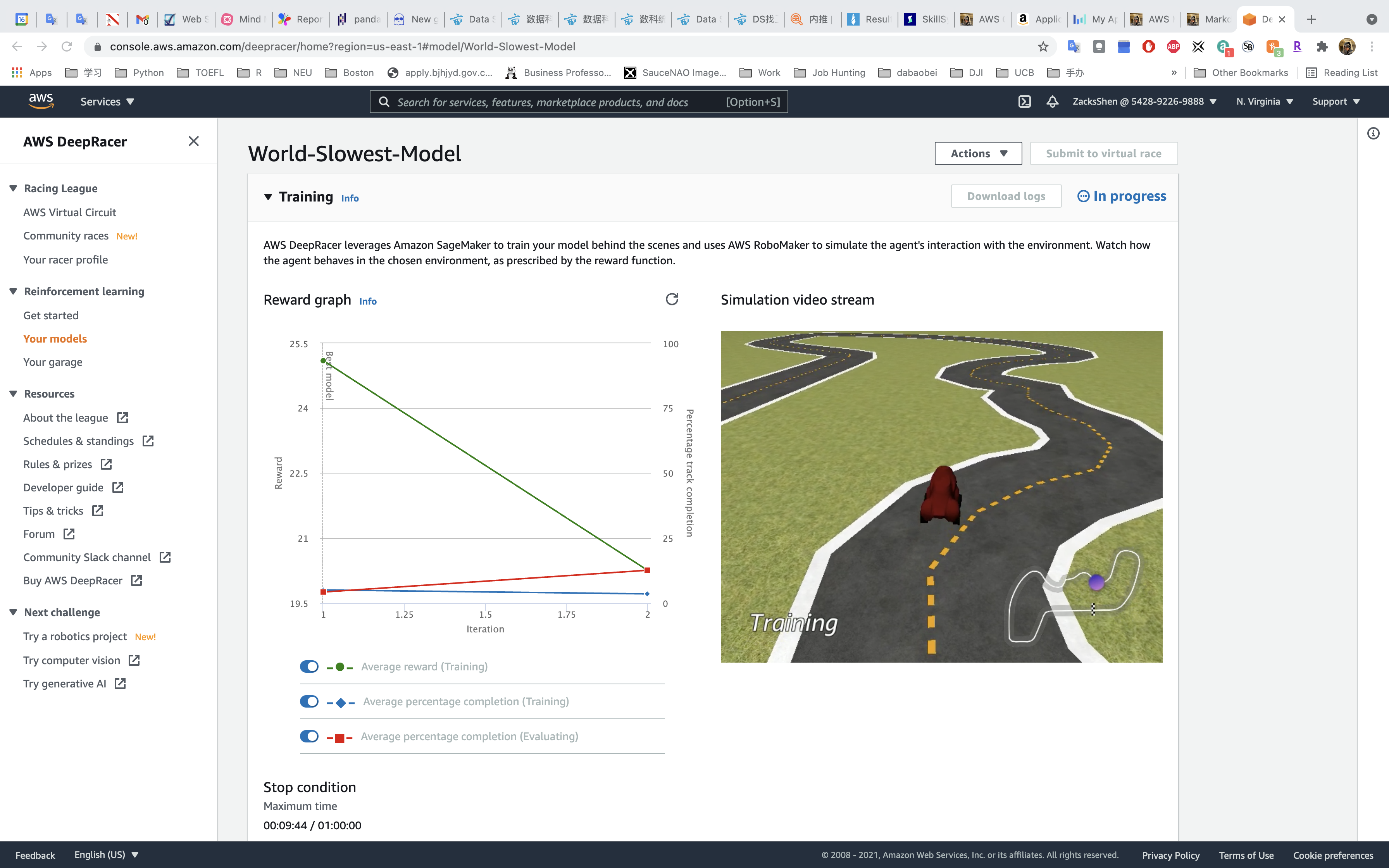

Wait until the model is trained. You can come back once training reaches the stop condition (60 minutes).

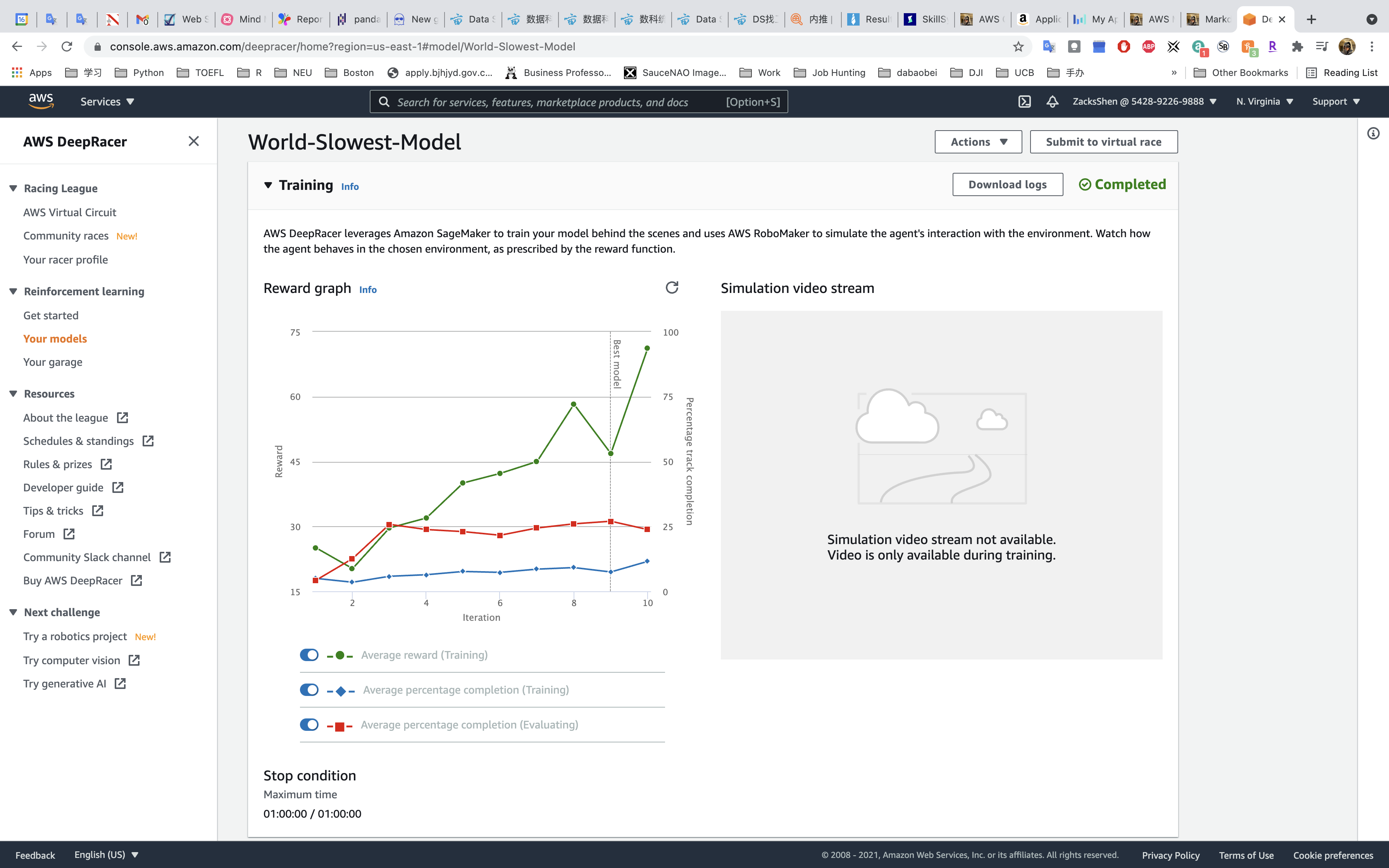

Completed

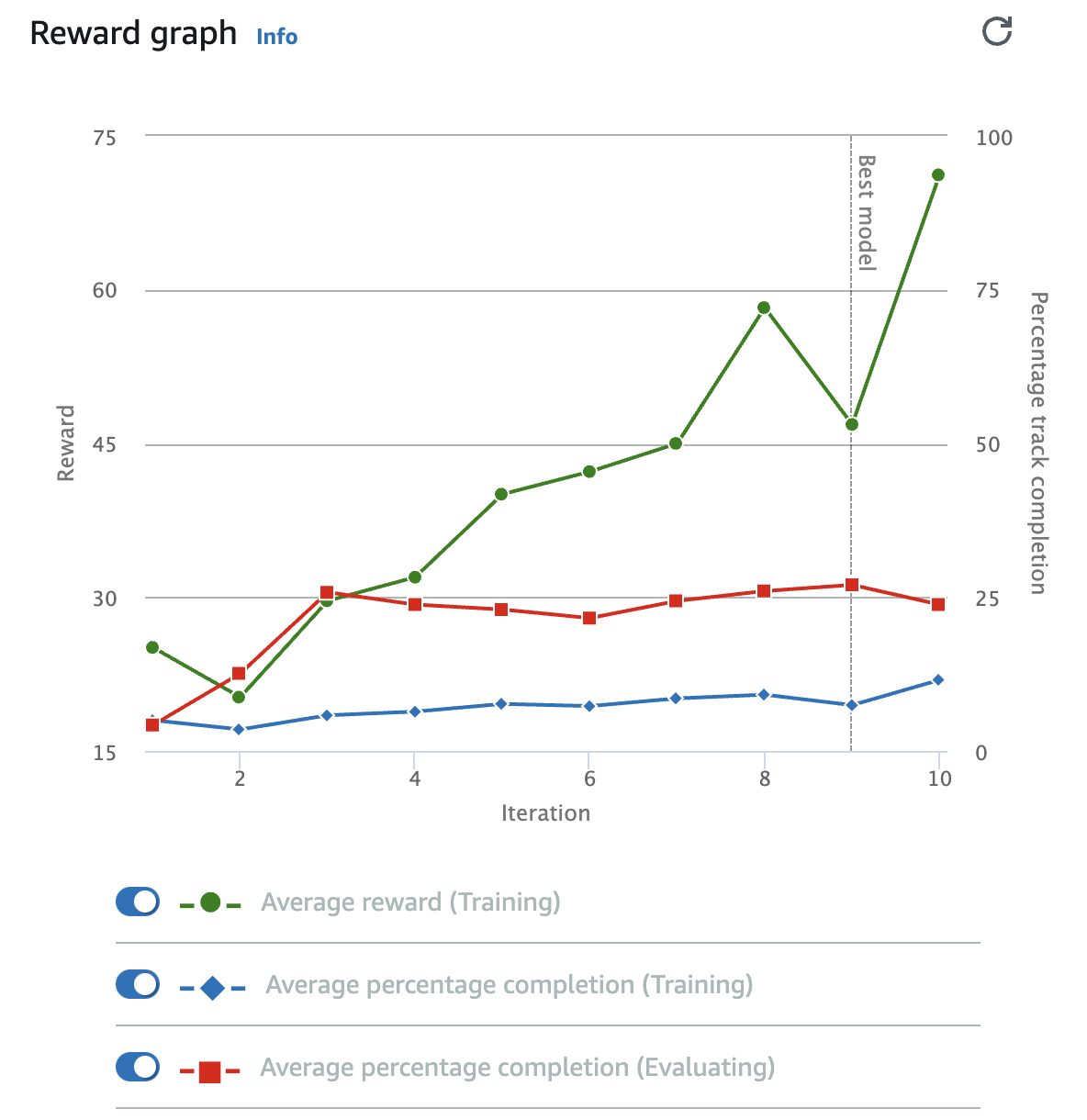

Find the following on your reward graph:

- Average reward

- Average percentage completion (training)

- Average percentage completion (evaluation)

- Best model line

- Reward primary y-axis

- Percentage track completion secondary y-axis

- Iteration x-axis

Chart Explanation

Exercise Solution

To get a sense of how well your training is going, watch the reward graph. Here is a list of its parts and what they do:

- Average reward

- This line shows the average reward the agent earns during a training iteration, calculated by averaging the reward earned across all episodes in that iteration. An episode begins at the starting line and ends when the agent completes one loop around the track, leaves the track, or collides with an object. Toggle the switch to hide this data.

- Average percentage completion (training)

- The training line shows the average percentage of the track completed by the agent across all training episodes in each training iteration. It reflects the performance of the vehicle while experience is being gathered.

- Average percentage completion (evaluation)

- While the model is being updated, the performance of the existing model is evaluated. The evaluation graph line is the average percentage of the track completed by the agent in all episodes run during the evaluation period.

- Best model line

- This line allows you to see which of your model iterations had the highest average progress during the evaluation. The checkpoint for this iteration will be stored. A checkpoint is a snapshot of a model that is captured after each training (policy-updating) iteration.

- Reward primary y-axis

- This shows the reward earned during a training iteration. To read the exact value of a reward, hover your mouse over the data point on the graph.

- Percentage track completion secondary y-axis

- This shows you the percentage of the track the agent completed during a training iteration.

- Iteration x-axis

- This shows the number of iterations completed during your training job.
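The averages described in this list can be reproduced from per-episode records. A sketch, assuming each episode is a dict with hypothetical keys `iteration`, `reward` and `progress` (percent of the track completed). `summarize_iterations` and `best_iteration` are illustrative helpers, not console or SDK functions, and the sample episodes are made up. In the console the best model line is based on evaluation episodes; here the same helpers are simply applied to a small example list.

```python
from collections import defaultdict


def summarize_iterations(episodes):
    """Average reward and track completion per iteration.

    Each episode is a hypothetical dict with keys 'iteration',
    'reward' (total reward) and 'progress' (percent, 0-100).
    """
    totals = defaultdict(lambda: {"reward": 0.0, "progress": 0.0, "count": 0})
    for ep in episodes:
        t = totals[ep["iteration"]]
        t["reward"] += ep["reward"]
        t["progress"] += ep["progress"]
        t["count"] += 1
    # Average over all episodes that belong to the same iteration
    return {
        it: {
            "avg_reward": t["reward"] / t["count"],
            "avg_progress": t["progress"] / t["count"],
        }
        for it, t in sorted(totals.items())
    }


def best_iteration(summary):
    """Iteration with the highest average progress (the 'best model line')."""
    return max(summary, key=lambda it: summary[it]["avg_progress"])


# Made-up episodes, two per iteration
episodes = [
    {"iteration": 1, "reward": 10.0, "progress": 20.0},
    {"iteration": 1, "reward": 14.0, "progress": 30.0},
    {"iteration": 2, "reward": 30.0, "progress": 55.0},
    {"iteration": 2, "reward": 26.0, "progress": 45.0},
]
summary = summarize_iterations(episodes)
print(summary)
print(best_iteration(summary))  # 2
```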

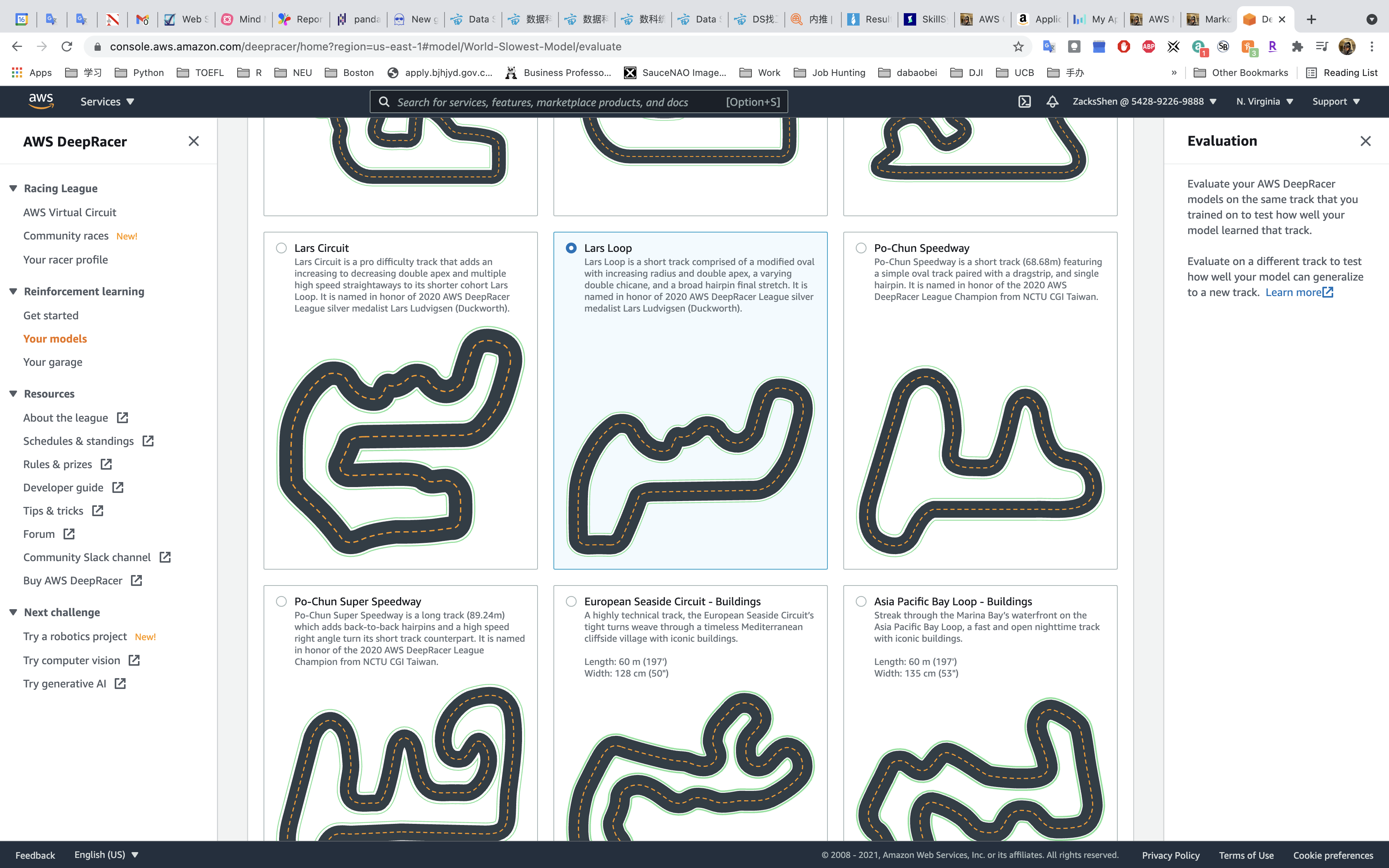

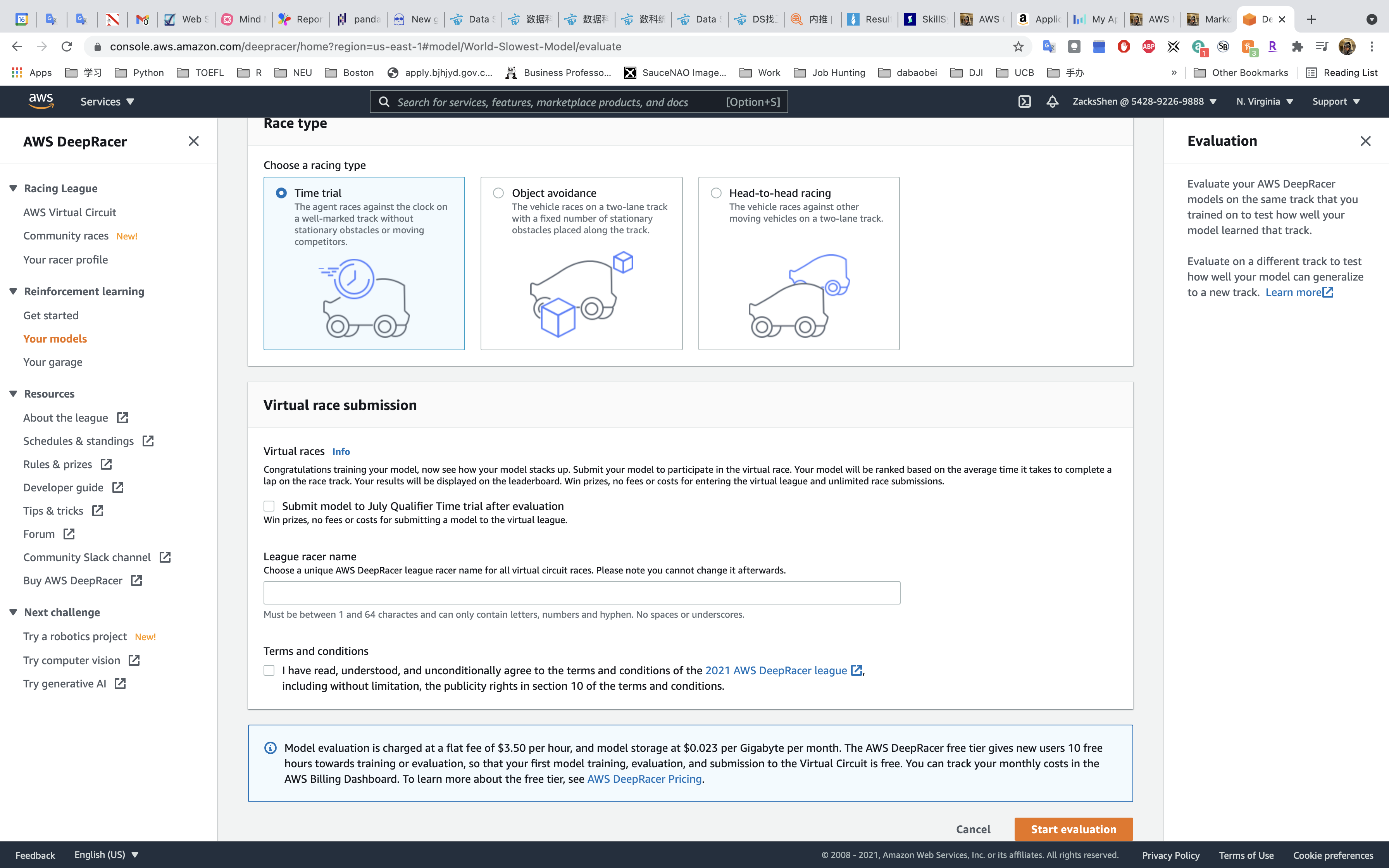

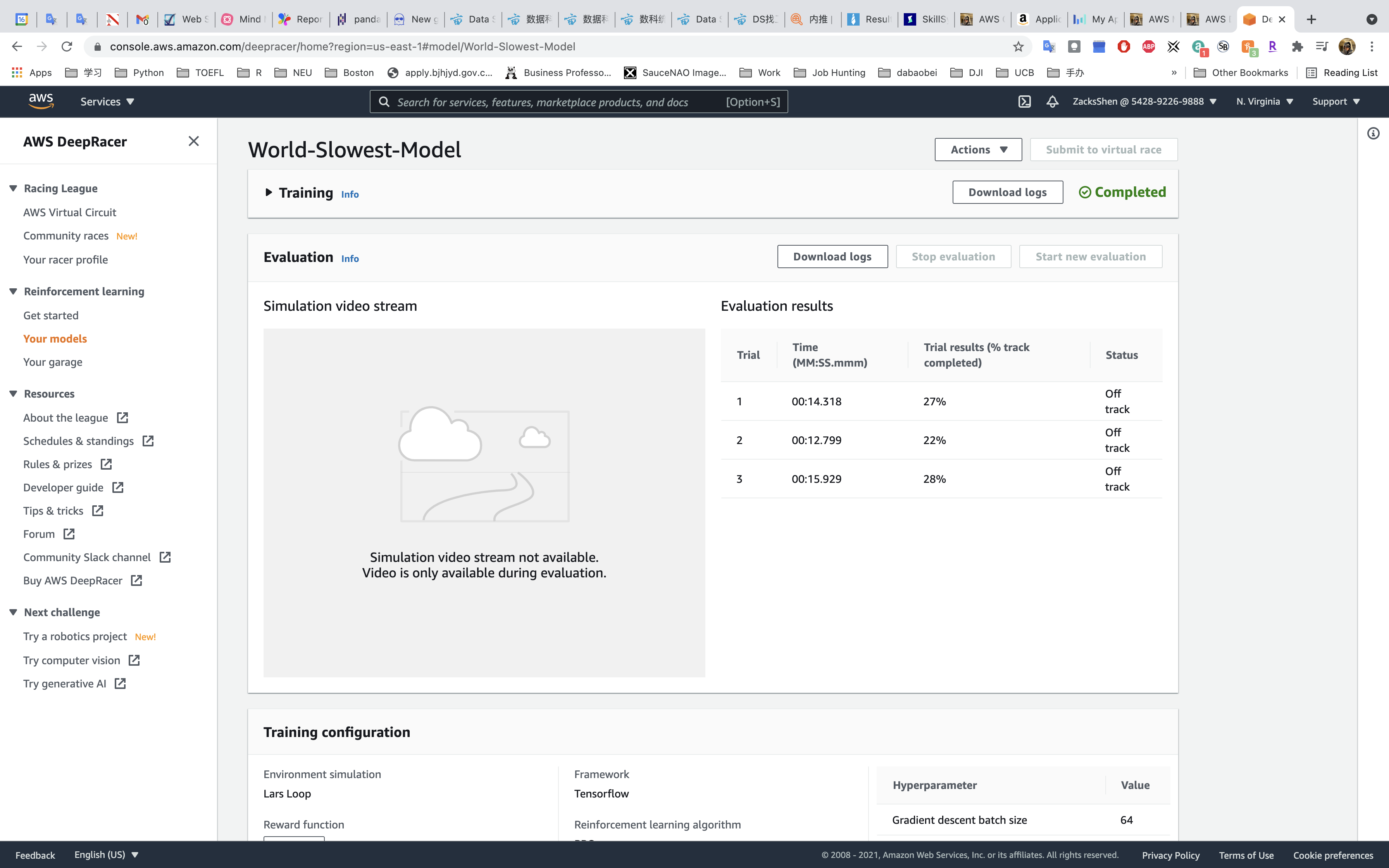

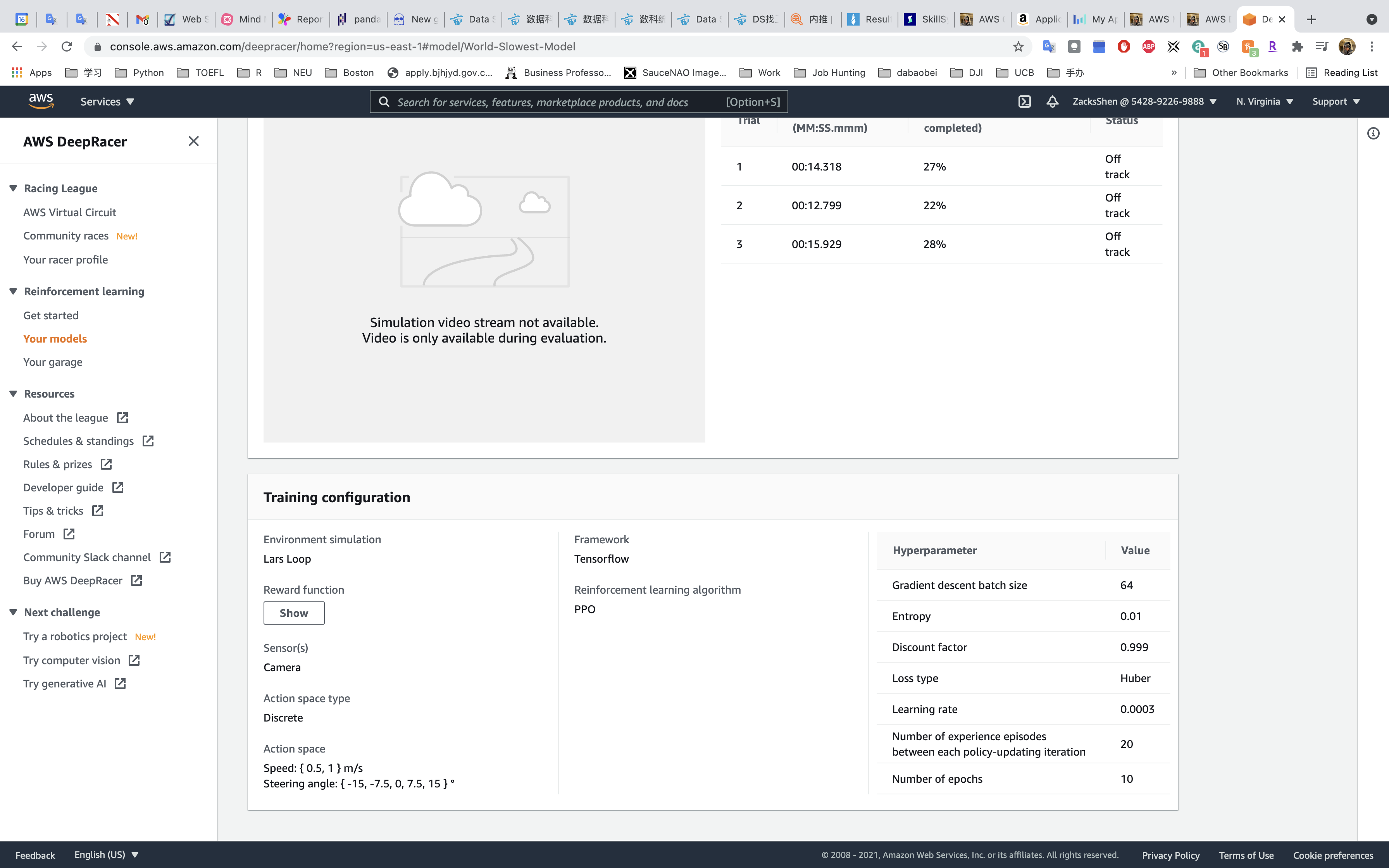

Evaluate your model

Services -> DeepRacer -> Your models

Select your model then click on Start evaluation

- Evaluation criteria:

Lars Loop

- Race Type:

Time trial

Uncheck Submit model to July Qualifier Time trial after evaluation

Click on Start evaluation

The model did not perform as expected: it did not complete a single lap of the track.

To improve performance, you can update the training configuration, switch to a different vehicle, or train the model longer. For more information, visit Exercise Solution: AWS DeepRacer
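One way to update the training configuration is to change the reward function. A sketch of a hypothetical variant that returns a near-zero reward when any wheel leaves the track, rewards staying near the center line in three bands, and scales the reward down for sharp steering. It assumes the `all_wheels_on_track`, `track_width`, `distance_from_center` and `steering_angle` input parameters; the band widths, the 10-degree threshold and the 0.8 penalty factor are arbitrary choices to tune, not recommended values.

```python
def reward_function(params):
    """Hypothetical variant: stay on track, follow the center line, avoid hard steering."""
    # Near-zero reward as soon as any wheel leaves the track
    if not params["all_wheels_on_track"]:
        return 1e-3

    track_width = params["track_width"]
    distance_from_center = params["distance_from_center"]

    # Reward bands by distance from the center line (assumed values)
    if distance_from_center <= 0.1 * track_width:
        reward = 1.0
    elif distance_from_center <= 0.25 * track_width:
        reward = 0.5
    elif distance_from_center <= 0.5 * track_width:
        reward = 0.1
    else:
        reward = 1e-3

    # Assumed threshold in degrees: with a 15-degree action space this
    # penalizes only the sharpest turns, discouraging zig-zagging.
    ABS_STEERING_THRESHOLD = 10.0
    if abs(params["steering_angle"]) > ABS_STEERING_THRESHOLD:
        reward *= 0.8

    return float(reward)


# Example: centered on the track but steering hard (hand-written parameters)
print(reward_function({
    "all_wheels_on_track": True,
    "track_width": 1.0,
    "distance_from_center": 0.0,
    "steering_angle": 15.0,
}))  # 0.8
```

Test any change like this with a short training run before committing to a long one, since a new reward shape can change the agent's behavior in unexpected ways.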

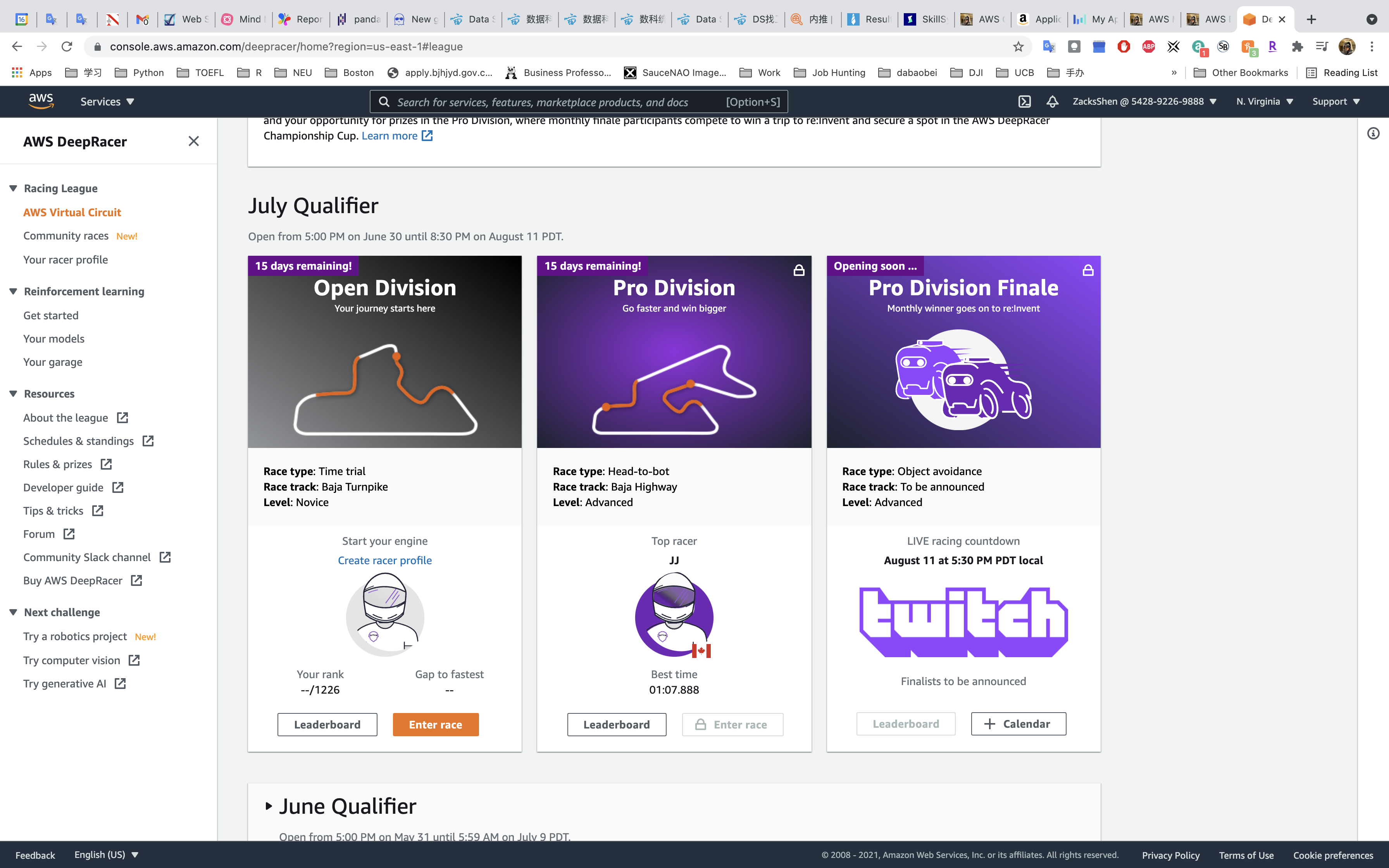

Virtual Circuit

Services -> DeepRacer -> AWS Virtual Circuit

Click on Enter race to join the monthly AWS race.

Edit racer profile

Services -> DeepRacer -> Your racer profile

Click on Edit to edit your racer profile.